Benchmarking of CNN Models and MobileNet-BiLSTM Approach to Classification of Tomato Seed Cultivars

Abstract

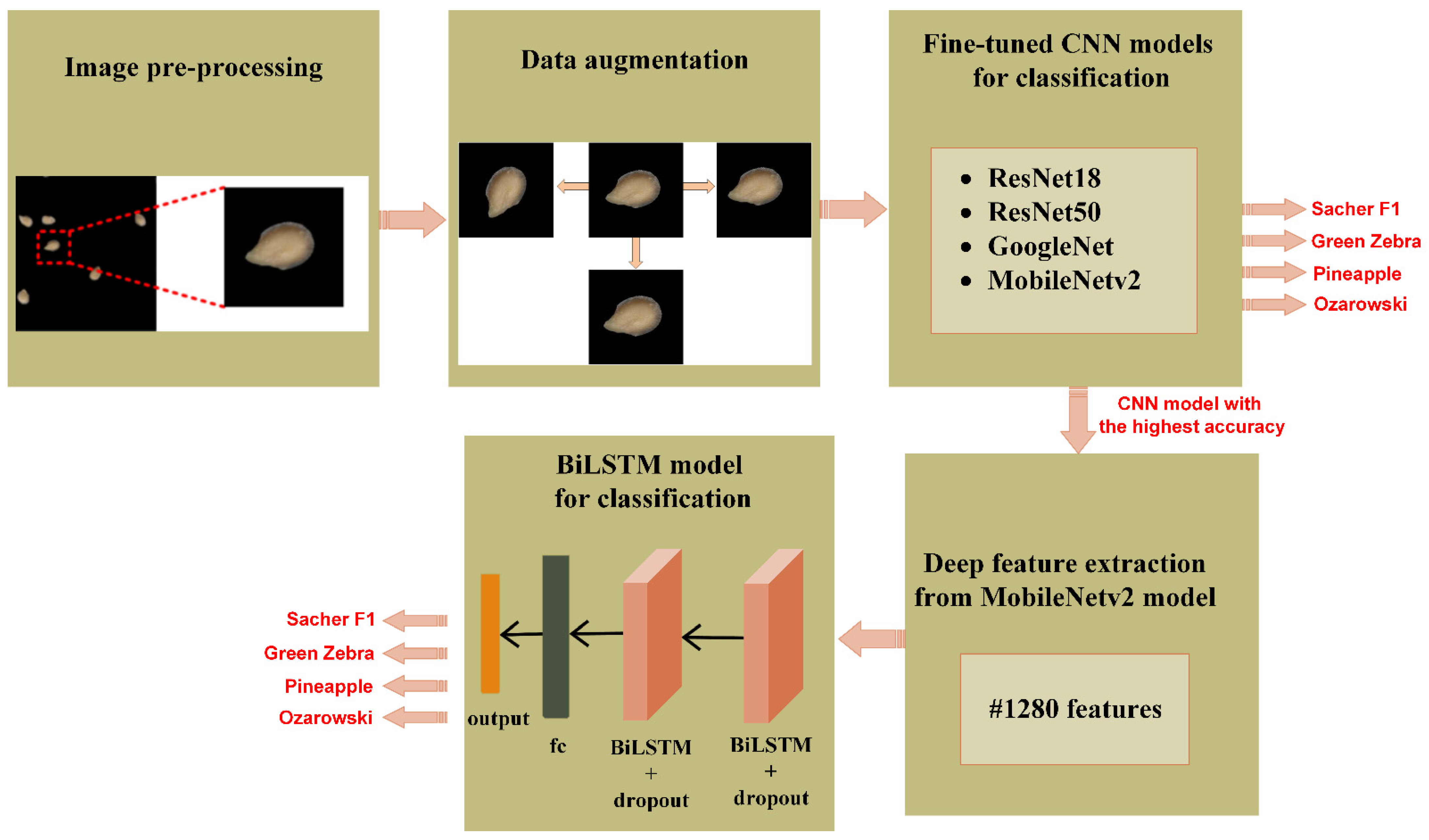

1. Introduction

- The developed procedure for automatically differentiating seeds of different tomato cultivars can be used in smart tomato cultivation.

- Classifying tomato seeds with several CNN models and comparing their results made it possible to select the approach that gives the most accurate results.

- Classification with BiLSTM, using deep features extracted from the CNN model, can be used in smart farming to identify and select tomato seed cultivars with the most desirable features.

2. Materials and Methods

2.1. Image Acquisition and Preprocessing

2.2. Data Augmentation

2.3. Classification with Fine-Tuned CNN Models and BiLSTM Model

3. Results

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Technique | Lower Limit | Upper Limit |
|---|---|---|
| Rotation (degrees) | −45° | +45° |
| Scale (%) | 90% | 110% |
| Translation (pixels) | −15 px | +15 px |
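
The limits in the table above can be read as ranges from which a random transform is drawn for each training image. The sketch below is only an illustration of that idea, not the procedure used in the study: it assumes uniform sampling, a single isotropic scale factor, independent horizontal and vertical shifts, and a hypothetical 224 × 224 image centered at (112, 112). The function names are made up for this example.

```python
import math
import random

# Ranges copied from the augmentation table.
ROTATION_DEG = (-45.0, 45.0)
SCALE = (0.90, 1.10)
TRANSLATION_PX = (-15.0, 15.0)


def sample_augmentation(rng):
    """Draw one random parameter set within the table's limits.

    Assumption: each parameter is sampled uniformly and independently.
    """
    return {
        "angle_deg": rng.uniform(*ROTATION_DEG),
        "scale": rng.uniform(*SCALE),
        "tx": rng.uniform(*TRANSLATION_PX),
        "ty": rng.uniform(*TRANSLATION_PX),
    }


def affine_matrix(params, center):
    """Build a 2x3 matrix: rotate and scale about `center`, then translate."""
    theta = math.radians(params["angle_deg"])
    a = params["scale"] * math.cos(theta)
    b = params["scale"] * math.sin(theta)
    cx, cy = center
    return [
        [a, -b, cx - a * cx + b * cy + params["tx"]],
        [b, a, cy - b * cx - a * cy + params["ty"]],
    ]


def apply_affine(matrix, point):
    """Map one (x, y) pixel coordinate through the 2x3 affine matrix."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )


if __name__ == "__main__":
    rng = random.Random(42)            # fixed seed so runs are repeatable
    params = sample_augmentation(rng)
    matrix = affine_matrix(params, center=(112.0, 112.0))  # hypothetical image center
    print(params)
    print(apply_affine(matrix, (0.0, 0.0)))  # where the top-left corner lands
```

An image library would normally apply such a matrix with interpolation; the matrix is written out here only to make the effect of the three parameters explicit.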

| Model | Accuracy (%) | Sensitivity | Specificity | Precision | F1-Score | MCC |
|---|---|---|---|---|---|---|
| GoogleNet | 83.28 | 0.8328 | 0.9442 | 0.8362 | 0.8319 | 0.7787 |
| MobileNet | 93.44 | 0.9339 | 0.9781 | 0.9379 | 0.9342 | 0.9138 |
| ResNet18 | 90.62 | 0.9068 | 0.9688 | 0.9108 | 0.9067 | 0.8773 |
| ResNet50 | 92.19 | 0.9216 | 0.9740 | 0.9287 | 0.9213 | 0.8987 |
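
The six columns in the table above are standard classification metrics that can all be derived from a confusion matrix. The sketch below shows one common way to compute them for a multiclass problem. It assumes macro-averaging of the per-class sensitivity, specificity, precision, and F1-score, and the multiclass generalization of the Matthews correlation coefficient (MCC); the averaging scheme actually used for the table is not stated here, and the confusion matrix in the example is invented for illustration.

```python
import math


def multiclass_metrics(cm):
    """Accuracy, macro-averaged metrics, and MCC from a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]                             # true counts
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted counts
    correct = sum(cm[i][i] for i in range(k))

    sens, spec, prec, f1 = [], [], [], []
    for c in range(k):
        tp = cm[c][c]
        fn = row_sums[c] - tp
        fp = col_sums[c] - tp
        tn = total - tp - fn - fp
        s = tp / (tp + fn) if tp + fn else 0.0
        p = tp / (tp + fp) if tp + fp else 0.0
        sens.append(s)
        spec.append(tn / (tn + fp) if tn + fp else 0.0)
        prec.append(p)
        f1.append(2 * p * s / (p + s) if p + s else 0.0)

    # Multiclass MCC computed directly from the confusion-matrix totals.
    cov_xy = correct * total - sum(r * c for r, c in zip(row_sums, col_sums))
    cov_xx = total * total - sum(c * c for c in col_sums)
    cov_yy = total * total - sum(r * r for r in row_sums)
    denom = math.sqrt(cov_xx * cov_yy)

    return {
        "accuracy": correct / total,
        "sensitivity": sum(sens) / k,
        "specificity": sum(spec) / k,
        "precision": sum(prec) / k,
        "f1": sum(f1) / k,
        "mcc": cov_xy / denom if denom else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical 4-class confusion matrix, not data from the study.
    example = [
        [9, 1, 0, 0],
        [0, 8, 2, 0],
        [0, 0, 10, 0],
        [1, 0, 0, 9],
    ]
    for name, value in multiclass_metrics(example).items():
        print(f"{name}: {value:.4f}")
```

With equal numbers of samples per class, macro-averaged sensitivity equals overall accuracy, which matches the pattern seen in most rows of the table.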

| Model | Accuracy (%) | Sensitivity | Specificity | Precision | F1-Score | MCC |
|---|---|---|---|---|---|---|
| MobileNet | 93.44 | 0.9339 | 0.9781 | 0.9379 | 0.9342 | 0.9138 |
| BiLSTM with deep features (1280 features) | 96.09 | 0.9609 | 0.9870 | 0.9610 | 0.9609 | 0.9479 |
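
To put the two rows above in perspective, the accuracy difference can be expressed both in percentage points and as a relative reduction in the error rate. The short sketch below works through that arithmetic using only the two accuracy values from the table; the helper names are invented for this example.

```python
# Accuracies (%) copied from the table.
ACCURACY = {
    "MobileNet": 93.44,
    "BiLSTM with deep features": 96.09,
}


def error_rate(accuracy_percent):
    """Misclassification rate in percent."""
    return 100.0 - accuracy_percent


def compare(baseline, candidate):
    """Return (gain in percentage points, relative error reduction as a fraction)."""
    gain_points = candidate - baseline
    reduction = (error_rate(baseline) - error_rate(candidate)) / error_rate(baseline)
    return gain_points, reduction


if __name__ == "__main__":
    gain, reduction = compare(
        ACCURACY["MobileNet"], ACCURACY["BiLSTM with deep features"]
    )
    print(f"Accuracy gain: {gain:.2f} percentage points")
    print(f"Relative error reduction: {reduction:.1%}")
```

The gain of about 2.65 percentage points corresponds to the error rate falling from 6.56% to 3.91%, a relative reduction of roughly 40%.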

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sabanci, K. Benchmarking of CNN Models and MobileNet-BiLSTM Approach to Classification of Tomato Seed Cultivars. Sustainability 2023, 15, 4443. https://doi.org/10.3390/su15054443
