Abstract

In the present study, a deep learning-based two-scenario method is proposed to distinguish tomato seed cultivars. First, images of seeds of four different tomato cultivars (Sacher F1, Green Zebra, Pineapple, and Ozarowski) were taken. Each seed was then cropped from the raw image and saved as a new image. The number of images in the dataset was increased using data augmentation techniques. In the first scenario, these seed images were classified with four different CNN (convolutional neural network) models (ResNet18, ResNet50, GoogleNet, and MobileNetv2). The highest classification accuracy of 93.44% was obtained with the MobileNetv2 model. In the second scenario, 1280 deep features obtained from MobileNetv2 were fed into a Bidirectional Long Short-Term Memory (BiLSTM) network. In the classification made using the BiLSTM network, 96.09% accuracy was obtained. The results show that different tomato seed cultivars can be distinguished quickly and accurately by the proposed deep learning-based method. The study offers a novel approach to distinguishing seed cultivars, and the developed deep learning-based procedure for tomato seed image analysis can be used as a comprehensive procedure for practical tomato seed classification.

1. Introduction

The tomato (Solanum lycopersicum L.) is characterized by high economic and nutritional values. It is widely cultivated and consumed, and its popularity is increasing [1,2]. The tomato is a well-known crop, and its production can depend on cultivars. The tomato fruit produced is commonly consumed fresh or in processed forms [3]. Due to its unique flavor, antioxidants, and bright color, the tomato is popular across the world [4]. The tomato is important for increasing the income of farmers and restructuring agriculture. Tomato fruit contains nutrients, including health-promoting compounds essential in a balanced diet, such as carotenoids, amino acids, phenols, sugars, vitamins, and minerals. Rising living standards and a focus on a healthy lifestyle have resulted in an increase in demand for high-quality fruit. However, tomato quality depends on the cultivar, and different cultivars differ in nutrients and flavors [5].

The increase in tomato demand driven by human consumption and health benefits has led to intensive breeding efforts and the development of a large number of cultivars. The identification and discrimination of cultivars can be important at all stages of the tomato seed production chain for seed producers, breeders, seed traders, processors, variety registration, and other end-users [6]. Furthermore, tomato seeds can be a part of tomato pomace, which is a waste product from fruit processing into ketchup, juices, purees, dried powders, or sauces. Tomato seeds can be used for further processing, for example, for the production of tomato seed oil [7,8]. The differences in tomato seed properties among cultivars have a significant influence on the properties of the tomato seed oil [9]. Therefore, identifying and distinguishing tomato seed cultivars are of great practical importance both for tomato production and seed processing. Tomato germplasm (seeds) can be reliably identified using biochemical or molecular markers, but these methods are expensive, time-consuming, and destructive. Therefore, quick and robust methods and techniques for tomato cultivar identification are desirable [6]. Image analysis and spectral techniques are two non-destructive techniques for evaluating seed quality that are commonly used in agriculture. A machine vision system can provide robust, reliable, and rapid results [10]. Machine vision has been used in tomato seed classification, among others, to discriminate healthy seeds from infected ones and foreign materials with a correctness of 97.7% [10]. Ropelewska and Piecko [11] discriminated tomato seeds belonging to five cultivars with an accuracy of 83.6%, and two tomato seed cultivars were classified with a correctness reaching 99.75%. However, the authors only used traditional machine learning algorithms and did not consider deep learning models. Shrestha et al. [12] used multispectral images and chemometric methods to discriminate five cultivars of tomato seeds with an accuracy of 94% to 100%. In the case of tomato seeds, imaging and artificial intelligence have also found applications for many other purposes. Galletti et al. [13] reported that tomato seed quality prediction is possible using optical imaging techniques and chemometric-based multispectral imaging. Artificial neural networks were used to detect damaged tomato seeds by Karimi et al. [14]. X-ray image analysis was applied to determine tomato seed maturity [15], whereas near-infrared hyperspectral imaging combined with multivariate data analysis was used in determining tomato seed quality by Shrestha et al. [16]. Image features combined with traditional machine learning [17] or deep learning [18] were used for the prediction of the germination rate of tomato seeds. The results of our previous studies showed that deep learning-based classification of seeds can be characterized by high accuracy and is useful for cultivar identification of seeds [19]. Deep learning can improve the results obtained using traditional machine learning, and one of its most important advantages is automatic feature extraction and classification, whereas traditional machine learning requires handcrafted features [20].

This study offers a novel approach to distinguishing seed cultivars. The developed approach involving deep learning in tomato seed image analysis is a comprehensive procedure for tomato seed classification in an objective and non-destructive manner. This approach was applied for the first time for the classification of tomato seeds. In this study, seeds from four different tomato cultivars were classified using deep learning-based models. Four different CNN models (ResNet18, ResNet50, GoogleNet, and MobileNetv2) were used. Deep learning models were used instead of extracting the physical properties of the seeds with traditional image processing techniques and classifying them with machine learning algorithms. These CNN models were chosen because they are widely used and because they share the same input image size of 224 × 224. The proposed method includes two scenarios. In the first scenario, tomato seeds were classified using four different CNN models. In the second scenario, deep features obtained from the most successful model in the first scenario were used. Using these deep features, tomato seeds were classified by Bidirectional Long Short-Term Memory (BiLSTM). The obtained features could also be classified by traditional machine learning algorithms. However, in this study, the deep learning-based BiLSTM model was preferred, with the aim of increasing the classification accuracy. The results obtained from the two scenarios were compared with each other.

As the approach involving different CNN models, such as ResNet18, ResNet50, GoogleNet, and MobileNetv2, as well as the BiLSTM network in tomato seed image analysis, has never been used before for tomato seed cultivar classification, the contribution of this study to smart tomato cultivation and smart farming is significant. It may be indicated as follows:

- The developed procedure for the automatic differentiation of seeds belonging to different tomato cultivars can be used in smart tomato cultivation,

- The classification and comparative analysis of tomato seeds with different CNN models allowed the selection of the approach providing the most correct results,

- Classification with BiLSTM using deep features extracted from the CNN model can be used in smart farming for the identification and selection of tomato seed cultivars characterized by the most desirable features.

2. Materials and Methods

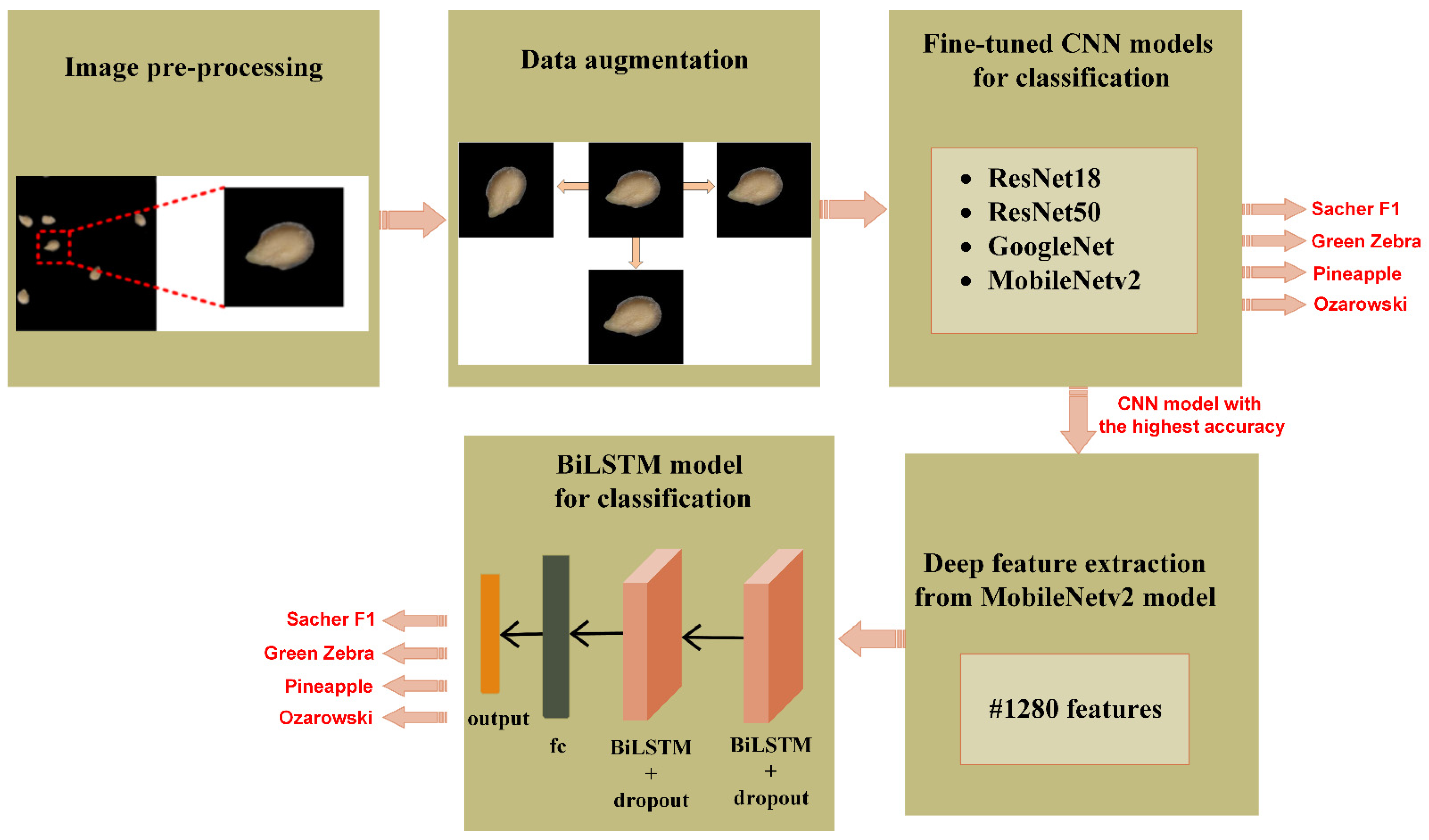

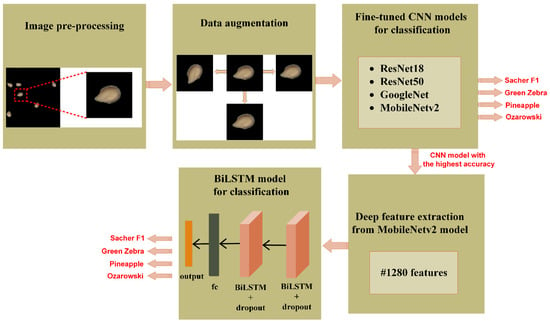

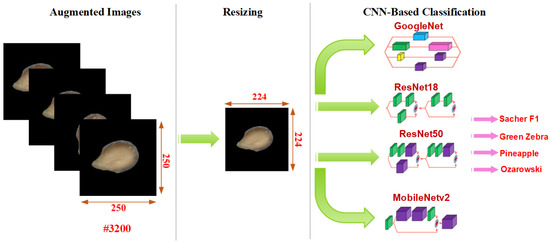

In this section, the classification methods for tomato seed cultivars are presented. The details of the approach proposed in this study are shown in Figure 1. First, seeds of different tomato cultivars were imaged in groups. Then, each tomato seed in these images was cropped using image processing techniques and saved at a size of 250 × 250 pixels. The data augmentation procedure was then used in order to improve the classification success of the CNN models. Afterwards, four CNN models were used to classify different cultivars of tomato seeds. Finally, deep features were extracted from the CNN model with the highest classification success and classified using BiLSTM. All of these stages are discussed in further depth below.

Figure 1.

General details of the proposed method.

2.1. Image Acquisition and Preprocessing

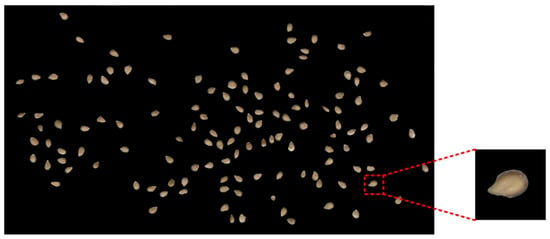

The seed images of different tomato varieties used in this study were taken from the study by Ropelewska and Piecko [11]. Tomatoes belonging to the Sacher F1, Green Zebra, Pineapple, and Ozarowski cultivars were used. The tomatoes were purchased from a local producer in Poland. The tomato seeds were extracted manually from the fruit. Then, the seeds were cleaned of the mucilaginous gel on the seed surface by rinsing in a sieve under tap water, mechanically removing the gel, and drying the seeds using paper towels. Images of tomato seeds were obtained using a flatbed scanner. Two scans were performed for each cultivar. The images were recorded at 800 dpi resolution in TIFF format.

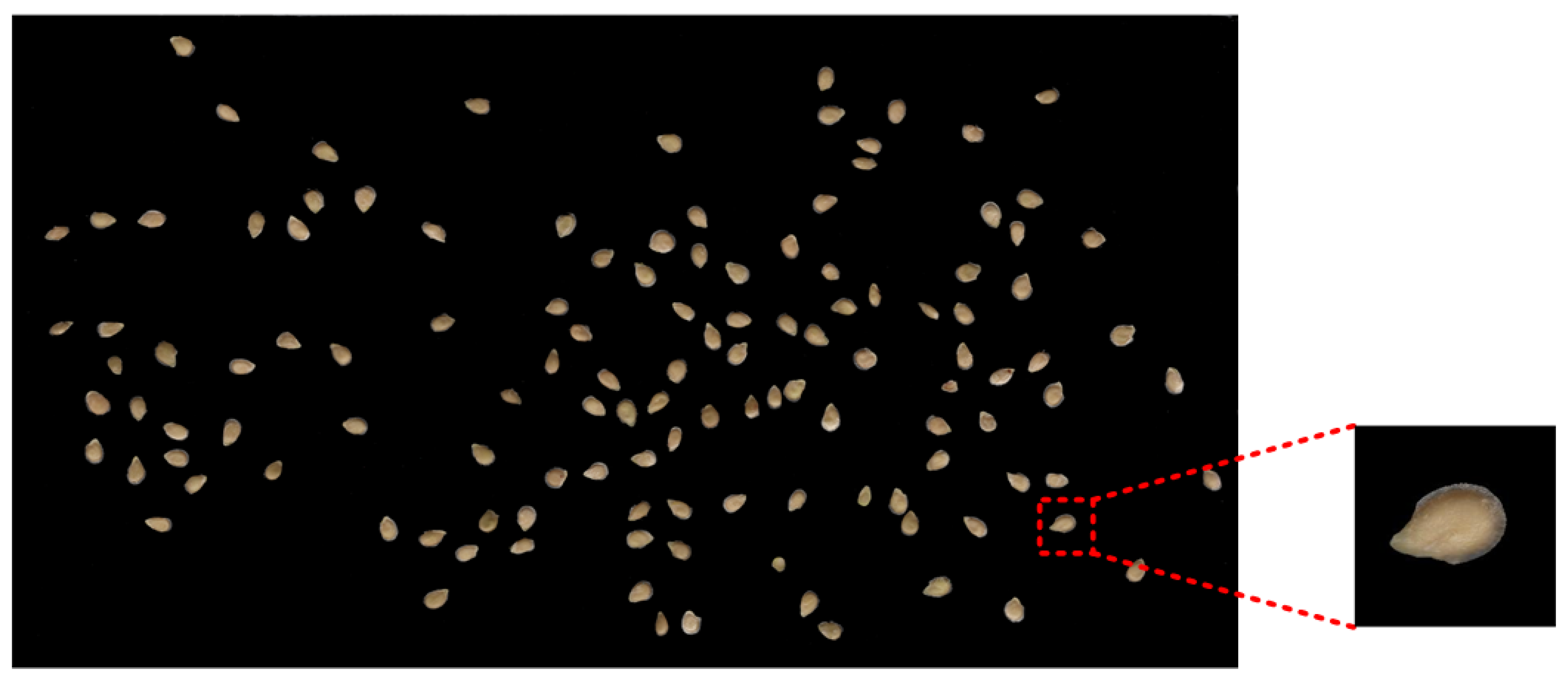

The images of tomato seeds were converted into binary ones with the use of the Otsu method [21]. In the next step, the noise present in the images was removed. In the case of raw images, the boundaries were determined for each seed. The related tomato seed image was cropped with a size of 250 × 250 pixels. Then, for the cropped image, the background was removed so that the seeds next to the seed to be cropped are not visible. Thus, only the related seed was displayed in the cropped tomato seed image. The image of tomato seed obtained using image preprocessing is presented in Figure 2. The obtained tomato seed images of the cultivars Sacher F1, Green Zebra, Pineapple, and Ozarowski tomato are labeled 1, 2, 3, and 4, respectively.

Figure 2.

A sample image of a tomato seed obtained as a result of image preprocessing.
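The thresholding and cropping steps described above can be illustrated with a minimal sketch. It is not the authors' implementation: it uses plain Python lists instead of an image library, assumes the seed is brighter than the background, and handles only a single seed per image (the paper crops many seeds per scan, which would additionally need connected-component labeling). The function names `otsu_threshold` and `bounding_box` are hypothetical.

```python
from typing import List, Tuple


def otsu_threshold(gray: List[List[int]]) -> int:
    """Return the 8-bit threshold that maximizes the between-class variance."""
    hist = [0] * 256
    for row in gray:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0  # pixel count and intensity sum of the class <= t
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0 or w_fg == 0:
            continue  # one class is empty, variance undefined
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def bounding_box(mask: List[List[bool]]) -> Tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the foreground pixels, inclusive."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], rows[-1], cols[0], cols[-1]


# Synthetic 6 x 6 image: dark background (20) with a bright "seed" (220).
image = [[20] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (1, 2, 3, 4):
        image[r][c] = 220

threshold = otsu_threshold(image)
mask = [[value > threshold for value in row] for row in image]
box = bounding_box(mask)
```

In a real pipeline the bounding box would be padded to the fixed 250 × 250 crop size and the pixels outside the seed mask set to the background value.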

2.2. Data Augmentation

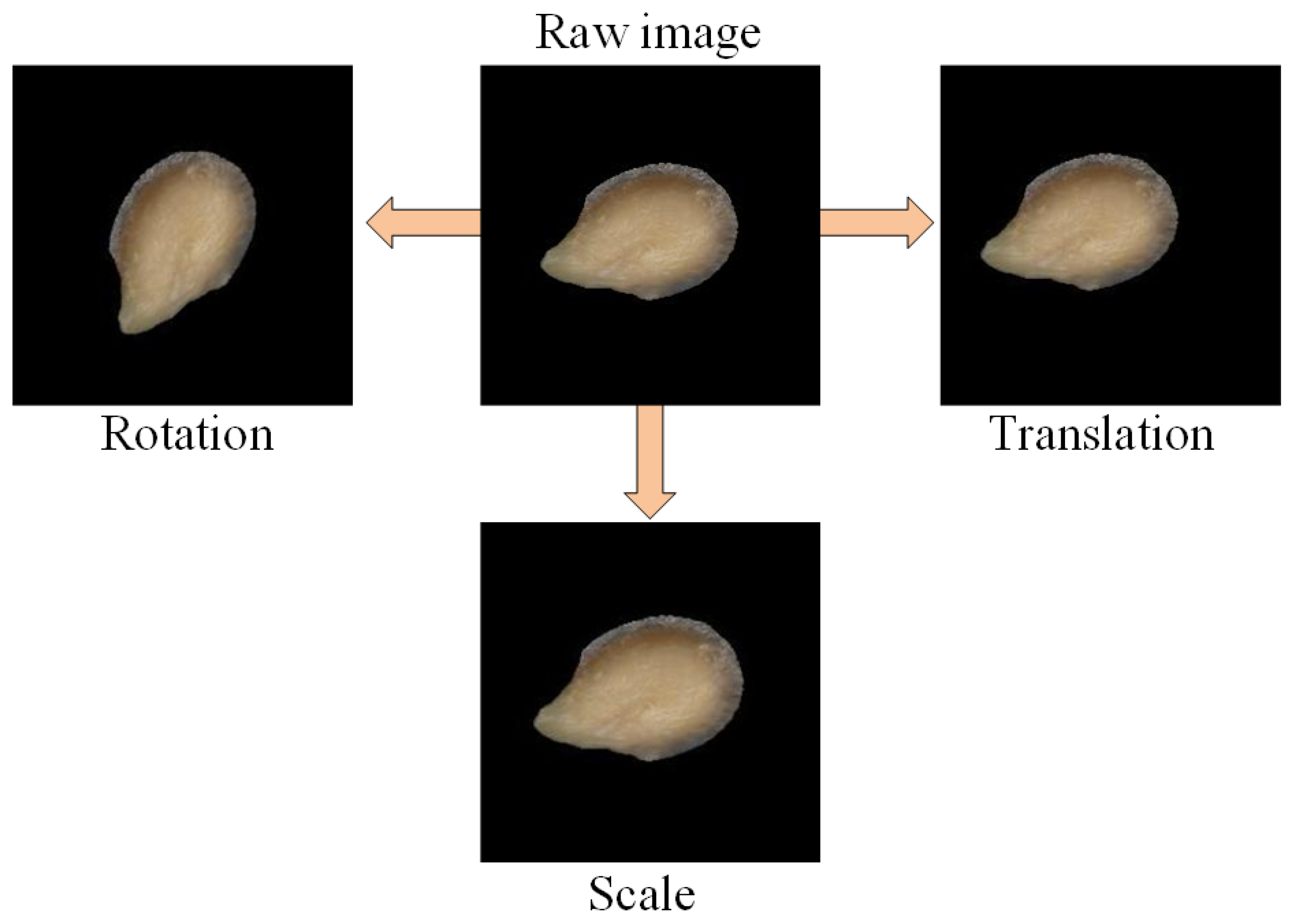

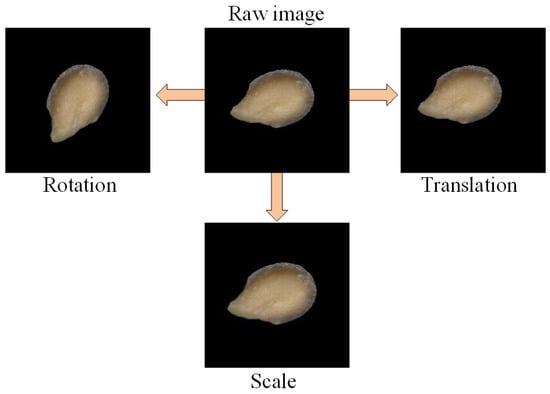

A rich dataset increases the correctness of classification in deep learning. Therefore, the number of images in the dataset can be artificially increased by various manipulation techniques. All actions on raw images performed to increase the number of records in the dataset are referred to as data augmentation [22,23,24]. In this study, since there are only 200 seed images of each tomato cultivar, the data augmentation process was performed. Data augmentation methods such as rotation, scaling, and translation, presented in Table 1, were used to increase the data diversity. These techniques were applied separately for each tomato seed (Figure 3). The rotation angle, scaling factor, and the number of translation pixels were chosen at random within the limits specified in Table 1. Using these data augmentation techniques, 2400 new images were obtained. Thus, the dataset contains a total of 3200 images, of which 800 are original and the rest are new.

Table 1.

The data augmentation techniques and limits.

Figure 3.

Images of a sample tomato seed after using data augmentation techniques on a raw image.
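The augmentation step can be sketched as follows. The limits in `LIMITS` are placeholders chosen only for illustration, because the Table 1 values are not reproduced here; the helper names are hypothetical. Each original image receives one rotation, one scaling, and one translation, which is consistent with 800 originals yielding 2400 new images.

```python
import math
import random

# Placeholder limits (assumption): the real values are those of Table 1.
LIMITS = {"rotation_deg": (-90.0, 90.0), "scale": (0.8, 1.2), "shift_px": (-20, 20)}


def affine_matrix(angle_deg=0.0, scale=1.0, tx=0.0, ty=0.0, size=250):
    """2 x 3 matrix: rotate and scale about the image center, then translate."""
    c = (size - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = scale * math.cos(a), scale * math.sin(a)
    return [
        [cos_a, -sin_a, c - cos_a * c + sin_a * c + tx],
        [sin_a, cos_a, c - sin_a * c - cos_a * c + ty],
    ]


def apply_affine(m, x, y):
    """Map a pixel coordinate (x, y) through the 2 x 3 matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


def sample_augmentations(rng):
    """Draw one random transform per technique, each applied separately."""
    return {
        "rotation": affine_matrix(angle_deg=rng.uniform(*LIMITS["rotation_deg"])),
        "scaling": affine_matrix(scale=rng.uniform(*LIMITS["scale"])),
        "translation": affine_matrix(tx=rng.randint(*LIMITS["shift_px"]),
                                     ty=rng.randint(*LIMITS["shift_px"])),
    }


def dataset_size(n_original, n_techniques=3):
    """Originals plus one augmented copy per technique."""
    return n_original + n_original * n_techniques


rng = random.Random(0)
transforms = sample_augmentations(rng)
total_images = dataset_size(800)  # 800 + 2400
```

The matrices only describe the geometry; warping the pixels themselves would be done with an image library of choice.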

2.3. Classification with Fine-Tuned CNN Models and BiLSTM Model

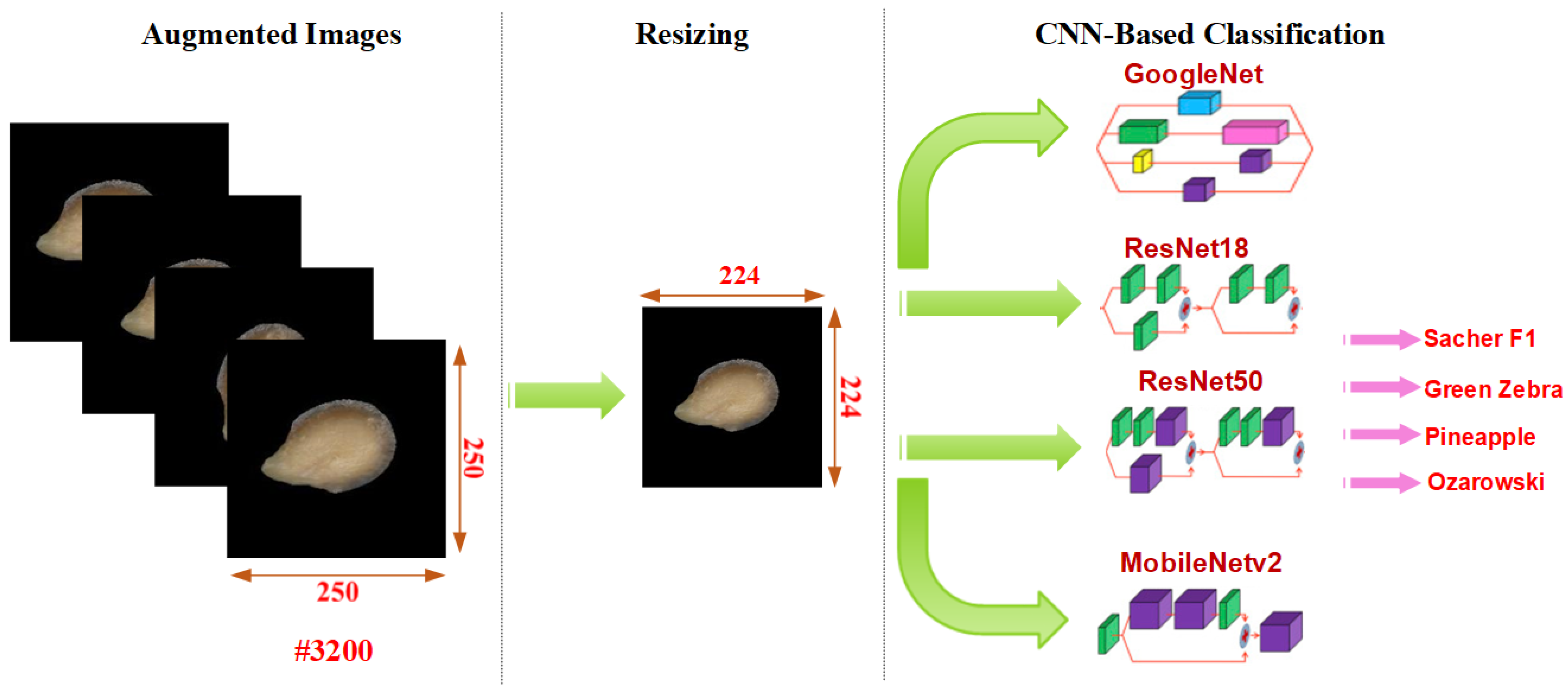

After the data augmentation process, four well-known CNN models were fed with images of tomato seeds. These CNN models are GoogleNet [25], MobileNetv2 [26], ResNet18 [27], and ResNet50 [27]. The outputs of these CNN models were set for four classes. The common feature of the selected models is that the image size for the input layer is 224 × 224 × 3. Therefore, the images in the dataset were resized before being used in the models (Figure 4). Out of the 3200 images, 80% were used for training and 20% for the test phase. Classification performance metrics obtained from the CNN models are detailed in the results section.

Figure 4.

Classification of tomato seed images with different CNN models.
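The 80/20 partition described above can be sketched as a plain random split. This is an assumption about the procedure: the text gives only the proportions, so the shuffling, the seed, and the function name `train_test_split` are illustrative.

```python
import random


def train_test_split(items, train_fraction=0.8, seed=0):
    """Shuffle the items and split them into training and test subsets."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# 3200 (image_id, class_label) pairs: 800 images for each of the 4 cultivars.
dataset = [(image_id, label)
           for label in (1, 2, 3, 4)
           for image_id in range((label - 1) * 800, label * 800)]

train_set, test_set = train_test_split(dataset)
```

With 3200 images this gives 2560 training and 640 test images. Because the split is not stratified, the number of test images per class can differ slightly between cultivars.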

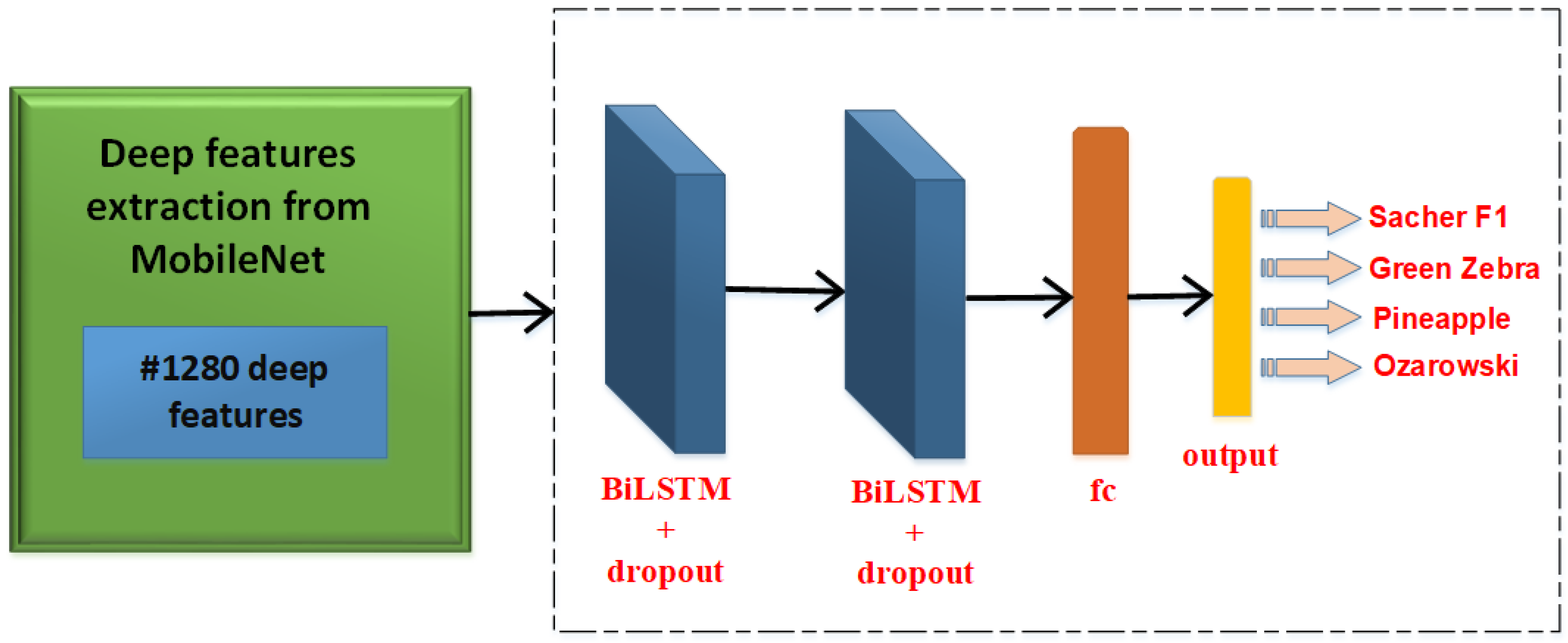

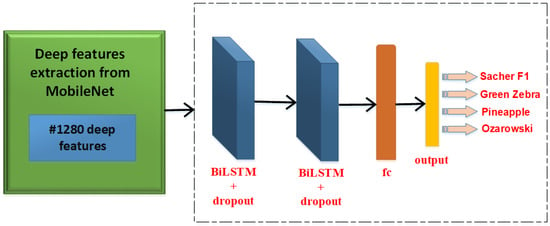

In the second scenario, 1280 deep features obtained from MobileNetv2 with the highest classification accuracy in the first scenario were used. The aim of this scenario was to increase the classification accuracy obtained with the CNN model using the BiLSTM network. These 1280 deep features fed the input of the BiLSTM network [28,29] (Figure 5). The same parameters were used in the two LSTM layers in the BiLSTM network. The number of neurons in the hidden layer is 250, the state activation function is tanh, and the gate activation function is sigmoid. Adam was used as the optimizer in the training parameters. In addition, Max. Epoch, Mini Batch Size, and Learning Rate values are 50, 512, and 0.001, respectively. In the second scenario, 80% of the data set was used for training, and 20% for the test phase.

Figure 5.

Classification of tomato seed images with BiLSTM.
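For reference, the standard LSTM cell equations with a sigmoid gate activation and a tanh state activation, as stated above, can be written as follows. The symbols are assumptions introduced here: $x_t \in \mathbb{R}^{1280}$ is the deep feature input, $h_t, c_t \in \mathbb{R}^{250}$ are the hidden and cell states, and $W$, $R$, and $b$ are the input weights, recurrent weights, and biases. How the 1280 features are arranged into a sequence is not specified in the text.

```latex
\begin{aligned}
i_t &= \sigma\!\left(W_i x_t + R_i h_{t-1} + b_i\right) && \text{(input gate)}\\
f_t &= \sigma\!\left(W_f x_t + R_f h_{t-1} + b_f\right) && \text{(forget gate)}\\
o_t &= \sigma\!\left(W_o x_t + R_o h_{t-1} + b_o\right) && \text{(output gate)}\\
g_t &= \tanh\!\left(W_g x_t + R_g h_{t-1} + b_g\right) && \text{(cell candidate)}\\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t\\
h_t &= o_t \odot \tanh\!\left(c_t\right)\\
h_t^{\mathrm{Bi}} &= \left[\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\right] \in \mathbb{R}^{500}
\end{aligned}
```

The last line expresses the bidirectional part: one LSTM reads the sequence forward, a second one reads it backward, and their 250-dimensional hidden states are concatenated before the final four-class classification layers.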

3. Results

In this section, the classification success of four different CNN models in the classification of tomato seeds from different cultivars is discussed. ResNet18, ResNet50, GoogleNet, and MobileNetv2 CNN models were utilized, which are commonly used in deep learning investigations. There are 18, 50, 22, and 53 layers in the ResNet18, ResNet50, GoogleNet, and MobileNetv2 models, respectively. These CNN models use images with a size of 224 × 224 × 3 for input layers.

The deep learning training and testing processes were performed on a laptop with an Intel Core i7-7700HQ CPU, 16 GB RAM, and an NVIDIA GeForce GTX 1050 GPU with 4 GB of memory. The new images created by the data augmentation step were resized and then used to train the CNN models. Of the 3200 images in the dataset, 80% were used for training, and the rest were reserved for the testing phase. The values of the parameters used for training the CNN models are as follows:

Execution Environment: GPU, Learn Rate Drop factor: 0.1, Max Epochs: 5, Initial Learn Rate: 0.001, Mini Batch Size: 32, and Learn Rate Drop period: 20. The optimization algorithm is Stochastic Gradient Descent with Momentum (SGDM).
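To make the interaction of these settings concrete, the sketch below evaluates a piecewise learning-rate schedule in which the rate is multiplied by the drop factor after every drop period. That the authors used exactly this schedule is an assumption; the function name `piecewise_learn_rate` is hypothetical.

```python
def piecewise_learn_rate(epoch, initial=0.001, drop_factor=0.1, drop_period=20):
    """Learning rate used during a given 1-based epoch."""
    if epoch < 1:
        raise ValueError("epoch is 1-based")
    # The rate is multiplied by drop_factor once per completed drop period.
    return initial * drop_factor ** ((epoch - 1) // drop_period)


# Rates for the listed setting of Max Epochs = 5.
rates = [piecewise_learn_rate(epoch) for epoch in range(1, 6)]
```

Under this assumption, the drop period of 20 is never reached within 5 epochs, so training would run at the initial rate of 0.001 throughout.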

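The results were determined after training and testing procedures for each CNN model. The formulas for the classification performance metrics, namely accuracy, precision, sensitivity, specificity, F1-Score, and MCC, are defined in Equations (1)–(6).

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{4}$$

$$\text{F1-Score} = \frac{2\,TP}{2\,TP + FP + FN} \tag{5}$$

$$\text{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{6}$$

where $TP$: True Positive, $TN$: True Negative, $FP$: False Positive, and $FN$: False Negative.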

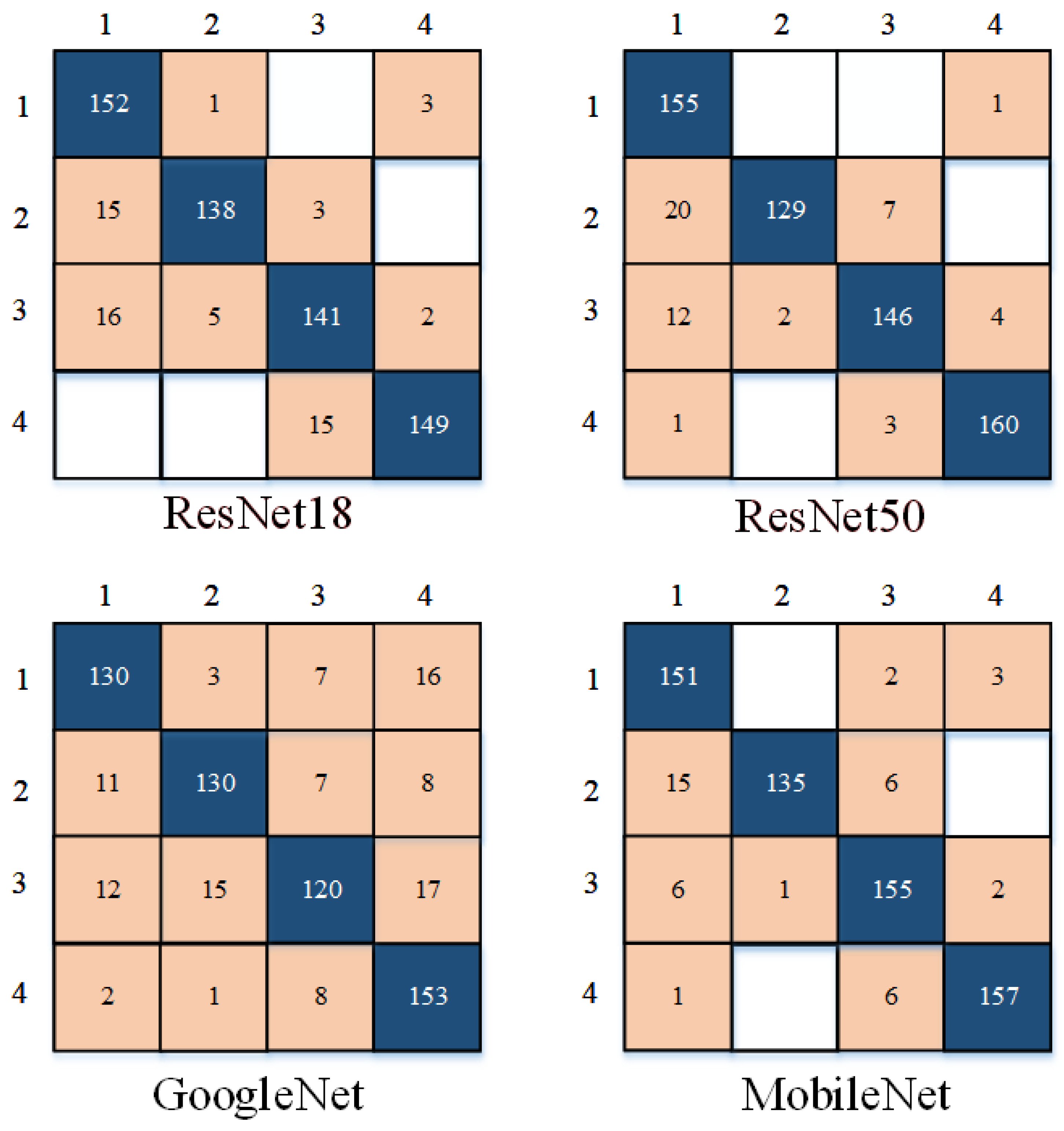

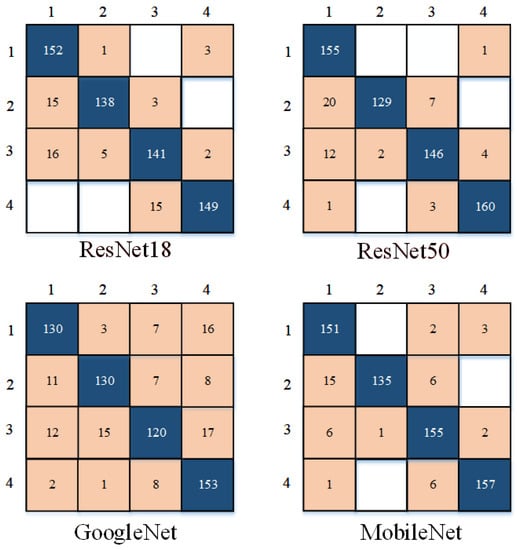

The confusion matrices of tomato seed classification determined by ResNet18, ResNet50, GoogleNet, and MobileNetv2 are shown in Figure 6. The tomato seeds were classified with very high accuracy. In the case of ResNet18, the number of correctly classified seeds ranged from 138 for class 2 to 152 for class 1. Considerable confusion was observed between classes 1 and 2. Fifteen cases belonging to class 2 were incorrectly included in class 1, and one case from class 1 was incorrectly classified as class 2. Additionally, three cases belonging to class 2 were incorrectly classified as tomato seeds from class 3, and three cases from class 1 were incorrectly classified as class 4. In the case of class 3, as many as 16 cases were incorrectly included in class 1, five cases in class 2, and two cases in class 4, whereas 15 cases from class 4 were incorrectly classified as seeds from class 3. In the case of ResNet50, the greatest confusion of tomato seed cases was found between classes 1 and 2. As many as 20 cases belonging to the actual class 2 were included in the predicted class 1. In addition, considerable confusion was observed for class 3. Twelve cases from the actual class 3 were incorrectly classified as tomato seeds belonging to class 1. The GoogleNet model had the lowest classification correctness for the four tomato seed cultivars. In each class, many cases were misclassified. For class 1, most misclassified cases (16) were included in class 4. In the case of class 2, the largest number of misclassified cases (11) were classified as class 1. Many cases from class 3 were incorrectly included in each of the other classes, including 12 in class 1, 15 in class 2, and 17 in class 4. The correctness of tomato seed classification was higher for class 4. Only two cases were incorrectly classified as class 1, one case as class 2, and eight cases as class 3.
The confusion matrix for MobileNetv2 shows the highest correctness of tomato seed classification. For class 2, 15 cases were incorrectly classified as class 1, and 6 as class 3. No case was included in the predicted class 4. In the other classes, only a few cases were misclassified. In the case of actual class 1, two cases were misclassified as class 3 and three cases as class 4. None of the cases were misclassified as the predicted class 2. For the actual class 3, six cases were incorrectly included in the predicted class 1, one case in class 2, and two cases in class 4. The actual class 4 was characterized by one case misclassified as class 1 and six cases incorrectly included in the predicted class 3. None of the cases were incorrectly classified as class 2.

Figure 6.

Confusion matrices obtained by CNN models for the classification of tomato seeds.

In addition to the confusion matrices, the classification performance metrics computed from them are presented in Table 2. The highest correctness of classification of the four cultivars of tomato seeds was obtained with MobileNetv2, with an accuracy of 93.44%, sensitivity of 0.9339, specificity of 0.9781, precision of 0.9379, F1-Score of 0.9342, and MCC of 0.9138. GoogleNet produced the lowest accuracy, equal to 83.28%, and the lowest values of sensitivity (0.8328), specificity (0.9442), precision (0.8362), F1-Score (0.8319), and MCC (0.7787).

Table 2.

Performance metrics of CNN models.

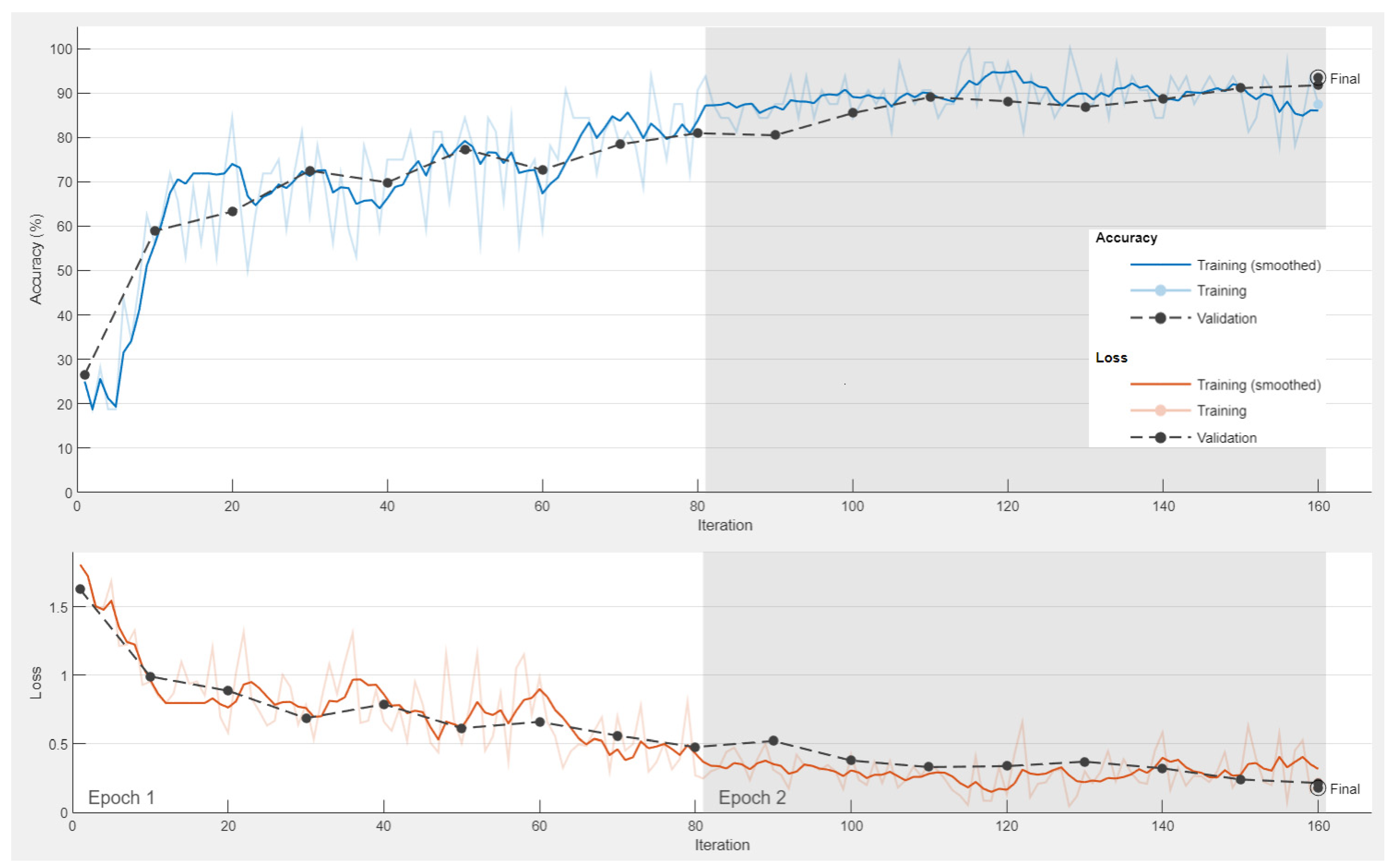

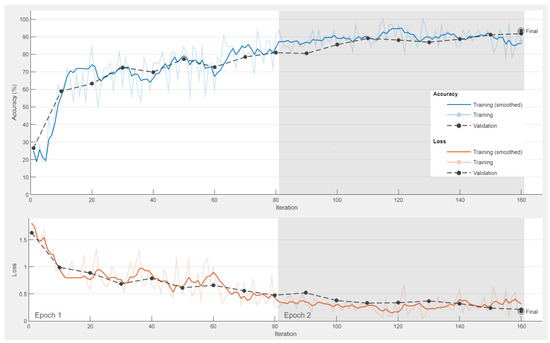

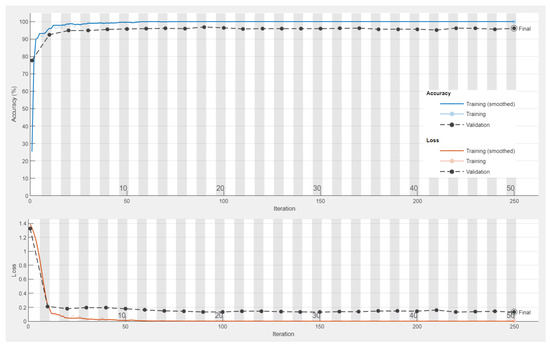

In Table 2, the classification performance metrics obtained with the four different CNN models are compared. According to the results given in Table 2, the highest accuracy (93.44%) was achieved with the MobileNetv2 model. The accuracy values obtained with GoogleNet, ResNet18, and ResNet50 are 83.28%, 90.62%, and 92.19%, respectively. Therefore, the accuracy and loss graphs of the training and testing (validation) steps of tomato seed classification are presented for the MobileNetv2 model, which provided the best results (Figure 7).

Figure 7.

Training and loss graphs of the MobileNetv2 model.

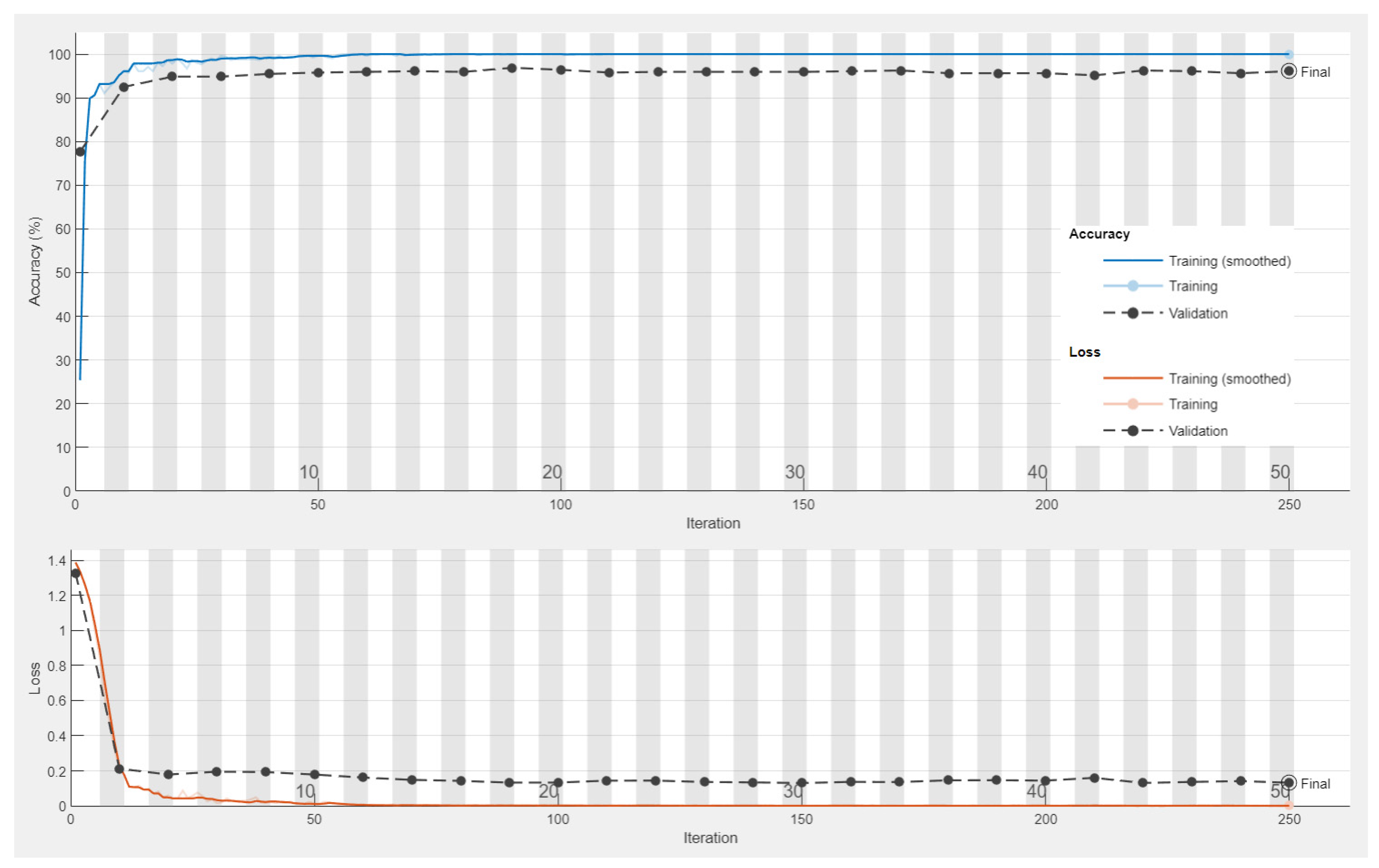

One thousand two hundred and eighty deep attributes were extracted from the fc layer of the MobileNetV2 model, which classified seeds belonging to four different tomato cultivars with the highest accuracy. These characteristics were extracted separately for the training dataset and the test dataset. The inputs of the BiLSTM model were fed with 1280 deep attributes, and reclassification operations were performed. The training and error graph of the classification performed with the BiLSTM model is given in Figure 8.

Figure 8.

Training and loss graphs of the BiLSTM model.

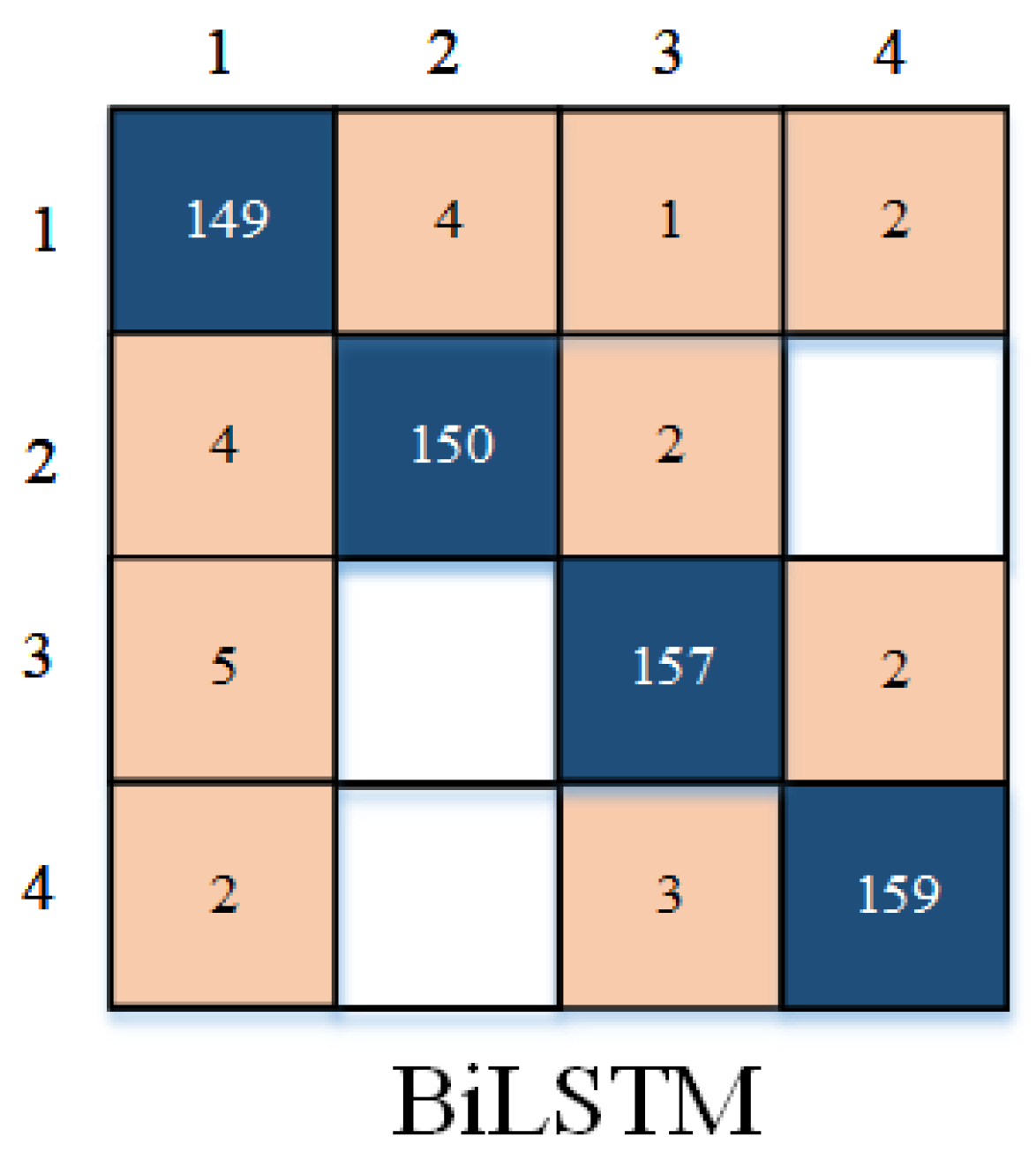

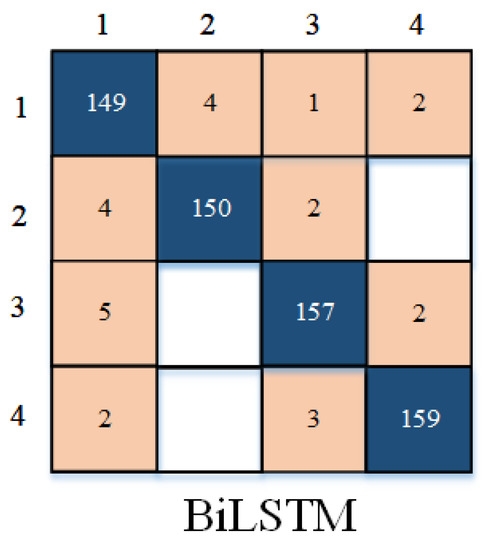

In addition, the confusion matrix obtained with the BiLSTM model is shown in Figure 9. The presented results are very satisfactory. In each class, only a few cases were misclassified. The tomato seeds were correctly classified in 149 cases for class 1, 150 cases for class 2, 157 cases for class 3, and 159 cases for class 4. The greatest misclassification occurred in the case of classes 1 and 3. For class 1, seven cases in total were misclassified: four cases were incorrectly classified as class 2, one case as class 3, and two cases as class 4. In the case of actual class 3, five cases were incorrectly included in the predicted class 1, and two cases were misclassified as the predicted class 4. For class 2, six cases were misclassified, including four cases incorrectly classified as the predicted class 1 and two cases incorrectly classified as class 3. In the case of actual class 4, only five cases were incorrectly classified, two cases as class 1 and three cases as class 3.

Figure 9.

Confusion matrix obtained by BiLSTM model for classification of tomato seeds.
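The counts given above for the BiLSTM model can be arranged as a 4 × 4 confusion matrix and the performance metrics recomputed from it. The sketch below is a reading of the text, not the authors' code: it assumes that sensitivity, specificity, precision, and F1-Score are macro-averaged over the four classes in a one-vs-rest manner, and that MCC is computed with the multiclass generalization of the coefficient. The function name `macro_metrics` is hypothetical.

```python
import math

# Rows: actual class 1..4; columns: predicted class 1..4 (counts from the text).
CONFUSION = [
    [149, 4, 1, 2],
    [4, 150, 2, 0],
    [5, 0, 157, 2],
    [2, 0, 3, 159],
]


def macro_metrics(cm):
    """Accuracy, macro-averaged one-vs-rest metrics, and multiclass MCC."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    actual = [sum(row) for row in cm]                               # row sums
    predicted = [sum(cm[r][c] for r in range(n)) for c in range(n)]  # column sums
    correct = sum(cm[k][k] for k in range(n))

    sens, spec, prec, f1 = [], [], [], []
    for k in range(n):
        tp = cm[k][k]
        fn = actual[k] - tp
        fp = predicted[k] - tp
        tn = total - tp - fn - fp
        sens.append(tp / (tp + fn))
        spec.append(tn / (tn + fp))
        prec.append(tp / (tp + fp))
        f1.append(2 * tp / (2 * tp + fp + fn))

    # Multiclass MCC computed directly from the confusion matrix.
    numerator = correct * total - sum(p * t for p, t in zip(predicted, actual))
    denominator = math.sqrt(
        (total ** 2 - sum(p * p for p in predicted))
        * (total ** 2 - sum(t * t for t in actual))
    )

    def mean(values):
        return sum(values) / len(values)

    return {
        "accuracy": correct / total,
        "sensitivity": mean(sens),
        "specificity": mean(spec),
        "precision": mean(prec),
        "f1": mean(f1),
        "mcc": numerator / denominator,
    }


metrics = macro_metrics(CONFUSION)
```

Under these assumptions the matrix sums to 640 test images, and the computed values agree to four decimal places with those reported for the BiLSTM model (accuracy 96.09%, sensitivity 0.9609, specificity 0.9870, precision 0.9610, F1-Score 0.9609, and MCC 0.9479). The sketch does not guard against empty classes, which would cause a division by zero.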

The classification results obtained by the MobileNetv2 CNN model and the BiLSTM model are given in Table 3. Comparing the performance metrics of both models, the BiLSTM model improved the accuracy and the values of sensitivity, specificity, precision, F1-Score, and MCC of the classification of four cultivars of tomato seeds compared with MobileNetv2. The results obtained by the BiLSTM model were the highest and were equal to 96.09% for accuracy, 0.9609 for sensitivity, 0.9870 for specificity, 0.9610 for precision, 0.9609 for the F1-Score, and 0.9479 for the MCC. These results confirmed that the tomato seed classification was very successful.

Table 3.

Performance metrics of the BiLSTM model compared with MobileNetv2.

4. Discussion

The obtained results demonstrated the usefulness of MobileNetv2-BiLSTM and the selected CNN models for the successful classification of tomato seeds belonging to different cultivars. The developed procedure combining color image acquisition with automatic feature extraction and image classification by deep learning can be very promising in seed research. Machine vision provides a non-destructive, automated, and cost-effective alternative to human inspection and decision-making in the quality assessment of agricultural products [30]. Classification using machine vision can result in higher accuracy and a reduction in time [31]. Many previous studies showed the effectiveness of distinguishing seed cultivars belonging to different species, e.g., peach [32], tomato [11], pepper [33], apricot [34], or apple [35,36], using an approach combining image analysis and traditional machine learning with an accuracy close to 90–100%. In addition, deep learning models provided very high correctness in the classification of seeds of pepper [19], maize [37], cotton [38], soybean [39], and weeds [40], reaching accuracy even close to 100%. Our results of tomato seed classification, reaching 96.09% accuracy, are within the ranges obtained by other authors for different species. Because they extract structural and underlying features, such as texture, lines, and form, more effectively, CNNs can be advantageous in practical image classification [41]. In view of the wide and successful use of traditional machine learning and deep learning in the assessment of seed quality, further studies determining new research directions should be performed. The results presented in this paper provide a novel approach to the classification of tomato seed cultivars using CNN models and BiLSTM. The developed procedures may have practical applications and be useful in the study of other seeds.

The cultivar identification of seeds using non-destructive and accurate procedures may have great practical application. Seeds can be considered the first crucial input for agriculture. The correct identification of seed cultivars is important for obtaining a uniform product and optimum yield potential. There is pressure on the producers, processors, and distributors of seeds to assert cultivar purity because misidentification can limit plant productivity. Cultivar impurity may occur anywhere in the seed production chain, from cultivation to processing, may even be an intentional adulteration, and may not be detected by trained experts assessing seeds based on morphology. More accurate seed cultivar identification carried out using genetic markers is unsuitable for routine screening because it is destructive, time-consuming, and costly [42]. Owing to the shortcomings of seed cultivar identification by visual inspection, morphological analysis, and molecular markers, their practical online and large-scale application is hindered. Therefore, rapid and non-destructive methods are in demand [43]. Although there are laboratories for purity testing of seeds, automatic seed classification systems using computer vision and image processing are desired [44]. Furthermore, methods of improving the accuracy of models are needed [45]. The constant need to develop objective and non-destructive methods increases the value and usefulness of the research presented in this paper.

Besides seed cultivar identification and classification, non-destructive seed quality assessment may include, among others, the determination of seed chemical composition, prediction of seed viability, and detection of insect infestation, diseases, and insect damage. Furthermore, besides machine vision, other non-destructive techniques used in seed research include hyperspectral imaging, spectroscopy, electronic nose techniques, thermal imaging, and soft X-ray imaging [46]. The present research, which applies a deep learning-based approach to identify tomato seed cultivars, sets a new direction in the non-destructive testing of seeds.

In the case of tomatoes, automatic monitoring using different sensors may also have wider applications, for example, for the control of environment management in smart tomato greenhouses [47]. Besides the microclimate, the number of bee movements can also be monitored in tomato greenhouses [48]. In this case, artificial intelligence could also be used. However, remote sensing requires advanced infrastructure and high computing power to process the data [49], and often image fusion to obtain useful data [50]. It has been reported that smart food growing can be facilitated by the application of the Internet of Things (IoT), which is considered a technological advancement [51]. Furthermore, artificial intelligence techniques can be used for plant disease detection and prediction [52]. Both the present research and literature data have shown the usefulness of artificial intelligence and sensor technology in growth monitoring and the assessment of fruit and vegetable quality. Therefore, such research should be continued and constantly developed.

5. Conclusions

The approach involving CNN models and MobileNetv2-BiLSTM was successfully used to classify tomato seeds belonging to four cultivars. In the first scenario of this study, the classification of tomato seeds was carried out with four different CNN models. In the second scenario, the deep features obtained from the CNN model with the highest classification accuracy were reclassified with BiLSTM. Before these stages, images of seeds belonging to four different tomato cultivars were obtained using a scanner. Each tomato seed was cropped from the raw image using image processing techniques, and the cropped seed images were saved separately. Then, the number of images in the dataset was increased using data augmentation techniques. In the first scenario, tomato seeds were classified using four different CNN models, namely GoogleNet, MobileNetv2, ResNet18, and ResNet50. According to the results of the comparative analysis, the highest classification accuracy was achieved with the MobileNetv2 model. In the second scenario, 1280 deep features obtained from the MobileNetv2 model were used for BiLSTM. The classification accuracies obtained with the MobileNetv2 CNN model and BiLSTM are 93.44% and 96.09%, respectively. In addition, the results showed the success of CNN-based methods for tomato seed classification. This study provides guidance to researchers for future deep learning studies with the aim of determining the tomato seed cultivar. Since the proposed method includes a deep architecture, the amount of data strongly affects system performance. Although the data augmentation techniques used for this purpose contribute to the accuracy, they do not produce genuinely new and different data. Therefore, it is planned to use more, and more diverse, seeds in future studies. In addition, parameter adjustments in the proposed model are also important for system performance. In this context, the parameters used in the CNN and BiLSTM architectures will be determined by hyperparameter optimization instead of trial and error.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Marmiroli, M.; Mussi, F.; Gallo, V.; Gianoncelli, A.; Hartley, W.; Marmiroli, N. Combination of Biochemical, Molecular, and Synchrotron-Radiation-Based Techniques to Study the Effects of Silicon in Tomato (Solanum Lycopersicum L.). Int. J. Mol. Sci. 2022, 23, 15837. [Google Scholar] [CrossRef]

- Zhou, T.; Li, R.; Yu, Q.; Wang, J.; Pan, J.; Lai, T. Proteomic Changes in Response to Colorless Nonripening Mutation during Tomato Fruit Ripening. Plants 2022, 11, 3570. [Google Scholar] [CrossRef]

- Shalaby, T.A.; Taha, N.; El-Beltagi, H.S.; El-Ramady, H. Combined Application of Trichoderma harzianum and Paclobutrazol to Control Root Rot Disease Caused by Rhizoctonia solani of Tomato Seedlings. Agronomy 2022, 12, 3186. [Google Scholar] [CrossRef]

- Jia, S.; Zhang, N.; Ji, H.; Zhang, X.; Dong, C.; Yu, J.; Yan, S.; Chen, C.; Liang, L. Effects of Atmospheric Cold Plasma Treatment on the Storage Quality and Chlorophyll Metabolism of Postharvest Tomato. Foods 2022, 11, 4088. [Google Scholar] [CrossRef]

- Dou, J.; Wang, J.; Tang, Z.; Yu, J.; Wu, Y.; Liu, Z.; Wang, J.; Wang, G.; Tian, Q. Application of Exogenous Melatonin Improves Tomato Fruit Quality by Promoting the Accumulation of Primary and Secondary Metabolites. Foods 2022, 11, 4097. [Google Scholar]

- Shrestha, S.; Deleuran, L.C.; Olesen, M.H.; Gislum, R. Use of multispectral imaging in varietal identification of tomato. Sensors 2015, 15, 4496–4512. [Google Scholar] [CrossRef] [PubMed]

- Kumar, M.; Tomar, M.; Bhuyan, D.J.; Punia, S.; Grasso, S.; Sa, A.G.A.; Carciofi, B.A.M.; Arrutia, F.; Changan, S.; Singh, S. Tomato (Solanum lycopersicum L.) seed: A review on bioactives and biomedical activities. Biomed. Pharmacother. 2021, 142, 112018. [Google Scholar] [CrossRef] [PubMed]

- Kumar, S.; Thirunavookarasu, S.N.; Sunil, C.; Vignesh, S.; Venkatachalapathy, N.; Rawson, A. Mass transfer kinetics and quality evaluation of tomato seed oil extracted using emerging technologies. Innov. Food Sci. Emerg. Technol. 2023, 83, 103203. [Google Scholar] [CrossRef]

- Giuffrè, A.; Capocasale, M. Physicochemical composition of tomato seed oil for an edible use: The effect of cultivar. Int. Food Res. J. 2016, 23, 583–591. [Google Scholar]

- Yasmin, J.; Lohumi, S.; Ahmed, M.R.; Kandpal, L.M.; Faqeerzada, M.A.; Kim, M.S.; Cho, B.-K. Improvement in purity of healthy tomato seeds using an image-based one-class classification method. Sensors 2020, 20, 2690. [Google Scholar] [CrossRef]

- Ropelewska, E.; Piecko, J. Discrimination of tomato seeds belonging to different cultivars using machine learning. Eur. Food Res. Technol. 2022, 248, 685–705. [Google Scholar] [CrossRef]

- Shrestha, S.; Deleuran, L.C.; Gislum, R. Classification of different tomato seed cultivars by multispectral visible-near infrared spectroscopy and chemometrics. J. Spectr. Imaging 2016, 5, a1. [Google Scholar] [CrossRef]

- Galletti, P.A.; Carvalho, M.E.; Hirai, W.Y.; Brancaglioni, V.A.; Arthur, V.; Barboza da Silva, C. Integrating optical imaging tools for rapid and non-invasive characterization of seed quality: Tomato (Solanum lycopersicum L.) and carrot (Daucus carota L.) as study cases. Front. Plant Sci. 2020, 11, 577851.

- Karimi, H.; Navid, H.; Mahmoudi, A. Detection of damaged seeds in laboratory evaluation of precision planter using impact acoustics and artificial neural networks. Artif. Intell. Res. 2012, 1, 67–74.

- Borges, S.R.d.S.; Silva, P.P.d.; Araújo, F.S.; Souza, F.F.d.J.; Nascimento, W.M. Tomato seed image analysis during the maturation. J. Seed Sci. 2019, 41, 022–031.

- Shrestha, S.; Knapič, M.; Žibrat, U.; Deleuran, L.C.; Gislum, R. Single seed near-infrared hyperspectral imaging in determining tomato (Solanum lycopersicum L.) seed quality in association with multivariate data analysis. Sens. Actuators B Chem. 2016, 237, 1027–1034.

- Škrubej, U.; Rozman, Č.; Stajnko, D. Assessment of germination rate of the tomato seeds using image processing and machine learning. Eur. J. Hortic. Sci. 2015, 80, 68–75.

- Nehoshtan, Y.; Carmon, E.; Yaniv, O.; Ayal, S.; Rotem, O. Robust seed germination prediction using deep learning and RGB image data. Sci. Rep. 2021, 11, 22030.

- Sabanci, K.; Aslan, M.F.; Ropelewska, E.; Unlersen, M.F. A convolutional neural network-based comparative study for pepper seed classification: Analysis of selected deep features with support vector machine. J. Food Process Eng. 2022, 45, e13955.

- Sabanci, K.; Aslan, M.F.; Ropelewska, E. Benchmarking analysis of CNN models for pits of sour cherry cultivars. Eur. Food Res. Technol. 2022, 248, 2441–2449.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Koklu, M.; Unlersen, M.F.; Ozkan, I.A.; Aslan, M.F.; Sabanci, K. A CNN-SVM study based on selected deep features for grapevine leaves classification. Measurement 2022, 188, 110425.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

- Unlersen, M.F.; Sonmez, M.E.; Aslan, M.F.; Demir, B.; Aydin, N.; Sabanci, K.; Ropelewska, E. CNN–SVM hybrid model for varietal classification of wheat based on bulk samples. Eur. Food Res. Technol. 2022, 248, 2043–2052.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Aslan, M.F.; Unlersen, M.F.; Sabanci, K.; Durdu, A. CNN-based transfer learning–BiLSTM network: A novel approach for COVID-19 infection detection. Appl. Soft Comput. 2021, 98, 106912.

- Koklu, M.; Cinar, I.; Taspinar, Y.S. CNN-based bi-directional and directional long-short term memory network for determination of face mask. Biomed. Signal Process. Control 2022, 71, 103216.

- Patel, K.K.; Kar, A.; Jha, S.N.; Khan, M.A. Machine vision system: A tool for quality inspection of food and agricultural products. J. Food Sci. Technol. 2012, 49, 123–141.

- Alipasandi, A.; Ghaffari, H.; Alibeyglu, S.Z. Classification of three varieties of peach fruit using artificial neural network assisted with image processing techniques. Int. J. Agron. Plant Prod. 2013, 4, 2179–2186.

- Ropelewska, E.; Rutkowski, K.P. Differentiation of peach cultivars by image analysis based on the skin, flesh, stone and seed textures. Eur. Food Res. Technol. 2021, 247, 2371–2377.

- Ropelewska, E.; Szwejda-Grzybowska, J. A comparative analysis of the discrimination of pepper (Capsicum annuum L.) based on the cross-section and seed textures determined using image processing. J. Food Process Eng. 2021, 44, 13694.

- Ropelewska, E.; Sabanci, K.; Aslan, M.F.; Azizi, A. A Novel Approach to the Authentication of Apricot Seed Cultivars Using Innovative Models Based on Image Texture Parameters. Horticulturae 2022, 8, 431.

- Ropelewska, E. The use of seed texture features for discriminating different cultivars of stored apples. J. Stored Prod. Res. 2020, 88, 101668.

- Ropelewska, E.; Rutkowski, K.P. Cultivar discrimination of stored apple seeds based on geometric features determined using image analysis. J. Stored Prod. Res. 2021, 92, 101804.

- Xu, P.; Tan, Q.; Zhang, Y.; Zha, X.; Yang, S.; Yang, R. Research on Maize Seed Classification and Recognition Based on Machine Vision and Deep Learning. Agriculture 2022, 12, 232.

- Zhu, S.; Zhou, L.; Gao, P.; Bao, Y.; He, Y.; Feng, L. Near-Infrared Hyperspectral Imaging Combined with Deep Learning to Identify Cotton Seed Varieties. Molecules 2019, 24, 3268.

- Huang, Z.; Wang, R.; Cao, Y.; Zheng, S.; Teng, Y.; Wang, F.; Wang, L.; Du, J. Deep learning based soybean seed classification. Comput. Electron. Agric. 2022, 202, 107393.

- Luo, T.; Zhao, J.; Gu, Y.; Zhang, S.; Qiao, X.; Tian, W.; Han, Y. Classification of weed seeds based on visual images and deep learning. Inf. Process. Agric. 2021, 10, 40–51.

- Jin, X.; Zhao, Y.; Bian, H.; Li, J.; Xu, C. Sunflower seeds classification based on self-attention Focusing algorithm. J. Food Meas. Charact. 2023, 17, 143–154.

- Taheri-Garavand, A.; Nasiri, A.; Fanourakis, D.; Fatahi, S.; Omid, M.; Nikoloudakis, N. Automated In Situ Seed Variety Identification via Deep Learning: A Case Study in Chickpea. Plants 2021, 10, 1406.

- Zhao, Y.; Zhang, C.; Zhu, S.; Gao, P.; Feng, L.; He, Y. Non-Destructive and Rapid Variety Discrimination and Visualization of Single Grape Seed Using Near-Infrared Hyperspectral Imaging Technique and Multivariate Analysis. Molecules 2018, 23, 1352.

- Uddin, M.; Islam, M.A.; Shajalal, M.; Hossain, M.A.; Yousuf, M.; Iftekhar, S. Paddy seed variety identification using t20-hog and haralick textural features. Complex Intell. Syst. 2022, 8, 657–671.

- Jin, X.; Zhao, Y.; Wu, H.; Sun, T. Sunflower seeds classification based on sparse convolutional neural networks in multi-objective scene. Sci. Rep. 2022, 12, 19890.

- Rahman, A.; Cho, B.K. Assessment of seed quality using non-destructive measurement techniques: A review. Seed Sci. Res. 2016, 26, 285–305.

- Bafdal, N.; Ardiansah, I. Application of Internet of Things in smart greenhouse microclimate management for tomato growth. Int. J. Adv. Sci. Eng. Inf. Technol. 2021, 11, 427–432.

- Morandin, L.A.; Laverty, T.M.; Kevan, P.G. Bumble bee (Hymenoptera: Apidae) activity and pollination levels in commercial tomato greenhouses. J. Econ. Entomol. 2001, 94, 462–467.

- Sharma, M.; Rastogi, R.; Arya, N.; Akram, S.V.; Singh, R.; Gehlot, A.; Buddhi, D.; Joshi, K. LoED: LoRa and Edge Computing based System Architecture for Sustainable Forest Monitoring. Int. J. Eng. Trends Technol. 2022, 70, 88–93.

- Joshi, K.; Diwakar, M.; Joshi, N.K.; Lamba, S. A concise review on latest methods of image fusion. Recent Adv. Comput. Sci. Commun. (Former. Recent Pat. Comput. Sci.) 2021, 14, 2046–2056.

- Halgamuge, M.N.; Bojovschi, A.; Fisher, P.M.; Le, T.C.; Adeloju, S.; Murphy, S. Internet of Things and autonomous control for vertical cultivation walls towards smart food growing: A review. Urban For. Urban Green. 2021, 61, 127094.

- Kirola, M.; Joshi, K.; Chaudhary, S.; Singh, N.; Anandaram, H.; Gupta, A. Plants diseases prediction framework: A image-based system using deep learning. In Proceedings of the 2022 IEEE World Conference on Applied Intelligence and Computing (AIC), Sonbhadra, India, 17–19 June 2022; pp. 307–313.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).