A Convolutional Neural Network for Coastal Aquaculture Extraction from High-Resolution Remote Sensing Imagery

Abstract

1. Introduction

- Diversity of aquaculture areas in remote sensing imagery. Aquaculture types differ between regions, and cage and raft cultures vary in size, colour, shape, and scale. This makes generalization across large study areas challenging, so the spatial distribution of samples is an essential research factor.

- Complex background interference from land and sea. The open-ocean background of aquaculture is relatively simple, but offshore aquaculture areas show complex sea–land interlacing. Cages and rafts also appear in tidal flats and ponds on land. Diverse and comprehensive samples are therefore key to avoiding sea–land interference in aquaculture extraction.

- Boundaries of aquaculture areas are difficult to extract accurately. Seawater may randomly submerge the edges of cage and raft cultures, so boundaries are not perfectly straight and irregular edges appear. Combined with complex imaging factors, this makes it hard to extract the precise boundaries of aquaculture areas.

- We constructed the sample database with a balanced spatial distribution. Because aquaculture areas differ in size, colour, and shape between regions, we labelled representative samples covering each region so that the model generalizes well at large scale across all regions.

- We expanded the sample database by adding easily confused land objects as negative samples. Land objects that the model tends to misdetect against complex land backgrounds are labelled as negative samples, which suppresses their interference with aquaculture extraction in land areas.

- We designed a multi-scale-fusion superpixel segmentation optimization module. To address inaccurate boundaries in the extraction results, we combine the edge sensitivity of superpixel segmentation with the feature abstraction of semantic segmentation networks. This improves the accuracy of boundary identification and the overall accuracy of aquaculture extraction.

- Using 640 scenes of Gaofen-2 satellite imagery, we extracted cage and raft culture areas near the coastline of mainland China, covering a zone extending 30 km outward from the coastline. The overall accuracy was satisfactory and supports statistics on aquaculture area and target counts. Compared with other mainstream methods, the proposed CANet achieved the highest mean F1 (94.64) and mean IoU (90.91).
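The idea behind the superpixel optimization contribution can be illustrated with a simple majority vote: each pixel takes the most common predicted class inside its superpixel, so the predicted boundary snaps to superpixel edges. This is only a single-scale sketch under stated assumptions, not the multi-scale-fusion module itself. The function name `refine_with_superpixels`, the class codes, and the toy arrays are hypothetical; in practice the superpixel labels might come from an SLIC implementation such as `skimage.segmentation.slic`.

```python
from collections import Counter, defaultdict


def refine_with_superpixels(class_map, superpixels):
    """Assign every pixel the majority predicted class of its superpixel.

    class_map:   2D list of predicted class ids (one per pixel).
    superpixels: 2D list of superpixel ids with the same shape.
    """
    votes = defaultdict(Counter)
    for pred_row, sp_row in zip(class_map, superpixels):
        for cls, sp in zip(pred_row, sp_row):
            votes[sp][cls] += 1
    # Most frequent class per superpixel (hypothetical fusion rule).
    winner = {sp: counts.most_common(1)[0][0] for sp, counts in votes.items()}
    return [[winner[sp] for sp in sp_row] for sp_row in superpixels]


# Toy 4x4 prediction with a ragged boundary: 0 = background, 2 = raft culture.
class_map = [
    [0, 0, 2, 2],
    [0, 2, 2, 2],
    [0, 0, 0, 2],
    [0, 0, 2, 2],
]
# Two superpixels split along a straight vertical edge.
superpixels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

refined = refine_with_superpixels(class_map, superpixels)
for row in refined:
    print(row)
```

In the toy example the two stray pixels along the boundary are overruled by their superpixels, leaving a clean vertical edge. A real pipeline would need to handle ties and superpixels that straddle thin structures.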

2. Materials and Methods

2.1. Study Area

2.2. Data Source

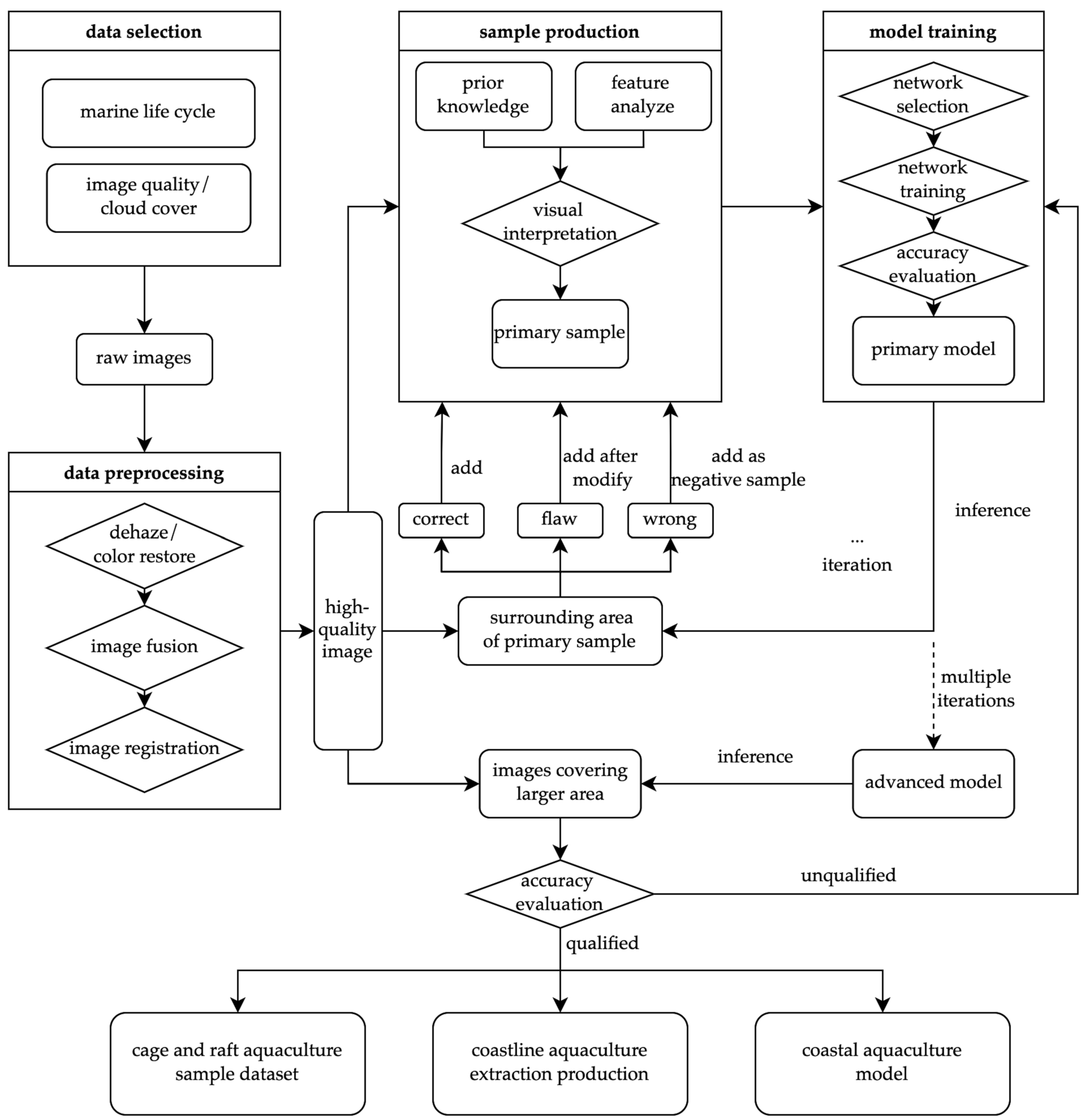

2.3. Overall Framework

2.4. Dataset

2.4.1. Data Processing

2.4.2. Samples

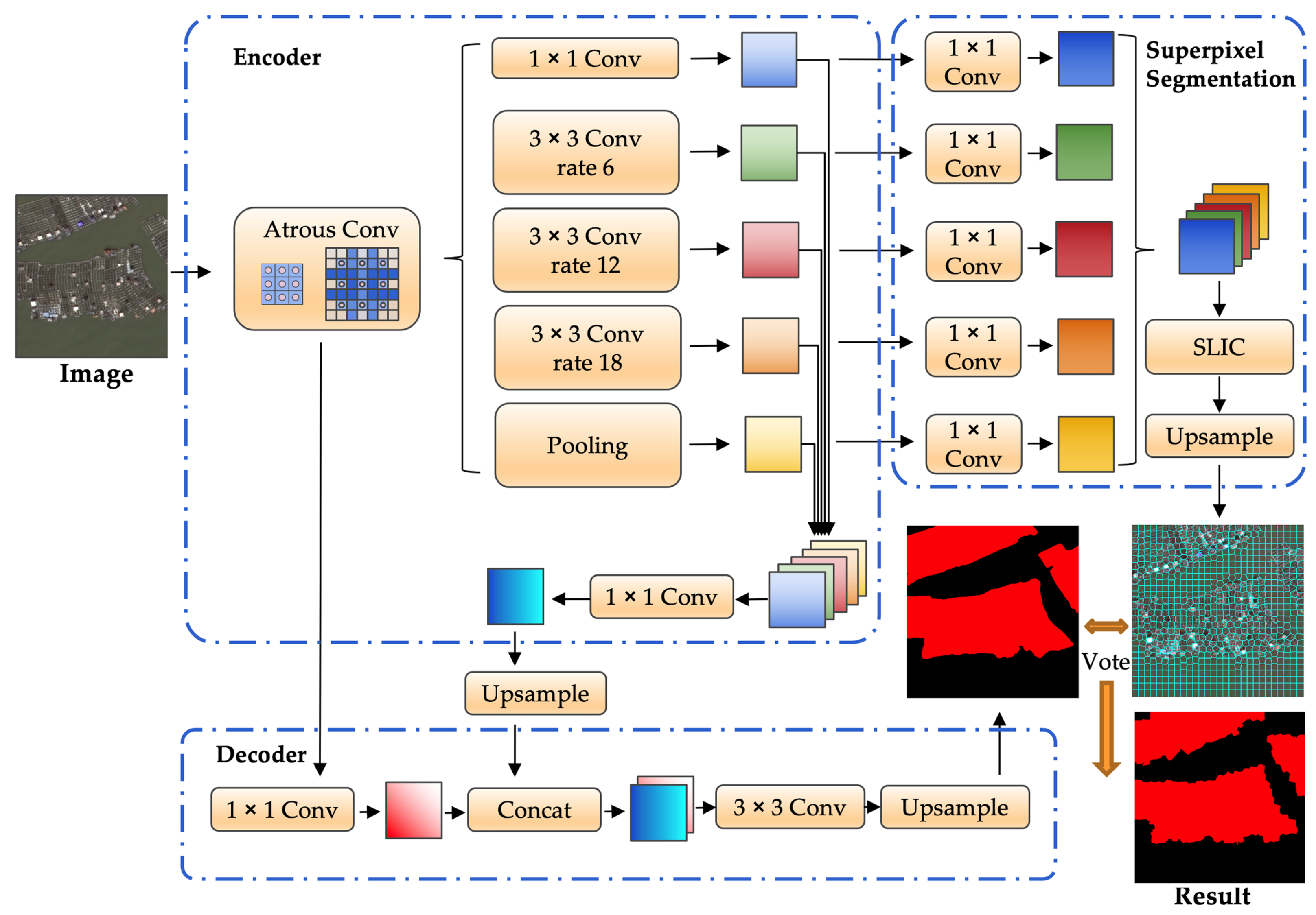

2.5. Coastal Aquaculture Network

2.5.1. Baseline

2.5.2. Superpixel Optimization

2.5.3. Network Architecture

2.5.4. Loss Function

2.6. Training Settings

2.7. Evaluation Metrics
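The abbreviations list names TP, FP, FN, F1, and IoU. A minimal sketch of how these quantities are conventionally related is given below, assuming the standard per-class definitions F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN). The function names and the pixel counts are hypothetical and are not taken from the experiments.

```python
def f1_score(tp, fp, fn):
    """F1 from pixel counts: harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def iou(tp, fp, fn):
    """Intersection over union from pixel counts."""
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


# Hypothetical counts for one class (for illustration only).
tp, fp, fn = 90, 5, 5
f1 = f1_score(tp, fp, fn)
jaccard = iou(tp, fp, fn)
print(f"F1 = {f1:.4f}, IoU = {jaccard:.4f}")
```

For a single set of counts the two metrics are tied by IoU = F1 / (2 - F1), so IoU is always the stricter of the two. That identity need not hold for averaged values such as a mean F1 and a mean IoU.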

3. Experimental Results

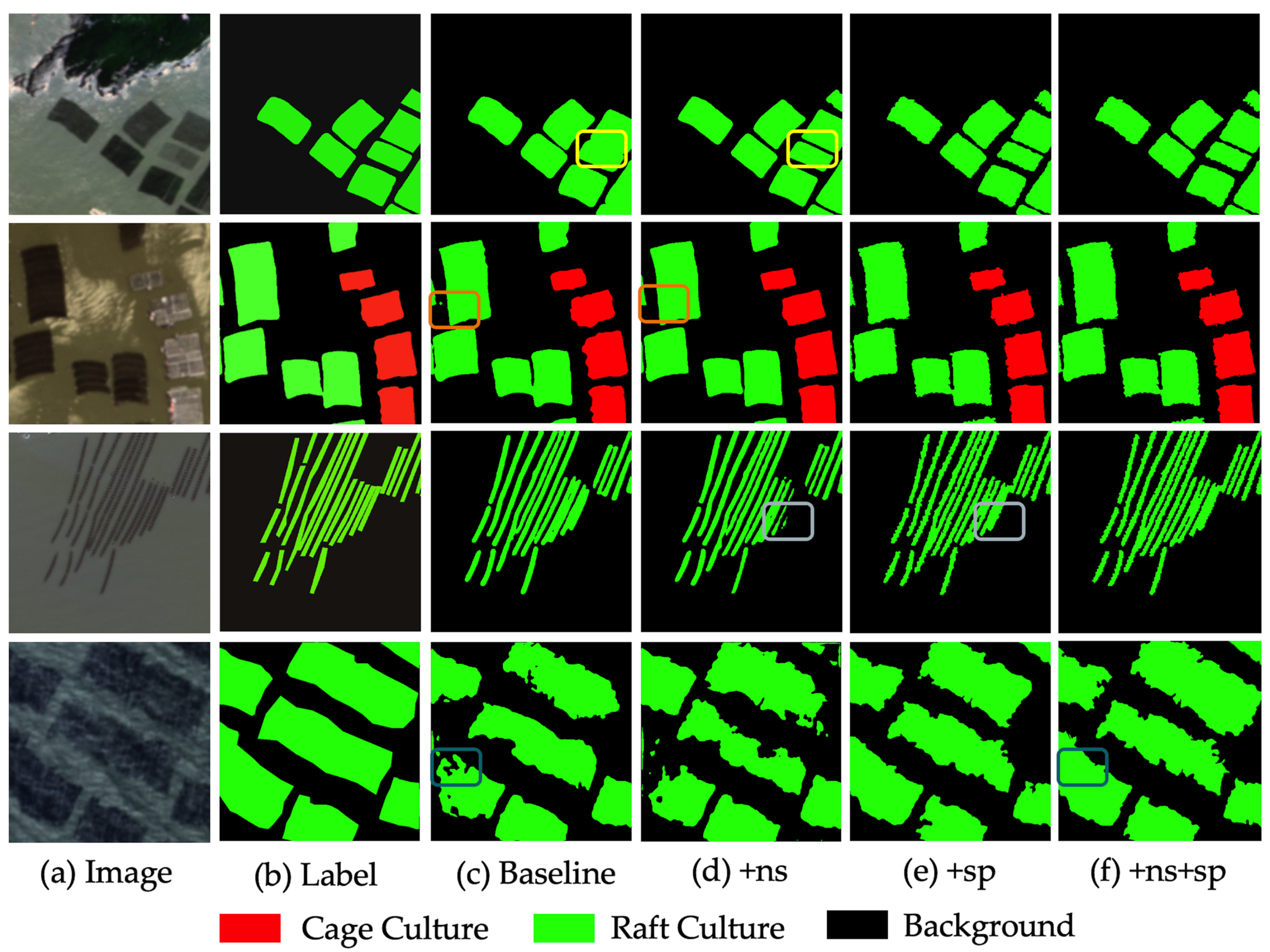

3.1. Ablation Study

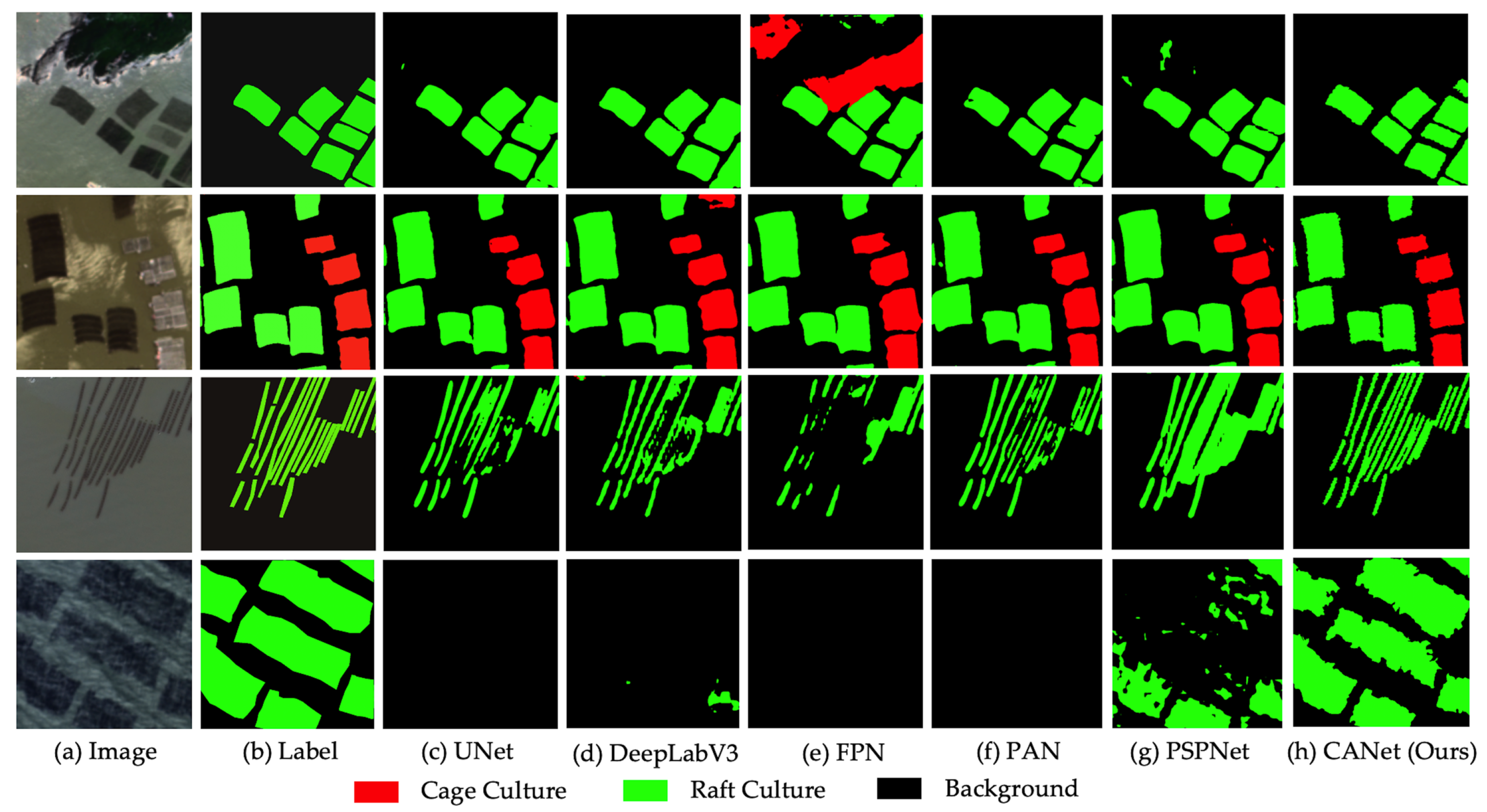

3.2. Comparing Methods

3.3. Large-Scale Mapping and Statistics

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CANet | coastal aquaculture network |
| SLIC | simple linear iterative clustering |
| F1 | F1 score |
| TP | true positive |
| FP | false positive |
| FN | false negative |
| IoU | intersection over union |
| ns | negative sample technology |
| sp | superpixel optimization technology |


| Area | Latitude | Average Water Depth | Climate | Average Annual Sunshine Hours |
|---|---|---|---|---|
| Jinzhou Bay in Liaoning | | 3∼9 m | temperate monsoon climate | 2200∼3000 |
| Sanggou Bay & Allen Bay in Shandong | | 5∼10 m | temperate marine monsoon climate | 2200∼3000 |
| Haizhou Bay in Jiangsu | | 5∼10 m | temperate monsoon climate | 2200∼3000 |
| Sansha Bay in Fujian | | >10 m | subtropical monsoon climate | 2200∼3000 |
| Zhenhai Bay in Guangdong | | 5∼7 m | subtropical monsoon climate | 2200∼3000 |
| Leizhou Bay in Guangdong | | 5∼7 m | tropical monsoon climate | 1400∼2000 |
| Qinzhou Bay in Guangxi | | 2∼18 m | subtropical marine monsoon climate | 2400∼2600 |
| South Bay in Hainan | | 2∼10 m | tropical marine monsoon climate | 2400∼2600 |

| Methods | Background F1 | Cage Culture F1 | Raft Culture F1 | Mean F1 | Mean IoU |
|---|---|---|---|---|---|
| baseline | 92.71 | 90.72 | 92.51 | 91.98 | 88.66 |
| +ns | 93.91 | 91.91 | 93.85 | 93.22 | 89.86 |
| +sp | 93.39 | 91.76 | 93.66 | 92.94 | 89.78 |
| +ns+sp | 95.49 | 92.55 | 95.87 | 94.64 | 90.91 |
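The Mean F1 column of the ablation table can be reproduced as the arithmetic mean of the three per-class scores, which suggests the per-class columns are F1 scores. The short check below makes that arithmetic explicit and reports the gain of each variant over the baseline. The numbers are copied from the table; the dictionary layout and function name are hypothetical, and reading the class columns as F1 is an inference from the arithmetic.

```python
# Per-class scores (background, cage, raft) and the reported mean F1,
# copied from the ablation table. "ns" = negative samples, "sp" = superpixel
# optimization, following the abbreviations list.
ablation = {
    "baseline": ((92.71, 90.72, 92.51), 91.98),
    "+ns": ((93.91, 91.91, 93.85), 93.22),
    "+sp": ((93.39, 91.76, 93.66), 92.94),
    "+ns+sp": ((95.49, 92.55, 95.87), 94.64),
}


def mean_f1(per_class):
    """Unweighted mean of the per-class scores, rounded as in the table."""
    return round(sum(per_class) / len(per_class), 2)


baseline_mean = ablation["baseline"][1]
gains = {}
for name, (per_class, reported) in ablation.items():
    computed = mean_f1(per_class)
    gains[name] = round(reported - baseline_mean, 2)
    print(f"{name:9s} computed={computed:.2f} reported={reported:.2f} "
          f"gain={gains[name]:+.2f}")
```

Under this reading, negative samples alone add 1.24 points of mean F1, superpixel optimization alone adds 0.96, and the two together add 2.66, which is more than the sum of the individual gains.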

| Methods | Background F1 | Cage Culture F1 | Raft Culture F1 | Mean F1 | Mean IoU |
|---|---|---|---|---|---|
| UNet | 92.38 | 89.66 | 92.71 | 91.58 | 86.77 |
| DeepLabV3 | 92.77 | 89.55 | 92.97 | 91.76 | 86.82 |
| FPN | 93.09 | 86.19 | 94.08 | 91.12 | 85.88 |
| PAN | 93.66 | 92.17 | 92.66 | 92.83 | 87.92 |
| PSPNet | 94.21 | 91.15 | 94.47 | 93.28 | 88.43 |
| CANet | 95.49 | 92.55 | 95.87 | 94.64 | 90.91 |

| Province | Aquaculture Area: Cage | Aquaculture Area: Raft | Aquaculture Area: Total | Number of Aquaculture Targets: Cage | Number of Aquaculture Targets: Raft | Number of Aquaculture Targets: Total |
|---|---|---|---|---|---|---|
| Liaoning | 3.99 | 671.20 | 675.19 | 947 | 51,285 | 52,232 |
| Hebei & Tianjin | 0.07 | 0.01 | 0.08 | 92 | 37 | 129 |
| Shandong | 6.79 | 564.16 | 570.95 | 2906 | 59,643 | 62,549 |
| Jiangsu | 2.29 | 653.93 | 656.21 | 2018 | 88,045 | 90,063 |
| Zhejiang & Shanghai | 1.89 | 54.78 | 56.67 | 1708 | 9552 | 11,260 |
| Fujian | 54.46 | 462.28 | 516.74 | 21,990 | 79,348 | 101,338 |
| Guangdong | 42.41 | 189.43 | 231.84 | 46,301 | 115,965 | 162,266 |
| Guangxi | 24.61 | 18.21 | 42.82 | 21,992 | 15,466 | 37,458 |
| Hainan | 3.13 | 0.73 | 3.86 | 2590 | 577 | 3167 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, J.; Bai, Y.; Chen, Z.; Shen, T.; Li, C.; Yang, X. A Convolutional Neural Network for Coastal Aquaculture Extraction from High-Resolution Remote Sensing Imagery. Sustainability 2023, 15, 5332. https://doi.org/10.3390/su15065332
