Corporate Sustainability Communication as ‘Fake News’: Firms’ Greenwashing on Twitter

Abstract

1. Introduction

2. Theoretical Background

2.1. Greenwashing by Organizations

2.2. Greenwashing as Fake News: Conceptualization

2.3. Detecting Greenwashing

2.4. Relating Greenwashing to Organizational Financial Market Performance

3. Methods

3.1. Data

3.2. Analyses

3.2.1. Selecting Tweets Related to Environmental Sustainability

3.2.2. Linguistic Analysis

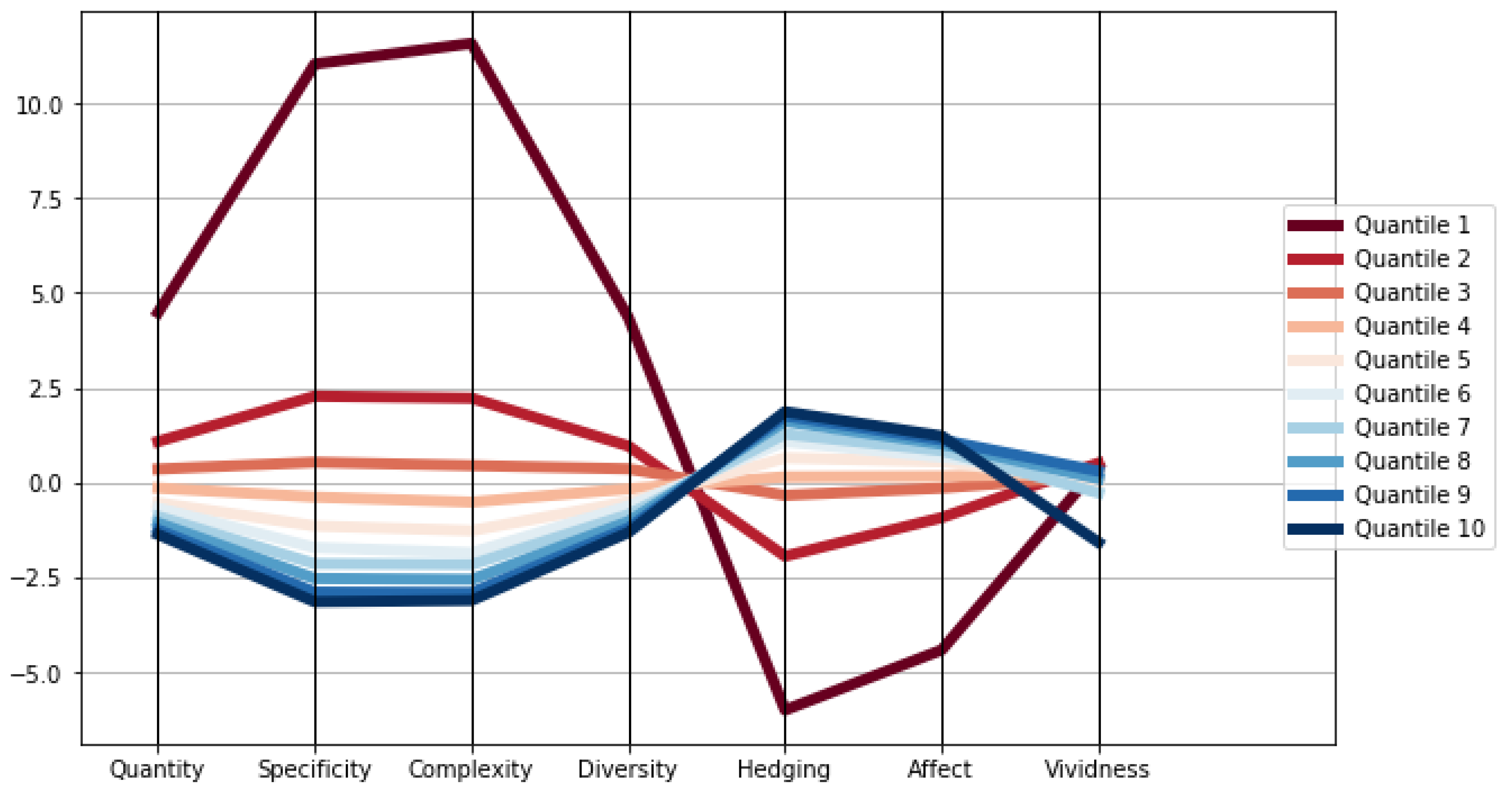

3.2.3. Profiling Greenwashing

3.2.4. Relating Greenwashing to Financial Market Performance

4. Results

5. Robustness Tests

5.1. Robustness Tests for RQ1: Validating our Greenwashing Detection Method

5.2. Robustness Tests for RQ2: Relating Greenwashing to Financial Market Performance

6. Discussion and Implications

6.1. Theoretical Implications

6.2. Methodological Implications

6.3. Empirical Implications

6.4. Implications for Policy and Practice

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Etter, M. Broadcasting, reacting, engaging—Three strategies for CSR communication in Twitter. J. Commun. Manag. 2014, 18, 322–342. [Google Scholar] [CrossRef]

- Orazi, D.C.; Chan, E.Y. “They did not walk the green talk!:” How information specificity influences consumer evaluations of disconfirmed environmental claims. J. Bus. Ethics 2020, 163, 107–123. [Google Scholar] [CrossRef] [Green Version]

- Gatti, L.; Pizzetti, M.; Seele, P. Green lies and their effect on intention to invest. J. Bus. Res. 2021, 127, 228–240. [Google Scholar] [CrossRef]

- Szabo, S.; Webster, J. Perceived greenwashing: The effects of green marketing on environmental and product perceptions. J. Bus. Ethics 2021, 171, 719–739. [Google Scholar] [CrossRef]

- de Freitas Netto, S.V.; Sobral, M.F.F.; Ribeiro, A.R.B.; Soares, G.R.D.L. Concepts and forms of greenwashing: A systematic review. Environ. Sci. Eur. 2020, 32, 1–12. [Google Scholar] [CrossRef] [Green Version]

- Kim, E.-H.; Lyon, T.P. Greenwash vs. brownwash: Exaggeration and undue modesty in corporate sustainability disclosure. Organ. Sci. 2015, 26, 705–723. [Google Scholar] [CrossRef] [Green Version]

- Lyon, T.P.; Montgomery, A.W. The means and end of greenwash. Organ. Environ. 2015, 28, 223–249. [Google Scholar] [CrossRef]

- Marquis, C.; Toffel, M.W.; Zhou, Y. Scrutiny, norms, and selective disclosure: A global study of greenwashing. Organ. Sci. 2016, 27, 483–504. [Google Scholar] [CrossRef] [Green Version]

- Lyon, T.P.; Montgomery, A.W. Tweetjacked: The impact of social media on corporate greenwash. J. Bus. Ethics 2013, 118, 747–757. [Google Scholar] [CrossRef]

- Bowen, F.; Aragon-Correa, J.A. Greenwashing in corporate environmentalism research and practice: The importance of what we say and do. Organ. Environ. 2014, 27, 107–112. [Google Scholar] [CrossRef] [Green Version]

- Crilly, D.; Hansen, M.; Zollo, M. The grammar of decoupling: A cognitive-linguistic perspective on firms’ sustainability claims and stakeholders’ interpretation. Acad. Manag. J. 2016, 59, 705–729. [Google Scholar] [CrossRef]

- Torelli, R.; Balluchi, F.; Lazzini, A. Greenwashing and environmental communication: Effects on stakeholders’ perceptions. Bus. Strategy Environ. 2020, 29, 407–421. [Google Scholar] [CrossRef] [Green Version]

- Business for Social Responsibility. The State of Sustainable Business in 2019|Reports|BSR; Business for Social Responsibility: San Francisco, CA, USA, 2019; Available online: https://www.bsr.org/en/our-insights/report-view/the-state-of-sustainable-business-in-2019 (accessed on 15 May 2020).

- Atkinson, L.; Kim, Y. “I drink it anyway and I know I shouldn’t”: Understanding green consumers’ positive evaluations of norm-violating non-green products and misleading green advertising. Environ. Commun. 2015, 9, 37–57. [Google Scholar] [CrossRef]

- Baum, L.M. It’s not easy being green … or is it? A content analysis of environmental claims in magazine advertisements from the United States and United Kingdom. Environ. Commun. 2012, 6, 423–440. [Google Scholar] [CrossRef]

- De Jong, M.D.T.; Harkink, K.M.; Barth, S. Making green stuff? Effects of corporate greenwashing on consumers. J. Bus. Tech. Commun. 2018, 32, 77–112. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- TerraChoice. The Sins of Greenwashing: Home and Family Edition 2010; TerraChoice: Ottawa, ON, Canada, 2010; p. 22. Available online: https://www.map-testing.com/assets/files/2009-04-xx-The_Seven_Sins_of_Greenwashing_low_res.pdf (accessed on 10 May 2020).

- Carlson, L.; Grove, S.J.; Kangun, N. A content analysis of environmental advertising claims: A matrix method approach. J. Advert. 1993, 22, 27–39. [Google Scholar] [CrossRef]

- Sun, Z.; Zhang, W. Do government regulations prevent greenwashing? An evolutionary game analysis of heterogeneous enterprises. J. Clean. Prod. 2019, 231, 1489–1502. [Google Scholar] [CrossRef]

- Unruh, G.; Kiron, D.; Kruschwitz, N.; Reeves, M.; Rubel, H.; Meyer Zum Felde, A. Investing for a sustainable future: Investors care more about sustainability than many executives believe. MIT Sloan Manag. Rev. 2016, 57, 1–29. [Google Scholar]

- Siano, A.; Vollero, A.; Conte, F.; Amabile, S. “More than words”: Expanding the taxonomy of greenwashing after the Volkswagen scandal. J. Bus. Res. 2017, 71, 27–37. [Google Scholar] [CrossRef]

- Darke, P.R.; Ritchie, R.J.B. The defensive consumer: Advertising deception, defensive processing, and distrust. J. Mark. Res. 2007, 44, 114–127. [Google Scholar] [CrossRef]

- Pomering, A.; Johnson, L.W. Advertising corporate social responsibility initiatives to communicate corporate image: Inhibiting scepticism to enhance persuasion. Corp. Commun. Int. J. 2009, 14, 420–439. [Google Scholar] [CrossRef]

- Zinkhan, G.M.; Carlson, L. Green advertising and the reluctant consumer. J. Advert. 1995, 24, 1–6. [Google Scholar] [CrossRef]

- Davis, J.J. Ethics and environmental marketing. J. Bus. Ethics 1992, 11, 81–87. [Google Scholar] [CrossRef]

- Nyilasy, G.; Gangadharbatla, H.; Paladino, A. Perceived greenwashing: The interactive effects of green advertising and corporate environmental performance on consumer reactions. J. Bus. Ethics 2014, 125, 693–707. [Google Scholar] [CrossRef]

- Ewing, J. Daimler to Settle U.S. Emissions Charges for $2.2 Billion. The New York Times. 13 August 2020. Available online: https://www.nytimes.com/2020/08/13/business/daimler-emissions-settlement-us.html (accessed on 21 December 2020).

- Trefis Team. The Domino Effect of Volkswagen’s Emissions Scandal. Forbes. 28 September 2015. Available online: https://www.forbes.com/sites/greatspeculations/2015/09/28/the-domino-effect-of-volkswagens-emissions-scandal/ (accessed on 20 December 2020).

- George, J.F.; Carlson, J.R.; Valacich, J.S. Media selection as a strategic component of communication. MIS Q. 2013, 37, 1233–1251. [Google Scholar] [CrossRef]

- Zhou, L.; Burgoon, J.K.; Twitchell, D.P.; Qin, T.; Nunamaker, J.F., Jr. A comparison of classification methods for predicting deception in computer-mediated communication. J. Manag. Inf. Syst. 2004, 20, 139–166. [Google Scholar] [CrossRef]

- Feldman, L.; Hart, P.S. Climate change as a polarizing cue: Framing effects on public support for low-carbon energy policies. Glob. Environ. Change 2018, 51, 54–66. [Google Scholar] [CrossRef]

- Lefsrud, L.M.; Meyer, R.E. Science or science fiction? Professionals’ discursive construction of climate change. Organ. Stud. 2012, 33, 1477–1506. [Google Scholar] [CrossRef] [Green Version]

- Wittneben, B.B.F.; Okereke, C.; Banerjee, S.B.; Levy, D.L. Climate change and the emergence of new organizational landscapes. Organ. Stud. 2012, 33, 1431–1450. [Google Scholar] [CrossRef] [Green Version]

- Nemes, N.; Scanlan, S.J.; Smith, P.; Smith, T.; Aronczyk, M.; Hill, S.; Lewis, S.L.; Montgomery, A.W.; Tubiello, F.N.; Stabinsky, D. An Integrated Framework to Assess Greenwashing. Sustainability 2022, 14, 4431. [Google Scholar] [CrossRef]

- Bernard, J.-G.; Dennis, A.; Galletta, D.; Khan, A.; Webster, J. The Tangled Web: Studying Online Fake News. In Proceedings of the ICIS 2019, Munich, Germany, 15–18 December 2019; Available online: https://aisel.aisnet.org/icis2019/panels/panels/4 (accessed on 1 October 2022).

- Heede, R. Tracing anthropogenic carbon dioxide and methane emissions to fossil fuel and cement producers, 1854–2010. Clim. Change 2014, 122, 229–241. [Google Scholar] [CrossRef] [Green Version]

- Walker, K.; Wan, F. The harm of symbolic actions and green-washing: Corporate actions and communications on environmental performance and their financial implications. J. Bus. Ethics 2012, 109, 227–242. [Google Scholar] [CrossRef] [Green Version]

- Wu, M.-W.; Shen, C.-H. Corporate social responsibility in the banking industry: Motives and financial performance. J. Bank. Financ. 2013, 37, 3529–3547. [Google Scholar] [CrossRef]

- Okazaki, S.; Plangger, K.; West, D.; Menéndez, H.D. Exploring digital corporate social responsibility communications on Twitter. J. Bus. Res. 2020, 117, 675–682. [Google Scholar] [CrossRef]

- Khan, A.; Brohman, K.; Addas, S. The anatomy of ‘fake news’: Studying false messages as digital objects. J. Inf. Technol. 2022, 37, 122–143. [Google Scholar] [CrossRef]

- Wardle, C. The need for smarter definitions and practical, timely empirical research on information disorder. Digit. J. 2018, 6, 951–963. [Google Scholar] [CrossRef]

- Burgoon, J.K. Predicting veracity from linguistic indicators. J. Lang. Soc. Psychol. 2018, 37, 603–631. [Google Scholar] [CrossRef] [Green Version]

- Hauch, V.; Blandón-Gitlin, I.; Masip, J.; Sporer, S.L. Are computers effective lie detectors? A meta-analysis of linguistic cues to deception. Personal. Soc. Psychol. Rev. 2015, 19, 307–342. [Google Scholar] [CrossRef]

- Yang, J.; Basile, K. Communicating corporate social responsibility: External stakeholder involvement, productivity and firm performance. J. Bus. Ethics 2022, 178, 501–517. [Google Scholar] [CrossRef]

- Aguilera, R.V.; Waldman, D.A.; Siegel, D.S. Responsibility and organization science: Integrating micro and macro perspectives. Organ. Sci. 2022, 33, 483–494. [Google Scholar] [CrossRef]

- Li, W.; Li, W.; Seppänen, V.; Koivumäki, T. Effects of greenwashing on financial performance: Moderation through local environmental regulation and media coverage. Bus. Strategy Environ. 2023, 32, 820–841. [Google Scholar] [CrossRef]

- Testa, F.; Miroshnychenko, I.; Barontini, R.; Frey, M. Does it pay to be a greenwasher or a brownwasher? Bus. Strategy Environ. 2018, 27, 1104–1116. [Google Scholar] [CrossRef]

- Zhou, X.; Zafarani, R. A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Comput. Surv. 2020, 53, 1–40. [Google Scholar] [CrossRef]

- Burton, S.; Soboleva, A.; Daellenbach, K.; Basil, D.Z.; Beckman, T.; Deshpande, S. Helping those who help us: Co-branded and co-created Twitter promotion in CSR partnerships. J. Brand Manag. 2017, 24, 322–333. [Google Scholar] [CrossRef] [Green Version]

- Becker-Olsen, K.; Potucek, S. Greenwashing. In Encyclopedia of Corporate Social Responsibility; Idowu, S.O., Capaldi, N., Zu, L., Gupta, A.D., Eds.; Springer: Berlin, Germany, 2013; pp. 1318–1323. [Google Scholar] [CrossRef]

- Laufer, W.S. Social accountability and corporate greenwashing. J. Bus. Ethics 2003, 43, 253–261, JSTOR. [Google Scholar] [CrossRef]

- Delmas, M.A.; Burbano, V.C. The drivers of greenwashing. Calif. Manag. Rev. 2011, 54, 64–87. [Google Scholar] [CrossRef] [Green Version]

- Lyon, T.P.; Maxwell, J.W. Greenwash: Corporate environmental disclosure under threat of audit. J. Econ. Manag. Strategy 2011, 20, 3–41. [Google Scholar] [CrossRef]

- Sharma, K.; Qian, F.; Jiang, H.; Ruchansky, N.; Zhang, M.; Liu, Y. Combating fake news: A survey on identification and mitigation techniques. ACM Trans. Intell. Syst. Technol. 2019, 10, 21:1–21:42. [Google Scholar] [CrossRef]

- Mateo-Márquez, A.J.; González-González, J.M.; Zamora-Ramírez, C. An international empirical study of greenwashing and voluntary carbon disclosure. J. Clean. Prod. 2022, 363, 132567. [Google Scholar] [CrossRef]

- Seele, P.; Gatti, L. Greenwashing revisited: In search of a typology and accusation-based definition incorporating legitimacy strategies. Bus. Strategy Environ. 2017, 26, 239–252. [Google Scholar] [CrossRef]

- Chen, Y.-S.; Chang, C.-H. Greenwash and green trust: The mediation effects of green consumer confusion and green perceived risk. J. Bus. Ethics 2013, 114, 489–500. [Google Scholar] [CrossRef]

- Fabrizio, K.R.; Kim, E.-H. Reluctant Disclosure and Transparency: Evidence from Environmental Disclosures. Organ. Sci. 2019, 30, 1207–1231. [Google Scholar] [CrossRef]

- Kapantai, E.; Christopoulou, A.; Berberidis, C.; Peristeras, V. A systematic literature review on disinformation: Toward a unified taxonomical framework. New Media Soc. 2021, 23, 1301–1326. [Google Scholar] [CrossRef]

- Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–236. [Google Scholar] [CrossRef] [Green Version]

- Tandoc, E.C.; Lim, Z.W.; Ling, R. Defining “Fake News”: A typology of scholarly definitions. Digit. J. 2018, 6, 137–153. [Google Scholar] [CrossRef]

- Lazer, D.M.J.; Baum, M.A.; Benkler, Y.; Berinsky, A.J.; Greenhill, K.M.; Menczer, F.; Metzger, M.J.; Nyhan, B.; Pennycook, G.; Rothschild, D.; et al. The science of fake news. Science 2018, 359, 1094–1096. [Google Scholar] [CrossRef]

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151. [Google Scholar] [CrossRef]

- Chen, Y.; Conroy, N.J.; Rubin, V.L. Misleading online content: Recognizing clickbait as “false news”. In Proceedings of the 2015 ACM on Workshop on Multimodal Deception Detection, Seattle, WA, USA, 9–13 November 2015; pp. 15–19. [Google Scholar] [CrossRef]

- Zubiaga, A.; Aker, A.; Bontcheva, K.; Liakata, M.; Procter, R. Detection and resolution of rumours in social media: A survey. ACM Comput. Surv. 2018, 51, 32:1–32:36. [Google Scholar] [CrossRef] [Green Version]

- Ireton, C.; Posetti, J.; UNESCO. Journalism, “Fake News” and Disinformation: Handbook for Journalism Education and Training; United Nations, Educational, Scientific and Cultural Organization: London, UK, 2018; Available online: http://unesdoc.unesco.org/images/0026/002655/265552E.pdf (accessed on 10 May 2020).

- Zhou, X.; Zafarani, R. Network-based fake news detection: A pattern-driven approach. ACM SIGKDD Explor. Newsl. 2019, 21, 48–60. [Google Scholar] [CrossRef]

- Finn, K. BP Lied about Size of U.S. Gulf Oil Spill, Lawyers Tell Trial. Reuters, 30 September 2013. Available online: https://www.reuters.com/article/us-bp-trial-idUSBRE98T13U20130930 (accessed on 20 December 2020).

- Ferns, G.; Amaeshi, K. Fueling climate (in)action: How organizations engage in hegemonization to avoid transformational action on climate change. Organ. Stud. 2021, 42, 1005–1029. [Google Scholar] [CrossRef]

- Feng, S.; Banerjee, R.; Choi, Y. Syntactic Stylometry for Deception Detection. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Jeju Island, Republic of Korea, 8–14 July 2012; pp. 171–175. Available online: https://www.aclweb.org/anthology/P12-2034 (accessed on 28 May 2020).

- Zhou, X.; Jain, A.; Phoha, V.V.; Zafarani, R. Fake news early detection: A theory-driven model. Digit. Threat. Res. Pract. 2020, 1, 12:1–12:25. [Google Scholar] [CrossRef]

- Buller, D.B.; Burgoon, J.K. Interpersonal deception theory. Commun. Theory 1996, 6, 203–242. [Google Scholar] [CrossRef]

- Ekman, P. Telling Lies: Clues to Deceit in the Marketplace, Politics, and Marriage; W.W. Norton: New York, NY, USA, 2001. [Google Scholar]

- Fuller, C.M.; Biros, D.P.; Burgoon, J.; Nunamaker, J.F., Jr. An examination and validation of linguistic constructs for studying high-stakes deception. Group Decis. Negot. 2013, 22, 117–134. [Google Scholar] [CrossRef]

- Sporer, S.L. Reality monitoring and detection of deception. In The Detection of Deception in Forensic Contexts; Strömwall, L.A., Granhag, P.A., Eds.; Cambridge University Press, Cambridge Core: Cambridge, UK, 2004; pp. 64–102. [Google Scholar] [CrossRef]

- Vrij, A.; Edward, K.; Roberts, K.P.; Bull, R. Detecting deceit via analysis of verbal and nonverbal behavior. J. Nonverbal Behav. 2000, 24, 239–263. [Google Scholar] [CrossRef]

- Grice, P. Studies in the Way of Words; Harvard University Press: Cambridge, MA, USA, 1989. [Google Scholar]

- Ten Brinke, L.; Porter, S. Cry me a river: Identifying the behavioral consequences of extremely high-stakes interpersonal deception. Law Hum. Behav. 2012, 36, 469–477. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Johnson, M.K.; Raye, C.L. Reality monitoring. Psychol. Rev. 1981, 88, 67–85. [Google Scholar] [CrossRef]

- Steller, M.; Köhnken, G. Criteria-Based Content Analysis. In Psychological Methods in Criminal Investigation and Evidence; Raskin, D.C., Ed.; Springer Pub Co.: New York, NY, USA, 1989; pp. 217–245. [Google Scholar]

- Qin, T.; Burgoon, J.; Nunamaker, J.F., Jr. An exploratory study on promising cues in deception detection and application of decision tree. In Proceedings of the 37th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 5–8 January 2004; pp. 23–32. [Google Scholar] [CrossRef]

- Courtis, J.K. Corporate report obfuscation: Artefact or phenomenon? Br. Account. Rev. 2004, 36, 291–312. [Google Scholar] [CrossRef]

- Bachenko, J.; Fitzpatrick, E.; Schonwetter, M. Verification and implementation of language-based deception indicators in civil and criminal narratives. In Proceedings of the 22nd International Conference on Computational Linguistics (COLING 2008), Manchester, UK, 18–22 August 2008; pp. 41–48. [Google Scholar]

- Duran, N.D.; Hall, C.; Mccarthy, P.M.; Mcnamara, D.S. The linguistic correlates of conversational deception: Comparing natural language processing technologies. Appl. Psycholinguist. 2010, 31, 439–462. [Google Scholar] [CrossRef]

- Fuller, C.M.; Biros, D.P.; Wilson, R.L. Decision support for determining veracity via linguistic-based cues. Decis. Support Syst. 2009, 46, 695–703. [Google Scholar] [CrossRef]

- Bond, G.D.; Lee, A.Y. Language of lies in prison: Linguistic classification of prisoners’ truthful and deceptive natural language. Appl. Cogn. Psychol. 2005, 19, 313–329. [Google Scholar] [CrossRef]

- DePaulo, B.M.; Lindsay, J.J.; Malone, B.E.; Muhlenbruck, L.; Charlton, K.; Cooper, H. Cues to deception. Psychol. Bull. 2003, 129, 74–118. [Google Scholar] [CrossRef] [PubMed]

- Whissel, C. The dictionary of affect in language. In Emotion: Theory, Research and Experience: The Measurement of Emotions; Plutchik, R., Kellerman, H., Eds.; Academic Press: Cambridge, MA, USA, 1989; Volume 4. [Google Scholar]

- Gosselt, J.F.; van Rompay, T.; Haske, L. Won’t get fooled again: The effects of internal and external CSR Eco-labeling. J. Bus. Ethics 2019, 155, 413–424. [Google Scholar] [CrossRef] [Green Version]

- Parguel, B.; Benoît-Moreau, F.; Larceneux, F. How sustainability ratings might deter ‘greenwashing’: A closer look at ethical corporate communication. J. Bus. Ethics 2011, 102, 15. [Google Scholar] [CrossRef] [Green Version]

- Ekwurzel, B.; Boneham, J.; Dalton, M.W.; Heede, R.; Mera, R.J.; Allen, M.R.; Frumhoff, P.C. The rise in global atmospheric CO2, surface temperature, and sea level from emissions traced to major carbon producers. Clim. Change 2017, 144, 579–590. [Google Scholar] [CrossRef] [Green Version]

- Barnett, M.L.; Henriques, I.; Husted, B.W. Beyond good intentions: Designing CSR initiatives for greater social impact. J. Manag. 2020, 46, 937–964. [Google Scholar] [CrossRef]

- Egginton, J.F.; McBrayer, G.A. Does it pay to be forthcoming? Evidence from CSR disclosure and equity market liquidity. Corp. Soc. Responsib. Environ. Manag. 2019, 26, 396–407. [Google Scholar] [CrossRef]

- Jizi, M. The influence of board composition on sustainable development disclosure. Bus. Strategy Environ. 2017, 26, 640–655. [Google Scholar] [CrossRef]

- Garcia-Castro, R.; Francoeur, C. When more is not better: Complementarities, costs and contingencies in stakeholder management. Strateg. Manag. J. 2016, 37, 406–424. [Google Scholar] [CrossRef]

- Lu, J.; Herremans, I.M. Board gender diversity and environmental performance: An industries perspective. Bus. Strategy Environ. 2019, 28, 1449–1464. [Google Scholar] [CrossRef]

- Surroca, J.; Tribó, J.A.; Waddock, S. Corporate responsibility and financial performance: The role of intangible resources. Strateg. Manag. J. 2010, 31, 463–490. [Google Scholar] [CrossRef]

- McHaney, R.; George, J.F.; Gupta, M. An exploration of deception detection: Are groups more effective than individuals? Commun. Res. 2018, 45, 1103–1121. [Google Scholar] [CrossRef]

- Wang, W.; Hernandez, I.; Newman, D.A.; He, J.; Bian, J. Twitter analysis: Studying US weekly trends in work stress and emotion. Appl. Psychol. 2016, 65, 355–378. [Google Scholar] [CrossRef]

- MSCI. MSCI ESG KLD STATS: 1991–2015 Data Sets; MSCI: New York, NY, USA, 2016. [Google Scholar]

- Awaysheh, A.; Heron, R.A.; Perry, T.; Wilson, J.I. On the relation between corporate social responsibility and financial performance. Strateg. Manag. J. 2020, 41, 965–987. [Google Scholar] [CrossRef]

- Zhang, W.; Wang, Q.; Li, X.; Yoshida, T.; Li, J. DCWord: A novel deep learning approach to deceptive review identification by word vectors. J. Syst. Sci. Syst. Eng. 2019, 28, 731–746. [Google Scholar] [CrossRef]

- Castelo, S.; Almeida, T.; Elghafari, A.; Santos, A.; Pham, K.; Nakamura, E.; Freire, J. A topic-agnostic approach for identifying fake news pages. In Proceedings of the 2019 World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 975–980. [Google Scholar] [CrossRef] [Green Version]

- Pennebaker, J.W.; Boyd, R.L.; Jordan, K.; Blackburn, K. The Development and Psychometric Properties of LIWC2015; The University of Texas at Austin: Austin, TX, USA, 2015; Available online: https://repositories.lib.utexas.edu/handle/2152/31333 (accessed on 24 May 2020).

- Braun, M.T.; Van Swol, L.M. Justifications Offered, Questions Asked, and Linguistic Patterns in Deceptive and Truthful Monetary Interactions. Group Decis. Negot. 2016, 25, 641–661. [Google Scholar] [CrossRef]

- Ho, S.M.; Hancock, J.T.; Booth, C.; Liu, X. Computer-mediated deception: Strategies revealed by language-action cues in spontaneous communication. J. Manag. Inf. Syst. 2016, 33, 393–420. [Google Scholar] [CrossRef]

- Ludwig, S.; van Laer, T.; de Ruyter, K.; Friedman, M. Untangling a web of lies: Exploring automated detection of deception in computer-mediated communication. J. Manag. Inf. Syst. 2016, 33, 511–541. [Google Scholar] [CrossRef] [Green Version]

- Fuller, C.M.; Biros, D.P.; Twitchell, D.P.; Burgoon, J.K. An analysis of text-based deception detection tools. In Proceedings of the AMCIS 2006 Proceedings, Americas Conference on Information Systems, Acapulco, Mexico, 4–6 August 2006; Available online: http://aisel.aisnet.org/amcis2006/418 (accessed on 24 May 2020).

- Whissell, C. Using the Revised Dictionary of Affect in Language to Quantify the Emotional Undertones of Samples of Natural Language. Psychol. Rep. 2009, 105, 509–521. [Google Scholar] [CrossRef]

- Jaidka, K.; Zhou, A.; Lelkes, Y. Brevity is the soul of Twitter: The constraint affordance and political discussion. J. Commun. 2019, 69, 345–372. [Google Scholar] [CrossRef]

- Venkatraman, N. The concept of fit in strategy research: Toward verbal and statistical correspondence. Acad. Manag. Rev. 1989, 14, 423–444. [Google Scholar] [CrossRef]

- Sabherwal, R.; Chan, Y.E. Alignment between business and IS strategies: A study of prospectors, analyzers, and defenders. Inf. Syst. Res. 2001, 12, 11–33. [Google Scholar] [CrossRef]

- Chen, Y.-Y.; Huang, H.-L. Knowledge management fit and its implications for business performance: A profile deviation analysis. Knowl.-Based Syst. 2012, 27, 262–270. [Google Scholar] [CrossRef]

- Barki, H.; Rivard, S.; Talbot, J. An integrative contingency model of software project risk management. J. Manag. Inf. Syst. 2001, 17, 37–69. [Google Scholar] [CrossRef]

- Hult, G.T.M.; Ketchen, D.J.; Cavusgil, S.T.; Calantone, R.J. Knowledge as a strategic resource in supply chains. J. Oper. Manag. 2006, 24, 458–475. [Google Scholar] [CrossRef]

- Venkatraman, N.; Prescott, J.E. Environment-strategy coalignment: An empirical test of its performance implications. Strateg. Manag. J. 1990, 11, 1–23. [Google Scholar] [CrossRef] [Green Version]

- Vorhies, D.W.; Morgan, N.A. A configuration theory assessment of marketing organization fit with business strategy and its relationship with marketing performance. J. Mark. 2003, 67, 100–115. [Google Scholar] [CrossRef] [Green Version]

- Vorhies, D.W.; Morgan, N.A. Benchmarking marketing capabilities for sustainable competitive advantage. J. Mark. 2005, 69, 80–94. [Google Scholar] [CrossRef]

- Brett, J.M.; Olekalns, M.; Friedman, R.; Goates, N.; Anderson, C.; Lisco, C.C. Sticks and stones: Language, face, and online dispute resolution. Acad. Manag. J. 2007, 50, 85–99. [Google Scholar] [CrossRef] [Green Version]

- Jensen, M.L.; Lowry, P.B.; Burgoon, J.K.; Nunamaker, J.F. Technology dominance in complex decision making: The case of aided credibility assessment. J. Manag. Inf. Syst. 2010, 27, 175–201. [Google Scholar] [CrossRef]

- Jensen, M.L.; Lowry, P.B.; Jenkins, J.L. Effects of automated and participative decision support in computer-aided credibility assessment. J. Manag. Inf. Syst. 2011, 28, 201–233. [Google Scholar] [CrossRef]

- Berrone, P.; Fosfuri, A.; Gelabert, L. Does greenwashing pay off? Understanding the relationship between environmental actions and environmental legitimacy. J. Bus. Ethics 2017, 144, 363–379. [Google Scholar] [CrossRef]

- Breusch, T.S.; Pagan, A.R. A Simple Test for Heteroscedasticity and Random Coefficient Variation. Econometrica 1979, 47, 1287–1294. [Google Scholar] [CrossRef]

- Wooldridge, J.M. Econometric Analysis of Cross Section and Panel Data, 2nd ed.; MIT Press: Cambridge, MA, USA, 2010. [Google Scholar]

- Henriques, I.; Sadorsky, P. The relationship between environmental commitment and managerial perceptions of stakeholder importance. Acad. Manag. J. 1999, 42, 87–99. [Google Scholar] [CrossRef]

- Topal, İ.; Nart, S.; Akar, C.; Erkollar, A. The effect of greenwashing on online consumer engagement: A comparative study in France, Germany, Turkey, and the United Kingdom. Bus. Strategy Environ. 2020, 29, 465–480. [Google Scholar] [CrossRef]

- Lacroix, K.; Gifford, R. Psychological barriers to energy conservation behavior: The role of worldviews and climate change risk perception. Environ. Behav. 2018, 50, 749–780. [Google Scholar] [CrossRef] [Green Version]

- van der Linden, S. The social-psychological determinants of climate change risk perceptions: Towards a comprehensive model. J. Environ. Psychol. 2015, 41, 112–124. [Google Scholar] [CrossRef]

- MacKay, B.; Munro, I. Information warfare and new organizational landscapes: An inquiry into the Exxonmobil–Greenpeace dispute over climate change. Organ. Stud. 2012, 33, 1507–1536. [Google Scholar] [CrossRef]

- Vilone, G.; Longo, L. Explainable artificial intelligence: A systematic review. arXiv 2020, arXiv:abs/2006.00093. [Google Scholar]

- Kumar, N.; Venugopal, D.; Qiu, L.; Kumar, S. Detecting anomalous online reviewers: An unsupervised approach using mixture models. J. Manag. Inf. Syst. 2019, 36, 1313–1346. [Google Scholar] [CrossRef]

- Siering, M.; Koch, J.-A.; Deokar, A.V. Detecting fraudulent behavior on crowdfunding platforms: The role of linguistic and content-based cues in static and dynamic contexts. J. Manag. Inf. Syst. 2016, 33, 421–455. [Google Scholar] [CrossRef]

- Vo, N.; Lee, K. The rise of guardians: Fact-checking URL recommendation to combat fake news. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 275–284. [Google Scholar] [CrossRef]

- Altowim, Y.; Kalashnikov, D.V.; Mehrotra, S. Progressive approach to relational entity resolution. Proc. VLDB Endow. 2014, 7, 999–1010. [Google Scholar] [CrossRef] [Green Version]

- Asudeh, A.; Jagadish, H.V.; Wu, Y.; Yu, C. On detecting cherry-picked trendlines. Proc. VLDB Endow. 2020, 13, 939–952. [Google Scholar] [CrossRef] [Green Version]

- Castillo, C.; Mendoza, M.; Poblete, B. Information credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web—WWW ’11, Hyderabad, India, 28 March–5 April 2011; pp. 675–684. [Google Scholar] [CrossRef]

- Charrad, M.; Ghazzali, N.; Boiteau, V.; Niknafs, A. NbClust: An R package for determining the relevant number of clusters in a data set. J. Stat. Softw. 2014, 61, 1–36. [Google Scholar] [CrossRef] [Green Version]

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The rise of social bots. Commun. ACM 2016, 59, 96–104. [Google Scholar] [CrossRef] [Green Version]

- Han, Y.; Lappas, T.; Sabnis, G. The importance of interactions between content characteristics and creator characteristics for studying virality in social media. Inf. Syst. Res. 2020, 31, 576–588. [Google Scholar] [CrossRef]

- Hoffart, J.; Suchanek, F.M.; Berberich, K.; Weikum, G. YAGO2: A spatially and temporally enhanced knowledge base from Wikipedia. Artif. Intell. 2013, 194, 28–61. [Google Scholar] [CrossRef] [Green Version]

- Hopkins, B.; Skellam, J.G. A new method for determining the type of distribution of plant individuals. Ann. Bot. 1954, 18, 213–227. [Google Scholar] [CrossRef]

- Kang, B.; Deng, Y. The maximum Deng entropy. IEEE Access 2019, 7, 120758–120765. [Google Scholar] [CrossRef]

- Kassambara, M.A. Practical Guide to Cluster Analysis in R: Unsupervised Machine Learning, 1st ed.; STHDA, 2017; pp. 1–38. Available online: http://www.sthda.com (accessed on 4 March 2020).

- Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Intro to Cluster Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Mather, P.M. Computational Methods of Multivariate Analysis in Physical Geography; John Wiley & Sons: Hoboken, NJ, USA, 1976. [Google Scholar]

- McQueen, J.B. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability: Weather Modification; Cam, L.M.L., Neyman, J., Eds.; University of California Press: Berkeley, CA, USA, 1967; pp. 281–297. [Google Scholar]

- Nickel, M.; Murphy, K.; Tresp, V.; Gabrilovich, E. A review of relational machine learning for knowledge graphs. Proc. IEEE 2016, 104, 11–33.

- Rubin, V.L.; Chen, Y.; Conroy, N.K. Deception detection for news: Three types of fakes. Proc. Assoc. Inf. Sci. Technol. 2015, 52, 1–4.

- Shao, C.; Ciampaglia, G.L.; Varol, O.; Yang, K.-C.; Flammini, A.; Menczer, F. The spread of low-credibility content by social bots. Nat. Commun. 2018, 9, 4787.

- Sitaula, N.; Mohan, C.K.; Grygiel, J.; Zhou, X.; Zafarani, R. Credibility-based fake news detection. In Disinformation, Misinformation, and Fake News in Social Media: Emerging Research Challenges and Opportunities; Shu, K., Wang, S., Lee, D., Liu, H., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 163–182.

- Spirin, N.; Han, J. Survey on web spam detection: Principles and algorithms. ACM SIGKDD Explor. Newsl. 2012, 13, 50–64.

- Theocharis, Y.; Lowe, W.; van Deth, J.W.; García-Albacete, G. Using Twitter to mobilize protest action: Online mobilization patterns and action repertoires in the Occupy Wall Street, Indignados, and Aganaktismenoi movements. Inf. Commun. Soc. 2015, 18, 202–220.

- Thorndike, R.L. Who belongs in the family? Psychometrika 1953, 18, 267–276.

- Tibshirani, R.; Walther, G.; Hastie, T. Estimating the number of clusters in a data set via the gap statistic. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2001, 63, 411–423.

- Wardle, C.; Derakhshan, H. Information Disorder: Toward an Interdisciplinary Framework for Research and Policy Making (No. 162317GBR). Council of Europe. 2017. Available online: https://edoc.coe.int/en/media/7495-information-disorder-toward-an-interdisciplinary-framework-for-research-and-policy-making.html (accessed on 10 May 2020).

- Wu, Y.; Ngai, E.W.T.; Wu, P.; Wu, C. Fake online reviews: Literature review, synthesis, and directions for future research. Decis. Support Syst. 2020, 132, 113280.

- Ye, J.; Skiena, S. Mediarank: Computational ranking of online news sources. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2469–2477.

- Zannettou, S.; Sirivianos, M.; Blackburn, J.; Kourtellis, N. The web of false information: Rumors, fake news, hoaxes, clickbait, and various other shenanigans. J. Data Inf. Qual. 2019, 11, 10:1–10:37.

- Zhang, D.; Zhou, L.; Kehoe, J.L.; Kilic, I.Y. What online reviewer behaviors really matter? Effects of verbal and nonverbal behaviors on detection of fake online reviews. J. Manag. Inf. Syst. 2016, 33, 456–481.

- Zhou, L.; Burgoon, J.K.; Nunamaker, J.F.; Twitchell, D. Automating linguistics-based cues for detecting deception in text-based asynchronous computer-mediated communication. Group Decis. Negot. 2004, 13, 81–106.

| Cue | Indicator(s) | Valence | Measurement Software |
|---|---|---|---|
| Quantity | | Truthful; Truthful | LIWC |
| Specificity | | Truthful; Truthful; Deceptive | LIWC |
| Complexity | | Truthful; Truthful; Truthful | Custom-written Python code |
| Diversity | | Truthful; Truthful | LIWC |
| Hedging/uncertainty | | Deceptive; Deceptive; Deceptive; Deceptive; Truthful | LIWC |
| Affect | | Deceptive; Deceptive; Deceptive; Truthful | LIWC |
| Vividness/dominance | | Deceptive; Deceptive | Whissel Dictionary |

| Quantile Range | No. of Observations | Average Greenwashing Score | Example Tweet |
|---|---|---|---|
| 1 (low greenwashing) | 8223 | 75.15 | New forecast predicts #oilsands output in #Alberta will more than triple by 2030, to 5M barrels a day http://on.wsj.com/KjcUjF (accessed on 15 April 2020) Oil & Gas firm (score: 75.62) |
| 2 | 8223 | 77.64 | |
| 3 | 8222 | 82.94 | |
| 4 | 8223 | 83.95 | [Company] sources battery cells from carbon-neutral production for the first time. That’s significantly more than 30% savings on the carbon footprint of the entire battery of future models. #Sustainability Auto firm (score: 84.88) |
| 5 | 8222 | 84.79 | |
| 6 | 8223 | 86.50 | |
| 7 | 8222 | 87.12 | Read about #[company’s] commitment to a #lowcarbon future http:// [company website] Oil & Gas firm (score: 91.24) |
| 8 | 8223 | 88.75 | |
| 9 | 8222 | 89.52 | |
| 10 (high greenwashing) | 8223 | 91.11 | |
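The quantile table groups tweets into ten rank-ordered bins of nearly equal size and reports the average greenwashing score in each. A minimal Python sketch of that kind of summary follows. The function name `decile_summary`, the integer-boundary splitting rule, and the demo scores are assumptions made for illustration; the binning procedure the authors actually used is not described in this part of the article.

```python
from statistics import mean


def decile_summary(scores, n_bins=10):
    """Split scores into n_bins rank-ordered groups of near-equal size.

    Hypothetical helper: returns one dict per bin with its rank,
    number of observations, and mean score.
    """
    if len(scores) < n_bins:
        raise ValueError("need at least one score per bin")
    ordered = sorted(scores)
    n = len(ordered)
    summary = []
    for b in range(n_bins):
        # Integer boundaries spread the remainder across bins,
        # so bin sizes differ by at most one observation.
        start = b * n // n_bins
        end = (b + 1) * n // n_bins
        group = ordered[start:end]
        summary.append({"quantile": b + 1, "n": len(group), "mean": mean(group)})
    return summary


if __name__ == "__main__":
    # Synthetic scores for demonstration only; these are not the study's data.
    demo_scores = [70 + 0.25 * i for i in range(95)]
    for row in decile_summary(demo_scores):
        print(row["quantile"], row["n"], round(row["mean"], 2))
```

Because the bins are built from the sorted scores, the bin means are non-decreasing from quantile 1 to quantile 10, which matches the pattern of averages in the table.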

| Dependent Variable: Share Price | | | | |
|---|---|---|---|---|
| Variables | Model (1) | Model (2) | Model (3) | Model (4) |
| Greenwashing (GW) | | −0.47 ** | | −0.77 ** |
| | | (0.04) | | (0.07) |
| ESG Controversies (ESGC) | | | −0.05 ** | −1.10 ** |
| | | | (0.00) | (0.18) |
| GW × ESGC | | | | 0.24 ** |
| | | | | (0.04) |
| Industry (0 = Auto, 1 = Oil) | −0.48 | −0.51 | −0.89 * | −0.92 * |
| | (0.33) | (0.33) | (0.36) | (0.37) |
| Region (0 = NA, 1 = Global) | −0.58 | −0.57 | −0.68 | −0.63 |
| | (0.37) | (0.38) | (0.42) | (0.43) |
| Size (0 = B20; 1 = T20) | 0.76 * | 0.74 * | 0.40 | 0.36 |
| | (0.31) | (0.31) | (0.35) | (0.36) |
| Gross Income | −0.21 ** | −0.21 ** | −0.03 † | −0.05 * |
| | (0.01) | (0.01) | (0.02) | (0.02) |
| Return on Assets | 0.08 ** | 0.07 ** | 0.10 ** | 0.09 ** |
| | (0.00) | (0.00) | (0.00) | (0.00) |
| Operating Income | 0.39 ** | 0.40 ** | 0.28 ** | 0.29 ** |
| | (0.01) | (0.01) | (0.01) | (0.01) |
| Profit | 0.07 ** | 0.07 ** | −0.03 ** | −0.03 * |
| | (0.00) | (0.00) | (0.01) | (0.01) |
| Revenue | 0.05 ** | 0.06 ** | 0.19 ** | 0.20 ** |
| | (0.01) | (0.01) | (0.01) | (0.01) |
| Constant | 1.99 ** | 4.01 ** | 1.74 ** | 5.07 ** |
| | (0.43) | (0.47) | (0.50) | (0.60) |
| Observations | 29,271 | 29,271 | 19,791 | 19,791 |
| Number of Firms | 50 | 50 | 42 | 42 |
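When a regression includes a GW × ESGC interaction term, the greenwashing coefficient alone no longer gives the slope of share price on greenwashing; the slope depends on the level of ESG controversies. The sketch below works through that arithmetic using the larger-magnitude greenwashing coefficient (−0.77) and the interaction coefficient (0.24) from the table, on the assumption that both come from the same model. The function names are hypothetical, and the ESGC values passed in are illustrative because the scaling of the ESGC variable is not stated in this part of the article.

```python
def gw_marginal_effect(esgc, b_gw=-0.77, b_interaction=0.24):
    """Slope of the outcome on GW at a given ESGC level.

    In a linear model containing b_gw*GW + b_interaction*GW*ESGC,
    the derivative with respect to GW is b_gw + b_interaction*ESGC.
    """
    return b_gw + b_interaction * esgc


def esgc_break_even(b_gw=-0.77, b_interaction=0.24):
    """ESGC level at which the GW slope would reach zero (linear extrapolation)."""
    return -b_gw / b_interaction


if __name__ == "__main__":
    # Illustrative ESGC levels only; the variable's actual range is an assumption.
    for level in (0, 1, 2):
        print(level, round(gw_marginal_effect(level), 2))
    print("break-even ESGC:", round(esgc_break_even(), 2))
```

Under these assumptions the negative association between greenwashing and share price weakens as ESGC increases. Whether the break-even level lies inside the observed range of ESGC cannot be judged from the table alone.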

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oppong-Tawiah, D.; Webster, J. Corporate Sustainability Communication as ‘Fake News’: Firms’ Greenwashing on Twitter. Sustainability 2023, 15, 6683. https://doi.org/10.3390/su15086683
