Abstract

Deploying construction robots alongside workers presents the risk of unwanted forcible contact, a critical safety concern. To realize a semantic digital twin in which such contact-driven hazards can be monitored accurately, we present a single-shot deep neural network (DNN) model that can perform proximity and relationship detection simultaneously. Given that workers and construction robots must sometimes collaborate in close proximity, their relationship must be considered, along with proximity, before concluding that an event is a hazard. To address this issue, we leveraged a two-in-one DNN architecture called Pixel2Graph (i.e., object + relationship detection). The potential of this DNN architecture for relationship detection was confirmed by testing on real-site images, achieving 90.63% Recall@5 when object bounding boxes and classes were given. When integrated with existing proximity monitoring methods, single-shot visual relationship detection can enable the accurate identification of contact-driven hazards in a digital twin platform, an essential step in realizing sustainable and safe collaboration between workers and robots.

1. Introduction

The construction industry is increasingly turning its attention to robotic automation and digitization (or digital twinning), drawn by the promise of improved capital productivity and a solution to growing labor shortages. Recent changes in the market provide strong evidence for this shift. Major equipment manufacturers have begun retrofitting conventional equipment packages with autonomous kits (e.g., Exosystem™, Built Robotics, San Francisco, CA, USA [1]). Startups that recently entered the market are bringing novel robotic solutions to a range of construction jobs (e.g., Spot™, Boston Dynamics, Waltham, MA, USA [2]). McKinsey & Company reports that venture capital investment in the construction robotics space surpassed USD 5 billion in 2019, a 60-fold increase over 2009 [3]. Looking forward, the construction robotics market is expected to achieve a compound annual growth rate (CAGR) of more than 23% from 2020 to 2027 [4]. These are clear indicators of growing momentum behind the adoption of robotic innovation in construction.

While the adoption of construction robots is no longer in the distant future, there remains a critical safety issue when deploying robots alongside field workers. According to the Census of Fatal Occupational Injuries conducted by the U.S. Bureau of Labor Statistics, 3645 contact-driven fatalities were reported in the construction sector between 2009 and 2018, a record unmatched by any other industry (BLS 2009–2018) [5]. As evidenced by numerous accident (or near miss) reports, contact-driven hazards (e.g., struck-by and caught-in/between) arise easily, unexpectedly, and frequently in construction environments. This is a chronic phenomenon in construction sites where the site layout is unstructured and movements of entities are erratic. It is clear that combining mobile robots with workers on foot in a shared workspace elevates the risk of forcible collisions.

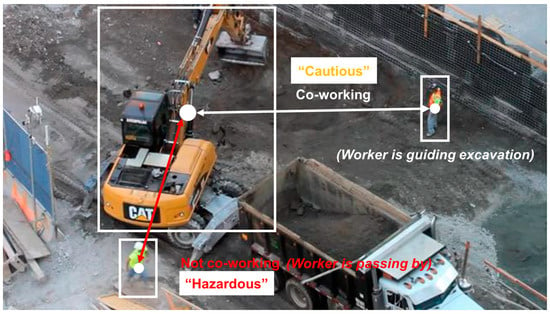

To sensibly identify and thus effectively intervene in potential contact-driven hazards, the authors call attention to the need for effective relationship detection along with proximity detection. The industry’s response to the issue of unwanted collisions has focused on proximity monitoring [6]. However, identifying a hazard based solely on proximity is sub-optimal. At times, field workers and robots are meant to collaborate with one another in close proximity. In these scenarios, proximity cannot be the sole determinant for a hazard, though it can be the precondition. To accurately identify a potential hazard, it is necessary to also consider the relationship of the associated entities to determine whether or not they are co-working, and therefore permitted to be in close proximity (Figure 1).

Figure 1. DNN-based visual relationship detection.

Within this context, we present the potential of deep neural network (DNN)-based single-shot visual relationship detection with the ability to infer relationships among construction objects directly from a site image (Figure 1). A DNN, when equipped with well-matched architecture, can integrate both local and global features into a single composite feature map, potentially allowing for intuitive relationship detection directly from a single image, much like a human-vision system [7]. In addition, when trained with a sufficient amount of data, a DNN can extract universal features that allow for scalable relationship detection across diverse construction environments [7]. Last but not least, the DNN-based method requires minimal hardware, enhancing its feasibility in real-world applications.

Despite the potential of DNN-based visual relationship detection, this area has received little to no attention from the construction-focused research community. In this study, we train and evaluate three DNN models for visual relationship detection, each posing a different level of task difficulty, to examine the potential of accurate single-shot visual relationship detection as the task becomes progressively harder.

This paper is organized as follows: Section 2 provides the focus and scope of this study. Section 3 explains the need for relationship detection in identifying contact-driven hazards in robotic construction. Section 4 clarifies our objectives and describes our methods in detail. Section 5 and Section 6 present the fine-tuning and test results, discussing the potential of single-shot visual relationship detection and our technical contribution. Finally, Section 7 presents our conclusions.

2. Clarification and Scope of This Study

2.1. Applying a DNN-Based Visual Artificial Intelligence for Onsite Hazard Identification

Numerous studies have addressed construction hazard identification. Based on the prior literature, current hazard identification methods can be largely classified into (i) predictive (or proactive) and (ii) retrospective (or reactive) [8]. Predictive methods include job safety analysis, task-demand assessments, and task-planning safety sessions, while accident report analyses, lessons learned, and safety checklists fall into the retrospective methods [8].

These methods are valid during the planning/scheduling stage (preconstruction) or daily safety training sessions, which are considered essential and fundamental for supporting workers’ hazard identification. However, as is well documented in prior studies [9,10], these methods, while essential, do not suffice. “Although useful, such methods often fail to include hazards associated with adjacent work and changes in scope, methods, or conditions”, as noted by [9]. In light of this, we shift our focus from manual approaches to advanced technology. As an additional measure to identify construction hazards, we explore the potential of visual artificial intelligence (AI) powered by a two-in-one deep neural network (DNN), which includes object and relationship detection.

During construction, multiple sources of distraction, including significant levels of noise, vibration, an unstructured layout, and dynamic uncertainties, can easily undermine workers’ perception of safety [9,11]. Prior studies have highlighted that it is highly dangerous to assume workers’ perception levels are stable and that workers are capable of identifying hazards amid all uncertainties. While several prior studies focus on directly improving workers’ perception level and hazard identification capabilities, this study attempts to use technology to fill the gap.

2.2. The Major Focus: Contact-Driven Hazards Involving Heavy Equipment/Robots

A study conducted at the University of Colorado, Boulder [8], presented energy-based cognitive mnemonics and a safety meeting maturity model, introducing connections between energy sources and construction hazards. Using this theory, a hazard can be viewed as a situation made of a complex combination of energy sources, including gravity, motion, mechanical, electrical, pressure, temperature, chemical, biological, radiation, and sound. We endorse this approach, as we believe it is often invalid to classify a hazard into generalized categories. Visual AI-based approaches may not be valid for identifying hazards caused by invisible energies such as mechanical, electrical, pressure, temperature, chemical, biological, radiation, and sound. However, with proximity measurement and relationship detection functionalities, visual AI could be effective in identifying dynamic hazards caused by the motions of mobile objects, such as heavy equipment and robots, which is the exclusive focus of this study.

The Occupational Safety and Health Administration (OSHA) in the US announced the “Focus Four Hazards” in construction: (i) falls, (ii) struck-by, (iii) caught-in/between, and (iv) electrocution [12]. According to this classification, our focus falls into the struck-by and caught-in/between categories, namely, contact-driven hazards.

2.3. Construction Heavy Equipment/Robot as a Major Target Source of Hazard

We observed a major trend in heavy construction equipment: the research and development efforts of major original equipment manufacturers (OEMs) are focused on retrofitting existing machines into semi-autonomous or fully autonomous robots by adding an AI-embodied hardware kit, while maintaining their original mechanical structure and appearance. Consequently, we can expect future construction robots, especially earthmoving equipment such as excavators, wheel loaders, or cranes, to retain the same structure and appearance, while being enhanced with AI for task automation. Although this research was conducted with existing heavy equipment, the outcomes are therefore expected to be applicable to semi-autonomous or fully autonomous construction equipment as well.

At the beginning of this study, we acknowledged that developing a comprehensive model covering all types of heavy equipment was not feasible, as it would require a vast number of diverse training images and labels. Since the objective of this study was not to develop a field-applicable model for industrial use, but rather to examine the potential of single-shot computer vision models, we narrowed our focus to excavators and earthmoving tasks, which are considered among the most significant resources and tasks in many large construction projects. This research will help determine future research directions for this field, and we expect the findings to remain applicable to other types of equipment, as the fundamentals of the models in terms of training and inferring visual scenes stay the same.

3. The Need for Relationship Detection for the Accurate Identification of Contact-Driven Hazards

Injuries and fatalities resulting from forcible contact between workers and mobile objects have been an endemic problem in unstructured and changing construction sites (BLS 2009–2018) [5]. The risk of forcible contact will be elevated in robotic construction environments, where mobile robots with varying degrees of autonomy will be active alongside workers on foot. In this section, we look back on the hazardous nature of construction, review the major research focus in this area (i.e., proximity monitoring), and clarify the need for relationship detection along with proximity for the accurate identification of contact-driven hazards. Lastly, we review previous approaches to relationship detection, identifying the specific knowledge gap that our research addresses.

3.1. The Risk of Contact-Driven Accidents in Robotic Construction

Construction takes place in a congested and unstructured environment. The workspace is crowded with various types of motorized resources (excavators, front-end loaders, bulldozers, haulers, etc.) as well as field workers. Their routes and movements tend to be erratic due to the unstructured and changing site layouts. In such an environment, field workers often find themselves in close proximity with motorized resources, with the attendant risk of forcible collision. The number of contact-driven fatalities in construction speaks directly to the pervasiveness of such hazards. Over the decade from 2009 to 2018, 3645 U.S. construction workers died as a result of being struck by (or caught between) equipment or a vehicle (BLS 2009–2018) [5]. Notably, this figure accounts for approximately 41% of total construction fatalities during this period (N = 8786) (BLS 2009–2018) [5].

A primary take-away of the above is that field workers will face a greater risk of contact-driven accidents when working in a robotic construction environment, with a variety of mobile robots working alongside them [13]. Earthmoving robots, such as autonomous excavators, front-end loaders, and haulers, will have routes and boundaries that overlap with field workers at times. More collaborative robots, such as those designed for bricklaying, rebar-tying, bolting, and drilling, will be located right next to workers. The close proximity of such robots raises the likelihood of accidents. Although these robots are equipped with safety functions (e.g., emergency stop), their situational awareness and intelligence remain limited [14,15]. In unstructured and changing construction environments, the chance that a robot misreads a scene and makes wrong decisions in path and movement planning remains high, which carries the attendant risk of fatal collisions. This risk to human safety significantly limits the current feasibility of construction robotics [13]. To realize effective and safe, and thereby sustainable, co-robotic construction, it is important and pressing to address such safety concerns.

3.2. Accident Prevention Has Focused on Proximity Monitoring

Monitoring the proximity of motorized resources to workers on foot has been the primary technique for preventing contact-driven accidents in construction [11,14,15]. Proximity monitoring enables the detection of potential hazards involving a worker within the action radius of a mobile object (equipment or robot) or within its planned route [14,15]. This in turn can allow for an immediate alert to be issued to the associated entities, making timely evasive actions possible [14,15]. As such, proactive proximity detection and intervention can effectively prevent a potential collision, forestalling a near-miss or worse from happening.

Proximity-based solutions have been continuously pursued in both commercial and academic fields. Technology developers have leveraged proximity sensors such as Radio Frequency Identification (RFID), Global Positioning System (GPS), and Radio Detection and Ranging (RADAR) to develop a variety of proximity monitoring and alert systems. Representative commercial products include the Proximity Alert System (PAS) by PBE Group Ltd., North Tazewell, VA, USA [16], EGOpro Safe Move by AME Ltd., Via Lucca, Florence, Italy [17], and Intelligent Proximity Alert System (IPAS) by KIGIS Ltd., Seoul, Republic of Korea [18].

Construction researchers have also examined the potential of computer vision as a complementary technology to proximity sensing. Earlier works [19,20] presented vision-based proximity monitoring frameworks that used hand-engineered features, such as the Histogram of Oriented Gradients (HOG). More recently, vision-based approaches have made large strides with the advancement of diverse DNNs and effective transfer learning techniques. Ref. [14] leveraged a deep Convolutional Neural Network (CNN), called You Only Look Once-V3 (YOLO-V3) [21], and developed a learning-based proximity monitoring method that is faster, more accurate, and more scalable than the hand-engineered method. A follow-up study [11] further enhanced this approach with prediction functionality by adding a trajectory prediction DNN based on a conditional Generative Adversarial Network (GAN) called Social-GAN (S-GAN) [22], demonstrating an average proximity error of less than one meter when predicting proximity 5.28 s into the future.

3.3. The Need for Relationship Detection

While proximity is a contributor to contact-driven accidents, solely relying on proximity when identifying a hazard has proven inadequate in practice. In construction, close proximity between a worker and a mobile object can arise unintentionally but can also happen naturally while they are collaborating (purposely interacting). Take, for example, two simple cases: (i) a bricklayer has just unwittingly entered the action radius of an autonomous excavator; and (ii) a bricklayer is finishing mortar joints immediately beside a semi-autonomous masonry robot piling up bricks. The first case is certainly a hazard where immediate intervention is called for. In contrast, the second is not, since these entities are meant to co-work at a close distance and the proximity is intended. As these examples demonstrate, proximity alone is not adequate to accurately define hazards in a construction setting. It is further necessary to consider the relationship between the worker and object, including and especially whether they are co-working or not. Without relationship detection, a hazard detection system that relies solely on proximity-based hazard identification would result in frequent nuisances and halts in operation, negatively impacting productivity. This approach is neither logical nor sustainable. As such, the importance of relationship detection will continue to increase in robotic construction environments where workers and robots increasingly work alongside one another.
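The decision logic argued for above can be written down as a small rule. The following is a minimal sketch, not the implementation used in this study: the function name, the `action_radius_m` parameter, and the example distances are hypothetical, and it assumes that a distance estimate and a co-working flag are already available from upstream proximity and relationship detectors.

```python
def is_contact_hazard(distance_m: float, action_radius_m: float, co_working: bool) -> bool:
    """Flag a hazard only when proximity is violated AND the pair is not co-working.

    Proximity is the precondition; the relationship decides whether the
    closeness is intended. All names and thresholds here are illustrative.
    """
    within_radius = distance_m <= action_radius_m
    return within_radius and not co_working


# Case (i): a bricklayer unwittingly inside an excavator's action radius.
print(is_contact_hazard(distance_m=3.0, action_radius_m=8.0, co_working=False))
# Case (ii): a bricklayer intentionally working beside a masonry robot.
print(is_contact_hazard(distance_m=0.8, action_radius_m=2.0, co_working=True))
```

Under this rule, a proximity-only system corresponds to always passing `co_working=False`, which is what would turn case (ii) into a nuisance alarm.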

3.4. Previous Approaches to Relationship Detection between Construction Objects

Despite the importance of relationship detection, it has received little attention in construction research, with only a small minority of studies attempting to address it. Ref. [23] worked on relationship detection between a worker and equipment—whether the worker is co-working with the equipment or not—by analyzing their positional and attentional cues. This study used the entities’ locations (i.e., the central coordinates of their bounding boxes) to describe their positional state, and their head poses (i.e., yaw, roll, and pitch angles), body orientations, and body poses (e.g., standing or bending) to determine attentional state. The attributes of every entity were then compared to one another to formulate a feature descriptor that represents the positional and attentional cues shared by the worker and the equipment (e.g., relative distance, direction, head yaw direction, and body orientation). This feature engineering has shown promising results when coupled with a Long Short-Term Memory (LSTM)-based binary classifier. Precision and recall higher than 90% were achieved in a test that used two construction videos. To our knowledge, this study is the first and only attempt to directly classify the relationship between construction objects based exclusively on information that can be captured visually. Ref. [23] can further be credited with pioneering the explicit engineering of the feature descriptor to optimize relationship detection.

Nonetheless, it must not be overlooked that the hand-engineered approach is highly likely to encounter scalability challenges in real field applications [24,25,26]. Specifically, this approach struggles to achieve consistent accuracy across varied site conditions and is not able to reflect all possible scenarios. As pointed out by previous studies, hand-engineering for feature extraction and description is often challenged by scene variances. This is a major issue given the extremely high degree of scene variance found in wild construction environments. Hand-engineered features were found to be easily misled under varying viewpoints. In addition, hand-engineered descriptors were not capable of abstracting all the scene contexts potentially required for relationship detection. Although the approach proved effective under certain conditions, it could not be scaled and was not sustainable for varied wild conditions. This highlights the need for an approach that allows for feature extraction and descriptions that can be universally applied across diverse construction environments.

Furthermore, we should not take for granted the premise that all pieces of information required for relationship detection will be available. Relationship detection is a semantic inference, which requires a comprehensive understanding of a scene. In turn, the existing approach to visual relationship detection requires the collection of multiple pieces of information for each entity (e.g., worker or equipment), such as location, pose, posture, movement direction, and speed. The study by [23] assumed such information as being given. However, in real world settings this information may not be given. Gathering multiple pieces of information from an ongoing construction project is extremely challenging. Multimodal sensing with multiple corresponding pieces of data analytics would be required, introducing complexity, multiple potential failure points, and significant expense, suggesting this approach may struggle to produce practical and sustainable solutions for industry. This paper presents a novel, easy-to-apply, and sustainable approach to this fundamental challenge. Our research addresses the question of how the semantics from a scene can be inferred in a more intuitive way, similar to the human vision system, rather than being explicitly inferred from fragmented pieces of information extracted from separate sensors.

4. Deep Neural Network-Powered Single-Shot Visual Relationship Detection

Pursuing the goal of more scalable and intuitive relationship detection, this study examined the potential of DNN-powered single-shot visual relationship detection with the ability to directly infer relationships between pairs of construction objects from a site image. A DNN with deep convolutional neural network (CNN) layers is capable of abstracting coarse-to-fine learned features of an input image. These learned features, when trained with a balanced set of data, can lead to more scalable relationship detection in diverse construction environments under varied imaging conditions [7]. In addition, a DNN with a flexible CNN architecture can integrate both local and global features into a composite feature map in a single step, which potentially allows intuitive relationship detection to be achieved directly from an input image, like a human-vision system, without relying on other sensing modalities and data analytics [7]. As such, it is worthwhile to investigate the potential of a DNN-powered model to deliver an effective solution for scalable and intuitive relationship detection.

To this end, we leveraged a unique DNN architecture, Pixel2Graph [7], specializing in multi-scale feature abstraction and single-shot relationship detection. We started with a baseline model pre-trained with a benchmark dataset, Visual Genome [27]. From this foundation, we developed multiple construction models, fine-tuning them with construction data. The quantity of the data used for fine-tuning was carefully matched to the complexity of the architecture. Lastly, we tested our models using a dataset of construction images that had not previously been seen by the models, examining their performance potential in single-shot visual relationship detection. Note that the validation for tuning hyper-parameters related to architecture, weight initialization, and the optimization algorithm was completed in a preceding study [7] and was thus excluded here.

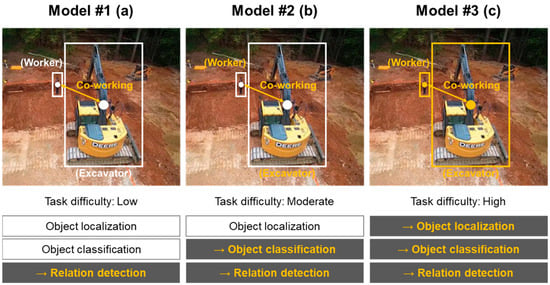

In this study, we developed three models featuring increasing levels of task difficulty, thereby examining the potential of DNN-powered single-shot relationship detection in a phased manner.

Model #1 (low level of difficulty)—Only Relationship Detection (OnlyRel, Figure 2a): Object bounding boxes (bboxes) and classes for all objects are provided, along with an input image. The model only infers their relationships.

Model #2 (moderate level of difficulty)—Object Classification + Relationship Detection (Cla-Rel, Figure 2b): Object bboxes for all objects are provided. The model classifies their classes and infers their relationships.

Model #3 (high level of difficulty)—Object Localization + Object Classification + Relationship Detection (Loc-Cla-Rel, Figure 2c): The model localizes and classifies all objects of interest and infers their relationships all at once.

Figure 2. Different levels of task difficulty: low, moderate, and high. The color white represents the given information, and the color orange represents “to be estimated”.
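The three task settings differ only in which pieces of information are supplied with the image and which must be estimated. A minimal sketch of that split is given below; the `TaskSetting` class and its field names are hypothetical and serve only to restate the three definitions above in a compact form.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSetting:
    name: str
    given: tuple      # information supplied together with the input image
    predicted: tuple  # information the model must estimate


TASKS = (
    TaskSetting("OnlyRel", given=("bboxes", "classes"), predicted=("relationships",)),
    TaskSetting("Cla-Rel", given=("bboxes",), predicted=("classes", "relationships")),
    TaskSetting("Loc-Cla-Rel", given=(), predicted=("bboxes", "classes", "relationships")),
)

for task in TASKS:
    print(f"{task.name}: given={task.given}, predicted={task.predicted}")
```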

The rest of this section describes (i) the network’s architecture; (ii) pre-training, fine-tuning, and test datasets; (iii) the fine-tuning process; and (iv) the evaluation metric.

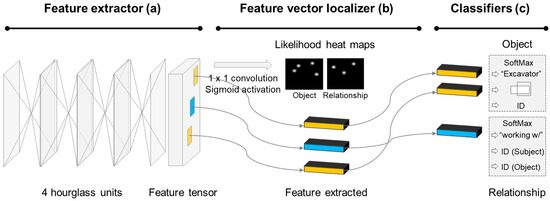

4.1. Pixel2Graph

Pixel2Graph [7] has a unique architecture consisting of three main modules: (i) a feature extractor (Figure 3a); (ii) a feature vector localizer (Figure 3b); and (iii) object and relationship classifiers (Figure 3c).

Figure 3. Network architecture of Pixel2Graph.

- Feature extractor (Figure 3a): The four hourglass network units stacked in a row take a whole image as the input and extract meaningful features from the unstructured input (i.e., 2D image) into a fixed-size 3D feature tensor. An hourglass network unit comprises multiple convolutional layers of varying sizes with skip connections, which together encode and decode the extracted features [7]. By repeating this abstraction process, the feature extractor gathers both global (e.g., the connection between background and foreground objects) and local features (e.g., the connection between foreground objects) into a single 3D feature tensor, which can be useful for relationship detection as well as for object detection [7].

- Feature vector localizer (Figure 3b): The feature vector localizer then specifies the potential locations of objects and their relationships on the image’s coordinates by analyzing the 3D feature tensor. The feature vector localizer generates likelihood heat-maps of objects and their relationships independently through 1 × 1 convolution and sigmoid activation, wherein each heat value represents the likelihood that an entity (i.e., object or relationship) exists at the given location [7]. Based on the specified locations, the corresponding feature vectors of interest are selected and analyzed.

- Classifier (Figure 3c): The corresponding feature vectors are fed into a fully connected layer and a softmax classifier, which make the final classifications of (i) subject class (e.g., a worker), (ii) relationship (e.g., is guiding), and (iii) object class (e.g., an excavator).
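The localization step described above (1 × 1 convolution, sigmoid activation, then selection of feature vectors at likely locations) can be illustrated with a toy example. This is a simplified sketch in plain Python, not the Pixel2Graph code: the 0.5 threshold, the weights, and the feature values are made-up assumptions, and a single heat-map is shown where the architecture produces separate ones for objects and relationships.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def heatmap_1x1(features, weights, bias):
    """Apply a 1 x 1 convolution plus sigmoid to an H x W x C feature tensor.

    A 1 x 1 convolution is a per-pixel dot product over the channel axis,
    so each output value depends only on the feature vector at that pixel.
    """
    return [
        [sigmoid(sum(w * f for w, f in zip(weights, pixel)) + bias) for pixel in row]
        for row in features
    ]


def select_locations(heatmap, threshold=0.5):
    """Return (row, col) positions whose likelihood exceeds the threshold."""
    return [
        (r, c)
        for r, row in enumerate(heatmap)
        for c, value in enumerate(row)
        if value > threshold
    ]


# Toy 2 x 2 feature tensor with 3 channels (values are made up).
features = [
    [[2.0, 0.0, 1.0], [-1.0, 0.5, 0.0]],
    [[0.0, -2.0, 0.0], [1.0, 1.0, 1.0]],
]
weights, bias = [1.0, 1.0, 1.0], -1.0  # hypothetical learned parameters

heatmap = heatmap_1x1(features, weights, bias)
locations = select_locations(heatmap)
# Feature vectors at the selected locations would be passed on to the classifiers.
selected_vectors = [features[r][c] for r, c in locations]
print(locations)
```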

Compared to existing visual relationship detection DNNs, which are mostly supported by a region proposal network (RPN), this architecture has several distinctive features. In particular, the feature extractor, comprising multiple hourglass units, enables the feature abstraction process to form both global and local features into a single feature tensor, which is more effective for understanding a scene as a whole [7]. In addition, the associative embedding with likelihood heat maps for objects and relationships allows for a single-shot, end-to-end process, which is capable of more cohesive and intuitive inferences about relationships. Further details of Pixel2Graph’s architecture can be found in the preceding study [7].

4.2. Construction Data Collection and Annotation

It is axiomatic in deep learning that the more diverse the images a model trains with, the higher the accuracy and scalability the model can achieve [28,29,30]. We thus collected a large volume of construction images and annotated them through a comprehensive inspection process. We collected videos from ongoing construction sites as well as from YouTube, covering a range of construction operations and backgrounds. We then sampled one image per second from each video, avoiding duplications in our dataset. To reduce the time and effort required for such a massive amount of annotation, we leveraged web-based crowdsourcing with Amazon Mechanical Turk (AMT). We devised an annotation template that links the sampled images to the AMT server. This template leads AMT workers to annotate each object’s bounding box, class label, and relationships to others (Figure 4). We then followed these annotations with a complete inspection, confirming their validity. Figure 4 shows examples of several such annotated images. On each image, we labeled the bboxes and the classes of construction objects of interest and paired them by annotating the relationships between each pair of objects.

Figure 4. Construction dataset: examples of annotated images.

Table 1 summarizes the details of the prepared construction dataset. A total of 150 construction videos were collected, each from a different site, from which 12,465 images were sampled at a rate of 1 sample per 30 frames, followed by annotation. This dataset comprised eight classes of objects: (i) worker; (ii) excavator; (iii) truck; (iv) wheel loader; (v) roller; (vi) grader; (vii) scraper; and (viii) car. Among those objects, four classes of relationship were identified and annotated: (i) guiding; (ii) adjusting; (iii) filling; and (iv) not working with. In total, 30,153 objects and 17,772 relationships among them were annotated. Following the standards set by previous DNN studies [21], we considered 3000 instances per class sufficient for training.

Table 1. Details of the annotated construction dataset.

From here, we took measures to simplify the problem. For the accurate identification of a contact-driven hazard, it is sufficient to identify whether two associated objects are co-working or not. It is not necessary to comprehend what the objects are doing. Therefore, our study focused specifically on identifying the co-working relationship between two objects. This allowed us to reorganize the four relationship classes into two, (i) co-working and (ii) not co-working, by considering the first three classes (i.e., guiding, adjusting, and filling) as co-working (Figure 4). In this dataset, a roughly even ratio between co-working and not co-working (i.e., 53:47) was maintained, thereby avoiding biased training (Table 1).
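The relabeling described above amounts to a fixed mapping from the four annotated relationship classes to two. A minimal sketch follows; the function names and the five-label example list are hypothetical and are not drawn from the dataset.

```python
# The three annotated classes that count as co-working.
CO_WORKING_LABELS = {"guiding", "adjusting", "filling"}


def to_binary_relationship(label: str) -> str:
    """Map one of the four annotated relationship classes to the binary label."""
    if label in CO_WORKING_LABELS:
        return "co-working"
    if label == "not working with":
        return "not co-working"
    raise ValueError(f"unknown relationship label: {label!r}")


def co_working_share(labels) -> float:
    """Fraction of labels that map to co-working, useful for checking class balance."""
    binary = [to_binary_relationship(label) for label in labels]
    return binary.count("co-working") / len(binary)


# Made-up labels for illustration only.
example = ["guiding", "filling", "not working with", "adjusting", "not working with"]
print([to_binary_relationship(label) for label in example])
print(co_working_share(example))
```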

4.3. Training Construction Models

We developed three construction models with different levels of task difficulty via transfer learning from a baseline model developed in the original study [7]: (i) Model #1, OnlyRel, (ii) Model #2, Cla-Rel, and (iii) Model #3, Loc-Cla-Rel. We started from the baseline model pre-trained with a universal dataset, Visual Genome, which is the most widely used benchmark dataset for developing visual relationship detection models. The Visual Genome dataset contains 108,077 images that capture 3.8 million objects and 2.3 million relationships [27]. All the parameters of an empty Pixel2Graph architecture were initialized with pre-learned weights and continued to be updated through fine-tuning with the construction dataset. All code for training, fine-tuning, validation, and testing was written in Python 3 and its packages, including tensorflow-gpu (1.3.0), numpy, h5py, and simplejson. The complete code is available in the original GitHub repository [31].

The annotated dataset developed for this project was divided into two separate sets of images. The first set, consisting of 11,082 images (89%), was used for fine-tuning, and the other set of 1383 images (11%) was held out for testing. While splitting the construction dataset into these two sets, we ensured that there was no overlap in terms of site backgrounds or contexts, thereby avoiding potential overestimation in the final testing.
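One common way to guarantee that no site appears in both sets is to split at the site level rather than the image level. The sketch below illustrates that idea under stated assumptions: the `split_by_site` function, the image and site identifiers, and the seed are hypothetical, and the fraction is applied to sites, so the resulting share of images only approximates it when sites contribute different numbers of images.

```python
import random


def split_by_site(image_sites, test_fraction=0.11, seed=0):
    """Split images so that every site falls entirely in one set.

    image_sites: dict mapping image_id -> site_id.
    Returns (train_ids, test_ids).
    """
    sites = sorted(set(image_sites.values()))
    rng = random.Random(seed)
    rng.shuffle(sites)
    n_test = max(1, round(len(sites) * test_fraction))
    test_sites = set(sites[:n_test])
    train_ids = [i for i, s in image_sites.items() if s not in test_sites]
    test_ids = [i for i, s in image_sites.items() if s in test_sites]
    return train_ids, test_ids


# Made-up example: 20 sites with 5 images each.
image_sites = {f"img_{s:02d}_{k}": f"site_{s:02d}" for s in range(20) for k in range(5)}
train_ids, test_ids = split_by_site(image_sites)
print(len(train_ids), len(test_ids))
```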

At the beginning of this research, we acknowledged that the number and diversity of the collected data might not suffice to achieve the best potential performance from the three models. Given this, to still assess the maximum performance potential of models trained with the available data, we assigned 89% of the data for training, assuming that the remaining 11%—approximately 15 videos, each from a different construction site—would still be adequate for testing and analysis.

4.4. Evaluation Metric

We adopted Recall@X, a representative evaluation metric widely used in visual relationship detection research [7]. Recall@X reports the fraction of ground truth tuples that appear among the top X predictions. Considering the diversity of the construction dataset, this study applied Recall@5.
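As a minimal sketch of how Recall@X can be computed, the function below counts how many ground truth tuples appear among the top X ranked predictions of each image. The data layout (dictionaries keyed by image ID, tuples of subject, relationship, and object) and the pooling of counts over all images are assumptions made for illustration; the original evaluation code may aggregate differently.

```python
def recall_at_k(ground_truth, ranked_predictions, k=5):
    """Fraction of ground truth tuples found among the top-k predictions.

    ground_truth: dict image ID -> list of (subject, relationship, object) tuples.
    ranked_predictions: dict image ID -> list of predicted tuples, best first.
    """
    hits = 0
    total = 0
    for image_id, gt_tuples in ground_truth.items():
        top_k = set(ranked_predictions.get(image_id, [])[:k])
        hits += sum(1 for gt in gt_tuples if gt in top_k)
        total += len(gt_tuples)
    return hits / total if total else 0.0
```

With `k=5`, a ground truth relationship counts as recalled only if the model ranks it within its five most confident predictions for that image.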

5. Results and Discussion

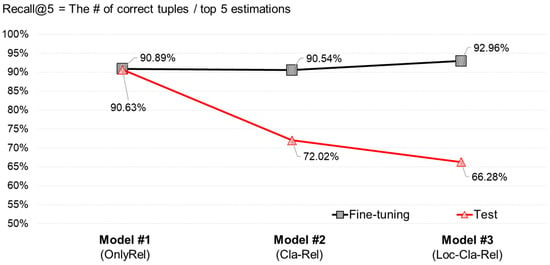

The results of fine-tuning were promising for all three models, as summarized in Figure 5. Overall, fine-tuning on the construction training dataset was successful, with Recall@5s on the fine-tuning dataset measured at over 90% for all three models. On the test dataset, Recall@5 rose as the task difficulty fell: 90.63%, 72.02%, and 66.28% for the OnlyRel (Model #1), Cla-Rel (Model #2), and Loc-Cla-Rel (Model #3) models, respectively.

Figure 5.

Recall@5s of three models based on fine-tuning and test datasets.

5.1. Model #1, OnlyRel: With Bboxes and Classes Given along with a 2D Image

The OnlyRel model has a low level of difficulty; it infers the relationship of each pair of entities (e.g., a worker and an excavator) that have predefined bboxes and classes. At this difficulty level, the fine-tuned model showed very promising results: it recorded 90.89% and 90.63% Recall@5s on the fine-tuning and test datasets, respectively (Figure 5). The negligible performance difference between the two datasets indicates no sign of overfitting: on the previously unseen test dataset, the model achieved essentially the same high level of accuracy as on the fine-tuning dataset. This result suggests that a DNN, if equipped with a well-fitted architecture (e.g., Pixel2Graph) and trained with sufficient data, can deliver accurate single-shot visual relationship detection. Given that the fine-tuned model inferred relationships with high accuracy from a single image, this result is noteworthy.

5.2. Model #2, Cla-Rel: With Bboxes Given along with a 2D Image

Compared to the OnlyRel model, the Cla-Rel model had a higher level of task difficulty. Given bboxes of target entities, it inferred their classes and relationships at the same time. The Cla-Rel model’s Recall@5 on the fine-tuning dataset was as high as that of the OnlyRel model, measured at 90.54% (Figure 5). This finding shows that the Cla-Rel model can be trained successfully on construction datasets. On the other hand, the Cla-Rel model recorded a Recall@5 of 72.02% on the test dataset, which is significantly lower than that of the OnlyRel model (90.63%). This result implies that relationship detection accuracy can be affected by the classification results of detected objects. The Cla-Rel model abstracts object classification-related information as well as relationship-related information into one composite feature tensor. Encoding multiple kinds of information in this way is more challenging in the current architecture than focusing on one specific form of information (e.g., only relationship-related information). Consequently, the object classification accuracy fell, which decreased the relationship detection performance.

5.3. Model #3, Loc-Cla-Rel with Only a 2D Image

The Loc-Cla-Rel model has the highest level of task difficulty. It is a two-in-one model that performs object detection (i.e., bbox localization and object classification) and relationship detection simultaneously in a single network.

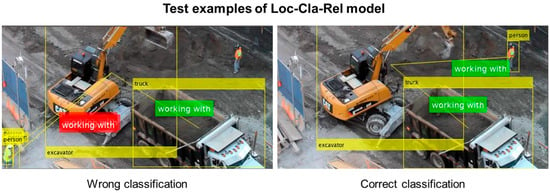

The Loc-Cla-Rel model achieved 92.96% and 66.28% Recall@5s on the fine-tuning and test datasets, respectively (Figure 5). As shown in Figure 6, the model’s Recall@5 continued to improve with the fine-tuning dataset during the tuning session, converging at around 92.96%. This result showed that the network’s architecture is capable of learning the situational context of a construction scene and has great potential for relationship inference between construction objects in a single-stage process. On the test dataset, however, the model could not reach the same level of performance, recording a Recall@5 of 66.28%. Figure 7 shows one incorrect and one correct classification example from the test dataset. The performance of the Loc-Cla-Rel model was evidently poorer than that of the other two models, necessitating further attention.

Figure 6.

Loc-Cla-Rel model’s Recall@5s for relationship detection during fine-tuning.

Figure 7.

Loc-Cla-Rel model’s test examples: wrong and correct classifications.

5.4. Limitations of the Current Study and Proposed Future Research Directions

While the potential of computer-vision-based, single-shot visual relationship detection was confirmed, it was also evident that the performances of the three models were insufficient for real-field applications. The 90.63% Recall@5 of Model #1 (OnlyRel) is still not high enough for safety monitoring, let alone the 66.28% Recall@5 of Model #3 (Loc-Cla-Rel). Based on this training result and by comparing the training and test accuracy patterns over training epochs, we identified the following points for improvement:

- Addressing overfitting with a greater number of training samples: Although the model (Loc-Cla-Rel) showed a steadily increasing performance with the test dataset during fine-tuning, it started to converge at an early stage (Figure 6). It was clear that learning the situational context along with object detection is more challenging. In particular, successful training for two-in-one detection (i.e., object and relationship detection) turned out to require a larger volume of fine-tuning data than is needed for the OnlyRel (Model #1) or Cla-Rel (Model #2) models. A significant discrepancy between the Recall@5s achieved on the fine-tuning and test datasets was confirmed, which is a typical symptom of overfitting (Figure 6). The Recall@5 achieved by the Loc-Cla-Rel model on the fine-tuning dataset was even higher than those achieved with the OnlyRel and Cla-Rel models, which further indicates that there was overfitting during fine-tuning. However, this result does not necessarily represent the maximum performance potential of a single-shot relationship detection model. The 92.96% Recall@5 on the fine-tuning dataset clearly shows that the model has high trainability but could not reach its maximum performance potential due to the limited amount of fine-tuning data and the resultant overfitting. We anticipate that a follow-up study featuring a fine-tuning dataset that is augmented in terms of both quantity and diversity will improve the Loc-Cla-Rel model’s performance. Recently, computational data synthesis and automated labeling with diverse graphic simulation engines (e.g., Blender, Unity, Omniverse) and physics models have emerged. This approach would allow us to create our own dataset under varying imaging conditions (e.g., illumination, viewpoint, and scale), which would be a valuable addition to follow-up studies. The more synthetic data we prepare for training, the more real data we can allocate for validation and testing. This approach would enable us to thoroughly assess models for real-field applications.

- Advancing DNN architecture: Lastly, an additional consideration that merits exploration is architecture modification. The original Pixel2Graph architecture integrates all learned features into one composite feature tensor. Branching this out into two separate tensors, with one tensor trained for object detection and the other for relationship detection, and with two separate cost functions, would provide another avenue to improving the two-in-one detection performance. Although the performance of the Loc-Cla-Rel model has not yet achieved sufficient accuracy for field applications, efforts to improve it have value given the inherent advantages of the two-in-one single-shot model.
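The discrepancy between fine-tuning and test performance discussed in the first point above can be made explicit with a short calculation. The sketch below uses only the Recall@5 values reported in this section; the dictionary layout and the function name are illustrative assumptions.

```python
# Recall@5 (%) reported in Section 5, as (fine-tuning dataset, test dataset).
REPORTED_RECALL_AT_5 = {
    "OnlyRel": (90.89, 90.63),
    "Cla-Rel": (90.54, 72.02),
    "Loc-Cla-Rel": (92.96, 66.28),
}


def generalization_gaps(results):
    """Return the fine-tuning minus test Recall@5 gap, in percentage points."""
    return {
        name: round(fine_tune - test, 2)
        for name, (fine_tune, test) in results.items()
    }


if __name__ == "__main__":
    for name, gap in generalization_gaps(REPORTED_RECALL_AT_5).items():
        print(f"{name}: {gap:.2f} percentage points")
```

The gap grows from 0.26 points for OnlyRel to 18.52 for Cla-Rel and 26.68 for Loc-Cla-Rel, which is consistent with the overfitting interpretation given above.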

6. Potential Use of Visual Relationship Detection and the Contribution of This Study

Along with proximity monitoring, relationship detection is essential for effective contact-driven hazard detection involving actuated robots or equipment. However, relationship detection, a semantic inference process, is not as straightforward as object detection and requires holistic scene understanding. One possible way to achieve relationship detection would be to determine the multiple attributes of associated entities (e.g., location, proximity, pose, action, and attention) and then infer their relationship from these collected attributes via a pre-defined logic. However, this approach presents numerous challenges in terms of its real-world feasibility and sustainability, including the requirement for multiple sensing modalities and data analytics. In addition, developing a scalable inference logic is challenging since the relationship between two entities can be defined in countless ways. Given the above, the results achieved with DNN-powered single-shot visual relationship detection, which can complete relationship detection directly from a single image, are worthy of note as a sustainable measure.

Single-shot visual relationship detection, either coupled with another object detection DNN or by itself, can provide an effective solution to the accurate identification of contact-driven hazards. As noted before, it is reasonable to identify an event as a hazard only if a worker is in proximity to an actuated robot/equipment (e.g., the action radius of a robot or equipment) with no intention of co-working. If the worker is within proximity of an actuated robot and has the intention of co-working, such an event can be marked with a caution, and the collaboration between the worker and the robot (or the equipment) allowed to continue.
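The decision rule described above can be sketched as a small function. The names `EventStatus` and `classify_event`, the metric distance input, and the `NO_EVENT` outcome for cases outside the action radius or with a non-actuated robot are assumptions added for illustration; the text itself only defines the hazard and caution cases.

```python
from enum import Enum


class EventStatus(Enum):
    NO_EVENT = "no event"  # assumed label for cases the text does not flag
    CAUTION = "caution"    # in proximity, but worker and robot are co-working
    HAZARD = "hazard"      # in proximity with no intention of co-working


def classify_event(distance_m, action_radius_m, co_working, robot_actuated=True):
    """Combine proximity and relationship information into an event status."""
    in_proximity = distance_m <= action_radius_m
    if not robot_actuated or not in_proximity:
        return EventStatus.NO_EVENT
    return EventStatus.CAUTION if co_working else EventStatus.HAZARD
```

For example, `classify_event(2.0, 5.0, co_working=False)` returns `EventStatus.HAZARD`, whereas the same distance with `co_working=True` returns `EventStatus.CAUTION` and the collaboration is allowed to continue.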

Accurate classification can be achieved through two different approaches: (i) first conduct proximity monitoring with an object detection DNN and then conduct relationship detection with the OnlyRel model; or (ii) conduct proximity monitoring and relationship detection simultaneously using the Loc-Cla-Rel model. The first approach is readily applicable. As demonstrated in our prior studies [11,14], proximity monitoring can be achieved with an object detection DNN along with image rectification for distance measurement. In turn, the OnlyRel model can perform single-shot relationship detection by taking the 2D image and the detected bboxes and classes as input. Hazard identification (i.e., whether an event is a hazard or merely a caution) can then be made directly from the proximity and relationship information. Given the high accuracy of the OnlyRel model (i.e., 90.63% Recall@5 on the test dataset) and the fact that object detection accuracy continues to rise with increased training data, the feasibility of this approach can be considered high.

The other approach is to leverage the Loc-Cla-Rel model for both proximity monitoring and relationship detection. Taking a 2D image as input, the Loc-Cla-Rel model can output target objects’ bboxes and classes and their relationships simultaneously. This approach can automate the overall process of hazard detection (both proximity monitoring and relationship detection) using simple image rectification. Training the Loc-Cla-Rel model was considerably more challenging than training the OnlyRel model, requiring a larger amount of training data and potentially calling for architecture modification. However, we consider it worth pursuing further improvements aimed at achieving the maximum performance potential of such a multi-in-one model, as this model promises to save significantly on computational costs in real-setting implementations.

To the best of our knowledge, this work is the first attempt to achieve single-shot visual relationship detection in the construction domain. Our model can directly infer the relationships among target objects by looking at a single image, like a human vision system. It can also be easily integrated with existing computer vision-based proximity monitoring methods without additional hardware. Integrating proximity monitoring and relationship detection into a single model will enable the accurate identification of contact-driven hazards, which in turn will enable safe and effective, and thereby sustainable, collaboration between workers and robots (or equipment).

Last but not least, we highlight the potential use of two-in-one DNN architecture in future construction studies to address digital twinning. Many visual site monitoring tasks (e.g., safety monitoring, progress monitoring, and quality control), or digital twinning tasks, may require multiple vision tasks, such as object detection, relationship detection, 2D/3D pose estimation, and semantic segmentation, all at once. The architectures for such tasks are not markedly different from one another, especially in the feature extraction stage. This suggests that there is a strong possibility of being able to manage multiple vision tasks within one composite architecture. By contrast, implementing these tasks in separate stages could introduce cumulative errors and would be computationally inefficient. Based on these potential advantages and the results achieved in this study, further investigation into multi-in-one solutions is warranted, especially for digital twinning studies.

7. Conclusions

Robotic automation and digitization have become central to construction innovation. It is anticipated that a variety of construction robots will be deployed on real-world construction sites in the near future. While the benefits from robotic solutions will be immense, the challenge of ensuring field worker safety will serve as a primary rate-limiter to industry adoption. Construction workers are central to the construction process and will remain so even in a highly automated robotic construction environment. Construction robots not only need to interact and collaborate with workers in situ, they also need to ensure the safety of workers in a sustainable manner. To this end, a solution that achieves the accurate identification of contact-driven hazards in a process that incorporates the identification of relationships between entities is a must. The pursuit of such a solution is the primary contribution of this study. The performances of the three models on the test dataset—90.63% for Model #1 (OnlyRel), 72.02% for Model #2 (Cla-Rel), and 66.28% for Model #3 (Loc-Cla-Rel)—would not suffice for field application. However, as more training data become available, and with continued advancements in new DNN architectures, cost functions, and training mechanisms, follow-up studies are anticipated to further improve the maximum performance potential of single-shot visual relationship detection.

Author Contributions

Conceptualization, D.K., S.L., V.R.K. and M.L.; data curation, D.K.; formal analysis, S.L.; funding acquisition, D.K. and S.L.; investigation, D.K. and S.L.; methodology, D.K., A.G., V.R.K. and M.L.; project administration, S.L.; resources, D.K.; supervision, S.L.; validation, D.K. and A.G.; visualization, D.K.; writing—original draft, D.K.; writing—review and editing, D.K., S.L., V.R.K. and M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported financially by a National Science Foundation (NSF) Award (No. IIS-1734266; ‘Scene Understanding and Predictive Monitoring for Safe Human-Robot Collaboration in Unstructured and Dynamic Construction Environments’) and a Natural Sciences and Engineering Research Council of Canada (NSERC) Award (Collaborative Research and Development Grants, 530550-2018, ‘BIM-Driven Productivity Improvements for the Canadian Construction Industry’).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Built Robotics. 2023. Available online: https://www.builtrobotics.com/ (accessed on 29 March 2023).

2. Boston Dynamics. 2023. Available online: https://bostondynamics.com/products/spot/ (accessed on 29 March 2023).

3. McKinsey & Company. Rise of the Platform Era: The Next Chapter in Construction Technology. 2020. Available online: https://www.mckinsey.com/ (accessed on 29 March 2023).

4. AMR (Allied Market Research). Construction Robotics Market Statistics. 2021. Available online: https://www.alliedmarketresearch.com/ (accessed on 29 March 2023).

5. BLS, Bureau of Labor Statistics, Census of Fatal Occupational Injuries (CFOI). 2009–2018. Available online: www.bls.gov/iif/oshcfoi1.html (accessed on 29 March 2023).

6. Jo, B.W.; Lee, Y.S.; Kim, J.H.; Kim, D.K.; Choi, P.H. Proximity warning and excavator control system for prevention of collision accidents. Sustainability 2017, 9, 1488.

7. Newell, A.; Deng, J. Pixels to graphs by associative embedding. Adv. Neural Inf. Process. Syst. 2017, 30.

8. Albert, A.; Hallowell, M.R.; Kleiner, B. Enhancing construction hazard recognition and communication with energy-based cognitive mnemonics and safety meeting maturity model: Multiple baseline study. J. Constr. Eng. Manag. 2013, 140, 04013042.

9. Albert, A.; Hallowell, M.R.; Kleiner, B.; Chen, A.; Golparvar-Fard, M. Enhancing construction hazard recognition with high-fidelity augmented virtuality. J. Constr. Eng. Manag. 2014, 140, 04014024.

10. Dong, X.S.; Fujimoto, A.; Ringen, K.; Stafford, E.; Platner, J.W.; Gittleman, J.L.; Wang, X. Injury underreporting among small establishments in the construction industry. Am. J. Ind. Med. 2011, 54, 339–349.

11. Kim, D.H.; Lee, S.H.; Kamat, V.R. Proximity prediction of mobile objects to prevent contact-driven accidents in co-robotic construction. J. Comput. Civ. Eng. 2020, 34, 04020022.

12. OSHA. The Occupational Safety and Health Administration, US. 2011. Available online: https://www.osha.gov/training/outreach/construction/focus-four (accessed on 22 May 2024).

13. NIOSH. The National Institute of Occupational Safety and Health, Robotics and Workplace Safety. 2021. Available online: https://www.cdc.gov/niosh/newsroom/feature/robotics-workplace-safety.html (accessed on 29 March 2022).

14. Kim, D.H.; Liu, M.; Lee, S.H.; Kamat, V.R. Remote proximity monitoring between mobile construction resources using camera-mounted UAVs. Autom. Constr. 2019, 99, 168–182.

15. Teizer, J.; Allread, B.S.; Fullerton, C.E.; Hinze, J. Autonomous pro-active real-time construction worker and equipment operator proximity safety alert system. Autom. Constr. 2010, 19, 630–640.

16. PBE Group. 2022. Available online: https://pbegrp.com/ (accessed on 29 March 2023).

17. AME. 2022. Available online: https://www.ameol.it/en/egopro-safety/ (accessed on 29 March 2023).

18. KIGIS. 2022. Available online: http://kigistec.com/ (accessed on 29 March 2023).

19. Kim, H.J.; Kim, K.N.; Kim, H.K. Vision-based object-centric safety assessment using fuzzy inference: Monitoring struck-by accidents with moving objects. J. Comput. Civ. Eng. 2016, 30, 04015075.

20. Kim, K.N.; Kim, H.J.; Kim, H.K. Image-based construction hazard avoidance system using augmented reality in wearable device. Autom. Constr. 2017, 83, 390–403.

21. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

22. Gupta, A.; Johnson, J.; Fei-Fei, L.; Savarese, S.; Alahi, A. Social GAN: Socially acceptable trajectories with generative adversarial networks. arXiv 2018, arXiv:1803.10892.

23. Cai, J.; Zhang, Y.; Cai, H. Two-step long short-term memory method for identifying construction activities through positional and attentional cues. Autom. Constr. 2019, 106, 102886.

24. Brilakis, I.; Park, M.W.; Jog, G. Automated vision tracking of project related entities. Adv. Eng. Inform. 2011, 25, 713–724.

25. Memarzadeh, M.; Golparvar-Fard, M.; Niebles, J.C. Automated 2D detection of construction equipment and workers from site video streams using histogram of oriented gradients and colors. Autom. Constr. 2013, 32, 24–37.

26. Park, M.W.; Brilakis, I. Construction worker detection in video frames for initializing vision trackers. Autom. Constr. 2012, 28, 15–25.

27. Visual Genome. 2017. Available online: https://homes.cs.washington.edu/~ranjay/visualgenome/index.html (accessed on 8 June 2024).

28. Fang, Q.; Li, H.; Luo, X.; Ding, L.; Luo, H.; Rose, T.M.; An, W. Detecting non-hardhat-use by a deep learning method from far-field surveillance videos. Autom. Constr. 2018, 85, 1–9.

29. Ren, S.; He, K.; Girshick, R. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

30. Kolar, Z.; Chen, H.; Luo, X. Transfer learning and deep convolutional neural networks for safety guardrail detection in 2D images. Autom. Constr. 2018, 89, 58–70.

31. Px2Graph. 2018. Available online: https://github.com/princeton-vl/px2graph (accessed on 30 April 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).