Developing and Validating an Instrument for Assessing Learning Sciences Competence of Doctoral Students in Education in China

Abstract

1. Introduction

2. Literature Review

2.1. Conceptual Definition of Learning Sciences Competence

2.2. Assessment Instruments for Learning Sciences Competence

3. Developing and Validating a Learning Sciences Competence Assessment Instrument for Doctoral Students in Education

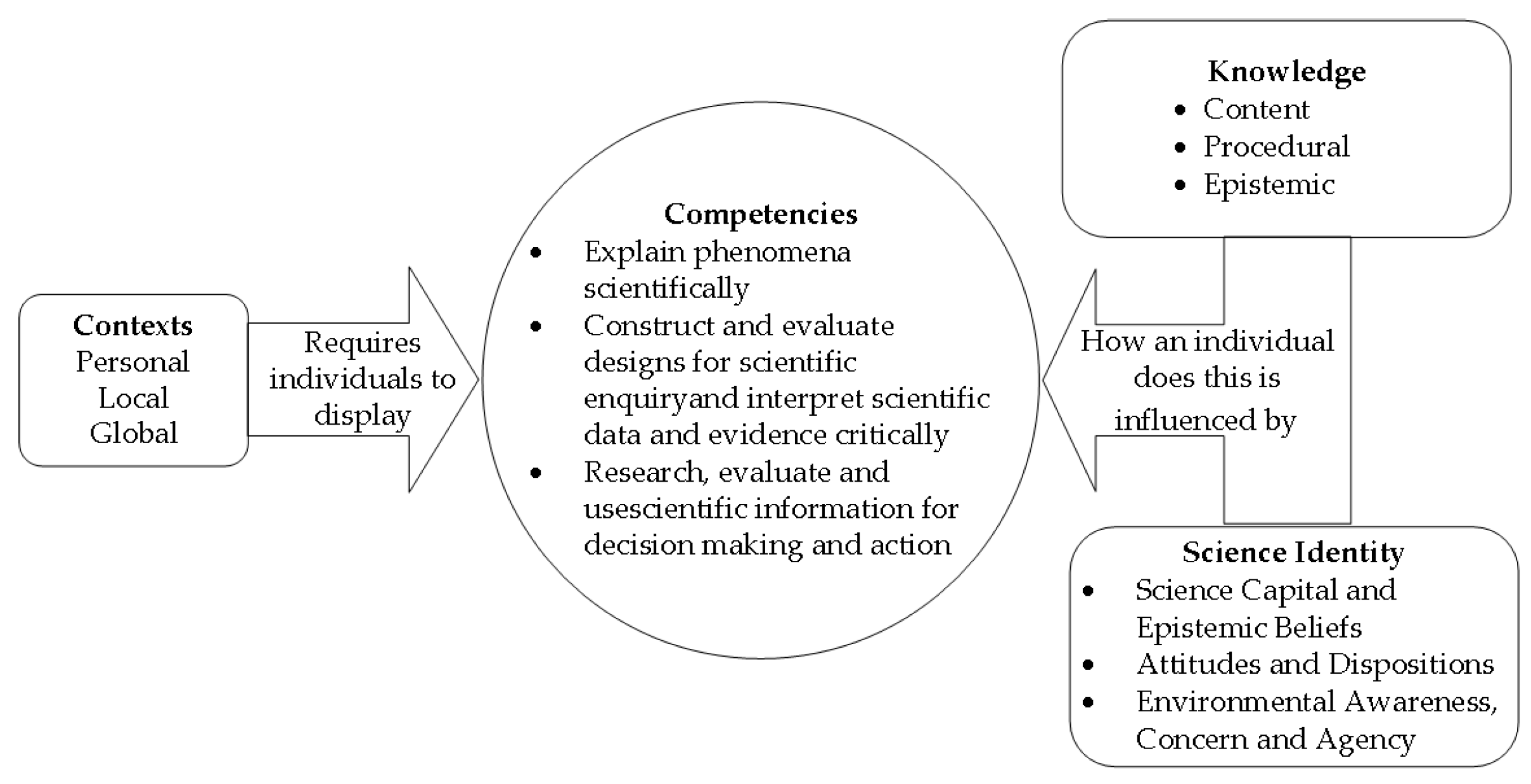

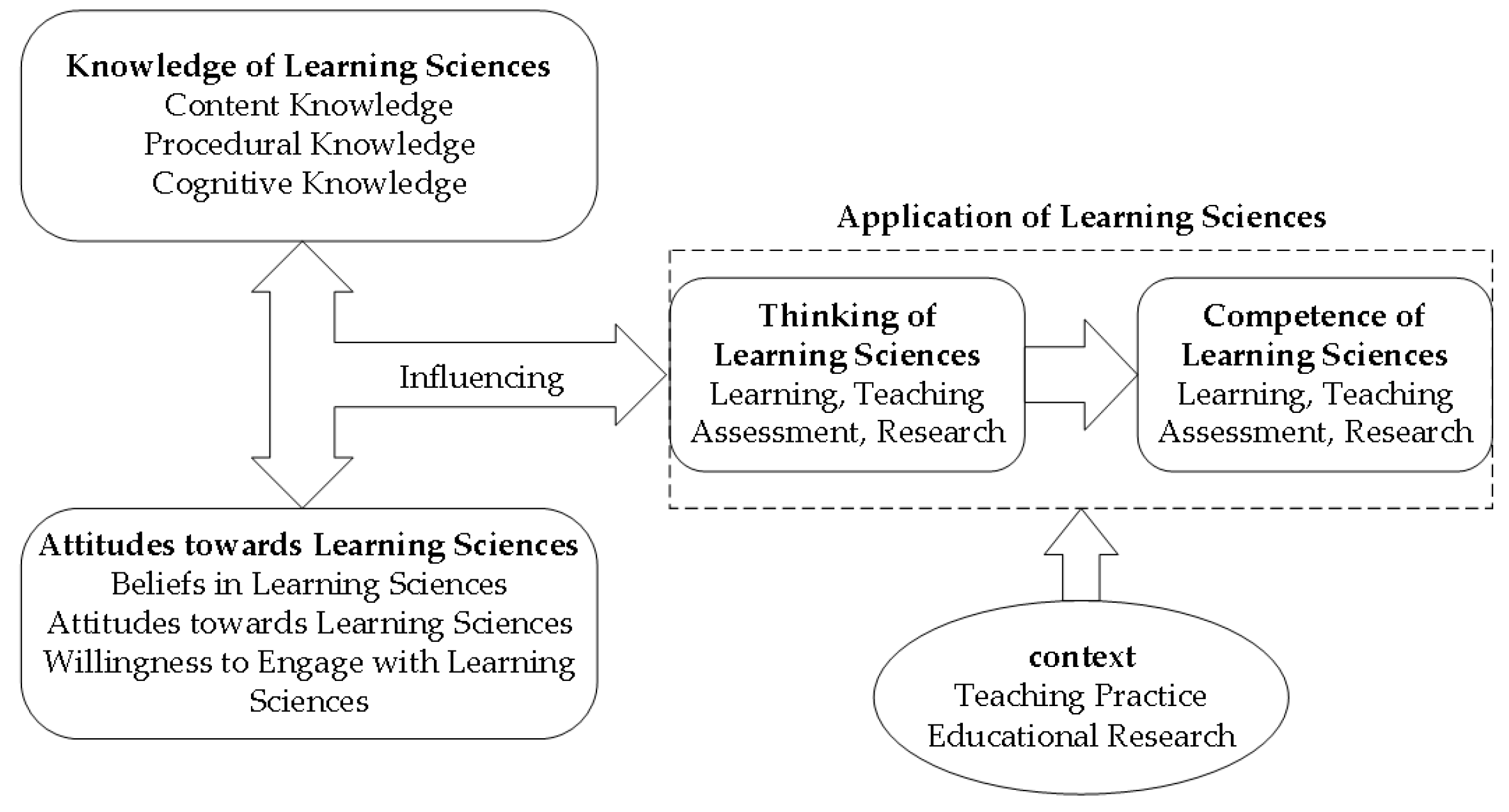

3.1. The Theoretical Foundation of the Assessment Instrument for Learning Sciences Competence

3.2. Design of Learning Sciences Competence Assessment Framework

3.3. Selecting and Developing Learning Sciences Competence Assessment Items

3.4. Validating the Learning Sciences Competence Assessment Instrument

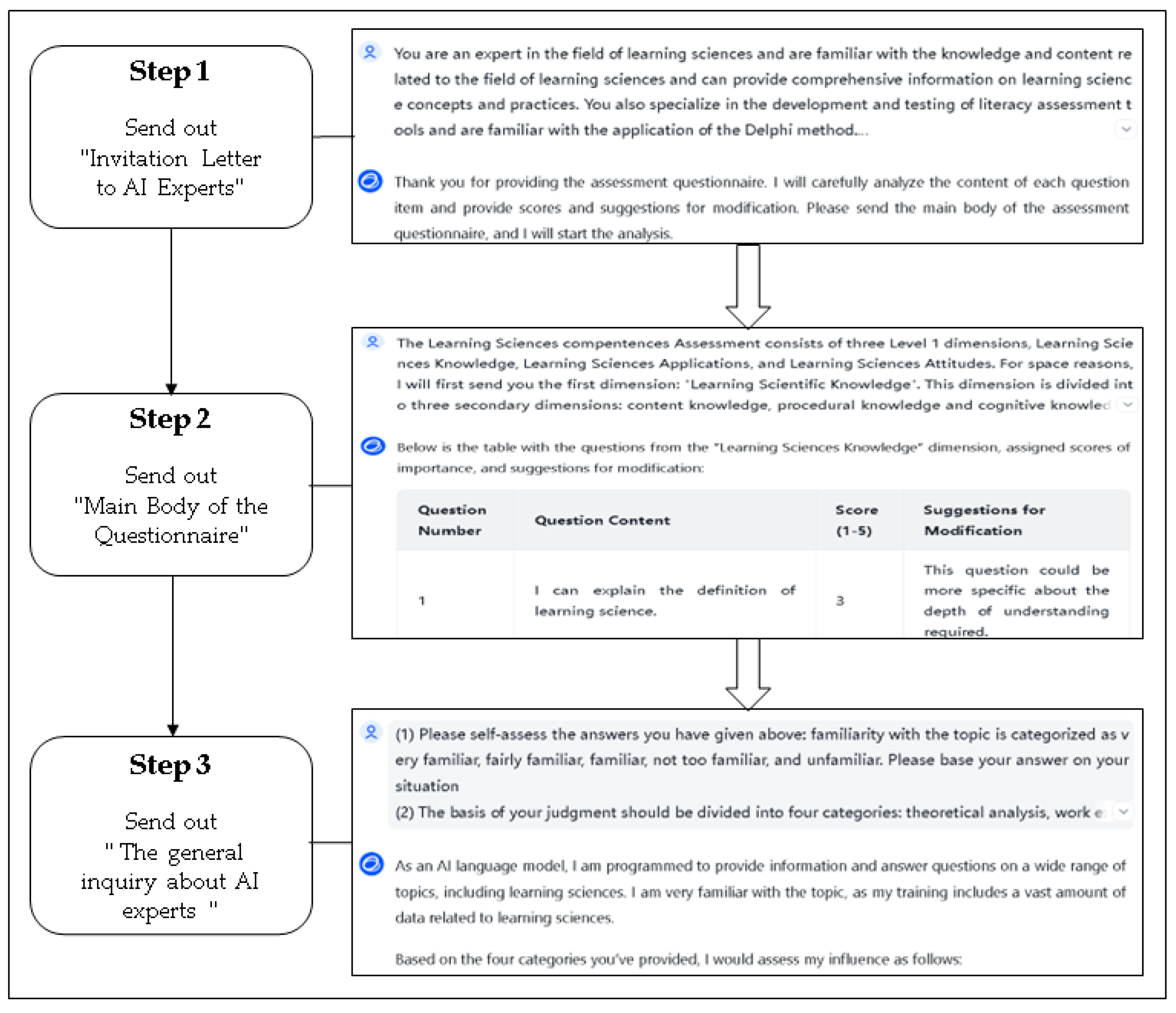

3.4.1. The Validation Method: The Delphi Method Based on LLM

3.4.2. Validation Steps

1. Recruitment of AI Experts

2. Training of AI Experts

3. Compiling AI Expert Inquiry Instructions

4. Implementing Inquiry

3.4.3. Evaluation Indicators

3.4.4. Item Selection Method

3.4.5. Analysis of Results

1. Analysis of the results of the first round of the Delphi method

2. Analysis of the results of the second round of the Delphi method

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sawyer, R.K. Optimising Learning: Implications of Learning Sciences Research. In Innovating to Learn, Learning to Innovate; OECD: Paris, France, 2008; pp. 45–65. Available online: https://www.oecd-ilibrary.org/education/innovating-to-learn-learning-to-innovate/optimising-learning-implications-of-learning-sciences-research_9789264047983-4-en (accessed on 1 March 2024).

2. Oshima, J.; van Aalst, J.; Mu, J.; Chan, C.K. Development of the Learning Sciences: Theories, Pedagogies, and Technologies. In International Handbook on Education Development in Asia-Pacific; Lee, W.O., Brown, P., Goodwin, A.L., Green, A., Eds.; Springer Nature: Singapore, 2022; pp. 1–24.

3. Nathan, M.J.; Alibali, M.W. Learning sciences. WIREs Cogn. Sci. 2010, 1, 329–345.

4. Sawyer, R.K. An Introduction to the Learning Sciences. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; 3rd ed.; Cambridge University Press: Cambridge, UK, 2022; pp. 1–24. Available online: https://www.cambridge.org/core/books/cambridge-handbook-of-the-learning-sciences/an-introduction-to-the-learning-sciences/7A4FB448A227012F5D0A0165375380D7 (accessed on 5 May 2024).

5. Guerriero, S. Teachers’ Pedagogical Knowledge and the Teaching Profession. Teach. Teach. Educ. 2014, 2, 7.

6. Ren, Y.; Pei, X.; Zhao, J.; Zheng, T.; Luo, L. Learning Science: Bringing New Perspectives to Teaching Reform; China Higher Education: Beijing, China, 2015; pp. 54–56.

7. Opinions of the Ministry of Education on Strengthening Educational Scientific Research in the New Era. Available online: http://www.moe.gov.cn/srcsite/A02/s7049/201911/t20191107_407332.html (accessed on 7 May 2024).

8. Shang, J.; Pei, L.; Wu, S. Historical traceability, research hotspots and future development of learning science. Educ. Res. 2018, 39, 136–145+159.

9. Shulman, L.S.; Golde, C.M.; Bueschel, A.C.; Garabedian, K.J. Reclaiming Education’s Doctorates: A Critique and a Proposal. Educ. Res. 2006, 35, 25–32.

10. Chen, D.; Zhang, Y. A Multidimensional Interpretation of the Convergence of the Development of Doctor of Education and Doctor of Education. Res. Grad. Educ. 2019, 1, 53–58.

11. Kolbert, J.B.; Brendel, J.M.; Gressard, C.F. Current Perceptions of the Doctor of Philosophy and Doctor of Education Degrees in Counselor Preparation. Couns. Educ. Superv. 1997, 36, 207–215.

12. Liang, L.; Cai, J.; Geng, Q. Learning science research and changes in educational practice: Innovation and development of research methodology. Res. Electrochem. Educ. 2022, 43, 39–45+62.

13. Sommerhoff, D.; Szameitat, A.; Vogel, F.; Chernikova, O.; Loderer, K.; Fischer, F. What Do We Teach When We Teach the Learning Sciences? A Document Analysis of 75 Graduate Programs. J. Learn. Sci. 2018, 27, 319–351.

14. Pei, X.; Howard-Jones, P.A.; Zhang, S.; Liu, X.; Jin, Y. Teachers’ Understanding about the Brain in East China. Procedia—Soc. Behav. Sci. 2015, 174, 3681–3688.

15. Ciascai, L.; Haiduc, L. The Opinion of Romanian Teachers Regarding Pupils Learning Science. Implication for Teacher Training. 2011. Available online: https://www.semanticscholar.org/paper/The-Opinion-of-Romanian-Teachers-Regarding-Pupils-.-Ciascai-Haiduc/30b9ca6de576b25236912f321b5be395b9f8dfd7#related-papers (accessed on 7 May 2024).

16. Zhang, Y. Research on the Framework, Current Situation and Cultivation Strategy of Teacher Students’ Learning Science Literacy. Master’s Thesis, Jiangxi Normal University, Nanchang, China, 2023. Available online: https://kns.cnki.net/kcms2/article/abstract?v=NR7yonmY8oN2iZkzJU_aX1Ys5OnMjYEvA0egQtATinesenreTaW7ks9gqTpOPkvDd3Iy-06MfZSRCZ2kUU2MDC6pyMJEyXnaVhjJKunKvmEZzizY0kCjEAXxss_dqySR8fvNlqMA_hOKR7pwzIiwIg==&uniplatform=NZKPT&language=CHS (accessed on 9 March 2024).

17. Lu, L.; Liang, W.; Shen, X. A review of learning science research in China—Based on 20 years of literature analysis. Educ. Theory Pract. 2012, 32, 56–60.

18. Darling-Hammond, L.; Flook, L.; Cook-Harvey, C.; Barron, B.; Osher, D. Implications for educational practice of the science of learning and development. Appl. Dev. Sci. 2020, 24, 97–140.

19. Shang, J.J.; Pei, L. Reflections on some important issues of developing learning science. Mod. Educ. Technol. 2018, 28, 12–18.

20. Xia, Q.; Hu, Q. Analysis of Teachers’ Learning Science Literacy Level—Based on Project Practice and Small Sample Research. Inf. Technol. Educ. Prim. Second. Sch. 2021, Z1, 17–19.

21. OECD. The Nature of Learning: Using Research to Inspire Practice; Organisation for Economic Co-Operation and Development: Paris, France, 2010. Available online: https://www.oecd-ilibrary.org/education/the-nature-of-learning_9789264086487-en (accessed on 11 March 2024).

22. Mayer, R.E. Applying the science of learning: Evidence-based principles for the design of multimedia instruction. Am. Psychol. 2008, 63, 760–769.

23. Rusconi, G. What Is an Assessment Tool? Types & Implementation Tips. 28 December 2023. Available online: https://cloudassess.com/blog/what-is-an-assessment-tool/ (accessed on 16 June 2024).

24. Albu, C.; Lindmeier, A. Performance assessment in teacher education research—A scoping review of characteristics of assessment instruments in the DACH region. Z. Für Erzieh. 2023, 26, 751–778.

25. Li, J.; Yan, X.; Yang, Q. Practicing Learning, Researching and Training to Enhance Teachers’ Learning Science Literacy in Chaoyang District. Inf. Technol. Educ. Prim. Second. Sch. 2021, 9–12.

26. Olaru, G.; Burrus, J.; MacCann, C.; Zaromb, F.M.; Wilhelm, O.; Roberts, R.D. Situational Judgment Tests as a method for measuring personality: Development and validity evidence for a test of Dependability. PLoS ONE 2019, 14, e0211884.

27. Lawlor, J.; Mills, K.; Neal, Z.; Neal, J.W.; Wilson, C.; McAlindon, K. Approaches to measuring use of research evidence in K-12 settings: A systematic review. Educ. Res. Rev. 2019, 27, 218–228.

28. Bakx, A.; Baartman, L.; van Schilt-Mol, T. Development and evaluation of a summative assessment program for senior teacher competence. Stud. Educ. Eval. 2014, 40, 50–62.

29. Jandhyala, R. Delphi, non-RAND modified Delphi, RAND/UCLA appropriateness method and a novel group awareness and consensus methodology for consensus measurement: A systematic literature review. Curr. Med. Res. Opin. 2020, 36, 1873–1887.

30. Circular of the Ministry of Education on the Issuance of the Compulsory Education Curriculum Program and Curriculum Standards (2022 Edition)—Government Portal of the Ministry of Education of the People’s Republic of China. Available online: http://www.moe.gov.cn/srcsite/A26/s8001/202204/t20220420_619921.html (accessed on 16 June 2024).

31. OECD. PISA 2025 Science Framework (Draft). 2023. Available online: https://pisa-framework.oecd.org/science-2025/#section3 (accessed on 27 June 2024).

32. Piaget, J. Principles of Genetic Epistemology: Selected Works Vol 7; Routledge: London, UK, 2013.

33. Darling-Hammond, L.; Schachner, A.C.W.; Wojcikiewicz, S.K.; Flook, L. Educating teachers to enact the science of learning and development. Appl. Dev. Sci. 2024, 28, 1–21.

34. Cook, D.B.; Klipfel, K.M. How Do Our Students Learn? An Outline of a Cognitive Psychological Model for Information Literacy Instruction. Ref. User Serv. Q. 2015, 55, 34–41.

35. Busch, B.; Watson, E. The Science of Learning: 77 Studies That Every Teacher Needs to Know; Routledge/Taylor & Francis Group: New York, NY, USA, 2019; Volume xv, p. 172.

36. Shang, J.; Wang, Y.; He, Y. Exploring the mystery of learning: An empirical study of learning science in China in the past five years. J. East China Norm. Univ. (Educ. Sci. Ed.) 2020, 38, 162–178.

37. Dunlosky, J.; Rawson, K.; Marsh, E.; Nathan, M.; Willingham, D. Improving Students’ Learning With Effective Learning Techniques. Psychol. Sci. Public Interest 2013, 14, 4–58.

38. Boser, U. What Do Teachers Know about The Science of Learning? Available online: https://media.the-learning-agency.com/wp-content/uploads/2021/03/01151644/What-Do-Teachers-Know-About-The-Science-of-Learning-1.pdf (accessed on 2 March 2024).

39. Kankanhalli, A. Artificial intelligence and the role of researchers: Can it replace us? Dry. Technol. 2020, 38, 1539–1541.

40. Hallstrom, J.; Norstrom, P.; Schonborn, K.J. Authentic STEM education through modelling: An international Delphi study. Int. J. STEM Educ. 2023, 10, 62.

41. Parmigiani, D.; Jones, S.-L.; Silvaggio, C.; Nicchia, E.; Ambrosini, A.; Pario, M.; Pedevilla, A.; Sardi, I. Assessing Global Competence within Teacher Education Programs. How to Design and Create a Set of Rubrics with a Modified Delphi Method. Sage Open 2022, 12, 21582440221128794.

42. Brady, S.R. Utilizing and Adapting the Delphi Method for Use in Qualitative Research. Int. J. Qual. Methods 2015, 14, 1609406915621381.

43. Fletcher, A.J.; Marchildon, G.P. Using the Delphi Method for Qualitative, Participatory Action Research in Health Leadership. Int. J. Qual. Methods 2014, 13, 1–18.

44. Yuan, Q.; Zong, Q.; Shen, H. Research on the Development and Application of Delphi Method in China—A Series of Papers of Knowledge Mapping Research Group of Nanjing University. Mod. Intell. 2011, 31, 3–7.

45. Eubank, B.H.; Mohtadi, N.G.; Lafave, M.R.; Wiley, J.P.; Bois, A.J.; Boorman, R.S.; Sheps, D.M. Using the modified Delphi method to establish clinical consensus for the diagnosis and treatment of patients with rotator cuff pathology. BMC Med. Res. Methodol. 2016, 16, 56.

46. Hartman, F.T.; Baldwin, A. Using Technology to Improve Delphi Method. J. Comput. Civ. Eng. 1995, 9, 244–249.

47. Grigonis, R. AI Meets Delphi: Applying the Delphi Method Using LLMs to Predict the Future of Work. 20 October 2023. Available online: https://medium.com/predict/ai-meets-delphi-applying-the-delphi-method-using-llms-to-predict-the-future-of-work-27e1f0bba22e (accessed on 13 March 2024).

48. Sterling, S.; Plonsky, L.; Larsson, T.; Kytö, M.; Yaw, K. Introducing and illustrating the Delphi method for applied linguistics research. Res. Methods Appl. Linguist. 2023, 2, 100040.

49. Hasson, F.; Keeney, S.; McKenna, H. Research guidelines for the Delphi survey technique. J. Adv. Nurs. 2000, 32, 1008–1015.

50. Lin, L.; Wang, L.; Guo, J.; Wong, K.-F. Investigating Bias in LLM-Based Bias Detection: Disparities between LLMs and Human Perception. arXiv 2024. Available online: http://arxiv.org/abs/2403.14896 (accessed on 13 June 2024).

51. Huber, S.E.; Kiili, K.; Nebel, S.; Ryan, R.M.; Sailer, M.; Ninaus, M. Leveraging the Potential of Large Language Models in Education Through Playful and Game-Based Learning. Educ. Psychol. Rev. 2024, 36, 25.

52. Wang, X.; Gao, H.; Zheng, Y. A Critical Examination of the Role of ChatGPT in Learning Research: A Thing Ethnographic Study. Adv. Soc. Dev. Educ. Res. 2024. Available online: https://www.semanticscholar.org/paper/A-Critical-Examination-of-the-Role-of-ChatGPT-in-Wang-Gao/9ed6b4c723ea0b2f2bcee1130afa29cbc17784b4 (accessed on 28 May 2024).

53. Wang, M.; Wang, M.; Xu, X.; Yang, L.; Cai, D.; Yin, M. Unleashing ChatGPT’s Power: A Case Study on Optimizing Information Retrieval in Flipped Classrooms via Prompt Engineering. IEEE Trans. Learn. Technol. 2024, 17, 629–641.

54. Giray, L. Prompt Engineering with ChatGPT: A Guide for Academic Writers. Ann. Biomed. Eng. 2023, 51, 2629–2633.

55. Beiderbeck, D.; Frevel, N.; von der Gracht, H.A.; Schmidt, S.L.; Schweitzer, V.M. Preparing, conducting, and analyzing Delphi surveys: Cross-disciplinary practices, new directions, and advancements. MethodsX 2021, 8, 101401.

56. Wu, D.; Ding, H.; Chen, J.; Fan, Y. A Delphi approach to develop an evaluation indicator system for the National Food Safety Standards of China. Food Control 2021, 121, 107591.

57. Zheng, J.; Lou, L.; Xie, Y.; Chen, S.; Li, J.; Wei, J.; Feng, J. Model construction of medical endoscope service evaluation system-based on the analysis of Delphi method. BMC Health Serv. Res. 2020, 20, 629.

58. Chen, Z.; Zeng, Y.; Tian, F. Developing a third-degree burn model of rats using the Delphi method. Sci. Rep. 2022, 12, 13852.

59. Zhang, X.; Cao, J.; Wang, H.; Liang, X.; Wang, J.; Fu, N.; Cao, B. Constructing the curriculum of senior geriatric nursing program based on the Delphi method. Nurs. Res. 2018, 32, 59–62.

60. Wang, C.; Sichin. Research on data statistical processing method in Delphi method and its application. J. Inn. Mong. Inst. Financ. Econ. (Gen. Ed.) 2011, 9, 92–96.

61. Ronfeldt, M.; Reininger, M. More or better student teaching? Teach. Teach. Educ. 2012, 28, 1091–1106.

62. Davis, K.A.; Grote, D.; Mahmoudi, H.; Perry, L.; Ghaffarzadegan, N.; Grohs, J.; Hosseinichimeh, N.; Knight, D.B.; Triantis, K. Comparing Self-Report Assessments and Scenario-Based Assessments of Systems Thinking Competence. J. Sci. Educ. Technol. 2023, 32, 793–813.

63. Coppi, M.; Fialho, I.; Cid, M. Developing a Scientific Literacy Assessment Instrument for Portuguese 3rd Cycle Students. Educ. Sci. 2023, 13, 941.

64. Li, J.; Xue, E. The Quest for Sustainable Graduate Education Development: Narrative Inquiry of Early Doctoral Students in China’s World-Class Disciplines. Sustainability 2022, 14, 11564.

| Assessment Domain | Secondary Dimension | Tertiary Dimension | Reference |
|---|---|---|---|
| Knowledge of Learning Sciences | Content Knowledge | Overview of Learning Sciences; Research Topics and Main Viewpoints; Theoretical Foundations | [3,4] |
| | Procedural Knowledge | Various Methodologies Applied by Learning Scientists | |
| | Cognitive Knowledge | Understanding the Rationale Behind Conducting Learning Sciences Research; Selecting Appropriate Methodologies to Address Problems; Critically Examining Research Findings in Learning Sciences | |
| Application of Learning Sciences (Thinking of Learning Sciences and Competence of Learning Sciences) | Learning | Judging the Effectiveness of Learning Strategies | [22] |
| | Teaching | Implementing Teaching Practices Based on Learning Sciences | |
| | Assessment | Conducting Teaching Assessment Based on Learning Sciences | |
| | Research | Conducting Research in Learning Sciences | |
| Attitudes towards Learning Sciences | Beliefs in Learning Sciences | Understanding the Value | [25,33] |
| | Attitudes towards Learning Sciences | Identification; Interest | |
| | Willingness to Engage with Learning Sciences | Participation in Training and Development; Adjusting Teaching and Research | |

| Assessment Domain | Secondary Dimension | Specific Items | References |
|---|---|---|---|
| Knowledge of Learning Sciences | Content Knowledge | Q1. I am able to elucidate the definition of learning sciences. Q2. I am able to provide an overview of the historical and developmental aspects of the field of learning sciences. Q3. I am able to provide a comprehensive list of prominent experts and scholars, as well as journals and academic conferences, in the field of learning sciences, both domestically and internationally. Q4. I am able to delineate the mechanisms and processes underlying the occurrence of learning. Q5. I am able to design appropriate learning environments based on different learning theories for real-life situations. Q6. I am conversant with the techniques of learning analysis, including classroom discourse analysis and online learning analysis. Q7. I am able to delineate the concepts and roles of learning theories. Q8. I am able to delineate the fundamental tenets of behaviorism, cognitivism, constructivism, and humanism as they pertain to the field of learning theory. | [4,35] |
| | Procedural Knowledge | Q9. I am aware of the advantages, disadvantages, and appropriate contexts for research methods such as experimental and survey research. Q10. I am conversant with the general process of Design-Based Research (DBR) methods. Q11. I am conversant with the methodologies employed in neuroscience research, including neurophysiological techniques such as eye-tracking and the use of wearable devices. Q12. I am conversant with the research methods and technologies based on big data and artificial intelligence, such as educational data mining. | [36] |
| | Cognitive Knowledge | Q13. It is evident that the essence of learning sciences is the elucidation of the nature of learning and the optimization of learning environment design. Q14. I am able to select the most appropriate learning sciences research methods for a given context with the aim of addressing the problems at hand. Q15. I am able to critically examine the current research outcomes in learning sciences. | [3] |
| Application of Learning Sciences | Learning Strategies | Q16. Which review strategy is more effective for students when reviewing previously learned concepts: self-testing (e.g., doing practice questions) or re-reading (repeating textbook or notes)? Q17. Which learning strategy is more effective: highlighting key points with a highlighter or pen, or recording knowledge on blank paper? Q18. Which is more effective: integrating information through text and images, or providing text and images separately? Q19. Which learning strategy is more effective: “interleaved practice” (alternating practice with different types of questions) or “blocked practice” (concentrating practice on similar types of questions)? | [37,38] |
| | Teaching Practices | Q20. As a language instructor endeavoring to facilitate students’ comprehension of the influence of writing purposes on text types, which approach would you select? Activity 1: Have students read news reports and opinion articles from newspapers. Then, in groups, have them discuss how to identify the author’s persuasive intent, the differences between the two, and how to reflect different purposes. Additionally, it is important to consider how to adapt the approach if the purpose of the news report shifts from informative to entertainment. Activity 2: Conduct a newspaper treasure hunt activity where students search for article titles with different writing purposes and record them in a table to visually understand the impact of writing purposes on text types. Q21. When presenting a teaching animation on the formation of thunderstorms, which approach would you select? Approach 1: The 2.5-min animation should be played continuously. Approach 2: The animation can be divided into 16 segments, each approximately 10 s in length, with accompanying descriptions. In order to facilitate the learning process, it is advisable to include a “continue” button, which will allow learners to click and play the next segment. Q22. When instructing students in the operations of algebraic equations, which pedagogical approach would you select? Approach 1: Provide students with algebraic problems for practice and mastery through exercises. Approach 2: Provide illustrative examples for students to learn from initially, and then present analogous problems for practice. | [22] |
| | Teaching Assessment | Q23. As a mathematics educator, following the instruction of binomial probability problems, a questionnaire was administered to ascertain the extent of student comprehension. The results indicate that the majority of the students believe they have a satisfactory grasp of the concepts. What inferences can be drawn from this data? | [22] |
| | Scientific Research | You are researching the factors influencing students’ learning effectiveness. Please choose the most appropriate option. Q24. Which question is more suitable for the study of the factors influencing students’ learning effectiveness? A. The relationship between study habits and academic performance B. The correlation between teacher age and teaching effectiveness Q25. After collecting data on student classroom participation, homework completion, and grades, how should you choose the analysis method? A. Summarizing data using descriptive statistics B. Using causal analysis models to explore the relationship between variables Q26. Upon the analysis of the data, it became evident that there was a significant correlation between a specific variable and academic performance. However, the causal relationship between the two remains uncertain. What is the appropriate course of action? A. Conduct regression analysis to explore causality. B. It is recommended that control variables be added in order to eliminate potential influences. Q27. Empirical evidence indicates that a specific learning methodology has a positive effect on academic performance. How can the reliability and validity of the results be ensured? A. Draw conclusions based solely on the results of this study. B. Compare and discuss the results of this study with those of other studies. | [39] |
| Attitudes towards Learning Sciences | Beliefs in Learning Sciences | Q28. I am aware of the significance of learning sciences in the context of both teaching practice and research. Q29. I am aware of the advantages and limitations of the development of learning sciences research. | [16,33] |
| | Attitudes towards Learning Sciences | Q30. I am eager to pursue further knowledge in the field of learning sciences. Q31. I am able to utilize learning sciences to address both teaching practice and research questions. Q32. I am able to adhere to the ethical principles of learning sciences research. Q33. I am profoundly interested in the field of learning sciences. Q34. I am proactive in disseminating knowledge about learning sciences to those around me. | |
| | Willingness to Engage with Learning Sciences | Q35. It is my hope that I will have the opportunity to participate in learning sciences-related courses or training activities. Q36. I am eagerly anticipating the opportunity to apply learning sciences in future teaching and research endeavors. Q37. I am prepared to modify my previous teaching and research methodologies in accordance with the findings of learning sciences research in a timely manner. | |

| Number | Name | Developer | URL | Access Date |
|---|---|---|---|---|
| 1 | ERNIE Bot | Baidu | https://yiyan.baidu.com/ | 12 March 2024 |
| 2 | Spark Desk | Iflytek | https://xinghuo.xfyun.cn/ | 12 March 2024 |
| 3 | Tongyi Qianwen | Alibaba Group | https://qianwen.aliyun.com/ | 13 March 2024 |
| 4 | doubao AI | ByteDance | https://www.doubao.com/ | 13 March 2024 |
| 5 | Baichuan | Baichuan Intelligent Technology | https://www.baichuan-ai.com/ | 13 March 2024 |
| 6 | 360 GPT | 360 | https://chat.360.com/ | 13 March 2024 |
| 7 | HunyuanAide | Tencent | https://hunyuan.tencent.com/ | 14 March 2024 |
| 8 | Kimi Chat | Moonshot AI | https://kimi.moonshot.cn/ | 14 March 2024 |
| 9 | Tiangong Chat | KUNLUN TECH | https://chat.tiangong.cn/ | 14 March 2024 |
| 10 | ZhiPu AI | ZhiPu AI | https://chatglm.cn/ | 14 March 2024 |
| 11 | GPT-3.5 | OpenAI | https://openai.com/ | 14 March 2024 |
| 12 | Copilot GPT | Microsoft | https://copilot.microsoft.com/ | 14 March 2024 |
| 13 | Claude-3-Sonnet | Anthropic | https://www.anthropic.com/ | 15 March 2024 |
| 14 | Gemini-Pro | Google | https://poe.com/Gemini-Pro | 15 March 2024 |
| 15 | PaLM | Google | https://ai.google/discover/palm2/ | 15 March 2024 |
| 16 | Mistral-Large | Mistral AI | https://mistral.ai/news/mistral-large/ | 15 March 2024 |

| Item | M (First Round) | K (First Round) | CV (First Round) | M (Second Round) | CV (Second Round) | K (Second Round) | Item | M (First Round) | K (First Round) | CV (First Round) | M (Second Round) | CV (Second Round) | K (Second Round) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Q1 | 4.813 | 0.813 | 0.084 | 4.688 | 0.102 | 0.688 | Q20 | 4.563 | 0.563 | 0.112 | 4.500 | 0.141 | 0.563 |
| Q2 | 4.125 | 0.188 | 0.121 | 4.563 | 0.112 | 0.563 | Q21 | 4.563 | 0.563 | 0.112 | 4.438 | 0.116 | 0.438 |
| Q3 | 3.625 | 0.188 | 0.222 | - | - | - | Q22 | 4.375 | 0.438 | 0.142 | 4.563 | 0.112 | 0.563 |
| Q4 | 4.875 | 0.875 | 0.070 | 4.938 | 0.051 | 0.938 | Q23 | 3.438 | 0.188 | 0.260 | 4.625 | 0.134 | 0.688 |
| Q5 | 4.250 | 0.375 | 0.161 | 4.875 | 0.070 | 0.875 | Q24 | 4.813 | 0.813 | 0.084 | 4.625 | 0.108 | 0.625 |
| Q6 | 4.063 | 0.188 | 0.141 | 4.250 | 0.105 | 0.250 | Q25 | 4.688 | 0.750 | 0.128 | 4.813 | 0.084 | 0.813 |
| Q7 | 4.750 | 0.750 | 0.094 | 4.625 | 0.108 | 0.625 | Q26 | 4.813 | 0.813 | 0.084 | 4.750 | 0.094 | 0.750 |
| Q8 | 4.625 | 0.625 | 0.108 | 4.563 | 0.112 | 0.563 | Q27 | 4.938 | 0.938 | 0.051 | 4.813 | 0.084 | 0.813 |
| Q9 | 4.250 | 0.313 | 0.136 | 4.563 | 0.112 | 0.563 | Q28 | 5.000 | 1.000 | 0.000 | 4.938 | 0.051 | 0.938 |
| Q10 | 4.063 | 0.250 | 0.167 | - | - | - | Q29 | 4.500 | 0.500 | 0.115 | 4.563 | 0.138 | 0.625 |
| Q11 | 3.938 | 0.188 | 0.173 | - | - | - | Q30 | 4.813 | 0.813 | 0.084 | 4.875 | 0.070 | 0.875 |
| Q12 | 4.375 | 0.438 | 0.142 | 4.688 | 0.102 | 0.688 | Q31 | 4.938 | 0.938 | 0.051 | 4.938 | 0.051 | 0.938 |
| Q13 | 4.938 | 0.938 | 0.051 | 5.000 | 0.000 | 1.000 | Q32 | 4.688 | 0.688 | 0.102 | 4.688 | 0.102 | 0.688 |
| Q14 | 4.625 | 0.625 | 0.108 | 4.938 | 0.051 | 0.938 | Q33 | 4.750 | 0.750 | 0.094 | 4.688 | 0.102 | 0.688 |
| Q15 | 4.688 | 0.688 | 0.102 | 4.750 | 0.094 | 0.750 | Q34 | 4.313 | 0.313 | 0.111 | 4.563 | 0.112 | 0.563 |
| Q16 | 4.500 | 0.500 | 0.115 | 4.563 | 0.112 | 0.563 | Q35 | 4.750 | 0.750 | 0.094 | 4.625 | 0.108 | 0.625 |
| Q17 | 3.875 | 0.125 | 0.160 | 4.563 | 0.112 | 0.563 | Q36 | 5.000 | 1.000 | 0.000 | 5.000 | 0.000 | 1.000 |
| Q18 | 4.625 | 0.688 | 0.134 | 4.563 | 0.112 | 0.563 | Q37 | 4.938 | 0.938 | 0.051 | 4.813 | 0.084 | 0.813 |
| Q19 | 4.500 | 0.563 | 0.141 | 4.625 | 0.108 | 0.625 | | | | | | | |

Note: M = arithmetic mean; K = full score frequency; CV = coefficient of variation. The second-round values appear in the order M, CV, K (for example, Q13 has a mean of 5.000, a coefficient of variation of 0.000, and a full score frequency of 1.000). A dash marks an item with no second-round values.
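The three indicators in the table above can be recomputed from a vector of expert ratings. The sketch below is illustrative only: the function and variable names are not from the paper, the 5-point scale and the use of the sample standard deviation are assumptions inferred from the reported values, and the rating vector is hypothetical because the individual ratings of the 16 AI experts are not reported here.

```python
from statistics import mean, stdev


def delphi_indicators(ratings, full_score=5):
    """Return (M, K, CV) for one item's expert ratings.

    M  = arithmetic mean of the ratings
    K  = full score frequency (share of experts giving the top score)
    CV = coefficient of variation (sample standard deviation / mean)
    """
    m = mean(ratings)
    k = sum(r == full_score for r in ratings) / len(ratings)
    cv = stdev(ratings) / m  # assumption: sample SD with n - 1 in the denominator
    return m, k, cv


# Hypothetical ratings: 13 of 16 experts give 5, the remaining 3 give 4.
example_ratings = [5] * 13 + [4] * 3
m, k, cv = delphi_indicators(example_ratings)
print(f"M={m:.4f}  K={k:.4f}  CV={cv:.4f}")
```

This hypothetical vector gives M = 4.8125, K = 0.8125 and CV of about 0.084, which agrees with the first-round row for Q1 at the reported precision. Other rating vectors could produce the same summary values, so the match does not recover the actual ratings.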

| Indicator | Mean (First Round Inquiry) | Standard Deviation (First Round Inquiry) | Threshold (First Round Inquiry) | Mean (Second Round Inquiry) | Standard Deviation (Second Round Inquiry) | Threshold (Second Round Inquiry) |
|---|---|---|---|---|---|---|
| Arithmetic Mean | 4.5236 | 0.3887 | 4.1349 | 4.693 | 0.1757 | 4.5173 |
| Coefficient of Variation | 0.1109 | 0.0518 | 0.1627 | 0.0928 | 0.0333 | 0.1261 |
| Full Score Frequency | 0.5963 | 0.2707 | 0.3256 | 0.6985 | 0.1723 | 0.5262 |
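The thresholds in the table above are consistent with a boundary-value calculation: mean minus standard deviation for the arithmetic mean and the full score frequency, and mean plus standard deviation for the coefficient of variation. The sketch below reproduces the first-round thresholds and flags which criteria an item misses. The function names are hypothetical, and the code deliberately stops short of a keep-or-drop decision, since the exact selection rule is not stated in this part of the document.

```python
def boundary_thresholds(mean_m, sd_m, mean_cv, sd_cv, mean_k, sd_k):
    """Boundary values: lower bounds for M and K, upper bound for CV."""
    return {
        "M": mean_m - sd_m,     # items should score at or above this mean
        "CV": mean_cv + sd_cv,  # items should vary no more than this
        "K": mean_k - sd_k,     # items should reach this full score frequency
    }


def failed_criteria(item, thresholds):
    """List the indicators on which an item falls outside its threshold."""
    failed = []
    if item["M"] < thresholds["M"]:
        failed.append("M")
    if item["K"] < thresholds["K"]:
        failed.append("K")
    if item["CV"] > thresholds["CV"]:
        failed.append("CV")
    return failed


# First-round means and standard deviations taken from the threshold table.
round1 = boundary_thresholds(4.5236, 0.3887, 0.1109, 0.0518, 0.5963, 0.2707)

# First-round indicator values for two items taken from the results table.
q3 = {"M": 3.625, "K": 0.188, "CV": 0.222}
q17 = {"M": 3.875, "K": 0.125, "CV": 0.160}

print(failed_criteria(q3, round1))
print(failed_criteria(q17, round1))
```

With these inputs, Q3 misses all three thresholds while Q17 misses the mean and full score frequency thresholds but stays within the coefficient of variation threshold. In the results table Q3 has no second-round values and Q17 does.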

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, X.; Zhang, B.; Gao, H. Developing and Validating an Instrument for Assessing Learning Sciences Competence of Doctoral Students in Education in China. Sustainability 2024, 16, 5607. https://doi.org/10.3390/su16135607