Abstract

Several assistive technologies (ATs) have been developed and tested to alleviate the challenges faced by deaf or hearing-impaired (DHI) people. One such technology is sound detection, which has the potential to enhance the experiences of DHI individuals and provide them with new opportunities. However, research on the use of sound detection as an assistive technology specifically for DHI individuals remains limited. This systematic literature review (SLR) aims to shed light on the application of non-verbal sound detection technology in skill development for DHI individuals. The SLR covered recent, high-quality studies published from 2014 to 2023 in the IEEE, ScienceDirect, Scopus, and Web of Science databases. Twenty-six articles that met the eligibility criteria were carefully analyzed and synthesized. The findings underscore the significance of sound detection technology in helping DHI individuals achieve independence, access to information, and safety. Additional studies are recommended to explore the use of sound detection tools as assistive technology and to enhance DHI individuals’ sustainable quality of life.

1. Introduction

Globally, hearing loss is a growing problem that adversely affects the human population. A report from the World Health Organization estimated that there are 466 million deaf and hearing-impaired (DHI) individuals worldwide, of whom 432 million are adults and the rest are children [1]. Furthermore, the availability of hearing aids still meets less than 10% of global needs [2]. DHI individuals face various problems in their everyday activities because they cannot communicate effectively. They also have less interaction with environmental sounds, which may expose them to hazards. Being able to perceive the sources of sound around them is important, as their next actions may depend on the sounds detected. Hence, there remains a need for additional assistive technologies (ATs) that can help with sound detection. Moreover, few systematic literature reviews cover sound detection. This systematic literature review (SLR) aims to explore the existing systems and techniques for non-verbal sound detection available to DHI individuals and to suggest future directions for DHI individuals’ sustainable quality of life. It also seeks to answer the following research questions (RQs):

RQ1: What common methods are used to inform deaf and hearing-impaired individuals about sound events?

RQ2: What methods are used to interpret sound events for deaf and hearing-impaired individuals in support of a sustainable quality of life?

RQ3: Which target groups do the included studies address?

RQ4: What technologies and tools are used by deaf and hearing-impaired people to recognize sound events?

1.1. Difficulties Faced by DHI Individuals

Deafness typically refers to severe hearing loss in which little or no functional hearing remains. The term “hearing-impaired” is commonly used to describe people with any degree of hearing loss, from mild to profound [3]. Deafness, as with any disability, has many consequences for the people affected. One of the greatest challenges confronting deaf people is communicating effectively with others without mediators or ATs. Moreover, they may be unaware of urgent ambient sounds, for instance, fire alarms and vehicle horns, which can delay their response and endanger their lives [4]. Using a mediator may be an effective way to communicate, but it may violate their privacy [5]. The vast majority of hearing individuals have little or no knowledge of gesture-based communication such as sign language [6]. Furthermore, many available technologies are not accessible to deaf people [7,8].

In contrast, hearing-impaired individuals face fewer difficulties in their daily lives than deaf individuals do, although they still experience problems such as lower academic achievement than their hearing peers [9]. They are also less involved in conversations with hearing people.

Disabilities such as deafness can affect an individual’s quality of life to varying degrees, and it is critical to investigate their needs to identify evidence-based strategies that help them achieve a sustainable quality of life. Quality of life refers to a person’s overall well-being, encompassing their health, social engagement, contentment with their daily functioning, level of independence, and access to resources [10,11].

1.2. Sound Detection

Detecting sound and translating it into forms that DHI people can understand, such as sign language and text, positively affects their lives, empowering them to communicate effectively, access information, and engage fully in all facets of society. Sound detection and recognition mechanisms are regarded as the cornerstone of numerous surveillance and safety systems [12]. These systems can alert DHI people to environmental sounds, warning signals, and other important sounds, which can improve the quality of life of DHI individuals in their daily activities. Sound detection typically involves several steps: sound capture, source localization, analysis, and sound classification [13].
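The steps above (sound capture, location detection, analysis, and classification) can be made concrete with a minimal sketch. It is an illustration under stated assumptions, not the method of any included study: a synthetic 1 kHz tone burst stands in for microphone capture, an RMS energy threshold performs detection, a zero-crossing count serves as the analysis step, and a hypothetical rule-based classifier stands in for a trained model. Localization is left out because it needs more than one microphone. The sample rate, frame size, threshold, and class labels are all assumed values.

```python
import math

SAMPLE_RATE = 8000  # Hz (assumed for this sketch)
FRAME = 200         # samples per analysis frame, 25 ms at 8 kHz (assumed)

def capture(duration_s=1.0, tone_start_s=0.4, tone_end_s=0.6, freq_hz=1000.0):
    """Stand-in for microphone capture: silence plus one tone burst (a hypothetical alarm)."""
    n = round(duration_s * SAMPLE_RATE)
    start, end = round(tone_start_s * SAMPLE_RATE), round(tone_end_s * SAMPLE_RATE)
    return [0.5 * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) if start <= t < end else 0.0
            for t in range(n)]

def frames(signal, size=FRAME):
    """Split the signal into non-overlapping frames, yielding (start_index, frame)."""
    for start in range(0, len(signal) - size + 1, size):
        yield start, signal[start:start + size]

def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def dominant_freq_hz(frame):
    """Analysis step: rough pitch estimate, since a pure tone crosses zero twice per period."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1) * SAMPLE_RATE / 2

def classify(frame):
    """Hypothetical rule-based classifier standing in for a trained model."""
    return "high-pitched alarm" if dominant_freq_hz(frame) > 500 else "low-frequency sound"

def detect_events(signal, threshold=0.1):
    """Detection step: label every frame whose energy exceeds the (assumed) threshold."""
    return [(start / SAMPLE_RATE, classify(f)) for start, f in frames(signal) if rms(f) > threshold]

events = detect_events(capture())
print(events[0], len(events))
```

Each event is a (time in seconds, label) pair; a real system would merge consecutive frames into one event and replace the rule with a learned classifier.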

1.3. The Challenges and Missing Points Faced by Sound Detection

Noise is a significant factor affecting sound quality in outdoor environments [14], and isolating a specific sound from a mixture of sounds remains a major difficulty [15,16]. Sound classification also faces the challenge of storing large collections of sound samples, a task that cannot easily be accomplished at an individual level. Furthermore, the same sound can take many similar forms, which complicates classification. In addition, sound localization is still unreliable under unfavorable conditions [17,18].
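The effect of noise is usually quantified by the signal-to-noise ratio (SNR), $\mathrm{SNR_{dB}} = 10\log_{10}(P_\text{signal}/P_\text{noise})$, where $P$ is mean signal power. The sketch below is a hedged illustration, not taken from the included studies: it compares the same synthetic alarm against a quiet-room and a busy-street noise level, both modeled as Gaussian noise with assumed standard deviations.

```python
import math
import random

def mean_power(samples):
    """Average of the squared sample values."""
    return sum(x * x for x in samples) / len(samples)

def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))

rng = random.Random(0)  # fixed seed so the example is repeatable
alarm = [math.sin(2 * math.pi * 1000 * t / 8000) for t in range(8000)]  # mean power 0.5
quiet_room = [rng.gauss(0.0, 0.01) for _ in range(8000)]   # assumed noise, power about 1e-4
busy_street = [rng.gauss(0.0, 0.5) for _ in range(8000)]   # assumed noise, power about 0.25

print(f"quiet room: {snr_db(alarm, quiet_room):.1f} dB")    # roughly 37 dB
print(f"busy street: {snr_db(alarm, busy_street):.1f} dB")  # roughly 3 dB
```

At about 3 dB the alarm carries only twice the power of the background, which is why a fixed energy threshold that works indoors can fail outdoors.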

2. Methodology

This SLR followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [19] (see Supplementary Materials).

2.1. Search Strategy

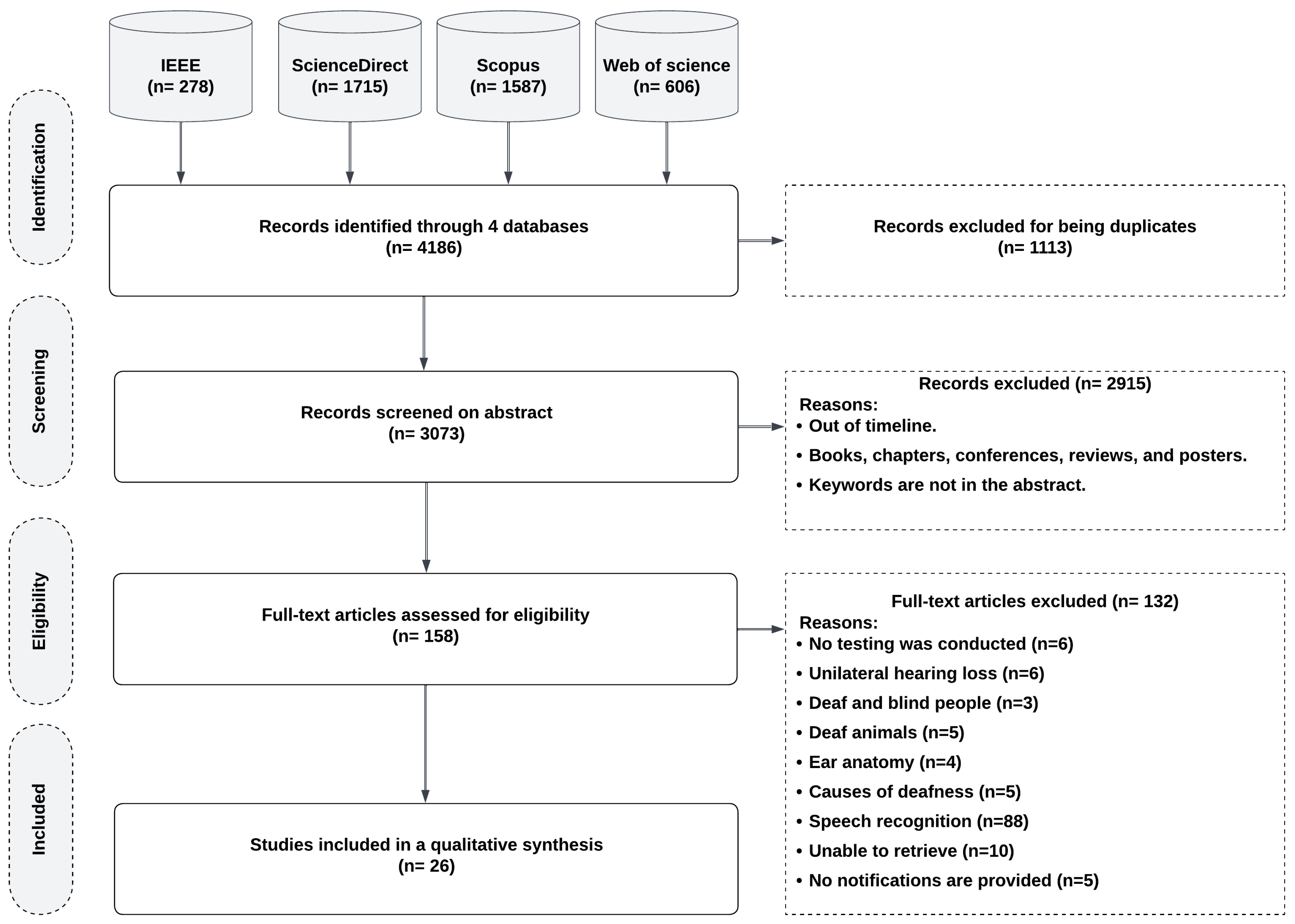

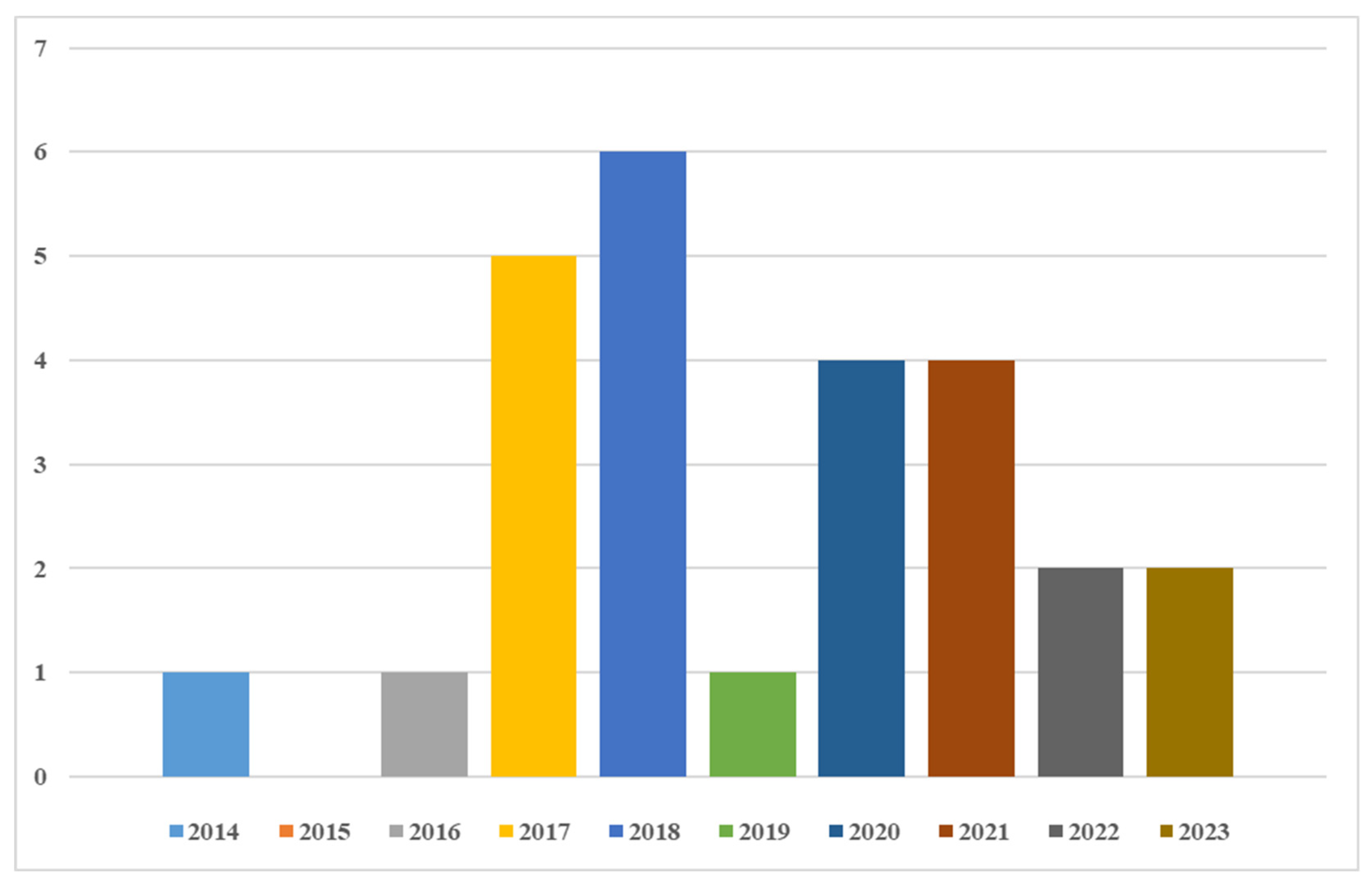

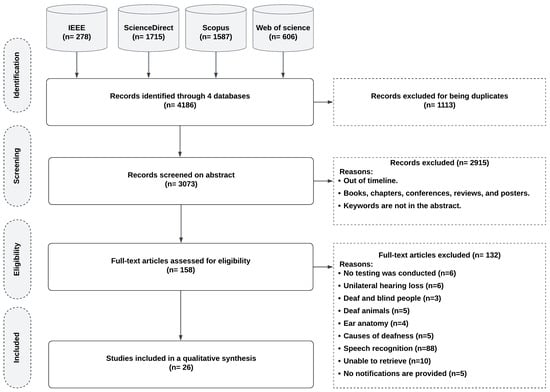

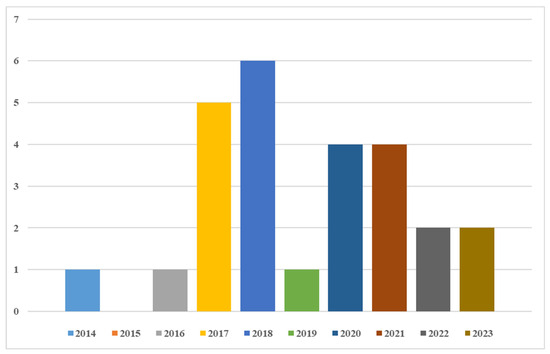

The SLR covered the period from 2014 to 2023. Four research databases, IEEE, ScienceDirect, Scopus, and Web of Science, were used to retrieve journal papers relevant to the current study. Figure 1 depicts the SLR selection procedure. Figure 2 shows the distribution of the included papers by year of publication, with 2018 having the highest number of papers and 2015 having none.

Figure 1.

PRISMA flow diagram of the article selection process.

Figure 2.

The distribution of publications by year.

2.2. Search Query

To retrieve studies relevant to the current study, the following nine keywords were selected: “sound detection”, “sound uncover”, “sound reveal”, “sound alert”, “sound alarm”, “deaf”, “hard of hearing”, “impaired of hearing”, and “hearing loss”. To build the final query, the keywords were combined using the Boolean operator “AND”, for instance, “sound detection” AND “deaf”.
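The query construction described above can be sketched as follows. This is an assumption-laden illustration: the text gives only one example pair, so the sketch assumes that each of the five sound-related terms was paired with each of the four population terms, which yields 20 queries. An alternative single query that uses OR within each group is also shown; database-specific syntax such as field tags is not specified in the text and is omitted.

```python
from itertools import product

SOUND_TERMS = ["sound detection", "sound uncover", "sound reveal", "sound alert", "sound alarm"]
POPULATION_TERMS = ["deaf", "hard of hearing", "impaired of hearing", "hearing loss"]

def quoted(term):
    """Wrap a keyword in double quotes so databases treat it as a phrase."""
    return f'"{term}"'

def pairwise_queries(sound_terms, population_terms):
    """One AND query per (sound term, population term) pair (assumed pairing scheme)."""
    return [f"{quoted(s)} AND {quoted(p)}" for s, p in product(sound_terms, population_terms)]

def grouped_query(sound_terms, population_terms):
    """Alternative single query: OR within each group, AND between the groups."""
    left = " OR ".join(quoted(s) for s in sound_terms)
    right = " OR ".join(quoted(p) for p in population_terms)
    return f"({left}) AND ({right})"

queries = pairwise_queries(SOUND_TERMS, POPULATION_TERMS)
print(len(queries), queries[0])
```

The pairwise form makes each combination's hit count visible, while the grouped form retrieves the same union of records in a single search.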

2.3. Inclusion and Exclusion Criteria

Inclusion and exclusion criteria were applied to filter out studies that did not meet the requirements of this review and to remove overlap among the results [20]; the remaining results were then examined carefully. The inclusion and exclusion criteria are presented in Table 1.

Table 1.

The inclusion and exclusion criteria of the studies included in the present SLR.

2.4. Selection Procedure

The database search retrieved 4186 studies; after 1113 duplicates were discarded, 3073 studies qualified for screening. A total of 1915 studies did not meet the inclusion criteria listed in Table 1. After off-topic studies that did not address the research questions were also discarded, 158 studies were assessed for eligibility. Of these, 132 studies were eliminated for predefined reasons, leaving 26 journal articles in the final review.
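The selection counts reported above are internally consistent, which can be checked with simple arithmetic. The two intermediate values (studies remaining after the inclusion criteria, and off-topic studies discarded) are not reported directly; they are inferred here from the stated totals.

```python
identified = 4186                 # records retrieved from the four databases
duplicates = 1113                 # duplicate records removed
not_meeting_criteria = 1915       # records failing the Table 1 criteria
assessed_for_eligibility = 158    # full texts assessed
excluded_at_eligibility = 132     # removed for predefined reasons

screened = identified - duplicates                          # 3073, as reported
after_criteria = screened - not_meeting_criteria            # inferred, not reported
off_topic = after_criteria - assessed_for_eligibility       # inferred, not reported
included = assessed_for_eligibility - excluded_at_eligibility

print(screened, after_criteria, off_topic, included)
```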

3. Results

Table 2 summarizes the included papers. The twenty-six included studies were analyzed carefully to answer the research questions.

Table 2.

General description of the included studies.

3.1. Common Methods Used to Notify DHI Individuals About Sound Events

A notification draws a DHI individual’s attention to a sound event. DHI individuals can be notified about sound events through vibratory, visual, or other touch-based cues. A wearable device can use vibration to inform the person about a sound event [46], and vibration can carry many meanings, especially for deaf or blind people. DHI individuals rely on vibration as the main way of being alerted to calls and short message service (SMS) messages on a mobile phone, in place of ordinary ringtones. Vibration can also vary in pattern and duration, allowing users to recognize sound categories by touch [26]. In some situations, vibration alone is insufficient for DHI individuals to experience sound; vision can then help them assign meaning to sound events. Fifteen of the twenty-six articles used notifications to alert DHI people to sound events: combined vibration and visual notifications were implemented in [4,21,24,30,31,32,38,39,40,42], vibration alone in [25,26,27,28], and visual notifications alone in [23].
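The idea that vibrations of different patterns and lengths can encode sound categories [26] can be sketched as a lookup table of on/off pulses. The categories and timings below are hypothetical, chosen only so that each pattern is distinguishable by touch; they are not values reported by any included study.

```python
# Hypothetical vibration patterns: each pulse is (on_ms, off_ms).
PATTERNS = {
    "doorbell": [(200, 100)] * 2,    # two short pulses
    "fire alarm": [(600, 200)] * 3,  # three long pulses
    "car horn": [(100, 50)] * 5,     # rapid burst
}

def motor_schedule(category):
    """Expand a pattern into (start_ms, stop_ms) intervals during which the motor runs."""
    t = 0
    intervals = []
    for on_ms, off_ms in PATTERNS[category]:
        intervals.append((t, t + on_ms))
        t += on_ms + off_ms
    return intervals

def total_duration_ms(category):
    """Length of the whole pattern, including the pauses."""
    return sum(on_ms + off_ms for on_ms, off_ms in PATTERNS[category])

print(motor_schedule("doorbell"))
```

Varying both the number and the length of pulses gives two independent cues, which tends to make categories easier to tell apart than varying one dimension alone.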

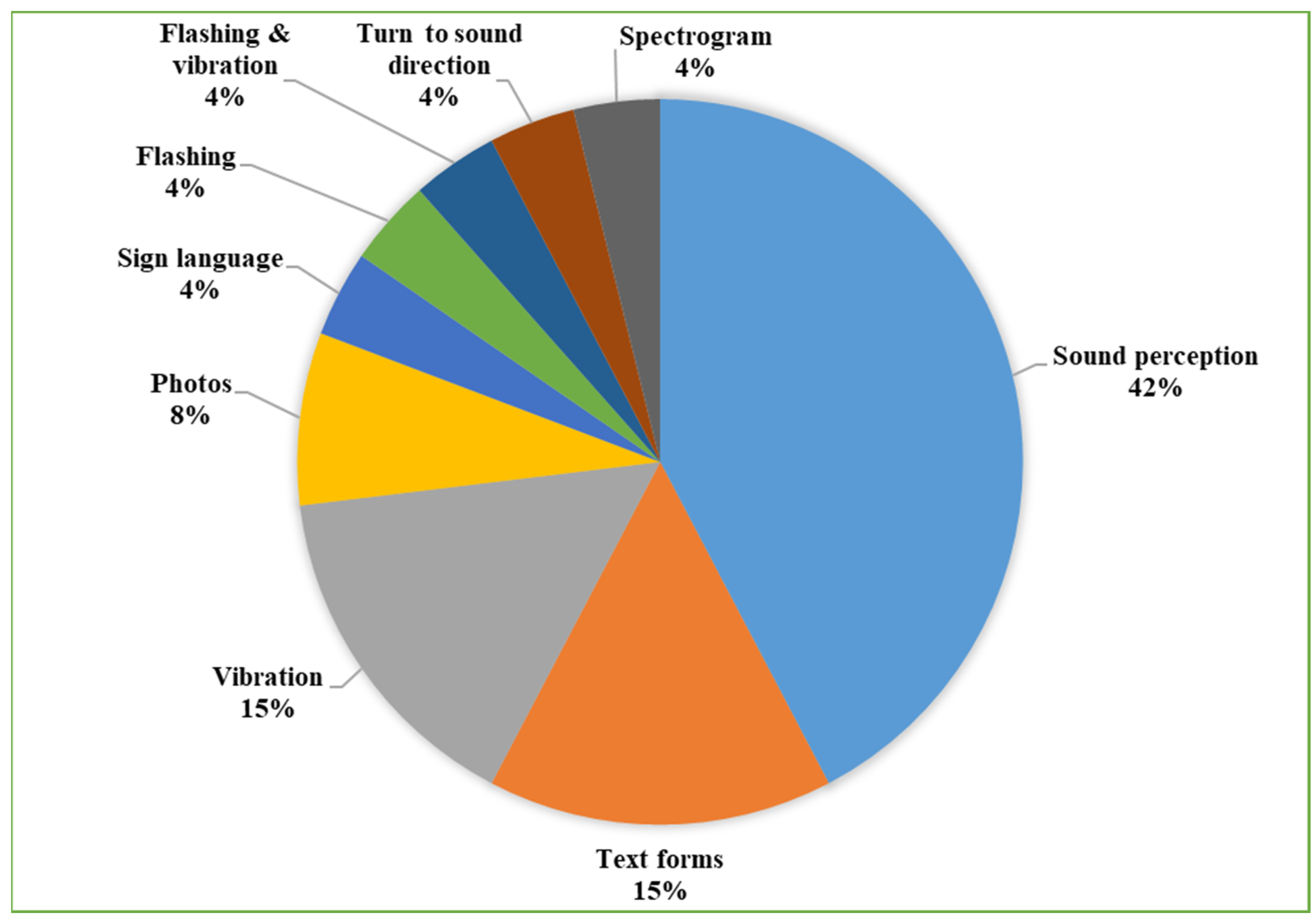

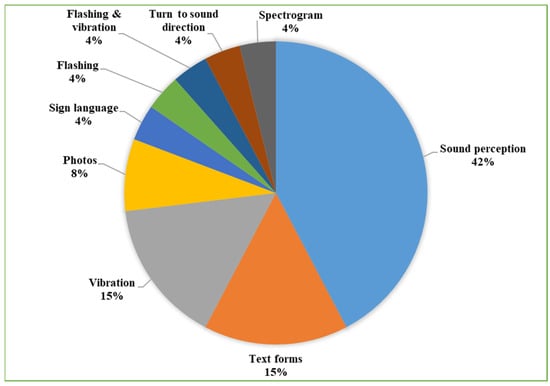

3.2. Common Methods Used to Interpret Sound Events for DHI Individuals

Interpreting sound events for DHI individuals involves converting auditory information into visual, vibrational, or aural information. DHI individuals often prefer visual methods for understanding sound information, such as sign language, text, spectrograms, photos, and flashing lights in different colors. Vibration can also convey the meaning of a sound to deaf people through suitable vibration patterns. For hearing-impaired individuals, sound can also be conveyed aurally, either by restoring hearing with cochlear implants (CIs) [47] or by improving it with a hearing aid [48]. Figure 3 illustrates the methods employed in each study to interpret sound events for DHI people. Aural presentation, that is, conveying sound events through the ears, was used in 42% of the articles [22,29,33,34,35,36,37,41,43,44,45]. Text was used in 15% of the articles [4,21,32,39] and vibration in 15% [25,26,27,42]. Photos were used in 8% of the articles [24,40]. Each of the following methods appeared in 4% of the articles: sign language [38], flashing [23], flashing combined with vibration [30], turn-to-sound direction [28], and spectrograms [31].
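The percentages above can be reproduced from the citation lists themselves, which also confirms that every included study is counted exactly once. The sketch stores each method's citations and rounds each share to the nearest whole percent.

```python
# Citation lists per interpretation method, as given in the text.
METHODS = {
    "aural": [22, 29, 33, 34, 35, 36, 37, 41, 43, 44, 45],
    "text": [4, 21, 32, 39],
    "vibration": [25, 26, 27, 42],
    "photos": [24, 40],
    "sign language": [38],
    "flashing": [23],
    "flashing and vibration": [30],
    "turn-to-sound direction": [28],
    "spectrogram": [31],
}

def shares(groups):
    """Percentage of studies per group, rounded to the nearest whole percent."""
    total = sum(len(refs) for refs in groups.values())
    return {name: round(100 * len(refs) / total) for name, refs in groups.items()}

all_refs = [r for refs in METHODS.values() for r in refs]
print(len(all_refs), shares(METHODS))
```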

Figure 3.

Methods used to interpret sound events for DHI people.

3.3. Target Groups of the Included Studies

Some individuals have mild-to-moderate hearing loss, while others have severe-to-profound deafness. This variation in severity can influence the target groups that researchers choose to include in their studies, so it is important for researchers to clearly define their target population and specify their inclusion criteria. The included studies targeted three groups: 54% focused on hearing-impaired individuals [21,22,23,25,29,32,33,34,35,36,37,41,43,45], 19% on deaf individuals [24,27,28,38,39], and 27% on DHI individuals [4,26,30,31,40,42,44].
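The percentages above follow directly from the number of studies cited for each group, rounded to the nearest whole percent:

$$
\begin{aligned}
\text{hearing-impaired: } & \tfrac{14}{26} \approx 53.8\% \approx 54\%\\
\text{deaf: } & \tfrac{5}{26} \approx 19.2\% \approx 19\%\\
\text{DHI: } & \tfrac{7}{26} \approx 26.9\% \approx 27\%\\
\text{total: } & 14 + 5 + 7 = 26
\end{aligned}
$$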

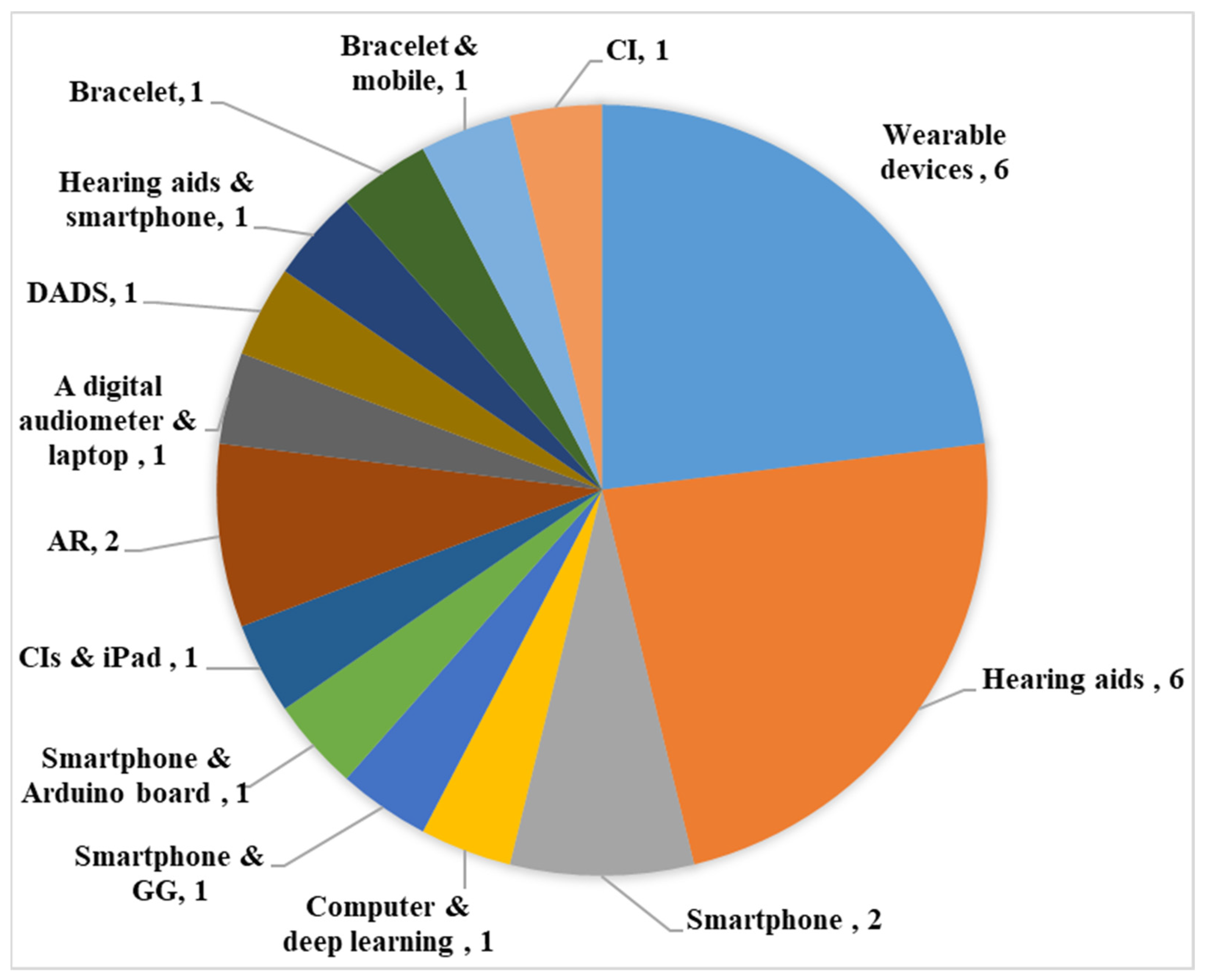

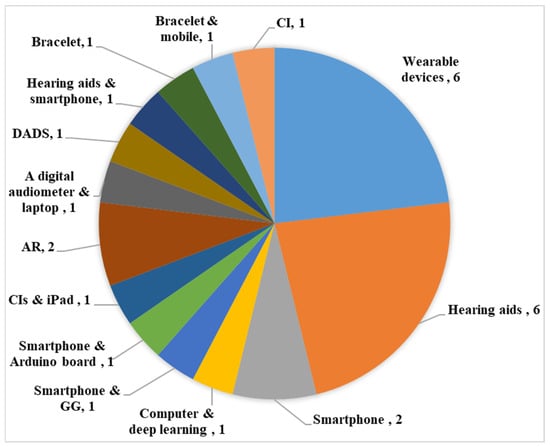

3.4. Assistive Technologies/Tools Used by DHI People to Recognize the Sound Event

Involving DHI individuals in everyday community life has become a central concern of ATs, which aim to facilitate social inclusion, meet users’ needs, and maintain privacy. ATs also play a role in enhancing auditory perception [49]. ATs comprise a wide range of assistive, rehabilitative, and adaptive devices for people with special needs [50]. AT solutions take multiple forms; the best known are implants, portable devices, and wearables, which have attracted significant interest recently. These assistive technologies support sustainable sound detection by increasing the accuracy of sound detection systems, shortening detection and alerting times, and improving user interfaces to make the technology more approachable and accessible. Each AT offers DHI people specific advantages, and the best option often depends on the person’s lifestyle, interests, and degree of hearing loss.

Hearing aids, which amplify sound and are equipped with a noise suppression system, are used to treat hearing loss [51]. Augmented reality (AR) is an interactive technology that enhances the real-world environment with digital content [52]. Cochlear implants use a sound processor that sits behind the ear, picks up sounds, and sends signals to a receiver implanted under the skin; the receiver passes the signals through a wire to tiny electrodes inserted into the inner ear [53].

Many included studies relied on artificial intelligence (AI) tools to give ATs intelligent, adaptive, and intuitive capabilities that improve accessibility. Among these tools is machine learning (ML), which focuses on creating statistical models and algorithms that let computers carry out tasks without explicit human instruction [54]. ML algorithms learn from large amounts of data and then make predictions or decisions by applying statistical analysis and exploiting patterns. In sound classification, ML examines patterns in the data that point to specific events. These data can be created and gathered from a variety of sources, including existing datasets and recorded sound. Two families of algorithms are commonly employed: supervised learning algorithms, which are trained on labeled data, and unsupervised learning algorithms, which can find patterns in data without explicit labels. After training on the gathered dataset, the model can predict specific sound events: the learned patterns are applied to new data, and the model’s output is used to identify the underlying situation. Deep learning is a branch of ML centered on multi-layered neural networks that analyze data and draw conclusions from large-scale datasets; it is effective at tasks such as audio processing, image recognition, and natural language processing [55]. Loosely inspired by the human brain, deep learning can learn representations from unstructured or unlabeled data [56]. Deep learning is a major step towards automated systems that can identify hazardous situations, increase public safety, and improve the quality of life of DHI individuals [57].

Figure 4 shows the assistive technologies used in each study. Wearable devices were used in six articles [25,26,27,28,30,32] and hearing aids in six articles [33,35,36,41,43,44]. Smartphones were used in two studies [29,38], and AR technology in two studies [31,42]. Each of the remaining studies used a single technology or combination: a computer with deep learning [23]; a smartphone and Google Glass (GG) [4]; a smartphone and an Arduino board [21]; CIs and an iPad [37]; a digital audiometer and a laptop [22]; a deaf assistance digital system (DADS) [24]; hearing aids and a smartphone [34]; a bracelet [39]; bracelets and smartphones [40]; and CIs alone [45].
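To make the supervised-learning workflow described above concrete (train on labeled data, then predict the class of new data), here is a minimal nearest-centroid classifier. It is a deliberately simple stand-in, not the method of any included study, which mostly used richer ML and deep learning models. The two features (RMS energy and zero-crossing rate), the class names, and all training values are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical labeled examples: (RMS energy, zero-crossing rate) -> sound class.
TRAINING_DATA = [
    ((0.60, 0.24), "siren"),
    ((0.55, 0.26), "siren"),
    ((0.30, 0.05), "door knock"),
    ((0.35, 0.04), "door knock"),
    ((0.10, 0.45), "running water"),
    ((0.12, 0.48), "running water"),
]

def fit_centroids(examples):
    """Supervised training step: average the feature vectors of each labeled class."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }

def predict(centroids, features):
    """Prediction step: assign unseen features to the class with the nearest centroid."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

centroids = fit_centroids(TRAINING_DATA)
print(predict(centroids, (0.58, 0.25)))
```

A practical system would normalize features, use many more examples per class, and evaluate on held-out recordings; deep learning replaces the hand-picked features with learned representations.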

Figure 4.

Assistive technologies and tools used in included studies.

4. Discussion

People who are DHI have benefited greatly from the advancement and application of sound detection technologies, which have improved communication [4], safety [32], independence, and accessibility [38], contributing to a more sustainable quality of life. Most of the included studies relied on vibration and visual notifications. DHI individuals frequently rely more on other senses, such as vision and touch, to perceive sound and compensate for the limitations of their auditory system [58]. Therefore, a multimodal approach that integrates multiple sensory channels may provide a more sustainable and effective means of communication and alerting for DHI individuals. Vibration may be limited in how much auditory information it can convey through different patterns, but it conveys a sound’s direction very accurately [18]. Visual notification can complement auditory input [59], particularly in situations where auditory information is limited or absent. Vision is considered the most important channel for understanding sound information, and it is also the main channel through which sign language, the primary language of deaf people, is learned [60]. Visual forms, including sign language, text, spectrograms, photos, and flashing lights, can convey the meaning of a sound accurately.
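Conveying a sound’s direction through vibration presupposes that the device first estimates that direction. A minimal sketch, assuming two microphones a known distance apart and a distant (far-field) source: the arrival-time difference $\Delta t$ gives the angle $\theta = \arcsin(c\,\Delta t / d)$, with $c$ the speed of sound and $d$ the microphone spacing. The three-motor layout (left, front, right) and the 30° sector boundary are hypothetical choices, not taken from [18].

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C

def direction_deg(delay_s, mic_spacing_m):
    """Far-field angle of arrival from the inter-microphone delay.

    delay_s is the arrival time at the left microphone minus the arrival time
    at the right microphone; 0 degrees is straight ahead, positive is to the right.
    """
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp values pushed out of range by noise
    return math.degrees(math.asin(ratio))

def motor_for(angle_deg, sector_deg=30.0):
    """Pick which of three hypothetical vibration motors (left, front, right) to drive."""
    if angle_deg < -sector_deg:
        return "left"
    if angle_deg > sector_deg:
        return "right"
    return "front"

print(motor_for(direction_deg(0.0003, 0.15)))
```

With only two microphones the estimate cannot distinguish front from back; adding a third microphone or using motion of the wearer is the usual way around this.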

Most studies interpreted sound for DHI individuals through auditory perception. This reflects the essential goal of assistive hearing devices, which is to improve auditory perception sustainably, especially for people who are hard of hearing. Text helps DHI people extract the meaning of a sound; however, text forms such as captioning require language competency to comprehend, which limits them to literate deaf people [61]. Nevertheless, text forms are especially important for deaf people, as they enable them to communicate with hearing people [62]. Detecting sound and translating it into sign language on deaf-assistive devices has significantly benefited deaf people in their daily lives. Although some deaf individuals can use other communication modalities, such as spoken and written language, they often prefer sign language [63]. Vibration can also interpret sound for deaf people, but only to a limited extent: for example, it can indicate a sound’s loudness through different vibration intensities [30], or assign distinct vibration levels and magnitudes to each sound [26].
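Signaling loudness through vibration intensity, as in [30], can be sketched by measuring a frame’s level in decibels relative to digital full scale (dBFS) and mapping it onto a few discrete vibration levels. The thresholds and the three-level scale are hypothetical; dBFS is not sound pressure level, so a real device would need microphone calibration to map levels to physical loudness.

```python
import math

# Hypothetical mapping: (minimum level in dBFS, vibration level), loudest first.
LEVELS = [(-20.0, 3), (-35.0, 2), (-50.0, 1)]

def dbfs(samples):
    """Level of a frame in dB relative to full scale (a full-scale constant gives 0 dBFS)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")

def vibration_level(samples):
    """Return 0 (no vibration) up to 3 (strongest) depending on loudness."""
    level_db = dbfs(samples)
    for threshold_db, level in LEVELS:
        if level_db >= threshold_db:
            return level
    return 0

print(vibration_level([0.05] * 100))
```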

Most of the included studies focused on individuals with hearing impairments, potentially reflecting the larger global population of hearing-impaired individuals compared with deaf individuals [1]. Deaf individuals usually confront greater barriers in communication, social integration, and education than hearing-impaired individuals, which may create a greater need for research and interventions tailored specifically to the deaf community. Notably, few ATs developed in research settings can help deaf people [2].

The majority of the included papers relied on wearable devices and hearing aids as assistive technologies for DHI individuals. Wearable devices are widely adopted in the DHI community for perceiving sound and speech; they enable deaf individuals to carry out their daily activities independently, without relying on external assistance. In addition, wearable devices have remarkable potential because they are cost-effective and lightweight [4,64] and can provide real-time results with high accuracy in detecting speech and sound [46]. Wearable devices may include a screen, camera, flashlight, microphone, and vibration motor. Hearing aids are the primary treatment for hearing loss, are effective in improving hearing [65], and are considered a comparatively inexpensive solution. Smartphones are widely recognized as mobile assistive tools for DHI people because they offer valuable features, such as platforms that promote independent living, communication, social networking, a sense of security, and emergency assistance [66]. A smartphone has the features required to build a basic assistive device, but it cannot locate a sound source [67,68]. Smartphones can also connect to other smart devices, for instance, in smart homes [69]. AR has the potential to overcome communication barriers and promote inclusivity: it can offer real-time captioning and environmental sound alerts [31] and can indicate the direction of a sound [70]. Other assistive technologies, including smart home systems, Raspberry Pi boards, and laptops, have also been used to meet diverse needs within the DHI community. In addition, combining two or more assistive technologies in one system, such as a smartphone and Google Glass, enables researchers to perform specific tasks and obtain efficient outcomes.

5. Conclusions

Technologies such as sound detection have been developed to improve the quality of life of DHI individuals by giving them access to essential information, greater independence, and safety. This SLR reviewed non-verbal sound detection technologies that aim to improve DHI individuals’ lives by examining twenty-six studies that met the eligibility criteria. The findings showed that most of the included studies relied on vibration and visual alerts as the primary methods of informing DHI people about sound events. This emphasizes the value of multimodal feedback, since tactile and visual signals provide accessible, non-invasive ways of informing DHI people about sounds. In addition, most studies interpreted sound for DHI people through auditory perception, which indicates that the majority of these technologies targeted hearing-impaired people rather than people with total deafness or DHI people in general. Wearable devices and hearing aids were the predominant assistive technologies in the included studies, underscoring the convenience of such devices in the daily lives of DHI people.

5.1. Limitations

This SLR has several limitations. The search was confined to four databases and specific keywords, and it covered only a given period. The review included only journal articles that were available in full text and published in English. Some studies were omitted because they lacked sufficient information, for example, because they did not provide notifications to DHI people or did not report adequate results. Because the SLR targeted the DHI population, studies of deafblind people and of people with unilateral hearing loss were omitted. The SLR also excluded studies on the causes of deafness and on interventions related to ear anatomy. Furthermore, it omitted speech recognition, although speech recognition is closely related to sound detection. As a result, relevant studies outside the search strategy or design of this SLR may have been missed, which could limit the comprehensiveness of the review.

5.2. Recommendations and Future Directions

Advances in non-speech sound detection technologies can significantly improve the sustainability of DHI individuals’ quality of life, as these technologies are essential to enhancing communication, safety, and overall well-being. Based on this SLR, the authors recommend more empirical studies and greater integration of AI; these recommendations are relevant to AT manufacturers, software designers, healthcare providers, and DHI people. To achieve higher quality, ATs should accurately detect sounds in any environment and provide understandable alerts, whether audio-visual or tactile, so that DHI individuals do not miss any sound the AT detects [71]. In addition, vibration patterns could be varied according to the importance of each sound to DHI individuals. Creating a unified platform to store sounds, including the different variants of each sound, would facilitate the identification and classification of sounds. Moreover, ATs should be available to DHI individuals at a reasonable price and should be highly accurate in recognizing speech and determining sound direction. Furthermore, ATs should be socially acceptable to DHI people, whether they are elderly, blind, or illiterate, and should protect their privacy. We hope these recommendations can inform researchers, AT manufacturers, and software developers working to improve the sustainability of DHI people’s quality of life.
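The recommendation to vary alerts by a sound’s importance can be sketched as a small multimodal alert policy. Everything here is hypothetical: the importance scores, the rule that more important sounds get more vibration pulses and a visual flash, and the choice to always show a text label. The point is only that one detected event can drive several sensory channels at once, with urgency controlling how insistent each channel is.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    vibration_pulses: int  # number of vibration pulses to play
    flash: bool            # whether to trigger a visual flash
    show_text: bool        # whether to display a text label for the sound

# Hypothetical importance scores (3 = urgent, 1 = informational).
IMPORTANCE = {"fire alarm": 3, "car horn": 3, "baby crying": 2, "doorbell": 1, "microwave beep": 1}

def alert_for(sound):
    """Map a detected sound to a multimodal alert; unknown sounds get the lowest importance."""
    importance = IMPORTANCE.get(sound, 1)
    return Alert(vibration_pulses=2 * importance, flash=importance >= 2, show_text=True)

print(alert_for("fire alarm"))
```

Keeping the policy in a table rather than in code makes it easy for users to adjust which sounds matter most to them.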

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/su16208976/s1, PRISMA Checklist.

Author Contributions

Conceptualization, H.B.M.M. and N.C.; methodology, H.B.M.M.; validation, H.B.M.M. and N.C.; formal analysis, H.B.M.M. and N.C.; investigation, H.B.M.M.; data curation, H.B.M.M.; writing—original draft preparation, H.B.M.M.; writing—review and editing, H.B.M.M. and N.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No data were used for this study.

Acknowledgments

The authors would like to acknowledge the Department of Computer Information Systems, Near East University, for its support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- World Health Organization. Deafness and Hearing Loss. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss#:~:text=Over%205%25%20of%20the%20world’s,will%20have%20disabling%20hearing%20loss (accessed on 27 February 2024).

- Hermawati, S.; Pieri, K. Assistive technologies for severe and profound hearing loss: Beyond hearing aids and implants. Assist. Technol. 2019, 32, 182–193.

- Zare, S.; Ghotbi-Ravandi, M.R.; ElahiShirvan, H.; Ahsaee, M.G.; Rostami, M. Predicting and weighting the factors affecting workers’ hearing loss based on audiometric data using C5 algorithm. Ann. Glob. Health 2019, 85, 88.

- Alkhalifa, S.; Al-Razgan, M. Enssat: Wearable technology application for the deaf and hard of hearing. Multimed. Tools Appl. 2018, 77, 22007–22031.

- Mweri, J. Privacy and Confidentiality in Health Care Access for People Who are Deaf: The Kenyan Case. Health Pol. 2018, 1, 2–5.

- Halim, Z.; Abbas, G. A kinect-based sign language hand gesture recognition system for hearing- and speech-impaired: A pilot study of Pakistani sign language. Assist. Technol. 2015, 27, 34–43.

- Singleton, J.L.; Remillard, E.T.; Mitzner, T.L.; Rogers, W.A. Everyday technology use among older deaf adults. Disabil. Rehabil. Assist. Technol. 2019, 14, 325–332. [Google Scholar] [CrossRef]

- Dornhoffer, J.R.; Holcomb, M.A.; Meyer, T.A.; Dubno, J.R.; McRackan, T.R. Factors influencing time to cochlear implantation. Otol. Neurotol. 2020, 41, 173. [Google Scholar] [CrossRef]

- Hrastinski, I.; Wilbur, R.B. Academic achievement of deaf and hard-of-hearing students in an ASL/English bilingual program. J. Deaf Stud. Deaf Educ. 2016, 21, 156–170. [Google Scholar] [CrossRef]

- Ashori, M.; Jalil-Abkenar, S.S. Emotional intelligence: Quality of life and cognitive emotion regulation of deaf and hard-of-hearing adolescents. Deaf Educ. Int. 2021, 23, 84–102. [Google Scholar] [CrossRef]

- Jaiyeola, M.T.; Adeyemo, A.A. Quality of life of deaf and hard of hearing students in Ibadan metropolis, Nigeria. PLoS ONE 2018, 13, e0190130. [Google Scholar] [CrossRef]

- Otoom, M.; Alzubaidi, M.A.; Aloufee, R. Novel navigation assistive device for deaf drivers. Assist. Technol. 2020, 34, 129–139. [Google Scholar] [CrossRef] [PubMed]

- Mesaros, A.; Heittola, T.; Virtanen, T. Metrics for polyphonic sound event detection. Appl. Sci. 2016, 6, 162. [Google Scholar] [CrossRef]

- Mirzaei, M.R.; Ghorshi, S.; Mortazavi, M. Audio-visual speech recognition techniques in augmented reality environments. Visual Comput. 2014, 30, 245–257. [Google Scholar] [CrossRef]

- Gfeller, B.; Roblek, D.; Tagliasacchi, M. One-shot conditional audio filtering of arbitrary sounds. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 501–505. [Google Scholar]

- Agrawal, J.; Gupta, M.; Garg, H. A review on speech separation in cocktail party environment: Challenges and approaches. Multimed. Tools Appl. 2023, 82, 31035–31067. [Google Scholar] [CrossRef]

- Zhao, C.; Liu, Y.; Yang, J.; Chen, P.; Gao, M.; Zhao, S. Sound-localisation performance in patients with congenital unilateral microtia and atresia fitted with an active middle ear implant. Eur. Arch. Otorhinolaryngol. 2021, 278, 31–39. [Google Scholar] [CrossRef]

- Mirzaei, M.; Kán, P.; Kaufmann, H. Effects of Using Vibrotactile Feedback on Sound Localization by Deaf and Hard-of-Hearing People in Virtual Environments. Electronics 2021, 10, 2794. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89. [Google Scholar] [CrossRef]

- Normadhi, N.B.A.; Shuib, L.; Nasir, H.N.M.; Bimba, A.; Idris, N.; Balakrishnan, V. Identification of personal traits in adaptive learning environment: Systematic literature review. Comput. Educ. 2019, 130, 168–190. [Google Scholar] [CrossRef]

- Aktaş, F.; Kavuş, E.; Kavuş, Y. A Real Time Infant Health Monitoring System for Hard of Hearing Parents by using Android-based Mobil Devices. J. Electr. Electron. Eng. 2017, 17, 3121–3127. [Google Scholar]

- Liu, T.; Wu, C.-C.; Huang, K.-C.; Liao, J.-J. Effects of frequency and signal-to-noise ratio on accuracy of target sound detection with varied inferences among Taiwanese hearing-impaired individuals. Appl. Acoust. 2020, 161, 107176. [Google Scholar] [CrossRef]

- Ramirez, A.E.; Donati, E.; Chousidis, C. A siren identification system using deep learning to aid hearing-impaired people. Eng. Appl. Artif. Intell. 2022, 114, 105000. [Google Scholar] [CrossRef]

- Saifan, R.R.; Dweik, W.; Abdel-Majeed, M. A machine learning based deaf assistance digital system. Comput. Appl. Eng. Educ. 2018, 26, 1008–1019. [Google Scholar] [CrossRef]

- Yağanoğlu, M.; Köse, C. Real-time detection of important sounds with a wearable vibration based device for hearing-impaired people. Electronics 2018, 7, 50. [Google Scholar] [CrossRef]

- Perrotta, M.V.; Asgeirsdottir, T.; Eagleman, D.M. Deciphering sounds through patterns of vibration on the skin. Neuroscience 2021, 458, 77–86. [Google Scholar] [CrossRef] [PubMed]

- Bandara, M.; Balasuriya, D. Design of a road-side threat alert system for deaf pedestrians. J. Inst. Eng. 2017, 2, 61–70. [Google Scholar] [CrossRef]

- Shimoyama, R. Wearable Hearing Assist System to Provide Hearing-Dog Functionality. Robotics 2019, 8, 49. [Google Scholar] [CrossRef]

- Teki, S.; Kumar, S.; Griffiths, T.D. Large-scale analysis of auditory segregation behavior crowdsourced via a smartphone app. PLoS ONE 2016, 11, e0153916. [Google Scholar] [CrossRef]

- Yağanoğlu, M.; Köse, C. Wearable vibration based computer interaction and communication system for deaf. Appl. Sci. 2017, 7, 1296. [Google Scholar] [CrossRef]

- Asakura, T. Augmented-Reality Presentation of Household Sounds for Deaf and Hard-of-Hearing People. Sensors 2023, 23, 7616.

- Chin, C.-L.; Lin, C.-C.; Wang, J.-W.; Chin, W.-C.; Chen, Y.-H.; Chang, S.-W.; Huang, P.-C.; Zhu, X.; Hsu, Y.-L.; Liu, S.-H. A Wearable Assistant Device for the Hearing Impaired to Recognize Emergency Vehicle Sirens with Edge Computing. Sensors 2023, 23, 7454.

- Lundbeck, M.; Grimm, G.; Hohmann, V.; Laugesen, S.; Neher, T. Sensitivity to angular and radial source movements as a function of acoustic complexity in normal and impaired hearing. Trends Hear. 2017, 21, 2331216517717152.

- Bhat, G.S.; Shankar, N.; Panahi, I.M.S. Design and Integration of Alert Signal Detector and Separator for Hearing Aid Applications. IEEE Access 2020, 8, 106296–106309.

- Lundbeck, M.; Grimm, G.; Hohmann, V.; Bramsløw, L.; Neher, T. Effects of Directional Hearing Aid Settings on Different Laboratory Measures of Spatial Awareness Perception. Audiol. Res. 2018, 8, 215.

- Lundbeck, M.; Hartog, L.; Grimm, G.; Hohmann, V.; Bramsløw, L.; Neher, T. Influence of multi-microphone signal enhancement algorithms on the acoustics and detectability of angular and radial source movements. Trends Hear. 2018, 22, 2331216518779719.

- Pralus, A.; Hermann, R.; Cholvy, F.; Aguera, P.-E.; Moulin, A.; Barone, P.; Grimault, N.; Truy, E.; Tillmann, B.; Caclin, A. Rapid Assessment of Non-Verbal Auditory Perception in Normal-Hearing Participants and Cochlear Implant Users. J. Clin. Med. 2021, 10, 2093.

- da Rosa Tavares, J.E.; Victória Barbosa, J.L. Apollo SignSound: An intelligent system applied to ubiquitous healthcare of deaf people. J. Reliab. Intell. Environ. 2021, 7, 157–170.

- Otálora, A.S.; Moreno, N.C.; Osorio, D.E.C.; Trujillo, L.A.G. Prototype of bracelet detection alarm sounds for deaf and hearing loss. ARPN J. Eng. Appl. Sci. 2017, 12, 1111–1117.

- An, J.-H.; Koo, N.-K.; Son, J.-H.; Joo, H.-M.; Jeong, S. Development on Deaf Support Application Based on Daily Sound Classification Using Image-based Deep Learning. JOIV: Int. J. Inform. Vis. 2022, 6, 250–255.

- Jemaa, A.B.; Irato, G.; Zanela, A.; Brescia, A.; Turki, M.; Jaïdane, M. Congruent auditory display and confusion in sound localization: Case of elderly drivers. Transp. Res. Part F Traffic Psychol. Behav. 2018, 59, 524–534.

- Mirzaei, M.; Kan, P.; Kaufmann, H. EarVR: Using ear haptics in virtual reality for deaf and Hard-of-Hearing people. IEEE Trans. Vis. Comput. Graph. 2020, 26, 2084–2093.

- Brungart, D.S.; Cohen, J.; Cord, M.; Zion, D.; Kalluri, S. Assessment of auditory spatial awareness in complex listening environments. J. Acoust. Soc. Am. 2014, 136, 1808–1820.

- Picou, E.M.; Rakita, L.; Buono, G.H.; Moore, T.M. Effects of increasing the overall level or fitting hearing aids on emotional responses to sounds. Trends Hear. 2021, 25, 23312165211049938.

- Hamel, B.L.; Vasil, K.; Shafiro, V.; Moberly, A.C.; Harris, M.S. Safety-relevant environmental sound identification in cochlear implant candidates and users. Laryngoscope 2020, 130, 1547–1551.

- Yağanoğlu, M. Real time wearable speech recognition system for deaf persons. Comput. Electr. Eng. 2021, 91, 107026.

- Fullerton, A.M.; Vickers, D.A.; Luke, R.; Billing, A.N.; McAlpine, D.; Hernandez-Perez, H.; Peelle, J.E.; Monaghan, J.J.; McMahon, C.M. Cross-modal functional connectivity supports speech understanding in cochlear implant users. Cereb. Cortex 2023, 33, 3350–3371.

- Dillard, L.K.; Der, C.M.; Laplante-Lévesque, A.; Swanepoel, D.W.; Thorne, P.R.; McPherson, B.; de Andrade, V.; Newall, J.; Ramos, H.D.; Kaspar, A. Service delivery approaches related to hearing aids in low-and middle-income countries or resource-limited settings: A systematic scoping review. PLoS Glob. Public Health 2024, 4, e0002823.

- Han, J.S.; Lim, J.H.; Kim, Y.; Aliyeva, A.; Seo, J.-H.; Lee, J.; Park, S.N. Hearing Rehabilitation with a Chat-Based Mobile Auditory Training Program in Experienced Hearing Aid Users: Prospective Randomized Controlled Study. JMIR mHealth uHealth 2024, 12, e50292.

- Kuriakose, B.; Shrestha, R.; Sandnes, F.E. Tools and technologies for blind and visually impaired navigation support: A review. IETE Tech. Rev. 2022, 39, 3–18.

- Andersen, A.H.; Santurette, S.; Pedersen, M.S.; Alickovic, E.; Fiedler, L.; Jensen, J.; Behrens, T. Creating clarity in noisy environments by using deep learning in hearing aids. Semin. Hear. 2021, 42, 260–281.

- Fitria, T.N. Augmented reality (AR) and virtual reality (VR) technology in education: Media of teaching and learning: A review. Int. J. Comput. Inf. Syst. 2023, 4, 14–25.

- Athanasopoulos, M.; Samara, P.; Athanasopoulos, I. Advances in 3D Inner Ear Reconstruction Software for Cochlear Implants: A Comprehensive Review. Methods Protoc. 2024, 7, 46.

- Iyortsuun, N.K.; Kim, S.-H.; Jhon, M.; Yang, H.-J.; Pant, S. A review of machine learning and deep learning approaches on mental health diagnosis. Healthcare 2023, 11, 285.

- Halbouni, A.; Gunawan, T.S.; Habaebi, M.H.; Halbouni, M.; Kartiwi, M.; Ahmad, R. Machine learning and deep learning approaches for cybersecurity: A review. IEEE Access 2022, 10, 19572–19585.

- ZainEldin, H.; Gamel, S.A.; Talaat, F.M.; Aljohani, M.; Baghdadi, N.A.; Malki, A.; Badawy, M.; Elhosseini, M.A. Silent no more: A comprehensive review of artificial intelligence, deep learning, and machine learning in facilitating deaf and mute communication. Artif. Intell. Rev. 2024, 57, 188.

- Smailov, N.; Dosbayev, Z.; Omarov, N.; Sadykova, B.; Zhekambayeva, M.; Zhamangarin, D.; Ayapbergenova, A. A novel deep CNN-RNN approach for real-time impulsive sound detection to detect dangerous events. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 271–280.

- Ohshiro, K.; Cartwright, M. How people who are deaf, Deaf, and hard of hearing use technology in creative sound activities. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility, Athens, Greece, 23–26 October 2022; pp. 1–4.

- Findlater, L.; Chinh, B.; Jain, D.; Froehlich, J.; Kushalnagar, R.; Lin, A.C. Deaf and hard-of-hearing individuals’ preferences for wearable and mobile sound awareness technologies. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Birinci, F.G.; Saricoban, A. The effectiveness of visual materials in teaching vocabulary to deaf students of EFL. J. Lang. Linguist. Stud. 2021, 17, 628–645.

- Campos, V.; Cartes-Velásquez, R.; Bancalari, C. Development of an app for the dental care of Deaf people: Odontoseñas. Univers. Access Inf. Soc. 2020, 19, 451–459.

- Alothman, A.A. Language and Literacy of Deaf Children. Psychol. Educ. 2021, 58, 799–819.

- Domagała-Zyśk, E.; Podlewska, A. Strategies of oral communication of deaf and hard-of-hearing (D/HH) non-native English users. Eur. J. Spec. Needs Educ. 2019, 34, 156–171.

- Papatsimouli, M.; Sarigiannidis, P.; Fragulis, G.F. A Survey of Advancements in Real-Time Sign Language Translators: Integration with IoT Technology. Technologies 2023, 11, 83.

- Sanders, M.E.; Kant, E.; Smit, A.L.; Stegeman, I. The effect of hearing aids on cognitive function: A systematic review. PLoS ONE 2021, 16, e0261207.

- Guan, W.; Wang, S.; Liu, C. Influence of perceived discrimination on problematic smartphone use among Chinese deaf and hard-of-hearing students: Serial mediating effects of sense of security and social avoidance. Addict. Behav. 2023, 136, 107470.

- Li, X.; Yang, Y.; Ye, Z.; Wang, Y.; Chen, Y. EarCase: Sound Source Localization Leveraging Mini Acoustic Structure Equipped Phone Cases for Hearing-challenged People. In Proceedings of the Twenty-Fourth International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing, Washington, DC, USA, 23–26 October 2023; pp. 240–249.

- Ashraf, I.; Hur, S.; Park, Y. Smartphone sensor based indoor positioning: Current status, opportunities, and future challenges. Electronics 2020, 9, 891.

- Khan, M.A.; Ahmad, I.; Nordin, A.N.; Ahmed, A.E.-S.; Mewada, H.; Daradkeh, Y.I.; Rasheed, S.; Eldin, E.T.; Shafiq, M. Smart android based home automation system using internet of things (IoT). Sustainability 2022, 14, 10717.

- Gilberto, L.G.; Bermejo, F.; Tommasini, F.C.; García Bauza, C. Virtual Reality Audio Game for Entertainment & Sound Localization Training. ACM Trans. Appl. Percept. 2024.

- Lee, S.; Hubert-Wallander, B.; Stevens, M.; Carroll, J.M. Understanding and designing for deaf or hard of hearing drivers on Uber. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).