Sustainable Agile Identification and Adaptive Risk Control of Major Disaster Online Rumors Based on LLMs and EKGs

Abstract

1. Introduction

2. Related Work

2.1. Generative Topic Models

2.2. Graph-Based Models

2.3. Statistical Classification Methods

2.4. Novel Framework for Proactive Rumor Management

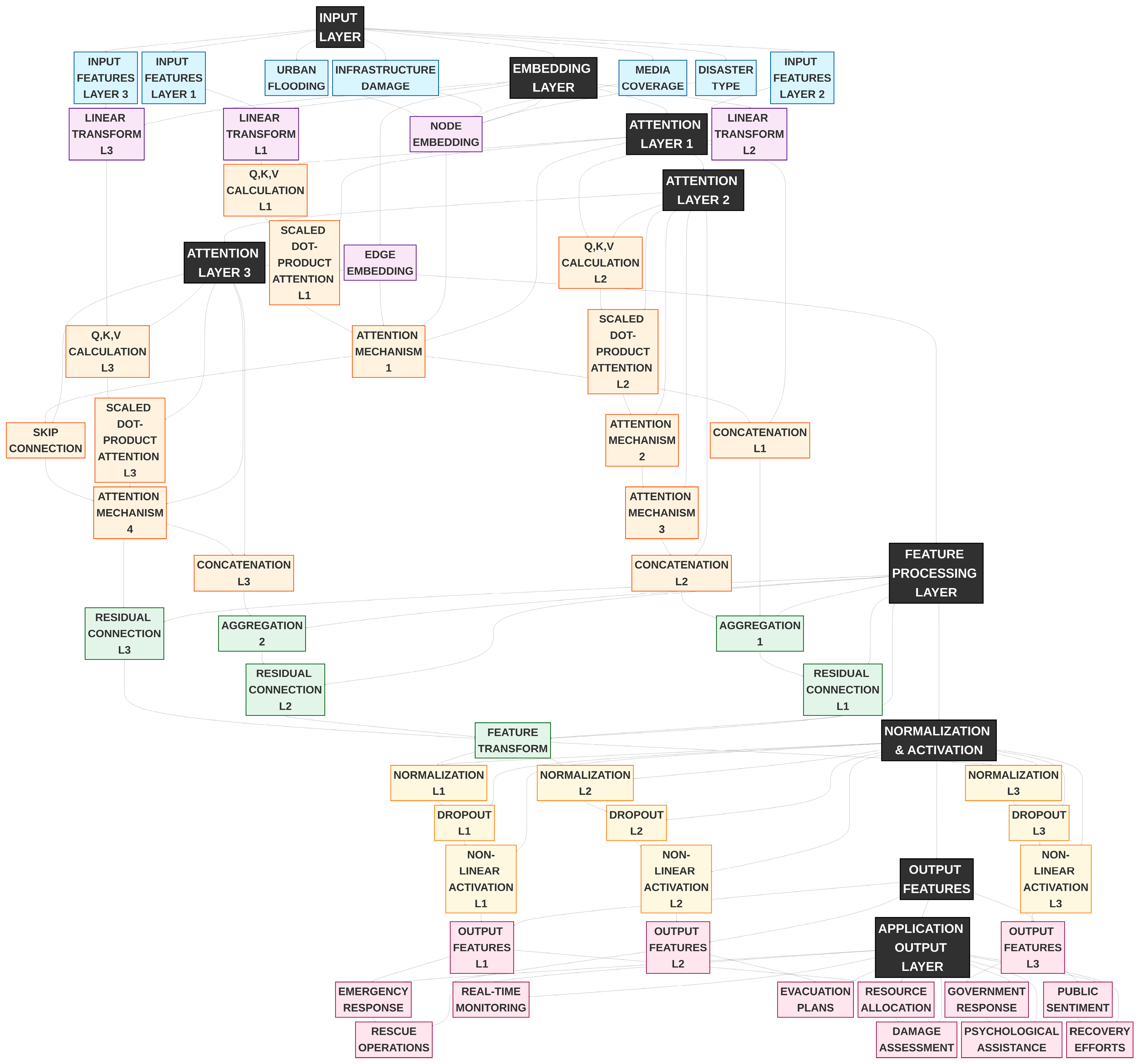

3. Proposed Approach

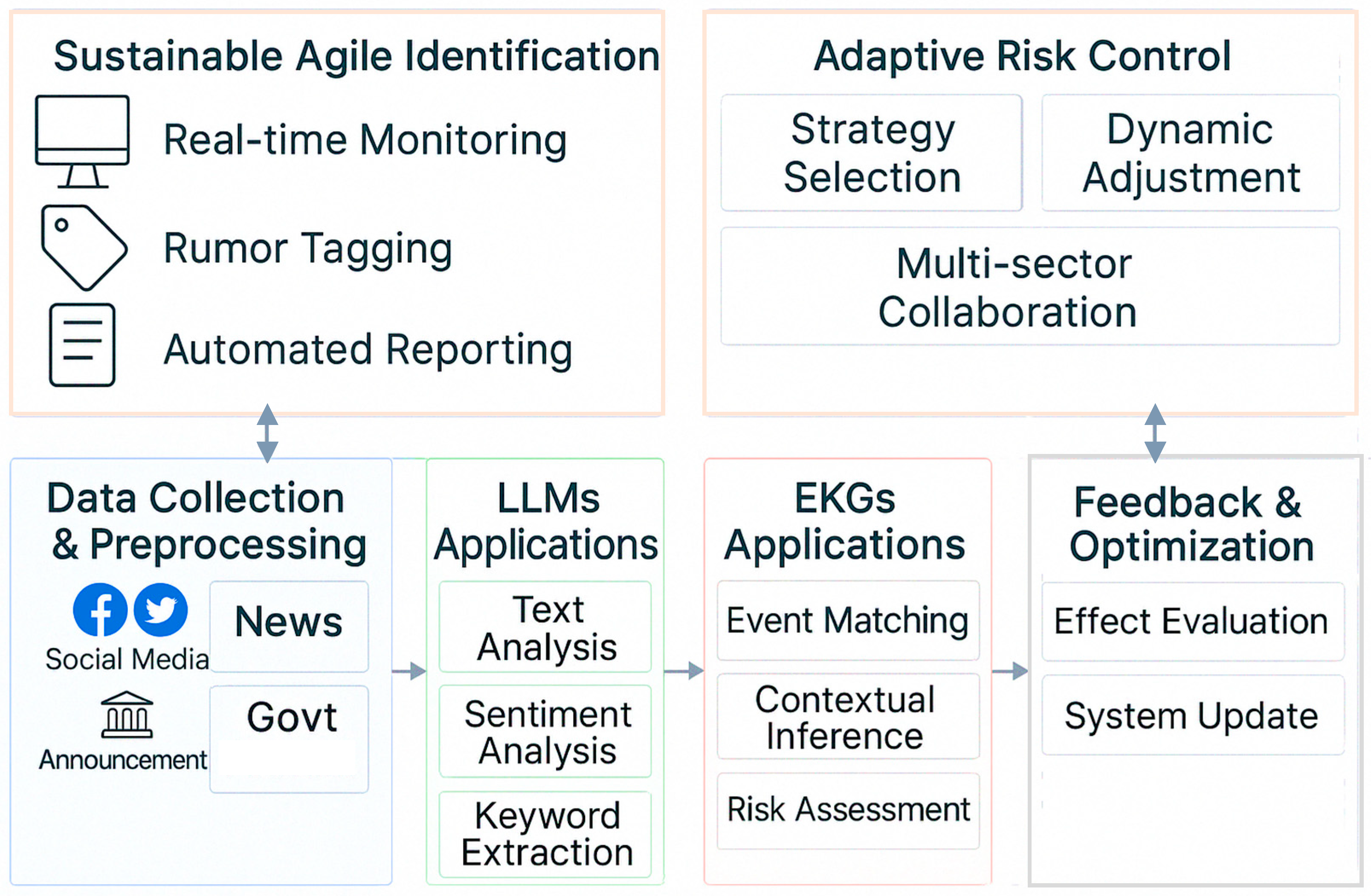

3.1. Overall Framework

3.2. Data Collection and Preprocessing

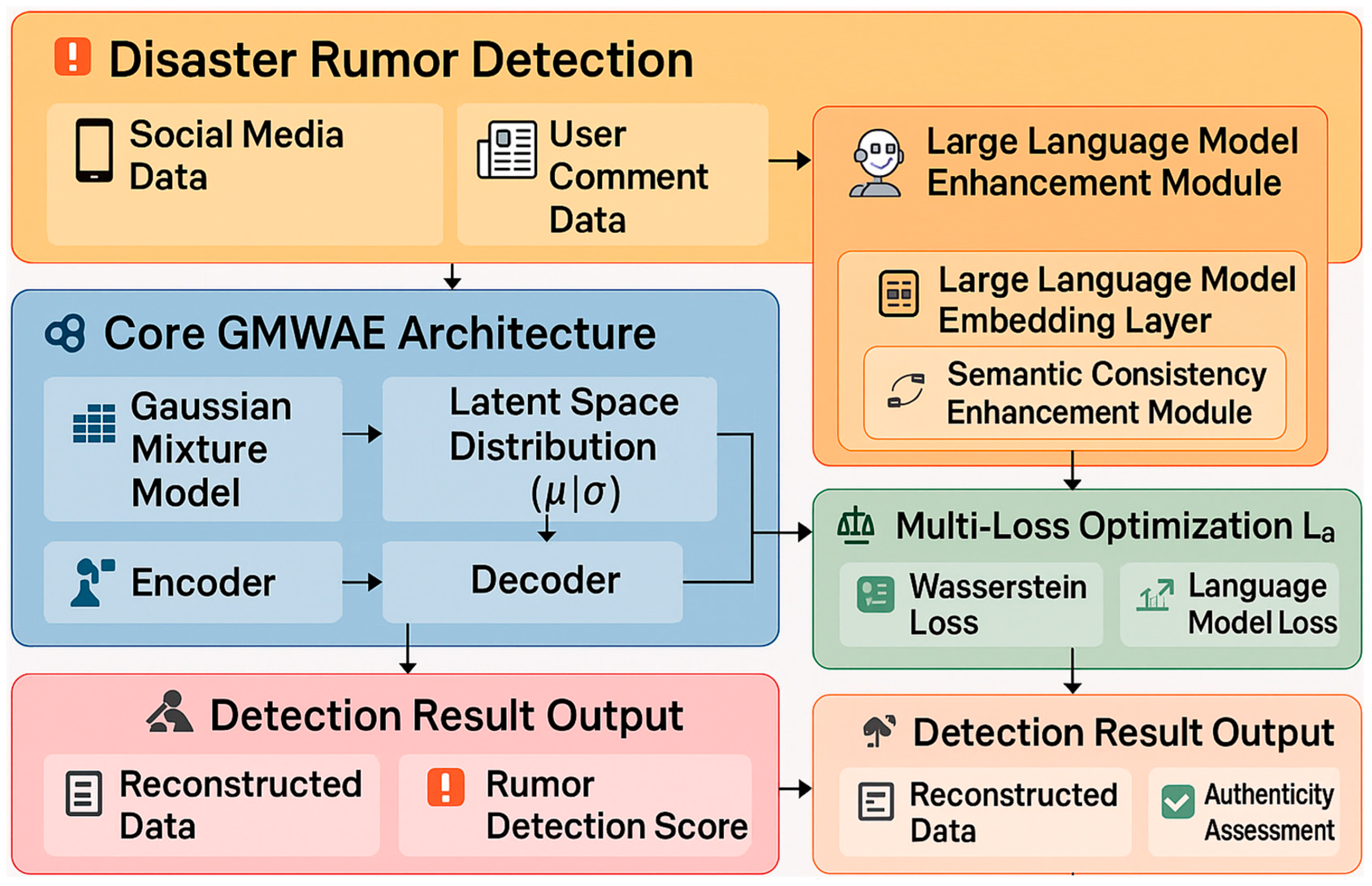

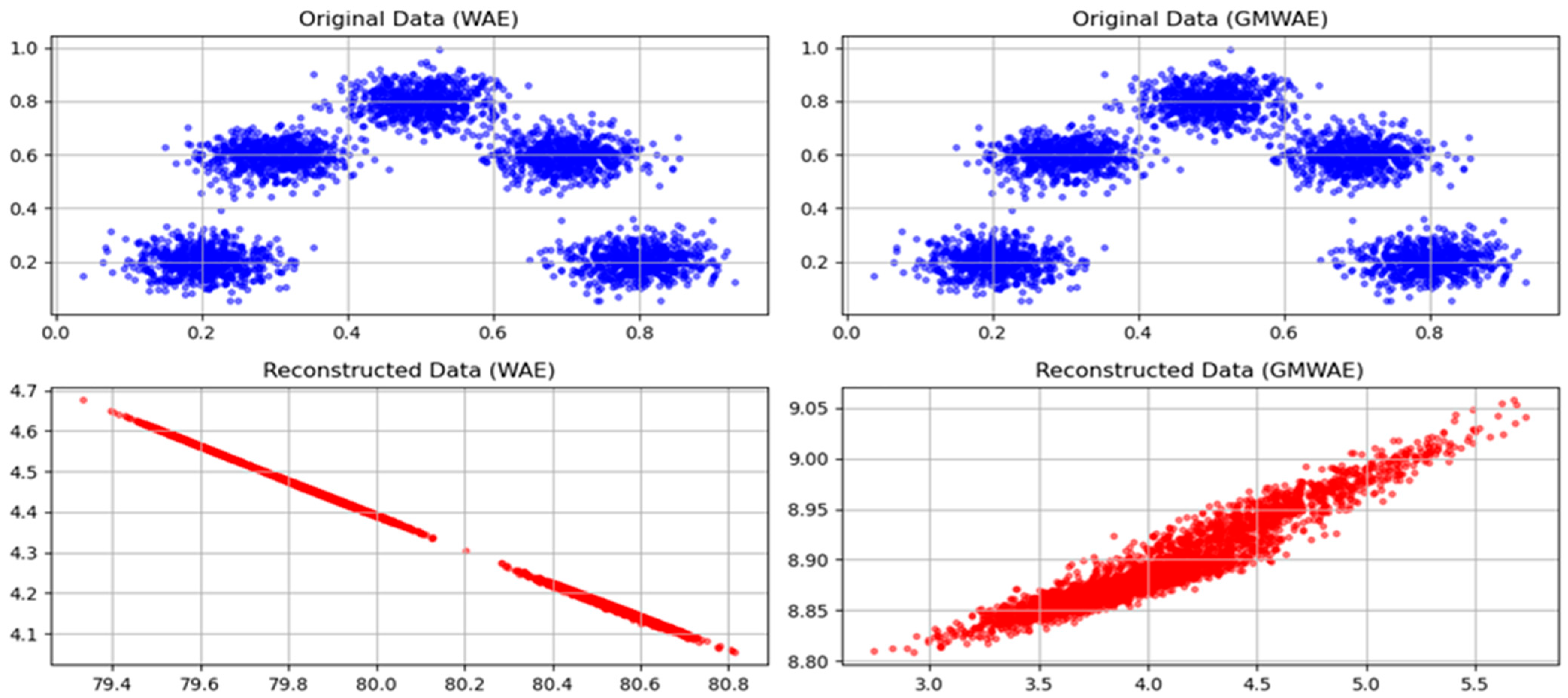

3.3. LLM Construction

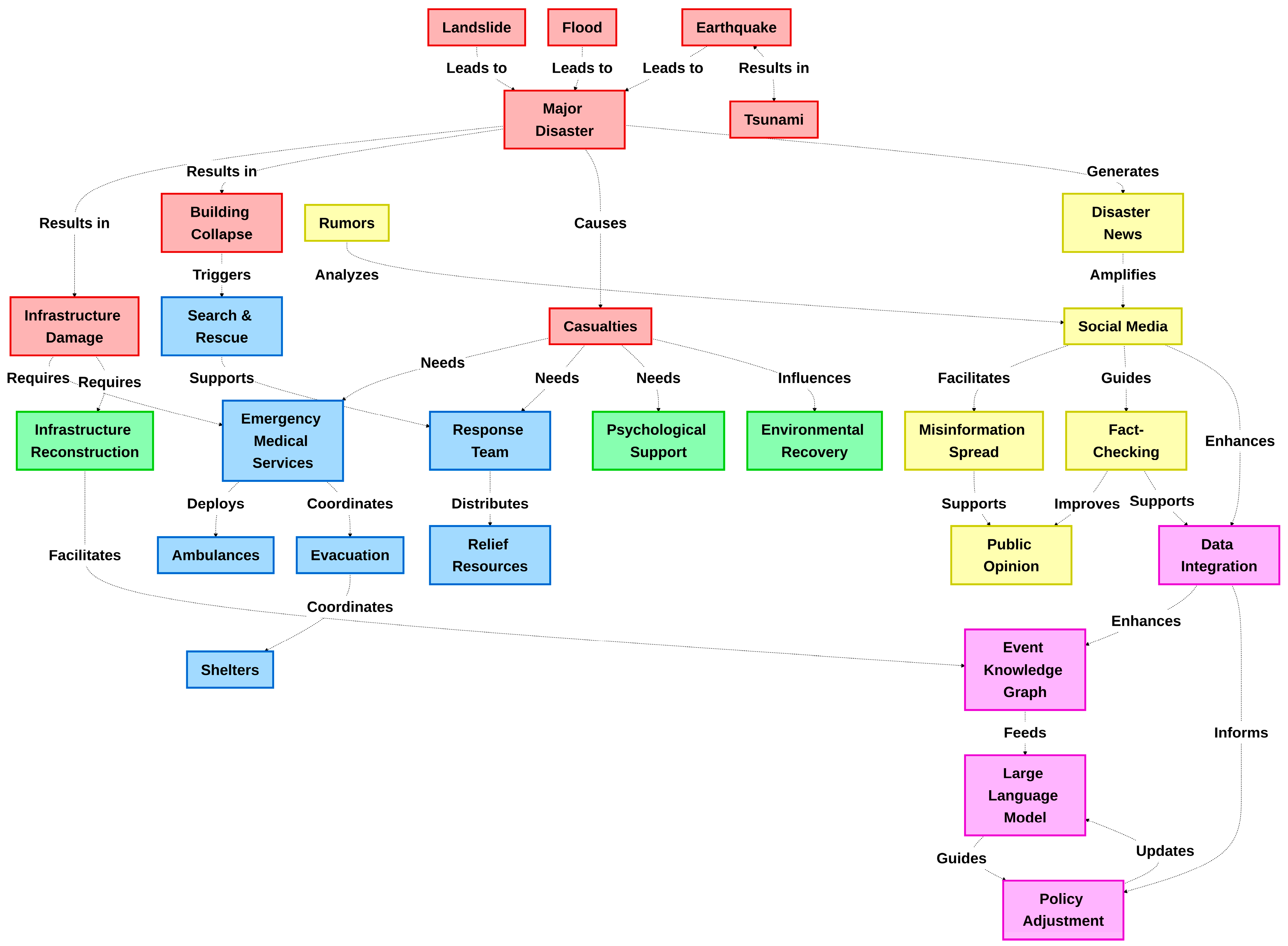

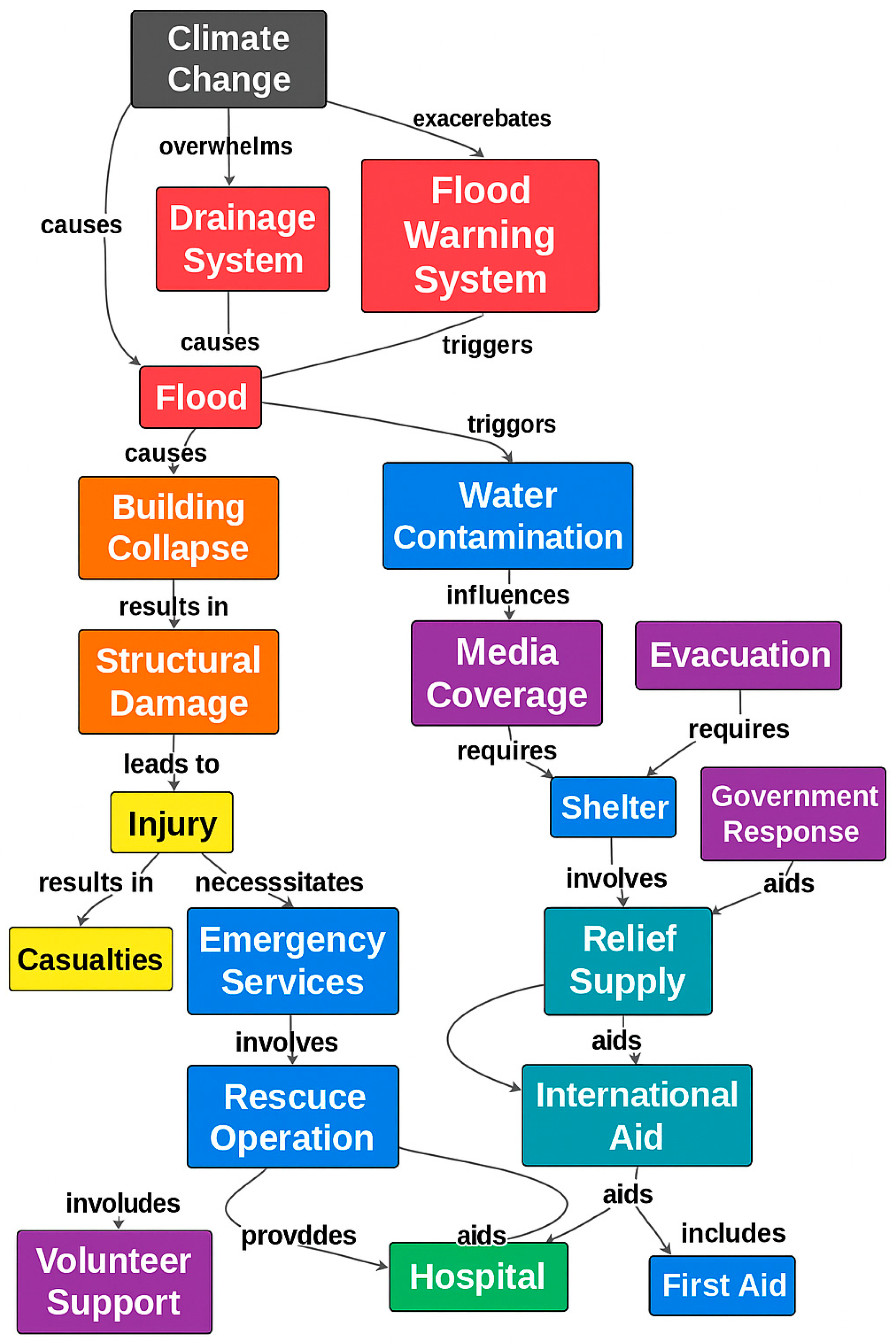

3.4. EKG Construction

3.5. Rumor Identification and Risk Control

4. Case Study of Major Disaster-Related Online Rumors

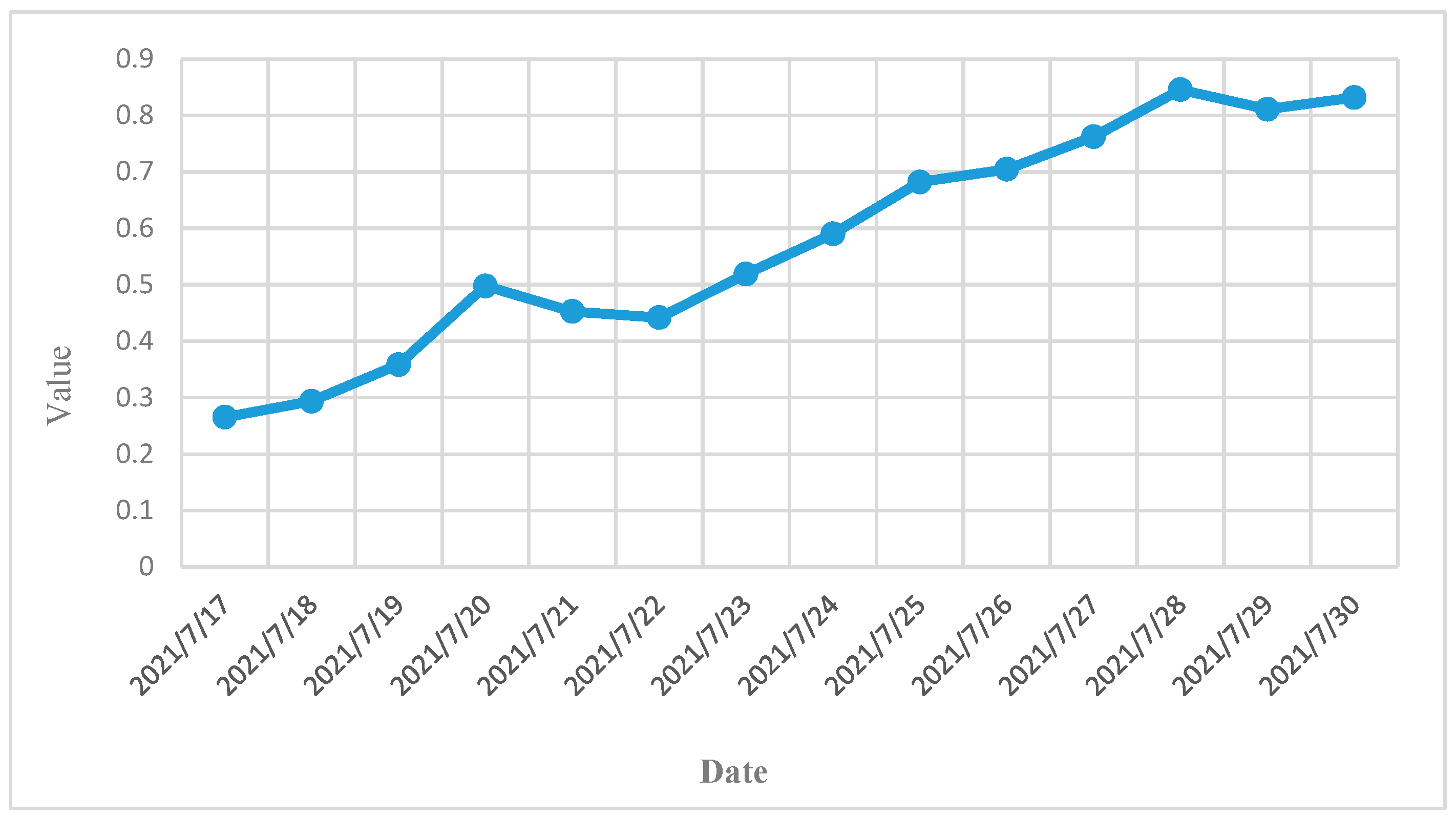

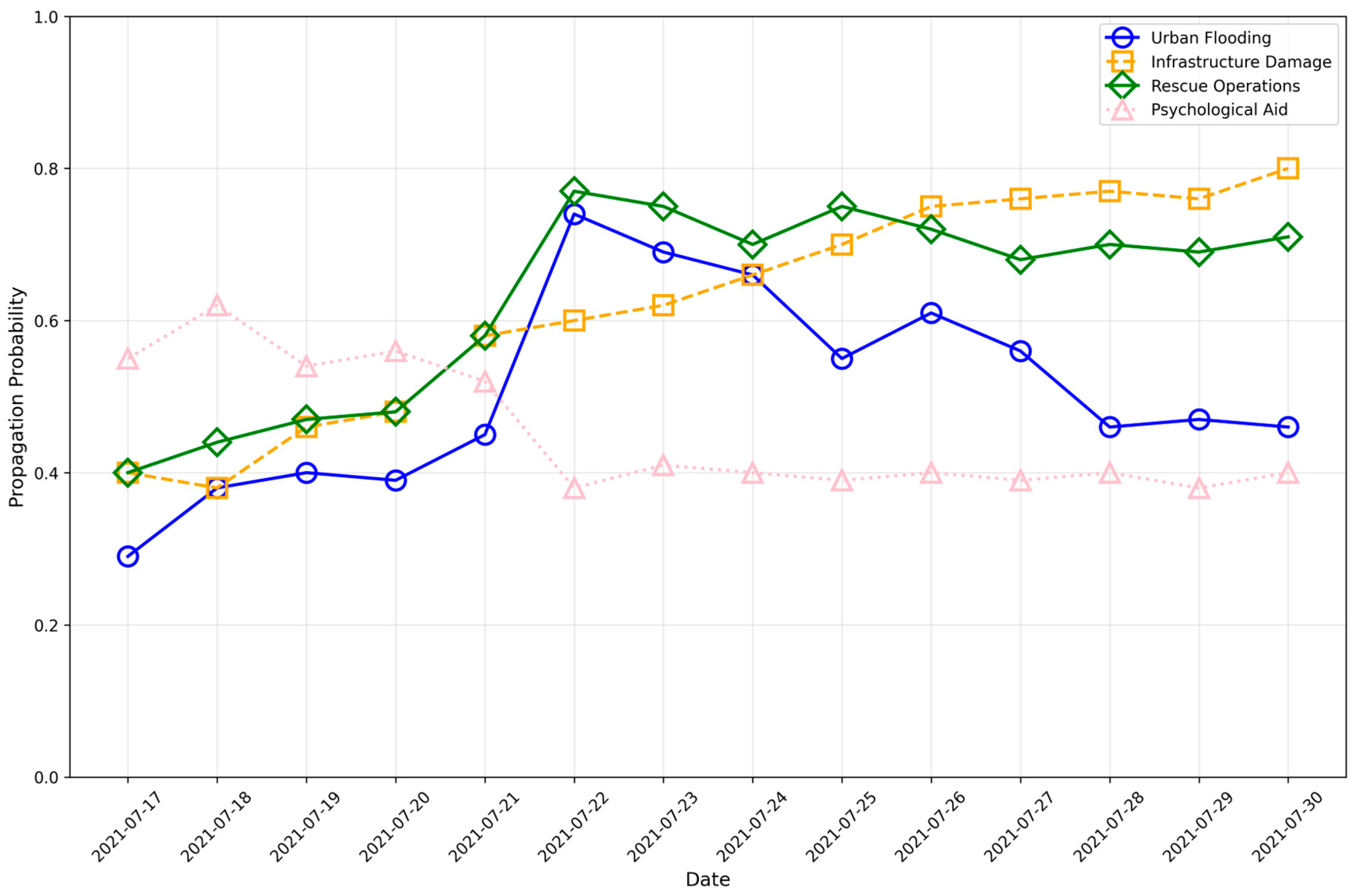

4.1. Data Collection and Preprocessing Related to the 2021 Zhengzhou Flood

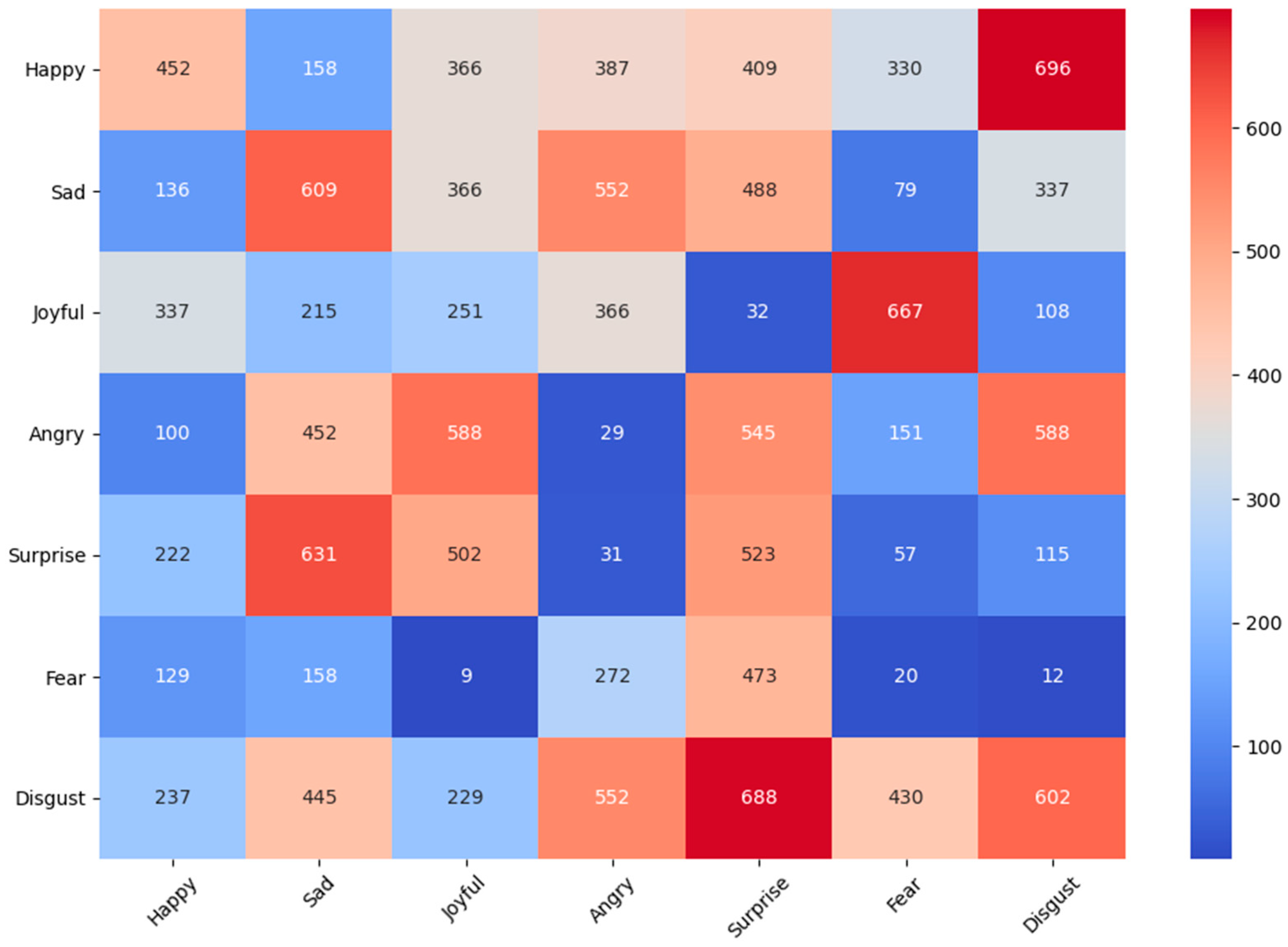

4.2. Emotion Analysis Based on LLMs

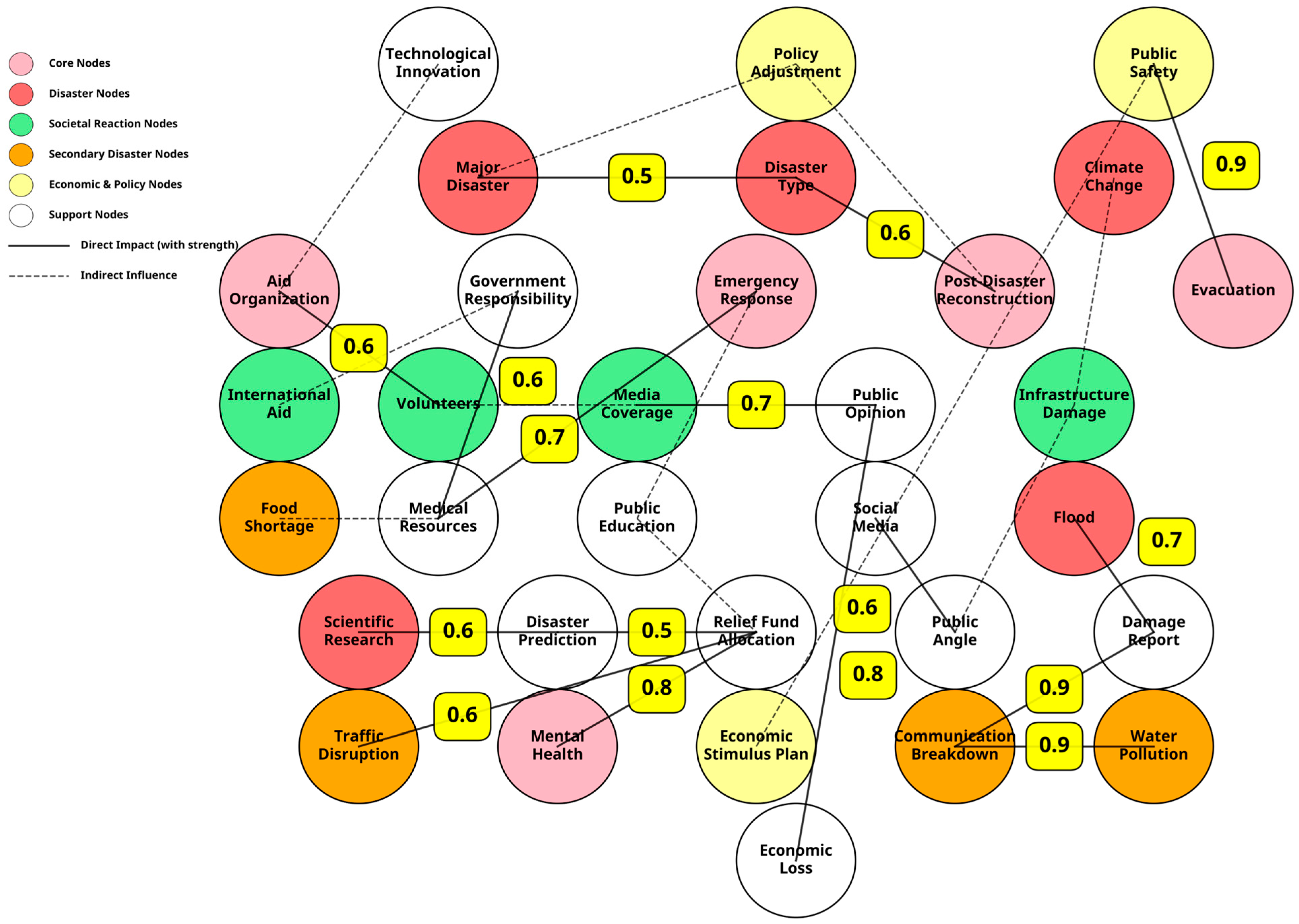

4.3. Research on the 2021 Zhengzhou Flood Case Study

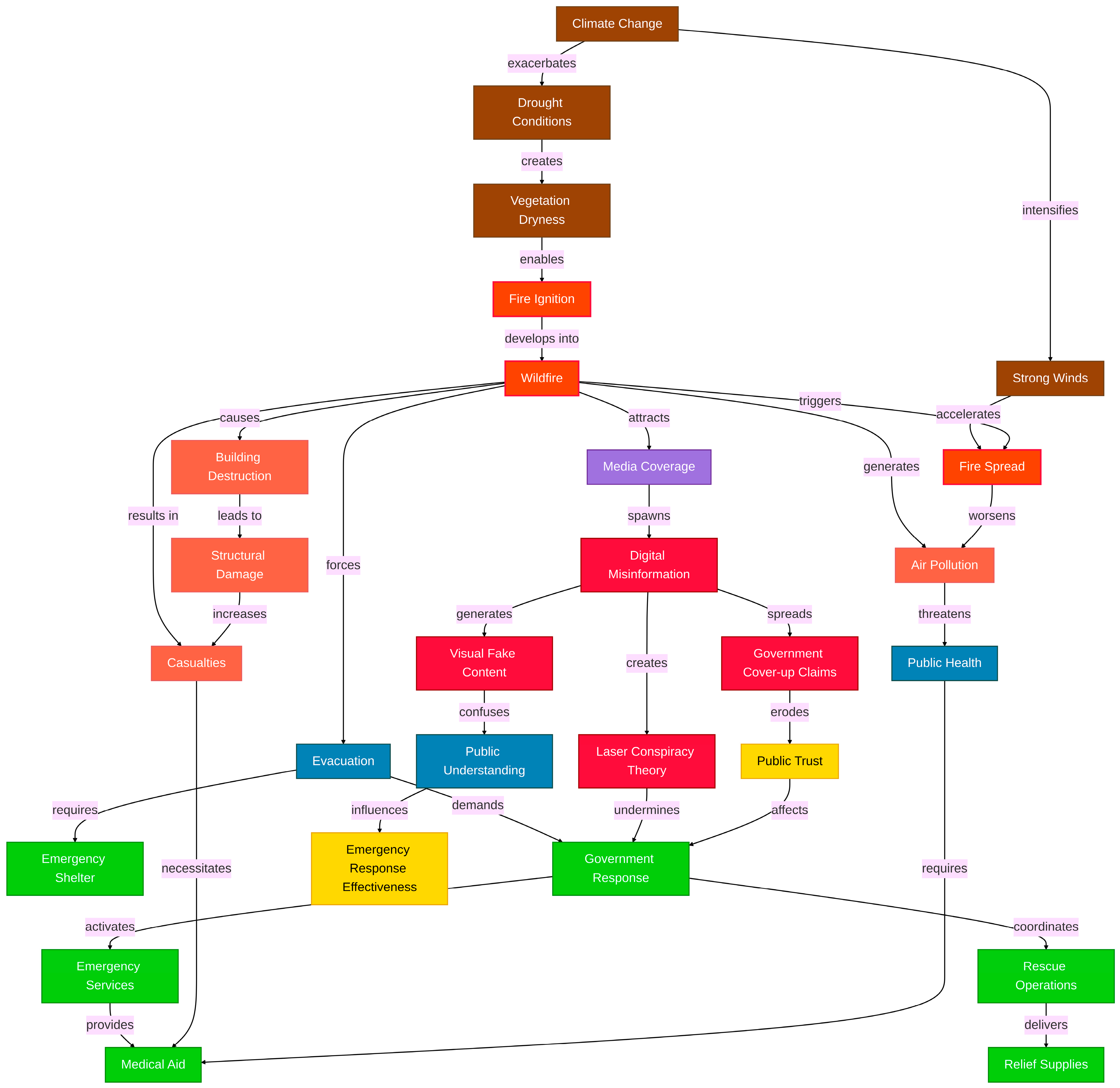

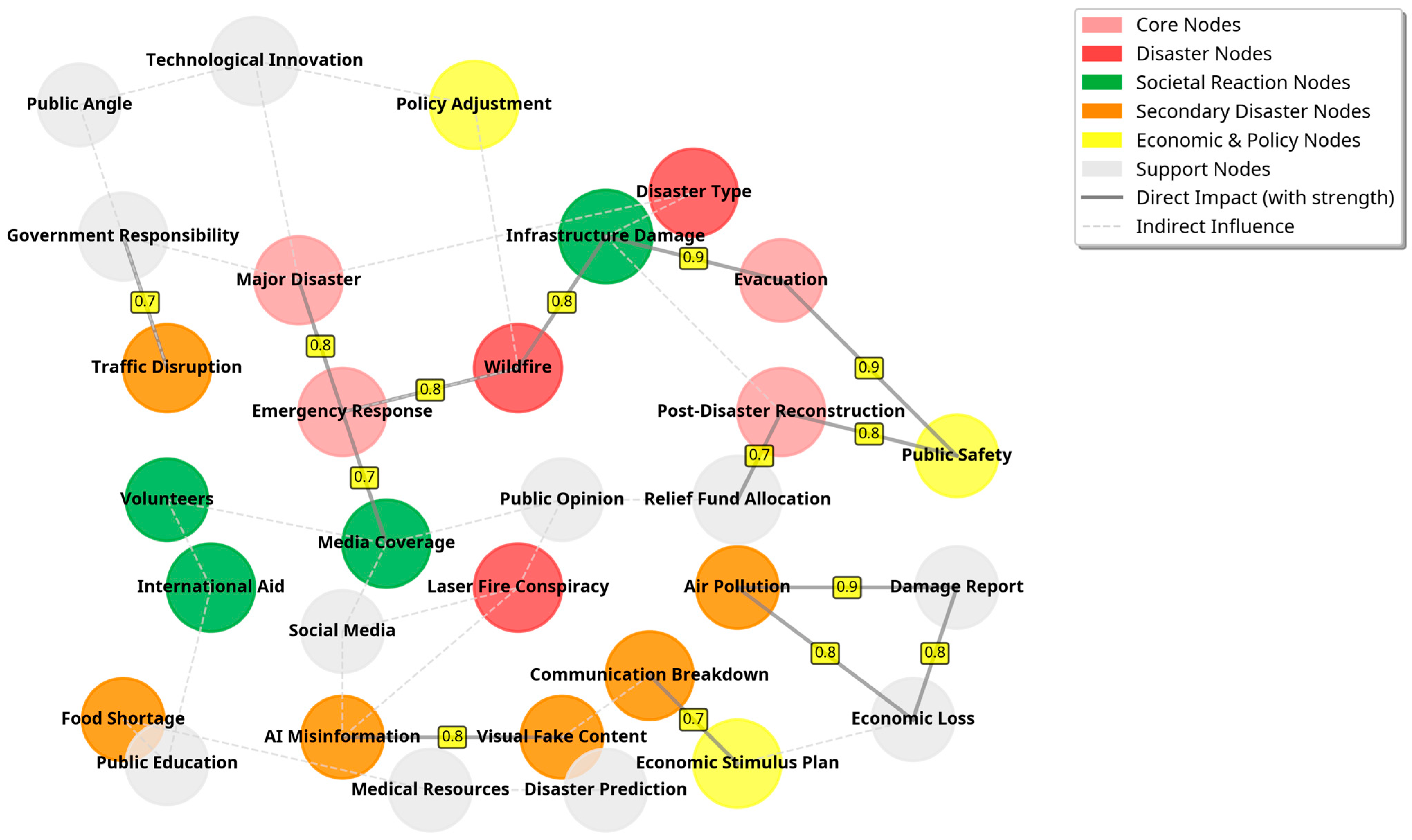

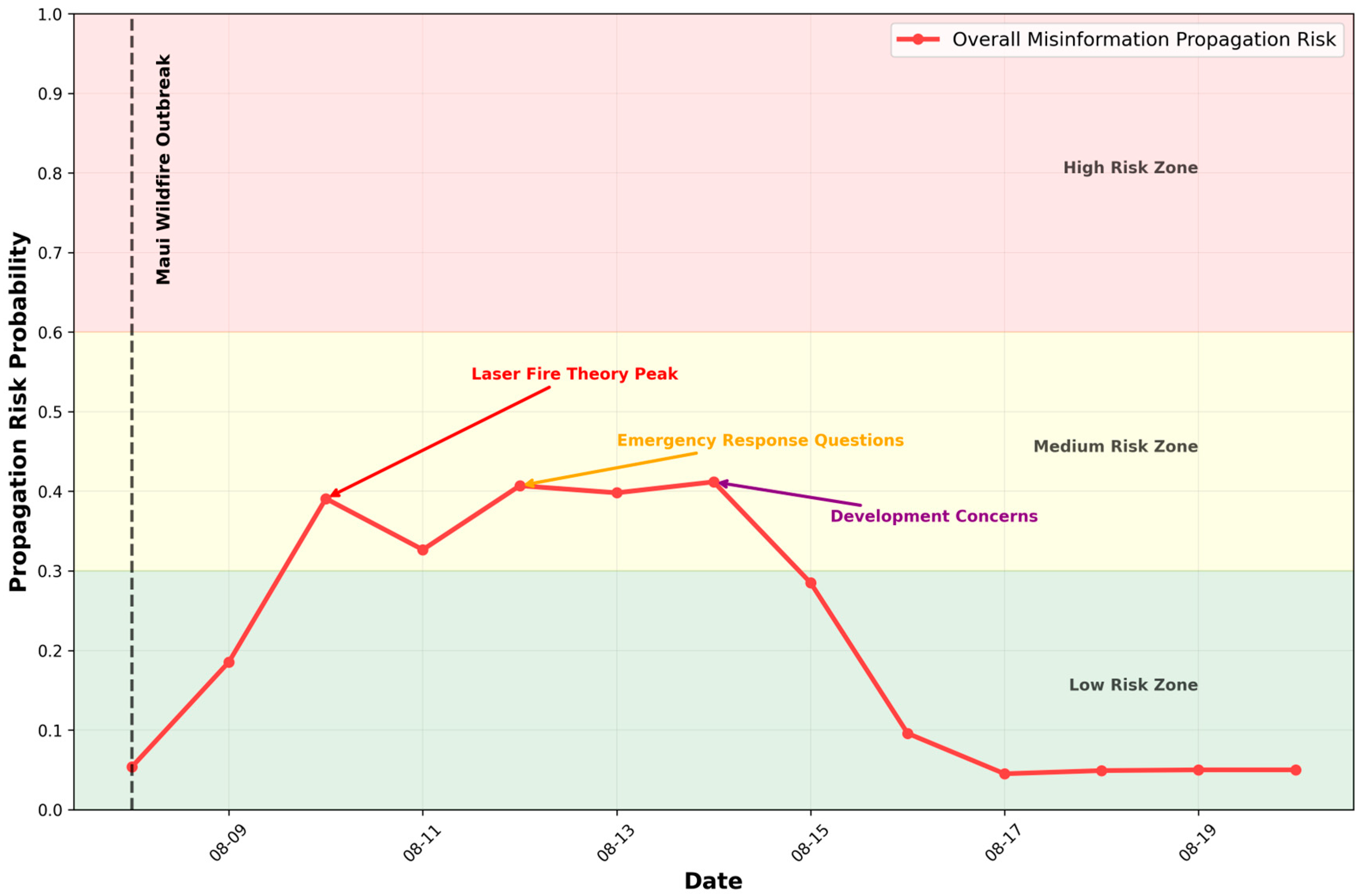

4.4. Supplementary Research on the 2023 Maui Wildfire Case Study

5. Discussion

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chen, L.; Liu, Y.; Chang, Y.D. Public opinion analysis of novel coronavirus from online data. J. Saf. Sci. Resil. 2020, 1, 120–127.
2. Alattar, F.; Shaalan, K. Emerging research topic detection using filtered-LDA. AI 2021, 2, 578–599.
3. Kosinski, M. Theory of Mind May Have Spontaneously Emerged in Large Language Models. arXiv 2024, arXiv:2302.02083v6.
4. Chen, Y.; Georgiou, T.T.; Tannenbaum, A. Optimal transport for Gaussian mixture models. IEEE Access 2018, 7, 6269–6278.
5. Delon, J.; Desolneux, A. A Wasserstein-type distance in the space of Gaussian mixture models. SIAM J. Imaging Sci. 2020, 13, 936–972.
6. Gaujac, B.; Feige, I.; Barber, D. Gaussian mixture models with Wasserstein distance. arXiv 2018, arXiv:1806.04465.
7. Kolouri, S.; Rohde, G.K.; Hoffmann, H. Sliced Wasserstein distance for learning Gaussian mixture models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3427–3436.
8. Liu, J.; Wang, P.; Shang, Z. IterDE: An Iterative Knowledge Distillation Framework for Knowledge Graph Embeddings. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 4488–4496.
9. Wang, S.; Zhou, X.G. Overview of Topic Detection and Tracking of Methods for Microblogs. In Proceedings of the International Conference on Education, Culture, Economic Management and Information Service, Changsha, China, 20–21 June 2020; pp. 242–248.
10. Zong, C.Q.; Xia, R.; Zhang, J.J. Topic Detection and Tracking. Text Data Min. 2021, 2, 201–225.
11. Valdez, D.; Pickett, A.C.; Goodson, P. Topic Modeling: Latent Semantic Analysis for the Social Sciences. Soc. Sci. Q. 2018, 99, 1665–1679.
12. Uthirapathy, S.E.; Sandanam, D. Topic Modelling and Opinion Analysis on Climate Change Twitter Data Using LDA And BERT Model. Procedia Comput. Sci. 2023, 218, 908–917.
13. Jaradat, S.; Matskin, M. On Dynamic Topic Models for Mining Social Media. Emerg. Res. Chall. Oppor. Comput. Soc. Netw. Anal. Min. 2019, 9, 209–230.
14. Zhu, H.M.; Qian, L.; Qin, W. Evolution analysis of online topics based on ‘word-topic’ coupling network. Scientometrics 2022, 127, 3767–3792.
15. Wang, J.M.; Wu, X.D.; Li, L. A framework for semantic connection based topic evolution with DeepWalk. Intell. Data Anal. 2018, 22, 211–237.
16. Virtanen, S. Uncovering dynamic textual topics that explain crime. R. Soc. Open Sci. 2021, 8, 1–14.
17. Du, Y.; Yi, Y.; Li, X. Extracting and tracking hot topics of micro-blogs based on improved Latent Dirichlet Allocation. Eng. Appl. Artif. Intell. 2020, 87, 103279.
18. Liu, W.; Jiang, L.; Wu, Y.S. Topic Detection and Tracking Based on Event Ontology. IEEE Access 2020, 8, 2995776.
19. Peng, X.; Han, C.; Ouyang, F. Topic tracking model for analyzing student-generated posts in SPOC discussion forums. Int. J. Educ. Technol. High. Educ. 2020, 17, 35.
20. Chen, X. Monitoring of Public Opinion on Typhoon Disaster Using Improved Clustering Model Based on Single-Pass Approach. SAGE Open 2023, 13, 1–16.
21. Chen, X.; Qiu, Z.Z. Research on Text Classification Based on WAE Improved Model. Comput. Simul. 2022, 39, 331–336.
22. Petrick, F.; Herold, C.; Petrushkov, P. Document-Level Language Models for Machine Translation. In Proceedings of the Eighth Conference on Machine Translation, Singapore, 6–7 December 2023; pp. 375–391.
23. Zhang, Y.H.; Song, K.H.; Cai, X.R. Multimodal Topic Detection in Social Networks with Graph Fusion. In Proceedings of the International Conference on Web Information Systems and Applications, Kaifeng, China, 24–26 September 2021; pp. 28–38.
24. Sagar, P.; Sanket, S.; Sandip, P. Topic Detection and Tracking in News Articles. In Proceedings of the International Conference on Information and Communication Technology for Intelligent Systems, Ahmedabad, India, 25–26 March 2017; pp. 420–426.
25. Guo, M.; Chen, C.; Hou, C.; Wu, Y.; Yuan, X. FGDGNN: Fine-Grained Dynamic Graph Neural Network for Rumor Detection on Social Media. In Findings of the Association for Computational Linguistics; Association for Computational Linguistics: Vienna, Austria, 2025; pp. 5676–5687.
26. Li, H.; Jiang, L.; Li, J. Continuous-time dynamic graph networks integrated with knowledge propagation for social media rumor detection. Mathematics 2024, 12, 3453.
27. Guan, S.; Cheng, X.; Bai, L.; Zhang, F.; Li, Z.; Zeng, Y.; Jin, X.; Guo, J. What is event knowledge graph: A survey. IEEE Trans. Knowl. Data Eng. 2022, 35, 7569–7589.
28. Mayank, M.; Sharma, S.; Sharma, R. DEAP-FAKED: Knowledge graph based approach for fake news detection. arXiv 2021, arXiv:2107.10648.
29. Qudus, U.; Röder, M.; Saleem, M.; Ngomo, A.C.N. Fact checking knowledge graphs—A survey. ACM Comput. Surv. 2025, 58, 1–36.
30. Kishore, A.; Kumar, G.; Patro, J. Multimodal fact checking with unified visual, textual, and contextual representations. arXiv 2025, arXiv:2508.05097.
31. Liu, Y.P.; Peng, H.; Li, J.X. Event detection and evolution in multi-lingual social streams. Front. Comput. Sci. 2020, 5, 213–227.
32. Sukhwan, J.; Wan, C.Y. An alternative topic model based on Common Interest Authors for topic evolution analysis. J. Informetr. 2020, 14, 101040.
33. Ankita, D.; Himadri, M.; Niladriar, S.D. Text categorization: Past and present. Artif. Intell. Rev. 2021, 54, 1–48.
34. Kuang, G.S.; Guo, Y.; Liu, Y. Bursty Event Detection via Multichannel Feature Alignment. In Proceedings of the ICBDC ’20: The 5th International Conference on Big Data and Computing, Chengdu, China, 28–30 May 2020; pp. 39–45.
35. Dai, T.J.; Xiao, Y.P.; Liang, X. ICS-SVM: A user retweet prediction method for hot topics based on improved SVM. Digit. Commun. Netw. 2022, 8, 186–193.
36. Meysam, A.; Mohammad, R. Topic Detection and Tracking Techniques on Twitter: A Systematic Review. Complexity 2021, 4, 1–15.
37. Zhu, W.; Tian, A.; Yin, C. Instance-Aware Prompt Tuning for Large Language Models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; Volume 1, pp. 14285–14304.
38. Zerveas, G.; Rekabsaz, N.; Eickhoff, C. Enhancing the ranking context of dense retrieval through reciprocal nearest neighbors. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 8798–8807.
39. Zhang, X.; Yang, Q.; Xu, D. XuanYuan 2.0: A large Chinese financial chat model with hundreds of billions parameters. arXiv 2023, arXiv:2305.12002.
40. Cho, S.; Jeong, S.; Seo, J. Discrete Prompt Optimization via Constrained Generation for Zero-shot Re-ranker. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Toronto, ON, Canada, 2023; pp. 960–971.
41. Sun, Z.; Shen, S.; Cao, S. Aligning Large Multimodal Models with Factually Augmented RLHF. In Findings of the Association for Computational Linguistics: ACL 2024; Association for Computational Linguistics: Toronto, ON, Canada, 2024; pp. 13088–13110.
42. Han, S.; Liu, J.C.; Wu, J.Y. Transforming Graphs for Enhanced Attribute Clustering: An Innovative Graph Transformer-Based Method. arXiv 2023, arXiv:2306.11307v3.
43. Ahsan, M.; Kumari, M.; Sharma, T.P. Rumors detection, verification and controlling mechanisms in online social networks: A survey. Online Soc. Netw. Media 2019, 14, 100050.
44. Lian, Y.; Liu, Y.; Dong, X. Strategies for controlling false online information during natural disasters: The case of Typhoon Mangkhut in China. Technol. Soc. 2020, 62, 101265.
45. Dong, Y.; Huo, L.; Perc, M.; Boccaletti, S. Adaptive rumor propagation and activity contagion in higher-order networks. Commun. Phys. 2025, 8, 261.
46. Liu, W.; Liu, J.; Niu, Z. Online social platform competition and rumor control in disaster scenarios: A zero-sum differential game approach with approximate dynamic programming. Complex Intell. Syst. 2025, 11, 461.

| Approach | Key Studies | Methods/Technologies | Applications |
|---|---|---|---|
| Generative Topic Models | Valdez et al. [11], Uthirapathy et al. [12], Jaradat et al. [13], Zhu et al. [14], Wang et al. [15], Virtanen [16], Du et al. [17], Liu et al. [18], Peng et al. [19], Chen et al. [20,21], Petrick et al. [22] | LDA, BERT, Transformers, WAE | Thematic analysis, rumor detection, topic tracking |
| Graph-Based Models | Zhang et al. [23], Sagar et al. [24], Guo et al. [25], Li et al. [26], Guan et al. [27], Mayank et al. [28], Qudus et al. [29], Kishore et al. [30] | GNNs, EKGs, DEAP-FAKED, MultiCheck | Event detection, multimodal verification, fact-checking |
| Statistical Classification | Liu et al. [31], Sukhwan et al. [32], Ankita et al. [33], Kuang et al. [34], Dai et al. [35], Meysam et al. [36], Zhu et al. [37], Zerveas et al. [38], Zhang et al. [39], Cho et al. [40], Sun et al. [41], Han et al. [42] | GPT, CNN-RNN-LLM, RL, predictive modeling | Rumor labeling, event mining, risk prediction |

| Evaluation Dimension | Evaluation Criteria | Formula/Indicator |
|---|---|---|
| Accuracy | Concept and Entity Precision | Precision = TP/(TP + FP) |
| Accuracy | Event Relationship Accuracy | Recall = TP/(TP + FN) |
| Completeness | Event Coverage | Coverage = Number of Entities/Total Entities |
| Completeness | Entity and Attribute Completeness | Attribute Completeness = Defined Attributes/Total Required Attributes |
| Scalability | Data Scalability | Scalability Factor = Processed Data Volume/Initial Data Volume |
| Scalability | Structural Scalability | Structural Complexity = Number of Nodes/Number of Edges |
| Query Efficiency | Response Time | Average Response Time |
| Query Efficiency | Resource Utilization | Resource Utilization Rate = Used Resources/Available Resources |
| Inference Capability | Inference Accuracy | Inference Precision = Successful Inferences/Total Inferences |
| Inference Capability | Inference Diversity | Inference Diversity Index = Inference Types/Total Inference Types |
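The ratio-style indicators in the table above can be sketched in a few lines of Python. This is a minimal illustration rather than the evaluation code used in the study: the function names and the example counts are hypothetical, and returning 0.0 for an empty denominator is an assumption made here for convenience.

```python
def ratio(numerator: float, denominator: float) -> float:
    """Divide two counts, returning 0.0 when the denominator is zero (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP)."""
    return ratio(tp, tp + fp)


def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN)."""
    return ratio(tp, tp + fn)


def attribute_completeness(defined: int, required: int) -> float:
    """Attribute Completeness = Defined Attributes / Total Required Attributes."""
    return ratio(defined, required)


def inference_precision(successful: int, total: int) -> float:
    """Inference Precision = Successful Inferences / Total Inferences."""
    return ratio(successful, total)


# Hypothetical counts, chosen only to exercise the formulas.
tp, fp, fn = 85, 15, 25
print(f"precision = {precision(tp, fp):.3f}")
print(f"recall = {recall(tp, fn):.3f}")
print(f"attribute completeness = {attribute_completeness(7, 10):.3f}")
print(f"inference precision = {inference_precision(44, 50):.3f}")
```

The remaining indicators (coverage, scalability factor, resource utilization rate, inference diversity index) have the same numerator-over-denominator shape and could reuse `ratio` directly.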

| Classification Model | Precision | Recall | F1 Score |
|---|---|---|---|
| LDA + BERT | 0.81 | 0.77 | 0.82 |
| VAE + BERT | 0.86 | 0.84 | 0.88 |
| WAE + BERT | 0.91 | 0.89 | 0.92 |
| GMWAE + BERT | 0.94 | 0.93 | 0.95 |
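For readers who want to relate the three columns, the sketch below computes F1 as the standard harmonic mean of precision and recall. That definition is an assumption here, since the table does not state how its F1 Score column was obtained or averaged. The helper name `f1_score` and the `reported` dictionary are illustrative; the precision and recall pairs are copied from the table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs as listed in the table above.
reported = {
    "LDA + BERT": (0.81, 0.77),
    "VAE + BERT": (0.86, 0.84),
    "WAE + BERT": (0.91, 0.89),
    "GMWAE + BERT": (0.94, 0.93),
}

for model, (p, r) in reported.items():
    print(f"{model}: harmonic-mean F1 = {f1_score(p, r):.3f}")
```

Under this definition, F1 always lies between precision and recall, which gives a quick consistency check when comparing tabulated values.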

| Evaluation Criteria | Evaluation Results for Figure 8 | Evaluation Results for Figure 9 |
|---|---|---|
| Concept and Entity Precision | 0.85 | 0.88 |
| Event Relationship Accuracy | 0.75 | 0.78 |
| Event Coverage | 0.80 | 0.85 |
| Entity and Attribute Completeness | 0.70 | 0.75 |
| Data Scalability | 0.90 | 0.92 |
| Structural Scalability | 0.85 | 0.88 |
| Response Time | 0.80 | 0.83 |
| Resource Utilization | 0.82 | 0.85 |
| Inference Accuracy | 0.88 | 0.91 |
| Inference Diversity | 0.87 | 0.89 |


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, X. Sustainable Agile Identification and Adaptive Risk Control of Major Disaster Online Rumors Based on LLMs and EKGs. Sustainability 2025, 17, 8920. https://doi.org/10.3390/su17198920
