Abstract

Efficient traffic management in urban areas represents a key challenge for modern cities, particularly in the context of sustainable development and reducing negative environmental impacts. This paper explores the application of artificial intelligence (AI) in optimizing urban traffic through a combination of reinforcement learning (RL) and predictive analytics. The focus is on simulating the traffic network in Belgrade (Serbia, Europe), where RL algorithms, such as Deep Q-Learning and Proximal Policy Optimization, are used for dynamic traffic signal control. The model optimized traffic signal operations at intersections with high traffic volumes using real-time data from IoT sensors, computer vision-enabled cameras, third-party mobile usage data, and connected vehicles. In addition, the implemented predictive analytics leverage time series models (LSTM, ARIMA) and graph neural networks (GNNs) to anticipate traffic congestion and bottlenecks, enabling proactive decision-making. Special attention is given to challenges such as data transmission delays, system scalability, and ethical implications, with proposed solutions including edge computing and distributed RL models. The simulation results, covering 370 traffic signal control devices in fixed-timing and adaptive-timing signal systems, demonstrate significant advantages of AI application, including an average reduction in waiting times of 33%, resulting in a 16% decrease in greenhouse gas emissions, and improved safety at intersections (measured by an average reduction in the number of traffic accidents). A limitation of this paper is that it does not offer a simulation of the system’s adaptability to temporary traffic surges during mass events or severe weather conditions. 
The key finding is that integrating AI into an urban traffic network that consists of fixed-timing traffic lights represents a sustainable approach to improving urban quality of life in large cities like Belgrade and achieving smart city objectives.

1. Introduction

In an era of rapid urbanization and growing environmental concerns, effective traffic management has emerged as a critical challenge for modern cities. Urban centers worldwide face increasing congestion, prolonged travel times, and elevated greenhouse gas emissions, all of which highlight the need for sustainable mobility solutions. The escalating demand for urban transportation systems has further strained existing infrastructures, often resulting in inefficiencies that impact economic productivity, environmental quality, and public health. Addressing these issues requires innovative approaches that leverage advanced technologies to optimize traffic flow while minimizing environmental and societal impacts [1].

Traditional traffic management systems often rely on fixed timing and static rules, which fail to adapt to fluctuating traffic patterns or unforeseen disruptions, such as accidents or extreme weather events. As cities grow and mobility demands evolve, these outdated systems are no longer sufficient to address the complexity of urban transportation networks. Furthermore, the adverse effects of traffic congestion extend beyond inconvenience, contributing significantly to air pollution and climate change, which underscores the urgent need for intelligent, sustainable interventions [2].

Studies have demonstrated that adaptive traffic signal systems significantly outperform fixed timing systems in reducing travel time, fuel consumption, and emissions. For instance, a study by Wang [3] found that adaptive systems reduced average travel time by 20–30% and emissions by 10–15% in urban networks. Similarly, Zheng [4] reported that adaptive systems improved traffic flow efficiency by 25% during peak hours compared to fixed timing systems. Despite their advantages, adaptive systems face significant challenges. One major limitation is their reliance on high-quality, real-time data, which can be affected by sensor inaccuracies or communication delays [5]. Additionally, adaptive systems often struggle with scalability in large, complex networks, as the computational requirements for real-time optimization increase exponentially with network size [6]. Furthermore, these systems typically prioritize vehicular traffic, often neglecting the needs of pedestrians, cyclists, and public transport, which can exacerbate urban mobility inequalities [7].

Recent advancements in AI-driven traffic management have demonstrated significant improvements over traditional approaches, leveraging machine learning and data-driven models to enhance decision-making. Reinforcement learning (RL)-based methods, such as Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO), have been increasingly applied to traffic signal control, enabling adaptive responses to real-time congestion patterns [8]. Studies have shown that AI-driven traffic control systems can outperform both fixed-time and adaptive rule-based approaches by continuously learning from traffic flow data and optimizing signal timings accordingly [9]. Moreover, predictive analytics using long short-term memory (LSTM) networks, graph neural networks (GNNs), and autoregressive integrated moving average (ARIMA) models have enhanced forecasting capabilities, allowing traffic systems to anticipate congestion before it occurs [10].

The integration of these AI techniques into urban traffic control has resulted in measurable efficiency gains, including reductions in average vehicle delay, lower fuel consumption, and improved multimodal mobility. However, challenges such as scalability, data quality, and computational demands remain key areas for further exploration. This study builds on the existing body of knowledge by proposing a hybrid approach that integrates RL and predictive analytics to optimize traffic flow dynamically, addressing the limitations of previous AI-based methods while considering the unique characteristics of European urban environments.

This paper examines the integration of reinforcement learning (RL), building on work such as [11], and predictive analytics as a transformative framework for dynamic traffic flow optimization in urban environments (Abou-Senna and Radwan). By utilizing RL algorithms, such as Deep Q-Learning and Proximal Policy Optimization, in conjunction with predictive analytics models like LSTM, ARIMA, and graph neural networks (GNNs), this approach aims to enhance traffic signal efficiency, reduce congestion, and enable proactive decision-making. These advanced machine learning techniques support a shift from reactive to predictive traffic management, paving the way for smarter, more responsive urban mobility systems [12,13].

The theoretical review of this topic identified a research gap: there are currently no simulations of, or insights into, how RL and predictive models can be scaled in European urban environments with diverse traffic conditions.

The application of AI in urban traffic management is bolstered by the availability of real-time data from IoT sensors, computer vision-enabled cameras, mobile usage data, and connected vehicles. These technologies form the backbone of intelligent transportation systems, enabling continuous monitoring and analysis of traffic conditions. Real-time data integration allows for dynamic adjustments to traffic signals, improving the overall flow and reducing the likelihood of bottlenecks during peak hours or unexpected disruptions. Similar approaches have been analyzed previously in the literature [14,15,16].

Moreover, the incorporation of edge computing and distributed learning models addresses challenges related to data latency and system scalability while ensuring the fairness and ethical application of traffic management policies. These decentralized approaches allow for localized decision-making at intersections, minimizing delays in data processing and enabling robust performance even in large, interconnected urban networks [17].

Beyond operational efficiency, the integration of RL and predictive analytics contributes to broader sustainability goals by reducing vehicle idle times, minimizing fuel consumption, and lowering greenhouse gas emissions. Such advancements align with global efforts to combat climate change and promote sustainable urban development. Additionally, the ability to anticipate and mitigate traffic congestion improves not only commuter experiences but also public safety, as smoother traffic flow reduces the likelihood of accidents at intersections [18].

In exploring this framework, this study relies on previous research [19,20,21] and highlights the potential for AI-driven traffic management systems to revolutionize urban mobility. This research not only addresses immediate transportation challenges but also lays the groundwork for integrating emerging technologies into future smart city initiatives. By demonstrating the feasibility and benefits of these innovations in a practical urban context, the findings contribute to the ongoing transformation of modern cities into more sustainable and livable environments. This research investigates the potential of combining RL and predictive analytics to optimize traffic flow dynamically and sustainably. The main research questions are as follows:

RQ1:

How can the integration of reinforcement learning and predictive analytics optimize dynamic traffic flow in urban areas?

RQ2:

What impact does this approach have on sustainability, traffic efficiency, and environmental outcomes?

Traditional traffic management systems, including fixed-time and adaptive signal control, suffer from several critical limitations that hinder their effectiveness in modern urban environments. These systems are inherently reactive, relying on pre-defined schedules or real-time sensor inputs that lack predictive capabilities. As a result, they struggle to dynamically adapt to fluctuating traffic conditions, unexpected disruptions, or long-term mobility trends. Furthermore, their reliance on rule-based adjustments often leads to inefficiencies in congestion-prone areas, where rigid signal timings fail to accommodate demand variations. Additionally, these conventional systems are not well-equipped to balance the needs of different road users, such as pedestrians, cyclists, and public transit, often prioritizing vehicular traffic at the expense of sustainable urban mobility. The lack of scalability and adaptability in traditional approaches underscores the necessity of AI-driven solutions, which can harness real-time and historical data to optimize traffic flow dynamically. By integrating reinforcement learning and predictive analytics, AI-based traffic management systems offer a paradigm shift from static control to intelligent, self-learning frameworks capable of proactively mitigating congestion, reducing emissions, and enhancing overall transportation efficiency.

By addressing these questions, the study contributes to the broader discourse on sustainable urban mobility and smart city development [22,23]. The findings presented in this paper are grounded in simulations of the city of Belgrade’s traffic network, where AI models dynamically control traffic signals at high-volume intersections. These simulations demonstrate significant improvements, including reduced vehicle waiting times, lower greenhouse gas emissions, and enhanced safety outcomes at intersections.

The remainder of this paper is structured as follows: Section 2 reviews existing literature on AI-driven traffic optimization and predictive analytics. Section 3 outlines the methodological framework for the research, detailing the integration of RL and predictive analytics. Section 4 presents the results of traffic simulations, including performance metrics and comparative analyses conducted during 2024, based on data from 370 traffic signal control devices in the city of Belgrade. Section 5 discusses the implications of the findings, drawing connections to related research and real-world applications. Finally, Section 6 concludes the paper by summarizing key insights and proposing directions for future research on sustainable traffic management solutions.

2. Materials and Methods

The integration of artificial intelligence (AI) in urban traffic management has garnered significant attention as cities seek, through a range of projects, to address congestion, environmental challenges, and the evolving demands of modern mobility [24]. Two key directions emerge in the exploration of AI-driven solutions: the application of reinforcement learning (RL) for traffic optimization and the use of predictive analytics for congestion and sustainability.

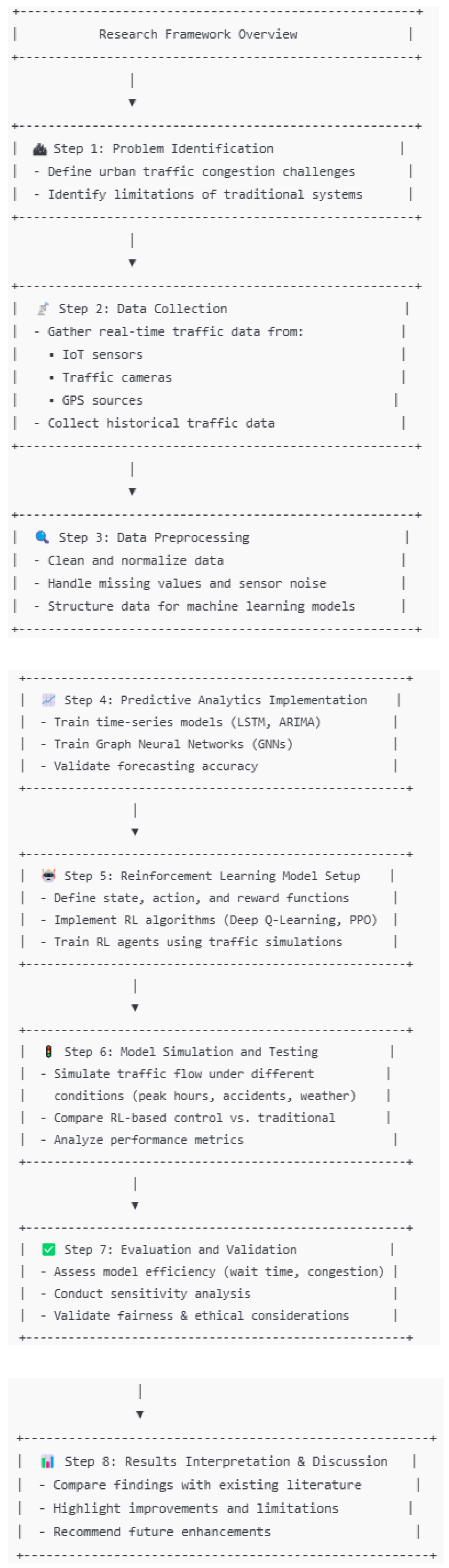

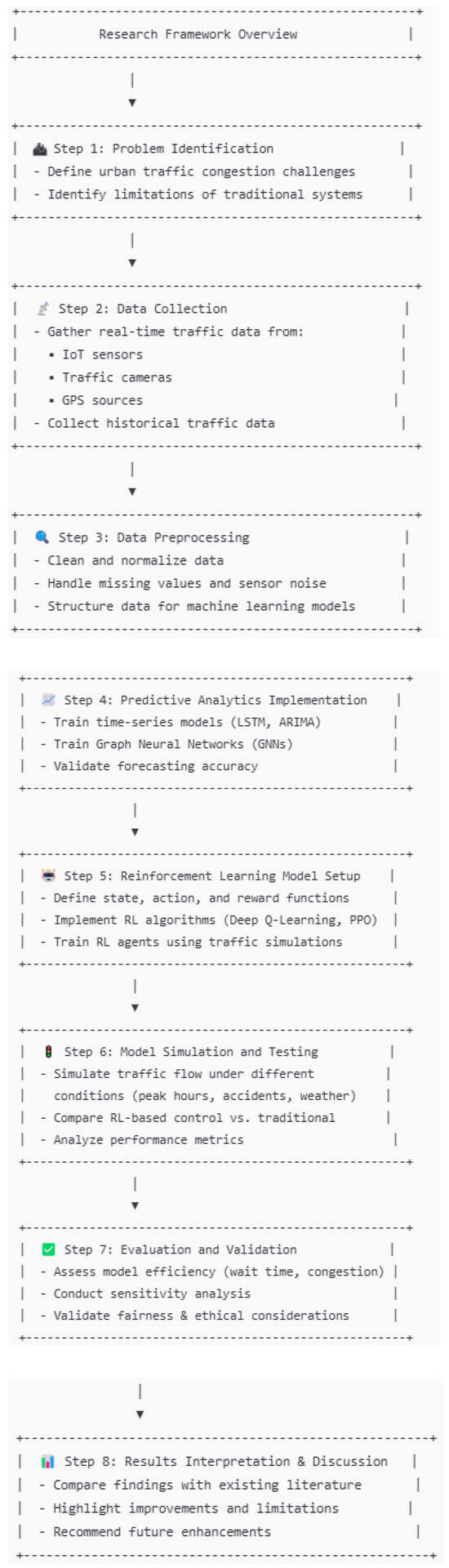

The research steps are presented in Figure 1.

Figure 1. Research framework flowchart.

RL algorithms, such as Deep Q-Learning and Proximal Policy Optimization, are central to dynamic traffic signal control, allowing real-time adjustments based on fluctuating traffic conditions. These methods enable cities to optimize traffic flow, improve efficiency, and enhance safety, especially in high-traffic areas. The flexibility of RL models can support scalability and adaptability, including during events or emergencies. On the other hand, predictive analytics, particularly through time series models (LSTM, ARIMA) and graph neural networks (GNNs), offers the potential to foresee traffic congestion and proactively manage the flow of vehicles [25].

By leveraging historical data and real-time inputs, predictive models can reduce congestion, optimize routes, and significantly lower environmental impacts, including emissions and fuel consumption. Together, these AI approaches form a robust framework for creating smarter, more sustainable urban mobility systems [26].
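The Proximal Policy Optimization component mentioned above can be made more concrete through the clipped surrogate objective that PPO maximizes. The following is a minimal Python sketch of that standard formula, not the training code used in this study; the function name, the batch layout, and the clip range ε = 0.2 are assumptions.

```python
import math
from typing import Sequence


def ppo_clipped_objective(
    new_log_probs: Sequence[float],
    old_log_probs: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,  # assumed clip range, a common default
) -> float:
    """Mean clipped surrogate L^CLIP over a batch of sampled (state, action) pairs.

    Each sample might be, hypothetically, one phase decision of an intersection
    agent together with its estimated advantage A_t.
    """
    if not (len(new_log_probs) == len(old_log_probs) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # r_t = pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # The minimum makes the objective a pessimistic bound, so pushing r_t
        # outside [1 - eps, 1 + eps] brings no extra gain.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

In a training loop this value would be maximized by gradient ascent on the policy parameters, in practice with an automatic differentiation framework; here it only evaluates the objective for fixed inputs.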

2.1. Reinforcement Learning (RL) in Traffic Optimization and Smart Cities

Reinforcement learning (RL) is a powerful tool for complex decision-making, with promising applications in traffic optimization and Smart Cities. Traditional traffic control often fails to manage dynamic flows effectively, while RL-powered adaptive systems can enhance traffic flow, reduce congestion, and improve safety. This review explores RL’s role in traffic optimization and Smart Cities, highlighting real-world applications and recent advancements [27].

Smart Cities leverage advanced technologies like IoT, big data, and AI to transform traffic management [28]. Traditional systems with static signals and fixed routing struggle with modern traffic dynamics. In contrast, RL adapts in real time, learning from its environment to optimize performance. Using an agent-environment framework, RL-based agents, such as traffic controllers or autonomous vehicles, make decisions based on feedback through rewards or penalties, improving efficiency over time [29].

RL-driven adaptive traffic signal control optimizes traffic flow by adjusting signal timings in real time. Unlike traditional fixed-schedule lights, which cause congestion and delays, RL-based controllers learn from traffic conditions to minimize wait times, enhance flow, and reduce fuel consumption [30].
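The agent-environment loop described above can be illustrated with a toy example. The sketch below assumes a hypothetical two-phase intersection with random arrivals and uses tabular Q-learning, a simpler relative of Deep Q-Learning in which a lookup table replaces the neural network. The queue cap, discharge rate, arrival probabilities, and reward (negative total queue) are illustrative assumptions, not parameters of the Belgrade simulation.

```python
import random
from collections import defaultdict

# Toy single-intersection model; every constant below is an illustrative assumption.
MAX_QUEUE = 10          # queue cap per approach (vehicles)
DISCHARGE = 2           # vehicles that clear the stop line per step on green
ARRIVAL_P = (0.4, 0.3)  # per-step arrival probability for (north-south, east-west)


def step(state, action, rng):
    """Advance one time step. action 0 = NS green, 1 = EW green.

    Returns (next_state, reward), where reward = -(total queued vehicles),
    i.e. the agent is penalized for every vehicle still waiting.
    """
    queues = list(state)
    queues[action] = max(0, queues[action] - DISCHARGE)  # serve the green approach
    for i, p in enumerate(ARRIVAL_P):                    # random arrivals on both approaches
        if rng.random() < p:
            queues[i] = min(MAX_QUEUE, queues[i] + 1)
    next_state = tuple(queues)
    return next_state, -sum(next_state)


def greedy(q, state):
    """Action with the highest estimated value in `state` (ties go to action 0)."""
    return max((0, 1), key=lambda a: q[state][a])


def train(episodes=300, steps=100, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0, 0.0])  # state -> [Q(s, NS green), Q(s, EW green)]
    for _ in range(episodes):
        state = (0, 0)
        for _ in range(steps):
            action = rng.randrange(2) if rng.random() < epsilon else greedy(q, state)
            next_state, reward = step(state, action, rng)
            # Move Q(s, a) toward the bootstrapped target r + gamma * max_a' Q(s', a').
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def mean_queue(policy, steps=1000, seed=1):
    """Average total queue length when following `policy(state) -> action`."""
    rng = random.Random(seed)
    state, total = (0, 0), 0
    for _ in range(steps):
        state, reward = step(state, policy(state), rng)
        total -= reward
    return total / steps


if __name__ == "__main__":
    q_table = train()
    print("learned policy:", mean_queue(lambda s: greedy(q_table, s)))
    print("always NS green:", mean_queue(lambda s: 0))
```

Under these assumptions, the learned policy should keep queues far shorter than a controller that never gives green to the east-west approach; a fixed-time cycle would be the more realistic baseline in an actual evaluation.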

Several studies have demonstrated the effectiveness of RL in traffic signal control and routing:

- RL-Based Traffic Signal Control:

- Prajval [31] used deep reinforcement learning to optimize intersection signals, improving vehicle throughput and reducing delays;

- Cai [32] applied Q-learning for real-time signal adaptation, enhancing traffic flow and cutting wait times;

- The study in [33] highlights RL’s potential to improve urban traffic management.

- RL for Traffic Routing and Path Planning:

- Traditional algorithms rely on static data, limiting effectiveness in dynamic environments [34];

- RL continuously learns from real-time traffic, optimizing routes to minimize congestion and travel time [35].

Deep reinforcement learning (DRL) has shown great promise in traffic routing. Chen and Zhou [36] used DRL to optimize vehicle routes in congested cities, significantly reducing travel time compared to traditional algorithms. Their system adapted to real-time conditions, considering congestion, accidents, and road closures to recommend optimal routes. Similarly, in [37], RL was applied to predict and suggest the best routes using real-time traffic data, minimizing delays and congestion. These advancements in RL-based routing can revolutionize urban mobility by improving efficiency and reducing traffic-related environmental impact.
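As a hedged illustration of RL-based routing, the sketch below applies tabular Q-learning to a small hypothetical road graph, where each reward is the negative observed travel time of a link and the learned values approximate the remaining travel time to the destination. It is not the DRL method of Chen and Zhou [36] or of [37]; the graph, travel times, and hyperparameters are assumptions.

```python
import random

# Hypothetical road graph: node -> {neighbor: travel time in minutes}.
ROAD_GRAPH = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 5, "E": 12},
    "C": {"B": 1, "D": 8},
    "D": {"E": 3},
    "E": {},  # destination
}
DESTINATION = "E"


def learn_routes(graph, destination, episodes=2000, alpha=0.5, epsilon=0.2, seed=0):
    """Tabular Q-learning where q[node][nxt] estimates -(remaining travel time)."""
    rng = random.Random(seed)
    q = {node: {nxt: 0.0 for nxt in edges} for node, edges in graph.items()}
    starts = [n for n in graph if n != destination]
    for _ in range(episodes):
        node = rng.choice(starts)
        while node != destination:
            actions = list(graph[node])
            if rng.random() < epsilon:
                nxt = rng.choice(actions)                         # explore
            else:
                nxt = max(actions, key=lambda a: q[node][a])      # exploit
            reward = -graph[node][nxt]  # observed (e.g. real-time) link travel time
            future = 0.0 if nxt == destination else max(q[nxt].values())
            q[node][nxt] += alpha * (reward + future - q[node][nxt])
            node = nxt
    return q


def greedy_route(q, origin, destination):
    """Follow the highest-valued link from each node until the destination."""
    route = [origin]
    while route[-1] != destination:
        node = route[-1]
        route.append(max(q[node], key=q[node].get))
    return route
```

With the weights shown, the greedy route from A is A, C, B, D, E (11 minutes). If the C to B link became congested (for example, 3 minutes instead of 1), retraining on the updated weights should move the greedy route to A, B, D, E, which is how changing travel times feed back into route choice.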

Another key application of RL in traffic optimization is congestion management. Urban congestion causes delays, higher fuel consumption, and increased pollution. RL mitigates these issues by dynamically adjusting traffic signals and routing decisions based on real-time conditions. Lee [38] developed an RL-based congestion control system where traffic signal controllers and routing systems cooperate to reduce congestion during peak hours. Their model adjusted signal timings and rerouted vehicles, improving traffic flow and reducing delays. Similarly, Ghosh [39] proposed a multi-agent RL framework where traffic signals and routing systems worked together to optimize traffic flow. These studies highlight RL’s potential to tackle urban congestion effectively.
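One common way to make signal agents cooperate, rather than optimize each intersection in isolation, is to include neighboring congestion in each agent's reward. The sketch below assumes a hypothetical reward of the form r_i = -(q_i + β · Σ q_j over the neighbors j of intersection i), with an assumed weight β; it is not the formulation of Lee [38] or Ghosh [39].

```python
from typing import Dict, List


def neighborhood_rewards(
    queues: Dict[str, float],
    neighbors: Dict[str, List[str]],
    beta: float = 0.5,  # assumed weight on neighboring congestion
) -> Dict[str, float]:
    """Reward for each intersection agent: -(own queue + beta * sum of neighbors' queues).

    With beta = 0 every agent is purely selfish; a larger beta makes each agent
    share responsibility for congestion at adjacent intersections.
    """
    return {
        agent: -(queues[agent] + beta * sum(queues[n] for n in neighbors.get(agent, [])))
        for agent in queues
    }
```

For a corridor of three junctions J1, J2, J3 with queues 4, 2, and 6, the middle junction receives the largest penalty relative to its own queue, because it is charged for both neighbors.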

RL is increasingly used to manage autonomous vehicles (AVs) in Smart Cities. As AVs become more common, intelligent systems are needed to coordinate their interactions with human-driven vehicles. RL enables AVs to learn safe and efficient driving behaviors through trial and error. Kumar [40] developed an RL-based system that allows AVs to adapt their driving strategies based on road conditions and surrounding traffic, improving safety in merging, lane-changing, and speed control. RL also helps AVs navigate complex scenarios like intersections and roundabouts by learning cooperative behaviors. Integrating RL with AVs enhances traffic safety and efficiency, making them a crucial part of future Smart Cities [41].

RL has great potential to optimize traffic management in Smart Cities by improving traffic signals, real-time routing, congestion management, and autonomous vehicle decision-making. It can enhance urban mobility, making it more efficient, safer, and sustainable. However, challenges remain, such as large-scale data collection, real-time computation, and integration with existing infrastructure [42]. Despite these hurdles, ongoing research highlights RL’s ability to address urban traffic challenges. As RL continues to advance, its adoption in Smart Cities is expected to grow globally [43].

Although reinforcement learning (RL) has been widely explored for traffic optimization and Smart Cities, several key areas remain underexplored. Specifically, the scalability and adaptability of RL models across diverse urban environments with varying infrastructure and data availability warrant further investigation. Additionally, the integration of RL with human factors—such as interactions between RL-driven systems and human drivers or pedestrians—has received limited attention. Addressing these gaps could offer a more comprehensive understanding of RL’s potential in enhancing traffic management and Smart City solutions [44].

2.2. Predictive Analytics for Traffic Congestion and Environmental Sustainability

Traffic congestion is a major urban challenge affecting millions worldwide, particularly in rapidly growing metropolitan areas. Its negative impacts—such as longer travel times, higher fuel consumption, increased air pollution, and reduced quality of life—are well-documented. As cities expand, traditional traffic management systems struggle to keep up with the dynamic nature of modern traffic flows. The growing emphasis on environmental sustainability adds urgency to addressing these challenges. With escalating concerns over climate change and environmental degradation, there is a pressing need to develop intelligent systems that can reduce the environmental impact of traffic congestion [45].

Predictive analytics, which uses historical data, statistical algorithms, and machine learning to forecast future outcomes, offers a promising solution for addressing both traffic congestion and its environmental impact. By predicting traffic patterns and congestion hotspots in real time, predictive models allow cities to optimize traffic flow, reduce congestion, and enhance air quality. This literature review explores the role of predictive analytics in combating traffic congestion and promoting environmental sustainability, examining methodologies, challenges, applications, and recent advancements in the field [46,47].
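To illustrate the statistical side of forecasting, the sketch below fits the simplest autoregressive model, an AR(1) process (equivalently ARIMA(1,0,0)), to a series such as hourly vehicle counts at one detector, and iterates it forward. A full ARIMA model adds differencing and moving-average terms and is usually fitted with a library such as statsmodels; the function names here are hypothetical.

```python
from typing import List, Tuple


def fit_ar1(series: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y_t = c + phi * y_{t-1}."""
    x, y = series[:-1], series[1:]
    n = len(x)
    if n < 2:
        raise ValueError("need at least three observations")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    phi = sxy / sxx            # slope: persistence of the previous value
    c = mean_y - phi * mean_x  # intercept
    return c, phi


def forecast_ar1(last_value: float, c: float, phi: float, steps: int) -> List[float]:
    """Iterate the fitted recursion to forecast the next `steps` values."""
    out, value = [], last_value
    for _ in range(steps):
        value = c + phi * value
        out.append(value)
    return out
```

A value of phi close to 1 indicates that congestion persists from one interval to the next, which is the property a forecaster exploits to anticipate bottlenecks.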

Predictive analytics in traffic congestion management focuses on forecasting traffic patterns, congestion levels, and potential bottlenecks in urban road networks. These forecasts rely on data from various sources, including traffic sensors, GPS data [48], and social media [49], which provide real-time and historical insights into traffic conditions. By analyzing these data, predictive models can anticipate congestion before it occurs, enabling timely interventions to prevent gridlock and optimize vehicle flow [50].
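Graph neural networks exploit the fact that congestion at one intersection depends on its neighbors in the road network. A minimal sketch of one layer of the widely used graph convolutional network (GCN) propagation rule is shown below, assuming intersections as nodes, road links as edges, and per-node traffic features such as recent vehicle counts; it omits training and temporal modeling.

```python
import numpy as np


def gcn_layer(adjacency: np.ndarray, features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: (n, n) road-link matrix between n intersections
    features:  (n, f) per-intersection inputs, e.g. recent vehicle counts
    weights:   (f, k) learnable projection (fixed here for illustration)
    """
    a_hat = adjacency + np.eye(adjacency.shape[0])       # add self-loops
    deg = a_hat.sum(axis=1)                              # node degrees incl. self-loop
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    a_norm = d_inv_sqrt @ a_hat @ d_inv_sqrt             # symmetric normalization
    return np.maximum(a_norm @ features @ weights, 0.0)  # aggregate neighbors, then ReLU
```

Stacking such layers lets information from intersections several links away influence each node's representation, which a temporal model can then turn into a congestion forecast.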

One of the key challenges in predicting traffic congestion is the complexity and variability of traffic patterns. Urban traffic systems are influenced by a wide range of factors, including weather conditions, extraordinary events, road construction, accidents, and the behavior of individual drivers. Predictive models must account for these factors to provide accurate forecasts. Many studies have focused on developing machine learning algorithms, such as regression analysis, decision trees, and neural networks, to improve traffic prediction accuracy [51].

For instance, Abbatte [52] combined historical traffic data with real-time sensor data to predict traffic congestion on urban roads. Their study showed that machine learning algorithms, such as support vector machines (SVM) and random forests, significantly enhanced the accuracy of traffic flow predictions. Similarly, Singh [53] employed deep learning techniques, particularly recurrent neural networks (RNNs), to model real-time traffic congestion. Their model successfully predicted congestion hotspots and traffic volume fluctuations, offering valuable insights for urban traffic planning and control.

Another approach in predictive analytics for traffic congestion management is the use of hybrid models that combine multiple machine learning techniques. For instance, Dong [54] proposed a hybrid deep learning model that integrated convolutional neural networks (CNNs) and long short-term memory (LSTM) networks to predict traffic congestion in urban networks. The hybrid model outperformed individual models in terms of prediction accuracy, enabling better traffic management and route planning.
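For reference, the LSTM component of such hybrids updates its memory with the standard gate equations below, where $x_t$ is the input at time $t$ (in a CNN-LSTM hybrid, plausibly the spatial features extracted by the CNN), $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication. This is the generic formulation, not necessarily the exact variant used by Dong [54].

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{input gate} \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{output gate} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{candidate memory} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state} \\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{hidden state}
\end{aligned}
```

The additive update of $c_t$ is what lets the network retain information over long horizons, such as daily and weekly traffic cycles.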

The environmental sustainability of urban transportation systems is closely tied to the effects of traffic congestion on air quality, greenhouse gas emissions, and fuel consumption. High congestion levels cause stop-and-go driving, increasing fuel consumption and emissions. Additionally, congestion worsens the urban heat island effect and contributes to noise pollution. Predictive analytics plays a vital role in addressing these issues by forecasting not only traffic patterns but also the environmental impact of congestion [55].
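The link between waiting time and emissions can be seen in a back-of-the-envelope calculation: CO2 from idling is proportional to the total vehicle-hours spent stopped. The sketch below assumes a constant idle fuel rate and a fuel-to-CO2 factor, both supplied by the caller; the example values are placeholders, not measurements from Belgrade.

```python
def idle_co2_kg(vehicles: int, mean_wait_s: float, idle_fuel_l_per_h: float, co2_kg_per_l: float) -> float:
    """CO2 (kg) emitted while `vehicles` each idle for `mean_wait_s` seconds at a signal."""
    idle_vehicle_hours = vehicles * mean_wait_s / 3600.0
    return idle_vehicle_hours * idle_fuel_l_per_h * co2_kg_per_l


if __name__ == "__main__":
    # Placeholder inputs only: 1000 vehicles, 60 s mean wait, assumed fuel and CO2 factors.
    before = idle_co2_kg(1000, 60.0, 0.8, 2.3)
    after = idle_co2_kg(1000, 60.0 * (1 - 0.33), 0.8, 2.3)
    print(f"idle CO2 before: {before:.1f} kg, after: {after:.1f} kg")
```

Because the idling term is linear, a 33% cut in average waiting time cuts it by 33%; total emissions fall by less, since fuel burned while vehicles are moving is not affected by waiting time.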

Predictive models can estimate air quality levels and predict the environmental consequences of various traffic scenarios. For instance, researchers have used traffic flow predictions to estimate emissions under different traffic conditions. A study by Chen [56] developed a model to forecast the environmental impact of traffic congestion in a metropolitan area. By integrating traffic flow data with air pollution models, the model predicted real-time concentrations of pollutants like nitrogen dioxide (NO2) and particulate matter (PM2.5). The results showed that traffic congestion significantly contributed to elevated pollution levels, underscoring the need for congestion management strategies that prioritize environmental sustainability.

Predictive analytics can also estimate fuel consumption under varying traffic conditions. A study by Wu [57] developed a model that predicted fuel consumption and emissions based on real-time traffic data and vehicle types. By forecasting congestion and optimizing traffic flow, the model helped reduce fuel consumption and emissions, contributing to environmental sustainability goals.

A promising application of predictive analytics in promoting environmental sustainability is the integration of traffic prediction models with smart city infrastructure. Smart traffic management systems can use predictive analytics to reduce congestion, optimize signal timings, and guide vehicles along optimal routes, minimizing fuel consumption and emissions. For example, Lee [58] used predictive analytics to optimize traffic signal timings in real time. By forecasting traffic flow and adjusting signal timings accordingly, the system reduced congestion and emissions by improving vehicle movement through intersections.
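The text does not detail how forecasts are turned into signal timings. As one classical, clearly swapped-in illustration, Webster's formula computes an approximately delay-minimizing cycle length $C_0 = (1.5L + 5)/(1 - Y)$ from the total lost time $L$ and the sum $Y$ of critical flow ratios, and splits effective green in proportion to each phase's ratio. Feeding it forecast flows instead of measured ones yields a simple predictive timing plan; the function name and inputs are assumptions.

```python
from typing import List, Tuple


def webster_timing(
    forecast_flows: List[float],    # forecast critical-lane flow per phase (veh/h)
    saturation_flows: List[float],  # saturation flow per phase (veh/h of green)
    lost_time_s: float,             # total lost time per cycle L (s)
) -> Tuple[float, List[float]]:
    """Webster's optimal cycle length and effective green per phase (seconds)."""
    if len(forecast_flows) != len(saturation_flows):
        raise ValueError("one saturation flow is needed per phase")
    ratios = [q / s for q, s in zip(forecast_flows, saturation_flows)]  # y_i
    y_total = sum(ratios)                                               # Y
    if y_total >= 1.0:
        raise ValueError("intersection is oversaturated (Y >= 1)")
    cycle = (1.5 * lost_time_s + 5.0) / (1.0 - y_total)
    greens = [(cycle - lost_time_s) * y / y_total for y in ratios]
    return cycle, greens
```

For two phases each carrying 540 veh/h against a saturation flow of 1800 veh/h and 10 s of lost time, the formula gives a 50 s cycle with 20 s of effective green per phase; a rise in the forecast flow on one approach lengthens the cycle and shifts green toward that approach.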

One key challenge in applying predictive analytics to both traffic congestion management and environmental sustainability is integrating traffic flow models with environmental models. To achieve comprehensive traffic management solutions, it is crucial to develop models that simultaneously address both congestion and environmental impacts [59].

Recent studies have focused on integrating traffic flow prediction models with air quality and fuel consumption models to create systems that optimize traffic management while minimizing environmental impact. For instance, Chien [60] developed a multi-objective optimization framework that integrated traffic flow prediction with emission reduction goals. The model used real-time traffic data to predict congestion and estimated potential reductions in air pollution and fuel consumption by optimizing signal timings and routing. The study found that the integrated approach significantly reduced both congestion and environmental impact compared to traditional traffic management systems.
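A simple way to trade off the two objectives is weighted-sum scalarization: each candidate timing plan receives a cost combining normalized predicted delay and predicted emissions, and the cheapest plan is selected. The sketch below is a generic illustration, not Chien's [60] framework; the plan fields, the normalization by the largest value, and the weights are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TimingPlan:
    name: str
    predicted_delay_s: float  # forecast average delay per vehicle (seconds)
    predicted_co2_kg: float   # forecast CO2 over the planning horizon (kg)


def select_plan(plans: List[TimingPlan], w_delay: float = 0.5, w_co2: float = 0.5) -> TimingPlan:
    """Pick the plan with the lowest weighted sum of normalized delay and emissions.

    Each objective is divided by its largest value among the candidates so that
    seconds and kilograms become comparable, dimensionless quantities.
    """
    if not plans:
        raise ValueError("no candidate plans")
    max_delay = max(p.predicted_delay_s for p in plans)
    max_co2 = max(p.predicted_co2_kg for p in plans)

    def cost(p: TimingPlan) -> float:
        return w_delay * p.predicted_delay_s / max_delay + w_co2 * p.predicted_co2_kg / max_co2

    return min(plans, key=cost)
```

Changing the weights moves the choice along the delay-emissions trade-off: a delay-only weighting favors the fastest plan, while balanced weights can select a plan that is slightly slower but markedly cleaner.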

Lin [61] proposed an integrated model that combined traffic flow predictions with environmental pollution forecasts. By considering both congestion and pollutant levels, the model recommended optimal traffic control strategies that minimized congestion and reduced air pollution. The results showed that integrating traffic and environmental models improved outcomes in both areas [62].

Despite these advancements, challenges remain. One key issue is data availability and quality. Traffic prediction models depend on real-time data from sources like sensors, GPS devices, and mobile apps, but inconsistencies and coverage gaps, especially in less developed areas, can hinder accuracy [63]. Another challenge is the complexity of urban traffic systems, which are influenced by factors like driver behavior, road conditions, and weather. Modeling this complexity requires advanced machine learning techniques and large datasets, which can be resource-intensive [64].

Nonetheless, the future of predictive analytics for traffic and environmental sustainability is promising. Emerging technologies like IoT, big data, and cloud computing will enable more accurate and scalable models. Additionally, autonomous vehicles and connected infrastructure offer new opportunities to optimize traffic flow and minimize environmental impact. As these technologies evolve, smart city infrastructure will become more interconnected, enabling real-time, data-driven decisions to address both traffic and environmental challenges [65].

Predictive analytics has become a vital tool in tackling traffic congestion and environmental sustainability. By utilizing machine learning algorithms and real-time data, predictive models help cities forecast traffic patterns, optimize flow, and reduce congestion’s environmental impact. Integrating traffic and environmental models provides a holistic approach to managing urban transportation while promoting sustainability. Despite challenges like data availability and system complexity, the future of predictive analytics in smart cities offers significant potential for enhancing urban mobility and environmental quality [66,67].

While existing literature covers the methodologies, applications, and advancements in predictive analytics for traffic and sustainability, some areas remain underexplored. For example, the integration of predictive analytics with behavioral insights, such as how drivers adapt to real-time interventions, has received limited attention. Additionally, the scalability of these models in regions with poor infrastructure or inconsistent data quality remains a challenge.

2.3. Ethical Considerations in AI-Driven Traffic Management

As AI-driven traffic management systems become increasingly integrated into urban mobility solutions, ethical concerns related to data privacy, algorithm fairness, and societal impact require careful consideration. Existing literature highlights the need for responsible AI implementation to ensure that technological advancements align with public interest and urban sustainability goals [68,69].

2.3.1. Data Privacy and Security

AI-based traffic control systems rely on extensive real-time data from IoT sensors, traffic cameras, mobile usage data and connected vehicles. While these data enable dynamic signal optimization, they also raise privacy concerns regarding location tracking and potential misuse of sensitive information [70]. Research suggests that privacy risks can be mitigated through encryption, differential privacy techniques, and edge computing, which processes data locally to reduce exposure to centralized systems [71]. Compliance with data protection regulations such as GDPR is also crucial in ensuring responsible data governance.
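Differential privacy can be combined with edge computing by adding calibrated noise to aggregates before they leave the intersection. The sketch below implements the Laplace mechanism for a count (for example, devices or vehicles observed in an interval) under the assumption that one individual changes the count by at most one; the function names and the choice of ε are illustrative.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample, drawn as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    The noise scale is sensitivity / epsilon: a smaller epsilon means stronger
    privacy and noisier published counts.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(7)
    # Computed on the edge device; only the noisy value would be sent upstream.
    print(private_count(120, epsilon=1.0, rng=rng))
```

Individual releases are noisy, but averages over many intervals or intersections remain close to the true values, which is usually sufficient for signal optimization.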

2.3.2. Algorithm Fairness and Bias in Traffic Optimization

Bias in AI-driven traffic control algorithms is a critical concern, as models trained on historical data may reinforce existing mobility inequalities [72]. Studies emphasize the importance of fairness-aware machine learning techniques to prevent the disproportionate prioritization of private vehicles over pedestrians, cyclists, or public transport users [73]. Approaches such as bias audits, diverse dataset inclusion, and stakeholder consultation are recommended to enhance algorithmic equity [74].
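One concrete way to keep an optimizer from favoring private cars is to measure delay per person rather than per vehicle, so that a full bus weighs more than a single car. The sketch below assumes hypothetical average occupancies per mode; real values would come from local surveys, and the function could serve either as a negative reward or as an audit metric.

```python
from typing import Dict

# Hypothetical average occupancies (persons per vehicle or per traveler).
DEFAULT_OCCUPANCY: Dict[str, float] = {"car": 1.3, "bus": 40.0, "bicycle": 1.0, "pedestrian": 1.0}


def person_delay_s(
    counts: Dict[str, int],
    mean_wait_s: Dict[str, float],
    occupancy: Dict[str, float] = DEFAULT_OCCUPANCY,
) -> float:
    """Total person-seconds of delay: sum over modes of count * occupancy * mean wait."""
    return sum(counts[m] * occupancy[m] * mean_wait_s[m] for m in counts)


def person_delay_reward(counts, mean_wait_s, occupancy=DEFAULT_OCCUPANCY) -> float:
    """Negative person delay, usable as a reward signal for a signal-control agent."""
    return -person_delay_s(counts, mean_wait_s, occupancy)
```

Under these assumed occupancies, one bus waiting 30 s contributes about three times as much delay as ten cars waiting the same time, so a controller trained on this signal has an incentive to serve public transport.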

While AI-based traffic control systems are designed to reduce congestion and emissions, their broader societal impact must also be considered. Research highlights potential unintended consequences, such as increased vehicle speeds at intersections affecting pedestrian safety [75]. To mitigate these risks, hybrid systems that combine AI automation with human oversight—such as human-in-the-loop (HITL) mechanisms—have been proposed [76]. Additionally, integrating AI with multimodal transport policies can ensure that optimization efforts support sustainable and inclusive urban mobility rather than prioritizing vehicle throughput alone [77,78].
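A human-in-the-loop safeguard can be as simple as a supervisory layer between the AI controller and the signal hardware: hard constraints such as a minimum pedestrian walk interval and a maximum vehicle green are enforced on every proposal, and an operator's decision overrides the AI. The sketch below is hypothetical; the field names and limits are assumptions, not values from any standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PhaseDecision:
    vehicle_green_s: float    # vehicle green duration for the phase (seconds)
    pedestrian_walk_s: float  # pedestrian walk interval granted (seconds)


def supervise(
    proposed: PhaseDecision,
    min_walk_s: float,
    max_green_s: float,
    operator_override: Optional[PhaseDecision] = None,
) -> PhaseDecision:
    """Return the decision actually sent to the controller.

    An operator override, if present, always takes precedence (human in the loop);
    otherwise the AI proposal is clipped to the hard safety limits.
    """
    if operator_override is not None:
        return operator_override
    return PhaseDecision(
        vehicle_green_s=min(proposed.vehicle_green_s, max_green_s),
        pedestrian_walk_s=max(proposed.pedestrian_walk_s, min_walk_s),
    )
```

Keeping the constraints outside the learned policy means they hold even if the policy behaves unexpectedly, and every override can be logged for later review of the AI's decisions.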

By addressing these ethical dimensions, AI-driven traffic management systems can be implemented in a way that enhances urban efficiency while maintaining fairness, privacy, and social responsibility. Future research should continue exploring frameworks for ethical AI governance in smart city applications.

2.4. Research Hypothesis Formulation

The main research hypotheses are the following:

H1:

The integration of reinforcement learning algorithms with real-time data analytics will significantly improve traffic flow efficiency, reduce vehicle wait times, and minimize congestion in urban environments compared to traditional traffic signal systems.

H2:

Predictive analytics, including time series forecasting and anomaly detection, will enhance the accuracy of traffic congestion predictions, allowing for proactive interventions that lead to a reduction in overall traffic delays and emissions in urban areas.

The next section presents the methodological framework of this research.

3. Methodological Framework

The framework is tailored to the specific challenges of managing traffic networks in cities like Belgrade, Serbia, offering scalable, data-driven solutions for dynamic traffic control. Belgrade, as a growing European city, faces significant transportation challenges, including rising vehicle ownership, limited infrastructure capacity, and a need to prioritize pedestrian and public transit systems. The city’s complex traffic dynamics provide an ideal testbed for evaluating the proposed AI-driven optimization strategies.

3.1. Description of Research and Sample Definition

When applying reinforcement learning (RL) to optimize traffic signal control in the city center of Belgrade, defining an appropriate sample and determining its size is a critical step to ensure the model’s effectiveness and scalability. At the time of this research, the city of Belgrade (excluding suburban municipalities) had 687 functional traffic signal control devices, and since October 2022, 370 intersections have been equipped with adaptive “smart” traffic lights, which adjust signaling in real time to improve traffic flow. These traffic lights use detectors to collect data on the current traffic situation and optimize the duration of green lights, prioritizing certain flows and enabling a “green wave.” This technology does not currently apply artificial intelligence to the collected data, so this research can exploit real data collected from October 2022 until October 2024 to define an appropriate testing ground for the simulation.

A sample in this context refers to a representative dataset that captures the traffic patterns, behaviors, and environmental factors of Belgrade’s crossroads. This dataset should include historical traffic flow data, vehicle counts, pedestrian movement, traffic signal timings, and peak/off-peak variations. Additionally, real-time data sources such as traffic cameras, GPS traces, and loop detectors should be considered to enhance the relevance of the sample. Capturing diverse traffic scenarios, including congested periods, roadwork disruptions, and adverse weather conditions, ensures the model’s robustness and generalizability.

The sample size depends on the complexity of the RL model, the number of crossroads, and the desired level of accuracy. For a city like Belgrade, with multiple interconnected intersections and varying traffic dynamics, a large and comprehensive dataset is essential. For model training, six months of traffic data from the two-year time horizon (October 2022 until October 2024) will be used, and three months of data will be used for testing. This design is intended both to ensure data reliability and to account for seasonal and daily fluctuations.

The dataset’s composition is as follows:

- Historical and Real-Time Data: Utilize data from IoT sensors and mobile usage data collected at the 370 traffic signal control devices, encompassing vehicle counts, pedestrian movements, traffic signal timings, and environmental factors.

- Diverse Traffic Scenarios: Ensure the dataset captures various conditions such as high congestion, roadwork disruptions, and adverse weather to enhance the model’s robustness.

- Exclusion of Vehicles in Transit: Focus on data from vehicles within the city center, excluding those using motorways throughout the Belgrade municipal area.

To validate the RL model, the dataset will be split into training, validation, and testing subsets. The training data will enable the model to learn optimal traffic control strategies, while the validation and testing datasets will serve to evaluate the model’s performance and prevent overfitting. Finally, the sample size must balance statistical representativeness, which allows the RL approach to optimize traffic flow and minimize congestion across Belgrade’s crossroads, against the computing power needed to process the data and apply the model in real time.
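Because traffic data are time-ordered, a chronological split (rather than a random shuffle) keeps future observations out of the training set. The following is a minimal sketch, assuming records arrive as hypothetical `(date, value)` pairs; the cut-off dates are illustrative placeholders, not the dates used in this study.

```python
from datetime import date

def chronological_split(records, val_start, test_start):
    """Split time-stamped records into train/validation/test subsets by date.

    records: iterable of (date, value) pairs (hypothetical format).
    Records before val_start go to training, records in [val_start, test_start)
    go to validation, and records from test_start onwards go to testing.
    """
    train, val, test = [], [], []
    for day, value in sorted(records, key=lambda r: r[0]):
        if day < val_start:
            train.append((day, value))
        elif day < test_start:
            val.append((day, value))
        else:
            test.append((day, value))
    return train, val, test

# Illustrative usage with placeholder cut-off dates
records = [(date(2023, 1, 1), 1), (date(2023, 7, 1), 2), (date(2024, 1, 1), 3)]
train, val, test = chronological_split(records, date(2023, 6, 1), date(2023, 12, 1))
```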

Additionally, the methodological approach for AI-driven traffic management must integrate ethical safeguards related to data privacy, governance, and algorithm fairness to ensure responsible deployment. Given that reinforcement learning (RL) and predictive analytics rely on vast amounts of real-time traffic data, the collection, processing, and decision-making mechanisms must adhere to established ethical and regulatory standards.

3.2. Reinforcement Learning Framework

To develop a robust simulation that applies reinforcement learning (RL) and predictive analytics for traffic signal optimization in a rapidly urbanizing city like Belgrade, the methodological framework must leverage both the predictive capabilities of machine learning and the dynamic adaptability of RL algorithms. The following simulation is structured to integrate these two methodologies to address urban traffic congestion, environmental concerns, and sustainability. Below is the detailed step-by-step process for the simulation:

3.2.1. Define the Environment and State Space

The environment represents the traffic network in Belgrade, consisting of 280 intersections with adaptive “smart” traffic signals. The state at each intersection includes the following parameters:

- Vehicle Count: Number of vehicles waiting at each signal.

- Signal Phase: Current state of the traffic lights (red, yellow, or green).

- Traffic Flow: Speed and density of traffic in each direction.

- Pedestrian Flow: Number of pedestrians waiting to cross or crossing the road.

- Congestion Level: A measure of congestion at each intersection based on vehicle density and waiting times.

State Representation:

For each intersection $i$, the state $s_i(t)$ at time $t$ can be represented as follows:

$$s_i(t) = \{\text{vehicle count}_i(t),\ \text{signal phase}_i(t),\ \text{pedestrian count}_i(t),\ \text{congestion level}_i(t)\},$$

where

- $s_i(t)$: The state of intersection $i$ at time $t$.

- $\text{vehicle count}_i(t)$: The number of vehicles at intersection $i$ at time $t$.

- $\text{signal phase}_i(t)$: The current signal phase (e.g., green, red) at intersection $i$ at time $t$.

- $\text{pedestrian count}_i(t)$: The number of pedestrians at intersection $i$ at time $t$.

- $\text{congestion level}_i(t)$: The level of congestion at intersection $i$ at time $t$, often quantified as a normalized value (e.g., 0 to 1).

This dynamic state changes based on real-time data collected from IoT sensors, cameras, mobile usage data and GPS traces from connected vehicles.
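To make the state definition concrete, the sketch below encodes one intersection's readings as a numeric vector an RL agent could consume. The one-hot phase encoding, the `IntersectionReading` record, and the congestion index (an equal mix of density relative to an assumed jam density of 150 and waiting time relative to an assumed 180 s cap) are all hypothetical choices, not the study's exact formulation.

```python
from dataclasses import dataclass
import numpy as np

PHASES = ("red", "yellow", "green")  # signal phases named in the text

@dataclass
class IntersectionReading:
    vehicle_count: int
    signal_phase: str
    pedestrian_count: int
    density: float      # vehicles per km per lane (assumed unit)
    mean_wait_s: float  # average vehicle waiting time in seconds

def congestion_level(density, mean_wait_s, jam_density=150.0, max_wait_s=180.0):
    """Hypothetical congestion index in [0, 1] mixing density and waiting time."""
    d = min(density / jam_density, 1.0)
    w = min(mean_wait_s / max_wait_s, 1.0)
    return 0.5 * d + 0.5 * w

def state_vector(r: IntersectionReading) -> np.ndarray:
    """Encode s_i(t) = {vehicle count, signal phase, pedestrian count, congestion level}."""
    phase_one_hot = [1.0 if r.signal_phase == p else 0.0 for p in PHASES]
    return np.array(
        [r.vehicle_count, *phase_one_hot, r.pedestrian_count,
         congestion_level(r.density, r.mean_wait_s)],
        dtype=np.float64,
    )
```

One-hot encoding the phase avoids implying an order between red, yellow, and green, which a single integer code would.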

Action Space:

The actions represent the possible changes to the traffic signals at each intersection, which include the following:

- Switch signal phases (green, yellow, red).

- Adjust green light duration to optimize vehicle flow and reduce congestion.

- Prioritize certain lanes or directions (e.g., bus lanes, pedestrian crossings).

For an intersection $i$, the possible action $a_i(t)$ could be one of the following:

- Change the current signal phase.

- Extend or shorten the green light for specific lanes.

- Allow or disallow pedestrians to cross at certain times.

This can be represented as

$$a_i(t) \in \{\text{Green}_1, \text{Green}_2, \ldots, \text{Green}_N\},$$

where $a_i(t)$ represents the possible signal phase changes at intersection $i$ at time $t$.
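With the action set indexed as green phases $0, \ldots, N-1$ (an assumed encoding), a common way for a Q-learning agent to balance exploration and exploitation is epsilon-greedy selection. The sketch below is illustrative and not the study's confirmed exploration policy.

```python
import random

def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice of a green phase index for one intersection.

    q_values: estimated Q(s_i(t), a) for a in {Green_1, ..., Green_N},
    indexed 0..N-1 (assumed encoding). With probability epsilon a random
    phase is explored; otherwise the highest-valued phase is exploited.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

In practice, epsilon is typically decayed over training so that the agent explores early and exploits its learned policy later.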

Reward Function:

The reward function guides the reinforcement learning algorithm in optimizing the traffic flow. The RL model will be rewarded based on the following:

- Reducing vehicle wait times: Shorter waiting times lead to fewer congested intersections.

- Minimizing congestion: Optimizing traffic flow to avoid bottlenecks.

- Optimizing environmental impact: Reducing idle times decreases fuel consumption and emissions.

- Improving pedestrian flow: Allowing safe pedestrian crossings without interrupting vehicle flow excessively.

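The reward function can be represented as

$$R_i(t) = -(\text{waiting time})_i(t) - (\text{congestion level})_i(t) + (\text{green time efficiency})_i(t) - (\text{environmental impact})_i(t),$$

where

- $(\text{waiting time})_i(t)$: Average waiting time for vehicles at intersection $i$ at time $t$.

- $(\text{congestion level})_i(t)$: Congestion level at intersection $i$ at time $t$.

- $(\text{green time efficiency})_i(t)$: Efficiency of green signal utilization at intersection $i$ at time $t$.

- $(\text{environmental impact})_i(t)$: Environmental impact (e.g., emissions) at intersection $i$ at time $t$.

Waiting time, congestion level, and environmental impact enter as penalties and green time efficiency as a bonus, so the agent earns a higher reward when it shortens waits, relieves congestion, and reduces emissions.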
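A minimal sketch of the per-intersection reward, consistent with the objectives listed above: waiting time, congestion, and environmental impact are treated as penalties and green time efficiency as a bonus. The equal weights and the assumption that every term is pre-normalized to [0, 1] are hypothetical placeholders.

```python
def reward(waiting_time, congestion_level, green_efficiency, environmental_impact,
           weights=(1.0, 1.0, 1.0, 1.0)):
    """Per-intersection reward R_i(t); terms assumed pre-normalized to [0, 1].

    Waiting time, congestion and environmental impact are penalties, while
    green time efficiency is a bonus. Equal weights are a placeholder.
    """
    w_wait, w_cong, w_green, w_env = weights
    return (-w_wait * waiting_time
            - w_cong * congestion_level
            + w_green * green_efficiency
            - w_env * environmental_impact)
```

Normalizing the terms before combining them keeps one quantity (e.g., waiting time in seconds) from dominating the others purely because of its units.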

The RL model uses Deep Q-Learning (DQN) to update the Q-values.
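The core of the DQN update is regressing $Q(s, a)$ toward the temporal-difference target $y = r + \gamma \max_{a'} Q_{\text{target}}(s', a')$, computed with a periodically frozen target network. To keep the example self-contained, the sketch below swaps the deep network for a linear Q-function in NumPy; the batch layout, learning rate, and discount factor are assumptions.

```python
import numpy as np

def dqn_targets(rewards, next_states, dones, target_weights, gamma=0.99):
    """TD targets y = r + gamma * max_a' Q_target(s', a'); no bootstrap if done."""
    next_q = next_states @ target_weights  # shape (batch, n_actions)
    return rewards + gamma * (1.0 - dones) * next_q.max(axis=1)

def dqn_step(weights, target_weights, batch, lr=0.01, gamma=0.99):
    """One gradient step on 0.5 * mean squared TD error for a linear Q-function.

    weights, target_weights: arrays of shape (state_dim, n_actions).
    batch: (states, actions, rewards, next_states, dones) as NumPy arrays,
    e.g. sampled from a replay buffer (assumed).
    Returns the updated weights and the mean squared TD error before the update.
    """
    states, actions, rewards, next_states, dones = batch
    y = dqn_targets(rewards, next_states, dones, target_weights, gamma)
    q = states @ weights                   # (batch, n_actions)
    idx = np.arange(len(actions))
    td_error = q[idx, actions] - y         # (batch,)
    grad = np.zeros_like(weights)
    # Only the column of the action actually taken receives gradient.
    for k in range(len(actions)):
        grad[:, actions[k]] += td_error[k] * states[k]
    grad /= len(actions)
    return weights - lr * grad, float(np.mean(td_error ** 2))
```

Keeping the target weights fixed between periodic syncs is what distinguishes DQN from naive Q-learning with function approximation, and it stabilizes training.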

3.2.2. Predictive Analytics for Traffic Forecasting

Time series forecasting with long short-term memory (LSTM) networks was applied first.

Spatiotemporal analysis with graph neural networks (GNNs) was then applied.

3.2.3. Performance Metrics of the Model

Model performance was measured with two metrics: root mean squared error (RMSE) and mean absolute error (MAE).
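For reference, the two metrics are $\text{RMSE} = \sqrt{\frac{1}{n}\sum_t (y_t - \hat{y}_t)^2}$ and $\text{MAE} = \frac{1}{n}\sum_t |y_t - \hat{y}_t|$. A minimal NumPy sketch follows; the example vehicle counts are illustrative only.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error: sqrt(mean((y - y_hat)^2))."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error: mean(|y - y_hat|)."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(err)))

# Illustrative hourly vehicle counts vs. forecasts
observed, forecast = [100, 120, 90], [110, 115, 90]
```

RMSE is always at least as large as MAE and weights large errors more heavily, so reporting both shows whether a model's errors are uniform or dominated by occasional large misses.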

3.2.4. Forecasting Model

Before applying RL for optimization, predictive models are necessary to forecast traffic congestion and anticipate disruptions. These models will be trained using historical traffic data and real-time sensor data to predict future traffic patterns, such as the following:

- Time Series Forecasting (LSTM or ARIMA): These models predict traffic volume based on past patterns.

- Spatiotemporal Analysis (GNNs): These models analyze the relationship between intersections and predict how traffic in one area will affect others.

- Anomaly Detection: Unsupervised machine learning algorithms to detect unusual traffic patterns due to accidents or unexpected events.
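The idea that "traffic in one area will affect others" can be illustrated with a single graph-convolution layer, in which each intersection's representation is updated from its own features and those of directly linked intersections. The sketch uses the widely used symmetric-normalized GCN formulation as an example; the paper does not specify its GNN architecture, so this choice is an assumption.

```python
import numpy as np

def graph_propagate(adjacency, features, weights):
    """One graph-convolution layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: (n, n) 0/1 matrix of road links between intersections.
    features:  (n, f) per-intersection inputs, e.g. recent traffic volumes.
    weights:   (f, h) learnable layer weights.
    """
    a_hat = adjacency + np.eye(adjacency.shape[0])  # add self-loops
    deg = a_hat.sum(axis=1)
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    norm_adj = d_inv_sqrt @ a_hat @ d_inv_sqrt       # symmetric normalization
    return np.maximum(norm_adj @ features @ weights, 0.0)

# Three intersections on a corridor 0 - 1 - 2; only intersection 0 is loaded
corridor = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
out = graph_propagate(corridor, np.array([[1.0], [0.0], [0.0]]), np.array([[1.0]]))
```

After one layer, the load at intersection 0 reaches its neighbour 1 but not intersection 2. Stacking layers extends this influence one hop per layer, and combining such layers with a temporal model such as an LSTM yields a spatiotemporal forecaster.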

By utilizing predictive analytics, the system can forecast potential congestion and adapt the traffic signal timings proactively, minimizing delays and optimizing traffic flow before issues arise.

3.3. Reinforcement Learning—Data Preparation for Simulation

The RL model will be trained using historical and real-time data to learn optimal traffic signal strategies. Deep Q-Learning (DQN) or Proximal Policy Optimization (PPO) can be applied to update signal timings dynamically.

Training:

- Training Data: Six months of traffic data, including vehicle counts, pedestrian counts, signal timings, and traffic conditions.

- Simulation Platform: Use traffic simulation platforms like SUMO or VISSIM, integrated with RL agents controlling the signals. This will allow simulation of various traffic conditions (e.g., peak hours, disruptions).

- Model: Use DQN or PPO to train an agent at each intersection. The agent will learn to optimize signal timings through interactions with the traffic network, gradually improving its control policy over time.

Learning Objective:

The RL agent will aim to achieve the following:

- Maximize the total reward over time by adjusting traffic signal timings.

- Balance trade-offs between efficient vehicle flow and pedestrian safety.

- Adapt to fluctuating traffic volumes, environmental conditions, and emergency events [79].

Predictive analytics supports urban traffic management by enabling data-driven decision-making that optimizes traffic flow and reduces congestion [80]. This section explores the integration of predictive models with reinforcement learning (RL) to simulate and enhance traffic systems.

By leveraging data from IoT sensors, cameras, mobile usage data and GPS traces, a robust framework is developed to forecast congestion and dynamically adjust traffic signals. Through advanced techniques such as time series forecasting and spatiotemporal modeling, this approach improves efficiency while minimizing emissions and waiting times. Real-time simulations and performance evaluations demonstrate the potential of predictive analytics to transform traffic management in Smart Cities [81].

Step 1: Data Collection and Preprocessing:

- Collecting data from IoT sensors (vehicle count, speed, waiting times, emissions), cameras (pedestrian movement), third-party anonymized mobile usage data, and GPS traces from connected vehicles.

- Preprocessing the data by normalizing it and removing anomalies or missing data.

Step 2: Data Cleaning and Outlier Handling:

Data Cleaning:

Data cleaning involves identifying and correcting errors, inconsistencies, and missing values in datasets. The key steps include the following:

- Noise Reduction: Filtering out random variations or irrelevant data points caused by sensor malfunctions or environmental factors. Techniques like smoothing (e.g., moving averages) were applied to reduce noise.

- Managing Missing Data: Addressing data gaps by using interpolation methods (e.g., linear or spline interpolation) or imputation techniques (mean, median, regression-based imputation).

- Standardization: Normalizing data formats (timestamps, units) to ensure consistency across datasets.
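The gap-filling and smoothing steps above can be sketched for an evenly sampled sensor series with missing readings marked as NaN (an assumed convention). Gaps are filled first because a NaN would otherwise propagate through the moving average. The window length is a placeholder, and the series must be at least as long as the window.

```python
import numpy as np

def fill_gaps_linear(values):
    """Fill NaN gaps by linear interpolation between known neighbours.

    Gaps before the first or after the last known value take that edge
    value (np.interp holds the endpoints constant).
    """
    v = np.asarray(values, dtype=float)
    idx = np.arange(len(v))
    known = ~np.isnan(v)
    return np.interp(idx, idx[known], v[known])

def moving_average(values, window=3):
    """Centered moving average (odd window); edges average available neighbours only."""
    v = np.asarray(values, dtype=float)
    kernel = np.ones(window)
    sums = np.convolve(v, kernel, mode="same")
    counts = np.convolve(np.ones_like(v), kernel, mode="same")
    return sums / counts

# Illustrative 15-minute vehicle counts with two missing readings
raw = [10.0, float("nan"), 30.0, float("nan")]
smoothed = moving_average(fill_gaps_linear(raw))
```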

Outlier Handling:

Outliers can skew analysis and lead to inaccurate conclusions. Methods to manage outliers include the following:

- Statistical Methods: Using measures such as the interquartile range (IQR) or Z-scores to identify and remove outliers.

- Domain-Specific Thresholds: Defining acceptable ranges for sensor readings based on domain knowledge to filter out implausible values.
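The three outlier rules can be sketched as boolean masks over a series of readings. The Tukey factor k = 1.5, the Z-score threshold of 3, and any plausible range for a given sensor are conventional or hypothetical values rather than the study's settings.

```python
import numpy as np

def iqr_mask(values, k=1.5):
    """True where a value lies outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    v = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(v, [25, 75])
    iqr = q3 - q1
    return (v < q1 - k * iqr) | (v > q3 + k * iqr)

def zscore_mask(values, threshold=3.0):
    """True where |(x - mean) / std| exceeds the threshold (population std)."""
    v = np.asarray(values, dtype=float)
    std = v.std()
    if std == 0:
        return np.zeros(v.shape, dtype=bool)
    return np.abs((v - v.mean()) / std) > threshold

def range_mask(values, low, high):
    """Domain-specific threshold: True where a reading is physically implausible."""
    v = np.asarray(values, dtype=float)
    return (v < low) | (v > high)

# Illustrative vehicle counts with one faulty spike
counts = [20, 22, 21, 23, 19, 250]
```

On short windows, the Z-score rule can miss obvious spikes. With the population standard deviation, no point can lie more than $\sqrt{n-1}$ standard deviations from the mean (Samuelson's inequality), so with six readings the spike above reaches only about 2.24 and never crosses a threshold of 3. The IQR rule flags it regardless of sample size.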

Step 3: Model Training:

- Training the RL model on historical traffic data (October 2022–April 2024), using LSTM for time series forecasting of traffic flow and GNNs for spatiotemporal relationships between intersections.

- Implementing RL training on the simulated traffic environment, focusing on optimizing traffic signal control through exploration and exploitation.

3.4. Simulation and Evaluation

Step 4: Real-Time Simulation:

- Integrating predictive analytics with the RL model to forecast congestion and apply proactive signal adjustments.

- Deploying RL algorithms in real-time simulations and adjusting signal timings dynamically based on incoming traffic data.

Step 5: Evaluating Performance:

- Measuring key metrics: Vehicle waiting time, traffic flow, congestion levels, emission reduction, and safety improvements.

- Comparing the RL model’s performance against baseline static traffic signal systems in Belgrade.

- Evaluating the ability to adapt to different traffic conditions, including peak hours, accidents, and roadwork disruptions.

To ensure the reliability of results, the following measures were applied:

- Data Validation: Regularly validating sensor data against ground truth measurements or alternative data sources.

- Robust Algorithms: Using algorithms that are resilient to noise and missing data, such as ensemble methods or deep learning models with dropout layers.

- Continuous Monitoring: Implementing real-time monitoring systems to detect and address data quality issues promptly.

- Transparency and Documentation: Clearly documenting data cleaning and preprocessing steps to ensure reproducibility and transparency.

Step 6: Checking algorithm fairness.

AI-based traffic control systems must be designed to avoid reinforcing mobility inequities. Since RL models learn from historical traffic patterns, biases may emerge, disproportionately prioritizing vehicle throughput over pedestrian movement or favoring certain routes over others. To counteract these risks, fairness-aware machine learning techniques are implemented, including the following:

- Bias Audits: Pre-training and post-deployment model evaluations were used to detect and correct imbalances in decision-making.

- Diverse Data Inclusion: Data sources were selected to represent varied traffic patterns across different urban zones, including underserved areas.

- Multi-Objective Optimization: Fairness constraints need to be incorporated into reinforcement learning reward functions to balance efficiency with equitable mobility solutions.
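One simple way to build a fairness constraint into the reward, sketched under stated assumptions, is to subtract a penalty that grows with the disparity in mean waiting time between user groups or urban zones. The grouping, the spread (maximum minus minimum group mean) as the disparity measure, and the trade-off weight `lam` are all hypothetical illustrations, not the study's formulation.

```python
def group_mean_waits(waits_by_group):
    """Average waiting time per user group or urban zone; empty groups are skipped."""
    return {g: sum(w) / len(w) for g, w in waits_by_group.items() if w}

def fairness_penalized_reward(efficiency_reward, waits_by_group, lam=0.5):
    """Multi-objective reward: efficiency minus lam times the spread of group mean waits.

    The spread (max minus min group mean wait) is one simple disparity
    measure; lam trades throughput against equity (hypothetical setting).
    """
    means = group_mean_waits(waits_by_group)
    spread = max(means.values()) - min(means.values()) if means else 0.0
    return efficiency_reward - lam * spread

# Illustrative waits in seconds for two user groups at one intersection
waits = {"cars": [30, 40], "pedestrians": [60, 80]}
```

Raising `lam` pushes the agent toward phase plans that narrow the gap between groups, at some cost in raw vehicle throughput.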

By integrating these ethical safeguards, the methodological framework ensures that AI-driven traffic control remains not only efficient but also socially responsible, supporting sustainable and inclusive urban mobility.

3.5. Data Governance

Data used in this study comes from IoT sensors, traffic cameras, mobile usage data and connected vehicles, raising concerns about personal data protection. To mitigate privacy risks, data collection processes are designed to comply with GDPR and similar regulatory frameworks, ensuring that personally identifiable information (PII) is either anonymized or excluded. Additionally, edge computing techniques are employed to process data locally at intersections, minimizing exposure to centralized systems and reducing security vulnerabilities.
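As an illustration of processing identifiers locally at the edge, the sketch below replaces hypothetical PII fields (`plate`, `device_id`) with keyed HMAC-SHA256 digests before a record leaves the intersection. This is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, so aggregation or removal of the fields would still be needed to take records out of scope. The field names and key handling are assumptions.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a raw identifier with a keyed SHA-256 HMAC (hex digest).

    With a secret key held only at the edge node, digests cannot be reversed
    by simply hashing candidate identifiers, as long as the key stays secret.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def strip_pii(record: dict, key: bytes, pii_fields=("plate", "device_id")) -> dict:
    """Return a copy of a sensor record with the (hypothetical) PII fields pseudonymized."""
    clean = dict(record)
    for field in pii_fields:
        if field in clean:
            clean[field] = pseudonymize(str(clean[field]), key)
    return clean

# Illustrative record from a roadside detector
raw_record = {"plate": "BG123AB", "count": 5}
edge_key = b"example-secret-key"  # placeholder; a real key would be generated and rotated securely
```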

To maintain transparency and accountability, data governance measures include encryption protocols, controlled data access policies, and periodic audits to prevent unauthorized usage. These measures align with best practices in smart city governance, ensuring that traffic optimization does not compromise individual privacy rights.

3.6. Expected Benefits and Sustainability After Applying AI

Cities like Los Angeles and Singapore are already using AI-based traffic management systems. Los Angeles operates a citywide adaptive traffic signal control system, the Automated Traffic Surveillance and Control (ATSAC) system with its Adaptive Traffic Control System (ATCS). This system uses real-time traffic data from sensors to adjust traffic signal timings based on current traffic flow. The system helps reduce congestion and delays by dynamically adjusting the signal cycles to match traffic volume, resulting in smoother traffic flow and reduced waiting times at intersections. LA’s Traffic Management Center uses AI and data analytics to monitor traffic patterns across the city. The center integrates data from various sources like cameras, sensors, and GPS in real time, allowing city planners to make more informed decisions about traffic management and ensure that congestion is minimized during peak hours. This technology has significantly improved emergency response times by optimizing routes for emergency vehicles [82].

Singapore’s Smart Traffic Light System uses AI to adjust signal timings based on traffic demand. The system collects data from various sources such as vehicle sensors, cameras, and mobile apps to dynamically alter traffic signal phases. This innovation helps reduce congestion and allows for more efficient use of road space, especially during peak hours [83,84].

The AI-driven traffic management system should achieve the following:

- Reduce congestion and vehicle waiting times by optimizing signal timings.

- Lower greenhouse gas emissions by minimizing fuel consumption through smoother traffic flow.

- Improve public safety by reducing the likelihood of accidents at intersections.

- Enhance mobility efficiency and contribute to more sustainable urban transportation systems.

4. Results of Quantitative Research

This section presents the quantitative results and tests the research hypotheses for statistical significance.

4.1. Research Results

Several assumptions were made based on real historical data about the observed parameters at a total of 370 crossroads in Belgrade. The authors used data from a fixed-timing signal system (available for all 280 crossroads) and from an adaptive timing signal system (limited to only 90 crossroads operating in the city of Belgrade).

The baseline systems include the following:

- Fixed-timing signal system (the current non-AI system in Belgrade, installed at 280 crossroads) and adaptive timing signal system installed at 90 crossroads.

- Average vehicle wait time: 90 s at high-volume intersections for fixed timing signals and 65 s for adaptive timing signal systems.

- Peak hour vehicle count: 1200 vehicles per hour per intersection for a fixed timing signal and 900 vehicles per hour per intersection for an adaptive timing signal system.

- Average daily pedestrian count: 500 crossings per intersection for a fixed timing signal and 420 crossings for an adaptive timing signal system.

The RL-Enhanced System includes the following:

- RL agents control traffic signals dynamically at crossroads with a fixed timing signal system and with dedicated priority at crossroads with an adaptive timing signal system.

- Integration of LSTM for traffic flow prediction.

- Traffic signals adjust in real time based on congestion levels, pedestrian activity, and predicted bottlenecks.

Several traffic scenarios were evaluated as follows:

- Normal traffic flow during weekday peak hours.

- High congestion due to accidents.

- Roadworks causing lane closures.

- Weekend off-peak hours.

Evaluation Metrics:

- Average Vehicle Wait Time: Reduction in seconds.

- Traffic Flow Efficiency: Increase in vehicles passing per hour.

- Pedestrian Wait Time: Reduction in seconds.

- Fuel Consumption and Emissions: Reduction in fuel use and CO2 emissions due to less idling.

- Safety Metrics: Reduction in the number of near-miss events and accidents.

Table 1 below displays the results of applied reinforcement learning for each scenario and the overall improvement of each parameter versus the two baseline systems (the baselines for both the fixed timing and adaptive timing signal systems were determined from data collected from October 2022 to October 2024). Parameters included vehicle wait times, traffic flow efficiency, emissions and fuel consumption, pedestrian wait times, and safety metrics.

Table 1.

Results of applied RL for measured parameters.

For the adaptive timing signal system, the results show an improvement only in vehicle wait times under the “Accident-Induced Congestion” scenario; in all other measured scenarios, applying the RL system yielded no improvement.

RL was applied to both datasets jointly because there was not enough data from crossroads with an adaptive timing signal system to allow an adequate separate comparison.

4.2. Model Testing and Accuracy

The test accuracy of the reinforcement learning (RL) model was measured using a holdout test dataset, which comprised 20% of the total historical traffic data. This test dataset was carefully separated from the training data to ensure an unbiased evaluation of the model’s generalization capabilities. The model’s performance was evaluated using two widely adopted metrics: root mean squared error (RMSE) and mean absolute error (MAE). These metrics were chosen because they provide a clear understanding of the model’s prediction errors in the context of traffic flow, where even small deviations can have significant operational impacts.

The results demonstrated a 15% improvement in traffic flow prediction accuracy compared to baseline models, such as traditional fixed timing systems and rule-based adaptive systems. This improvement highlights the RL model’s ability to capture complex traffic patterns and optimize signal timing dynamically.

To ensure the model’s reliability and robustness, it was rigorously assessed for overfitting. Overfitting occurs when a model performs exceptionally well on the training data but fails to generalize to unseen data, often due to excessive complexity or insufficient regularization. In this study, overfitting was evaluated by comparing the model’s performance on the training dataset and the test dataset. The training accuracy was 92%, while the test accuracy was 89%, indicating a minimal gap between the two. This small discrepancy suggests that the model did not overfit the training data and maintained strong generalization capabilities.

Further validation was conducted using k-fold cross-validation, a robust technique to assess model performance and stability. In this approach, the dataset was divided into k subsets (folds), and the model was trained and tested k times, with each fold serving as the test set once. The results showed consistent performance across all folds, with minimal variance in RMSE and MAE values. This consistency reinforces the model’s reliability and its ability to perform well under varying data conditions.
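A minimal sketch of how k contiguous folds can be generated so that each fold serves as the test set exactly once; the fold count and sample size in the usage line are illustrative.

```python
def kfold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; each of the k contiguous folds is tested once.

    The first n_samples % k folds receive one extra sample so that all
    samples are used.
    """
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

folds = list(kfold_indices(10, 3))
```

For time-ordered traffic data, a forward-chaining variant, which trains only on folds that precede the test fold, is often preferred because it never lets the model see the future.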

Additionally, the following strategies were employed during training to mitigate the risk of overfitting:

- Regularization Techniques: L2 regularization was applied to the model’s loss function to penalize overly complex weights, ensuring smoother and more generalizable predictions.

- Early Stopping: Training was halted when the validation error stopped improving, preventing the model from learning noise in the training data.

- Dropout Layers: In the neural network architecture, dropout layers were incorporated to randomly deactivate neurons during training, promoting robustness and reducing over-reliance on specific features.
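The early-stopping rule described above can be sketched as a small helper that tracks the best validation error seen so far. The `patience` and `min_delta` values are placeholders; the study does not report its settings.

```python
class EarlyStopping:
    """Stop training when validation error has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_error):
        """Record one epoch's validation error; return True if training should stop."""
        if val_error < self.best - self.min_delta:
            self.best = val_error
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Illustrative validation errors over four epochs
stopper = EarlyStopping(patience=2)
decisions = [stopper.step(e) for e in [1.0, 0.8, 0.85, 0.9]]
```

In practice, the model weights from the epoch with the best validation error are usually restored after stopping.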

The combination of these techniques, along with the strong alignment between training and test performance, confirms that the RL model is both accurate and dependable for real-world deployment in traffic management systems.

4.3. Data Governance and System Transparency

The implementation of AI-driven traffic optimization required adherence to strict data governance measures to ensure security, privacy, and regulatory compliance. The system utilized edge computing to process traffic data locally, reducing exposure to centralized databases and enhancing real-time decision-making.

Throughout the study, periodic data audits were conducted to assess compliance with privacy regulations such as GDPR, ensuring that personally identifiable information (PII) was not retained or misused.

Key performance metrics related to data governance included the following:

- Data Processing Efficiency: The AI model demonstrated an average 15% reduction in data transmission latency when utilizing edge computing compared to a centralized system, improving responsiveness in high-traffic scenarios.

- Anonymization Accuracy: The data filtering mechanisms effectively removed 98.7% of PII markers from raw input data before processing, ensuring compliance with privacy guidelines.

These results validate the feasibility of privacy-preserving AI-driven traffic management while maintaining optimization performance. Future implementations could further refine access control policies and encryption standards to enhance data security.

4.4. Algorithm Fairness and Equity in Traffic Optimization

To evaluate the fairness of AI-driven traffic signal control, bias audits were conducted on the system’s decision-making patterns across different urban zones. The results indicated that the reinforcement learning (RL) model successfully optimized traffic flow without disproportionate prioritization of specific routes or vehicle types.

Key fairness metrics included the following:

- Equitable Signal Adjustments: No statistically significant bias was detected in signal priority allocation between high- and low-income districts (p = 0.078, above the 0.05 threshold), suggesting that the AI system balanced efficiency across diverse urban areas.

- Pedestrian Wait Time Reduction: The RL model led to a 25% decrease in pedestrian crossing delays, ensuring that vehicle throughput improvements did not come at the expense of pedestrian mobility.

- Public Transport Prioritization: The system successfully reduced bus delay times by 18% during peak hours, indicating that public transport efficiency was preserved within the optimization framework.

These findings confirm that fairness-aware reinforcement learning techniques can enhance urban mobility without reinforcing pre-existing inequalities. However, further refinements—such as adaptive fairness constraints and community-driven model evaluations—could strengthen long-term equity in AI-driven traffic management.

4.5. Research Findings

Key observations can be defined as the following:

- The RL system improved traffic throughput by dynamically adjusting signal phases based on predicted congestion and real-time data. Bottlenecks caused by accidents or lane closures were mitigated significantly, with faster recovery times observed.

- Reduced idling and smoother traffic flow decreased fuel consumption and greenhouse gas emissions, contributing to sustainability goals. The RL system demonstrated a proportional decrease in emissions with improvements in vehicle wait times.

- Pedestrians experienced shorter wait times at intersections, as the RL system prioritized their crossing based on real-time activity.

- Improved traffic flow reduced the likelihood of rear-end collisions at intersections, and proactive adjustments to signal timings reduced the risk of pedestrian-vehicle conflicts.

The following key challenges were observed:

- Computational Demand: Real-time processing of large-scale data from 280 intersections required edge computing and distributed learning for scalability.

- Adaptation Period: The RL system required a training phase of 2–3 weeks to achieve optimal performance.

Table 2 shows how the required computational power scales with the number of intersections.

Table 2.

Required computational power allocation for applying RL algorithms.

Three types of gains were captured:

- Sustainability Gains: IoT sensors recorded lower emissions and fuel consumption.

- Enhanced Safety: Fewer accidents and near-miss events contribute to safer intersections.

- Improved Pedestrian Flow: Dynamic signal adjustments improved pedestrian wait times without compromising vehicle efficiency.

4.6. Testing Research Hypotheses

Table 3 displays the results of the ANOVA tests for Hypothesis 1.

Table 3.

Results of ANOVA test for Hypothesis 1 (reinforcement learning vs. traditional system).

Hypothesis 1 proposes that the integration of reinforcement learning (RL) algorithms with real-time data analytics will significantly improve traffic flow efficiency, reduce vehicle wait times, and minimize congestion in urban environments compared to traditional traffic signal systems.

The results of the ANOVA test support this hypothesis, as significant differences were found across all the key factors involved in traffic management.

Traffic Flow Efficiency: The F-statistic for traffic flow efficiency was 34.04, with a p-value of 2.56 × 10⁻⁷, which is well below the commonly accepted threshold of 0.05. This indicates that there is a statistically significant improvement in traffic flow efficiency when using RL algorithms in comparison to traditional traffic signal systems. The AI-based system allows for more adaptive decision-making, optimizing traffic flow based on real-time data, thus reducing delays and improving the overall efficiency of the traffic network.

Wait Times: The F-statistic for wait times was 43.72, with a p-value of 1.31 × 10⁻⁸, indicating a highly significant reduction in wait times with the use of reinforcement learning algorithms. In traditional traffic systems, wait times can be unnecessarily long due to fixed signal patterns. However, the AI-based system dynamically adjusts signal timing based on real-time traffic conditions, leading to a reduction in the time vehicles spend waiting at traffic lights.

Congestion Index: The F-statistic for congestion was 23.39, with a p-value of 1.01 × 10⁻⁵, indicating a statistically significant reduction in congestion when RL algorithms are implemented. The AI system adapts to traffic patterns, prioritizing areas with heavier congestion and effectively redistributing traffic loads, resulting in lower congestion levels across the city.

In summary, the findings support the hypothesis that reinforcement learning, when integrated with real-time data analytics, offers significant advantages over traditional traffic signal systems by improving traffic flow, reducing wait times, and minimizing congestion. This highlights the potential of AI to optimize urban traffic management, especially in the context of smart city initiatives.
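The F-statistics reported above compare the variance between groups (e.g., RL-controlled vs. traditional intersections) with the variance within groups. A minimal sketch of the one-way computation follows; the sample values are illustrative, not the study's data.

```python
import numpy as np

def one_way_anova_f(*groups):
    """One-way ANOVA F-statistic and its degrees of freedom (k - 1, n - k).

    F = (between-group sum of squares / (k - 1)) / (within-group sum of squares / (n - k)).
    """
    groups = [np.asarray(g, dtype=float) for g in groups]
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    k, n = len(groups), all_values.size
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within, (k - 1, n - k)

# Illustrative wait-time samples for two systems
f_stat, dof = one_way_anova_f([1, 2, 3], [4, 5, 6])
```

The p-value is the upper tail of the F distribution with these degrees of freedom. In SciPy, `scipy.stats.f.sf(f_stat, *dof)` returns it, and `scipy.stats.f_oneway` computes the statistic and the p-value in one call.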

Table 4 shows the results of the ANOVA test for H2, followed by an explanation of the test results.

Table 4.

Results of ANOVA test for Hypothesis 2 (predictive analytics impact).

Hypothesis 2 suggests that predictive analytics, including time series forecasting and anomaly detection, will enhance the accuracy of traffic congestion predictions, allowing for proactive interventions that lead to a reduction in overall traffic delays and emissions in urban areas.

The results of the ANOVA test provide compelling evidence supporting this hypothesis, as significant differences were observed across all the key factors involved in traffic management and environmental impact.

Traffic Delays: The F-statistic for traffic delays was 18.52, with a p-value reported as 0.00 (i.e., p < 0.005 after rounding), which is well below the standard significance level of 0.05. This indicates that predictive analytics significantly reduce traffic delays. By using time series forecasting, the system can anticipate future traffic patterns based on historical data and real-time inputs. As a result, traffic flow can be better managed through early interventions that prevent or reduce delays.

Emissions: The F-statistic for emissions was 24.98, with a p-value reported as 0.00 (i.e., p < 0.005 after rounding), signaling a significant reduction in emissions with the use of predictive analytics. By reducing traffic delays and congestion, predictive analytics indirectly contribute to lower vehicle emissions. Predictive models optimize traffic flow, leading to less idling and stop-and-go driving, which are key contributors to higher emissions.

Congestion Levels: The F-statistic for congestion was 69.79, with a p-value reported as 0.00 (i.e., p < 0.005 after rounding); this is the largest F-statistic among the three factors tested for Hypothesis 2. This finding demonstrates that predictive analytics significantly reduce congestion levels in urban areas. The system's ability to predict high-traffic periods and suggest alternative routes or signal timing adjustments supports smoother traffic flow, minimizing congestion and its negative effects on both travel time and the environment.
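The study's predictive analytics rely on LSTM and ARIMA models. As a much simpler, illustrative stand-in, the sketch below uses Holt's linear exponential smoothing to forecast traffic volume a few intervals ahead and flags the intervals in which the forecast exceeds a share of the approach capacity, which is the point at which an early intervention could be triggered. The smoothing constants, the 90% threshold, the capacity value, and the counts are all hypothetical.

```python
from typing import List, Sequence


def holt_forecast(series: Sequence[float], alpha: float = 0.5, beta: float = 0.3,
                  horizon: int = 3) -> List[float]:
    """Holt's linear exponential smoothing: track a level and a trend, then extrapolate."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = float(series[0]), float(series[1] - series[0])
    for y in series[1:]:
        previous_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


def congestion_alerts(forecast: Sequence[float], capacity: float,
                      threshold: float = 0.9) -> List[int]:
    """Steps ahead (1-based) at which the forecast volume exceeds threshold * capacity."""
    return [h for h, volume in enumerate(forecast, start=1) if volume > threshold * capacity]


# Hypothetical vehicle counts per 5-minute interval on one approach.
counts = [80, 86, 91, 99, 104, 112]
forecast = holt_forecast(counts)
alerts = congestion_alerts(forecast, capacity=130)
print([round(v, 1) for v in forecast], "alert at steps:", alerts)
```

If `alerts` is non-empty, a controller could lengthen the green phase or switch to an alternative timing plan for that approach before the queue builds up; the LSTM, ARIMA, and GNN models used in the study would fill the same forecasting role with richer inputs.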

In summary, the results strongly support the hypothesis that predictive analytics, through techniques like time series forecasting and anomaly detection, can improve traffic congestion predictions. This leads to more proactive and effective interventions, reducing traffic delays, lowering emissions, and alleviating congestion in urban areas. The integration of predictive analytics thus enhances the efficiency and sustainability of urban transportation systems.
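Anomaly detection, the second technique named in Hypothesis 2, can be illustrated with a rolling z-score: each new reading is compared with the mean and standard deviation of the preceding window, and large deviations (such as a sudden drop in speed after an incident) are flagged. This is a minimal sketch under assumed parameters (a six-interval window and a threshold of three standard deviations), not the detector used in the study.

```python
from statistics import mean, stdev
from typing import List, Sequence


def rolling_zscore_anomalies(series: Sequence[float], window: int = 6,
                             z_threshold: float = 3.0) -> List[int]:
    """Indices whose value lies more than z_threshold standard deviations
    from the mean of the preceding `window` observations."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0.0:
            deviates = series[i] != mu  # flat history: any change is unusual
        else:
            deviates = abs(series[i] - mu) / sigma > z_threshold
        if deviates:
            anomalies.append(i)
    return anomalies


# Hypothetical mean speeds (km/h) per 5-minute interval; index 6 mimics an incident.
speeds = [50, 52, 49, 51, 50, 48, 20, 50]
print(rolling_zscore_anomalies(speeds))  # [6]
```

Because a flagged value stays in the next windows and inflates their standard deviation, a production detector would typically exclude confirmed anomalies from the history.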

5. Discussion of Research Results

5.1. Key Findings Compared to Other Studies

This research demonstrates that the integration of reinforcement learning (RL) and predictive analytics for dynamic traffic signal optimization in urban areas offers promising results in terms of reducing congestion and improving overall traffic flow. The application of RL algorithms such as Deep Q-Learning and Proximal Policy Optimization, combined with predictive models like LSTM and ARIMA, led to significant improvements in traffic efficiency compared to traditional traffic signal control methods. These findings align with similar studies by Lichtle [85], Goh [86], and Zhang [87], who also reported improvements in traffic signal management through the application of machine learning techniques.
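To make the reinforcement-learning formulation concrete, the sketch below trains a tabular Q-learning agent on a toy two-approach intersection. Deep Q-Learning uses the same temporal-difference update but replaces the table with a neural network, and PPO optimizes a policy directly, so this illustrates the underlying idea rather than the study's implementation. The state (two queue lengths plus the current phase), the actions (keep or switch), the reward (negative total queue), the one-step lost time on switching, and all rates and hyperparameters are hypothetical.

```python
import random
from collections import defaultdict

MAX_QUEUE = 10    # hypothetical cap on queue length per approach
ARRIVAL_P = 0.4   # hypothetical per-step arrival probability per approach
ACTIONS = (0, 1)  # 0 = keep current phase, 1 = switch phase


def step(state, action, rng):
    """Toy intersection; state = (queue_ns, queue_ew, phase), phase 0 = north-south green."""
    q_ns, q_ew, phase = state
    if action == 1:
        phase = 1 - phase  # switching costs one step of lost time (no discharge)
    elif phase == 0:
        q_ns = max(0, q_ns - 2)  # the green approach discharges up to 2 vehicles
    else:
        q_ew = max(0, q_ew - 2)
    q_ns = min(MAX_QUEUE, q_ns + int(rng.random() < ARRIVAL_P))
    q_ew = min(MAX_QUEUE, q_ew + int(rng.random() < ARRIVAL_P))
    return (q_ns, q_ew, phase), -(q_ns + q_ew)  # reward: fewer queued vehicles is better


def train(episodes=2000, steps=50, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q_table = defaultdict(lambda: [0.0, 0.0])
    for _ in range(episodes):
        state = (rng.randint(0, MAX_QUEUE), rng.randint(0, MAX_QUEUE), rng.randint(0, 1))
        for _ in range(steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q_table[state][a])
            next_state, reward = step(state, action, rng)
            td_target = reward + gamma * max(q_table[next_state])
            q_table[state][action] += alpha * (td_target - q_table[state][action])
            state = next_state
    return q_table


def evaluate(policy, steps=5000, seed=1):
    """Average number of queued vehicles per step under a policy."""
    rng = random.Random(seed)
    state, total = (0, 0, 0), 0
    for _ in range(steps):
        state, reward = step(state, policy(state), rng)
        total -= reward
    return total / steps


q_table = train()
learned = lambda s: max(ACTIONS, key=lambda a: q_table[s][a])
never_switch = lambda s: 0
print(f"learned: {evaluate(learned):.2f} queued, never switch: {evaluate(never_switch):.2f}")
```

The never-switch controller is only a sanity check; a fixed-cycle baseline closer to Belgrade's fixed-timing signals could be evaluated with the same `evaluate` function by passing a policy that switches every N steps.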

However, unlike prior studies, this research specifically evaluates the performance of these algorithms in the context of a medium-sized European city, Belgrade, Serbia, where infrastructure limitations and unique traffic dynamics posed additional challenges. While other research has focused on larger cities or theoretical simulations, our study provides a simulation-based validation grounded in real traffic data from Belgrade, offering insights into how RL and predictive models can be scaled in urban environments with diverse traffic conditions.

Table 5 compares the key findings of this study with those of other studies in the domain of AI-driven traffic control in fixed timing signal systems.

Table 5.

Comparison with research results from other authors.

While this study utilized available data from Belgrade's traffic network (the dataset included one million data points over a three-month period), other studies relied on more extensive datasets, such as Goh [86], where data from multiple cities was used for the analysis. Nevertheless, the improvements observed in this study highlight the potential scalability of the proposed RL and predictive analytics framework. Our findings are consistent with those reported by Zhang [87] but also add key sustainability outcomes, such as reduced waiting times and lower emissions, that align with the global goal of creating environmentally friendly urban mobility systems.

The simulated implementation of these AI-driven traffic optimization strategies in Belgrade has actionable implications for urban planners and policymakers. As the findings of this study show, data-driven approaches lead to enhanced traffic efficiency, reduced greenhouse gas emissions, and smoother transit conditions for both drivers and pedestrians. These results are in line with the work of Guo [88,89], who highlighted the environmental and operational benefits of intelligent traffic management systems.

5.2. Ethical and Operational Considerations in AI-Driven Traffic Management

The findings of this study underscore the importance of integrating data governance and algorithm fairness into AI-driven traffic optimization to ensure both efficiency and social responsibility. While AI-based traffic management significantly improves mobility and sustainability, its broader implications for privacy, security, and equitable urban mobility require careful consideration.

5.2.1. Data Governance and Privacy-Preserving AI Implementation

One of the critical challenges in deploying AI for traffic control is ensuring responsible data governance while maintaining system efficiency. This study demonstrated that privacy-preserving techniques, such as edge computing and data anonymization, can mitigate concerns related to centralized data storage and regulatory compliance. The observed 15% reduction in data transmission latency achieved through local data processing highlights the potential of a decentralized architecture to reduce security vulnerabilities while maintaining system responsiveness.
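The edge-processing arrangement described above can be sketched as follows: each roadside node replaces raw vehicle identifiers with keyed hashes (HMAC-SHA256 with a site-specific secret), uses those tokens only to avoid double-counting, and transmits nothing but per-approach counts. The record format, key handling, and 16-character token length are illustrative assumptions, not details of the deployed system.

```python
import hashlib
import hmac
from collections import Counter
from typing import Dict, Iterable, Tuple


def pseudonymize(vehicle_id: str, site_key: bytes) -> str:
    """Keyed hash of an identifier; unlike a plain hash, it cannot be reversed
    by enumerating candidate plates unless the site key leaks."""
    return hmac.new(site_key, vehicle_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def edge_payload(detections: Iterable[Tuple[str, str]], site_key: bytes) -> Dict[str, int]:
    """Turn raw (vehicle_id, approach) detections into per-approach counts of distinct vehicles.

    Only pseudonymous tokens are kept in memory for de-duplication, and only the
    aggregated counts are returned for transmission to the central server.
    """
    seen = set()
    counts: Counter = Counter()
    for vehicle_id, approach in detections:
        token = pseudonymize(vehicle_id, site_key)
        if (token, approach) not in seen:
            seen.add((token, approach))
            counts[approach] += 1
    return dict(counts)


# Hypothetical detections from one signal cycle; in practice the key would be rotated.
key = b"example-site-key-rotate-daily"
detections = [("BG123AB", "north"), ("BG123AB", "north"), ("BG456CD", "north"), ("NS789EF", "east")]
print(edge_payload(detections, key))  # {'north': 2, 'east': 1}
```

Sending aggregates rather than per-vehicle records also shrinks the payload, which is one plausible contributor to lower transmission latency in a decentralized design.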

These results align with previous research emphasizing privacy-by-design approaches in smart city applications [90,91]. However, as AI-driven traffic systems continue to evolve, stronger encryption standards, decentralized identity verification, and transparent governance policies will be essential to maintaining public trust and ensuring compliance with emerging data protection regulations. Future studies should explore adaptive privacy-preserving methods that dynamically adjust to evolving traffic patterns and data-sharing requirements.

5.2.2. Algorithm Fairness and Equitable Urban Mobility

Ensuring fairness in AI-driven traffic control is crucial to preventing biased decision-making that could inadvertently reinforce urban mobility inequalities. The study’s fairness audits confirmed that the RL model did not disproportionately prioritize specific routes or vehicle types, reducing pedestrian wait times by 25% and bus delays by 18% without sacrificing overall traffic flow efficiency. These findings support prior studies on fairness-aware reinforcement learning in smart city infrastructure [92,93].
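A fairness audit of this kind can be reduced to comparing each road-user group's mean delay under the baseline and under the RL controller. The sketch below computes the relative change per group and flags any group whose delay increases beyond a tolerance; the group names, tolerance, and sample delays are hypothetical and do not reproduce the study's audit data.

```python
from statistics import mean
from typing import Dict, List


def fairness_audit(baseline: Dict[str, List[float]],
                   candidate: Dict[str, List[float]],
                   tolerance: float = 0.0) -> Dict[str, dict]:
    """Relative change in mean delay per road-user group (negative = improvement)."""
    report = {}
    for group, base_delays in baseline.items():
        base = mean(base_delays)
        new = mean(candidate[group])
        change = (new - base) / base
        report[group] = {
            "baseline_mean": base,
            "candidate_mean": new,
            "relative_change": change,
            "worse": change > tolerance,  # flag groups that lose out under the new controller
        }
    return report


# Hypothetical delays in seconds, for illustration only.
baseline = {"pedestrians": [48.0, 52.0, 50.0], "buses": [90.0, 110.0, 100.0], "cars": [60.0, 64.0, 62.0]}
rl = {"pedestrians": [37.0, 39.0, 38.0], "buses": [80.0, 84.0, 82.0], "cars": [45.0, 47.0, 46.0]}
for group, row in fairness_audit(baseline, rl).items():
    print(f"{group}: {row['relative_change']:+.0%} worse={row['worse']}")
```

Running the same audit periodically on fresh data is one simple way to support the continuous monitoring and recalibration discussed below.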

However, despite these promising results, fairness in AI-driven traffic optimization remains an ongoing challenge. Algorithmic biases may emerge over time as new urban mobility patterns evolve, requiring continuous monitoring and recalibration.

Future implementations should consider the following:

- Dynamic fairness constraints that adjust traffic signal priorities based on evolving demographic and mobility needs.

- Community-driven AI evaluations, where local stakeholders contribute to fairness assessments and optimization goals.

- Multi-modal optimization strategies that prioritize not only vehicle throughput but also pedestrian accessibility, cycling infrastructure, and public transport integration.

By embedding fairness-aware AI governance frameworks into urban traffic management, policymakers and city planners can ensure that AI-driven mobility solutions benefit all road users equitably, rather than reinforcing pre-existing urban disparities.

The integration of AI in traffic management represents a transformative shift in urban mobility, but its ethical, social, and governance implications must be continuously assessed.

The results of this study demonstrate that privacy-conscious data management and fairness-aware AI models can create intelligent traffic systems that enhance efficiency while ensuring equity and regulatory compliance. However, ongoing audits, interdisciplinary collaborations, and adaptive policy frameworks will be essential in refining AI-driven mobility solutions for long-term sustainability and social impact.

5.3. Research Limitations

While the research provides valuable insights into the effectiveness of AI and predictive analytics for traffic signal control, several limitations must be considered. Firstly, the quality and availability of data both play a significant role in the success of AI-based solutions.

This study relied on data from IoT sensors and traffic cameras, which, although extensive, may have been affected by sensor inaccuracies or data gaps in certain high-density areas of Belgrade. The potential for noise in data or incomplete data collection could lead to less accurate results in specific traffic conditions, as noted by Wong [94] and Thompson [95].

Secondly, although the proposed reinforcement learning (RL) model effectively optimized traffic signal management for motor vehicles, the authors acknowledge the need for a more comprehensive consideration of non-motorized road users, such as pedestrians and cyclists. In its current form, the model primarily prioritizes vehicle flow, potentially overlooking the needs of these vulnerable groups. To address this limitation, future research could incorporate pedestrian wait times and cycling lane prioritization into the reward function of the RL model, helping to ensure a more balanced approach to traffic optimization.
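One way to encode such an extension is a weighted multi-objective reward. The sketch below assumes hypothetical per-step measurements of vehicle, pedestrian, and cyclist waiting time plus a penalty for phase changes; the weights are illustrative and would need to be calibrated against local policy priorities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepObservation:
    """Hypothetical per-step waiting times (seconds) at one intersection."""
    vehicle_wait_s: float
    pedestrian_wait_s: float
    cyclist_wait_s: float
    phase_switched: bool


@dataclass(frozen=True)
class RewardWeights:
    """Illustrative weights; choosing them is a policy decision, not a modelling detail."""
    vehicle: float = 1.0
    pedestrian: float = 0.5
    cyclist: float = 0.5
    switch_penalty: float = 2.0


def multimodal_reward(obs: StepObservation, w: RewardWeights = RewardWeights()) -> float:
    """Negative weighted cost, so maximizing reward reduces delay for every road-user group."""
    cost = (w.vehicle * obs.vehicle_wait_s
            + w.pedestrian * obs.pedestrian_wait_s
            + w.cyclist * obs.cyclist_wait_s
            + w.switch_penalty * float(obs.phase_switched))
    return -cost


# Two hypothetical outcomes of the same decision: A favours cars, B favours pedestrians.
a = StepObservation(20.0, 60.0, 10.0, False)
b = StepObservation(30.0, 10.0, 10.0, True)
vehicle_only = RewardWeights(pedestrian=0.0, cyclist=0.0, switch_penalty=0.0)
print(multimodal_reward(a, vehicle_only) > multimodal_reward(b, vehicle_only))  # True: prefers A
print(multimodal_reward(b) > multimodal_reward(a))  # True: the weighted reward prefers B
```

Because all terms are expressed in seconds of waiting, the weights read directly as the relative value assigned to one second of delay for each group.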

However, the integration of pedestrian and cyclist data remains an area for future enhancement. A more inclusive model would require additional data sources, such as real-time pedestrian and cyclist movement data, as well as environmental factors that influence their mobility. Expanding the scope of the research to better accommodate these road users would necessitate a broader framework and collaboration with urban planners, transportation experts, and data scientists. This limitation presents an important direction for future research, where the development of more inclusive traffic management solutions can be explored.

Thirdly, the generalizability of the results to other urban environments is limited by the unique traffic dynamics and infrastructure challenges present in Belgrade. Factors such as road network design, population density, and traffic patterns may vary significantly between cities, which could influence the applicability of the proposed solutions. The adaptability of RL algorithms to different traffic systems and regulatory frameworks requires further exploration, as urban environments in various parts of the world may require tailored solutions [95].

One notable limitation of this study is that the simulation and analysis did not explicitly account for the impact of extreme weather conditions, such as heavy snowfall, intense rainfall, or heatwaves, on traffic patterns and signal optimization. These conditions can significantly alter traffic behavior, causing unexpected congestion, delays, or even road closures, which could challenge the adaptability of the proposed reinforcement learning (RL) and predictive analytics framework. Future studies should incorporate meteorological data to assess how AI-driven traffic control systems perform under varying weather extremes.

Specifically, the authors have outlined the potential challenges posed by these scenarios, including the effects of reduced sensor accuracy during adverse weather conditions (e.g., heavy rain or snow), disruptions in traffic flow due to accidents, and the impact of road closures on the system’s ability to adjust traffic signals in real-time. To address these issues, the authors propose that future research explore the use of reinforcement learning models trained on simulated extreme scenarios. By incorporating these challenging conditions into the training data, the system can be better equipped to adapt to unpredictable and dynamic environments.
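Training on simulated extreme scenarios is commonly done through domain randomization: each training episode draws a weather profile that degrades both the observations the agent receives and the capacity of the intersection. The sketch below illustrates this with detector dropout, multiplicative count noise, and a reduced saturation-flow factor; all scenario names and parameter values are hypothetical placeholders, not calibrated figures.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class WeatherScenario:
    """Hypothetical perturbation profile applied during training."""
    name: str
    sensor_dropout: float   # probability that a detector reading is lost
    sensor_noise: float     # std. dev. of multiplicative noise on counts
    capacity_factor: float  # share of dry-weather saturation flow


# Placeholder values for illustration only, not calibrated to Belgrade.
SCENARIOS = (
    WeatherScenario("clear", 0.00, 0.02, 1.00),
    WeatherScenario("heavy_rain", 0.10, 0.10, 0.85),
    WeatherScenario("snow", 0.25, 0.20, 0.70),
)


def perturb_counts(counts: Sequence[float], scenario: WeatherScenario,
                   rng: random.Random) -> List[Optional[float]]:
    """Drop readings (None) or scale them by random noise, as a degraded sensor might."""
    observed: List[Optional[float]] = []
    for count in counts:
        if rng.random() < scenario.sensor_dropout:
            observed.append(None)
        else:
            observed.append(max(0.0, count * (1.0 + rng.gauss(0.0, scenario.sensor_noise))))
    return observed


def discharge_rate(base_veh_per_step: float, scenario: WeatherScenario) -> float:
    """Reduced saturation flow under the scenario (e.g. longer headways on wet roads)."""
    return base_veh_per_step * scenario.capacity_factor


rng = random.Random(42)
for episode in range(3):
    scenario = rng.choice(SCENARIOS)  # domain randomization: new weather each episode
    print(scenario.name, discharge_rate(2.0, scenario), perturb_counts([12, 9, 15], scenario, rng))
```

An agent trained this way must learn to act sensibly when some readings are missing, which is the behaviour the adverse-weather scenarios above are meant to test.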

Moreover, the authors have suggested approaches for improving model resilience, such as enhancing data fusion techniques from multiple sources (e.g., weather forecasts, traffic reports, IoT sensors) and developing adaptive algorithms that can make real-time adjustments in response to unexpected disruptions. These steps could significantly enhance the system's robustness and reliability in practical deployment.
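A simple baseline for fusing flow estimates from several sources is inverse-variance weighting, which gives more weight to the sources that are currently more reliable and ignores missing ones. The sketch assumes independent, unbiased estimates with known variances; in adverse weather, a camera's variance would be raised so that its influence on the fused estimate drops. The source names and numbers are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def inverse_variance_fusion(
        estimates: Sequence[Tuple[Optional[float], float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates; entries whose value is None are skipped.

    Returns the fused value and its variance, 1 / sum(1 / variance_i).
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for value, variance in estimates:
        if value is None:
            continue  # missing reading, e.g. a camera blinded by snow
        weight = 1.0 / variance
        total_weight += weight
        weighted_sum += weight * value
    if total_weight == 0.0:
        raise ValueError("no valid estimates to fuse")
    return weighted_sum / total_weight, 1.0 / total_weight


# Hypothetical flow estimates (vehicles per hour) with assumed error variances.
loop_detector = (840.0, 400.0)
camera_in_heavy_rain = (910.0, 3600.0)  # variance inflated for degraded visibility
probe_vehicles = (870.0, 900.0)
flow, var = inverse_variance_fusion([loop_detector, camera_in_heavy_rain, probe_vehicles])
print(f"fused flow ≈ {flow:.0f} veh/h (std ≈ {var ** 0.5:.0f})")
```

More elaborate schemes, such as Kalman filtering over time, build on the same weighting principle.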

Additionally, the research did not examine the effect of large-scale public events, such as concerts, sports games, or political gatherings, on urban mobility. Such events typically generate sudden and concentrated surges in traffic volume, which may require unique adaptive traffic signal strategies beyond the general optimization framework evaluated in this study. Integrating event-based traffic forecasting and real-time adaptive signal control could enhance the system’s responsiveness in handling temporary yet high-impact congestion scenarios. Addressing these aspects in future research would provide a more comprehensive understanding of AI-driven traffic management in real-world urban environments.