Toward Sustainable Education: A Contextualized Model for Educational Technology Adoption for Developing Countries

Abstract

1. Introduction

1.1. Background

1.2. Current Research Status on Educational Technology Adoption Models for Developing Countries

1.3. Critical Review of Existing Technology Adoption Models

1.4. Research Problem Statement

1.5. Research Questions

1.6. Research Contributions

1.7. Paper Organization

2. Need for an Educational Technology Adoption Model for Developing Countries

2.1. Difference Between Advanced and Developing Countries in the Adoption of Educational Technology

- Investment in Infrastructure and Resources [18]: Advanced countries invest heavily in educational technology, providing modern tools such as computers, projectors, Internet connections, and others, while developing countries often rely on traditional methods due to a lack of infrastructure.

- Teacher Training and Support [18]: Advanced nations have established programs for integrating technology into education, ensuring teachers are trained in using these tools effectively. In contrast, developing countries face foundational challenges that hinder teacher training and technology adoption.

2.2. Challenges Faced by Developing Countries in the Adoption of Educational Technologies

- Limited access to resources [18]: The lack of technical support, electricity, Internet, devices, and financial resources significantly hampers technology adoption, especially in rural areas and emerging economies.

- Lack of training and skills [18]: Teachers in developing countries often receive inadequate training and support, resulting in low technology adoption rates. Many lack the professional readiness to effectively utilize emerging technologies in education.

- Cultural and social factors [23]: These factors heavily influence technology adoption, particularly in mobile learning within Arab Gulf countries, affecting acceptance among students and instructors.

- Resistance to technology [24]: Teachers’ attitudes toward technology create challenges in the classroom. Their willingness to integrate technology depends on perceived benefits versus concerns, complicating adoption efforts.

- Overemphasis on technology and underemphasis on pedagogy: Many programs prioritize acquiring technology over its integration into educational frameworks and pedagogy.

2.3. Difference Between the Proposed Model and Existing Models

3. Construction of the ETADC Model

3.1. Scope of the Search for Base Models Used to Construct the ETADC Model

3.2. The Properties of Base Models

3.3. Identifying Components for the ETADC Model from the Base Models

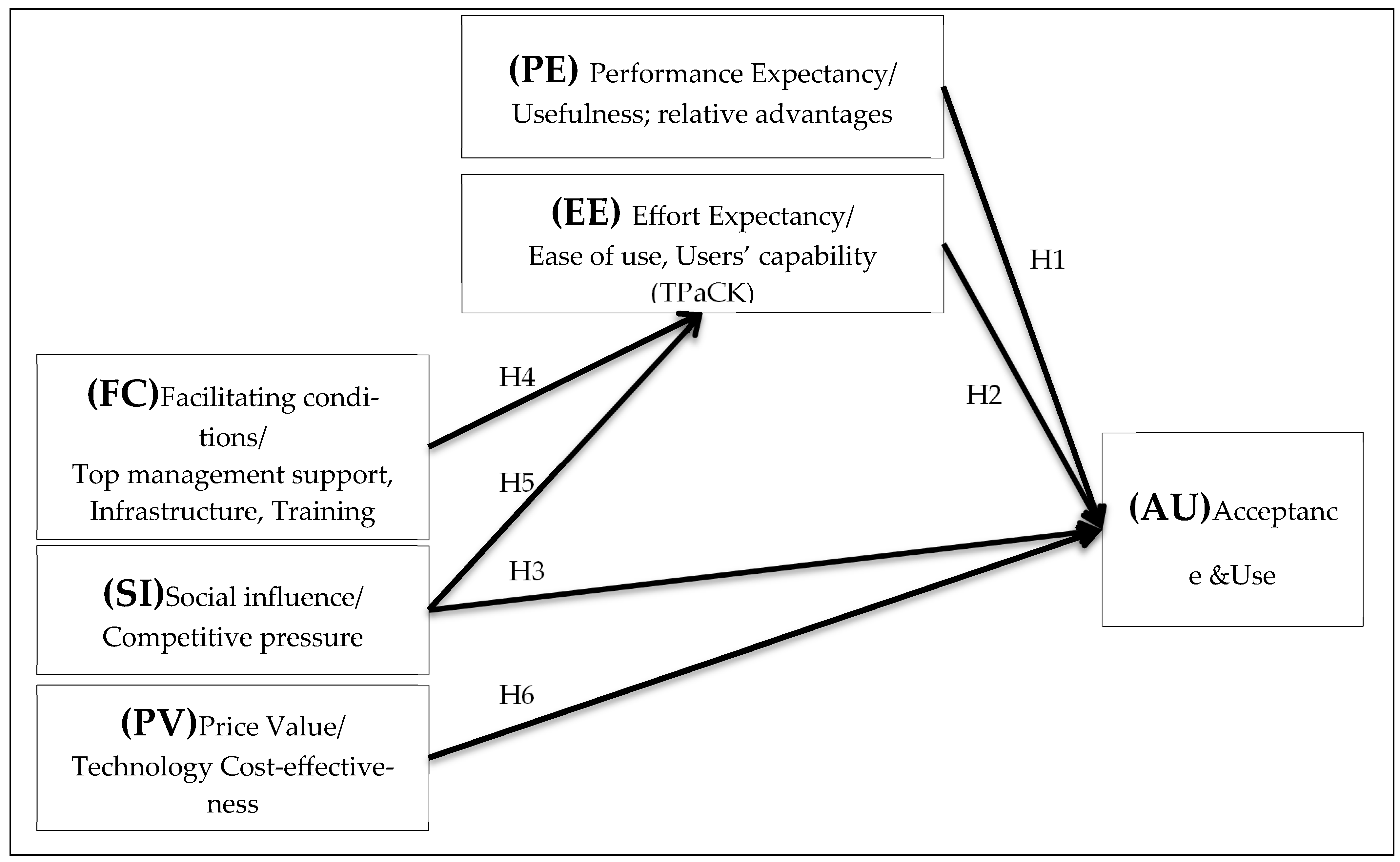

3.3.1. Shared Cause–Effect Links of Dominant Technology Adoption Models and Hypothesis Development

- PE → BIU

- EE → BIU

- SI → BIU
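Taken together, the three shared links above can be written as a single structural equation for behavioral intention to use (BIU). The sketch below uses generic SEM notation; the coefficients β and the disturbance term ζ are illustrative symbols, not estimates reported by the base models, and stating each hypothesis as a positive path is an assumption consistent with how such links are usually formulated.

```latex
\mathrm{BIU} = \beta_{\mathrm{PE}}\,\mathrm{PE} + \beta_{\mathrm{EE}}\,\mathrm{EE} + \beta_{\mathrm{SI}}\,\mathrm{SI} + \zeta,
\qquad
H_k\colon \beta_k > 0, \quad k \in \{\mathrm{PE}, \mathrm{EE}, \mathrm{SI}\}
```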

3.3.2. The Shared Components of Many Educational Technology Adoption Models

- a. Performance Expectancy

- In Malaysian universities, performance expectancy was a factor in the adoption of cloud-based collaborative learning technology [59];

- In Jordan, performance expectancy was found to affect behavioral intentions to use Moodle, an e-learning system [26];

- A study comparing Qatar and the USA found performance expectancy to be a significant predictor of behavioral intention in both samples [60];

- In Nigeria, performance expectancy was determined to be a significant factor influencing the behavioral intention to use Canvas, an educational technology platform [27];

- Research in Pakistan identified system characteristics, which are closely related to performance expectancy, as strong predictors of perceived usefulness in e-learning system adoption [61].

- b. Effort Expectancy

- c. Social Influence

3.3.3. Special Links in the ETADC Model for Considering the Context of Developing Countries’ Education Settings

- a. Facilitating Conditions

- Content knowledge (CK) refers to the specific subject matter that teachers must be well-versed in. This involves a deep understanding of the concepts, inquiries, and skills related to the discipline being taught, whether it be mathematics, science, literature, or any other field.

- Pedagogical knowledge (PK) pertains to the instructional methods and strategies that teachers use to facilitate learning. This includes knowledge about how students learn, understanding various teaching approaches, and the ability to adapt their methods to meet diverse student needs.

- Technological knowledge (TK) involves an understanding of how to use various technologies effectively in educational settings. This includes familiarity with digital tools, applications, and resources that can enhance teaching and learning experiences.

- b. Price Value

3.4. ETADC Model Structure

4. Validation of the Developed Educational Technology Adoption for Developing Countries (ETADC) Model Through Meta-Analytic Structural Equation Modeling (MASEM)

4.1. Selection of Studies for Meta-Analysis and Dataset Preparation

4.2. Data Analysis Using Two-Stage Structural Equation Modeling

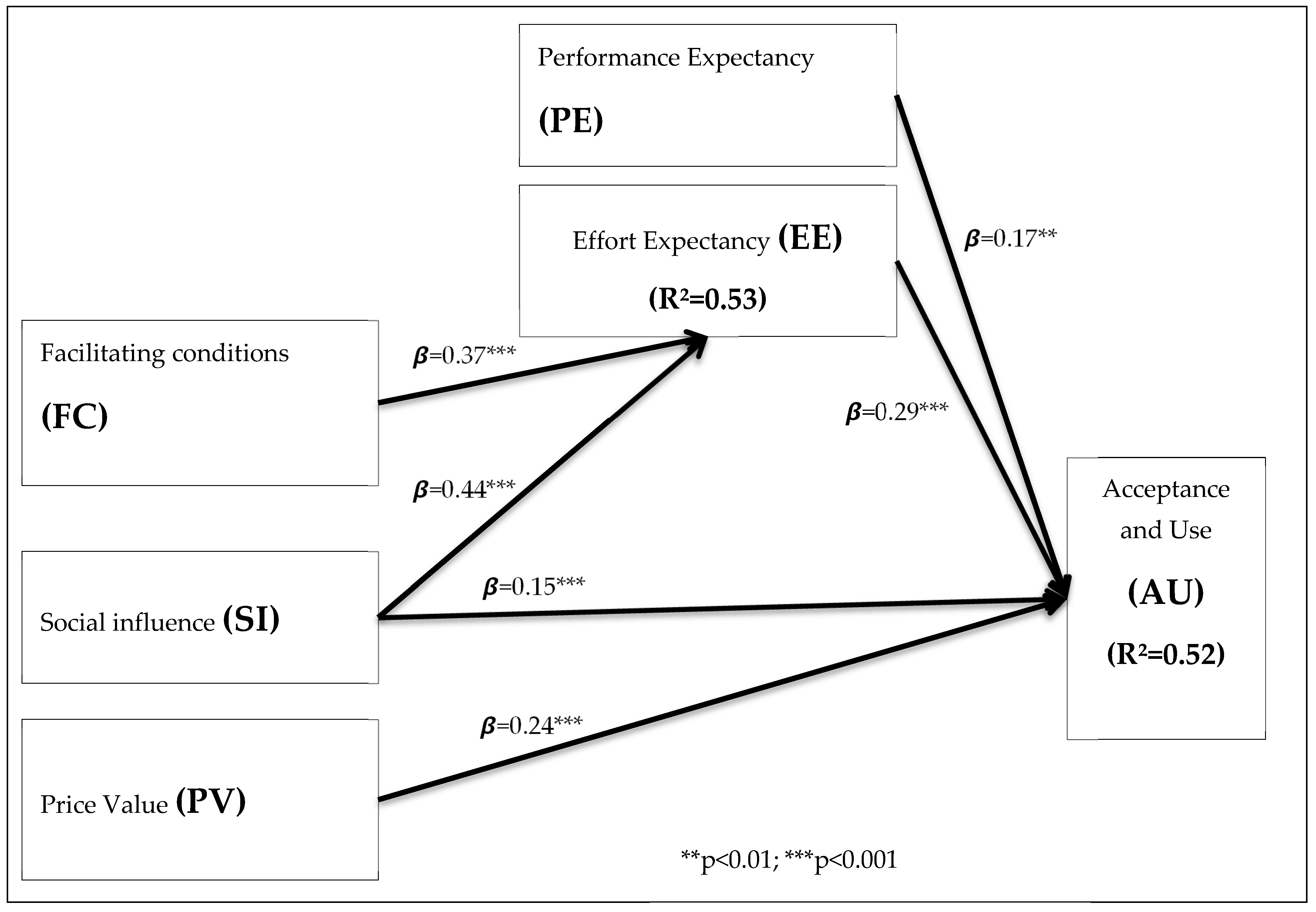

4.3. Interpretation of Results and ETADC Model Validation

- First, check the model fit: evaluate the overall model fit using indices such as RMSEA (root mean square error of approximation), SRMR (standardized root mean squared residual), CFI (comparative fit index), and TLI (Tucker–Lewis index);

- Second, assess the explanatory power of the model (R²);

- Third, assess the path coefficients (β) to verify that their significance and strength align with theoretical expectations.
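Steps 1 and 3 of this procedure are mechanical enough to script. The sketch below is illustrative only: the cutoffs (RMSEA ≤ 0.06, SRMR ≤ 0.08, CFI and TLI ≥ 0.95) are the widely cited Hu and Bentler (1999) heuristics rather than thresholds stated in this paper, α = 0.05 is an assumed significance level, and the input values are copied from the goodness-of-fit and path tables reported later in the paper. Step 2 is left out because it needs the R² values themselves.

```python
# Sketch of steps 1 and 3 of the evaluation procedure described above.
# Assumptions (not stated in the paper): cutoffs follow the widely cited
# Hu & Bentler (1999) heuristics, and paths are judged at alpha = 0.05.
# Step 2 (R^2) is omitted because it needs the R^2 values themselves.

# index name -> (kind of bound, threshold)
FIT_CUTOFFS = {
    "RMSEA": ("max", 0.06),
    "SRMR": ("max", 0.08),
    "CFI": ("min", 0.95),
    "TLI": ("min", 0.95),
}


def check_fit(indices: dict[str, float]) -> dict[str, bool]:
    """Step 1: report whether each fit index meets its cutoff."""
    verdict = {}
    for name, (bound, threshold) in FIT_CUTOFFS.items():
        value = indices[name]
        verdict[name] = value <= threshold if bound == "max" else value >= threshold
    return verdict


def significant_paths(p_values: dict[str, float], alpha: float = 0.05) -> list[str]:
    """Step 3: keep the paths whose p-value is below alpha."""
    return [path for path, p in p_values.items() if p < alpha]


if __name__ == "__main__":
    # Values taken from the goodness-of-fit and path tables reported in this paper.
    reported_fit = {"RMSEA": 0.0387, "SRMR": 0.0476, "CFI": 0.9916, "TLI": 0.9578}
    print(check_fit(reported_fit))
    reported_p = {
        "AU on EE": 3.249e-13,
        "AU on PE": 0.0054917,
        "AU on PV": 1.263e-13,
        "AU on SI": 0.0007019,
    }
    print(significant_paths(reported_p))
```

With the reported values, every index meets its cutoff and all four paths into acceptance and use (AU) are significant at the 0.05 level.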

4.3.1. ETADC Model Fit Assessment

4.3.2. Assessment of the ETADC Model’s Explanatory Power (R²)

4.3.3. Assessment of the ETADC Model’s Path Coefficients (β)

4.3.4. The ETADC Model’s Innovativeness

4.3.5. Practical Application of the ETADC Model

- Performance expectancy (PE): a technological factor, similar to perceived usefulness, that helps identify suitable educational technology by analyzing its features;

- Price value (PV): a technological factor essential when considering technology purchases;

- Facilitating conditions (FC): a factor that depends on the availability of devices, resources, and infrastructure, including top management support and expertise, and is crucial for successful technology adoption through effort expectancy (EE);

- Effort expectancy (EE): a factor that depends on users’ capability and is influenced by FC and SI;

- Social influence (SI): a significant sociocultural factor that affects both technology identification and adoption.
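Facilitating conditions and social influence reach acceptance and use (AU) partly through effort expectancy. A minimal sketch of that arithmetic follows, assuming the estimates in the path table (AU on EE, EE on FC, EE on SI, and so on) are standardized coefficients and applying the ordinary product-of-coefficients rule for indirect effects. It yields point estimates only; it does not test whether the indirect paths are significant.

```python
# Product-of-coefficients sketch for the FC -> EE -> AU and SI -> EE -> AU routes.
# Assumption: the values are the standardized path estimates from the path table.

# Direct paths, keyed as (predictor, outcome).
PATHS = {
    ("EE", "AU"): 0.293149,
    ("PE", "AU"): 0.169997,
    ("PV", "AU"): 0.242560,
    ("SI", "AU"): 0.150857,
    ("FC", "EE"): 0.374236,
    ("SI", "EE"): 0.437334,
}


def indirect_effect(route: list[str]) -> float:
    """Multiply the coefficients along a route such as ["FC", "EE", "AU"]."""
    effect = 1.0
    for source, target in zip(route, route[1:]):
        effect *= PATHS[(source, target)]
    return effect


def total_effect(predictor: str, outcome: str, mediator: str) -> float:
    """Direct effect (0 if the model has no direct path) plus one mediated route."""
    direct = PATHS.get((predictor, outcome), 0.0)
    return direct + indirect_effect([predictor, mediator, outcome])


if __name__ == "__main__":
    print(f"FC -> EE -> AU indirect effect: {indirect_effect(['FC', 'EE', 'AU']):.4f}")
    print(f"SI total effect on AU: {total_effect('SI', 'AU', 'EE'):.4f}")
```

With the reported estimates, the FC → EE → AU indirect effect is roughly 0.11, and the total effect of SI on AU (about 0.28) is nearly twice its direct effect (about 0.15).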

- 5 stars if the technology is designed for educational purposes;

- 4 stars if the technology is developed as a tool that can be applied for educational purposes;

- 3 stars if the technology is designed for business purposes and can be applied to education;

- 2 stars if the technology is designed for entertainment but can be applied for educational purposes;

- 1 star if the technology is designed for lifestyle or other purposes but can be applied for educational purposes.

- 5 stars if the educational technology can offer five or more features;

- 4 stars if the educational technology can offer at least four features;

- 3 stars if the educational technology can offer at least three features;

- 2 stars if the educational technology can offer at least two features;

- 1 star if the educational technology can offer at least one feature.
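The first two criteria, design purpose and number of features, reduce to a lookup and a capped count. A minimal sketch follows; the enum member names are hypothetical labels for the five purpose categories listed above, and scoring a technology with no relevant feature as 0 stars is an assumption, since the rubric only starts at one feature.

```python
from enum import Enum


class DesignPurpose(Enum):
    """Original design purpose; member names are illustrative, not from the paper."""
    EDUCATION = 5        # designed for educational purposes
    GENERAL_TOOL = 4     # developed as a tool that can be applied to education
    BUSINESS = 3         # designed for business, applicable to education
    ENTERTAINMENT = 2    # designed for entertainment, applicable to education
    LIFESTYLE_OTHER = 1  # lifestyle or other purposes, applicable to education


def purpose_stars(purpose: DesignPurpose) -> int:
    """Stars for the design-purpose criterion (the enum value is the score)."""
    return purpose.value


def feature_stars(feature_count: int) -> int:
    """Stars for the feature criterion: one star per feature, capped at five.

    Assumption: zero relevant features scores 0, since the rubric starts at one.
    """
    if feature_count < 0:
        raise ValueError("feature_count must be non-negative")
    return min(feature_count, 5)


if __name__ == "__main__":
    print(purpose_stars(DesignPurpose.BUSINESS), feature_stars(7))
```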

- 5 stars if the educational technology is available free of charge (USD 0 per item);

- 4 stars if the educational technology costs USD 1–20 per item;

- 3 stars if the educational technology costs USD 20–50 per item;

- 2 stars if the educational technology costs USD 50–100 per item;

- 1 star if the educational technology costs USD 100 or more per item.
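The price bands above share their endpoints (USD 20, 50, and 100 each appear in two bands) and leave prices between USD 0 and USD 1 unassigned. The sketch below resolves this under one stated assumption: each band is half-open [lower, upper), and any non-zero price under USD 20, including sub-dollar prices, earns four stars. Other readings are equally defensible.

```python
def price_stars(price_usd: float) -> int:
    """Stars for the price-value criterion (price per item, in USD).

    Assumption: shared endpoints are resolved with half-open bands
    [lower, upper), and any non-zero price below USD 20 gets four stars.
    """
    if price_usd < 0:
        raise ValueError("price must be non-negative")
    if price_usd == 0:
        return 5  # free
    if price_usd < 20:
        return 4
    if price_usd < 50:
        return 3
    if price_usd < 100:
        return 2
    return 1  # USD 100 or more


if __name__ == "__main__":
    for price in (0, 0.99, 20, 75, 100):
        print(price, price_stars(price))
```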

- 5 stars if the educational technology is available for five or more types of devices;

- 4 stars if the educational technology is available for four types of devices;

- 3 stars if the educational technology is available for three types of devices;

- 2 stars if the educational technology is available for two types of devices;

- 1 star if the educational technology is available for one type of device.

- 5 stars if the download size of the educational technology is below 50 MB;

- 4 stars if the download size of the educational technology is between 50 MB and 100 MB;

- 3 stars if the download size of the educational technology is between 100 MB and 150 MB;

- 2 stars if the download size of the educational technology is between 150 MB and 199 MB;

- 1 star if the download size of the educational technology is 200 MB or above.
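Device availability follows the same capped-count pattern as features, while download size uses descending bands. This sketch assumes half-open bands and treats the 150–199 MB band as running up to, but not including, 200 MB, so that sizes such as 199.5 MB are not left unscored; scoring zero supported device types as 0 stars is also an assumption.

```python
def device_stars(device_type_count: int) -> int:
    """Stars for device availability: one per supported device type, max five.

    Assumption: zero supported device types scores 0.
    """
    if device_type_count < 0:
        raise ValueError("device_type_count must be non-negative")
    return min(device_type_count, 5)


def download_size_stars(size_mb: float) -> int:
    """Stars for download size in MB; smaller downloads score higher.

    Assumption: bands are half-open [lower, upper), and the 150-199 MB band
    is extended to just below 200 MB so that no size is unscored.
    """
    if size_mb < 0:
        raise ValueError("size_mb must be non-negative")
    for upper, stars in ((50, 5), (100, 4), (150, 3), (200, 2)):
        if size_mb < upper:
            return stars
    return 1  # 200 MB or above


if __name__ == "__main__":
    print(device_stars(3), download_size_stars(120))
```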

- 5 stars if the educational technology has above 100 M downloads;

- 4 stars if the educational technology has 10 M+ to 100 M downloads;

- 3 stars if the educational technology has 100 K+ to 10 M downloads;

- 2 stars if the educational technology has 50 K to 100 K downloads;

- 1 star if the educational technology has below 50 K downloads.
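The download-count bands mix "N+" labels with plain ranges. The sketch below assumes "N+" means "at least N", as in app-store download labels, that "above 100 M" is strict (so exactly 100 M downloads earns four stars), and that a shared 100 K endpoint belongs to the higher band.

```python
def download_count_stars(downloads: int) -> int:
    """Stars for the number of downloads, reading "N+" as "at least N"."""
    if downloads < 0:
        raise ValueError("downloads must be non-negative")
    if downloads > 100_000_000:  # above 100 M (strict)
        return 5
    if downloads >= 10_000_000:  # 10 M+ to 100 M
        return 4
    if downloads >= 100_000:  # 100 K+ to 10 M
        return 3
    if downloads >= 50_000:  # 50 K to 100 K
        return 2
    return 1  # below 50 K


if __name__ == "__main__":
    print(download_count_stars(2_500_000))
```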

- 5 stars if the educational technology has reached the plateau of productivity (the technology becomes widely accepted and integrated into regular use);

- 4 stars if the educational technology has reached the slope of enlightenment (gradual understanding and practical applications of the technology begin to crystallize as more success stories emerge);

- 3 stars if the educational technology has reached the trough of disillusionment (realization of the technology’s limitations, leading to disappointment and reduced interest);

- 2 stars if the educational technology has reached the peak of inflated expectations (high expectations are fueled by hype and speculative success stories);

- 1 star if the educational technology is still at the technology trigger stage (the initial emergence of the technology, generating interest and media buzz).

- 5 stars if teachers have approved the educational technology;

- 0 stars if teachers have not yet approved the educational technology.
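The maturity criterion maps the five phases of the Gartner hype cycle onto stars, and teacher approval is all-or-nothing (5 or 0 stars). In this sketch an `IntEnum` keeps the phase order and its score in one place; the member names are illustrative.

```python
from enum import IntEnum


class HypePhase(IntEnum):
    """Hype-cycle phase of a technology; the integer value is the star score."""
    TECHNOLOGY_TRIGGER = 1
    PEAK_OF_INFLATED_EXPECTATIONS = 2
    TROUGH_OF_DISILLUSIONMENT = 3
    SLOPE_OF_ENLIGHTENMENT = 4
    PLATEAU_OF_PRODUCTIVITY = 5


def maturity_stars(phase: HypePhase) -> int:
    """Stars for technology maturity: later phases score higher."""
    return int(phase)


def teacher_approval_stars(approved: bool) -> int:
    """All-or-nothing criterion: 5 stars if teachers approved it, otherwise 0."""
    return 5 if approved else 0


if __name__ == "__main__":
    print(maturity_stars(HypePhase.SLOPE_OF_ENLIGHTENMENT), teacher_approval_stars(False))
```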

- 5 stars if the educational technology has above 1 M reviews;

- 4 stars if the educational technology has 100 K+ to 1 M reviews;

- 3 stars if the educational technology has 50 K to 100 K reviews;

- 2 stars if the educational technology has 10 K to 50 K reviews;

- 1 star if the educational technology has below 10 K reviews.

- 5 stars if the educational technology is rated 4.5 stars and above;

- 4 stars if the educational technology is rated 3.5 to 4.4 stars;

- 3 stars if the educational technology is rated 2.5 to 3.4 stars;

- 2 stars if the educational technology is rated 1.5 to 2.4 stars;

- 1 star if the educational technology is rated 1.4 stars and below.
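The last two criteria, review count and average user rating, follow the same banded pattern. The criteria above do not specify how per-criterion stars are combined, so `overall_score` below is a hypothetical unweighted mean, not the authors' aggregation rule. The review bands are read with "100 K+" as "at least 100 K", "above 1 M" as strict, and the shared 50 K endpoint assigned to the higher band; rating cut points are taken at 4.5, 3.5, 2.5, and 1.5, so an unrounded 4.45 earns four stars.

```python
from statistics import mean


def review_count_stars(reviews: int) -> int:
    """Stars for the number of user reviews (band boundaries are assumptions)."""
    if reviews < 0:
        raise ValueError("reviews must be non-negative")
    if reviews > 1_000_000:
        return 5
    if reviews >= 100_000:
        return 4
    if reviews >= 50_000:
        return 3
    if reviews >= 10_000:
        return 2
    return 1


def store_rating_stars(rating: float) -> int:
    """Stars for the average user rating on a 0-5 scale, using cut points."""
    for cut, stars in ((4.5, 5), (3.5, 4), (2.5, 3), (1.5, 2)):
        if rating >= cut:
            return stars
    return 1


def overall_score(criterion_stars: dict[str, int]) -> float:
    """Hypothetical aggregation: unweighted mean of the per-criterion stars."""
    if not criterion_stars:
        raise ValueError("at least one criterion is required")
    return mean(criterion_stars.values())


if __name__ == "__main__":
    # Hypothetical ratings for one candidate technology.
    example = {
        "purpose": 5, "features": 4, "price": 5, "devices": 3, "download_size": 4,
        "downloads": 4, "maturity": 4, "teacher_approval": 5,
        "reviews": review_count_stars(75_000), "rating": store_rating_stars(4.2),
    }
    print(round(overall_score(example), 2))
```

A weighted mean, or a rule that rejects any technology lacking teacher approval, would be equally plausible readings of the rubric.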

5. Research Implications and Conclusions

5.1. Theoretical Contributions: Model Superiority

- It was specifically developed for the unique challenges of developing countries, whereas most primary models focus on advanced countries, making them less effective in this context;

- The ETADC model is tailored for education and validated with data exclusively from the education sector, unlike previous models that were adapted from other fields;

- Unlike primary models that target specific technologies, the ETADC model addresses educational technology adoption in general;

- It uses a large pooled sample (N = 8934) drawn from studies in various countries, enhancing its validity, while primary models often rely on small, localized samples;

- The ETADC model considers a crucial pedagogical variable: TPACK articulates the essential knowledge that educators must possess to effectively integrate technology into their teaching practices;

- The ETADC model considers crucial variables such as cost-effectiveness, customization, alignment with academic goals, and the unique cultural, infrastructural, and economic factors in developing countries.

5.2. Practical Implications

- Performance Expectancy: institutions should meticulously assess a technology’s features and its relevance to curriculum goals to ensure it enhances teaching–learning outcomes before adoption.

- Facilitating conditions and effort expectancy: successful technology adoption requires strong organizational support, adequate resources, training, and teachers who can effectively integrate technology into their teaching practices.

- Price value: the benefits of adopting a new technology must outweigh its costs; otherwise, it is not worth the investment.

- Effort expectancy: through TPACK, the model aims to articulate the essential knowledge that educators must possess to effectively integrate technology into their teaching practices.

- Social influence: developing countries can enhance their educational standards by learning from successful technology integration in advanced countries, such as China’s community-based professional development strategies.

- Selecting suitable technologies;

- Purchasing software that satisfies the requirements;

- Piloting, trialing, and testing a technology to be adopted;

- Conducting regular partnership meetings to monitor and evaluate the adoption, integration, and effects of the technology.

5.3. Conclusions

- (1) It delineates the foundational framework of the ETADC model, highlighting its components and how they relate to the specific needs of educational institutions in developing countries;

- (2) This research rigorously validates the effectiveness and generalizability of the ETADC model, ensuring that it holds across various contexts and can be reliably applied to different educational settings;

- (3) The study outlines a detailed list of requirements and actionable steps necessary for the successful adoption of technology in these educational environments, guiding stakeholders involved in the decision-making process.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition | Abbreviation | Definition |

|---|---|---|---|

| % | Percent | JR | Job Relevance |

| A | Anxiety | MASEM | Meta-analytic Structural Equation Modeling |

| AG | Age | MSE | Mobile Self-Efficacy |

| AI | Artificial Intelligence | N | Number of studies |

| AN | Anthropomorphism | OA | Operational ability |

| AT | Attitude Toward Using | OPQ | Output Quality |

| AT | Attitude | OU | Objective Usability |

| AU | Acceptance and Use | PA | Perceived Autonomy |

| AW | Awareness | PCR | Perceived cyber risk |

| BIA | Behavioral Intention to Adopt | PE | Performance Expectancy |

| BIU | Behavioral Intention | PEC | Perception of external control |

| BIU | Behavioral Intention to Use | PENJ | Perceived Enjoyment |

| CA | Computer Anxiety | PEU | Perceived Ease of Use |

| CBAM | Concerns-Based Adoption Model | PI | Personal Innovativeness |

| CFI | Comparative Fit Index | PL | Playfulness |

| CM | Complexity | PR | Perceived risk |

| CP | Compatibility | PRA | Perceived relative advantage |

| CPF | Computer Playfulness | PT | Perceived Trust |

| CS | Cyber Security | PU | Perceived usefulness |

| CSE | Computer self-efficacy | PV | Price Value |

| DCs | Developing Countries | R² | Coefficient of determination |

| DF | Degrees of freedom | RA | Relative advantage |

| DOI | Diffusion of Innovations Theory | RD | Results Demonstrability |

| EdTech | Educational Technology | RMSEA | Root Mean Square Error of Approximation |

| EE | Effort Expectancy | SA | Satisfaction |

| EF | Efficiency | SE | Self-Efficacy |

| ETADC | Educational Technology Adoption for Developing Countries | SEM | Structural Equation Modeling |

| EX | Experience | SI | Social influence |

| FC | Facilitating Conditions | SIS | Social Isolation |

| GD | Gender | SN | Subjective Norms |

| GOFI | Goodness-of-fit indices | SRMR | Standardized Root Mean Squared Residual |

| H | Hypothesis | ST | Stress |

| H | Habit | TAM | Technology Acceptance Model |

| HEIs | Higher education institutions | TC | Technology challenges |

| HM | Hedonic Motivation | TLI | Tucker–Lewis index |

| ICT | Information and Communication Technologies | TOE | Technology–Organization–Environment |

| IM | Image | TPACK | Technological Pedagogical Content Knowledge |

| IMGN | Imagination | TR | Trust |

| IMRN | Immersion | TSSEM | Two-Stage Structural Equation Modeling |

| INTR | Interaction | TTF | Task–Technology Fit |

| IoT | Internet of Things | UB | Use Behavior |

| IU | Intention to Use a Technology | UTAUT | Unified Theory of Acceptance and Use of Technology |

| IU | The Intention of Use | WTU | Willingness to Use |

| IV | Intrinsic Value | β | Path coefficients |

Appendix A

| Number of Studies | Theories | Major Factors Impacting Technology Adoption | References | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Technology-Related Factors | Context-Related Factors | Individual-Related Factors | |||||||||

| Interface Quality: Ease of Use | Functionality: Perceived Technology Fit | Technology Accessibility (Price Value) | Usefulness, Performance Expectancy | Top Management Support, Facilitating Conditions, Infrastructure | Academic Expertise, Training, Habit | Competitive Pressure, Hedonic Motivation (Social Influence), Curiosity, Cultural Influence | Effort Expectancy: Capabilities Perceived Self-Efficacy | Users’ Satisfaction, Positive Attitude Toward Technology | |||

| 1 | TAM | √ | √ | √ | √ | [88] | |||||

| 2 | TAM | √ | √ | √ | √ | √ | [89] | ||||

| 3 | TAM | √ | √ | √ | [55] | ||||||

| 4 | TAM | √ | √ | √ | √ | √ | [39] | ||||

| 5 | TAM | √ | √ | √ | √ | [54] | |||||

| 6 | TAM | √ | √ | √ | √ | √ | √ | √ | [79] | ||

| 7 | TAM | √ | √ | [90] | |||||||

| 8 | TAM | √ | √ | √ | √ | √ | [80] | ||||

| 9 | TAM | √ | √ | √ | √ | √ | [40] | ||||

| 10 | TAM | √ | √ | √ | √ | [46] | |||||

| 11 | TAM | √ | √ | [52] | |||||||

| 12 | TAM | √ | √ | [74] | |||||||

| 13 | TAM | √ | √ | [91] | |||||||

| 14 | TAM | √ | √ | √ | √ | [92] | |||||

| 15 | TAM | √ | √ | √ | [93] | ||||||

| 16 | TAM | √ | √ | √ | [58] | ||||||

| 17 | TAM | √ | √ | √ | √ | √ | √ | [75] | |||

| 18 | TAM | √ | √ | √ | √ | √ | √ | √ | √ | [49] | |

| 19 | TAM | √ | √ | [94] | |||||||

| 20 | TAM | √ | √ | √ | √ | [95] | |||||

| 21 | TAM | √ | √ | √ | √ | √ | √ | √ | [96] | ||

| 22 | TAM | √ | √ | √ | [78] | ||||||

| 23 | TAM | √ | √ | √ | √ | √ | √ | √ | [97] | ||

| 24 | TAM | √ | √ | √ | √ | [98] | |||||

| 25 | TAM | √ | √ | √ | √ | [99] | |||||

| 26 | TAM | √ | √ | √ | [100] | ||||||

| 27 | TAM | √ | √ | √ | √ | [101] | |||||

| 28 | TAM | √ | √ | √ | √ | [102] | |||||

| 29 | TAM | √ | √ | √ | [103] | ||||||

| 30 | TAM | √ | √ | [104] | |||||||

| 31 | TAM | √ | √ | [105] | |||||||

| 32 | TAM | √ | √ | √ | √ | √ | √ | √ | √ | [106] | |

| 33 | TAM | √ | √ | √ | √ | [107] | |||||

| 34 | UTAUT | √ | √ | √ | √ | √ | √ | √ | √ | [108] | |

| 35 | UTAUT | √ | √ | √ | [48] | ||||||

| 36 | UTAUT | √ | √ | √ | √ | √ | √ | √ | [109] | ||

| 37 | UTAUT | √ | √ | √ | [37] | ||||||

| 38 | UTAUT | √ | √ | [38] | |||||||

| 39 | UTAUT | √ | √ | √ | √ | √ | [110] | ||||

| 40 | UTAUT | √ | √ | √ | [51] | ||||||

| 41 | UTAUT | √ | √ | √ | √ | [77] | |||||

| 42 | UTAUT | √ | √ | √ | √ | √ | [81] | ||||

| 43 | UTAUT | √ | √ | √ | √ | √ | √ | √ | [111] | ||

| 44 | UTAUT | √ | √ | √ | √ | [44] | |||||

| 45 | UTAUT | √ | √ | √ | √ | √ | [76] | ||||

| 46 | UTAUT | √ | √ | √ | [47] | ||||||

| 47 | UTAUT | √ | √ | √ | √ | √ | √ | [26] | |||

| 48 | UTAUT | √ | √ | √ | [112] | ||||||

| 49 | UTAUT | √ | √ | √ | [113] | ||||||

| 50 | UTAUT | √ | √ | √ | √ | √ | [56] | ||||

| 51 | UTAUT | √ | √ | √ | [114] | ||||||

| 52 | UTAUT | √ | √ | √ | [115] | ||||||

| 53 | UTAUT | √ | √ | [116] | |||||||

| 54 | UTAUT | √ | √ | √ | √ | √ | [117] | ||||

| 55 | UTAUT | √ | √ | √ | √ | √ | √ | √ | [70] | ||

| 56 | UTAUT | √ | √ | √ | √ | [43] | |||||

| 57 | UTAUT | √ | √ | √ | √ | √ | √ | [57] | |||

| 58 | UTAUT | √ | √ | √ | √ | [118] | |||||

| 59 | UTAUT | √ | √ | [119] | |||||||

| 60 | UTAUT | √ | √ | √ | √ | √ | [50] | ||||

| 61 | TAM & UTAUT | √ | √ | √ | [41] | ||||||

| 62 | TAM & UTAUT | √ | √ | [53] | |||||||

| 63 | TAM & DOI | √ | √ | √ | √ | [120] | |||||

| 64 | DOI | √ | √ | √ | √ | [45] | |||||

| 65 | NA | √ | √ | √ | [121] | ||||||

| 66 | NA | √ | √ | √ | √ | √ | √ | √ | √ | [122] | |

| 67 | NA | √ | √ | √ | [123] | ||||||

| 68 | NA | √ | √ | √ | [124] | ||||||

| 69 | NA | √ | √ | [125] | |||||||

| 70 | NA | √ | [126] | ||||||||

| Total | 33 | 41 | 9 | 55 | 28 | 19 | 33 | 29 | 40 | ||

| Path | Estimate | Std. Error | Lower Bound (95% CI) | Upper Bound (95% CI) | z Value | Pr(>\|z\|) |
|---|---|---|---|---|---|---|
| AU on EE | 0.293149 | 0.040248 | 0.214265 | 0.372033 | 7.2837 | 3.249 × 10⁻¹³ *** |
| AU on PE | 0.169997 | 0.061223 | 0.050002 | 0.289992 | 2.7767 | 0.0054917 ** |
| AU on PV | 0.242560 | 0.032734 | 0.178403 | 0.306717 | 7.4101 | 1.263 × 10⁻¹³ *** |
| AU on SI | 0.150857 | 0.044516 | 0.063607 | 0.238107 | 3.3888 | 0.0007019 *** |
| EE on FC | 0.374236 | 0.035246 | 0.305156 | 0.443316 | 10.6180 | < 2.2 × 10⁻¹⁶ *** |
| EE on SI | 0.437334 | 0.042322 | 0.354385 | 0.520283 | 10.3336 | < 2.2 × 10⁻¹⁶ *** |
| PE with FC | 0.618127 | 0.021354 | 0.576273 | 0.659981 | 28.9461 | < 2.2 × 10⁻¹⁶ *** |
| PV with FC | 0.629135 | 0.022312 | 0.585404 | 0.672866 | 28.1970 | < 2.2 × 10⁻¹⁶ *** |
| PE with PV | 0.543783 | 0.027304 | 0.490268 | 0.597299 | 19.9156 | < 2.2 × 10⁻¹⁶ *** |
| SI with PV | 0.540050 | 0.020028 | 0.500796 | 0.579304 | 26.9647 | < 2.2 × 10⁻¹⁶ *** |
| SI with FC | 0.425293 | 0.026239 | 0.373867 | 0.476720 | 16.2087 | < 2.2 × 10⁻¹⁶ *** |
| PE with SI | 0.574790 | 0.018721 | 0.538098 | 0.611482 | 30.7036 | < 2.2 × 10⁻¹⁶ *** |
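The columns of the path table are linked by Wald arithmetic: the z value is the estimate divided by its standard error, and the bounds equal estimate ± 1.96 × SE, that is, a 95% interval. The short sketch below reproduces one row and inverts an interval to recover a standard error from its bounds. It uses only the Python standard library and is a consistency check, not the authors' estimation code.

```python
import math
from statistics import NormalDist


def wald_summary(estimate: float, std_error: float, level: float = 0.95) -> dict[str, float]:
    """z statistic, two-sided p-value, and Wald confidence bounds for one path."""
    z = estimate / std_error
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level = 0.95
    return {
        "z": z,
        "p": p_two_sided,
        "lower": estimate - crit * std_error,
        "upper": estimate + crit * std_error,
    }


def se_from_interval(lower: float, upper: float, level: float = 0.95) -> float:
    """Invert a symmetric Wald interval to recover the standard error."""
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    return (upper - lower) / (2 * crit)


if __name__ == "__main__":
    print(wald_summary(0.293149, 0.040248))  # "AU on EE" row
    print(round(se_from_interval(0.178403, 0.306717), 6))  # "AU on PV" bounds
```

For the AU on PV row, the bounds imply a standard error of about 0.032734, which in turn reproduces the reported z value of about 7.41.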

| Goodness-of-Fit Indices: | Value |

|---|---|

| Sample size | 8934.0000 |

| Chi-square of the target model | 43.2121 |

| DF of the target model | 3.0000 |

| p-value of the target model | 0.0000 |

| Number of constraints imposed on “Smatrix” | 0.0000 |

| Manually adjusted DF | 0.0000 |

| Chi-square of the independence model | 4776.8168 |

| DF of the independence model | 15.0000 |

| RMSEA | 0.0387 |

| RMSEA, lower 95% CI | 0.0290 |

| RMSEA, upper 95% CI | 0.0494 |

| SRMR | 0.0476 |

| TLI | 0.9578 |

| CFI | 0.9916 |

| AIC | 37.2121 |

| BIC | 15.9192 |
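The indices in the goodness-of-fit table can be recomputed from the chi-square statistics, degrees of freedom, and sample size it reports. The sketch below uses the standard formulas for RMSEA (with N − 1 in the denominator), CFI, and TLI; the AIC and BIC rows appear to use the chi-square-based variants χ² − 2·df and χ² − df·ln N, which reproduce the reported values. These are reconstructions, not output from the authors' software.

```python
import math


def fit_indices(chi2: float, df: int, chi2_null: float, df_null: int, n: int) -> dict[str, float]:
    """Chi-square-based fit indices for a target model vs. the independence model."""
    excess = max(chi2 - df, 0.0)  # misfit beyond what the df alone would imply
    excess_null = max(chi2_null - df_null, 0.0)
    rmsea = math.sqrt(excess / (df * (n - 1)))
    denom = max(excess_null, excess)
    cfi = 1.0 if denom == 0 else 1 - excess / denom
    ratio_null = chi2_null / df_null
    tli = (ratio_null - chi2 / df) / (ratio_null - 1)
    return {
        "RMSEA": rmsea,
        "CFI": cfi,
        "TLI": tli,
        "AIC": chi2 - 2 * df,  # chi-square-based AIC variant
        "BIC": chi2 - df * math.log(n),  # chi-square-based BIC variant
    }


if __name__ == "__main__":
    # Inputs copied from the goodness-of-fit table above.
    result = fit_indices(chi2=43.2121, df=3, chi2_null=4776.8168, df_null=15, n=8934)
    for name, value in result.items():
        print(f"{name}: {value:.4f}")
```

Run as is, it prints RMSEA 0.0387, CFI 0.9916, TLI 0.9578, AIC 37.2121, and BIC 15.9192, matching the table. SRMR cannot be recovered this way because it requires the residual correlation matrix.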

References

- Bozkurt, A. Educational Technology Research Patterns in the Realm of the Digital Knowledge Age. J. Interact. Media Educ. 2020, 2020, 18.

- Burch, P.; Miglani, N. Technocentrism and social fields in the Indian EdTech movement: Formation, reproduction and resistance. J. Educ. Policy 2018, 33, 590–616.

- Buabeng-Andoh, C. Exploring University students’ intention to use mobile learning: A research model approach. Educ. Inf. Technol. 2021, 26, 241–256.

- Almaiah, D.; Alismaiel, O. Examination of factors influencing the use of mobile learning system: An empirical study. Educ. Inf. Technol. 2019, 23, 885–909.

- Silva, A.; Garzón, D. Identifying the Cognitive and Digital Gap in Educational Institutions Using a Technology Characterization Software. Int. J. Virtual Pers. Learn. Environ. 2023, 13, 1–12.

- Madni, H.; Ali, J.; Husnain, H.; Masum, M.H.; Mustafa, S.; Shuja, J.; Maray, M.; Hosseini, S. Factors Influencing the Adoption of IoT for E-Learning in Higher Educational Institutes in Developing Countries. Front. Psychol. 2022, 13, 915596.

- Saleh, S.; Nat, M.; Aqel, M. Sustainable Adoption of E-Learning from the TAM Perspective. Sustainability 2022, 14, 3690.

- Ali, J.; Madni, H.; Jahangeer, M.; Danish, M. IoT Adoption Model for E-Learning in Higher Education Institutes: A Case Study in Saudi Arabia. Sustainability 2023, 15, 9748.

- Ullah, N.; Al-Rahmi, W.; Alzahrani, A.; Alfarraj, O.; Alblehai, F. Blockchain Technology Adoption in Smart Learning Environments. Sustainability 2021, 13, 1801.

- Yip, K.; Lo, P.; Ho, K.; Chiu, D. Adoption of mobile library apps as learning tools in higher education: A tale between Hong Kong and Japan. Online Inf. Rev. 2020, 45, 389–405.

- Teo, T.; Doleck, T.; Bazelais, P.; Lemay, D. Exploring the drivers of technology acceptance: A study of Nepali school students. Educ. Technol. Res. Dev. 2019, 67, 495–517.

- Binaymin, S.; Rutter, M.; Smith, S. Extending the Technology Acceptance Model to Understand Students’ Use of Learning Management Systems in Saudi Higher Education. Int. J. Emerg. Technol. Learn. (iJET) 2019, 14, 4–21.

- Miller, J.; Khera, O. Digital Library Adoption and the Technology Acceptance Model: A Cross-Country Analysis. Electron. J. Inf. Syst. Dev. Ctries. 2010, 40, 1–19.

- Tarhini, A.; Hone, K.; Liu, X.; Tarhini, T. Examining the moderating effect of individual-level cultural values on users’ acceptance of E-learning in developing countries: A structural equation modeling of an extended technology acceptance model. Interact. Learn. Environ. 2016, 25, 306–328.

- Alshihi, H.; Sharma, S.; Sarrab, M. Neural network approach to predict mobile learning acceptance. Educ. Inf. Technol. 2018, 23, 1805–1824.

- Batucan, G.; Gonzales, G.; Balbuena, M.; Pasaol, K.R.; Seno, D.; Gonzales, R. An Extended UTAUT Model to Explain Factors Affecting Online Learning System Amidst COVID-19 Pandemic: The Case of a Developing Economy. Front. Artif. Intell. 2022, 5, 768831.

- Venkatesh, V.; Zhang, X. Unified Theory of Acceptance and Use of Technology: U.S. Vs. China. J. Glob. Inf. Technol. Manag. 2010, 13, 5–27.

- Hennessy, S.; D’Angelo, S.; McIntyre, N.; Koomar, S.; Kreimeia, A.; Cao, L.; Brugha, M.; Zubairi, A. Technology Use for Teacher Professional Development in Low- and Middle-Income Countries: A systematic review. Comput. Educ. Open 2022, 3, 100080.

- Alowayr, A.; Al-Azawei, A. Predicting mobile learning acceptance: An integrated model and empirical study based on higher education students’ perceptions. Australas. J. Educ. Technol. 2021, 37, 38–55.

- Orozco-Messana, J.; Martínez-Rubio, J.; Gonzálvez-Pons, A. Sustainable Higher Education Development through Technology Enhanced Learning. Sustainability 2020, 12, 3600.

- Walsh, P.P.; Murphy, E.; Horan, D. The role of science, technology and innovation in the UN 2030 agenda. Technol. Forecast. Soc. Change 2020, 154, 119957.

- Adenle, A.; Steur, H.; Mwongera, C.; Rola-Rubzen, M.; Barcellos, M.; Font Vivanco, D.; Timilsina, G.; Possas, C.; Alders, R.; Chertow, M.; et al. Correction: Global UN 2030 agenda: How can Science, Technology and Innovation accelerate the achievement of Sustainable Development Goals for All? PLOS Sustain. Transform. 2024, 3, e0000100.

- Alsswey, A.; Al-Samarraie, H.; El-Qirem, F.A.; Zaqout, F. M-learning technology in Arab Gulf countries: A systematic review of progress and recommendations. Educ. Inf. Technol. 2020, 25, 2919–2931.

- Bice, H.; Tang, H. Teachers’ beliefs and practices of technology integration at a school for students with dyslexia: A mixed methods study. Educ. Inf. Technol. 2022, 27, 10179–10205.

- Okai-Ugbaje, S.; Ardzejewska, K.; Imran, A. A mobile learning framework for higher education in resource constrained environments. Educ. Inf. Technol. 2022, 27, 11947–11969.

- Abbad, M. Using the UTAUT model to understand students’ usage of e-learning systems in developing countries. Educ. Inf. Technol. 2021, 26, 7205–7224.

- Yakubu, N.; Dasuki, S. Factors affecting the adoption of e-learning technologies among higher education students in Nigeria: A structural equation modelling approach. Inf. Dev. 2018, 35, 026666691876590.

- Alyoussef, I. Factors Influencing Students’ Acceptance of M-Learning in Higher Education: An Application and Extension of the UTAUT Model. Electronics 2021, 10, 3171.

- Teng, Z.; Cai, Y.; Gao, Y.; Zhang, X.; Xinlong, L. Factors Affecting Learners’ Adoption of an Educational Metaverse Platform: An Empirical Study Based on an Extended UTAUT Model. Mob. Inf. Syst. 2022, 2022, 5479215.

- Kiwanuka, A. Acceptance Process: The Missing Link between UTAUT and Diffusion of Innovation Theory. Am. J. Inf. Syst. 2015, 3, 40–44.

- Zaineldeen, S.; Li, H.; Koffi, A.; Mohammed, B. Technology Acceptance Model’ Concepts, Contribution, Limitation, and Adoption in Education. Univers. J. Educ. Res. 2020, 8, 5061–5071.

- Venkatesh, V.; Davis, F. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Venkatesh, V.; Morris, M.; Davis, G.; Davis, F. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Blut, M.; Chong, A.; Tsigna, Z.; Venkatesh, V. Meta-Analysis of the Unified Theory of Acceptance and Use of Technology (UTAUT): Challenging its Validity and Charting a Research Agenda in the Red Ocean. J. Assoc. Inf. Syst. 2022, 23, 13–95.

- Tamilmani, K.; Rana, N.; Fosso Wamba, S.; Dwivedi, R. The extended Unified Theory of Acceptance and Use of Technology (UTAUT2): A systematic literature review and theory evaluation. Int. J. Inf. Manag. 2020, 57, 102269.

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.

- Al-Adwan, A.S.; Al-Debei, M.M. The determinants of Gen Z’s metaverse adoption decisions in higher education: Integrating UTAUT2 with personal innovativeness in IT. Educ. Inf. Technol. 2024, 29, 7413–7445.

- Wiangkham, A.; Vongvit, R. Exploring the Drivers for the Adoption of Metaverse Technology in Engineering Education using PLS-SEM and ANFIS. Educ. Inf. Technol. 2023, 29, 7385–7412.

- Al-Adwan, A.; Li, N.; Al-Adwan, A.; Abbasi, G.; Albelbisi, N.; Habibi, A. Extending the Technology Acceptance Model (TAM) to Predict University Students’ Intentions to Use Metaverse-Based Learning Platforms. Educ. Inf. Technol. 2023, 28, 15381–15413.

- Koutromanos, G.; Mikropoulos, T.; Mavridis, D.; Christogiannis, C. The mobile augmented reality acceptance model for teachers and future teachers. Educ. Inf. Technol. 2023, 29, 7855–7893.

- Chatterjee, S.; Bhattacharjee, K. Adoption of artificial intelligence in higher education: A quantitative analysis using structural equation modelling. Educ. Inf. Technol. 2020, 25, 3443–3463.

- Saif, N.; Khan, S.U.; Shaheen, I.; Alotaibi, F.A.; Alnfiai, M.M.; Arif, M. Chat-GPT; validating Technology Acceptance Model (TAM) in education sector via ubiquitous learning mechanism. Comput. Hum. Behav. 2024, 154, 108097.

- Tseng, T.; Lin, S.; Wang, Y.-S.; Liu, H.-X. Investigating teachers’ adoption of MOOCs: The perspective of UTAUT2. Interact. Learn. Environ. 2019, 30, 635–650.

- Strzelecki, A.; Cicha, K.; Rizun, M.; Rutecka, P. Acceptance and use of ChatGPT in the academic community. Educ. Inf. Technol. 2024, 29, 22943–22968.

- Wang, S.; Yu, H.; Hu, X.; Li, J. Participant or spectator? Comprehending the willingness of faculty to use intelligent tutoring systems in the artificial intelligence era. Br. J. Educ. Technol. 2020, 51, 1657–1673.

- Strzelecki, A. To use or not to use ChatGPT in higher education? A study of students’ acceptance and use of technology. Interact. Learn. Environ. 2024, 32, 5142–5155.

- Wang, Y.-Y.; Wang, Y.-S.; Jian, S.-E. Investigating the Determinants of Students’ Intention to Use Business Simulation Games. J. Educ. Comput. Res. 2019, 58, 073563311986504.

- Wang, Y.-Y.; Chuang, Y.-W. Investigating the potential adverse effects of virtual reality-based learning system usage: From UTAUT2 and PPVITU perspectives. Interact. Learn. Environ. 2023, 32, 5106–5125.

- Unal, E.; Uzun, A. Understanding university students’ behavioral intention to use Edmodo through the lens of an extended technology acceptance model. Br. J. Educ. Technol. 2020, 52, 619–637.

- Lin, J.-W.; Lai, Y.-C. User acceptance model of computer-based assessment: Moderating effect and intention-behavior effect. Australas. J. Educ. Technol. 2019, 35.

- Bazelais, P.; Binner, G.; Doleck, T. Examining the key drivers of student acceptance of online labs. Interact. Learn. Environ. 2022, 32, 1460–1475.

- Chen, H.-L.; Widarso, G.; Sutrisno, H. A ChatBot for Learning Chinese: Learning Achievement and Technology Acceptance. J. Educ. Comput. Res. 2020, 58, 073563312092962.

- Bilquise, G.; Ibrahim, S.; Salhieh, S.E. Investigating student acceptance of an academic advising chatbot in higher education institutions. Educ. Inf. Technol. 2023, 29, 6357–6382.

- Estriégana, R.; Medina, J.; Barchino, R. Student acceptance of virtual laboratory and practical work: An extension of the technology acceptance model. Comput. Educ. 2019, 135, 1–14.

- Barrett, A.; Pack, A.; Quaid, E. Understanding learners’ acceptance of high-immersion virtual reality systems: Insights from confirmatory and exploratory PLS-SEM analyses. Comput. Educ. 2021, 169, 104214.

- Khechine, H.; Raymond, B.; Augier, M. The adoption of a social learning system: Intrinsic value in the UTAUT model. Br. J. Educ. Technol. 2020, 51, 2306–2325. [Google Scholar] [CrossRef]

- Jung, I.; Lee, J. A cross-cultural approach to the adoption of open educational resources in higher education. Br. J. Educ. Technol. 2019, 51, 263–280.

- Saidu, M.K.; Al Mamun, M.A. Exploring the Factors Affecting Behavioural Intention to Use Google Classroom: University Teachers’ Perspectives in Bangladesh and Nigeria. TechTrends 2022, 66, 681–696.

- Yadegaridehkordi, E.; Nasir, M.; Noor, N.; Shuib, L.; Badie, N. Predicting the Adoption of Cloud-Based Technology Using Fuzzy Analytic Hierarchy Process and Structural Equation Modeling Approaches. Appl. Soft Comput. 2018, 66, 77–89.

- El-Masri, M.; Tarhini, A. Factors affecting the adoption of e-learning systems in Qatar and USA: Extending the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2). Educ. Technol. Res. Dev. 2017, 65, 743–763.

- Kanwal, F.; Rehman, M. Factors Affecting E-Learning Adoption in Developing Countries–Empirical Evidence From Pakistan’s Higher Education Sector. IEEE Access 2017, 5, 10968–10978.

- Miah, M.; Singh, J.; Rahman, M. Factors Influencing Technology Adoption in Online Learning among Private University Students in Bangladesh Post COVID-19 Pandemic. Sustainability 2023, 15, 3543.

- Duong, D. How effort expectancy and performance expectancy interact to trigger higher education students’ uses of ChatGPT for learning. Interact. Technol. Smart Educ. 2023, 21, 356–380.

- Amrozi, Y.; Ekowati, D.; Putranto, H.; Zikky, M.; Zulkarnain, M. Adoption of Information Technology as a Mediator Between Institutional Pressure and Change Performance. J. Namib. Stud. Hist. Politics Cult. 2023, 34, 788–808.

- He, L.; Li, C. Students’ Adoption of ICT Tools for Learning English Based on Unified Theory of Acceptance and Use of Technology. Asian J. Educ. Soc. Stud. 2023, 44, 26–38.

- Alghushami, A.; Zakaria, N.H.; Mat Aji, Z. Factors Influencing Cloud Computing Adoption in Higher Education Institutions of Least Developed Countries: Evidence from Republic of Yemen. Appl. Sci. 2020, 10, 8098.

- Gómez-Ramirez, I.; Valencia-Arias, A.; Duque, L. Approach to M-learning Acceptance Among University Students. Int. Rev. Res. Open Distrib. Learn. 2019, 20.

- Sofwan, M.; Habibi, A.; Yaakob, M. TPACK’s Roles in Predicting Technology Integration during Teaching Practicum: Structural Equation Modeling. Educ. Sci. 2023, 13, 448.

- Al-Rahmi, A.; Shamsuddin, A.; Wahab, E.; Al-Rahmi, W.; Alismaiel, O.; Crawford, J. Social media usage and acceptance in higher education: A structural equation model. Front. Educ. 2022, 7, 964456.

- Meet, R.K.; Kala, D.; Al-Adwan, A.S. Exploring factors affecting the adoption of MOOC in Generation Z using extended UTAUT2 model. Educ. Inf. Technol. 2022, 27, 10261–10283.

- Chomunorwa, S.; Mugobo, V. Challenges of e-learning adoption in South African public schools: Learners’ perspectives. J. Educ. e-Learn. Res. 2023, 10, 80–85.

- Dwivedi, Y.K.; Rana, N.P.; Jeyaraj, A.; Clement, M.; Williams, M.D. Re-examining the Unified Theory of Acceptance and Use of Technology (UTAUT): Towards a Revised Theoretical Model. Inf. Syst. Front. 2019, 21, 719–734.

- Jak, S.; Cheung, M. Meta-Analytic Structural Equation Modeling With Moderating Effects on SEM Parameters. Psychol. Methods 2019, 25, 430–455.

- Ateş, H.; Gündüzalp, C. A unified framework for understanding teachers’ adoption of robotics in STEM education. Educ. Inf. Technol. 2023, 29, 1–27.

- Singh, H.; Singh, P.; Sharma, D. Faculty acceptance of virtual teaching platforms for online teaching: Moderating role of resistance to change. Australas. J. Educ. Technol. 2023, 39, 33–50.

- Guggemos, J.; Seufert, S.; Sonderegger, S. Humanoid robots in higher education: Evaluating the acceptance of Pepper in the context of an academic writing course using the UTAUT. Br. J. Educ. Technol. 2020, 51, 1864–1883.

- Ustun, A.B.; Karaoglan-Yilmaz, F.G.; Yilmaz, R.; Ceylan, M.; Uzun, O. Development of UTAUT-based augmented reality acceptance scale: A validity and reliability study. Educ. Inf. Technol. 2024, 29, 11533–11554.

- Kashive, N.; Phanshikar, D. Understanding the antecedents of intention for using mobile learning. Smart Learn. Environ. 2023, 10, 34.

- Liu, Y.; Sun, J.C.-Y.; Chen, S.-K. Comparing technology acceptance of AR-based and 3D map-based mobile library applications: A multigroup SEM analysis. Interact. Learn. Environ. 2023, 31, 4156–4170.

- Nikou, S. Factors influencing student teachers’ intention to use mobile augmented reality in primary science teaching. Educ. Inf. Technol. 2024, 29, 15353–15374.

- Rahman, M.S.; Sabbir, M.; Zhang, J.; Moral, I.; Hossain, G. Examining students’ intention to use ChatGPT: Does trust matter? Australas. J. Educ. Technol. 2023, 39, 51–71.

- Cheung, M.; Cheung, S.F. Random-effects models for meta-analytic structural equation modeling: Review, issues, and illustrations. Res. Synth. Methods 2016, 7, 140–155.

- Cheung, M. Fixed- and random-effects meta-analytic structural equation modeling: Examples and analyses in R. Behav. Res. Methods 2013, 46, 29–40.

- Goretzko, D.; Siemund, K.; Sterner, P. Evaluating Model Fit of Measurement Models in Confirmatory Factor Analysis. Educ. Psychol. Meas. 2023, 84, 001316442311638.

- Sova, R.; Tudor, C.; Tartavulea, C. Artificial Intelligence Tool Adoption in Higher Education: A Structural Equation Modeling Approach to Understanding Impact Factors among Economics Students. Electronics 2024, 13, 3632.

- Anderson, C.; Al-Gahtani, S.; Hubona, G. The Value of TAM Antecedents in Global IS Development and Research. J. Organ. End User Comput. 2011, 23, 18–37.

- Granić, A. Technology Acceptance and Adoption in Education. In Handbook of Open, Distance and Digital Education; Zawacki-Richter, O., Jung, I., Eds.; Springer Nature: Singapore, 2023; pp. 183–197.

- Adelana, O.; Ayanwale, M.; Ishola, A.; Oladejo, A.; Adewuyi, H. Exploring pre-service teachers’ intention to use virtual reality: A mixed method approach. Comput. Educ. X Real. 2023, 3, 100045.

- Barrett, A.; Pack, A.; Guo, Y.; Wang, J. Technology acceptance model and multi-user virtual reality learning environments for Chinese language education. Interact. Learn. Environ. 2020, 31, 1665–1682.

- Alvarez-Marin, A.; Velázquez-Iturbide, J.Á.; Castillo-Vergara, M. The acceptance of augmented reality in engineering education: The role of technology optimism and technology innovativeness. Interact. Learn. Environ. 2021, 31, 3409–3421.

- Rüth, M.; Birke, A.; Kaspar, K. Teaching with digital games: How intentions to adopt digital game-based learning are related to personal characteristics of pre-service teachers. Br. J. Educ. Technol. 2022, 53, 1412–1429.

- Hussein, M.; Ow, S.; Al-Azawei, A.; Ibrhim, I. What drives students’ successful reuse of online learning in higher education? A case of Google Classroom. Australas. J. Educ. Technol. 2022, 38, 1–21.

- Francom, G.; Schwan, A.; Nuatomue, J. Comparing Google Classroom and D2L Brightspace Using the Technology Acceptance Model. TechTrends 2020, 65, 111–119.

- Elfeky, A.; Elbyaly, M. The use of data analytics technique in learning management system to develop fashion design skills and technology acceptance. Interact. Learn. Environ. 2021, 31, 3810–3827.

- Virani, S.; Saini, J.; Sharma, S. Adoption of massive open online courses (MOOCs) for blended learning: The Indian educators’ perspective. Interact. Learn. Environ. 2020, 31, 1060–1076.

- Aburub, F. A new integrated model to explore factors that influence adoption of mobile learning in higher education: An empirical investigation. Educ. Inf. Technol. 2019, 24, 2145–2158.

- Khlaisang, J.; Songkram, N.; Huang, F.; Teo, T. Teachers’ perception of the use of mobile technologies with smart applications to enhance students’ thinking skills: A study among primary school teachers in Thailand. Interact. Learn. Environ. 2021, 31, 5037–5058.

- Al-Rahmi, A.; Al-Rahmi, W.; Alturki, U.; Aldraiweesh, A.; Almotairi, S.; Al-Adwan, A. Acceptance of mobile technologies and M-learning by university students: An empirical investigation in higher education. Educ. Inf. Technol. 2022, 27, 7805–7826.

- Tao, D.; Fu, P.; Wang, Y.; Zhang, T.; Qu, X. Key characteristics in designing massive open online courses (MOOCs) for user acceptance: An application of the extended technology acceptance model. Interact. Learn. Environ. 2022, 30, 882–895.

- Pozón-López, I.; Kalinić, Z.; Higueras-Castillo, E.; Liébana-Cabanillas, F. A multi-analytical approach to modeling of customer satisfaction and intention to use in Massive Open Online Courses (MOOC). Interact. Learn. Environ. 2019, 28, 1003–1021.

- de Oliveira Neto, J.D.; Law, V.; Kang, S.P. Adoption of open educational resources in the global south. J. Comput. High. Educ. 2024, 36, 116–140.

- Tang, H.; Lin, Y.J.; Qian, Y. Understanding K-12 teachers’ intention to adopt open educational resources: A mixed methods inquiry. Br. J. Educ. Technol. 2020, 51, 2558–2572.

- Zhang, X.; Tlili, A.; Shubeck, K.; Hu, X.; Huang, R.; Zhu, L. Teachers’ adoption of an open and interactive e-book for teaching K-12 students Artificial Intelligence: A mixed methods inquiry. Smart Learn. Environ. 2021, 8, 34.

- Sprenger, D.; Schwaninger, A. Video demonstrations can predict the intention to use digital learning technologies. Br. J. Educ. Technol. 2023, 54, 857–877.

- Al-Maroof, R.S.; Salloum, S.A.; Hassanien, A.E.; Shaalan, K. Fear from COVID-19 and technology adoption: The impact of Google Meet during Coronavirus pandemic. Interact. Learn. Environ. 2023, 31, 1293–1308.

- Kemp, A.; Palmer, E.; Strelan, P.; Thompson, M. Testing a novel extended educational technology acceptance model using student attitudes towards virtual classrooms. Br. J. Educ. Technol. 2024, 55, 2110–2131.

- Liu, H.; Wang, L.; Koehler, M. Exploring the intention-behavior gap in the technology acceptance model: A mixed-methods study in the context of foreign-language teaching in China. Br. J. Educ. Technol. 2019, 50, 2536–2556.

- Bower, M.; DeWitt, D.; Lai, J. Reasons associated with preservice teachers’ intention to use immersive virtual reality in education. Br. J. Educ. Technol. 2020, 51, 2215–2233.

- Branko, A.; Šorgo, A.; Helm, C.; Weinhandl, R.; Lang, V. Exploring Factors Affecting Elementary School Teachers’ Adoption of 3D Printers In Teaching. TechTrends 2023, 67, 990–1006.

- Di Natale, A.F.; Bartolotta, S.; Gaggioli, A.; Riva, G.; Villani, D. Exploring students’ acceptance and continuance intention in using immersive virtual reality and metaverse integrated learning environments: The case of an Italian university course. Educ. Inf. Technol. 2024, 29, 14749–14768.

- Strzelecki, A.; ElArabawy, S. Investigation of the moderation effect of gender and study level on the acceptance and use of generative AI by higher education students: Comparative evidence from Poland and Egypt. Br. J. Educ. Technol. 2024, 55, 1209–1230.

- Zhou, M.; Dzingirai, C.; Hove, K.; Chitata, T.; Mugandani, R. Adoption, use and enhancement of virtual learning during COVID-19. Educ. Inf. Technol. 2022, 27, 8939–8959.

- de Leon-Pineda, J.L.; Delos Reyes, E.; Galura, J. C5-LMS design using Google Classroom: User acceptance based on extended Unified Theory of Acceptance and Use of Technology. Interact. Learn. Environ. 2022, 31, 6074–6083.

- Raza, S.A.; Qazi, W.; Khan, K.; Salam, J. Social Isolation and Acceptance of the Learning Management System (LMS) in the time of COVID-19 Pandemic: An Expansion of the UTAUT Model. J. Educ. Comput. Res. 2020, 59, 183–208.

- Zhang, Z.; Cao, T.; Shu, J.; Liu, H. Identifying key factors affecting college students’ adoption of the e-learning system in mandatory blended learning environments. Interact. Learn. Environ. 2020, 30, 1388–1401.

- Rotar, O. Online Course use in Academic Practice: An Examination of Factors from Technology Acceptance Research in the Russian Context. TechTrends 2023, 1–12.

- Qashou, A. Influencing factors in M-learning adoption in higher education. Educ. Inf. Technol. 2021, 26, 1755–1785.

- Guillen-Gamez, F.; Colomo Magaña, E.; Ruiz-Palmero, J.; Tomczyk, Ł. Teaching digital competence in the use of YouTube and its incidental factors: Development of an instrument based on the UTAUT model from a higher order PLS-SEM approach. Br. J. Educ. Technol. 2023, 55, 340–362.

- Orhan-Özen, S.; Sumer, M. Factors affecting undergraduate students’ acceptance and use of live instructions for learning. Interact. Learn. Environ. 2023, 32, 3720–3731.

- Al-Rahmi, W.; Yahaya, N.; Alamri, M.; Alyoussef, I.; Al-Rahmi, A.; Kamin, Y. Integrating innovation diffusion theory with technology acceptance model: Supporting students’ attitude towards using a massive open online courses (MOOCs) systems. Interact. Learn. Environ. 2019, 29, 1380–1392.

- Okada, A.; Kowalski, R.P.G.; Kirner, C.; Torres, P.L. Factors influencing teachers’ adoption of AR inquiry games to foster skills for Responsible Research and Innovation. Interact. Learn. Environ. 2019, 27, 324–335.

- Shonfeld, M.; Greenstein, Y. Factors promoting the use of virtual worlds in educational settings. Br. J. Educ. Technol. 2020, 52, 214–234.

- Yang, H.H.; Feng, L.; MacLeod, J. Understanding College Students’ Acceptance of Cloud Classrooms in Flipped Instruction: Integrating UTAUT and Connected Classroom Climate. J. Educ. Comput. Res. 2019, 56, 1258–1276.

- Ho, N.; Pham, H.-H.; Sivapalan, S.; Dinh, V.-H. The adoption of blended learning using Coursera MOOCs: A case study in a Vietnamese higher education institution. Australas. J. Educ. Technol. 2022, 38, 121–138.

- Dai, H.M.; Teo, T.; Rappa, N.A.; Huang, F. Explaining Chinese university students’ continuance learning intention in the MOOC setting: A modified expectation confirmation model perspective. Comput. Educ. 2020, 150, 103850.

- Huang, F.; Teo, T.; Sánchez-Prieto, J.C.; García-Peñalvo, F.J.; Olmos-Migueláñez, S. Cultural values and technology adoption: A model comparison with university teachers from China and Spain. Comput. Educ. 2019, 133, 69–81.

| Model | Core Components | Cause–Effect Links |
|---|---|---|
| TAM [31] | PU, PEU, AT, BIU, UB | (1) PU → BIU (2) PEU → AT (3) PEU → PU (4) PU → AT (5) AT → BIU (6) BIU → UB |
| TAM2 [32] | PU, PEU, BIU, UB, SN, IM, JR, RD, OPQ | (1) PEU → PU (2) PU → BIU (3) PEU → BIU (4) BIU → UB (5) SN → PU (6) SN → BIU (7) SN → IM (8) IM → PU (9) JR → PU (10) RD → PU (11) OPQ → PU |
| TAM3 [36] | PU, PEU, BIU, UB, SN, IM, JR, RD, OPQ, CSE, PEC, CANX, PENJ, OU, CPF, moderators (voluntariness, experience) | (1) PEU → PU (2) PU → BIU (3) PEU → BIU (4) BIU → UB (5) SN → PU (6) SN → BIU (7) SN → IM (8) IM → PU (9) JR → PU (10) RD → PU (11) OPQ → PU (12) CSE → PEU (13) PEC → PEU (14) CANX → PEU (15) CPF → PEU (16) PENJ → PEU (17) OU → PEU |
| UTAUT [33] | PE, EE, SI, FC, BIU, UB, moderator variables (gender, age, experience, and voluntariness) | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) BIU → UB |
| UTAUT2 [35] | PE, EE, SI, FC, HM, H, PV, BIU, UB, moderator variables (gender, age, and experience) | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) HM → BIU (7) PV → BIU (8) H → BIU (9) H → UB (10) BIU → UB |

| Studies | Base Models | Components | Cause–Effect Links |
|---|---|---|---|
| [37] | UTAUT2 | PE, EE, SI, FC, HM, PV, BIU, PI | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) HM → BIU (6) PV → BIU (7) PI → BIU (8) PI → PE (9) PI → EE |
| [38] | UTAUT2 | PE, EE, SI, FC, HM, H, BIU, CS, TR | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) HM → BIU (6) H → BIU (7) CS → BIU (8) TR → BIU |
| [39] | TAM | PEU, PU, SE, PEN, PCR, PI, PV, BIU | (1) PI → SE (2) PI → PU (3) PI → PEU (4) PI → BIU (5) SE → PU (6) SE → PEU (7) PCR → PU (8) PCR → BIU (9) PEU → PU (10) PEU → PENJ (11) PEU → BIU (12) PU → BIU (13) PENJ → BIU |
| [40] | TAM and UTAUT | PU, PEU, AT, FC, PENJ, PRA, MSE, IU | (1) PENJ → PU (2) PENJ → AT (3) PU → IU (4) PU → AT (5) AT → IU (6) FC → IU (7) FC → PEU (8) PEU → PU (9) PEU → AT (10) MSE → PEU (11) PRA → PU |
| [41] | UTAUT | PE, EE, FC, BIU, PR, AT, AAHE | (1) PR → AT (2) PE → AT (3) EE → AT (4) FC → EE (5) FC → BIU (6) AT → BIU (7) BIU → AAHE |
| [42] | TAM | PE, EE, BIU | (1) AT → BIU (2) PEU → AT (3) PEU → PU (4) BIU → AT (5) ST → PU (6) ST → PEU (7) ST → A |
| [43] | UTAUT2 | PE, EE, SI, FC, HM, PV, BIU, UB | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) HM → BIU (7) PV → BIU (8) BIU → UB |
| [44] | UTAUT2 | PE, EE, SI, FC, HM, H, PV, BIU, UB, PI | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) HM → BIU (7) PV → BIU (8) H → BIU (9) H → UB (10) BIU → UB (11) PI → BIU |
| [45] | IOD | WTU, PT, RA or PE, CP, CM, EX or EE | (1) CP → WTU (2) CM → WTU (3) EE → WTU (4) RA → WTU (5) PT → WTU |
| [46] | UTAUT2 | PE, EE, SI, FC, HM, H, BIU, PI | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) HM → BIU (7) H → BIU (8) H → UB (9) BIU → UB (10) PI → BIU |
| [47] | UTAUT2 | PE, EE, SI, FC, HM, PV, BIU | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) HM → BIU (6) PV → BIU |
| [48] | UTAUT2 | PE, EE, SI, FC, HM, PV, WTU | (1) PE → WTU (2) EE → WTU (3) SI → WTU (4) FC → WTU (5) HM → WTU (6) PV → WTU |
| [49] | TAM, TAM2 and TAM3 | PU, PEU, AT, SN or SI, IU, SE, JR, OPQ, PEC | (1) PU → IU (2) PU → AT (3) PEU → AT (4) PEU → PU (5) AT → IU (6) SN → PU (7) OPQ → PU (8) PEC → PEU (9) PENJ → PEU (10) SE → PEU |
| [50] | UTAUT | PE, EE, SI, BIU, UB | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) BIU → UB |
| [51] | UTAUT | PE, EE, SI, FC, BIU, U | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → UB (5) BIU → UB |
| [52] | TAM | PEU, PU, BIU, PEN | (1) PEN → PEU (2) PEU → BIU (3) PEU → PU (4) PU → BIU |
| [53] | TAM | PEU, PU, SI, PT, PA, AN, BIA | (1) PEU → BIA (2) PU → BIA (3) SI → BIA (4) PT → BIA (5) PA → BIA (6) AN → BIA |
| [54] | TAM | PEU, PU, U, AT, BIU, EF, PL, SA | (1) AT → BIU (2) PU → AT (3) PU → BIU (4) PEU → AT (5) PEU → PU (6) SA → PU (7) SA → AT (8) SA → BIU (9) SA → U (10) PEU → SA (11) EF → PU (12) EF → PEU (13) EF → SA (14) PL → PU (15) PL → PEU (16) PL → SA |
| [55] | TAM | PU, PEU, IU, INTR, IMRN, IMGN | (1) PU → IU (2) PEU → IU (3) INTR → PU (4) INTR → PEU (5) IMGN → PU (6) IMGN → PEU (7) IMRN → PU (8) IMRN → PEU |
| [56] | UTAUT | PE, EE, SI, FC, BIU, IV | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) FC → UB (6) BIU → UB (7) IV → BIU (8) IV → UB |
| [57] | UTAUT2 | PE, EE, SI, FC, H, HM, PV, BIU, GD, AG, EX | (1) PE → BIU (2) EE → BIU (3) SI → BIU (4) FC → BIU (5) HM → BIU (6) PV → BIU (7) H → BIU (8) GD → BIU (9) AG → BIU (10) EX → BIU |
| [58] | TAM | PEU, IU, AW or SI, OA or FC, TC | (1) SI → FC (2) TC → FC (3) SI → PEU (4) FC → PEU (5) PEU → IU |

| References | N | PE → EE | PE → SI | PE → AU | PE → FC | PE → PV | EE → SI | EE → AU | EE → FC | EE → PV | FC → SI | FC → AU | FC → PV | SI → PV | SI → AU | PV → AU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [43] | 161 | 0.34 | 0.54 | 0.7 | 0.52 | 0.62 | 0.31 | 0.36 | 0.54 | 0.45 | 0.43 | 0.57 | 0.55 | 0.44 | 0.65 | 0.59 |
| [57] | 152 | 0.53 | 0.48 | 0.55 | 0.37 | 0.47 | 0.34 | 0.62 | 0.6 | 0.53 | 0.36 | 0.51 | 0.49 | 0.22 | 0.47 | 0.62 |
| [44] | 629 | 0.351 | 0.565 | 0.809 | 0.341 | 0.415 | 0.213 | 0.39 | 0.71 | 0.339 | 0.302 | 0.431 | 0.462 | 0.335 | 0.601 | 0.479 |
| [74] | 605 | 0.62 | 0.31 | 0.35 | NA | NA | 0.39 | 0.3 | NA | NA | NA | NA | NA | NA | 0.39 | NA |
| [75] | 418 | 0.561 | NA | 0.412 | NA | NA | NA | 0.353 | NA | NA | NA | NA | NA | NA | NA | NA |
| [58] | 54 | NA | NA | NA | NA | NA | 0.529 | 0.658 | 0.658 | NA | 0.366 | 0.573 | NA | NA | 0.526 | NA |
| [45] | 178 | 0.341 | NA | 0.404 | 0.213 | NA | NA | 0.396 | 0.413 | NA | NA | 0.334 | NA | NA | NA | NA |
| [38] | 365 | 0.804 | 0.648 | 0.637 | 0.654 | NA | 0.638 | 0.57 | 0.653 | NA | 0.539 | 0.436 | NA | NA | 0.66 | NA |
| [46] | 534 | 0.596 | 0.583 | 0.841 | 0.595 | NA | 0.42 | 0.636 | 0.798 | NA | 0.489 | 0.629 | NA | NA | 0.612 | NA |
| [47] | 141 | 0.508 | 0.478 | 0.41 | 0.552 | 0.64 | 0.477 | 0.324 | 0.574 | 0.327 | 0.638 | 0.507 | 0.548 | 0.6 | 0.416 | 0.508 |
| [48] | 352 | 0.734 | 0.65 | 0.618 | 0.653 | 0.491 | 0.699 | 0.588 | 0.693 | 0.491 | 0.781 | 0.672 | 0.669 | 0.676 | 0.742 | 0.586 |
| [37] | 537 | 0.632 | 0.528 | 0.637 | 0.635 | 0.606 | 0.507 | 0.637 | 0.609 | 0.577 | 0.501 | 0.605 | 0.564 | 0.484 | 0.474 | 0.585 |
| [49] | 218 | 0.711 | 0.552 | 0.676 | NA | NA | 0.428 | 0.565 | NA | NA | NA | NA | NA | NA | 0.498 | NA |
| [50] | 186 | 0.74 | 0.62 | 0.68 | NA | NA | 0.58 | 0.6 | NA | NA | NA | NA | NA | NA | 0.69 | NA |
| [42] | 156 | 0.468 | NA | 0.123 | NA | NA | NA | 0.132 | NA | NA | NA | NA | NA | NA | NA | NA |
| [56] | 99 | 0.687 | 0.742 | 0.666 | 0.771 | NA | 0.642 | 0.463 | 0.735 | NA | 0.712 | 0.68 | NA | NA | 0.651 | NA |
| [41] | 329 | 0.544 | NA | 0.511 | 0.561 | NA | NA | 0.499 | 0.556 | NA | NA | 0.506 | NA | NA | NA | NA |
| [76] | 462 | 0.73 | 0.61 | 0.7 | NA | NA | 0.55 | 0.64 | NA | NA | NA | NA | NA | NA | 0.64 | NA |
| [40] | 306 | 0.517 | NA | 0.673 | 0.528 | NA | NA | 0.535 | 0.676 | NA | NA | 0.601 | NA | NA | NA | NA |
| [51] | 194 | 0.748 | 0.584 | 0.701 | 0.584 | NA | 0.493 | 0.642 | 0.715 | NA | 0.425 | 0.519 | NA | NA | 0.645 | NA |
| [77] | 546 | 0.595 | 0.577 | NA | 0.559 | NA | 0.701 | NA | 0.525 | NA | 0.63 | NA | NA | NA | NA | NA |
| [78] | 233 | 0.452 | 0.478 | 0.671 | NA | NA | 0.381 | 0.454 | NA | NA | NA | NA | NA | NA | 0.583 | NA |
| [79] | 450 | 0.613 | 0.626 | 0.796 | NA | NA | 0.338 | 0.573 | NA | NA | NA | NA | NA | NA | 0.629 | NA |
| [39] | 574 | 0.529 | NA | 0.741 | NA | NA | NA | 0.51 | NA | NA | NA | NA | NA | NA | NA | NA |
| [52] | 58 | 0.7 | NA | 0.758 | NA | NA | NA | 0.641 | NA | NA | NA | NA | NA | NA | NA | NA |
| [53] | 207 | 0.613 | 0.433 | 0.56 | NA | NA | 0.333 | 0.502 | NA | NA | NA | NA | NA | NA | 0.672 | NA |
| [80] | 89 | 0.649 | 0.48 | 0.752 | NA | NA | 0.524 | 0.699 | NA | NA | NA | NA | NA | NA | 0.562 | NA |
| [54] | 223 | 0.507 | NA | 0.579 | NA | NA | NA | 0.589 | NA | NA | NA | NA | NA | NA | NA | NA |
| [55] | 134 | 0.396 | NA | 0.765 | NA | NA | NA | 0.366 | NA | NA | NA | NA | NA | NA | NA | NA |
| [81] | 344 | 0.829 | NA | 0.745 | NA | NA | NA | 0.81 | NA | NA | NA | NA | NA | NA | NA | NA |
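
As a rough illustration of how one cell of a pooled matrix is formed from the study-level correlations above, the sketch below computes a sample-size-weighted mean correlation (a "bare-bones", Hunter–Schmidt-style estimate) for the PE–PV pair, using the six studies that report it. This is a fixed-effect simplification chosen for brevity, not the meta-analytic SEM procedure of the Cheung references cited in this paper, so its mean need not match the pooled estimate; all function names are hypothetical. The six sample sizes sum to 1,972, the same total that appears next to the six-study pairs in the pooled matrix.

```python
# Bare-bones (Hunter–Schmidt style) pooling of one correlation across studies.
# Fixed-effect simplification for illustration only; not the paper's MASEM step.

# (reference, sample size N, observed r for PE–PV), copied from the table above;
# studies reporting NA for this pair are left out.
PE_PV_STUDIES = [
    ("[43]", 161, 0.62),
    ("[57]", 152, 0.47),
    ("[44]", 629, 0.415),
    ("[47]", 141, 0.64),
    ("[48]", 352, 0.491),
    ("[37]", 537, 0.606),
]


def weighted_mean_r(studies):
    """Return (total N, N-weighted mean r) for a list of (ref, n, r) tuples."""
    total_n = sum(n for _, n, _ in studies)
    mean_r = sum(n * r for _, n, r in studies) / total_n
    return total_n, mean_r


def observed_variance(studies, mean_r):
    """N-weighted variance of the observed correlations around mean_r."""
    total_n = sum(n for _, n, _ in studies)
    return sum(n * (r - mean_r) ** 2 for _, n, r in studies) / total_n


def sampling_error_variance(mean_r, total_n, k):
    """Variance expected from sampling error alone, using the average study size."""
    mean_n = total_n / k
    return (1.0 - mean_r ** 2) ** 2 / (mean_n - 1.0)


if __name__ == "__main__":
    n, r_bar = weighted_mean_r(PE_PV_STUDIES)
    var_obs = observed_variance(PE_PV_STUDIES, r_bar)
    var_err = sampling_error_variance(r_bar, n, len(PE_PV_STUDIES))
    print(f"N = {n}, mean r = {r_bar:.3f}")
    print(f"observed var = {var_obs:.4f}, sampling-error var = {var_err:.4f}")
```

Comparing the observed spread of r with the spread expected from sampling error alone gives a quick heterogeneity check. For this pair the observed variance is several times larger, which is the usual argument for random-effects rather than fixed-effect pooling.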

| | PE | FC | SI | EE | PV | AU |
|---|---|---|---|---|---|---|
| PE | 1 | 8880 (29) | 6160 (19) | 8334 (28) | 4523 (14) | 1972 (6) |
| FC | 0.588 *** | 1 | 6214 (20) | 8388 (29) | 4577 (15) | 1972 (6) |
| SI | 0.545 *** | 0.466 *** | 1 | 3764 (12) | 4031 (14) | 1972 (6) |
| EE | 0.623 *** | 0.517 *** | 0.507 *** | 1 | 1972 (6) | 5668 (19) |
| PV | 0.536 *** | 0.629 *** | 0.536 *** | 0.465 *** | 1 | 1972 (6) |
| AU | 0.542 ** | 0.458 *** | 0.547 *** | 0.581 *** | 0.551 *** | 1 |
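
If, as the MASEM references cited in this paper suggest, the structural model is fitted to this pooled matrix, the matrix must be positive definite. The sketch below assumes the lower-triangle entries above are the pooled correlations in the row order PE, FC, SI, EE, PV, AU; it mirrors them into a full symmetric matrix and attempts a Cholesky factorization, which succeeds only for a positive-definite matrix. It is written in plain Python to stay self-contained (in practice `numpy.linalg.cholesky` performs the same check and raises `LinAlgError` on failure), and the helper names are hypothetical.

```python
import math

LABELS = ["PE", "FC", "SI", "EE", "PV", "AU"]

# Lower triangle of the pooled correlation matrix, as printed in the table above.
LOWER = [
    [1.0],
    [0.588, 1.0],
    [0.545, 0.466, 1.0],
    [0.623, 0.517, 0.507, 1.0],
    [0.536, 0.629, 0.536, 0.465, 1.0],
    [0.542, 0.458, 0.547, 0.581, 0.551, 1.0],
]


def full_matrix(lower):
    """Mirror a lower-triangular list of rows into a full symmetric matrix."""
    n = len(lower)
    return [[lower[max(i, j)][min(i, j)] for j in range(n)] for i in range(n)]


def cholesky(a):
    """Return lower-triangular L with a = L L^T, or None if a is not positive definite."""
    n = len(a)
    l = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(l[i][k] * l[j][k] for k in range(j))
            if i == j:
                pivot = a[i][i] - s
                if pivot <= 0.0:  # a non-positive pivot means not positive definite
                    return None
                l[i][j] = math.sqrt(pivot)
            else:
                l[i][j] = (a[i][j] - s) / l[j][j]
    return l


if __name__ == "__main__":
    factor = cholesky(full_matrix(LOWER))
    if factor is None:
        print("Pooled matrix is NOT positive definite")
    else:
        det = math.prod(factor[i][i] for i in range(len(factor))) ** 2
        print(f"Positive definite; determinant = {det:.4f}")
```

The determinant printed in the success branch is the squared product of the Cholesky diagonal; a value near zero would signal near-collinearity among the constructs even when the factorization succeeds.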

| Indices | Recommended Values [84] | ETADC Testing Values | Conclusion |
|---|---|---|---|
| Root Mean Square Error of Approximation (RMSEA) | ≤0.05: reasonable fit; >0.1: poor fit | 0.0387 | Good model fit |
| Standardized Root Mean Square Residual (SRMR) | ≤0.08: acceptable fit | 0.0476 | Good model fit |
| Comparative Fit Index (CFI) | =1: perfect fit; ≥0.95: excellent fit | 0.9916 | Good model fit |
| Tucker–Lewis Index (TLI) | ≥0.9: good fit | 0.9578 | Good model fit |
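
RMSEA, CFI, and TLI are all functions of the model chi-square. The sketch below implements their common textbook forms and checks the ETADC values against the cut-offs listed above. The chi-square, degrees of freedom, and sample size in the example call are hypothetical (the table does not report them), and software packages differ in details such as using N rather than N − 1 in the RMSEA denominator. SRMR is only checked against its cut-off, since it is computed from residual correlations rather than from the chi-square. Function names are illustrative.

```python
import math


def rmsea(chi2, df, n):
    """Root mean square error of approximation (one common form, using N - 1)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative fit index: target model (m) against the baseline model (b)."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker–Lewis index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)


# Cut-offs as listed in the fit-index table (lower is better for RMSEA and SRMR).
CUTOFFS = {"RMSEA": ("<=", 0.05), "SRMR": ("<=", 0.08), "CFI": (">=", 0.95), "TLI": (">=", 0.90)}
ETADC_FIT = {"RMSEA": 0.0387, "SRMR": 0.0476, "CFI": 0.9916, "TLI": 0.9578}


def meets_cutoffs(values, cutoffs=CUTOFFS):
    """Map each index name to True if its value satisfies the listed cut-off."""
    result = {}
    for name, value in values.items():
        op, bound = cutoffs[name]
        result[name] = value <= bound if op == "<=" else value >= bound
    return result


if __name__ == "__main__":
    # Hypothetical chi-square inputs, not taken from the paper.
    print(rmsea(10.0, 5, 101), cfi(10.0, 5, 200.0, 15), tli(10.0, 5, 200.0, 15))
    print(meets_cutoffs(ETADC_FIT))
```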

| Independent Variables | Dependent Variable | Coefficient of Determination (R²) | Conclusion |
|---|---|---|---|
| Social Influence (SI), Facilitating Conditions (FC) | Effort Expectancy (EE) | 0.53 (53%) | Acceptable |
| Performance Expectancy (PE), Social Influence (SI), Price Value (PV), Effort Expectancy (EE) | Acceptance and Use (AU) | 0.52 (52%) | Acceptable |
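
The two R² rows fix the structural equations of the ETADC: SI and FC predict EE, while PE, SI, PV, and EE predict AU. In a recursive path model with standardized coefficients, the indirect effect along a route is the product of the coefficients on that route, and the total effect is the direct effect plus all indirect effects. The sketch below applies this path-tracing rule to the β values reported for H1–H6. The decomposition is a derived illustration, not a result reported in the paper, and it carries no significance test (indirect effects are normally tested with, for example, bootstrapped confidence intervals). Function names are hypothetical.

```python
from collections import defaultdict

# Standardized path coefficients (β) for H1–H6 as reported in the paper.
ETADC_PATHS = {
    ("PE", "AU"): 0.17,  # H1
    ("EE", "AU"): 0.29,  # H2
    ("SI", "AU"): 0.15,  # H3
    ("FC", "EE"): 0.37,  # H4
    ("SI", "EE"): 0.44,  # H5
    ("PV", "AU"): 0.24,  # H6
}


def total_effect(paths, source, target):
    """Sum, over all directed routes source -> ... -> target, the product of β values.

    Assumes a recursive (acyclic) model; a cycle would recurse forever.
    """
    children = defaultdict(list)
    for (frm, to), beta in paths.items():
        children[frm].append((to, beta))

    def walk(node, product):
        if node == target:
            return product
        # Nodes with no outgoing paths contribute 0 (empty sum).
        return sum(walk(nxt, product * beta) for nxt, beta in children[node])

    return walk(source, 1.0)


def decompose(paths, source, target):
    """Return (direct, indirect, total) effects of source on target."""
    direct = paths.get((source, target), 0.0)
    total = total_effect(paths, source, target)
    return direct, total - direct, total


if __name__ == "__main__":
    for construct in ("PE", "EE", "SI", "FC", "PV"):
        d, i, t = decompose(ETADC_PATHS, construct, "AU")
        print(f"{construct} -> AU: direct {d:.3f}, indirect {i:.3f}, total {t:.3f}")
```

Under these assumptions, SI reaches AU both directly (0.15) and through EE (0.44 × 0.29), while FC influences AU only through EE (0.37 × 0.29).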

| Hypotheses | Paths (Connections Between Variables) | Path Coefficients (β) | Std. Error | z Value | p-Values | Statistical Significance | Conclusion |
|---|---|---|---|---|---|---|---|
| H1 | Performance Expectancy → Acceptance and Use | 0.17 | 0.061 | 2.77 | <0.01 | Significant | Supported |
| H2 | Effort Expectancy → Acceptance and Use | 0.29 | 0.040 | 7.28 | <0.001 | Significant | Supported |
| H3 | Social Influence → Acceptance and Use | 0.15 | 0.044 | 3.38 | <0.001 | Significant | Supported |
| H4 | Facilitating Conditions → Effort Expectancy | 0.37 | 0.035 | 10.62 | <0.001 | Significant | Supported |
| H5 | Social Influence → Effort Expectancy | 0.44 | 0.042 | 10.33 | <0.001 | Significant | Supported |
| H6 | Price Value → Acceptance and Use | 0.24 | 0.032 | 7.41 | <0.001 | Significant | Supported |
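
Each z value above is a Wald statistic, z = β / SE, and its two-sided p-value under a standard normal reference is 2(1 − Φ(|z|)) = erfc(|z| / √2). The sketch below recomputes both from the tabulated figures using only the standard library. Recomputing z from the rounded β and SE gives values that differ slightly from the printed ones (for H5, 0.44 / 0.042 ≈ 10.48 versus 10.33), presumably because the printed z is based on unrounded estimates; the p-values are therefore computed from the printed z. Names are illustrative.

```python
import math

# (hypothesis, path coefficient β, standard error, printed z) from the table above.
REPORTED = [
    ("H1", 0.17, 0.061, 2.77),
    ("H2", 0.29, 0.040, 7.28),
    ("H3", 0.15, 0.044, 3.38),
    ("H4", 0.37, 0.035, 10.62),
    ("H5", 0.44, 0.042, 10.33),
    ("H6", 0.24, 0.032, 7.41),
]


def wald_z(beta, se):
    """Wald statistic: estimate divided by its standard error."""
    return beta / se


def two_sided_p(z):
    """Two-sided p-value under a standard normal reference: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    for name, beta, se, z_printed in REPORTED:
        z = wald_z(beta, se)
        print(f"{name}: recomputed z = {z:.2f} (printed {z_printed}), p = {two_sided_p(z_printed):.2g}")
```

The recomputed p-values fall below the thresholds printed in the table: below 0.01 for H1 and below 0.001 for H2 to H6.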

| Models, Authors, Year | Original TAM by [31] | TAM2 by [32] and TAM3 by [36] | Original UTAUT by [33] | UTAUT2 by [35] | ETADC (Developed in the Present Study) |
|---|---|---|---|---|---|
| Model core constructs | Perceived usefulness; perceived ease of use. | The core TAM constructs plus social influence processes (subjective norm, image) and cognitive instrumental processes (job relevance, output quality, result demonstrability) in TAM2; TAM3 adds determinants of perceived ease of use such as computer self-efficacy and computer anxiety. | Performance expectancy, effort expectancy, social influence, and facilitating conditions; gender, age, experience, and voluntariness moderate the effects of these four constructs. | Hedonic motivation, price value, and habit, in addition to all UTAUT constructs. | Performance expectancy, effort expectancy, social influence, facilitating conditions, and price value. |
| Context of its primary development | Developed for the adoption of IT in the workplace. | Developed for technology adoption in organizations, focusing on employee acceptance of new information systems. | Developed in organizational settings, focusing on employee acceptance of new information systems. | Extends the UTAUT to the acceptance and use of technology in consumer contexts. | Developed specifically for educational settings in developing countries. |
| Origin | Built upon the theory of reasoned action (TRA), a psychological theory. | Extensions of the TAM. | Synthesized from the TRA, TPB, TAM, TAM2, DOI, motivational model, and social cognitive theory. | Extension of the UTAUT. | Derived from the TAM, UTAUT, and UTAUT2. |
| Context of its validation | Originally validated in the United States [86]. | Originally validated in the United States. | Originally validated in the United States [17]. | Originally validated in the United States. | Validated worldwide. |
| Explanatory power | Explained 40% of the variance in technology use intention [32]. | In four studies, TAM2 explained 34% to 52% of the variance in IT use [32]; in four studies, TAM3 explained 31% to 36% [36]. | Explained 40% of the variance in technology use. | Explained 52% of the variance in technology use. | Explains 51% of the variance in effort expectancy and 52% of the variance in technology acceptance and use. |
| General criticisms | Omits subjective norms and social influence on the core constructs (PU and PEU) and gives no guidance on how to make a technology more useful or easier to use. | Very complex because of the large number of factors incorporated. | The many moderators increase the model’s complexity, so it is often applied without them. | The many moderators increase the model’s complexity, so it is often applied without them. | Although developed primarily for developing countries, the model can also be applied worldwide. |
| Update | Developed in 1989 (35 years ago). | Developed in 2000 and 2008, respectively (24 and 16 years ago). | Developed in 2003 (21 years ago). | Developed in 2012 (12 years ago). | Developed in 2024. |
| Advantages | Has become the predominant model for studying technology adoption in educational contexts because it is easy to modify. | Demonstrated that a technology adoption model should not be overly complex or contain a large number of constructs. | Provides the highest explanatory power among the standard acceptance models, supporting the technology development process. | A good foundation for developing technology adoption models tailored to local contexts. | A general, up-to-date model for identifying and adopting technologies suited to educational settings in developing countries and elsewhere. |
| Appropriateness to education settings | Although used in education, the TAM may not fully address the complexities of technology adoption in this field. | Not appropriate for educational settings. | May need adaptation to better address the complexities of technology adoption in education [87]. | Should be extended or modified to better suit educational environments. | Developed specifically for educational purposes, to support sustainable education. |
| Appropriateness for developing countries | The TAM’s core constructs may not fully reflect the complexities of technology adoption in developing countries. | Not appropriate for developing countries. | Should include context-specific factors, such as societal aspects and cost-effectiveness, to better fit developing countries. | Needs adjustment for societal factors and cost-effectiveness to better fit developing countries. | Suitable for developing countries, as it was developed around the unique challenges and local contexts of these nations. |

| Type of Educational Technology | PE1: Features | PE2: Category | PV: Pricing | FC1: Required Devices | FC2: Download Size | EE: Users (Downloads) | SI1: Technology Maturity | SI2: Teacher-Approved | SI3: Reviews | SI4: Ratings on the Market |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Real-time engagement technologies (Quizlet) | Promote engagement, personalized learning, creativity, critical thinking, problem-solving skills | Education | USD 1.99–USD 35.99 | Computers, iPads, iPhones, iTouches, Android tablets, and smartphones | 39 MB | 10 M+ | Yes | Yes | 712 K | 4.7 |
| 2. Design and creativity technologies (Canva) | | Education | USD 1.49–USD 300 | | 27 MB | 100 M+ | Yes | Yes | 19.3 M | 4.8 |
| 3. Interactive learning labs (PhET Simulations) | | Education | USD 0.99 | | 123 MB | 50 K+ | Yes | Yes | 531 | 4.7 |
| 4. Language learning technology (Duolingo) | | Education | USD 0.99–USD 239.9 | | 81 MB | 500 M+ | Yes | Yes | 30.5 M | 4.7 |
| 5. Virtual reality and augmented reality (CamToPlan) | | Business and Education | Free–USD 17.99 | | 20 MB | 100 K+ | Yes | Yes | 7.38 K | 4.5 |
| 6. Robotics (Mio, the Robot) | | Education | Free | | 48 MB | 100 K+ | Yes | Yes | 1.25 K | 3.1 |
| 7. Game-based learning platforms (Kahoot) | | Education | Free | | 93 MB | 50 M+ | Yes | Yes | 751 K | 4.7 |
| 8. Learning management systems (Google Classroom) | | Education | Free | | 21.65 MB | 100 M+ | Yes | Yes | 2.04 M | 4.1 |
| 9. Interactive learning platforms (Nearpod) | | Education | Free | | 3 MB | 1 M+ | Yes | Yes | 7.04 K | 2.2 |
| 10. Open education resources (Khan Academy) | | Education | Free | | 28 MB | 10 M+ | Yes | Yes | 167 K | 4.2 |
| 11. Three-dimensional printing (Tinkercad) | | Education | Free | | | 100 K+ | Yes | Yes | 825 | 2.5 |

Note: SI4 values are star ratings.

| Type of Educational Technology | PE1 | PE2 | PE = (PE1 + PE2)/2 | PV | FC1 | FC2 | FC = (FC1 + FC2)/2 | EE | SI1 | SI2 | SI3 | SI4 | SI = (SI1 + SI2 + SI3 + SI4)/4 | Adoption Rate = (PE + PV + FC + EE + SI)/5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Real-time engagement technologies (Quizlet) | 4 | 4 | 4 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 5 | 4.7 | 4.5 |
| 2. Design and creativity technologies (Canva) | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4.8 |
| 3. Interactive learning labs (PhET Simulations) | 5 | 5 | 5 | 4 | 5 | 3 | 4 | 2 | 5 | 5 | 1 | 5 | 4 | 3.8 |
| 4. Language learning technology (Duolingo) | 5 | 4 | 4.5 | 4 | 5 | 4 | 4.5 | 5 | 5 | 5 | 5 | 5 | 5 | 4.6 |
| 5. Virtual reality and augmented reality (CamToPlan) | 5 | 5 | 5 | 5 | 4 | 5 | 4.5 | 3 | 5 | 5 | 1 | 5 | 4 | 4.3 |
| 6. Robotics (Mio, the Robot) | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 3 | 5 | 5 | 2 | 3 | 3.7 | 4.3 |
| 7. Game-based learning platforms (Kahoot) | 5 | 4 | 4.5 | 5 | 5 | 4 | 4.5 | 4 | 5 | 5 | 4 | 5 | 4.7 | 4.5 |
| 8. Learning management systems (Google Classroom) | 5 | 4 | 4.5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4.7 | 4.8 |
| 9. Interactive learning platforms (Nearpod) | 5 | 4 | 4.5 | 5 | 5 | 5 | 5 | 3 | 5 | 5 | 1 | 2 | 3.2 | 4.1 |
| 10. Open education resources (Khan Academy) | 5 | 4 | 4.5 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 4 | 4.5 | 4.6 |
| 11. Three-dimensional printing (Tinkercad) | 5 | 4 | 4.5 | 5 | 5 | 4 | 4.5 | 3 | 5 | 5 | 1 | 3 | 3.5 | 4.1 |

Note: Adoption rate values are expressed as star ratings.
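The adoption rate in the scoring table is a two-level average: each construct with sub-criteria (PE, FC, SI) is first averaged over its sub-scores, and the five construct scores PE, PV, FC, EE, and SI are then averaged. The Python sketch below recomputes those columns from the transcribed sub-scores. The `Row` container and the helper names are hypothetical, and the truncation step is an assumption inferred from the reported values (for example, SI1–SI4 = 5, 5, 4, 5 average to 4.75 but are reported as 4.7). Exact fractions are used so that truncation is not distorted by floating-point error.

```python
from dataclasses import dataclass
from fractions import Fraction
from math import floor


def trunc1(x: Fraction) -> Fraction:
    """Truncate a non-negative value to one decimal place.

    Assumption: reported values such as SI = 4.7 for a mean of 4.75 look
    truncated rather than rounded, so this sketch truncates.
    """
    return Fraction(floor(x * 10), 10)


def mean(values) -> Fraction:
    """Exact arithmetic mean; Fractions avoid floating-point drift."""
    values = [Fraction(v) for v in values]
    return sum(values, Fraction(0)) / len(values)


@dataclass(frozen=True)
class Row:
    """Hypothetical container for one technology's 1-5 sub-scores."""
    name: str
    pe: tuple  # (PE1, PE2)
    pv: int    # PV
    fc: tuple  # (FC1, FC2)
    ee: int    # EE
    si: tuple  # (SI1, SI2, SI3, SI4)


def adoption_rate(row: Row) -> dict:
    """Average sub-scores per construct, then average the five constructs."""
    pe = trunc1(mean(row.pe))
    fc = trunc1(mean(row.fc))
    si = trunc1(mean(row.si))
    rate = trunc1(mean([pe, row.pv, fc, row.ee, si]))
    return {"PE": pe, "FC": fc, "SI": si, "AR": rate}


# Sub-scores transcribed from the scoring table.
ROWS = [
    Row("Quizlet", (4, 4), 5, (5, 5), 4, (5, 5, 4, 5)),
    Row("Canva", (5, 5), 4, (5, 5), 5, (5, 5, 5, 5)),
    Row("PhET Simulations", (5, 5), 4, (5, 3), 2, (5, 5, 1, 5)),
    Row("Duolingo", (5, 4), 4, (5, 4), 5, (5, 5, 5, 5)),
    Row("CamToPlan", (5, 5), 5, (4, 5), 3, (5, 5, 1, 5)),
    Row("Mio, the Robot", (5, 5), 5, (5, 5), 3, (5, 5, 2, 3)),
    Row("Kahoot", (5, 4), 5, (5, 4), 4, (5, 5, 4, 5)),
    Row("Google Classroom", (5, 4), 5, (5, 5), 5, (5, 5, 5, 4)),
    Row("Nearpod", (5, 4), 5, (5, 5), 3, (5, 5, 1, 2)),
    Row("Khan Academy", (5, 4), 5, (5, 5), 4, (5, 5, 4, 4)),
    Row("Tinkercad", (5, 4), 5, (5, 4), 3, (5, 5, 1, 3)),
]

if __name__ == "__main__":
    for row in ROWS:
        r = adoption_rate(row)
        print(f"{row.name:<17} SI = {float(r['SI']):.1f}  adoption rate = {float(r['AR']):.1f}")
```

Under this truncation rule, the computed PE, FC, SI, and adoption-rate values agree with all eleven rows of the table; rounding half up instead would report Quizlet's SI as 4.8 rather than 4.7.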

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sabiteka, M.; Yu, X.; Sun, C. Toward Sustainable Education: A Contextualized Model for Educational Technology Adoption for Developing Countries. Sustainability 2025, 17, 3592. https://doi.org/10.3390/su17083592

Sabiteka M, Yu X, Sun C. Toward Sustainable Education: A Contextualized Model for Educational Technology Adoption for Developing Countries. Sustainability. 2025; 17(8):3592. https://doi.org/10.3390/su17083592

Chicago/Turabian Style: Sabiteka, Micheline, Xinguo Yu, and Chao Sun. 2025. "Toward Sustainable Education: A Contextualized Model for Educational Technology Adoption for Developing Countries" Sustainability 17, no. 8: 3592. https://doi.org/10.3390/su17083592

APA Style: Sabiteka, M., Yu, X., & Sun, C. (2025). Toward Sustainable Education: A Contextualized Model for Educational Technology Adoption for Developing Countries. Sustainability, 17(8), 3592. https://doi.org/10.3390/su17083592