Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications

Abstract

1. Introduction

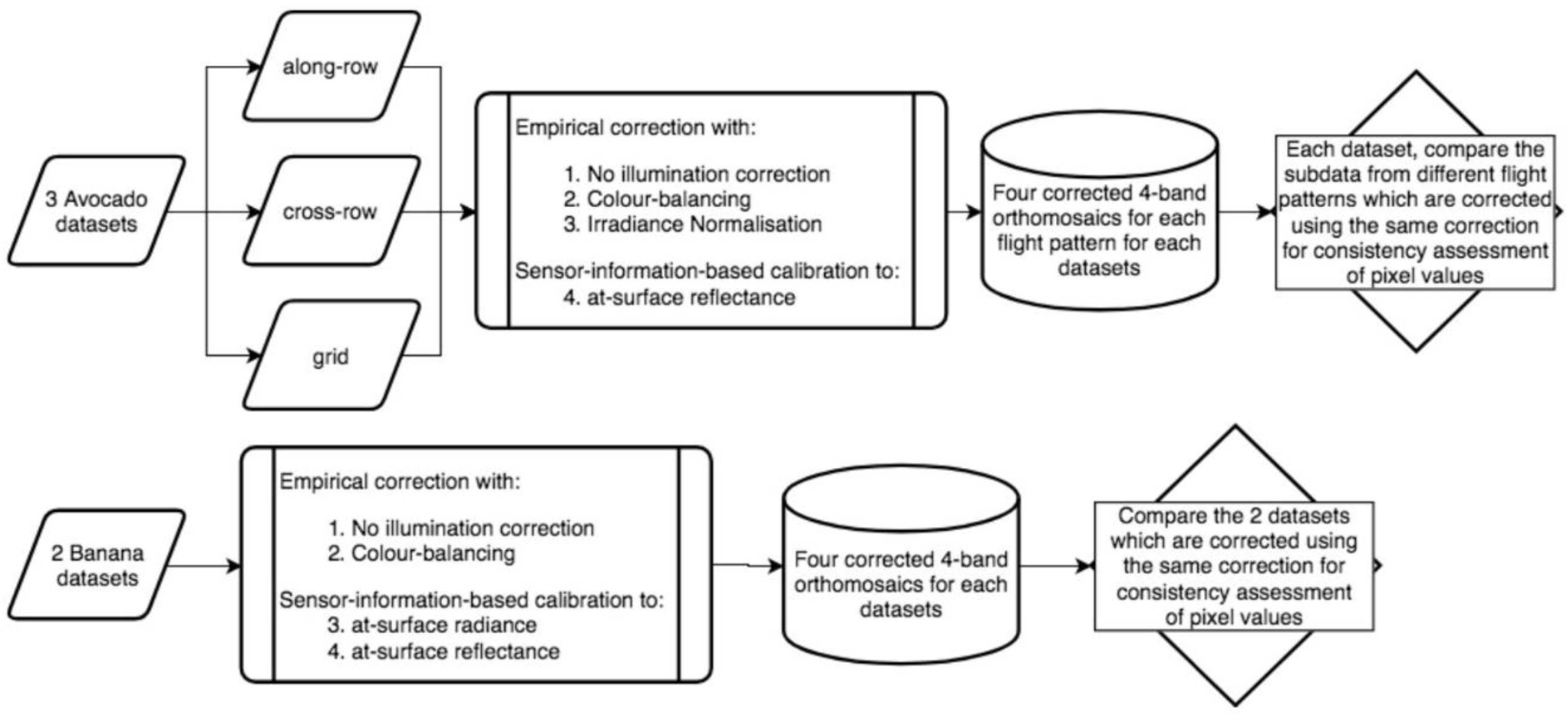

2. Materials and Methods

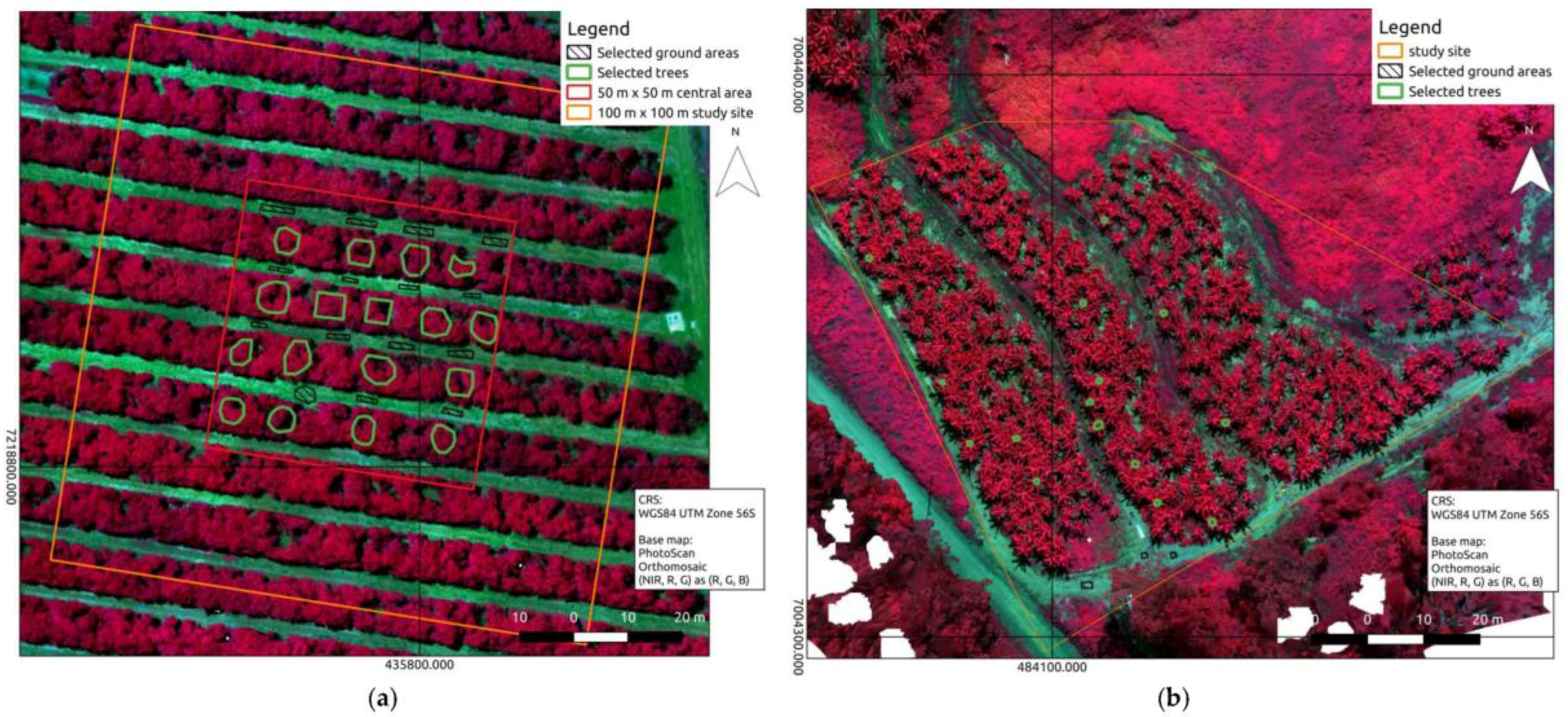

2.1. Study Sites

2.2. UAS Data Acquisition

2.3. Image Pre-Processing

2.3.1. Geometric Correction and Initial Pre-Processing

2.3.2. Producing Analysis-Ready Data

- Simplified empirical correction;

- Colour balancing before empirical correction;

- Irradiance normalisation before empirical correction; and

- Sensor-information based calibration.
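The first three workflows all end in an empirical step: a linear mapping from image digital numbers (DN) to reflectance, fitted to calibration targets of known reflectance. The sketch below is a generic empirical line fit. It is not necessarily the authors' simplified variant, which may, for example, use a single panel and a zero intercept. The function names, the panel values and the irradiance-normalisation helper are hypothetical. The sketch assumes DN have already been corrected for vignetting and exposure, and that each image carries an irradiance reading from an upward-looking sensor on the UAS.

```python
import numpy as np


def fit_empirical_line(panel_dn, panel_reflectance):
    """Fit reflectance = gain * DN + offset by least squares over calibration targets."""
    dn = np.asarray(panel_dn, dtype=float)
    refl = np.asarray(panel_reflectance, dtype=float)
    gain, offset = np.polyfit(dn, refl, deg=1)  # polyfit returns [slope, intercept]
    return gain, offset


def apply_empirical_line(dn, gain, offset):
    """Convert DN (scalar or array) to reflectance with a fitted line."""
    return gain * np.asarray(dn, dtype=float) + offset


def normalise_by_irradiance(dn, image_irradiance, reference_irradiance):
    """Hypothetical helper: rescale an image's DN to the illumination level
    recorded when the calibration targets were imaged, so that one empirical
    line can be applied to images captured under changing light."""
    return np.asarray(dn, dtype=float) * (reference_irradiance / image_irradiance)


# Hypothetical example: three targets with 5%, 20% and 35% reflectance.
gain, offset = fit_empirical_line([5000, 20000, 35000], [0.05, 0.20, 0.35])
# An image captured under half the reference irradiance is scaled up first.
dn_norm = normalise_by_irradiance(6000.0, image_irradiance=0.5, reference_irradiance=1.0)
print(apply_empirical_line(dn_norm, gain, offset))
```

With a single calibration panel the two-parameter line is underdetermined; one possible simplification is to force it through the origin, so that gain = panel reflectance / panel DN. That choice is an assumption here, not a detail stated in this section.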

2.3.3. Correcting for Solar and Viewing Geometries Within and Between Images—Bidirectional Reflectance Distribution Function (BRDF)
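Correcting for solar and viewing geometry generally means fitting a BRDF model to the observations and normalising each one to a common geometry. As an illustration only, the sketch below fits the simple form of Walthall et al. (1985), rho(theta_v, phi) = a * theta_v^2 + b * theta_v * cos(phi) + c, where theta_v is the view zenith angle and phi the relative azimuth between view and sun, and then rescales every observation to its modelled nadir value (theta_v = 0, where rho = c). Whether the proposed correction uses this model, a multiplicative normalisation, or per-band fits is an assumption, and all function names are hypothetical.

```python
import numpy as np


def walthall(theta_v, phi, a, b, c):
    """Walthall-type BRDF shape: rho = a*theta_v**2 + b*theta_v*cos(phi) + c.

    theta_v: view zenith angle (radians); phi: view-sun relative azimuth (radians).
    """
    theta_v = np.asarray(theta_v, dtype=float)
    return a * theta_v**2 + b * theta_v * np.cos(phi) + c


def fit_walthall(theta_v, phi, rho):
    """Linear least-squares estimate of (a, b, c) from per-pixel samples."""
    theta_v = np.asarray(theta_v, dtype=float)
    phi = np.asarray(phi, dtype=float)
    design = np.column_stack([theta_v**2, theta_v * np.cos(phi), np.ones_like(theta_v)])
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(rho, dtype=float), rcond=None)
    return coeffs  # array([a, b, c])


def normalise_to_nadir(rho, theta_v, phi, coeffs):
    """Scale each observation by (modelled nadir value) / (modelled value at its
    own geometry). A multiplicative normalisation, assumed rather than prescribed."""
    a, b, c = coeffs
    return np.asarray(rho, dtype=float) * c / walthall(theta_v, phi, a, b, c)


# Synthetic, noise-free example with hypothetical coefficients.
rng = np.random.default_rng(0)
tv = rng.uniform(0.0, 0.4, 200)          # view zenith up to about 23 degrees
ph = rng.uniform(0.0, 2 * np.pi, 200)    # relative azimuth
rho = walthall(tv, ph, 0.05, 0.02, 0.30)
coeffs = fit_walthall(tv, ph, rho)
print(coeffs, normalise_to_nadir(rho, tv, ph, coeffs)[:3])
```

Because the model is linear in a, b and c, ordinary least squares suffices; real imagery would add noise, mixed canopy and soil pixels, and possibly solar-zenith dependence that this form ignores.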

2.4. Assessment of Canopy Reflectance Consistency

5. For a sample size of 30, if this fraction is over 33%, there tends to be a difference.

6. For a sample size of 100, if this fraction is over 20%, there tends to be a difference.

7. For a sample size of 1000, if this fraction is over 10%, there tends to be a difference.
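Items 5 to 7 are the sample-size-dependent thresholds of a box-plot rule of thumb, but the quantity they call "this fraction" is defined in earlier steps that are not reproduced here. The sketch assumes it is the distance between medians as a proportion of the overall visible spread (DBM/OVS) described by Wild et al. (2011), with the overall visible spread taken from the lower of the two lower quartiles to the higher of the two upper quartiles. The handling of sample sizes between the listed values and all helper names are assumptions. The last helper shows one way percentages like those in the appendix tables could be produced, as the share of paired samples (for example, the same canopy imaged under two flight patterns) flagged as different; that reading of the tables is also an assumption.

```python
import numpy as np

# Thresholds quoted in items 5 to 7: (reference sample size, fraction).
RULES = [(30, 0.33), (100, 0.20), (1000, 0.10)]


def dbm_ovs(sample_a, sample_b):
    """Distance between medians / overall visible spread (assumed definition)."""
    q1a, meda, q3a = np.percentile(sample_a, [25, 50, 75])
    q1b, medb, q3b = np.percentile(sample_b, [25, 50, 75])
    overall_visible_spread = max(q3a, q3b) - min(q1a, q1b)
    if overall_visible_spread == 0:
        return 0.0  # both boxes collapse to the same point: no visible separation
    return abs(meda - medb) / overall_visible_spread


def threshold_for(n):
    """Assumption: use the rule for the largest listed sample size <= n
    (and the sample-size-30 rule for anything smaller)."""
    chosen = RULES[0][1]
    for size, fraction in RULES:
        if n >= size:
            chosen = fraction
    return chosen


def tends_to_differ(sample_a, sample_b):
    """Apply the threshold matching the smaller of the two sample sizes."""
    n = min(len(sample_a), len(sample_b))
    return bool(dbm_ovs(sample_a, sample_b) > threshold_for(n))


def percent_differing(pairs):
    """Hypothetical: share (%) of paired samples flagged as different."""
    flags = [tends_to_differ(a, b) for a, b in pairs]
    return 100.0 * sum(flags) / len(flags)


a = np.arange(100.0)
print(percent_differing([(a, a + 50), (a, a + 5)]))  # prints 50.0
```

Using the smaller sample size and the stricter threshold for sizes between the listed values errs towards not declaring a difference.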

3. Results

3.1. Reflectance Consistency Assessment of Avocado Imagery

3.2. Correction Consistency Assessment of Banana Imagery

3.3. BRDF Correction Consistency Assessment

4. Discussion

4.1. The Influences of Flight Altitude and Image Scale
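Flight altitude sets image scale through the ground sampling distance (GSD), which for a nadir view follows from similar triangles: GSD = H * p / f, with H the flying height above the surface, p the pixel pitch and f the focal length. Because the number of pixels per crown falls with the square of the GSD, altitude also controls the sample size entering each box-plot comparison. The sketch uses placeholder sensor values, not the specifications of the camera used in this study, and treats crowns as circles; both are assumptions, and the function names are hypothetical.

```python
import math


def ground_sampling_distance(altitude_m, pixel_pitch_um, focal_length_mm):
    """Nadir GSD in metres per pixel: altitude * pixel pitch / focal length."""
    return altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def pixels_in_crown(crown_diameter_m, gsd_m):
    """Approximate number of pixels covering a circular crown footprint."""
    crown_area_m2 = math.pi * (crown_diameter_m / 2.0) ** 2
    return int(crown_area_m2 / gsd_m**2)


# Placeholder sensor values (hypothetical, not the study camera's specifications).
PIXEL_PITCH_UM = 4.0
FOCAL_LENGTH_MM = 4.0

for altitude in (50.0, 100.0):
    gsd = ground_sampling_distance(altitude, PIXEL_PITCH_UM, FOCAL_LENGTH_MM)
    print(f"{altitude:.0f} m: GSD {gsd * 100:.1f} cm, "
          f"~{pixels_in_crown(5.0, gsd)} pixels per 5 m crown")
```

With these placeholder values, doubling the altitude from 50 m to 100 m doubles the GSD and cuts the pixel count per 5 m crown by roughly a factor of four.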

4.2. The Influence of Canopy Geometric Complexity on Reflectance Consistency

4.3. The Limitation of Simplified Empirical Correction

4.4. UAS Based Irradiance Measurements

4.5. Proposed BRDF Correction

4.6. Potential of Sensor-Information-Based Calibration

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Box-and-Whisker Comparison Results of Avocado Datasets

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 41.2% | 11.8% | 0% |
| | Red | 0% | 0% | 0% |
| | Red edge | 58.8% | 76.5% | 76.5% |
| | NIR | 82.4% | 94.1% | 70.6% |
| Colour balancing + Empirical correction | Green | 58.8% | 94.1% | 88.2% |
| | Red | 23.5% | 88.2% | 41.2% |
| | Red edge | 29.4% | 88.2% | 70.6% |
| | NIR | 47.1% | 76.5% | 82.4% |
| Irradiance normalisation + Empirical correction | Green | 0% | 47.1% | 0% |
| | Red | 0% | 0% | 0% |
| | Red edge | 76.5% | 70.6% | 82.4% |
| | NIR | 82.4% | 94.1% | 88.2% |
| Sensor-information-based calibration | Green | 47.1% | 100% | 82.4% |
| | Red | 0% | 52.9% | 0% |
| | Red edge | 47.1% | 0% | 0% |
| | NIR | 0% | 88.2% | 0% |

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 0% | 58.8% | 0% |
| | Red | 0% | 11.8% | 0% |
| | Red edge | 64.7% | 47.1% | 88.2% |
| | NIR | 64.7% | 70.6% | 76.5% |
| Colour balancing + Empirical correction | Green | 11.8% | 0% | 0% |
| | Red | 17.6% | 0% | 0% |
| | Red edge | 47.1% | 17.6% | 52.9% |
| | NIR | 11.8% | 82.4% | 11.8% |
| Irradiance normalisation + Empirical correction | Green | 47.1% | 58.8% | 76.5% |
| | Red | 23.5% | 5.9% | 5.9% |
| | Red edge | 47.1% | 70.6% | 94.1% |
| | NIR | 52.3% | 82.4% | 23.5% |
| Sensor-information-based calibration | Green | 0% | 0% | 0% |
| | Red | 0% | 23.5% | 0% |
| | Red edge | 0% | 100% | 0% |
| | NIR | 0% | 88.2% | 0% |

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 0% | 5.9% | 0% |
| | Red | 0% | 58.8% | 0% |
| | Red edge | 5.9% | 35.3% | 5.9% |
| | NIR | 5.9% | 82.4% | 0% |
| Colour balancing + Empirical correction | Green | 0% | 52.9% | 0% |
| | Red | 0% | 17.6% | 0% |
| | Red edge | 23.5% | 29.4% | 17.6% |
| | NIR | 0% | 76.5% | 0% |
| Irradiance normalisation + Empirical correction | Green | 0% | 0% | 0% |
| | Red | 0% | 52.9% | 0% |
| | Red edge | 29.4% | 52.9% | 70.6% |
| | NIR | 0% | 88.2% | 0% |
| Sensor-information-based calibration | Green | 0% | 5.9% | 0% |
| | Red | 0% | 0% | 0% |
| | Red edge | 0% | 88.2% | 0% |
| | NIR | 0% | 64.7% | 0% |

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 33.3% | 0% | 0% |
| | Red | 0% | 0% | 0% |
| | Red edge | 33.3% | 60% | 40% |
| | NIR | 40% | 66.6% | 60% |
| Colour balancing + Empirical correction | Green | 0% | 0% | 6.7% |
| | Red | 13.3% | 13.3% | 20% |
| | Red edge | 20% | 40% | 33.3% |
| | NIR | 40% | 73.3% | 46.7% |
| Irradiance normalisation + Empirical correction | Green | 33.3% | 20% | 0% |
| | Red | 0% | 6.7% | 0% |
| | Red edge | 26.7% | 40% | 26.7% |
| | NIR | 13.3% | 53.3% | 33.3% |
| Sensor-information-based calibration | Green | 40% | 73.3% | 20% |
| | Red | 0% | 53.3% | 0% |
| | Red edge | 6.7% | 0% | 0% |
| | NIR | 13.3% | 60% | 6.7% |

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 26.7% | 6.7% | 26.7% |
| | Red | 6.7% | 6.7% | 6.7% |
| | Red edge | 66.7% | 26.7% | 40% |
| | NIR | 13.3% | 6.7% | 60% |
| Colour balancing + Empirical correction | Green | 0% | 0% | 0% |
| | Red | 0% | 6.7% | 6.7% |
| | Red edge | 33.3% | 6.7% | 26.7% |
| | NIR | 26.7% | 40% | 26.7% |
| Irradiance normalisation + Empirical correction | Green | 0% | 26.7% | 40% |
| | Red | 0% | 0% | 20% |
| | Red edge | 60% | 40% | 20% |
| | NIR | 40% | 46.7% | 46.7% |
| Sensor-information-based calibration | Green | 6.7% | 0% | 0% |
| | Red | 13.3% | 40% | 6.7% |
| | Red edge | 6.7% | 53.3% | 0% |
| | NIR | 6.7% | 40% | 0% |

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| Empirical correction | Green | 0% | 0% | 0% |
| | Red | 0% | 13.3% | 0% |
| | Red edge | 0% | 40% | 6.7% |
| | NIR | 0% | 93.3% | 0% |
| Colour balancing + Empirical correction | Green | 0% | 20% | 0% |
| | Red | 20% | 0% | 0% |
| | Red edge | 26.7% | 6.7% | 26.7% |
| | NIR | 0% | 46.7% | 0% |
| Irradiance normalisation + Empirical correction | Green | 0% | 0% | 0% |
| | Red | 6.7% | 6.7% | 0% |
| | Red edge | 40% | 40% | 33.3% |
| | NIR | 0% | 60% | 0% |
| Sensor-information-based calibration | Green | 0% | 20% | 0% |
| | Red | 0% | 0% | 0% |
| | Red edge | 0% | 73.3% | 0% |
| | NIR | 0% | 6.7% | 0% |

Appendix B. Box-and-Whisker Comparison Results of Banana Datasets

| Correction Method | Band | Canopies | Ground |
|---|---|---|---|
| Empirical correction | Green | 46.7% | 30% |
| | Red | 60% | 20% |
| | Red edge | 66.7% | 20% |
| | NIR | 100% | 100% |
| Colour balancing + Empirical correction | Green | 46.7% | 20% |
| | Red | 40% | 30% |
| | Red edge | 66.7% | 20% |
| | NIR | 73.3% | 30% |
| Sensor-information-based calibrated surface reflectance | Green | 33.3% | 0% |
| | Red | 20% | 0% |
| | Red edge | 13.3% | 0% |
| | NIR | 0% | 0% |
| Sensor-information-based calibrated arbitrary surface radiance | Green | 73.3% | 20% |
| | Red | 66.7% | 30% |
| | Red edge | 26.7% | 40% |
| | NIR | 40% | 40% |

References

- Sellers, P.J. Canopy reflectance, photosynthesis, and transpiration, II. The role of biophysics in the linearity of their interdependence. Remote Sens. Environ. 1987, 21, 143–183.

- Lillesand, T.M.; Kiefer, R.W.; Chipman, J.W. (Eds.) Remote Sensing and Image Interpretation, 7th ed.; Wiley: Hoboken, NJ, USA, 2015.

- Heege, H.J. Precision in Crop Farming: Site Specific Concepts and Sensing Methods: Applications and Results; Springer: Berlin, Germany, 2013; p. 356. ISBN 978-94-007-6760-7.

- Jensen, J.R. Introductory Digital Image Processing: A Remote Sensing Perspective, 4th ed.; Pearson Education, Inc.: Glenview, IL, USA, 2016.

- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.

- Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-Throughput 3-D Monitoring of Agricultural-Tree Plantations with Unmanned Aerial Vehicle (UAV) Technology. PLoS ONE 2015, 10, e0130479.

- Herwitz, S.R.; Dunagan, S.; Sullivan, D.; Higgins, R.; Johnson, L.; Zheng, J.; Slye, R.; Brass, J.; Leung, J.; Gallmeyer, B. Solar-powered UAV mission for agricultural decision support. In Proceedings of the 2003 IEEE International Geoscience and Remote Sensing Symposium (IGARSS’03), Toulouse, France, 21–25 July 2003; pp. 1692–1694.

- Johansen, K.; Raharjo, T.; McCabe, M. Using Multi-Spectral UAV Imagery to Extract Tree Crop Structural Properties and Assess Pruning Effects. Remote Sens. 2018, 10, 854.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.

- Comba, L.; Gay, P.; Primicerio, J.; Aimonino, D.R. Vineyard detection from unmanned aerial systems images. Comput. Electron. Agric. 2015, 114, 78–87.

- Chianucci, F.; Disperati, L.; Guzzi, D.; Bianchini, D.; Nardino, V.; Lastri, C.; Rindinella, A.; Corona, P. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 60–68.

- Iqbal, F.; Lucieer, A.; Barry, K. Simplified radiometric calibration for UAS-mounted multispectral sensor. Eur. J. Remote Sens. 2018, 51, 301–313.

- Wang, C.; Myint, S.W. A Simplified Empirical Line Method of Radiometric Calibration for Small Unmanned Aircraft Systems-Based Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1876–1885.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring from an Unmanned Aerial Vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Parrot (Ed.) Application Note: Pixel Value to Irradiance Using the Sensor Calibration Model; Parrot: Paris, France, 2017; Volume SEQ-AN-01.

- Hakala, T.; Honkavaara, E.; Saari, H.; Mäkynen, J.; Kaivosoja, J.; Pesonen, L.; Pölönen, I. Spectral imaging from UAVs under varying illumination conditions. In UAV-g2013; Grenzdörffer, G., Bill, R., Eds.; International Society for Photogrammetry and Remote Sensing (ISPRS): Rostock, Germany, 2013; Volume XL-1/W2, pp. 189–194.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Scarth, P. A Methodology for Scaling Biophysical Models; The University of Queensland, School of Geography, Planning and Architecture: St Lucia, Australia, 2003.

- Wulder, M. Optical remote-sensing techniques for the assessment of forest inventory and biophysical parameters. Prog. Phys. Geogr. Earth Environ. 1998, 22, 449–476.

- Marceau, D.J.; Howarth, P.J.; Gratton, D.J. Remote sensing and the measurement of geographical entities in a forested environment. 1. The scale and spatial aggregation problem. Remote Sens. Environ. 1994, 49, 93–104.

- Novaković, P.; Hornak, M.; Zachar, M. 3D Digital Recording of Archaeological, Architectural and Artistic Heritage; Knjigarna Filozofske Fakultete: Ljubljana, Slovenija, 2017; ISBN 978-961-237-898-1.

- Parrot (Ed.) Application Note: How to Correct Vignetting in Images; Parrot: Paris, France, 2017; Volume SEQ-AN-02.

- Pix4D. Camera Radiometric Correction Specifications. Available online: https://support.pix4d.com/hc/en-us/articles/115001846106-Camera-radiometric-correction-specifications (accessed on 23 January 2018).

- Pasumansky, A. Topic: Questions about New Calibrate Color Feature in 1.4. Available online: http://www.agisoft.com/forum/index.php?topic=8284.msg39660#msg39660 (accessed on 23 January 2018).

- Pasumansky, A. Topic: About “Build Orthomosaic”. Available online: http://www.agisoft.com/forum/index.php?topic=7000.0 (accessed on 20 May 2017).

- Walthall, C.L.; Norman, J.M.; Welles, J.M.; Campbell, G.; Blad, B.L. Simple equation to approximate the bidirectional reflectance from vegetative canopies and bare soil surfaces. Appl. Opt. 1985, 24, 383–387.

- Hapke, B. Bidirectional reflectance spectroscopy: 1. Theory. J. Geophys. Res. Solid Earth 1981, 86, 3039–3054.

- Moran, M.S.; Jackson, R.D.; Slater, P.N.; Teillet, P.M. Evaluation of simplified procedures for retrieval of land surface reflectance factors from satellite sensor output. Remote Sens. Environ. 1992, 41, 169–184.

- Wild, C.J.; Pfannkuch, M.; Regan, M.; Horton, N.J. Towards more accessible conceptions of statistical inference. J. R. Stat. Soc. 2011, 174, 247–295.

- Johansen, K.; Duan, Q.; Tu, Y.-H.; Searle, C.; Wu, D.; Phinn, S.; Robson, A. Mapping the Condition of Macadamia Tree Crops Using Multi-spectral Drone and WorldView-3 Imagery. In Proceedings of the International Tri-Conference for Precision Agriculture in 2017 (PA17), Hamilton, New Zealand, 16–18 October 2017.

- Robson, A.; Rahman, M.M.; Muir, J.; Saint, A.; Simpson, C.; Searle, C. Evaluating satellite remote sensing as a method for measuring yield variability in Avocado and Macadamia tree crops. Adv. Anim. Biosci. 2017, 8, 498–504.

- Dymond, J.R.; Shepherd, J.D. Correction of the Topographic Effect in Remote Sensing. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2618–2620.

- Gu, D.; Gillespie, A. Topographic Normalization of Landsat TM Images of Forest Based on Subpixel Sun–Canopy–Sensor Geometry. Remote Sens. Environ. 1998, 64, 166–175.

- Coulson, K.L.; Bouricius, G.M.; Gray, E.L. Optical reflection properties of natural surfaces. J. Geophys. Res. 1965, 70, 4601–4611.

- Verhoef, W.; Bach, H. Coupled soil–leaf-canopy and atmosphere radiative transfer modeling to simulate hyperspectral multi-angular surface reflectance and TOA radiance data. Remote Sens. Environ. 2007, 109, 166–182.

- Gao, F.; Schaaf, C.B.; Strahler, A.H.; Jin, Y.; Li, X. Detecting vegetation structure using a kernel-based BRDF model. Remote Sens. Environ. 2003, 86, 198–205.

- Lucht, W.; Schaaf, C.B.; Strahler, A.H. An Algorithm for the Retrieval of Albedo from Space Using Semiempirical BRDF Models. IEEE Trans. Geosci. Remote Sens. 2000, 38, 977–998.

- Goetz, A.F.H. Making Accurate Field Spectral Reflectance Measurements; ASD Inc.: Longmont, CO, USA, 2012.

- González-Piqueras, J.; Sánchez, S.; Villodre, J.; López, H.; Calera, A.; Hernández-López, D.; Sánchez, J.M. Radiometric Performance of Multispectral Camera Applied to Operational Precision Agriculture; Universidad de Castilla-La Mancha: Ciudad Real, Spain, 2018.

- Bendig, J.; Gautam, D.; Malenovský, Z.; Lucieer, A. Influence of Cosine Corrector and UAS Platform Dynamics on Airborne Spectral Irradiance Measurements. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018.

- Ma, X.; Geng, J.; Wang, H. Hyperspectral image classification via contextual deep learning. EURASIP J. Image Video Process. 2015, 2015, 1–12.

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Hung, C.; Bryson, M.; Sukkarieh, S. Multi-class predictive template for tree crown detection. ISPRS J. Photogramm. Remote Sens. 2012, 68, 170–183.

- Del Pozo, S.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Felipe-García, B. Vicarious Radiometric Calibration of a Multispectral Camera on Board an Unmanned Aerial System. Remote Sens. 2014, 6, 1918–1937.

- Hiscocks, P.D. Measuring Luminance with a Digital Camera. Syscomp Electron. Des. Ltd. 2011, 16, 6–16.

- Dandois, J.P.; Olano, M.; Ellis, E.C. Optimal Altitude, Overlap, and Weather Conditions for Computer Vision UAV Estimates of Forest Structure. Remote Sens. 2015, 7, 13895–13920.

| Correction Method | Band | Along vs. Cross | Along vs. Grid | Cross vs. Grid |
|---|---|---|---|---|
| BRDF + Colour balancing + Empirical correction on canopies | Green | 0% (same) | 0% (same) | 5.9% (better) |
| | Red | 0% (same) | 0% (same) | 0% (same) |
| | Red edge | 52.9% (better) | 88.2% (better) | 64.7% (better) |
| | NIR | 41.2% (better) | 17.6% (worse) | 64.7% (better) |
| BRDF + Colour balancing + Empirical correction on ground | Green | 0% (same) | 0% (same) | 6.7% (better) |
| | Red | 0% (same) | 0% (worse) | 26.7% (better) |
| | Red edge | 0% (worse) | 26.7% (better) | 0% (worse) |
| | NIR | 6.7% (worse) | 6.7% (worse) | 46.7% (better) |

| Correction Method | Band | Canopies | Ground |
|---|---|---|---|
| BRDF + Sensor-information-based calibrated surface reflectance | Green | 46.7% (better) | 10% (better) |
| | Red | 26.7% (better) | 0% (same) |
| | Red edge | 26.7% (better) | 0% (same) |
| | NIR | 33.3% (better) | 0% (same) |
| BRDF + Sensor-information-based calibrated arbitrary surface radiance | Green | 33.3% (worse) | 10% (worse) |
| | Red | 33.3% (worse) | 40% (better) |
| | Red edge | 53.3% (better) | 0% (worse) |
| | NIR | 20% (worse) | 20% (worse) |

| Correction Method | Avocado G | Avocado R | Avocado RE | Avocado NIR | Banana G | Banana R | Banana RE | Banana NIR |
|---|---|---|---|---|---|---|---|---|
| Simplified empirical correction | X | X | V | V | O | O | V | V |
| Colour-balancing + empirical correction | X | X | Δ | Δ | O | O | V | V |
| Irradiance normalisation + empirical correction | X | X | Δ | Δ | | | | |
| Sensor-information-based calibrated reflectance | X | X | X | X | X | X | X | X |
| Sensor-information-based calibrated radiance | | | | | V | V | X | O |

Remarks: None of them works when flight altitude is 50 m.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tu, Y.-H.; Phinn, S.; Johansen, K.; Robson, A. Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications. Remote Sens. 2018, 10, 1684. https://doi.org/10.3390/rs10111684

Tu Y-H, Phinn S, Johansen K, Robson A. Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications. Remote Sensing. 2018; 10(11):1684. https://doi.org/10.3390/rs10111684

Chicago/Turabian Style: Tu, Yu-Hsuan, Stuart Phinn, Kasper Johansen, and Andrew Robson. 2018. "Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications" Remote Sensing 10, no. 11: 1684. https://doi.org/10.3390/rs10111684

APA Style: Tu, Y.-H., Phinn, S., Johansen, K., & Robson, A. (2018). Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications. Remote Sensing, 10(11), 1684. https://doi.org/10.3390/rs10111684