Abstract

This study aimed to characterize vineyard vegetation through multi-temporal monitoring using a commercial low-cost rotary-wing unmanned aerial vehicle (UAV) equipped with a consumer-grade red/green/blue (RGB) sensor. Ground-truth data and UAV-based imagery were acquired on nine distinct dates over two selected vineyard plots, covering the most significant part of the vegetative growing cycle up to the harvesting season. For each flight, photogrammetric processing of the UAV-based imagery produced an orthophoto mosaic, used for vegetation estimation, and digital elevation models, used to compute crop surface models. By filtering vegetation within a given height range, it was possible to separate grapevine vegetation from the other vegetation present in a vineyard plot, enabling the estimation of grapevine area and volume. The results showed high accuracy in grapevine detection (94.40%) and low error in grapevine volume estimation (with height estimates showing a root mean square error of 0.13 m and a correlation coefficient of 0.78). The accuracy assessment showed that the proposed method based on UAV-based RGB imagery is effective and has the potential to become an operational technique. The proposed method also allows the estimation of grapevine areas that can potentially benefit from canopy management operations.

1. Introduction

As with precision agriculture (PA), precision viticulture (PV) depends on the adoption of emerging technologies to acquire data that allow the assessment of field variability to support the PV decision-making process [1,2]. Grapevine (Vitis vinifera L.) yield varies both spatially and temporally [3], and several field- and crop-related factors can influence it, such as soil, terrain topography, and microclimate conditions. Therefore, it is important to have information that supports site-specific operations for each management zone identified within a vineyard [2,3,4,5].

Canopy management is critical for improving grapevine yield and wine quality [6], as it influences canopy size and vigour and reduces phytosanitary problems [7]. As such, it is important to estimate above-ground biomass (AGB), which helps to monitor plant status and can potentially provide a yield forecast [8]. Grapevine biomass can be estimated through crop models [9] that use leaf area, global solar radiation, and air temperature [10], or from vegetation indices, which correlate with several grapevine biophysical parameters [11]. More direct methods to estimate biomass require accurate field measurements and involve destructive processes [12,13].

Remote sensing is an effective solution, allowing the acquisition of several types of data at various spatial and temporal resolutions. Specifically, unmanned aerial systems (UAS) are considered cost-effective, able to acquire the needed data at the needed time and place, and able to provide greater spatial resolution than other remote sensing platforms, such as satellites and manned aircraft [14,15]. Several research studies have successfully applied UAS-based remote sensing in distinct vineyard monitoring contexts by coupling different sensors, such as red/green/blue (RGB), multispectral, thermal, and hyperspectral sensors and Light Detection And Ranging (LIDAR), to unmanned aerial vehicles (UAVs) for the assessment of potential phytosanitary problems [16], water status assessment [17,18,19], leaf area index (LAI) calculation [20], and the estimation of grapevine biophysical parameters [21].

Several studies explored UAV-based plant monitoring using hyperspectral sensors, namely for biomass and nitrogen estimation in wheat [22,23] and in grassland under different treatments [24], for rice paddy characterization [25], and, combined with UAV-based RGB photogrammetry, for tree identification and phytosanitary damage mapping [26,27]. In Kalisperakis et al. [20], a high correlation was found between a vineyard’s canopy greenness map, computed from hyperspectral data, and the three-dimensional (3D) canopy model. However, some current hyperspectral data acquisition technologies (e.g., push-broom sensors) do not support structure from motion (SfM), which makes the estimation of geometric parameters difficult [28]. These sensors are also highly sensitive to cloud cover [22], which can lead to over- or underexposure and affect data reliability. LIDAR sensors have proven their usefulness and precision when applied to forest inventory [29], individual tree detection [30], and forest understory studies [31]. Despite providing high accuracy, they are costly [32].

UAV-based RGB imagery stands out as a cost-effective solution, providing reasonable precision compared with LIDAR [33]. Sensor fusion was the focus of other studies, such as Sankey et al. [34], where hyperspectral and LIDAR sensors were used together for forest and vegetation monitoring [35]. Cost-effective sensors (RGB and multispectral) have been used for biomass estimation and parameter extraction in different contexts, such as pasture lands [36] and sunflower crops [37] (both using near-infrared (NIR) data), maize [38,39], winter wheat [40], barley [8,41], and vegetable crops [42,43]. These sensors have also proven suitable for tree detection and height estimation [32,44] and for diameter at breast height estimation [45].

Regarding vineyard AGB estimation, Mathews and Jensen [46] used UAV-based imagery with SfM algorithms to compute a vineyard’s point cloud and generate a canopy structure model. The authors stated that SfM-based point clouds can be used to estimate volumetric variables, such as AGB. Providing this type of data throughout the different grapevine phenological stages would therefore help winegrowers assess the spatial variation of grapevine canopies [46,47]. Weiss and Baret [48] used dense point clouds, generated through photogrammetric processing of UAV-based RGB imagery, to characterize vineyard properties such as grapevine row height, width, spacing, and cover fraction. Matese et al. [21] used a multispectral sensor mounted on a UAV to assess the photogrammetric processing of multispectral imagery. The authors found that higher normalized difference vegetation index (NDVI) [49] values matched the areas where grapevines were located, demonstrating the effectiveness of UAV-based data for vineyard mapping. Grapevine volume was estimated by considering three classes of grapevine height, width, and length. However, the low resolution of the multispectral sensor caused a smoothing effect in the digital surface model (DSM) of the evaluated vineyard plot. Caruso et al. [50] used a UAV equipped with RGB and NIR sensors to relate biophysical and geometrical parameters of grapevines in high-, medium-, and low-vigour zones of a vineyard, determined from the NDVI. Volume was calculated for the lower, middle, and upper parts of the grapevines, and UAV-based data were acquired in four periods: May, June, July, and August. De Castro et al. [51] proposed an approach in which a DSM computed from photogrammetric processing of UAV-based RGB imagery was used in object-based image analysis (OBIA) software to compute individual vineyard parameters. Compared with Matese et al. [21], the smoothing effect was less significant.
The authors stated that multi-temporal monitoring of grapevine biophysical parameters using UAV-based data can be both efficient and accurate, constituting a viable alternative to time-consuming, laborious, and inconsistent manual in-field measurements.

This article supports the findings of De Castro et al. [51] regarding the relevance of using multi-temporal data acquired from remote sensing platforms in PV to monitor the size, shape, and vigour of grapevine canopies. This study aimed to characterize the evolution of vineyard vegetation through multi-temporal analysis using a commercial low-cost rotary-wing UAV equipped with an RGB sensor, which enables the acquisition of very high-resolution imagery with a ground sample distance (GSD) down to a few millimetres. The multi-temporal data acquired over the area of interest (AOI) were automatically analysed by extracting several vineyard parameters: grapevine vegetation was estimated non-invasively through its area and volume, and vineyard areas in need of canopy management operations were identified.

2. Materials and Methods

This section presents the study area, the UAS used, and the methods applied to acquire, process, and interpret the UAV-based imagery. The methodology followed in this study was proposed by Pádua et al. [52] for multi-temporal crop analysis of UAV-based data. It is based on three main stages: vegetation segmentation, parameter extraction, and multi-temporal analysis.

2.1. Study Area Context and Description

Typical Vitis vinifera L. phenological stages are well defined and occur within known time periods that depend on geographical context. In Portugal, budburst occurs from March to April, followed by flowering and intensive vegetative growth between May and June. Veraison follows, usually between July and August, marking the start of grape ripening. Fruit maturity and harvest typically occur between September and October. In the remaining months, grapevines are dormant [53]. However, the timing of these stages may vary slightly depending on environmental conditions and grapevine variety [54]. Warm and dry Portuguese summers can limit crop growth through reduced water availability [55]. To improve both fruit quality and yield, canopy management practices are applied, involving different operations throughout the year: pruning, shoot thinning, leaf removal, cover crop cultivation, irrigation scheduling, and the application of soil and crop amendments [56]. For UAV-based aerial surveys of vineyards, data should be acquired after budburst, once grapevine leaves become noticeable; these data can then be used to monitor vineyard vegetation growth.

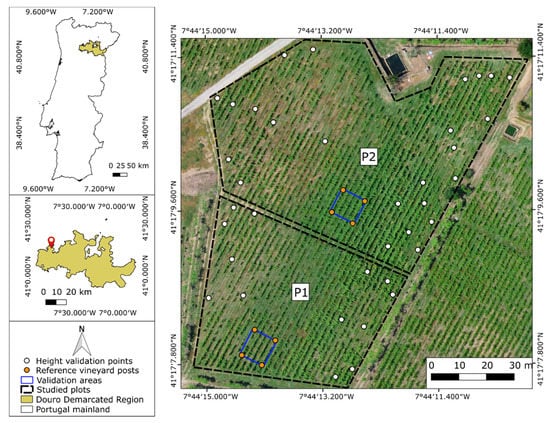

Two experimental vineyard plots were selected as the AOI for this work. Figure 1 presents an overview of both plots, located at the University of Trás-os-Montes e Alto Douro campus in Vila Real, Portugal (41°17′09.7″N, 7°44′12.9″W). Plot 1 (P1) had an area of 0.33 ha and was composed of red grapevine varieties. Plot 2 (P2) had an area of about 0.55 ha and contained white grapevine varieties. The grapevine varieties planted in both plots are recommended for the Douro Demarcated Region (DDR), where this study took place. Grapevines were planted in 1995 in parallel rows spaced 2 m apart, with 1.2 m between plants within a row. They were trained in a vertical shoot positioning (VSP) system with double Guyot pruning, one of the most commonly used training systems in the DDR [57].

Figure 1.

Area of interest (AOI) general overview: analysed vineyard plots, validation areas, height validation points, and their location in the Douro Demarcated Region, coordinates in WGS84 (EPSG:4326).

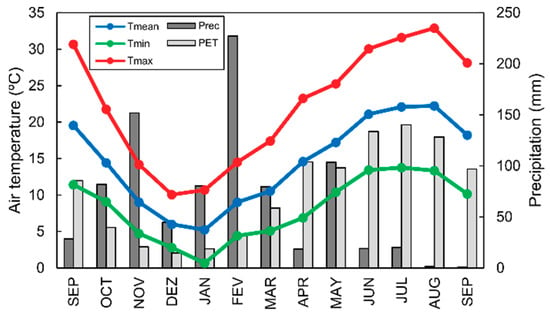

Weather conditions provide the context needed to interpret the results of this study. Therefore, monthly precipitation, potential evapotranspiration (PET), and mean, minimum, and maximum air temperatures were obtained from an automatic weather station (iMETOS 1, Pessl Instruments GmbH, Weiz, Austria) located 300 m from the AOI. Figure 2 presents the monthly means of the daily air temperatures and the monthly accumulated precipitation and PET for the period from September 2016 to September 2017.

Figure 2.

Monthly mean weather variables for the study areas in the period between September 2016 and September 2017: mean (Tmean), minimum (Tmin) and maximum (Tmax) air temperatures, and precipitation (Prec) and potential evapotranspiration (PET) values.

The high air temperatures during summer 2017, together with low precipitation in winter 2016 and spring 2017, caused a drought in Portugal and earlier grape maturation in the DDR. As a result, harvesting was brought forward to between late August and mid-September, about two to three weeks earlier than usual. In the AOI, harvesting occurred in mid-September. This can be explained by comparing the weather data against the climatological normal of Vila Real (retrieved from the Instituto Português do Mar e da Atmosfera, IPMA, Lisbon, Portugal) for the period from 1981 to 2010. Relative to the climatological normal, the one-year period showed a difference of +3.2 °C in the maximum air temperature (+4.1 °C for the period of the flight surveys), +0.6 °C in the mean air temperature (+1.1 °C for the period of the flight surveys), −0.7 °C in the minimum air temperature (−0.6 °C for the period of the flight surveys), and approximately 220 mm less accumulated precipitation.

2.2. Flight Campaigns

A commercial UAV, the DJI Phantom 4 (DJI, Shenzhen, China), was used in this study for data acquisition. It is a flexible and cost-effective off-the-shelf solution, able to perform manual or fully automatic flights in different configurations through a set of user-defined waypoints. The UAS consists of this multi-rotor UAV equipped with a rolling-shutter 1/2.3″ CMOS sensor mounted on a 3-axis electronic gimbal, which acquires 12.4 MP RGB imagery.

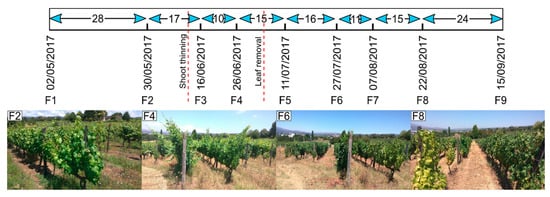

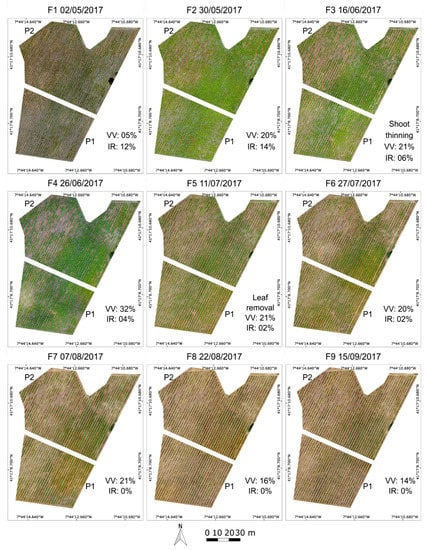

Nine aerial campaigns were carried out over the selected plots between 2 May and 15 September 2017. Details about these flight campaigns are presented in Figure 3. This flight schedule covered most of the plants’ phenological development up to the harvesting season. Canopy management operations in the studied vineyard plots were carried out by the farmers, and the aerial surveys were conducted within one week after each operation ended. All flights were conducted between 1:00 p.m. and 2:00 p.m. to minimize the influence of sun angle and shadows. A double-grid configuration was used when planning each flight campaign to ensure a high overlap (75%) between images. The flight height relative to the UAV take-off position was set to 60 m.
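The flight height (60 m) and overlap (75%) above determine the native image resolution and the spacing between flight lines. The sketch below reproduces that arithmetic for a nadir camera. The camera constants (6.17 mm sensor width, 3.61 mm focal length, 4000 px image width) are assumed nominal Phantom 4 values, not figures reported in this study, and the function names are hypothetical.

```python
"""Nadir-camera GSD and flight-line spacing (illustrative sketch).

Camera constants are ASSUMED nominal DJI Phantom 4 values, not figures
reported in the study.
"""

SENSOR_WIDTH_MM = 6.17  # assumed 1/2.3-inch sensor width
FOCAL_LENGTH_MM = 3.61  # assumed real (not 35 mm-equivalent) focal length
IMAGE_WIDTH_PX = 4000   # assumed image width in pixels


def ground_sample_distance(height_m: float) -> float:
    """GSD in metres per pixel for a nadir image taken at height_m."""
    return (SENSOR_WIDTH_MM * height_m) / (FOCAL_LENGTH_MM * IMAGE_WIDTH_PX)


def flight_line_spacing(height_m: float, side_overlap: float) -> float:
    """Distance (m) between adjacent flight lines for a side overlap in [0, 1)."""
    footprint_m = ground_sample_distance(height_m) * IMAGE_WIDTH_PX
    return footprint_m * (1.0 - side_overlap)


if __name__ == "__main__":
    print(f"GSD at 60 m: {ground_sample_distance(60.0) * 100:.2f} cm/px")
    print(f"Line spacing at 75% overlap: {flight_line_spacing(60.0, 0.75):.1f} m")
```

Under these assumptions the native GSD at 60 m is roughly 2.6 cm/px, in line with the 3 cm GSD of the processed outputs; terrain relief relative to the take-off point changes the effective height and therefore the GSD.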

Figure 3.

Flight campaign details: flight number (F#), date, temporal difference in days between flights, and the vineyard canopy management operations performed (dashed lines). Images of plot 2 from the different flight campaigns are also provided.

2.3. Data Processing

The imagery acquired in each flight underwent photogrammetric processing using SfM algorithms to compute different orthorectified outputs, which were used to segment the vineyards and extract their features. This enabled a multi-temporal analysis of the AOI, along with the estimation of areas that potentially need canopy management operations.

2.3.1. Photogrammetric Processing

Photogrammetric processing was applied to the high-resolution aerial imagery using Pix4Dmapper Pro (Pix4D SA, Lausanne, Switzerland). This software generates different orthorectified outputs, such as orthophoto mosaics, DSMs, and digital terrain models (DTMs).

The processing involved three main stages. (1) A sparse point cloud was generated using SfM algorithms, which establish relationships between the geotagged RGB images by matching corresponding points (tie points) across multiple images and thereby estimate their 3D positions. In this study, the outputs of all flights were aligned by placing five manual tie points in areas that were clearly identifiable in the imagery of every flight campaign. This ensured that all generated outputs shared the same relative latitude, longitude, and altitude coordinates, differing only in surface changes as the vegetation developed. (2) A dense point cloud was then generated from the computed tie points by increasing the number of candidate points (point density was set to high). (3) Finally, the orthorectified outputs, namely orthophoto mosaics, DSMs, and DTMs, were computed by applying a noise filter to the dense point cloud and interpolating it with a triangulation algorithm. Since the mission plan was the same in all flights, photogrammetric processing produced orthophoto mosaics, DSMs, and DTMs with a GSD of 3 cm.

2.3.2. Vineyard Properties Extraction

Besides grapevine vegetation, inter-row vegetation and shadows cast by grapevine canopies are two examples of elements usually present in vineyard aerial imagery [58]. To automatically separate grapevine vegetation in high-resolution aerial imagery acquired by UAVs, different approaches have been proposed in the literature: digital image processing techniques [59,60], supervised and unsupervised machine learning classification [61], filtering of point clouds obtained from SfM methods [48], and the use of digital elevation models (DEMs) [20,58].

Pádua et al. [62] proposed a method for segmenting vineyards. The method uses UAV-based RGB imagery, which most UAS can acquire, and assumes that vineyards are organized in rows and that grapevines are taller than inter-row vegetation. Grapevine canopies are often constrained to a certain area by a wire-based training system along the rows, which confines them to a given width and height. By combining the different outputs from photogrammetric processing of very high-resolution UAV-based imagery with vegetation indices, the method filters vegetation within a certain height range in a given vineyard plot. It can therefore extract grapevine vegetation and estimate the number of vine rows, the inter-row vegetation, and potentially missing grapevines. Vegetation indices proved to be an accurate and quick means of extracting vineyard vegetation compared with more complex machine learning methods: supervised methods require datasets for training and validation, and unsupervised methods provide lower accuracy [61]. Table 1 explains the notation used in this section.

Table 1.

Notation table.

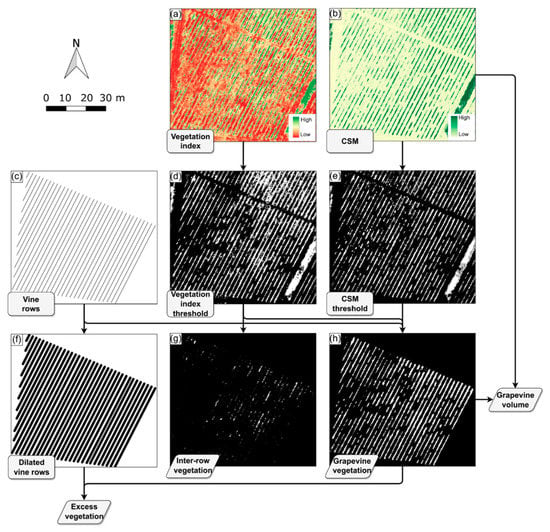

This work proposes a modified and enhanced version of the method introduced by Pádua et al. [62]. The original method was applied to the AOI’s two plots, resulting in a mask (S) with the central lines of grapevine rows. Figure 4 illustrates the method’s main steps and the different outputs obtained from its application.

Figure 4.

General workflow of the proposed method and main outputs, illustrated with data acquired on 11 July 2017 (F5) from plot 1 (P1).

The orthophoto mosaics obtained from photogrammetric processing of each flight campaign’s data were used to compute the green percentage index (G%) [63] (Figure 4a), as presented in Equation (1), where the green band (G) is normalized by the sum of the red (R), green, and blue (B) bands, allowing the extraction of green vegetation cover.

$$G\% = \frac{G}{R + G + B} \qquad (1)$$

Next, an automatic threshold based on Otsu’s method [64] was applied to G% (Figure 4d), generating a binary image. The crop surface model (CSM) was then obtained as the difference between the DSM and the DTM, as shown in Equation (2) (Figure 4b). CSM values represent the height of objects above the terrain, from which grapevine vegetation height can be obtained after further processing.

$$\mathrm{CSM} = \mathrm{DSM} - \mathrm{DTM} \qquad (2)$$
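The G% computation and Otsu thresholding described above can be sketched as follows. This is a minimal illustration, not the authors’ implementation: nested Python lists stand in for raster bands, the 256-bin histogram is an assumption, and all function names are hypothetical. In a production pipeline, NumPy arrays with `skimage.filters.threshold_otsu` would be the more usual choice.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) digital numbers


def green_percentage(rgb: Sequence[Sequence[Pixel]]) -> List[List[float]]:
    """G% = G / (R + G + B) for every pixel; 0.0 where the band sum is 0."""
    return [
        [g / (r + g + b) if (r + g + b) > 0 else 0.0 for r, g, b in row]
        for row in rgb
    ]


def otsu_threshold(values: Sequence[float], bins: int = 256) -> float:
    """Otsu's threshold for values in [0, 1]: the histogram split that
    maximizes the between-class variance."""
    hist = [0] * bins
    for v in values:
        hist[min(int(v * bins), bins - 1)] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    weight_bg, sum_bg = 0, 0.0
    best_between, best_idx = -1.0, 0
    for i, h in enumerate(hist):
        weight_bg += h
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += i * h
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_between:
            best_between, best_idx = between, i
    # Lower edge of the first bin above the split: values >= this are "high G%".
    return (best_idx + 1) / bins


def green_mask(rgb: Sequence[Sequence[Pixel]]) -> List[List[int]]:
    """Binary vegetation mask: 1 where G% is at or above Otsu's threshold."""
    gp = green_percentage(rgb)
    t = otsu_threshold([v for row in gp for v in row])
    return [[1 if v >= t else 0 for v in row] for row in gp]
```

Because Otsu’s method needs no manually tuned value, the same code can be run unchanged on every flight campaign, which suits a multi-temporal workflow.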

The CSM was then filtered by height (h), keeping only values between a minimum and a maximum height threshold. The outcome was a new binary image (Figure 4e), in which each pixel (i, j) takes the value 1 if the matching CSM pixel lies within the defined height range and 0 otherwise. A new binary image D, containing all the vegetation within the defined height range, was obtained by combining the binary images resulting from the G% thresholding and the CSM filtering. Then, a set of morphological operations (e.g., opening, closing, or removal of small objects) was applied to D to delete potential outliers that did not represent grapevines. This also reduced the computational burden of the proposed method.
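The CSM derivation of Equation (2) and the height filtering that yields D can be sketched in the same style. The rasters are assumed to be co-registered on the same grid, the height bounds are treated as inclusive (an assumption), and the example bounds of 0.2 m and 2 m are the values reported in Section 3.1. The morphological clean-up of D is omitted; `scipy.ndimage.binary_opening`, `scipy.ndimage.binary_closing`, and `skimage.morphology.remove_small_objects` are common choices for it. Function names are hypothetical.

```python
from typing import List, Sequence

Grid = Sequence[Sequence[float]]
Mask = List[List[int]]


def crop_surface_model(dsm: Grid, dtm: Grid) -> List[List[float]]:
    """Equation (2): CSM = DSM - DTM, cell by cell on a shared grid."""
    return [[s - t for s, t in zip(s_row, t_row)] for s_row, t_row in zip(dsm, dtm)]


def height_mask(csm: Grid, h_low: float, h_high: float) -> Mask:
    """1 where h_low <= CSM height <= h_high (inclusive bounds assumed), else 0."""
    return [[1 if h_low <= h <= h_high else 0 for h in row] for row in csm]


def logical_and(a: Sequence[Sequence[int]], b: Sequence[Sequence[int]]) -> Mask:
    """Pixel-wise AND of two binary masks, e.g. G% mask AND height mask -> D."""
    return [[x & y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


if __name__ == "__main__":
    dsm = [[100.5, 101.9], [100.1, 103.5]]
    dtm = [[100.0, 100.0], [100.0, 100.0]]
    csm = crop_surface_model(dsm, dtm)
    g_mask = [[1, 0], [1, 1]]  # hypothetical G% threshold result
    d = logical_and(g_mask, height_mask(csm, 0.2, 2.0))
    print(d)
```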

Each cluster of D was then analysed individually and discarded if it did not intersect S (Figure 4c) at least once. The remaining clusters constitute a new binary image F that contains only grapevine vegetation within the defined height range (Figure 4h). Inter-row vegetation was then estimated as the intersection between the complement of F and the binary image resulting from the G% thresholding (Figure 4g). The resulting binary image, L, is composed of vegetation that does not belong to grapevines.
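The cluster test against S and the derivation of L can be sketched with a breadth-first connected-component labelling. The 8-connectivity used to define a cluster is an assumption, since the text does not specify it, and the function names are hypothetical; `scipy.ndimage.label` is the usual array-based alternative for the labelling step.

```python
from collections import deque
from typing import List, Sequence, Tuple

Mask = List[List[int]]


def label_clusters(mask: Sequence[Sequence[int]]) -> List[List[Tuple[int, int]]]:
    """Return the 8-connected clusters of 1-pixels as lists of (row, col)."""
    rows, cols = len(mask), len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            queue, cluster = deque([(r0, c0)]), []
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                cluster.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            clusters.append(cluster)
    return clusters


def keep_clusters_touching(d: Sequence[Sequence[int]], s: Sequence[Sequence[int]]) -> Mask:
    """F: the clusters of D that intersect the row-centre-line mask S at least once."""
    f = [[0] * len(row) for row in d]
    for cluster in label_clusters(d):
        if any(s[r][c] for r, c in cluster):
            for r, c in cluster:
                f[r][c] = 1
    return f


def inter_row_vegetation(f: Sequence[Sequence[int]], g_mask: Sequence[Sequence[int]]) -> Mask:
    """L = (NOT F) AND (G% vegetation mask)."""
    return [[(1 - x) & y for x, y in zip(rf, rg)] for rf, rg in zip(f, g_mask)]
```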

From these images, the vineyard plot’s parameters can be estimated. Equation (3) gives the grapevine vegetation area A as the sum of all pixels (i, j) of F multiplied by the squared GSD, where m and n are the image’s number of rows and columns, respectively. The inter-row vegetation area can be determined in the same way using L instead of F.

$$A = \mathrm{GSD}^2 \sum_{i=1}^{m} \sum_{j=1}^{n} F(i, j) \qquad (3)$$

Grapevine volume V, expressed in m³, is estimated by adding the individual volumes of the pixel clusters of F, each obtained by multiplying the cluster’s area by its mean height taken from the CSM, as presented in Equation (4), where C is the number of clusters in F, and $A_c$ and $\bar{h}_c$ are the area and mean CSM height of cluster c.

$$V = \sum_{c=1}^{C} A_c \, \bar{h}_c \qquad (4)$$
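Equations (3) and (4) reduce to pixel counting and per-cluster averaging. The sketch below assumes that clusters are given as lists of (row, column) pixel coordinates, for example from a connected-component labelling of F; function names are hypothetical.

```python
from typing import Sequence, Tuple

Cluster = Sequence[Tuple[int, int]]  # (row, col) pixel coordinates


def area_m2(mask: Sequence[Sequence[int]], gsd_m: float) -> float:
    """Equation (3): number of 1-pixels in the mask times the pixel area GSD^2."""
    return sum(sum(row) for row in mask) * gsd_m ** 2


def volume_m3(clusters: Sequence[Cluster], csm: Sequence[Sequence[float]], gsd_m: float) -> float:
    """Equation (4): sum over clusters of cluster area x mean CSM height.

    Clusters are assumed non-empty, as produced by connected-component labelling.
    """
    total = 0.0
    for cluster in clusters:
        cluster_area = len(cluster) * gsd_m ** 2
        mean_height = sum(csm[r][c] for r, c in cluster) / len(cluster)
        total += cluster_area * mean_height
    return total
```

Because each cluster’s area is its pixel count times GSD², the sum in Equation (4) equals GSD² times the sum of CSM heights over all pixels of F; the per-cluster formulation is mainly useful when cluster-level statistics are also wanted.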

2.3.3. Multi-Temporal Analysis Procedure

Although significant, parameters computed from individual flight campaigns offer only a snapshot of a crop’s developmental stage and its environmental conditions. A multi-temporal approach allows changes to be analysed over time and builds data series that may prove valuable for extracting patterns about crops and environmental conditions, which can further improve PV management tools.

As this study aimed to characterize the evolution of vineyard vegetation throughout the most significant part of the grapevine vegetative growing cycle, and given the importance of managing biomass to optimize both fruit quality and yield, a multi-temporal analysis was conducted. Grapevine vegetation detected in consecutive flight campaigns (k and k + 1) was used to estimate grapevine vegetation development pixel by pixel, producing one of three possible outcomes per pixel: (1) grapevine vegetation in both flights (maintenance); (2) no grapevine vegetation in k but grapevine vegetation in k + 1 (growth); or (3) grapevine vegetation in k but not in k + 1 (decline).

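A new image X with grapevine vegetative growth values was created by applying Equation (5) to the F images from flight campaigns k and k + 1. Values 1, 0, and −1 represent grapevine vegetation growth, maintenance, and decline, respectively. No value (NaN) was attributed to areas where no grapevine vegetation was detected in either of the two consecutive flight campaigns.

$$X(i, j) = \begin{cases} 1, & F_k(i, j) = 0 \text{ and } F_{k+1}(i, j) = 1 \\ 0, & F_k(i, j) = 1 \text{ and } F_{k+1}(i, j) = 1 \\ -1, & F_k(i, j) = 1 \text{ and } F_{k+1}(i, j) = 0 \\ \text{NaN}, & F_k(i, j) = 0 \text{ and } F_{k+1}(i, j) = 0 \end{cases} \qquad (5)$$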
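The per-pixel change rule and the per-class areas reported for the multi-temporal analysis can be sketched as below. The sketch assumes that the F masks from flights k and k + 1 are co-registered binary grids of equal size (which the common tie points of Section 2.3.1 are meant to ensure); function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def change_map(f_k: Sequence[Sequence[int]], f_k1: Sequence[Sequence[int]]) -> List[List[float]]:
    """Pixel-wise change between consecutive F masks (flights k and k + 1).

    1 = growth (0 -> 1), 0 = maintained (1 -> 1), -1 = decline (1 -> 0),
    NaN = no grapevine vegetation in either flight (0 -> 0).
    """
    return [
        [float(b - a) if (a or b) else math.nan for a, b in zip(row_k, row_k1)]
        for row_k, row_k1 in zip(f_k, f_k1)
    ]


def class_areas(x: Sequence[Sequence[float]], gsd_m: float) -> Dict[str, float]:
    """Area (m^2) of each change class, ignoring NaN pixels."""
    px = gsd_m ** 2
    flat = [v for row in x for v in row if not math.isnan(v)]
    return {
        "growth": flat.count(1.0) * px,
        "maintained": flat.count(0.0) * px,
        "decline": flat.count(-1.0) * px,
    }
```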

2.3.4. Canopy Management

Given the diversity and number of field operations performed throughout the year to maintain and extend grapevine life and increase productivity, the ability to identify vineyard areas in need of canopy management can significantly contribute to sustainable PV practices. This process can help evaluate, prioritize, and schedule field operations based on their potential benefit in the identified vineyard areas, while considering cost and environmental impact.

Grapevine vegetation outside a defined area is considered excess. To identify it, S is dilated according to a given width (w) (Figure 4f), which represents the maximum width of grapevine vegetation in a row given the row spacing. The resulting binary image is then combined with F: pixels of F that lie outside the dilated S mask are estimated as excess vegetation.
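The excess-vegetation test can be sketched as a binary dilation of S followed by a mask difference. Two choices here are assumptions not stated in the text: the dilation uses a square structuring element, and w is split evenly on both sides of the row centre line (radius w / 2, converted to pixels with the GSD). Function names are hypothetical, and `scipy.ndimage.binary_dilation` would typically replace the hand-written loop.

```python
from typing import List, Sequence

Mask = List[List[int]]


def dilate(mask: Sequence[Sequence[int]], radius_px: int) -> Mask:
    """Binary dilation with a (2r + 1) x (2r + 1) square structuring element."""
    rows, cols = len(mask), len(mask[0]) if mask else 0
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                for rr in range(max(0, r - radius_px), min(rows, r + radius_px + 1)):
                    for cc in range(max(0, c - radius_px), min(cols, c + radius_px + 1)):
                        out[rr][cc] = 1
    return out


def excess_vegetation(f: Sequence[Sequence[int]], s: Sequence[Sequence[int]],
                      width_m: float, gsd_m: float) -> Mask:
    """Pixels of F lying outside S dilated to a total row width of width_m."""
    radius_px = int(round(width_m / 2 / gsd_m))  # assumed: half of w on each side
    allowed = dilate(s, radius_px)
    return [[x & (1 - a) for x, a in zip(rf, ra)] for rf, ra in zip(f, allowed)]
```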

2.3.5. Validation Procedure

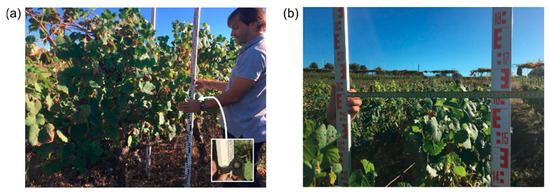

To monitor the selected vineyards over time, nine aerial campaigns were carried out, covering the most significant part of the grapevine growing cycle. The first flight, on 2 May 2017, corresponded to the beginning of the grapevine vegetative cycle, and the last, on 15 September 2017, corresponded to the grapes’ final maturation stage (i.e., harvesting season). Field data acquisition consisted of measuring vine row height and width at marked positions to estimate vine row area and volume, so that the parameters estimated by the proposed method could be compared with those calculated from ground-truth data. Vine row height was measured with a surveyor’s levelling rod (Figure 5a), and width with a measuring tape and two surveyor’s levelling rods, as shown in Figure 5b. These validation points were selected within two 10 × 10 m areas (blue polygons in Figure 1), delimited by features that are present in all vineyards and easily recognised both in aerial images and in the field: posts equally spaced along the rows (every 5 m in our AOI). This made it possible to identify the same area across flight epochs and to compare ground measurements with those provided by the proposed method. In total, 50 measurement points were selected, 25 in each validation area, to adequately represent the vine rows. Where a vine row presented a regular shape, five points were selected per row, with an average separation of 2 m. These areas were chosen because they contained different vigour levels and missing grapevine plants. Moreover, 37 additional points outside the 10 × 10 m areas (see Figure 1 for their location) were used as verification points (24 in P1 and 13 in P2). These points were selected to ensure sample representativeness in different contexts (dense and sparse grapevine vegetation, different height values, etc.).
For these points, only vine row heights were measured and compared with the heights estimated from the CSM.

Figure 5.

In-field measurements at specific points: (a) row height measurements; and (b) row width measurements.

Grapevine height and area in the two 10 × 10 m validation areas were estimated using three approaches: (1) ground-truth data; (2) manual segmentation of the computed orthophoto mosaics to obtain the grapevine vegetation area, which was then multiplied by the vine rows’ average height computed from the CSM; and (3) the proposed method, applied to the UAV-based data to extract both row area and height in a fully automatic process.

The accuracy of the method was assessed using the vine row heights and widths measured in the field as reference, which were compared with the values obtained using the proposed method. The overall agreement between the in-field measurements o and the estimated values e was assessed through the root mean square error (RMSE), as shown in Equation (6), where N is the number of measurement points.

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{p=1}^{N} \left(e_p - o_p\right)^2} \qquad (6)$$
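Equation (6), together with a correlation coefficient between in-field and estimated heights, can be computed as below. The abstract reports a correlation coefficient without naming it; treating it as Pearson’s r is an assumption. The function names are hypothetical, and the test values are toy numbers, not study data.

```python
import math
from typing import Sequence


def rmse(observed: Sequence[float], estimated: Sequence[float]) -> float:
    """Equation (6): root mean square error between in-field o and estimated e."""
    if len(observed) != len(estimated) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((e - o) ** 2 for o, e in zip(observed, estimated)) / len(observed))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)
```

Because RMSE is expressed in the unit of the measurements, the 0.13 m reported in the abstract can be read directly against typical vine row heights, whereas r only measures how well the estimates track the in-field ranking and spread.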

3. Results

As stated in Section 2.3, this modified and enhanced version of the method of Pádua et al. [62] enabled the estimation of grapevine area and volume, as well as of vineyard areas that can potentially benefit from canopy management operations. Through multi-temporal data analysis, the method also enables the monitoring of grapevine vegetation evolution.

3.1. Study Area Characterization

Both vineyard plots analysed, one composed of red wine varieties (P1) and the other of white wine varieties (P2), were characterized by orthophoto mosaics, DSMs, and DTMs with 3 cm GSD, resulting from photogrammetric processing of the UAV-based RGB imagery acquired in each flight campaign. Table 2 presents the mean error and RMSE in each direction (X: easting, Y: northing, Z: height) obtained during photogrammetric processing, using as reference five ground control points whose coordinates were extracted from F1. Deviations were higher in Z than in X and Y.

Table 2.

Mean error and root mean square error (RMSE) in each direction (X, Y, Z) on the five tie points for each flight, and global values considering the deviations from all tie points. F1 coordinates were used as reference.

Figure 6 presents the generated orthophoto mosaics along with the percentages of grapevine vegetation and inter-row vegetation. Grapevine vegetation was denser on the right side of both vineyard plots, particularly in the lower-right part of P2 and the upper-right part of P1. Conversely, missing grapevines were more frequent on the left side of both plots. The effects of the canopy management operations were also perceptible between flight campaigns in the multi-temporal analysis, as observed in the flights of 16 June (F3) and 11 July (F5).

Figure 6.

Generated orthophoto mosaics for each flight campaign carried out in both vineyard plots (P1 and P2), along with grapevine vegetation (VV) and inter-row vegetation (IR) percentages. The result of canopy management operations, such as shoot thinning and leaf removal, is noticeable by comparing the orthophoto mosaics. Coordinates in WGS84 (EPSG:4326).

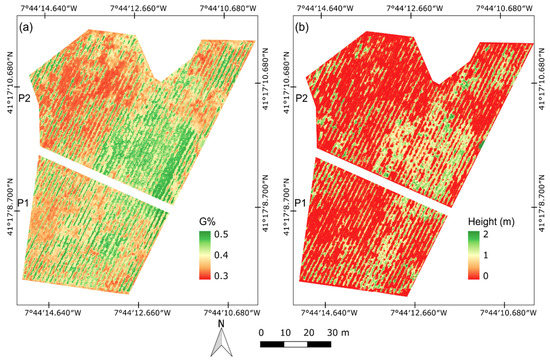

Regarding the other orthorectified outputs, the DSM and DTM were used to obtain the CSM, and G% was computed from the orthophoto mosaics. Figure 7 presents a color-coded representation of these results for the 27 July 2017 flight campaign. The minimum and maximum heights of the CSM filtering range were set to 0.2 m and 2 m, respectively, according to the known characteristics of the studied vineyards.

Figure 7.

Examples of inputs used in this study, computed from the photogrammetric processing of imagery acquired on the 27 July 2017 flight campaign: (a) green percentage index; and (b) crop surface model. Coordinates in WGS84 (EPSG:4326).

3.2. Vineyard Vegetation Change Monitoring

By applying the proposed method to the orthorectified products of each flight campaign, it was possible to (1) identify vine rows, (2) determine each vine row’s central line, (3) estimate grapevine vegetation, and (4) distinguish grapevines from other types of vegetation (e.g., inter-row vegetation). Two relevant canopy management operations took place during this study (marked in both figures and tables): shoot thinning in the first half of June 2017, between the second and third flight campaigns, and leaf removal in the first week of July 2017, between the fourth and fifth flight campaigns.

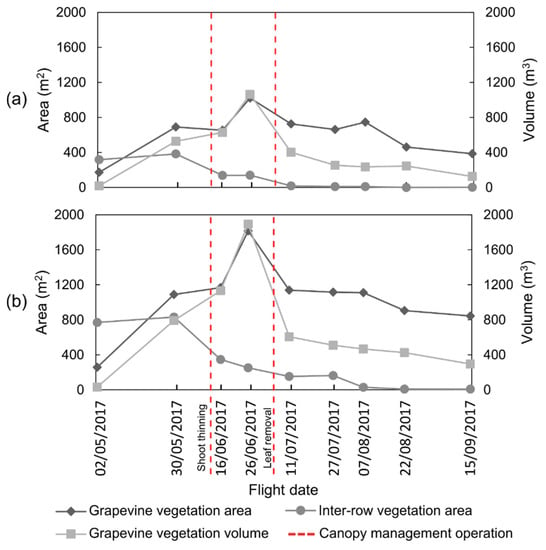

Figure 8 shows the estimated grapevine vegetation area and volume per flight campaign, as well as the inter-row vegetation area. As expected, P1 and P2 had their smallest estimated grapevine vegetation area in F1 (2 May 2017), with 172 m² and 257 m², respectively, representing about 5% of the total plot area. Intensive vegetative growth was expected between May and June, together with some relevant canopy management operations. These results coincide with the expected vegetative evolution of grapevines in the DDR. Moreover, they allow not only the identification of the two relevant canopy management operations but also the estimation of their impact on grapevine vegetation area.

Figure 8.

Estimated outcomes from applying the proposed method to data acquired in all aerial campaigns, from (a) P1 and (b) P2: grapevines’ vegetation area, inter-row vegetation area, and grapevine vegetation volume.

Grapevine vegetation volume behaved similarly to grapevine vegetation area: it increased from the first to the fourth flight campaign and decreased thereafter (Figure 8). Whereas the first canopy management operation (shoot thinning), which occurred a few days before the third flight campaign, did not reduce the growth in volume, the second (leaf removal), captured by the fifth flight campaign, clearly did.

In general, no significant differences between red and white grapevine varieties were detected in either area or volume; both parameters showed similar behaviour across flight campaigns.

In this study, we also estimated the area of non-grapevine vegetation (e.g., inter-row vegetation) in the same plots, P1 and P2 (Figure 8). Following the winter and spring months, inter-row vegetation covered about 300 m2 in P1 (6% of the plot area) and approximately 800 m2 in P2 (14% of the plot area) in the first flight campaign, and the second flight campaign data revealed a slight increase in both plots. Data acquired in the following flight campaigns showed a decrease in inter-row vegetation area.

3.3. Multi-Temporal Analysis

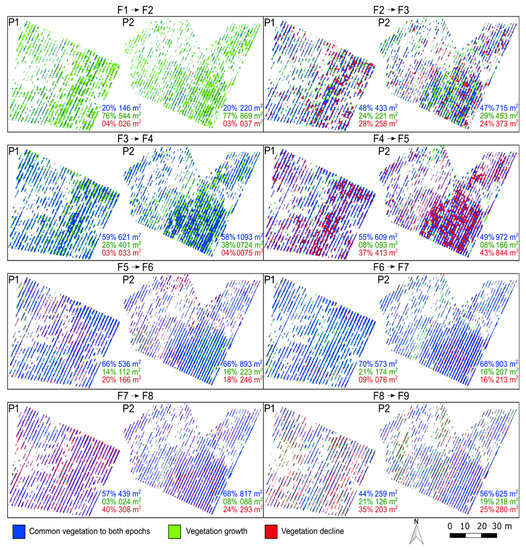

By applying the proposed method to data from consecutive flight campaigns, a multi-temporal analysis of the study area was performed, as described in Section 2.3.4. This enabled the observation of canopy management operations that occurred during the grapevine growing season. Figure 9 presents a visual representation of the multi-temporal analysis of grapevine vegetation area variation between flight campaigns.

Figure 9.

Multi-temporal analysis of grapevine vegetation: blue stands for vegetation present in both consecutive flight campaigns; green means vegetation growth; and red represents vegetation decline. Percentage and area (m2) values are also presented for each class.
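The three classes in Figure 9 can be expressed as set operations on the binary grapevine masks of two consecutive campaigns. The sketch below assumes both masks are co-registered boolean arrays at the same ground sampling distance; the function name is hypothetical. Expressing each class as a percentage of the union of the two masks is our assumption about how the percentages are normalized, although it appears consistent with the F1 to F2 decline values reported in the next paragraph (about 26 m2 out of roughly 712 m2 for P1).

```python
import numpy as np


def change_classes(mask_before, mask_after, gsd):
    """Sketch: area (m2) and share (%) of each change class between two campaigns."""
    before = np.asarray(mask_before, dtype=bool)
    after = np.asarray(mask_after, dtype=bool)

    classes = {
        "both": before & after,      # blue: vegetation present in both campaigns
        "growth": ~before & after,   # green: vegetation that appeared
        "decline": before & ~after,  # red: vegetation that disappeared
    }
    union = int((before | after).sum())  # assumed denominator for the percentages
    pixel_area = gsd ** 2

    result = {}
    for name, mask in classes.items():
        n = int(mask.sum())
        share = 100.0 * n / union if union else 0.0
        result[name] = (n * pixel_area, share)
    return result
```

Because the three classes are disjoint and together cover the union, their shares always sum to 100% under this normalization.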

The main vegetative development occurred between the first and second flight campaigns, with an estimated grapevine area increase of about 300% for P1 (~540 m2) and 320% for P2 (~870 m2). This interval also registered the lowest decline in this study (nearly 26 m2 for P1 and 38 m2 for P2, corresponding to 4% and 3% of the grapevine vegetation area in these flight campaigns, respectively). This result further supports those presented for grapevine vegetation area and volume (Section 3.2).

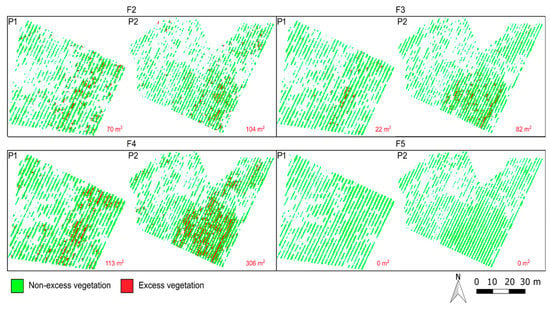

3.4. Estimation of Vineyard Areas for Potential Canopy Management Operations

By obtaining continuous information about grapevine vegetation evolution, it is possible to estimate which areas (if any) within a given vineyard plot could potentially benefit from canopy management operations at any given time. This can serve as a decision-support system for scheduling canopy management operations, enabling the optimized use of physical resources, the management of biomass, and further improvement of vineyards’ overall performance.

Both P1 and P2 were analysed to estimate areas that potentially needed canopy management operations. Grapevine vegetation is considered excessive when it lies outside a defined area. To identify it, the binary image S was dilated according to a given width w, representing the maximum width of grapevine vegetation in a row. Several tests were performed to determine the best value for w; based on the canopy management operations performed in the field, w = 0.6 m was considered optimal for estimating potential excess vegetation. This procedure was applied to the data from all flight campaigns. Figure 10 presents the outcomes for data from the second, third, fourth, and fifth flight campaigns. These were the flights that revealed excess vegetation, except for F5, which is included in Figure 10 as an example where no excess vegetation was detected. F5 occurred after the second canopy management operation; from then until the harvesting season, vegetation remained within the w = 0.6 m range.

Figure 10.

Estimated grapevine vegetation in P1 and P2. Green identifies grapevine vegetation; red marks areas of excess grapevine vegetation that could potentially benefit from canopy management operations, along with their area in m2.
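The excess-vegetation test described in this subsection amounts to a morphological dilation followed by a mask difference. The sketch below is illustrative only. It treats S as a boolean raster of the vine-row central lines (an assumption about its content), uses a disk-shaped structuring element whose radius is half of w in pixels (our choice of shape), and implements the dilation in plain NumPy to stay self-contained. The names `dilate` and `excess_vegetation` are hypothetical.

```python
import numpy as np


def dilate(mask, structure):
    """Binary dilation of `mask` by a symmetric boolean `structure` (plain NumPy)."""
    ry, rx = structure.shape[0] // 2, structure.shape[1] // 2
    padded = np.pad(mask, ((ry, ry), (rx, rx)), constant_values=False)
    rows, cols = mask.shape
    out = np.zeros((rows, cols), dtype=bool)
    # OR together one shifted copy of the mask per "on" element of the structure.
    for dy, dx in zip(*np.nonzero(structure)):
        out |= padded[dy:dy + rows, dx:dx + cols]
    return out


def excess_vegetation(row_lines, vine_mask, width_m, gsd):
    """Sketch: grapevine pixels lying outside a band of width ~width_m around S."""
    r = int(round(width_m / 2 / gsd))        # half-width of the band in pixels
    yy, xx = np.ogrid[-r:r + 1, -r:r + 1]
    disk = (xx * xx + yy * yy) <= r * r      # disk-shaped structuring element
    allowed = dilate(np.asarray(row_lines, dtype=bool), disk)
    excess = np.asarray(vine_mask, dtype=bool) & ~allowed
    return excess, float(excess.sum() * gsd ** 2)
```

With w = 0.6 m and a hypothetical 0.1 m ground sampling distance, the band spans 2r + 1 = 7 pixels, so pixel discretization makes it slightly wider than w. Finer imagery reduces this effect.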

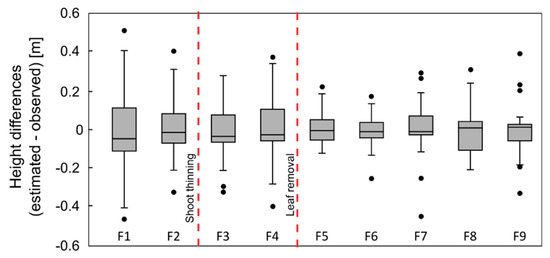

3.5. Accuracy Assessment

The results presented in the previous subsections were obtained by automatically applying the proposed method. To assess the method’s accuracy and effectiveness, a validation procedure was used, as described in Section 2.3.5. Figure 11 presents boxplots, per flight campaign, of the differences between the heights measured in the field and those generated by the proposed method at the 50 points in the validation areas. The influence of field management operations and of plant vegetative vigour on the method’s height estimation accuracy is clearly visible. The dispersion of values increased with plant vigour and decreased after each field management operation, remaining stable after the last field operation because vegetative expansion was no longer as pronounced.

Figure 11.

Boxplots of the height differences per flight campaign.

Table 3 presents the comparison between heights estimated by the proposed method and those measured in the field, per flight campaign. The RMSE indicates the typical difference between estimated and measured heights in each campaign. As Table 3 and Figure 11 show, and as discussed in the next section, the RMSE varied considerably and was directly related to canopy management operations and the grapevine vegetative cycle.

Table 3.

Accuracy assessment per flight campaign (F#) using the 50 points in the two validation areas and the 37 sparse points used for control. RMSE: root-mean-square error, R2: coefficient of determination. Red dashed lines represent canopy management operations.
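The per-campaign statistics in Figure 11 and Table 3 follow from simple operations on paired field and estimated heights. The sketch below, with hypothetical function names, computes the height differences shown as boxplots, their quartiles, the RMSE, and R2. It takes R2 as the squared Pearson correlation between measured and estimated heights. This is one common convention and an assumption here, since this section does not restate the definition used.

```python
import numpy as np


def height_accuracy(measured, estimated):
    """Sketch: differences, quartiles, RMSE (m) and R2 for one flight campaign."""
    m = np.asarray(measured, dtype=float)
    e = np.asarray(estimated, dtype=float)
    diff = e - m                                        # estimated minus field height (m)
    q1, median, q3 = np.percentile(diff, [25, 50, 75])  # boxplot quartiles
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    r = np.corrcoef(m, e)[0, 1]                         # Pearson correlation coefficient
    return {
        "diff": diff,
        "quartiles": (float(q1), float(median), float(q3)),
        "rmse": rmse,
        "r2": float(r ** 2),                            # assumed R2 definition
    }


def per_campaign(points):
    """points: {campaign_id: (measured, estimated)} -> {campaign_id: stats}."""
    return {fid: height_accuracy(m, e) for fid, (m, e) in points.items()}
```

A constant bias illustrates why both metrics are reported. If every estimate is 0.1 m too high, R2 is still 1 while the RMSE is 0.1 m. Conversely, RMSE alone does not reveal whether errors are systematic or random.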

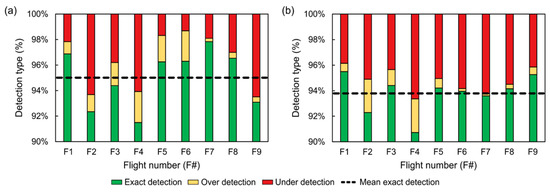

Regarding grapevine area estimation, three different approaches were used, as explained in Section 2.3.3. The method was validated against a manual segmentation of two areas, each located in a different vineyard plot, in which three conditions were distinguished: (1) exact detection, where the pixel value is the same in the method’s result and in the reference mask; (2) over detection, where grapevine vegetation estimated by the method is not classified as grapevine vegetation in the reference mask; and (3) under detection, corresponding to grapevine vegetation in the reference mask that was not detected by the method. The results of this evaluation are presented in Figure 12. Overall, the proposed method achieved a mean accuracy of 94.40% in the exact detection of grapevine vegetation, similar to Pádua et al. [62]. The mean exact detection percentage was higher in the P1 area (95.01%) than in the P2 area (93.79%).

Figure 12.

Results of the row occupation area validation for data from each flight, in a 10 × 10 m area in each of the studied vineyard plots: (a) P1 and (b) P2.
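The three validation conditions can be computed pixel by pixel from the method’s binary output and the manually segmented reference mask of each 10 × 10 m area. In this sketch (hypothetical function name), exact detection counts pixels whose values agree in both masks, including background. All three rates are expressed as percentages of the validation area. This normalization is our reading of the text rather than a confirmed detail of the authors’ procedure.

```python
import numpy as np


def detection_rates(estimated, reference):
    """Sketch: exact, over and under detection (%) over a validation area."""
    est = np.asarray(estimated, dtype=bool)
    ref = np.asarray(reference, dtype=bool)
    n = est.size
    counts = {
        "exact": np.count_nonzero(est == ref),  # same pixel value in both masks
        "over": np.count_nonzero(est & ~ref),   # detected, but not in the reference
        "under": np.count_nonzero(~est & ref),  # in the reference, but missed
    }
    return {k: 100.0 * v / n for k, v in counts.items()}
```

Under this reading, the three rates sum to 100% for each area. The overall figure reported above is the mean of the two plots: (95.01% + 93.79%) / 2 = 94.40%.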

4. Discussion

Based on the results presented in Section 3, a clear relationship was established between the grapevine vegetative cycle, field canopy management operations, and the parameters obtained with the proposed method. This section discusses vineyard vegetation evolution, the determination of vine row height, and the impact the proposed method can have on scheduling canopy management operations.

4.1. Vegetation Evolution

Vegetation evolution in the AOI over time can be observed in Figure 6 and Figure 8. As expected, the grapevine vegetative cycle was verified. P1 and P2 had the smallest estimated grapevine vegetation area in the first flight campaign (F1, 2 May 2017), at 172 m2 and 257 m2, respectively, representing about 5% of the total vineyard area. Intensive vegetative growth followed between May and June. From the fifth flight campaign onward, grapevine vegetation area remained relatively stable, with only minor variations. Some vegetation growth still occurred within the AOI, but grapevine vegetation steadily declined until the harvesting season, particularly in the last two flight campaigns.

The impact of the first canopy management operation (shoot thinning, which took place in mid-June) is clearly noticeable when comparing the second and third flight campaigns. Although the net variation in vineyard vegetation area was small for both P1 and P2 (approximately −5% and 7%, respectively), the declined area was about 258 m2 for P1 and 373 m2 for P2, among the highest values registered in this study. As noted when presenting grapevine vegetation volume, the type of canopy management operation is directly reflected in the grapevine canopy. This is further confirmed by the vineyard vegetation evolution from the fourth to the fifth flight campaign, between which another canopy management operation, leaf removal, took place (Figure 9, F4→F5). Grapevine vegetation area variation was larger than after the first canopy management operation, with decline values of about −29% (413 m2) for P1 and −37% (843 m2) for P2, bearing in mind that the most intensive grapevine vegetative growth period had ended in late June.

When comparing consecutive flights (Figure 9), the slight differences in the temporal evolution results may be explained by the proposed method’s implementation. In addition, grapevine leaves can (and do) change colour, either when entering later phenological stages or as a manifestation of potential phytosanitary problems. For example, in P1 some misdetections occurred, mostly in the last two flight campaigns, because grapevine leaves were turning red.

Regarding grapevine canopy area, when analysing each flight campaign individually (Figure 12), data from the flight prior to leaf removal (F4) showed the lowest accuracy in both analysed areas (91.50% and 90.73%, respectively), which can be explained by some grapevine branches not being correctly detected in the CSM. F4 was therefore the flight with the greatest overall under detection rate. The highest accuracy was achieved in F7 for P2, with 97.83%, and in F1 for P2, with a detection accuracy of 95.50%. Regarding misclassifications, under detection occurred at the borders of grapevine plants and in some thinner parts, whereas over detection was observed in shadowed areas and where more abundant vegetation resulted in connected rows. Some inter-row vegetation was also misclassified as grapevine vegetation. However, these misclassifications did not significantly affect the proposed method’s overall performance in evaluating grapevine canopy area.

With respect to grapevine vegetation volume, the behaviour was similar to that of grapevine vegetation area: it increased from the first to the fourth flight campaign and decreased thereafter, as presented in Figure 8. The first canopy management operation (shoot thinning), which took place a few days before the third flight campaign, did not reduce volume growth. The second canopy management operation (leaf removal), whose effect is visible in the fifth flight campaign, clearly did: P1 grapevine vegetation volume decreased by about 62% from about 1000 m3, and P2 grapevine vegetation volume decreased by about 68% from about 1900 m3.

When assessing grapevine area and volume from aerial imagery, management operations such as shoot thinning may have no significant visible impact on the grapevine canopy. Shoot thinning focuses grapevine development on the best possible yield by removing secondary shoots and uncrowding areas to open up the canopy, which improves air flow and helps prevent diseases. However, much of this operation is performed in the understory of the grapevine canopy, and uncrowding does not completely remove the surrounding vegetation; it only reduces it. Leaf removal, in contrast, effectively and unequivocally removes a significant amount of grapevine vegetation, with a visible impact at canopy level. In the sixth, seventh, and eighth flight campaigns, grapevine vegetation volume was about 240 m3 in P1, decreasing to 125 m3 (−53%) in the ninth (and final) flight campaign. P2 grapevine vegetation volume progressively decreased by about 15% per flight campaign, reaching approximately 295 m3 in the ninth flight campaign.

No significant differences between red and white grapevine varieties were detected with regard to either area or volume. Both parameters behaved similarly in each flight campaign.

Inter-row vegetation (Figure 8) was practically non-existent after the fourth flight campaign in P1 and after the seventh in P2. Cross-referencing the estimated non-grapevine vegetation area with environmental data (Figure 2) shows that this evolution was as expected. Whereas some precipitation during May 2017 can account for the slight increase in area between the first and second flight campaigns, the lack of precipitation, the absence of irrigation, high air temperatures, and some inter-row management operations explain the reduced or even non-existent inter-row vegetation until the harvesting season.

4.2. Grapevine Row Height

The determination of grapevine row height is critical, since it is used to compute vegetation volume, one of this study’s goals. As such, a thorough validation was carried out following the procedure presented in Section 2.3.3. A total of 87 measurements were recorded per flight campaign across P1 and P2, separated into two groups: 50 points were used to validate the proposed method and the remaining 37 were used as control points. Field measurements were recorded on the same dates as the flight campaigns, with heights ranging from 1.01 m to 1.95 m. From the results presented in Figure 11 and Table 3, an overall RMSE of 0.13 m was attained. These results are in line with other studies that used this type of validation: De Castro et al. [51] observed an R2 of 0.78 and an RMSE of 0.19 m for measurements ranging from 1 m to 2.5 m in three different vineyards at two different epochs, and Caruso et al. [50] obtained an R2 of 0.75 and an RMSE of 0.15 m for heights ranging from 1.4 m to approximately 2 m. Again, the grapevine vegetative cycle, together with the canopy management operations, influenced the quality of row height estimation. The scarcity of leaves made it difficult to estimate heights with the proposed method in the first flight campaign, which yielded the highest RMSE (~0.2 m). Vegetation development then improved photogrammetric reconstruction, and the RMSE decreased consistently to ~0.13 m just before the leaf removal operation. After that, the RMSE dropped sharply (~0.10 m), owing to the regularity of the rows after the canopy management operation and to the density of leaves. In the last stage of the grapevine vegetative cycle, the RMSE increased moderately, since some grapevine branches affected the photogrammetric estimation.

4.3. Field Management Operations

Whereas the analysis of the data acquired in the second flight campaign identified some excess grapevine vegetation in both P1 and P2, a canopy management operation (shoot thinning) conducted before the third flight campaign reduced it significantly (P1 had about 22 m2 and P2 had 82 m2, representing 3% and 7% of the detected grapevine vegetation, respectively). However, given the intense grapevine vegetative growth until the end of June, when the fourth flight campaign took place, more excess grapevine vegetation was detected in both plots (P1 had about 113 m2, representing 11% of the grapevine vegetation, and P2 had 306 m2, representing 17%). The next canopy management operation, leaf removal, which occurred before the fifth flight campaign, eliminated the excess grapevine vegetation in P1 and P2. From this point onward, both the phenological and the environmental context meant that no further excess grapevine vegetation was detected until the harvesting season.

Besides being a potentially useful tool to identify vineyard areas that can benefit from canopy management operations, the analysis in Figure 10 shows that excess vegetation can be estimated at any given point in time, so grapevine biomass management can be optimized accordingly. Together with multi-temporal analysis, this approach enables a more complete characterization of the evolution of vineyard parameters, as well as the construction of historical series to further define intra-season crop profiles.

5. Conclusions

Canopy management is critical to improving grapevine yield and wine quality by influencing canopy size and vigour and by reducing phytosanitary problems. As such, an operational method to estimate vineyards’ geometric and volumetric parameters via remote sensing would improve the efficiency of vineyard management. In this context, we showed the potential of a low-cost, commercial off-the-shelf UAS equipped with an RGB sensor for PV. The acquired high-resolution aerial imagery proved effective for vineyard area and volume estimation and for multi-temporal analysis. The image-processing techniques we used enabled the extraction of different vineyard characteristics and the estimation of vineyard area and canopy volume. Our method provides a quick and transparent way to assist winegrowers in managing the grapevine canopy.

RGB orthophoto mosaics provide a view of the whole vineyard for visual interpretation of the surveyed area. By combining the different photogrammetric processing outcomes with image-processing techniques, we showed that vineyard geometric and volumetric parameters can be estimated automatically. Multi-temporal analysis of vineyard vegetation development enabled the monitoring of vineyard growth. Both volume and area grew until the in-field canopy management operation for leaf removal was carried out, and decreased from that moment until the grape harvesting season. Inter-row vegetation decreased as the season progressed, due to high air temperatures and almost absent precipitation during the summer. These results were corroborated by a thorough validation using ground-truth data. The proposed method provided height estimations with a mean RMSE of 0.13 m, corresponding to an error of less than 10% of row height, even in the most complex scenarios of vegetation development (branches projecting from the sides and top of the row). After canopy management operations, the method’s effectiveness improved, benefiting from the regular row shape (RMSE ~0.10 m). Regarding area evaluation, the validation showed that the method’s overall accuracy exceeded 90% in all flight campaigns.

This study provides a more valuable and less complex crop-related data acquisition method for farmers and winegrowers. Data from other UAV-based sensors, such as multispectral and thermal infrared sensors, can also be used to estimate other grapevine parameters. Although these sensors are less cost-effective and sometimes require more expensive UASs, they can estimate other important parameters, such as vineyard water status (by estimating crop water stress to support irrigation decisions), leaf area index (LAI) from vegetation indices (which can also support canopy management operations), and the presence of potential phytosanitary problems in vineyards.

In the near future, the growing attention given by UAS manufacturers to both the PA and PV markets is expected to bring new technology to these fields, such as sensors adaptable to different UASs. Cloud-based photogrammetric processing solutions and geographic information system (GIS)-based web platforms for data analysis and results interpretation are also expected to make acquiring and interpreting crop-related UAV-based data easier and more flexible.

Author Contributions

Conceptualization, L.P., E.P. and J.J.S.; Data curation, L.P., P.M. and T.A.; Formal analysis, L.P.; Funding acquisition, E.P., R.M. and J.J.S.; Investigation, L.P., P.M., J.H. and T.A.; Methodology, L.P. and J.H.; Project administration, R.M. and J.J.S.; Resources, R.M. and J.J.S.; Software, L.P., P.M. and T.A.; Supervision, E.P., R.M. and J.J.S.; Validation, E.P. and J.J.S.; Visualization, L.P.; Writing—original draft, L.P. and E.P.; Writing—review and editing, E.P., R.M. and J.J.S.

Funding

This work was financed by the European Regional Development Fund (ERDF) through the Operational Programme for Competitiveness and Internationalisation, COMPETE 2020 under the PORTUGAL 2020 Partnership Agreement, and through the Portuguese National Innovation Agency (ANI) as a part of project “PARRA—Plataforma integrAda de monitoRização e avaliação da doença da flavescência douRada na vinhA” (No.3447).

Acknowledgments

This work was supported by North 2020 (North Regional Operational Program), as part of project “INNOVINEandWINE—Vineyard and Wine Innovation Platform” (NORTE–01–0145–FEDER–000038).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zarco-Tejada, P.J.; Hubbard, N.; Loudjani, P. Precision Agriculture: An Opportunity for EU Farmers—Potential Support with the CAP 2014–2020; Joint Research Centre (JRC) of the European Commission Monitoring Agriculture ResourceS (MARS): Brussels, Belgium, 2014.

- Ozdemir, G.; Sessiz, A.; Pekitkan, F.G. Precision viticulture tools to production of high quality grapes. Sci. Pap.-Ser. B-Hortic. 2017, 61, 209–218.

- Bramley, R.; Hamilton, R. Understanding variability in winegrape production systems. Aust. J. Grape Wine Res. 2004, 10, 32–45.

- Bramley, R. Understanding variability in winegrape production systems 2. Within vineyard variation in quality over several vintages. Aust. J. Grape Wine Res. 2005, 11, 33–42.

- Bramley, R.G.V. Progress in the development of precision viticulture—Variation in yield, quality and soil properties in contrasting Australian vineyards. In Precision Tools for Improving Land Management; Fertilizer and Lime Research Centre: Palmerston North, New Zealand, 2001.

- Smart, R.E.; Dick, J.K.; Gravett, I.M.; Fisher, B.M. Canopy Management to Improve Grape Yield and Wine Quality—Principles and Practices. S. Afr. J. Enol. Viticult. 2017, 11, 3–17. [Google Scholar] [CrossRef]

- Vance, A.J.; Reeve, A.L.; Skinkis, P.A. The Role of Canopy Management in Vine Balance; Corvallis, or Extension Service, Oregon State University: Corvallis, OR, USA, 2013; p. 12. [Google Scholar]

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412. [Google Scholar] [CrossRef]

- CeSIA, A.D.G.; Corti, L.U.; Firenze, I. A simple model for simulation of growth and development in grapevine (Vitis vinifera L.). I. Model description. Vitis 1997, 36, 67–71. [Google Scholar]

- Duchêne, E.; Schneider, C. Grapevine and climatic changes: A glance at the situation in Alsace. Agron. Sustain. Dev. 2005, 25, 93–99. [Google Scholar] [CrossRef]

- Dobrowski, S.Z.; Ustin, S.L.; Wolpert, J.A. Remote estimation of vine canopy density in vertically shoot-positioned vineyards: Determining optimal vegetation indices. Aust. J. Grape Wine Res. 2008, 8, 117–125. [Google Scholar] [CrossRef]

- Yu, X.; Liang, X.; Hyyppä, J.; Kankare, V.; Vastaranta, M.; Holopainen, M. Stem biomass estimation based on stem reconstruction from terrestrial laser scanning point clouds. Remote Sens. Lett. 2013, 4, 344–353. [Google Scholar] [CrossRef]

- Kankare, V.; Holopainen, M.; Vastaranta, M.; Puttonen, E.; Yu, X.; Hyyppä, J.; Vaaja, M.; Hyyppä, H.; Alho, P. Individual tree biomass estimation using terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2013, 75, 64–75. [Google Scholar] [CrossRef]

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990. [Google Scholar] [CrossRef]

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391. [Google Scholar] [CrossRef]

- Baofeng, S.; Jinru, X.; Chunyu, X.; Yulin, F.; Yuyang, S.; Fuentes, S. Digital surface model applied to unmanned aerial vehicle based photogrammetry to assess potential biotic or abiotic effects on grapevine canopies. Int. J. Agric. Biol. Eng. 2016, 9, 119. [Google Scholar]

- Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522. [Google Scholar] [CrossRef]

- Romero, M.; Luo, Y.; Su, B.; Fuentes, S. Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 2018, 147, 109–117. [Google Scholar] [CrossRef]

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59. [Google Scholar] [CrossRef]

- Kalisperakis, I.; Stentoumis, C.; Grammatikopoulos, L.; Karantzalos, K. Leaf area index estimation in vineyards from UAV hyperspectral data, 2D image mosaics and 3D canopy surface models. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 299. [Google Scholar] [CrossRef]

- Matese, A.; Di Gennaro, S.F.; Berton, A. Assessment of a canopy height model (CHM) in a vineyard using UAV-based multispectral imaging. Int. J. Remote Sens. 2016, 1–11. [Google Scholar] [CrossRef]

- Pölönen, I.; Saari, H.; Kaivosoja, J.; Honkavaara, E.; Pesonen, L. Hyperspectral Imaging Based Biomass and Nitrogen Content Estimations from Light-Weight UAV; SPIE: Bellingham, WA, USA, 2013; Volume 8887. [Google Scholar]

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708. [Google Scholar] [CrossRef]

- Capolupo, A.; Kooistra, L.; Berendonk, C.; Boccia, L.; Suomalainen, J. Estimating Plant Traits of Grasslands from UAV-Acquired Hyperspectral Images: A Comparison of Statistical Approaches. ISPRS Int. J. Geo-Inf. 2015, 4, 2792–2820. [Google Scholar] [CrossRef]

- Uto, K.; Seki, H.; Saito, G.; Kosugi, Y. Characterization of Rice Paddies by a UAV-Mounted Miniature Hyperspectral Sensor System. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 851–860. [Google Scholar] [CrossRef]

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree-Level. Remote Sens. 2015, 7, 15467–15493. [Google Scholar] [CrossRef]

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185. [Google Scholar] [CrossRef]

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.S. Evaluating Tree Detection and Segmentation Routines on Very High Resolution UAV LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7619–7628. [Google Scholar] [CrossRef]

- Chisholm, R.A.; Cui, J.; Lum, S.K.Y.; Chen, B.M. UAV LiDAR for below-canopy forest surveys. J. Unmanned Veh. Syst. 2013, 1, 61–68. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99. [Google Scholar] [CrossRef]

- Madec, S.; Baret, F.; de Solan, B.; Thomas, S.; Dutartre, D.; Jezequel, S.; Hemmerlé, M.; Colombeau, G.; Comar, A. High-Throughput Phenotyping of Plant Height: Comparing Unmanned Aerial Vehicles and Ground LiDAR Estimates. Front. Plant Sci. 2017, 8. [Google Scholar] [CrossRef] [PubMed]

- Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43. [Google Scholar] [CrossRef]

- Sankey, T.T.; McVay, J.; Swetnam, T.L.; McClaran, M.P.; Heilman, P.; Nichols, M. UAV hyperspectral and lidar data and their fusion for arid and semi-arid land vegetation monitoring. Remote Sens. Ecol. Conserv. 2018, 4, 20–33. [Google Scholar] [CrossRef]

- Von Bueren, S.; Yule, I. Multispectral aerial imaging of pasture quality and biomass using unmanned aerial vehicles (UAV). In Accurate and Efficient Use of Nutrients on Farms; Occasional Report; Fertilizer and Lime Research Centre, Massey University: Palmerston North, New Zealand, 2013; Volume 26. [Google Scholar]

- Vega, F.A.; Ramírez, F.C.; Saiz, M.P.; Rosúa, F.O. Multi-temporal imaging using an unmanned aerial vehicle for monitoring a sunflower crop. Biosyst. Eng. 2015, 132, 19–27. [Google Scholar] [CrossRef]

- Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648. [Google Scholar] [CrossRef]

- Castaldi, F.; Pelosi, F.; Pascucci, S.; Casa, R. Assessing the potential of images from unmanned aerial vehicles (UAV) to support herbicide patch spraying in maize. Precis. Agric. 2017, 18, 76–94. [Google Scholar] [CrossRef]

- Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.-H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706. [Google Scholar] [CrossRef]

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87. [Google Scholar] [CrossRef]

- Moeckel, T.; Dayananda, S.; Nidamanuri, R.R.; Nautiyal, S.; Hanumaiah, N.; Buerkert, A.; Wachendorf, M. Estimation of Vegetable Crop Parameter by Multi-temporal UAV-Borne Images. Remote Sens. 2018, 10, 805. [Google Scholar] [CrossRef]

- Kim, D.-W.; Yun, H.S.; Jeong, S.-J.; Kwon, Y.-S.; Kim, S.-G.; Lee, W.S.; Kim, H.-J. Modeling and Testing of Growth Status for Chinese Cabbage and White Radish with UAV-Based RGB Imagery. Remote Sens. 2018, 10, 563. [Google Scholar] [CrossRef]

- Karpina, M.; Jarząbek-Rychard, M.; Tymków, P.; Borkowski, A. UAV-BASED AUTOMATIC TREE GROWTH MEASUREMENT FOR BIOMASS ESTIMATION. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B8, 685–688. [Google Scholar] [CrossRef]

- Carr, J.C.; Slyder, J.B. Individual tree segmentation from a leaf-off photogrammetric point cloud. Int. J. Remote Sens. 2018, 39, 5195–5210.

- Mathews, A.J.; Jensen, J.L.R. Visualizing and Quantifying Vineyard Canopy LAI Using an Unmanned Aerial Vehicle (UAV) Collected High Density Structure from Motion Point Cloud. Remote Sens. 2013, 5, 2164–2183.

- Mathews, A.J. Object-based spatiotemporal analysis of vine canopy vigor using an inexpensive unmanned aerial vehicle remote sensing system. J. Appl. Remote Sens. 2014, 8, 085199.

- Weiss, M.; Baret, F. Using 3D Point Clouds Derived from UAV RGB Imagery to Describe Vineyard 3D Macro-Structure. Remote Sens. 2017, 9, 111.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Caruso, G.; Tozzini, L.; Rallo, G.; Primicerio, J.; Moriondo, M.; Palai, G.; Gucci, R. Estimating biophysical and geometrical parameters of grapevine canopies (‘Sangiovese’) by an unmanned aerial vehicle (UAV) and VIS-NIR cameras. VITIS J. Grapevine Res. 2017, 56.

- De Castro, A.I.; Jiménez-Brenes, F.M.; Torres-Sánchez, J.; Peña, J.M.; Borra-Serrano, I.; López-Granados, F. 3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications. Remote Sens. 2018, 10, 584.

- Pádua, L.; Adão, T.; Hruška, J.; Sousa, J.J.; Peres, E.; Morais, R.; Sousa, A. Very high resolution aerial data to support multi-temporal precision agriculture information management. Procedia Comput. Sci. 2017, 121, 407–414.

- Magalhães, N. Tratado de Viticultura: A Videira, a Vinha e o Terroir; Chaves Ferreira: São Paulo, Brazil, 2008; ISBN 972-8987-15-3.

- Costa, R.; Fraga, H.; Malheiro, A.C.; Santos, J.A. Application of crop modelling to Portuguese viticulture: Implementation and added-values for strategic planning. Ciência Téc. Vitiv. 2015, 30, 29–42.

- Fraga, H.; Malheiro, A.C.; Moutinho-Pereira, J.; Cardoso, R.M.; Soares, P.M.M.; Cancela, J.J.; Pinto, J.G.; Santos, J.A. Integrated Analysis of Climate, Soil, Topography and Vegetative Growth in Iberian Viticultural Regions. PLoS ONE 2014, 9, e108078.

- Johnson, L.F.; Roczen, D.E.; Youkhana, S.K.; Nemani, R.R.; Bosch, D.F. Mapping vineyard leaf area with multispectral satellite imagery. Comput. Electron. Agric. 2003, 38, 33–44.

- Fraga, H.; Santos, J.A. Daily prediction of seasonal grapevine production in the Douro wine region based on favourable meteorological conditions. Aust. J. Grape Wine Res. 2017, 23, 296–304.

- Burgos, S.; Mota, M.; Noll, D.; Cannelle, B. Use of very high-resolution airborne images to analyse 3D canopy architecture of a vineyard. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 399.

- Comba, L.; Gay, P.; Primicerio, J.; Ricauda Aimonino, D. Vineyard detection from unmanned aerial systems images. Comput. Electron. Agric. 2015, 114, 78–87.

- Nolan, A.; Park, S.; Fuentes, S.; Ryu, D.; Chung, H. Automated detection and segmentation of vine rows using high resolution UAS imagery in a commercial vineyard. In Proceedings of the 21st International Congress on Modelling and Simulation, Gold Coast, Australia, 29 November–4 December 2015; Volume 29, pp. 1406–1412.

- Poblete-Echeverría, C.; Olmedo, G.F.; Ingram, B.; Bardeen, M. Detection and Segmentation of Vine Canopy in Ultra-High Spatial Resolution RGB Imagery Obtained from Unmanned Aerial Vehicle (UAV): A Case Study in a Commercial Vineyard. Remote Sens. 2017, 9, 268.

- Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Bessa, J.; Sousa, A.; Peres, E.; Morais, R.; Sousa, J.J. Vineyard properties extraction combining UAS-based RGB imagery with elevation data. Int. J. Remote Sens. 2018, 1–25.

- Richardson, A.D.; Jenkins, J.P.; Braswell, B.H.; Hollinger, D.Y.; Ollinger, S.V.; Smith, M.-L. Use of digital webcam images to track spring green-up in a deciduous broadleaf forest. Oecologia 2007, 152, 323–334.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).