Automated Aerial Triangulation for UAV-Based Mapping

Abstract

1. Introduction

- Automated relative orientation recovery of UAV-based images in the presence of prior information regarding the flight trajectory,

- Initial recovery of image exterior orientation parameters (EOPs) through either incremental or global structure-from-motion (SfM) strategies,

- Accuracy analysis of the derived 3D reconstruction through check point analysis, and

- Comparison of the proposed approach against available commercial software, such as Pix4D.

2. Theoretical Background

2.1. Relative Orientation Recovery

2.2. Exterior Orientation Estimation

2.3. Bundle Adjustment

3. Methodology

3.1. Automated Relative Orientation

- The rotation angles ω and φ between overlapping stereo-images can be assumed to be zero, and

- The translation component along the Z-axis is approximated to be zero.
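With ω = φ = 0 and no change in flying height, the essential matrix E = [t]x Rz(κ) keeps only four non-zero entries, and the remaining unknowns (κ and the horizontal direction of the baseline) can be recovered from two conjugate points. The following is a minimal sketch in Python with NumPy, not the authors' implementation. It assumes that the two assumptions above mean ω = φ = 0 and T_Z = 0, that image coordinates are already normalized (calibrated and distortion-free), and that points follow the convention X2 = R X1 + t. The function names `rot_z` and `two_point_relative_orientation` are hypothetical.

```python
import numpy as np


def rot_z(kappa):
    """Rotation about the camera Z axis (the only rotation left when omega = phi = 0)."""
    c, s = np.cos(kappa), np.sin(kappa)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def two_point_relative_orientation(x1, x2):
    """Candidate (kappa, unit translation) pairs from two correspondences.

    x1, x2: (2, 2) arrays of normalized image coordinates (u, v) in images 1 and 2.
    Assumes omega = phi = 0 and t_z = 0, so E = [t]x Rz(kappa) has the form
    [[0, 0, ty], [0, 0, -tx], [a, b, 0]] with a**2 + b**2 == tx**2 + ty**2.
    """
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    # Coplanarity x2^T E x1 = 0 is linear in e = (ty, -tx, a, b):
    # u2*e0 + v2*e1 + u1*e2 + v1*e3 = 0, one row per correspondence.
    A = np.column_stack([x2[:, 0], x2[:, 1], x1[:, 0], x1[:, 1]])
    _, _, vt = np.linalg.svd(A)
    n1, n2 = vt[2], vt[3]  # orthonormal basis of the 2D null space of A
    D = np.diag([1.0, 1.0, -1.0, -1.0])
    # e = n1 + lam * n2 must satisfy e^T D e = 0, which is quadratic in lam.
    coeffs = [n2 @ D @ n2, 2.0 * (n1 @ D @ n2), n1 @ D @ n1]
    candidates = []
    for lam in np.roots(coeffs):
        if abs(lam.imag) > 1e-7:
            continue  # complex roots have no geometric meaning
        e = n1 + lam.real * n2
        ty, tx, a, b = e[0], -e[1], e[2], e[3]
        # [a, b] = [[-ty, tx], [tx, ty]] @ [cos(kappa), sin(kappa)]
        c, s = np.linalg.solve(np.array([[-ty, tx], [tx, ty]]), np.array([a, b]))
        t = np.array([tx, ty, 0.0])
        candidates.append((float(np.arctan2(s, c)), t / np.linalg.norm(t)))
    # The sign of t is not resolved here; a cheirality (positive depth) check is needed.
    return candidates


# Synthetic check: kappa = 0.3 rad, horizontal baseline (1.0, 0.5, 0).
kappa_true, t_true = 0.3, np.array([1.0, 0.5, 0.0])
X1 = np.array([[1.0, 2.0, 10.0], [-3.0, 1.0, 12.0]])
X2 = X1 @ rot_z(kappa_true).T + t_true
x1, x2 = X1[:, :2] / X1[:, 2:], X2[:, :2] / X2[:, 2:]
for kappa, t in two_point_relative_orientation(x1, x2):
    print(f"kappa = {kappa:.4f} rad, t = {np.round(t, 4)}")
```

Each correspondence contributes one linear equation, so two points leave a two-dimensional null space; the norm constraint then gives a quadratic with up to two real candidates. In practice a minimal solver of this kind would typically run inside RANSAC, with the candidate supported by the most inliers retained.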

3.2. Incremental Strategy for EOP Recovery

3.2.1. Local Reference Coordinate System Initialization

- There should be a sufficient number of feature correspondences within the selected image triplet, and

- There should be a good geometric configuration among the three overlapping images.
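The two criteria can be turned into a simple selection rule. The sketch below is illustrative only and assumes things not stated here: features are grouped into tracks, approximate camera positions are available (for example from the onboard GNSS), the geometric configuration is judged by the base-to-height ratio of the weakest image pair in the triplet, and the thresholds `min_common` and `min_bh` are hypothetical values.

```python
from itertools import combinations
import math


def select_initial_triplet(tracks, centers, flying_height, min_common=100, min_bh=0.1):
    """Pick the image triplet used to initialize the local reference frame.

    tracks: {image_id: set of feature-track ids observed in that image}
    centers: {image_id: (X, Y)} approximate camera positions (hypothetical input)
    Returns the qualifying triplet with the most common tracks, or None.
    """
    best, best_count = None, -1
    # Exhaustive search for clarity; a real system would only test neighbouring images.
    for triplet in combinations(sorted(tracks), 3):
        common = len(tracks[triplet[0]] & tracks[triplet[1]] & tracks[triplet[2]])
        if common < min_common:
            continue  # criterion 1: not enough correspondences in all three images
        # Criterion 2 (assumed proxy): the weakest pair must have a usable base-to-height ratio.
        weakest_bh = min(math.dist(centers[a], centers[b]) / flying_height
                         for a, b in combinations(triplet, 2))
        if weakest_bh < min_bh:
            continue
        if common > best_count:
            best, best_count = triplet, common
    return best


tracks = {"a": set(range(0, 300)), "b": set(range(50, 350)),
          "c": set(range(100, 400)), "d": set(range(100, 400))}
centers = {"a": (0.0, 0.0), "b": (10.0, 0.0), "c": (20.0, 0.0), "d": (20.5, 0.0)}
print(select_initial_triplet(tracks, centers, flying_height=40.0))
```

In this toy example, images c and d are almost co-located, so every triplet containing both is rejected even though it shares many tracks.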

3.2.2. Image Augmentation Process

Rotational Parameters Estimation:

Positional Parameters Estimation:

Compatibility Analysis for Image Augmentation:

3.3. Global Strategy for EOP Recovery

3.3.1. Multiple Rotation Averaging

3.3.2. Global Translation Averaging

3.4. Global Bundle Adjustment

4. Experimental Results

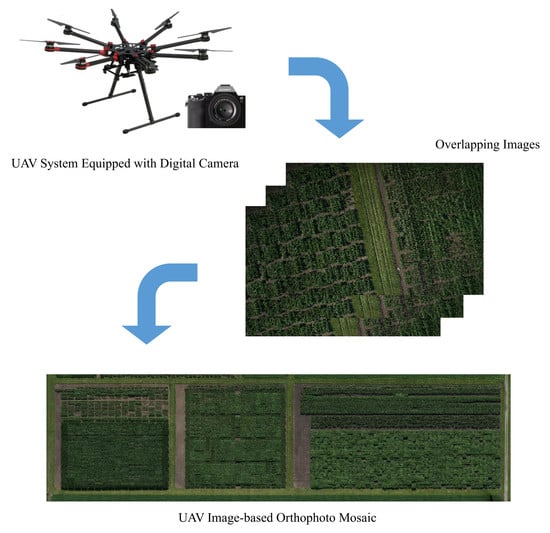

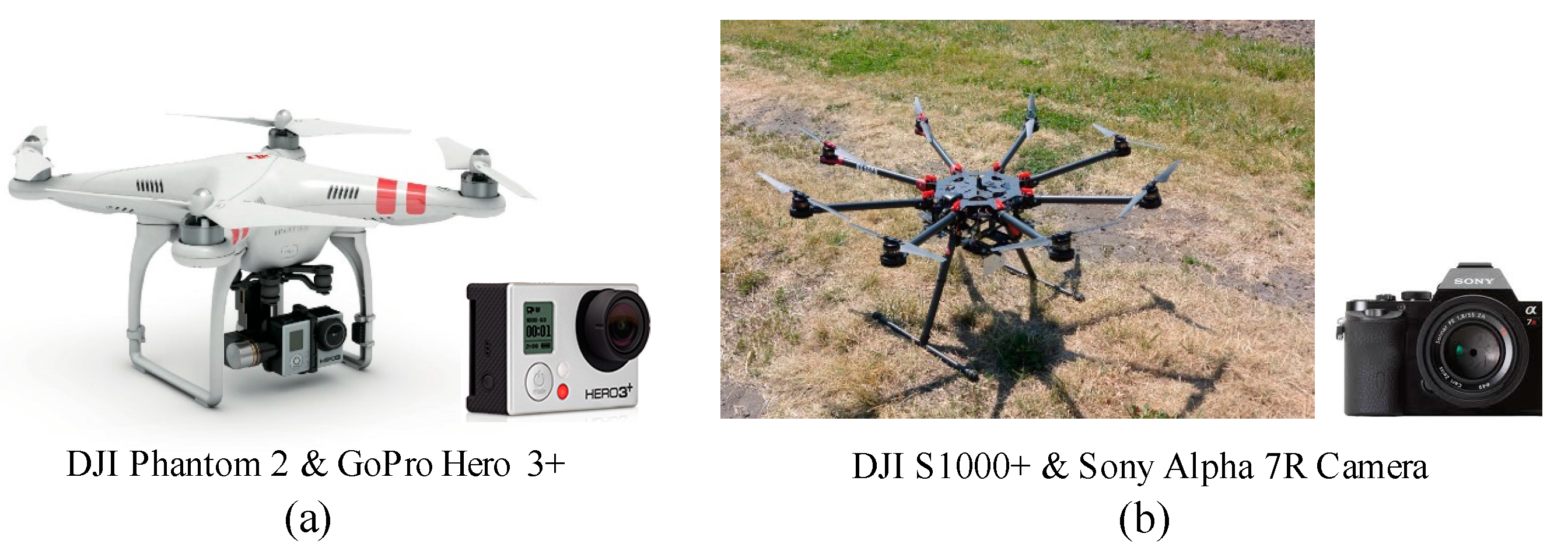

4.1. Data Description

4.2. Comparison between Incremental and Global Estimation for Image EOPs

- Using the BA-based EOPs as the reference, the global approach provides more accurate initial estimates of the image EOPs than the incremental approach.

- The reported processing times show that the global approach is faster for all four datasets, and the time saved grows with the number of images (e.g., 31.3 min versus 137.2 min for the 639 images of S1000-Agriculture-2, compared with 5.8 min versus 20.1 min for the 81 images of Phantom2-Building).
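The efficiency claim can be checked with simple arithmetic on the reported numbers. The sketch below takes the incremental and global processing times from the comparison table and the total image counts from the image-count table; it is a quick consistency check, not part of the original analysis.

```python
# (incremental, global) processing times in minutes, as reported in the comparison table.
TIMES_MIN = {
    "Phantom2-Agriculture": (92.8, 22.1),
    "Phantom2-Building": (20.1, 5.8),
    "S1000-Agriculture-1": (43.7, 11.2),
    "S1000-Agriculture-2": (137.2, 31.3),
}
# Total number of images per dataset, as reported in the image-count table.
N_IMAGES = {
    "Phantom2-Agriculture": 569,
    "Phantom2-Building": 81,
    "S1000-Agriculture-1": 421,
    "S1000-Agriculture-2": 639,
}


def summarize():
    """Rows of (dataset, images, minutes saved, speed-up factor), sorted by image count."""
    rows = []
    for name in sorted(TIMES_MIN, key=N_IMAGES.get):
        incremental, global_ = TIMES_MIN[name]
        rows.append((name, N_IMAGES[name], round(incremental - global_, 1),
                     round(incremental / global_, 2)))
    return rows


for name, n, saved, speedup in summarize():
    print(f"{name:<22}{n:>5} images  saved {saved:6.1f} min  speed-up {speedup:.2f}x")
```

Sorted by image count, the absolute saving rises from about 14 min (81 images) to about 106 min (639 images), and the speed-up factor rises from roughly 3.5x to 4.4x.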

4.3. Accuracy Analysis

4.3.1. Check Point Analysis

4.3.2. Comparison with Airborne LiDAR Data

4.4. Comparison with Pix4D

5. Conclusions and Recommendations for Future Work

Author Contributions

Funding

Conflicts of Interest


| Specs/Model | DJI Phantom 2 | DJI S1000+ |
|---|---|---|
| Weight | 1000 g (take-off weight: < 1300 g) | 4.2 kg (take-off weight: 6.0 to 11.0 kg) |
| Max Speed | 15 m/s | 20 m/s |
| Max Flight Endurance | Approximately 23 min | 15 min (9.5 kg take-off weight) |
| Diagonal Size | 350 mm | 1045 mm |

| Specs/Model | GoPro Hero 3+ Black Edition | Sony Alpha 7R |
|---|---|---|
| Image Size | 3000 × 2250 pixels (medium field of view) | 7360 × 4912 pixels |
| Pixel Size | 1.55 μm | 4.90 μm |
| Focal Length | 3.5 mm | 35 mm |
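The camera specifications determine the ground sampling distance (GSD = pixel size × flying height / focal length) and the angular coverage of each image. The sketch below applies the pinhole model to the values in the table above; it assumes nadir imagery over flat terrain, and the 20 m flying height is a hypothetical value, since flying heights are not given here. The GoPro lens has strong distortion, so its pinhole field of view is only indicative.

```python
import math

# Values from the camera specification table.
CAMERAS = {
    "GoPro Hero 3+ Black Edition": {"width_px": 3000, "height_px": 2250,
                                    "pixel_um": 1.55, "focal_mm": 3.5},
    "Sony Alpha 7R": {"width_px": 7360, "height_px": 4912,
                      "pixel_um": 4.90, "focal_mm": 35.0},
}


def gsd_m(cam, flying_height_m):
    """Nadir ground sampling distance in metres: pixel size * flying height / focal length."""
    return (cam["pixel_um"] * 1e-6) * flying_height_m / (cam["focal_mm"] * 1e-3)


def fov_deg(cam, axis="width"):
    """Pinhole field of view in degrees along the image width or height."""
    sensor_mm = cam[f"{axis}_px"] * cam["pixel_um"] * 1e-3
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * cam["focal_mm"])))


for name, cam in CAMERAS.items():
    print(f"{name}: GSD at a hypothetical 20 m = {gsd_m(cam, 20.0) * 100:.2f} cm, "
          f"FOV (width) = {fov_deg(cam):.1f} deg")
```

At the same flying height the Sony Alpha 7R gives a GSD about three times finer than the GoPro (2.8 mm versus about 8.9 mm at 20 m), while the GoPro covers a wider angle (about 67° versus 55° across the image width).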

| Values | Phantom2-Agriculture: Incremental | Phantom2-Agriculture: Global | Phantom2-Building: Incremental | Phantom2-Building: Global | S1000-Agriculture-1: Incremental | S1000-Agriculture-1: Global | S1000-Agriculture-2: Incremental | S1000-Agriculture-2: Global |
|---|---|---|---|---|---|---|---|---|
| | 1.12 | 0.83 | 0.61 | 0.43 | 0.29 | 0.17 | 1.52 | 1.24 |
| | 1.79 | 0.77 | 0.88 | 0.65 | 0.55 | 0.31 | 1.71 | 1.63 |
| | 0.94 | 0.46 | 0.53 | 0.37 | 0.27 | 0.14 | 1.86 | 1.49 |
| | 0.44 | 0.28 | 0.28 | 0.21 | 0.84 | 0.40 | 0.80 | 0.46 |
| | 0.53 | 0.33 | 0.25 | 0.20 | 0.69 | 0.37 | 0.96 | 0.51 |
| | 0.78 | 0.43 | 0.38 | 0.24 | 0.79 | 0.55 | 0.85 | 0.60 |
| Time (min) | 92.8 | 22.1 | 20.1 | 5.8 | 43.7 | 11.2 | 137.2 | 31.3 |

| | Phantom2-Agriculture | S1000-Agriculture-1 | S1000-Agriculture-2 |
|---|---|---|---|
| Number of GCPs | 10 | 9 | 10 |
| Number of Check Points | 18 | 21 | 22 |
| (pixel) | 0.88 | 1.30 | 1.16 |
| (m) | 0.01 | 0.01 | 0.03 |
| (m) | 0.01 | 0.01 | 0.03 |
| (m) | 0.04 | 0.02 | 0.04 |
| Extent of Covered Area | 280 m × 100 m | 650 m × 100 m | 410 m × 100 m |
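A check point analysis compares the ground coordinates derived from the adjusted block, controlled by the listed GCPs, against independently surveyed check points. Assuming the three rows in metres above are per-axis RMSE values in X, Y and Z (their labels were lost), each would be computed as RMSE_X = sqrt(mean((X_est - X_ref)²)). The sketch below shows that computation; the function name `checkpoint_rmse` is hypothetical and the coordinates are synthetic toy values, not the paper's data.

```python
import numpy as np


def checkpoint_rmse(estimated_xyz, surveyed_xyz):
    """Per-axis RMSE (same units as the input) between adjusted and surveyed check points.

    estimated_xyz, surveyed_xyz: (n, 3) arrays of X, Y, Z for the same n check points.
    """
    d = np.asarray(estimated_xyz, float) - np.asarray(surveyed_xyz, float)
    return np.sqrt(np.mean(d ** 2, axis=0))


# Synthetic toy example with two check points (coordinates in metres).
surveyed = np.array([[100.0, 200.0, 50.0], [150.0, 250.0, 52.0]])
estimated = surveyed + np.array([[0.01, 0.0, 0.03], [-0.01, 0.0, -0.03]])
print(checkpoint_rmse(estimated, surveyed))
```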

| Dataset | Total Number of Images | Images with Estimated EOPs: Proposed Approach | Images with Estimated EOPs: Pix4D |
|---|---|---|---|
| Phantom2-Agriculture | 569 | 569 | 487 |
| Phantom2-Building | 81 | 81 | 81 |
| S1000-Agriculture-1 | 421 | 418 | 420 |
| S1000-Agriculture-2 | 639 | 639 | 639 |
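The image counts above translate directly into the share of images each tool managed to orient. The following short calculation uses only the numbers in the table; the helper name `oriented_percent` is hypothetical.

```python
# (total images, images oriented by the proposed approach, images oriented by Pix4D)
COUNTS = {
    "Phantom2-Agriculture": (569, 569, 487),
    "Phantom2-Building": (81, 81, 81),
    "S1000-Agriculture-1": (421, 418, 420),
    "S1000-Agriculture-2": (639, 639, 639),
}


def oriented_percent(counts):
    """Percentage of images with estimated EOPs: (proposed approach, Pix4D) per dataset."""
    return {name: (100.0 * ours / total, 100.0 * pix4d / total)
            for name, (total, ours, pix4d) in counts.items()}


for name, (ours, pix4d) in oriented_percent(COUNTS).items():
    print(f"{name:<22} proposed {ours:5.1f}%  Pix4D {pix4d:5.1f}%")
```

The largest difference is for Phantom2-Agriculture, where Pix4D oriented about 85.6% of the images against all of them for the proposed approach; for S1000-Agriculture-1, Pix4D oriented slightly more (about 99.8% versus 99.3%).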

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, F.; Zhou, T.; Xiong, W.; Hasheminnasab, S.M.; Habib, A. Automated Aerial Triangulation for UAV-Based Mapping. Remote Sens. 2018, 10, 1952. https://doi.org/10.3390/rs10121952