1. Introduction

Hyperspectral images (HSIs), which include hundreds of bands, contain a great deal of information. Among the many typical applications of HSIs are civil and biological threat detection [

1], atmospheric environmental research [

2], and ocean research [

3], among others. The most commonly used technology in these applications is the classification of pixels in the HSI, referred to as HSI classification. However, HSI classification presents numerous difficulties, particularly in processing high-dimensional data and images with high spatial resolution.

Machine learning and other feature-extraction methods have been applied to HSI classification to cope with these difficulties. The relative performances of support vector learning machines (SVM), a radial basis function (RBF) neural network, and k-neighbor classifiers demonstrate that the SVM method could effectively replace the traditional method, which combines feature reduction algorithms with classification [

4]. Li [

5], however, proposed a framework that uses local binary patterns (LBPs) to extract image features and a high-efficiency extreme learning machine (ELM) as a classifier to show that the ELM classifier is more efficient than SVM methods. However, when compared with the LBP feature extraction method, the complex spectral and spatial information of HSIs requires more sophisticated feature selection methods. Deng et al. [

6] proposed a HSI classification framework based on HSI micro-texture. The framework extends local response patterns to texture enhancement to represent HSIs and uses discriminant locality-preserving projections to reduce the dimensionality of the HSI data. However, the framework does not make use of the spectral information within the HSIs and requires performance improvement.

The above-mentioned traditional machine learning methods for HSI classification all have the same disadvantage—the classification accuracy needs improvement. Since these traditional methods are based on hand-crafted features, hyperspectral data need an algorithm that can learn the representative and discriminative features [

7]. Recently, deep learning, an alternative to the traditional machine learning algorithms discussed above, has been introduced into HSI classification, and is able to extract deep spatial and spectral features from hyperspectral data. Much of the pioneering work on deep learning applied to hyperspectral data classification has shown that identification of deep features leads to higher classification accuracies for hyperspectral data classification [

8].

In 2014, Chen et al. [

8] first proposed a deep learning framework to merge spatial and spectral features. The deep learning framework combined principal component analysis (PCA) with deep learning architecture, and to obtain classification results it used stacked autoencoders to obtain high-level features and logistic regression; this framework was abbreviated to SAE-LR. Although SAE-LR has a disadvantage in terms of its training time, it showed that deep learning methods had a large potential for HSI classification. The following year, Makantasis et al. [

9] exploited a deep supervised method for HSI classification through a convolutional neural network (CNN). The approach used randomized PCA (R-PCA) to reduce the dimensions of raw input data, a CNN to construct high-level features, and a multi-layer perceptron (MLP) for classification. In 2016, Zhao and Du [

10] proposed a spectral–spatial feature-based classification (SSFC) framework. Their SSFC framework used a balanced local discriminant embedding (BLDE) algorithm to extract spectral features, a CNN to find high-level spatial features, and a multiple-feature-based classifier for training. Chen et al. [

11], also in 2016, proposed a deep feature extraction (FE) method based on a CNN and built a deep FE model based on a three-dimensional (3D) CNN to extract the spectral–spatial characteristics of HSIs. This paper established a direction for the application of a CNN and its extended network in the field of HSI classification.

In the last two years, Li et al. [

12] have proposed a 3D CNN framework for accurate HSI classification and used original 3D high-level data directly as an input without actively extracting the features of the HSI. This framework does not rely on any pre- or post-processing, but effectively extracts spectral–spatial features and does not distinguish between these two kinds of features. Distinguished from the above-mentioned deep-learning-based methods, Zhong et al. [

13] proposed a spectral–spatial residual network (SSRN) that uses spectral and spatial residual blocks to learn the deep distinguishing features from the rich spectral features and spatial backgrounds of HSIs.

Among deep-learning-based methods, the SSRN achieves the best performance compared to other methods for three main reasons. First, the SSRN learns spectral and spatial features separately, meaning that more discriminative features can be extracted. Second, SSRN depend on CNN to extract high-level features. Third, SSRN has a deeper CNN structure than other deep learning methods. Early work showed that the deeper a CNN is, the higher the accuracy. The disadvantage of the SSRN, however, is an overly long training time.

Recently, other methods have been devised for which it is claimed that use of additional features can improve classification accuracy. Zhou et al. [

14] incorporated the group knowledge of the hyperspectral features for deep-learning-based-method spatial-spectral classification. Ma et al. [

15] took a local decision based on weighted neighborhood information. Maltezos et al. [

16] introduced a set of features for improving overall classification accuracy. However, only for HSI classification might these methods lead to sub-optimal results, because the SSRN achieved its optimal HSI classification accuracy by learning deep spectral and spatial representations separately.

Inspired by the SSRN and to alleviate its problems, we aimed at building a deeper convolution network that can learn deeper spectral and spatial features separately, but much faster. In 2017, Gao et al. proposed a new deep network structure, DenseNet [

17], based on Google Inception [

18] and Residual Net [

19] (ResNet). As the depth of the network increases, DenseNet can reduce the problem of gradients becoming zero, and the structure can more effectively utilize features and enhance feature transfer between convolution layers. Despite its advantages, DenseNet has a long training time. To reduce the training time and prevent overfitting, we use the parametric rectified linear unit (PReLU), a dynamic learning rate, and other technical improvements (see

Section 2.3).

We propose an end-to-end fast and dense spectral–spatial convolution (FDSSC) network framework for HSI classification. The FDSSC framework has the following three characteristics distinguishing it from the above-mentioned deep learning based methods:

- (1)

It is an end-to-end spectral–spatial convolution network without feature engineering as compared with SAE-LR, Makantasis’s method, and the SSFC framework. Without relying on PCA, R-PCA, or BLDE to reduce the dimension, our framework completely utilizes the CNN to reduce the high dimensionality and to automatically learn spatial and spectral features separately at a very high level, which is more effective and robust. Moreover, the FDSSC framework uses a smaller training sample size than SAE-LR and Makantasis’s method, while achieving higher accuracy.

- (2)

It has a deeper structure than the SSFC framework, and the methods in [

11,

12] which are CNN-based deep learning methods. A deeper structured CNN can learn more useful deep spatial and spectral features, which leads to extremely high accuracy, and thus the FDSSC framework has better performance than previous methods.

- (3)

It reduces the training time while achieving state-of-the-art performance. Although it has a deeper structure, the FDSSC framework is easier to train than other deep-learning-based methods. Specifically, it achieves the best accuracy in only 80 epochs, compared with 600,000 epochs for SAE-LR and 200 epochs for the SSRN framework.

The rest of this paper is structured as follows: In

Section 2, we present our proposed FDSSC framework. In

Section 3, we introduce the HSI dataset and set up our proposed method. In

Section 4, we present the HSI classification results of the proposed framework and discuss the performance and training time compared with other classification methods.

Section 5 provides a summary, as well as suggestions for future work.

2. Proposed Framework

In this section, we explain the FDSSC framework in detail, elaborate on how to extract spectral and spatial features separately from HSI, how to go deeper with a densely-connected structure, and how it manages fast training and prevents overfitting. At the end, we summarize all of the steps in a graphical flowchart to explain the FDSSC network and describe the framework to clarify the advantages of the proposed method.

2.1. Extracting Spectral and Spatial Features Separately from HSI

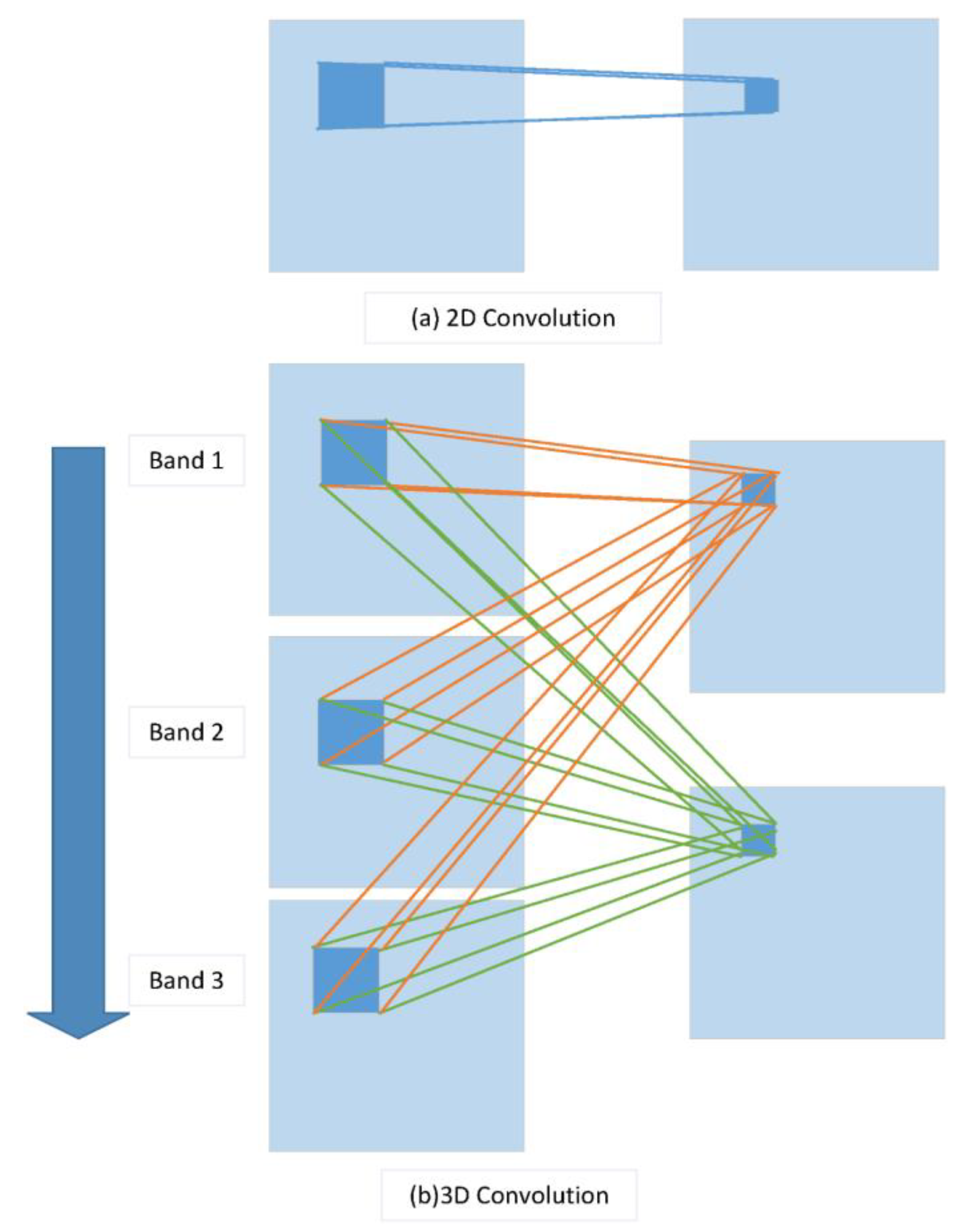

A 1D CNN extracts spectral features, whereas a 2D CNN extracts local spatial features of pixels. However, HSIs contain both abundant spatial and spectral information. For HSI classification, this means to the use of a 3D CNN, which can extract both types of information. As shown in

Figure 1a, the 2D convolution sends a channel of the input image to another feature map after a convolution kernel operation. For an input image with three channels of spectral information (Band1, Band2, and Band3), a 3D convolution processes the data from three channels using two convolution kernels to obtain two characteristic maps, as shown in

Figure 1b. Within the neural network, the value

at position

on the

feature cube in the

layer can be formulated as follows [

12]:

where the feature map attached to the current feature map in the

layer is denoted

, the length and width of the convolution kernel in space are denoted by

and

, respectively, the size of the 3D convolution kernel along the spectral dimension is denoted

, the

th value of the kernel connected to the

th feature cube in the preceding layer is denoted

, the bias on the

feature cube in the

layer is denoted

, and the activation function is denoted

.

When a 3D CNN is applied to HSI classification, the target pixel is at the center of an -size block taken from the original pixels of the HSI as the input of the network, where is the size of the image block in the spatial domain and is the spectral dimension of the HSI. After the convolution and pooling step, the results are converted into 1D feature vectors. Finally, the feature vectors are input into a classifier to obtain the classification results.

The above operations describe the general process of a 3D CNN-based deep learning method. The key to these operations is the size of the convolution kernel, because features determine accuracy. Taking [

12] as an example, a convolution kernel of

or similar size is used to learn the spectral and spatial features at the same time. Distinguished from obtaining the spectral and spatial features together, the proposed framework uses the CNN to learn the spectral and spatial features separately to extract more discriminative features. Next, we explain how to use different-sized kernels to achieve this.

A kernel of size learns the spectral features from a HSI. Local spatial features exist in the HSI space, and the 2D convolution process aims to extract the local spatial features. However, the convolution with a kernel size of does not extract any spatial features because it does not consider the relationship between pixels and their neighbors in the spatial field. Nevertheless, the convolution of a kernel size of can make linear combinations or integrate spatial information for each pixel in a spatial field. Therefore, for 3D hyperspectral data a kernel of size of extracts spectral features and perfectly retains the spatial features.

The spectral information is encoded within bands of hyperspectral data, which is the reason for the high dimensionality of hyperspectral data. However, after spectral features are learned by a kernel of size , the high dimensions of the hyperspectral data can be reduced by a 3D CNN and a reshaping operation. The key to reducing high dimensionality lies in the method of padding the 3D convolution layer. “Same” and “valid” are two frequently used ways of padding. “Same” denotes convolution results at the reserved boundary, which usually cause the output shape to be the same as the input shape. “Valid” represents only effective convolution; that is, the boundary data are not processed. The valid convolution is used to reduce dimensions and retain extracted spectral features and raw spatial information.

A kernel of size of learns the spatial features from a HSI after the spectral features have been learned. By reducing the high dimension, a kernel of size can learn the spatial features from the reserved spatial information of the previous step.

In short, our framework uses a 3D convolution layer of a kernel of size to learn the spectral features. Next, the high dimension of the feature maps is reduced, and then a 3D convolution layer of a kernel of size learns the spatial features. Finally, the classification result is obtained by average pooling, flattening, and a fully-connected layer.

2.2. Going Deeper with Densely-Connected Structures

2.2.1. Densely-Connected Structure

Assume that the CNN has

convolution layers,

is the output of the

layer and

represents the complex nonlinear transformation operations in the

convolution layer. The connected structure of the traditional CNN is such that the output of the

layer is the input of the

layer:

As shown in

Figure 2, DenseNet [

17] uses an extremely densely-connected structure, with the feature map of the output of the zeroth to the

layers acting as the input to the

layer. The connected structure is formulated as

DenseNet combines the number of channels and leaves the value of the feature maps unchanged. To promote the down-sampling of the framework, DenseNet is divided into multiple densely-connected blocks called Dense Blocks, with a transition layer connecting each one. Each layer of DenseNet directly connects to the input and the prior layer, resulting in a hidden deep supervision. This connected structure reduces the phenomenon of gradient disappearance and thus constructs a deeper network. In addition, DenseNet has a regularizing effect that inhibits overfitting.

2.2.2. Separately Learning Deeper Spectral and Spatial Features

The densely-connected structure is used to learn deeper spectral and spatial features from HSIs. The small cube block is the input of our model. To improve down-sampling and separately learn the deeper spatial and spectral features of HSIs, we divided the model into two densely-connected blocks called dense spectral and spatial blocks.

Dense spectral blocks identify the deeper spectral features between multiple channels of a HSI. The first 3D convolution layer processes the original pixel data of size

to produce

feature maps with size

. The maps are the input to a dense spectral block denoted

, where the subscript 1 represents the data in the dense spectral block of the model and the superscript 0 represents the data in the starting position of the dense spectral block. The 3D convolution layers (including the first 3D convolution layer) use

kernels of size

to learn deeper spectral features. The convolution layer in the dense spectral block is recorded as

. Since the model is densely connected, the input of the

layer is

As shown in

Figure 3, the size of the input and output feature maps of each composite convolution layer is the constant value

and the number of output feature maps is also a constant,

k. However, the number of input feature maps increases linearly with the number of composite convolution layers. The number of the input feature maps can be formulated as follows:

where

is the index of the initial feature map. Through the dense spectral block, the channel feature maps merge to become

, and successfully learn deeper spectral features and keep the spatial information.

The reducing dimensional layer connects the dense spectral block and dense spatial block. The aim is to compress the model and reduce the high dimensionality of feature maps. Inside the dense spectral and spatial blocks, the method of padding a 3D convolution layer is “same”, which is the reason the output sizes are constant (). However, in reducing the dimensional layer, the method used for padding the 3D convolution layer is “valid” to change the size of feature maps.

As shown in

Figure 4,

feature maps with a size of

proceed through the 3D convolution layer, which has a kernel size of

and a kernel number

. Due to the 3D convolution layer with “valid” padding, the results is

p feature maps of size

. Through a reshaping operation,

channels of

feature maps become one channel of size

. Then, a 3D convolution layer that has a kernel size of

and a kernel number

n transforms the feature map to an

with

channels.

In summary, through two 3D convolution layers with “valid” padding and reshaping, the size of the feature maps becomes , which reduces the space size, the large number of channels, and the high dimensionality of the data blocks. This process facilitates the extraction of new features from the dense spatial block.

The dense spatial block learns the deeper spatial features of the HSI. For the convolution layer in the dense spatial block, the kernel size is

, and the number of kernels is also

. The convolution layer in the dense spatial block is termed

. The output of the convolution layer of the

th layer in the dense spatial block is given by

As shown in

Figure 5, the size of the input and output feature maps of each convolution layer are of a constant size (

) and the number of output feature maps is also constant with value

k. The number of input feature maps is the same as in Equation (5).

2.3. Going Faster and Preventing Overfitting

There are a large number of training parameters in our framework, which means long training times and a tendency to overfit the training sets. Here, we explain how our framework is able to be faster and prevent overfitting.

We selected PReLU as the activation function [

20]. It introduces a very small number of parameters on the basis of the ReLU [

21]. Its formula is

where

is the input of the nonlinear activation on the

th channel and

is a learnable parameter that determines the slope of the negative part. PReLU adopts the momentum method when updating

:

For the updating formula,

is the momentum and

is the learning rate. When updating

, the weight decay should not be used because

may tend to zero.

is used as the initial value. Although ReLU is a useful nonlinear function, it hinders counter-propagation, whereas PReLU makes the model converge more quickly. Batch normalization (BN) [

22] adds standardized processing to the input data of each layer in the training process of a neural network and means that the gradients converge faster, saving time and resources during model training. For the proposed framework, BN and PReLU are added before the 3D convolution layer, except for the first 3D convolution layer.

Early stopping and the dynamic learning rate are also used when training a model. Stopping early means that, after a certain number of epochs (such as 50 in this paper), if the loss is no longer decreasing, the training process will be stopped early. This reduces the training time as well as preventing overfitting. We adopted a variable learning rate because the step size should decrease as the result approaches an optimal value. With a better initial learning rate, the learning rate is halved when the precision does not increase after a certain number of epochs (such as 10 epochs in this paper). If precision no longer increases after a certain number of epochs, the learning rate will be reduced by half again and will loop until it is less than the set minimum learning rate. In this paper, the minimum learning rate was set to 0; that is, the learning rate looped until the maximum number of epochs was reached.

Since the network of the proposed model is deeper, we used a dropout layer [

23] before the full connection layer to reduce the possibility of overfitting. We set the dropout rate to 50% because at this point the network structure randomly generated by the dropout layer is the greatest and produced the best results. Cross-validation prevents overfitting for complex models, so we divided our datasets into a training, validation, and test datasets for cross-validation.

2.4. Fast Dense Spectral–Spatial Convolution Framework

2.4.1. Objective Function

HSI classification presents a typical classification problem. For such problems, a cross-entropy loss function is commonly used to measure the difference between predicted value and real value to optimize the parameters of the model. In this paper, the predicted value of the FDSSC framework is a vector,

, where

, and is formulated as follows:

where

is the parameter of the FDSSC model to be optimized and

is the number of categories to be classified. Since HSI classification requires multiple classification discriminations, we performed a softmax regression, with the loss function

where

denotes the size of the mini-batch,

the number of categories to be classified,

the

th deep feature belonging to the

th class,

the

jth column of the weights

W in the last fully connected layer, and

the bias term.

Therefore, the objective function of the FDSSC framework, , is

2.4.2. Fast Dense Spectral–Spatial Convolution Network for Classification of Labeled Pixels

For a hyperspectral image with

channels and

size,

r was selected as 9; that is, a target pixel served as the center of a small cube with size

selected from the original pixel data as the input of the neural network. In this paper, the convolution kernel number of dense blocks

was 12 and the number of convolution layers of dense blocks

was 3. The FDSSC network is shown in

Figure 6.

For the following detailed explanation, BN and PReLU are added before all convolution and average pooling layers, except the first 3D convolution layer. As shown in

Figure 6, the

original data pass through the first 3D convolution layer generated

feature maps of size

because the stride of the first convolution layer is

and the method of padding is “valid.” For the convolution layers of the dense spectral block, the kernel size is

, the kernel number is 12, the method of padding is “same”, and the stride is

, so the output of each convolution layer is 12

feature maps containing the learned spectral features. Merging all output and the initial input, the size of feature maps is unchanged and the number of channels is

.

In reducing the dimensional layer, 60 feature maps with a size of proceed through the 3D convolution layer, which has a kernel size of and a kernel number of 200. Since the 3D convolution layer has “valid” padding, there are 200 feature maps. Through a reshaping operation, the channels of feature maps become one feature map with a size of . Next, a 3D convolution layer with and a size of transformed the feature map into a with channels.

For the convolution layers of the dense spatial block, the kernel size is , the kernel number is 12, and the padding is “same,” so each output of the convolution layers is 12 feature maps to learn the deeper spatial features. Similar to the dense spectral block, 60 feature maps with a size of are produced.

Finally, the 3D average pooling layer with a pooling size changes the size of the feature maps to . Through the flattening operation, dropout layer, and fully-connected layers, a prediction vector is produced, where is the number of categories to be classified.

2.4.3. Fast Dense Spectral–Spatial Convolution Framework

Summarizing the above steps, we have proposed a framework that learns deeper spectral and spatial features while reducing the training time compared to deep learning CNN-based methods. The FDSSC framework is shown in

Figure 7.

The partition of HSI data and labels is the first step of the proposed framework. For cross-validation, we randomly divided the labeled pixels and corresponding labels into training, validation, and testing datasets selected with size from the original HSIs, denoting these datasets , , and , respectively.

Taking the cross-entropy as the objective function, and were utilized to train the FDSSC network to obtain the best FDSSC model under the control of the dynamic learning rate and early stopping. The FDSSC framework was only used to optimize the parameters of the model through back-propagation, and tested the initial trained models using . Through cross-validation, the FDSSC could obtain the best-trained model. Finally, FDSSC made use of the best-trained model to obtain three evaluation indices of performance by and classified all datasets.

Combining

Figure 6 and

Figure 7 clarifies the technical advantages of the proposed method. First, the FDSSC network only uses a convolution layer and an average pooling layer to learn features and the fully-connected layer as a classifier, so it is an end-to-end framework and reduces high dimensions without complicated feature engineering. Second, because of the densely-connected method, the FDSSC network has a deeper structure resulting in extremely efficient performance. Finally, the convergence rate of the model is very fast because of the BN and PReLU applied to the FDSSC network and dynamic learning rate, and early stopping. Therefore, the training time of the proposed framework is shorter, and although the FDSSC model has a high quantity of parameters, it lacks overfitting on account of the dropout layer in the FDSSC network, early stopping, and cross-validation.

4. Experimental Results and Discussion

In our experiment, we compared the proposed FDSSC framework to other deep-learning-based methods, that is, SAE-LR [

8], CNN [

9], 3D-CNN-LR [

11], and the state-of-art SSRN method [

13] (only for labeled pixels). SAE-LR was implemented with Theano [

28]. CNN, SSRN, and the proposed FDSSC were implemented with Keras [

29] using TensorFlow [

30] as a backend. 3D-CNN-LR was obtained from the literature [

11]. In the following, the detailed classification accuracy and training times are shown and discussed.

4.1. Experimental Results

In our experiment, we randomly selected 10 groups of training samples from the KSC, UP, and IN datasets. Experimental results are given in the form “mean ± variance.” The training and testing time results were obtained using the same computer, which was configured with 32 GB of memory and a NVIDIA GeForce GTX 1080Ti GPU. The OA, AA, and kappa coefficient were used to determine the accuracy of the classification results. Work from [

9] was denoted CNN. The input spatial size is important for the 3D convolution method. Therefore, to ensure a fair comparison, an appropriate spatial size was chosen for each method. For the SSRN method, the classification accuracy increases with the spatial size, so we used an input spatial size of

, which was the same as that of the FDSSC framework.

Figure 10,

Figure 11 and

Figure 12 show classification maps for each of the methods.

The comparison of deep-learning-based methods relies heavily on training data. Sometimes a method may appear better, but in reality it simply has more training data. Therefore, the same amount of training data should be used for all of the methods compared. However, for some methods, such as the CNN for the IN dataset, when we used the same proportion to train the model the overall accuracy would fall by approximately 2%. Therefore, in order to obtain the best accuracy for each model, in these cases we use the same training proportion as previously used in the literature. For SAE-LR, the split ratio of training, validation, and testing data was 6:2:2; for a CNN, the split ratio was 8:1:1; for the SSRN method, the split ratio was 2:1:7 for the IN and KSC datasets, and 1:1:8 for the UP dataset; for the proposed FDSSC framework, we used 20% of the labeled pixels as the training set, and 10% and 70% for validation and testing datasets, respectively, for the IN and KSC datasets, and 10%, 5%, and 85% for the UP dataset.

Table 4 shows the OA, AA, and kappa coefficient for the different methods for the KSC, IN, and UP datasets. From

Table 4, it can be clearly seen that the proposed FDSSC framework is superior to SAE-LR, CNN, and 3D-CNN-LR methods. For the state-of-the-art SSRN method, we note slight improvements of 0.02%, 0.37%, and 0.04% for the KSC, IN, and UP datasets, respectively. There is no more obvious improvement because the OAs of two of the methods are higher than 99%.

Table 5 summarizes the average training times, training epochs, and testing times of 10 runs of the SAE-LR, CNN, SSRN, and FDSSC methods. SAE-LR was trained with 3300 epochs of pre-training and 400,000 epochs of fine-tuning [

8]; the training time for fine-tuning was only 61.7 min. For a CNN [

9], the training process of this model converged in almost 40 epochs, but in our experiments the model trained with 120 epochs achieved the best accuracy. The SSRN method needed 200 training epochs [

13] and the FDSSC framework only needed 80 training epochs to achieve the best accuracy. Therefore, the FDSSC training time was less than that of other deep-learning-based methods. The hyperspectral data became larger from KSC to UP, and the time difference of the FDSSC framework increased compared with other deep-learning-based methods. Therefore, the larger the hyperspectral data, the more time was reduced by the FDSSC framework.

4.2. Discussion

In terms of accuracy of the HSI classification methods, for deep-learning-based methods, our experiments show that the deeper the framework is, the higher the classification accuracy. The proposed FDSSC framework is obviously superior to the SAE-LR, CNN, and 3D-CNN-LR methods. Compared with the SSRN method, which has state-of-the-art accuracy, the FDSSC method improves OA and AA by 0.40% and 11.23%, respectively, for the IN dataset. It is precisely because of the greater depth of the FDSSC network that the spectral and spatial features of HSIs are more effectively utilized, with better feature transfer between the convolution layers. Although the training size of the SAE-LR and CNN methods are greater than that of the FDSSC framework, the FDSSC framework has higher accuracy than the SAE-LR and CNN methods. In addition, compared with the 24 kernel numbers of the SSRN method, the FDSSC framework uses only 12 kernel numbers, and the model is narrower.

In terms of the training time, the FDSSC framework takes less time and has the characteristics of fast convergence. Many deep learning methods, such as the SSRN, use ReLU, but the FDSSC framework uses PReLU. Problems with ReLU include neuronal death and the offset phenomenon. The former occurs because when , ReLU will be in the hard-saturation area. As training advances, part of the input will fall into the hard-saturation area, meaning that the corresponding weight cannot be updated. The latter occurs because the mean of the activations is always greater than zero. Neuronal death and offset phenomena jointly influence the convergence of a network. Compared with ReLU, PReLU converges faster because the output of PReLU is closer to zero. BN and dynamic learning rate are the other reasons for fast convergence. Thus, compared with the 400,000 epochs required by the SAE-LR method, 200 epochs required by the SSRN method, and 120 epochs by the CNN, the FDSSC framework needs only 80 epochs to obtain the best accuracy, which leads to a shorter training time than other deep-learning-based methods.

Therefore, taking both accuracy and running time into account, we conclude that the FDSSC framework has state-of-the-art accuracy with less required training time than methods achieving similar accuracy.

5. Conclusions and Future Work

In this paper, we propose an end-to-end, fast, and dense spectral–spatial convolution framework for HSI classification. The most significant features of the proposed FDSSC framework are depth and speed. Furthermore, the FDSSC framework has no complicated mechanism for reducing the dimensionality, and instead uses original 3D pixel data directly as input. The proposed framework uses two different dense blocks to extract abundant spatial features and spectral features in HSIs automatically. The densely-connected arrangement of dense blocks deepens the network, reducing the problem of gradient disappearance. The result is that the classification precision of the FDSSC framework reaches a very high level. We introduced BN, dropout layers, and dynamic learning rates, and adopted PReLU as the activation function of the neural network to initialize fully-connected layers. These improvements led the FDSSC framework to converge faster and prevented overfitting, such that only 80 epochs were needed to achieve the best classification accuracy.

The future direction of our work is hyperspectral data segmentation, aimed at segmenting hyperspectral data based on our classification work. We plan to study an end-to-end, pixel-to-pixel deep-learning-based method for hyperspectral data segmentation.