Spatial–Spectral Fusion Based on Conditional Random Fields for the Fine Classification of Crops in UAV-Borne Hyperspectral Remote Sensing Imagery

Abstract

1. Introduction

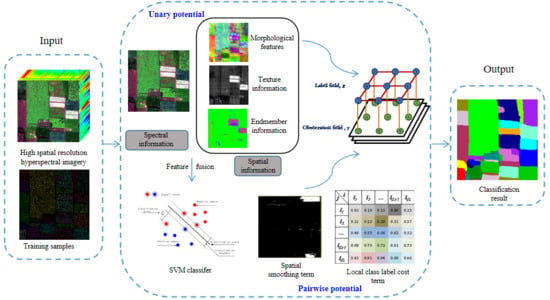

2. Methods

2.1. The Improved Conditional Random Field (CRF) Model

2.1.1. Unary Potential

- (1) Homogeneity: reflects the local uniformity of the image grayscale;
- (2) Angular second moment: reflects the uniformity of the grayscale distribution of the image and the coarseness of the texture;
- (3) Contrast: reflects the amount of local grayscale variation in the image;
- (4) Dissimilarity: measures the degree of dissimilarity between the gray values of neighboring pixels;
- (5) Mean: indicates the degree of regularity of the texture;
- (6) Entropy: reflects the complexity or non-uniformity of the image texture.
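These six statistics correspond to the usual grey-level co-occurrence matrix (GLCM) texture measures. The sketch below shows one common way to compute them for a single window with NumPy. The window size, number of grey levels, and pixel offset used in this study are not given in this section, so the defaults here (8 grey levels, right-hand neighbour) are assumptions, and `quantize`, `glcm`, and `texture_features` are hypothetical helper names.

```python
import numpy as np

def quantize(band, levels=8):
    """Linearly rescale a 2-D band to integer grey levels 0..levels-1."""
    lo, hi = float(band.min()), float(band.max())
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)

def glcm(window, levels=8, offset=(0, 1)):
    """Symmetric, normalized co-occurrence matrix of a quantized window.

    `offset` is a non-negative (row, col) displacement; (0, 1) pairs each
    pixel with its right-hand neighbour.
    """
    dr, dc = offset
    rows, cols = window.shape
    a = window[: rows - dr, : cols - dc].ravel()  # reference pixels
    b = window[dr:, dc:].ravel()                  # neighbour pixels
    p = np.zeros((levels, levels), dtype=float)
    np.add.at(p, (a, b), 1.0)                     # count grey-level pairs
    p = p + p.T                                   # count both directions
    return p / p.sum()

def texture_features(p):
    """The six texture statistics listed above, from a normalized GLCM."""
    i, j = np.indices(p.shape)
    nz = p > 0                                    # avoid log(0) in the entropy
    return {
        "homogeneity": np.sum(p / (1.0 + (i - j) ** 2)),
        "angular_second_moment": np.sum(p ** 2),
        "contrast": np.sum(p * (i - j) ** 2),
        "dissimilarity": np.sum(p * np.abs(i - j)),
        "mean": np.sum(i * p),
        "entropy": -np.sum(p[nz] * np.log(p[nz])),
    }
```

In a full pipeline one would slide such a window over a band (for example, a leading MNF component) and stack the resulting feature images as extra layers; a checkerboard window, for instance, gives maximal contrast and dissimilarity for two grey levels and an entropy of ln 2.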

2.1.2. Pairwise Potential

2.2. Algorithm Flowchart

- (1) An MNF rotation is applied to the original image: an estimate of the noise covariance matrix is used to decorrelate and rescale the noise in the data, and a principal component transform is then applied, so that the noise in the transformed bands has unit variance and the bands are uncorrelated;
- (2) Representative features are selected from the perspectives of mathematical morphology, spatial texture, and mixed pixel decomposition, and are then combined with the spectral information of each pixel to form a spectral–spatial fusion feature vector. An SVM classifier models the relationship between the class labels and the fused features, and the class probabilities of each pixel are estimated independently from its feature vector;
- (3) The spatial smoothing term and the local class label cost term model the spatial contextual information between each pixel and its neighborhood through the label field and the observation field. Following the principle of spatial correlation, both terms encourage adjacent pixels to take the same class label.
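Step (1) of the flowchart, the MNF rotation, can be illustrated with a small NumPy implementation: the noise covariance is whitened first, and a principal component transform is then applied to the whitened data. The noise estimator is an assumption: this sketch uses the simple shift-difference method (differences between horizontally adjacent pixels), and `shift_difference_noise_cov` and `mnf` are illustrative names, not the software used in the study.

```python
import numpy as np

def shift_difference_noise_cov(cube):
    """Noise covariance of a (rows, cols, bands) cube from horizontal differences.

    If neighbouring pixels share the same signal and carry independent noise,
    the difference has twice the noise covariance, hence the division by 2.
    """
    bands = cube.shape[2]
    diff = np.diff(cube.astype(float), axis=1).reshape(-1, bands)
    return np.cov(diff, rowvar=False) / 2.0

def mnf(cube):
    """Minimum noise fraction transform; returns (components, eigenvalues, transform)."""
    rows, cols, bands = cube.shape
    x = cube.reshape(-1, bands).astype(float)
    x = x - x.mean(axis=0)
    # Stage 1: noise whitening, so the noise becomes unit-variance and uncorrelated.
    n_vals, n_vecs = np.linalg.eigh(shift_difference_noise_cov(cube))
    whiten = n_vecs / np.sqrt(n_vals)
    # Stage 2: PCA of the whitened data, ordered by decreasing variance (i.e. SNR).
    s_vals, s_vecs = np.linalg.eigh(np.cov(x @ whiten, rowvar=False))
    order = np.argsort(s_vals)[::-1]
    transform = whiten @ s_vecs[:, order]
    return (x @ transform).reshape(rows, cols, bands), s_vals[order], transform
```

Because the second stage is an orthogonal rotation, the noise stays white after it, and the leading components are those with the highest signal-to-noise ratio; keeping only those is the usual way MNF is used for dimensionality reduction.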
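For step (2), the sketch below shows two pieces under stated assumptions. `fuse_features` standardizes each feature group (spectral, texture, morphological, abundance) and concatenates them into one vector per pixel. `pairwise_coupling` turns one-versus-one pairwise probabilities into per-pixel class probabilities with the second method of Wu, Lin and Weng (2004), the approach LIBSVM uses for multi-class probability outputs. Per-group z-scoring and this coupling step are assumptions about how the fused features and SVM probabilities were produced, and both function names are hypothetical.

```python
import numpy as np

def fuse_features(*groups):
    """Standardize each (n_pixels, n_features) group column-wise, then concatenate.

    Groups could be MNF spectra, texture measures, morphological profiles and
    unmixing abundances; per-column z-scoring is an illustrative assumption.
    """
    scaled = []
    for g in groups:
        g = np.asarray(g, dtype=float)
        std = g.std(axis=0)
        std[std == 0] = 1.0  # constant features become zeros instead of dividing by 0
        scaled.append((g - g.mean(axis=0)) / std)
    return np.hstack(scaled)

def pairwise_coupling(r):
    """Class probabilities from pairwise estimates (Wu, Lin and Weng 2004, method 2).

    r[i, j] estimates P(y = i | y in {i, j}, x), with r[j, i] = 1 - r[i, j].
    Minimizes p^T Q p subject to sum(p) = 1, where Q[i, i] = sum_{s != i} r[s, i]^2
    and Q[i, j] = -r[j, i] * r[i, j], by solving the KKT linear system.
    """
    r = np.array(r, dtype=float)           # copy, so the caller's array is untouched
    k = r.shape[0]
    np.fill_diagonal(r, 0.0)               # diagonal entries are not used
    q = -r.T * r                           # off-diagonal entries of Q
    np.fill_diagonal(q, (r ** 2).sum(axis=0))
    kkt = np.zeros((k + 1, k + 1))
    kkt[:k, :k] = q
    kkt[:k, k] = 1.0                       # Lagrange multiplier column
    kkt[k, :k] = 1.0                       # sum(p) = 1 constraint row
    rhs = np.zeros(k + 1)
    rhs[k] = 1.0
    return np.linalg.solve(kkt, rhs)[:k]
```

With consistent pairwise estimates, for example r[i, j] = p_i / (p_i + p_j), the coupling recovers p exactly. In CRF classifiers the unary potential is then commonly taken as the negative log of these probabilities.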
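Step (3) can be approximated, for illustration only, by a generic grid CRF whose energy is the sum of a unary term (the negative log probability of each pixel's label) and a contrast-sensitive Potts smoothing term over 4-connected neighbours, minimized with iterated conditional modes (ICM). This is not the SSF-CRF pairwise potential: the local class label cost term is not modeled, and `icm_crf`, `lam`, and `beta` are hypothetical names. The default `beta` follows a common heuristic (the reciprocal of twice the mean squared neighbour difference, as used in GrabCut).

```python
import numpy as np

def icm_crf(prob, image, lam=1.0, beta=None, n_iter=10):
    """ICM on a 4-connected grid CRF.

    prob  : (rows, cols, n_classes) per-pixel class probabilities.
    image : (rows, cols, bands) observations that modulate the smoothing.
    Energy: sum_i -log p_i(x_i)
            + lam * sum_{i~j} [x_i != x_j] * exp(-beta * ||y_i - y_j||^2)
    """
    rows, cols, _ = prob.shape
    unary = -np.log(np.clip(prob, 1e-10, 1.0))
    image = np.asarray(image, dtype=float)
    if beta is None:
        dh = np.sum((image[:, 1:] - image[:, :-1]) ** 2, axis=-1)
        dv = np.sum((image[1:] - image[:-1]) ** 2, axis=-1)
        msd = np.concatenate([dh.ravel(), dv.ravel()]).mean()
        beta = 1.0 / (2.0 * msd) if msd > 0 else 0.0
    labels = unary.argmin(axis=-1)  # start from the pixel-wise maximum-probability labels
    offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    for _ in range(n_iter):
        changed = 0
        for r in range(rows):
            for c in range(cols):
                cost = unary[r, c].copy()
                for dr, dc in offsets:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        diff = image[r, c] - image[rr, cc]
                        w = lam * np.exp(-beta * np.sum(diff ** 2))
                        # Potts penalty: every label except the neighbour's pays w.
                        cost += w
                        cost[labels[rr, cc]] -= w
                best = int(cost.argmin())
                if best != labels[r, c]:
                    labels[r, c] = best
                    changed += 1
        if changed == 0:  # converged to a local minimum
            break
    return labels
```

ICM is greedy and only reaches a local minimum of the energy; graph-cut or message-passing solvers are the usual stronger alternatives. The spectral weighting lowers the smoothing penalty across strong spectral edges, which is what lets field boundaries survive the smoothing.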

3. Experimental Results and Discussion

3.1. Study Areas

3.2. Data Acquisition

3.3. Experimental Description

3.4. Classification Results and Discussion

3.4.1. Experiment 1: Hanchuan Dataset

3.4.2. Experiment 2: Honghu Dataset

3.5. Sensitivity Analysis for the Training Sample Size

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Wavelength range | 400–1000 nm | Field of view (8 / 12 / 17 mm lens) | 33° / 22° / 16° |
| Number of spectral channels | 270 | IFOV, single-pixel spatial resolution (8 / 12 / 17 mm lens) | 0.9 / 0.61 / 0.43 mrad |
| Number of spatial pixels | 640 | Instrument power consumption | <13 W |
| Spectral sampling interval | 2.2 nm/pixel | Bit depth | 12 bit |
| Spectral resolution | 6 nm (20 μm slit) | Storage | 480 GB |
| Second-order filter | Yes | Cell size | 7.4 μm |
| Aperture | F/2.5 | Camera type | CMOS |
| Light path design | Coaxial reflective imaging spectrometer | Maximum frame rate | 300 fps |
| Slit width | 20 μm | Weight | <0.6 kg (without lens) |
| Lens focal length | 8 mm / 12 mm / 17 mm | Operating temperature | 0–50 °C |
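The field-of-view and IFOV entries for the three lenses follow from the focal length, the 7.4 μm cell size, and the 640 spatial pixels through the usual pinhole relations IFOV ≈ p/f and FOV = 2·atan(N·p / 2f). The short check below assumes those relations and that all 640 pixels are used across track; `ifov_mrad` and `fov_deg` are illustrative helper names.

```python
import math

PIXEL_SIZE_MM = 7.4e-3  # 7.4 um cell size, from the table
N_SPATIAL = 640         # spatial pixels across the slit, from the table

def ifov_mrad(focal_mm, pixel_mm=PIXEL_SIZE_MM):
    """Instantaneous field of view of one pixel (small-angle approximation), in mrad."""
    return pixel_mm / focal_mm * 1e3

def fov_deg(focal_mm, n=N_SPATIAL, pixel_mm=PIXEL_SIZE_MM):
    """Full across-track field of view of a row of n pixels, in degrees."""
    return math.degrees(2 * math.atan(n * pixel_mm / (2 * focal_mm)))

for f in (8, 12, 17):
    print(f"{f} mm lens: FOV {fov_deg(f):.1f} deg, IFOV {ifov_mrad(f):.3f} mrad")
```

This gives roughly 33.0°, 22.3°, and 15.9° and about 0.925, 0.617, and 0.435 mrad, consistent with the tabulated values after rounding.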

| Class | Red Roof | Tree | Road | Strawberry | Pea | Soy | Shadow | Gray Roof | Iron Sheet | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Red roof | 82.16 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.14 | 0.00 | 0.00 | 3.87 |
| Tree | 0.05 | 96.12 | 0.00 | 0.22 | 0.00 | 1.29 | 0.31 | 0.00 | 0.60 | 8.15 |
| Road | 0.00 | 0.00 | 76.42 | 0.00 | 0.00 | 0.00 | 0.13 | 0.00 | 0.00 | 3.97 |
| Strawberry | 0.00 | 0.01 | 5.77 | 98.00 | 0.34 | 2.42 | 0.37 | 0.00 | 5.77 | 16.07 |
| Pea | 0.00 | 1.06 | 0.00 | 0.00 | 91.66 | 0.00 | 0.07 | 0.00 | 0.20 | 7.55 |
| Soy | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 89.26 | 0.00 | 0.00 | 0.00 | 0.83 |
| Shadow | 17.79 | 2.28 | 17.81 | 1.78 | 8.00 | 7.03 | 98.07 | 23.12 | 3.68 | 56.18 |
| Gray roof | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.21 | 76.88 | 17.79 | 2.83 |
| Iron sheet | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.07 | 0.00 | 71.97 | 0.55 |
| Total | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |

| Class (accuracy, %) | SVM | MS | SVRFMC | DPSCRF | MSVC | SSF-CRF |
|---|---|---|---|---|---|---|
| Red roof | 49.72 | 48.89 | 64.75 | 49.96 | 67.43 | 82.16 |
| Tree | 67.30 | 73.95 | 92.47 | 80.38 | 84.33 | 96.12 |
| Road | 65.07 | 66.77 | 74.91 | 62.58 | 75.39 | 76.42 |
| Strawberry | 94.55 | 95.37 | 97.54 | 96.89 | 95.74 | 98.00 |
| Pea | 64.12 | 65.49 | 79.55 | 67.51 | 78.37 | 91.66 |
| Soy | 35.78 | 29.95 | 47.81 | 13.92 | 78.67 | 89.26 |
| Shadow | 97.19 | 97.41 | 98.84 | 97.53 | 97.83 | 98.07 |
| Gray roof | 53.90 | 53.67 | 74.21 | 64.06 | 72.05 | 76.88 |
| Iron sheet | 42.25 | 43.54 | 22.07 | 37.57 | 43.84 | 71.97 |
| OA (%) | 85.51 | 86.41 | 91.98 | 87.40 | 90.91 | 94.60 |
| Kappa | 0.7757 | 0.7890 | 0.8760 | 0.8043 | 0.8607 | 0.9177 |
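The OA and Kappa rows follow the standard definitions: OA is the fraction of correctly labeled test pixels, and Cohen's kappa corrects it for the agreement expected by chance from the row and column totals. The per-class accuracies equal the diagonals of the column-normalized confusion matrices, i.e., producer's accuracy. A minimal NumPy sketch, assuming a confusion matrix of pixel counts with rows as predicted classes and columns as reference classes (matching the confusion matrix layout above); the function names and the 2 × 2 example are made up for illustration.

```python
import numpy as np

def overall_accuracy_and_kappa(cm):
    """OA and Cohen's kappa from a confusion matrix of pixel counts."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    po = np.trace(cm) / n                                  # observed agreement (OA)
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n ** 2  # chance agreement
    return po, (po - pe) / (1.0 - pe)

def producer_accuracy(cm):
    """Per-class accuracy: correct pixels divided by reference pixels (column sums)."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=0)

oa, kappa = overall_accuracy_and_kappa([[50, 10], [5, 35]])
print(f"OA = {oa:.2%}, kappa = {kappa:.4f}")
```

For the toy matrix, OA is 0.85 and the chance agreement is 0.51, so kappa is 0.34 / 0.49 ≈ 0.694; kappa is always below OA whenever chance agreement is positive, which is why both are reported.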

| Class | Red Roof | Bare Soil | Cotton | Rape | Chinese Cabbage | Pakchoi | Cabbage | Tuber Mustard | Brassica parachinensis | Brassica chinensis | Small Brassica chinensis | Lactuca sativa | Celtuce | Film-Covered Lettuce | Romaine Lettuce | Carrot | White Radish | Sprouting Garlic | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Red roof | 98.49 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.5 |
| Bare soil | 0 | 99.66 | 0.99 | 0 | 0 | 0 | 0.03 | 0 | 0 | 0.64 | 0 | 0.04 | 0 | 0 | 0 | 0 | 0 | 0 | 8.17 |
| Cotton | 1.51 | 0 | 99.01 | 0 | 0 | 0.05 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
| Rape | 0 | 0 | 0 | 99.91 | 0 | 0 | 0 | 0.02 | 0.02 | 0 | 0.04 | 1.03 | 0 | 0.01 | 0 | 0 | 0.74 | 0 | 26.21 |
| Chinese cabbage | 0 | 0 | 0 | 0 | 99.44 | 0 | 0.16 | 0 | 1.57 | 0.82 | 0.13 | 0.02 | 2.62 | 0 | 0 | 0 | 0 | 0 | 7.56 |
| Pakchoi | 0 | 0 | 0 | 0 | 0.02 | 87.5 | 0 | 0 | 0.42 | 0 | 0 | 0 | 8.56 | 0 | 0 | 0 | 0 | 0 | 2.53 |
| Cabbage | 0 | 0 | 0 | 0 | 0 | 0 | 99.57 | 0.23 | 0 | 0 | 0 | 0 | 1.71 | 0.07 | 0 | 0.22 | 0 | 0 | 7.12 |
| Tuber mustard | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98.54 | 0 | 0 | 0.1 | 0.06 | 0 | 0 | 0 | 0.07 | 1.98 | 0 | 7.85 |
| Brassica parachinensis | 0 | 0 | 0 | 0 | 0 | 0 | 0.09 | 0 | 97.63 | 0 | 0 | 0 | 8.96 | 0 | 0 | 0 | 0 | 1.42 | 4.33 |
| Brassica chinensis | 0 | 0.01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98.45 | 4.6 | 0.08 | 0 | 0.08 | 0 | 0 | 4.53 | 0 | 5.57 |
| Small Brassica chinensis | 0 | 0.09 | 0 | 0.09 | 0 | 0 | 0 | 0.09 | 0 | 0.08 | 94.98 | 1.59 | 0 | 0.08 | 1.07 | 4.3 | 0.3 | 0 | 10.89 |
| Lactuca sativa | 0 | 0 | 0 | 0 | 0.22 | 0 | 0 | 0.66 | 0 | 0 | 0 | 97.18 | 0 | 0 | 0 | 0 | 0 | 0 | 3.6 |
| Celtuce | 0 | 0 | 0 | 0 | 0.02 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 78.15 | 0 | 0 | 0 | 0 | 0 | 0.54 |
| Film-covered lettuce | 0 | 0 | 0 | 0 | 0 | 0 | 0.13 | 0 | 0 | 0 | 0 | 0 | 0 | 99.74 | 3.29 | 0 | 0 | 0 | 5.08 |
| Romaine lettuce | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.01 | 95.64 | 0 | 0 | 0 | 1.99 |
| Carrot | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.46 | 0 | 0 | 0.11 | 0 | 0 | 0 | 0 | 95.41 | 0 | 0 | 1.89 |
| White radish | 0 | 0 | 0 | 0 | 0 | 0 | 0.03 | 0 | 0.21 | 0 | 0.03 | 0 | 0 | 0 | 0 | 0 | 92.45 | 1.37 | 2.64 |
| Sprouting garlic | 0 | 0.25 | 0 | 0 | 0.31 | 3.99 | 0 | 0 | 0.16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 97.21 | 1.55 |
| Total | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |

| Class (accuracy, %) | SVM | MS | SVRFMC | DPSCRF | MSVC | SSF-CRF |
|---|---|---|---|---|---|---|
| Red roof | 77.59 | 93.77 | 99.40 | 86.16 | 89.18 | 98.49 |
| Bare soil | 93.86 | 94.97 | 98.12 | 96.07 | 94.02 | 99.66 |
| Cotton | 83.55 | 95.89 | 98.58 | 97.09 | 91.77 | 99.01 |
| Rape | 96.19 | 98.90 | 99.80 | 98.11 | 98.73 | 99.91 |
| Chinese cabbage | 88.00 | 94.60 | 99.00 | 93.86 | 93.04 | 99.44 |
| Pakchoi | 1.79 | 14.92 | 13.87 | 3.76 | 10.76 | 87.50 |
| Cabbage | 94.13 | 97.28 | 99.30 | 97.29 | 96.32 | 99.57 |
| Tuber mustard | 63.15 | 77.96 | 90.17 | 80.52 | 70.80 | 98.54 |
| Brassica parachinensis | 62.36 | 72.72 | 93.69 | 83.51 | 67.32 | 97.63 |
| Brassica chinensis | 39.02 | 66.02 | 75.20 | 34.38 | 65.76 | 98.45 |
| Small Brassica chinensis | 77.68 | 82.67 | 92.68 | 84.31 | 83.46 | 94.98 |
| Lactuca sativa | 71.63 | 76.38 | 85.75 | 74.75 | 80.65 | 97.18 |
| Celtuce | 42.30 | 68.98 | 87.51 | 46.02 | 71.40 | 78.15 |
| Film-covered lettuce | 88.65 | 96.37 | 98.69 | 97.68 | 95.61 | 99.74 |
| Romaine lettuce | 31.23 | 36.30 | 27.31 | 8.45 | 43.17 | 95.64 |
| Carrot | 34.89 | 48.48 | 82.43 | 58.68 | 60.48 | 95.41 |
| White radish | 51.31 | 72.64 | 89.46 | 59.35 | 78.33 | 92.45 |
| Sprouting garlic | 39.20 | 61.29 | 82.94 | 21.80 | 71.16 | 97.21 |
| OA (%) | 76.97 | 84.77 | 91.08 | 81.97 | 84.32 | 97.95 |
| Kappa | 0.7367 | 0.8262 | 0.8985 | 0.7936 | 0.8217 | 0.9768 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wei, L.; Yu, M.; Zhong, Y.; Zhao, J.; Liang, Y.; Hu, X. Spatial–Spectral Fusion Based on Conditional Random Fields for the Fine Classification of Crops in UAV-Borne Hyperspectral Remote Sensing Imagery. Remote Sens. 2019, 11, 780. https://doi.org/10.3390/rs11070780
