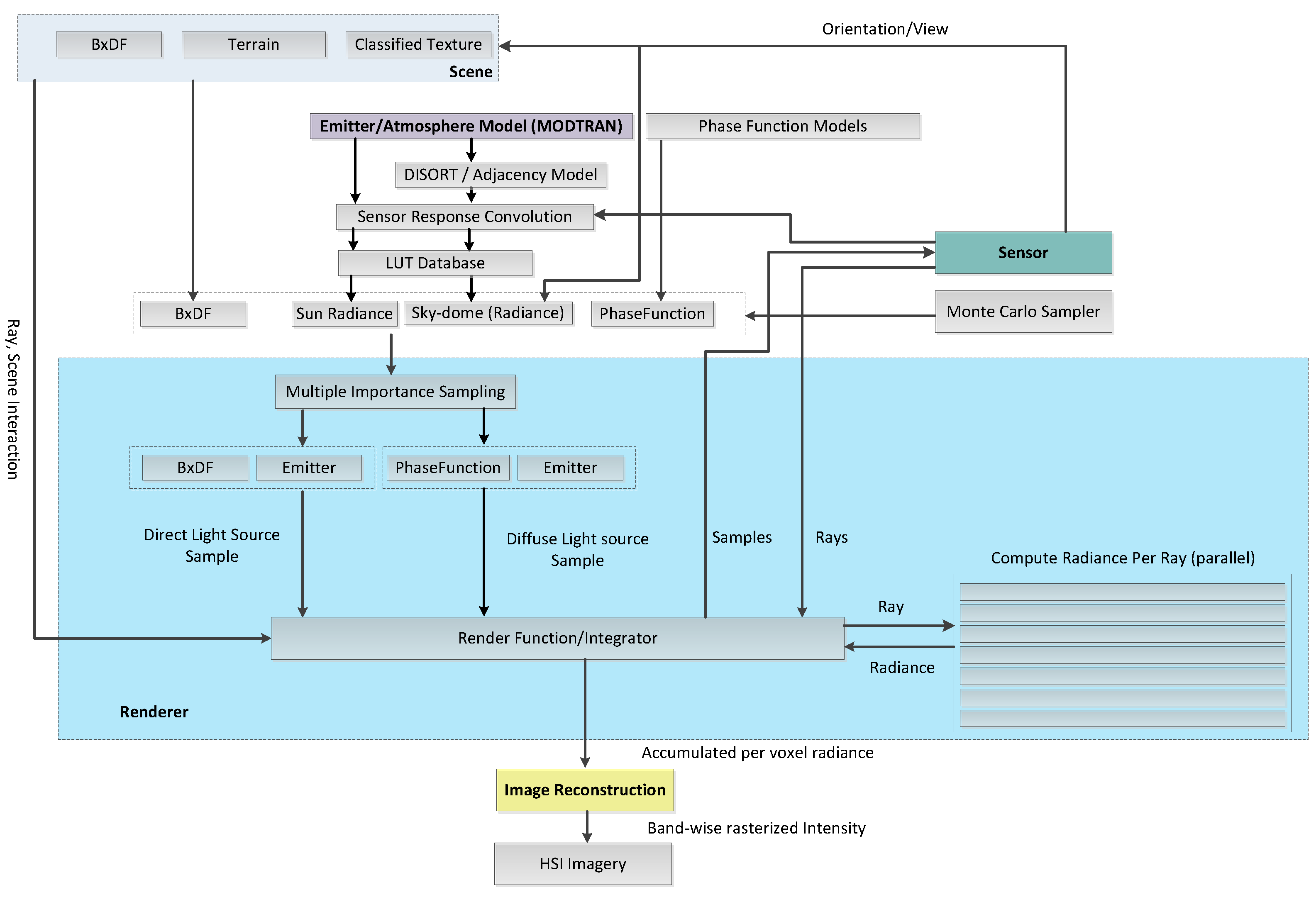

CHIMES is a texture rendering based simulator and an overview of its design and system components are shown in

Figure 1 and

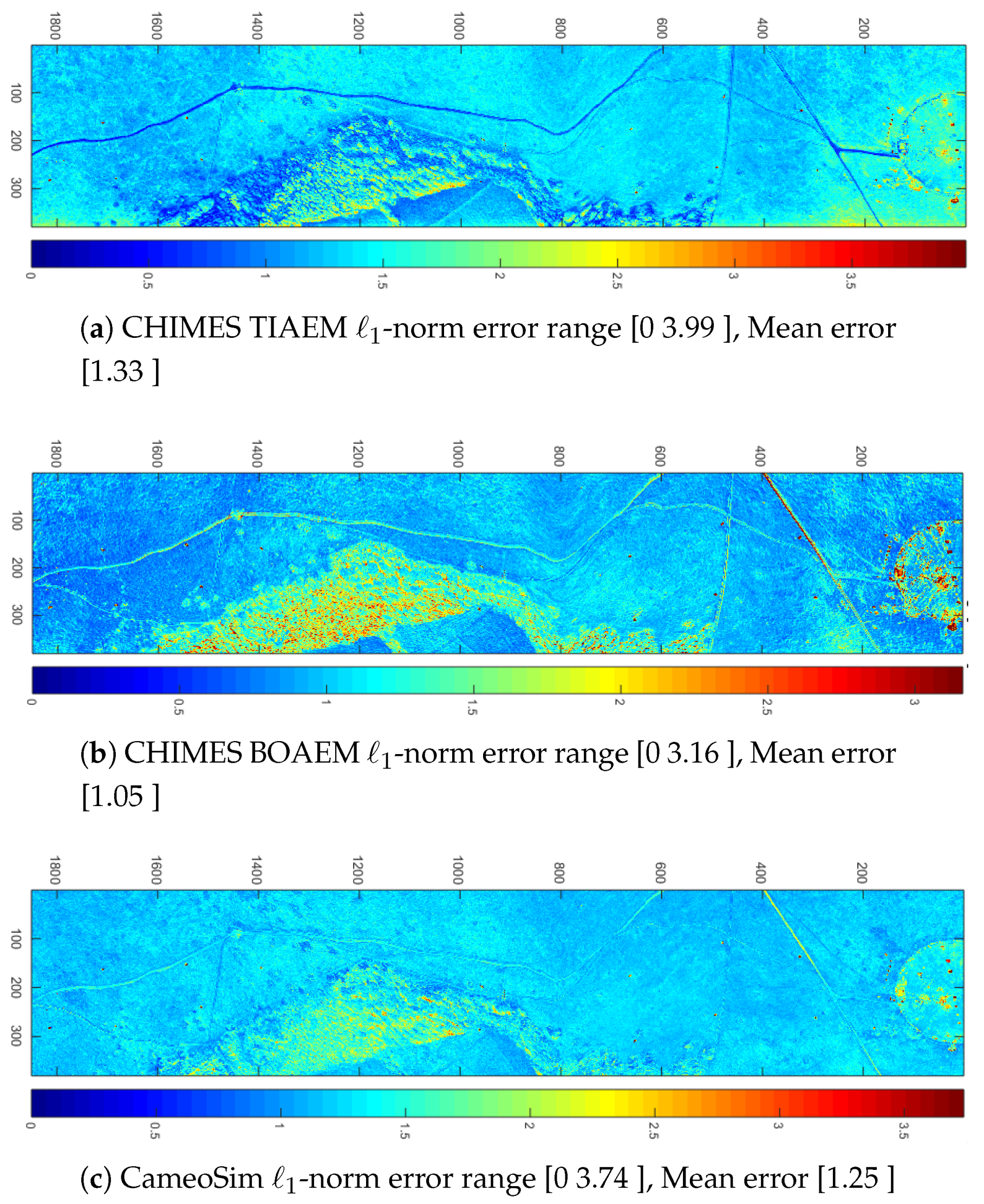

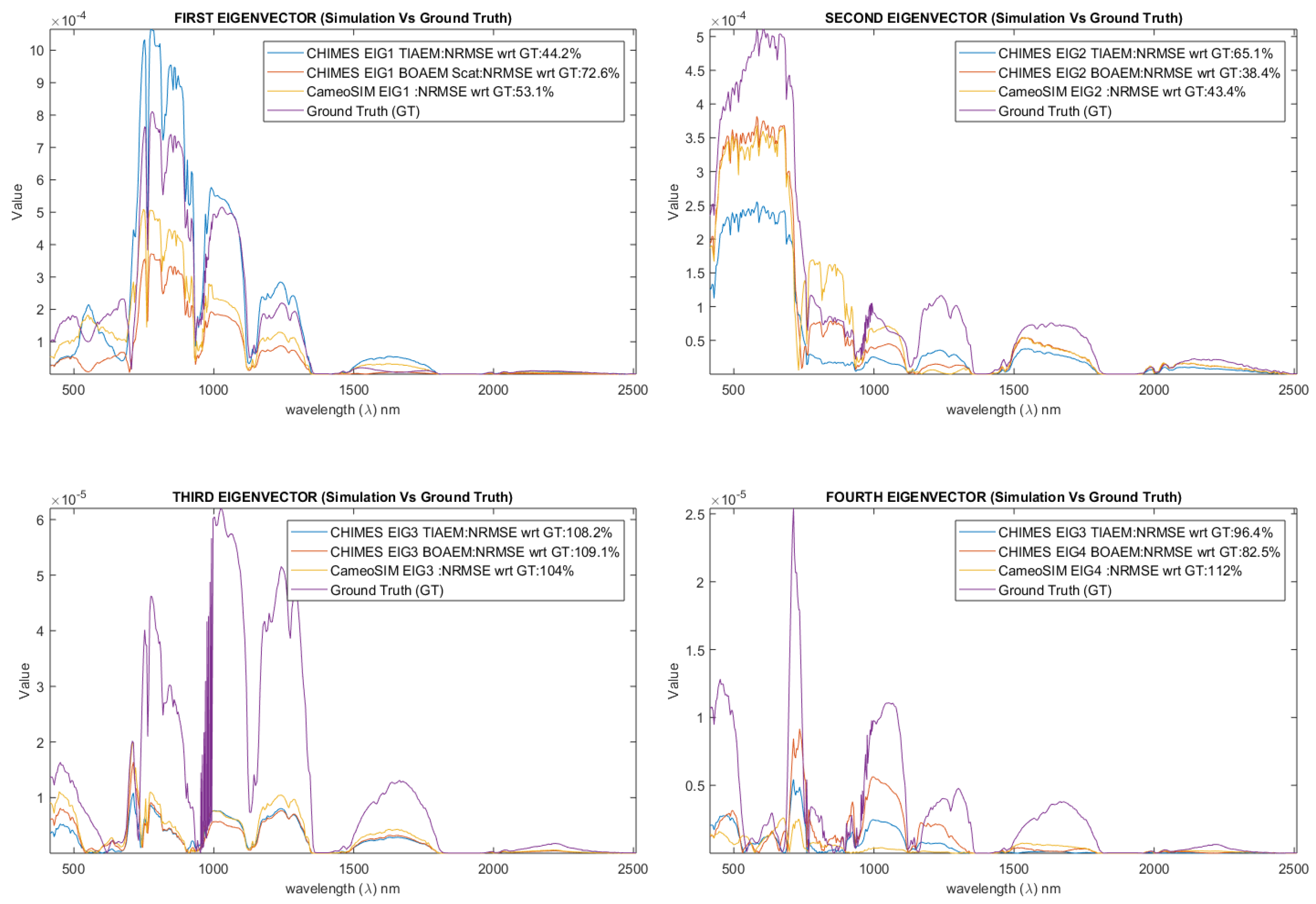

Figure 5 respectively. As mentioned in the introduction section, there are two main features in the proposed CHIMES; one is enhanced adjacency treatment that has been implemented for a better estimation of the volumetric scattering of upwelled scattered radiance, which is particularly important for the simulation of rugged scenes. This feature is in the form of a regional background neighbourhood function, which, embeds an altitude dependence of the optical scattering characteristics between the ground and the sensor. During the simulation, the averaged background albedo of the surrounding region of the test pixel, and the total upwelled radiance are evaluated for each pixel in the scene during the rendering process. The use of this neighbourhood function together with volumetric scattering has not been found in the open domain, and it is believed that this approach may help to enhance the performance of other scene simulators in the community. DIRSIG5 offers volumetric scattering based adjacency model, however considering effective background neighbourhood based on sensor height, air volume and density is not reported. Although ATCOR also employs the neighbourhood based adjacency, however, it performs differencing of target and background spectra to model the reduced contrast. This is only an approximation because volumetric scattering is not considered in ATCOR, probably due to its application domain. Moreover, variable height difference from every pixel to the sensor is not also considered in ATCOR or any other COTS scene simulation package.

The second feature of CHIMES is an automatic search for the optimal atmospheric parameters to use when simulating a scene, so that the output matches the ground truth data as closely as possible. Many simulators, such as DIRSIG and CameoSim, require the user to specify atmospheric parameters that are generally unknown. Consequently, these simulators normally need several trial-and-error runs with different sets of atmospheric parameters. This contribution reduces the amount of guesswork and helps to obtain the optimal simulation result without repeated trial-and-error runs in which the aerosol optical thickness is varied.

3.1. Proposed Adjacency Models for the CHIMES Simulator

The adjacency effect decreases with increasing wavelength [32] because it depends on the scattering efficiency. CHIMES retains most of the DIRSIG radiative transfer equation; however, type I rays (adjacency) are handled with two different models. Both models adopt DISORT-based upwelled scattering, but they differ in how the average background albedo is set. Our first, comparatively simple, model is similar to that of CameoSim.

Based on Equation (10), the upwelled scattered radiance reaching the sensor is expressed as in Equation (15). This is the path radiance that MODTRAN outputs when the target albedo is set to zero during execution.

Replacing the upwelled scattered term of Equation (2) with Equation (15) gives Equation (16), the Lambertian-BRDF form of the CHIMES Background One-Spectra AEM (BOAEM). During rendering, the final estimate of the upwelled scattered radiance is driven by volumetric scattering. This model was constructed to allow a more direct comparison between CHIMES and CameoSim in terms of overall performance, which involves several factors such as the sensor model, the scene, ray tracing, volumetric scattering and background reflected radiance.

In the second, more comprehensive, adjacency model, CHIMES adopts a methodology similar to that of ATCOR and deduces the upwelling adjacency contribution through a window that slides horizontally across the terrain. Because the extent of adjacency scattering depends on the density of air within the sensor's field of view, the adjacency is a function of the sensor altitude and the ground elevation. Through experimental analysis, Richter et al. [35] derived an empirical equation, Equation (17), for the approximate adjacency range on the ground.
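The dependence on sensor altitude and ground elevation can be illustrated, independently of Equation (17), with the exponential air-density profile used in this section (scale height H ≈ 8 km [35]). Integrating ρ0·exp(−z/H) from the ground elevation to the sensor altitude gives ρ0·H·(exp(−z_g/H) − exp(−z_s/H)), so the share of the air column above a pixel that lies below the sensor is 1 − exp(−(z_s − z_g)/H). The sketch below is our own illustration, not the empirical range formula of Richter et al.; the function names and the example altitudes are hypothetical.

```python
import math

SCALE_HEIGHT_KM = 8.0  # average scale height of air density quoted in the text [35]


def air_density_ratio(z_km: float, h_km: float = SCALE_HEIGHT_KM) -> float:
    """Relative air density rho(z) / rho_0 for an exponential atmosphere."""
    return math.exp(-z_km / h_km)


def column_below_sensor(ground_km: float, sensor_km: float,
                        h_km: float = SCALE_HEIGHT_KM) -> float:
    """Air mass per unit area between ground and sensor, in units of rho_0 * km.

    This is the integral of rho_0 * exp(-z / H) from ground_km to sensor_km.
    """
    if sensor_km < ground_km:
        raise ValueError("sensor must be above the ground")
    return h_km * (air_density_ratio(ground_km, h_km) - air_density_ratio(sensor_km, h_km))


def fraction_below_sensor(ground_km: float, sensor_km: float,
                          h_km: float = SCALE_HEIGHT_KM) -> float:
    """Share of the air column above the ground that lies below the sensor."""
    if sensor_km < ground_km:
        raise ValueError("sensor must be above the ground")
    return 1.0 - math.exp(-(sensor_km - ground_km) / h_km)


if __name__ == "__main__":
    # Hypothetical airborne sensor at 3 km over a valley (0 km) and a ridge (1 km).
    for ground in (0.0, 1.0):
        print(ground, round(fraction_below_sensor(ground, 3.0), 3))
```

For a sensor at 3 km, about 31% of the air column above a sea-level pixel lies below the sensor, compared with about 22% for a pixel on a 1 km ridge, which is consistent with the need for an ROI whose size varies across rugged terrain.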

Equation (17) is a consequence of the variation of air density with elevation z. It has been shown that the density of air decreases exponentially with elevation:

$$\rho_a(z) = \rho_{a,0}\,\exp\!\left(-\frac{z}{H}\right)$$

where $\rho_a(z)$ is the air density at altitude z, $\rho_{a,0}$ is the density at sea level and H is the average scale height for the density of air, which is approximately 8 km [35].

The adjacency effect for every pixel in a scene is evaluated by forming a region of interest (ROI) centred on the test pixel (target). According to Equation (17), the dimension of the ROI depends on the difference between the elevation of the test pixel and the sensor altitude; therefore, in rugged terrain the ROI dimension varies across the scene. For each pixel, CHIMES derives the path reflectance, the adjacency contribution for unit reflectance and the ROI background reflectance of the corresponding ROI. We incorporate the ROI background reflectance into Equation (2) by interpolation: the upwelled scattered term is calculated from Equation (10) for background albedos of zero and one, and the two results are given in Equation (19). In these experiments, interpolation is performed only between zero and one because of the large test scene and memory constraints, which may introduce interpolation errors; it is therefore recommended to calculate this term with MODTRAN at more intermediate albedo values between zero and one.
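The interpolation step can be sketched as piecewise-linear interpolation of a precomputed quantity over background albedo. With only the two nodes 0 and 1 this reduces to L(ρ) = L0 + ρ·(L1 − L0); adding intermediate nodes, as recommended above, lets the piecewise-linear curve follow any non-linearity in the albedo dependence. This is a minimal sketch under our own assumptions: the function names are hypothetical, and the node values in the tests are placeholders rather than MODTRAN output.

```python
from bisect import bisect_right
from typing import List, Sequence


def interp_albedo(albedo: float, nodes: Sequence[float], values: Sequence[float]) -> float:
    """Piecewise-linear interpolation of one band's quantity over background albedo.

    nodes: strictly increasing albedos at which the quantity was precomputed
    (e.g. [0.0, 1.0], or more points between them); values: the quantity at
    each node. Albedos outside [nodes[0], nodes[-1]] are clamped to the ends.
    """
    if len(nodes) != len(values) or len(nodes) < 2:
        raise ValueError("need at least two matching nodes and values")
    if albedo <= nodes[0]:
        return values[0]
    if albedo >= nodes[-1]:
        return values[-1]
    i = bisect_right(nodes, albedo)  # nodes[i - 1] <= albedo < nodes[i]
    x0, x1 = nodes[i - 1], nodes[i]
    y0, y1 = values[i - 1], values[i]
    t = (albedo - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


def interp_spectrum(albedo: float, nodes: Sequence[float],
                    spectra: Sequence[Sequence[float]]) -> List[float]:
    """Apply interp_albedo band by band; spectra[k] is the spectrum at nodes[k]."""
    n_bands = len(spectra[0])
    return [interp_albedo(albedo, nodes, [s[b] for s in spectra]) for b in range(n_bands)]
```

Storing spectra only at a few albedo nodes keeps memory proportional to the number of nodes rather than to the number of distinct ROI albedos in the scene.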

The background reflectance inside the ROI is calculated by Equation (20), where the sample reflectances are those of the sample pixels (shown in red in Figure 6b), the distances are measured between the target pixel (green/orange) and each sample pixel, and M is the number of samples in the grid. The upwelled scattered reflectance for the ROI is then computed by interpolation according to each pixel's position and height, as given in Equation (21), where the subscript K denotes the kernel ROI. The approximate upwelled scattered radiance for the ROI is given in Equation (22).
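Equation (20) is not reproduced here, but its ingredients (sample reflectances on a grid, their distances from the target and the sample count M) point to a distance-weighted average. The sketch below builds a square ROI around a target pixel, samples it on a regular grid with a given stride, and averages the samples with inverse-distance weights. The square window, the stride, the inverse-distance weights and all function names are assumptions made for illustration; the actual weighting in Equation (20) may differ. The ROI half-width is passed in directly, since in CHIMES it would follow from Equation (17).

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # single-band reflectance image, indexed [row][col]


def roi_samples(image: Image, row: int, col: int,
                half_width: int, stride: int) -> List[Tuple[float, float]]:
    """Return (reflectance, distance) pairs for grid samples inside a square ROI.

    The ROI spans +/- half_width pixels around (row, col), clipped at the image
    border; samples are taken every `stride` pixels. The target pixel itself is
    skipped, so every returned distance is > 0.
    """
    rows, cols = len(image), len(image[0])
    samples = []
    for r in range(max(0, row - half_width), min(rows, row + half_width + 1), stride):
        for c in range(max(0, col - half_width), min(cols, col + half_width + 1), stride):
            if (r, c) == (row, col):
                continue
            samples.append((image[r][c], math.hypot(r - row, c - col)))
    return samples


def roi_background_reflectance(image: Image, row: int, col: int, half_width: int,
                               stride: int = 1, power: float = 1.0) -> float:
    """Inverse-distance-weighted mean of the M grid samples (assumed weighting)."""
    samples = roi_samples(image, row, col, half_width, stride)
    if not samples:
        raise ValueError("ROI contains no samples")
    weights = [d ** -power for _, d in samples]
    return sum(w * rho for w, (rho, _) in zip(weights, samples)) / sum(weights)
```

A larger stride reduces M, and hence the cost per pixel, at the price of a coarser estimate of the background; per-pixel half-widths let the ROI grow or shrink with the elevation difference to the sensor.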

Once the upwelled scattered radiance has been computed for the intersecting ray, it is backscattered by coupling the atmospheric phase function with the diffuse emitter in the ray tracer, using Monte Carlo multiple importance sampling [36]. Figure 6a shows a ray-level computation of the upwelled scattered radiance. Moreover, the actual model in CHIMES also considers BxDF models when calculating the radiance. Note that, unlike ATCOR, TIAEM does not perform any differencing between target and background reflectance. Instead, contrast reduction is a consequence of the volumetric backscattering of the upwelled scattered radiance computed under the kernel, which eventually takes the form of Equation (22). The final rendering equation for the TIAEM model, assuming a Lambertian BRDF, takes the form of Equation (23).
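For readers less familiar with multiple importance sampling, the sketch below shows its core ingredients: a phase function that can be both evaluated and sampled, and the balance heuristic, which weights a sample drawn from one strategy (e.g. phase-function sampling) by its pdf relative to the combined pdfs of all strategies (e.g. also emitter sampling). The Henyey–Greenstein phase function is used only as a common stand-in for an atmospheric phase function; the text does not state which phase function or MIS weighting CHIMES uses, and the function names are hypothetical.

```python
import math


def hg_phase(cos_theta: float, g: float) -> float:
    """Henyey-Greenstein phase function (per sr), normalised to 1 over the sphere.

    g is the asymmetry parameter (mean scattering cosine); g > 0 favours
    forward scattering.
    """
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)


def sample_hg_cos(g: float, u: float) -> float:
    """Draw cos(theta) from HG by inverting its CDF; u is uniform in [0, 1)."""
    if abs(g) < 1e-6:
        return 1.0 - 2.0 * u  # isotropic limit
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * u)
    return (1.0 + g * g - s * s) / (2.0 * g)


def balance_heuristic(n_f: int, pdf_f: float, n_g: int, pdf_g: float) -> float:
    """MIS weight for a sample drawn from strategy f when combined with strategy g."""
    return n_f * pdf_f / (n_f * pdf_f + n_g * pdf_g)
```

In a combined estimator, each sample's contribution is divided by its own pdf and multiplied by its balance-heuristic weight, so directions that are likely under either the phase function or the emitter are not over-counted.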

We reuse the DIRSIG rendering equation to represent the modified upwelled scattered term in our presentation; however, this equation assumes a Lambertian BRDF. Our simulator supports three BRDF models: Phong, Lambertian and Torrance–Sparrow. In our experiments, we assume a Lambertian BRDF for all simulations because of the abundant vegetation in the background of the scene.
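For reference, a Lambertian BRDF is the constant f_r = ρ/π, so the reflected radiance is simply f_r times the irradiance on the surface, regardless of viewing direction. The sketch below adds the energy-normalised modified Phong BRDF as one example of a non-Lambertian alternative. The (kd, ks, shininess) parameterisation is the common textbook form and is not taken from CHIMES; Torrance–Sparrow is omitted for brevity, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def lambertian_brdf(albedo: float) -> float:
    """Lambertian BRDF (1/sr): constant, independent of directions."""
    return albedo / math.pi


def reflect(wi: Vec, n: Vec) -> List[float]:
    """Mirror direction of unit vector wi about unit normal n (wi points away from the surface)."""
    d = dot(wi, n)
    return [2.0 * d * nk - wk for wk, nk in zip(wi, n)]


def modified_phong_brdf(wi: Vec, wo: Vec, n: Vec,
                        kd: float, ks: float, shininess: float) -> float:
    """Energy-normalised modified Phong BRDF: Lambertian term plus a specular lobe.

    Assumes wi and wo are unit vectors in the hemisphere of the unit normal n.
    """
    cos_alpha = max(0.0, dot(reflect(wi, n), wo))
    specular = ks * (shininess + 2.0) / (2.0 * math.pi) * cos_alpha ** shininess
    return kd / math.pi + specular
```

Setting ks = 0 recovers the Lambertian case, which is the configuration assumed for the vegetated background in these experiments.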

3.2. Automatic Atmospheric Search for Atmospheric Parameters for Scene Simulation

The presence of aerosols or water droplets in the atmosphere is complicated to model, because their properties, such as size, density and distribution, depend on many atmospheric factors that make them highly inhomogeneous along the solar zenith and azimuth dimensions. Given the upwelled radiance of a scene, there is no way to know exactly which aerosols are present and how their densities are distributed over the measured site, because this is an inverse problem whose solution is likely to be non-unique. To simulate a scene, one needs knowledge of the material characteristics on the ground and, more importantly, of the atmospheric properties over the scene. The latter are normally ‘guessed’ from weather-station data, perhaps with the help of radiosondes and so forth. Among the atmospheric parameters listed in Table 5, some of the more important ones, such as the optical thickness and the dimensions of the water droplets in clouds, can be modelled within limits given by the seasonal model of the test site. Studies [37] have shown that the extinction of light by clouds is dominated by the effective radius of the droplets (r) and their density in the cloud.

The optical transmittance of a cloud slab of thickness R, with droplet effective radius r, droplet density in the cloud and water density (1 g/cm³), has been found analytically in the form of Equation (25) [28, 37].
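Equation (25) is not reproduced here. As an independent, rough illustration of how r and droplet density control cloud extinction, the sketch uses the standard geometric-optics relation for a cloud slab, τ ≈ 3·LWC·R/(2·ρw·r), where LWC is the liquid water content (mass of droplet water per unit volume, our interpretation of "density of droplets") and ρw is the density of water, together with the Beer–Lambert direct-beam transmittance exp(−τ). Equation (25) may take a different form, for example one that includes diffuse transmission; the function names and the example LWC and thickness are hypothetical.

```python
import math

WATER_DENSITY_G_PER_M3 = 1.0e6  # 1 g/cm^3 expressed in g/m^3


def cloud_optical_depth(lwc_g_per_m3: float, thickness_m: float, r_eff_m: float,
                        rho_w: float = WATER_DENSITY_G_PER_M3) -> float:
    """Optical depth tau = 3 * LWC * R / (2 * rho_w * r_eff).

    Assumes large droplets (extinction efficiency ~ 2) and a vertically
    uniform slab; all lengths in metres.
    """
    if r_eff_m <= 0:
        raise ValueError("effective radius must be positive")
    return 3.0 * lwc_g_per_m3 * thickness_m / (2.0 * rho_w * r_eff_m)


def direct_transmittance(tau: float) -> float:
    """Beer-Lambert transmittance of the direct beam at normal incidence."""
    return math.exp(-tau)


if __name__ == "__main__":
    # Stratus-like droplets (r = 8.33 um) in a hypothetical 500 m slab with LWC = 0.3 g/m^3.
    tau = cloud_optical_depth(0.3, 500.0, 8.33e-6)
    print(round(tau, 2), direct_transmittance(tau))
```

Because τ scales with LWC/r, halving the effective radius at a fixed water content doubles the optical depth, which is why r and droplet density are natural parameters to vary in the search.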

Thus, the above equation gives a first-order estimation of the optical transmittance

of the cloud. For a scene with known materials on the ground and when it subjects to solar irradiation, the albedo of the ground or the radiance of some specific materials in the scene can be evaluated analytically through Equation (

25) for a given set of droplet parameters

, For low altitude clouds having Stratus profile, MODTRAN assumes (

r = 8.33

m). A set of the albedo can then be evaluated under systematic variation of these droplet parameters as according to Equation (

25). After this set of albedo is convolved with the sensor characteristics, they can then be monitored through a distance metric measurement with respect to the ground truth radiances of the scene. In a nut shell the atmospheric search algorithm is a subroutine to obtain the ground or material albedo under the different configuration of cloud

and a subsequent call of MODTRAN for the radiative transfer evaluation. A flowchart of the atmospheric search is given in

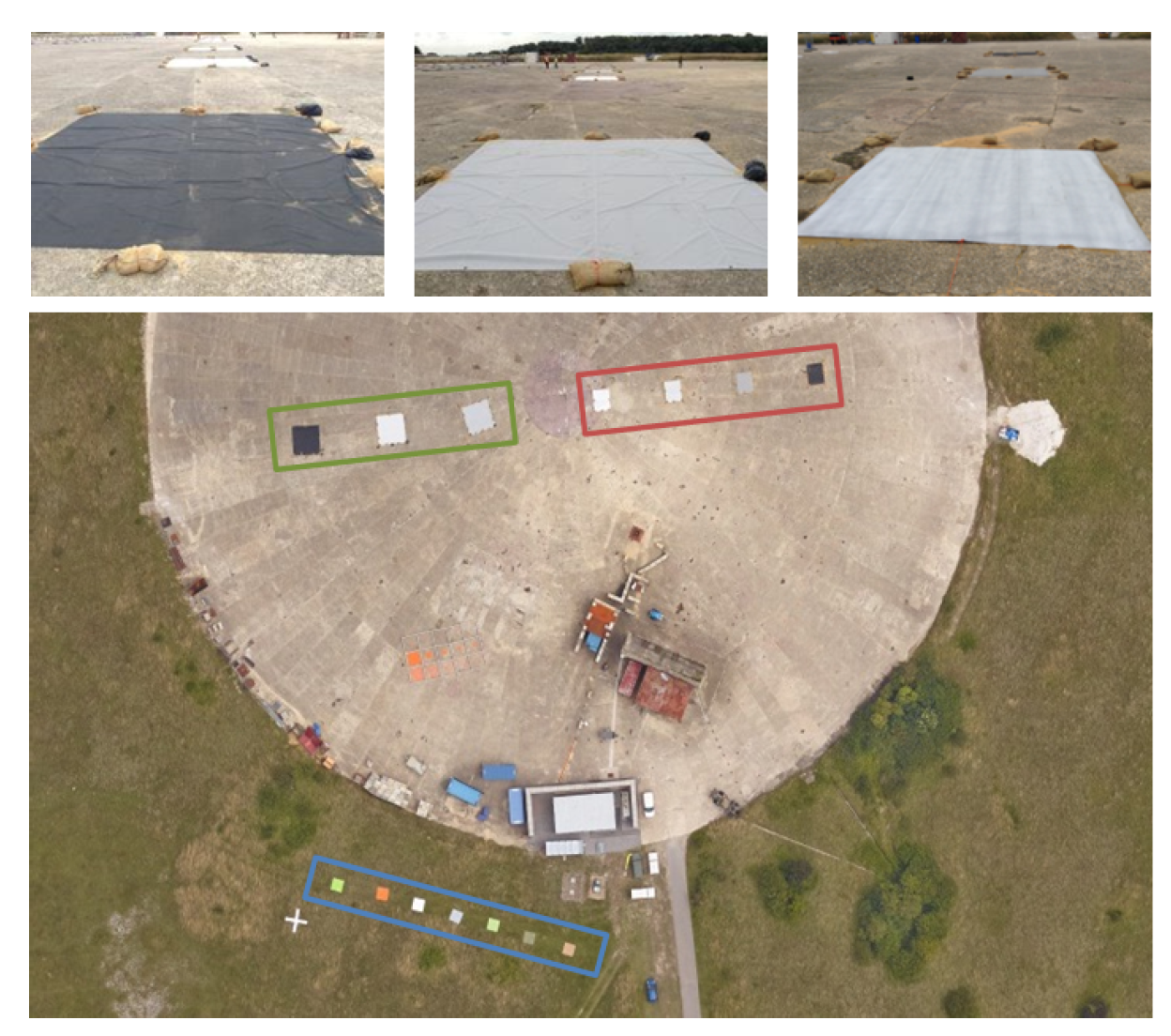

Figure 7. The experimental setup during GT data acquisition and the results of the search algorithm are presented in

Section 7.
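The search loop described above can be sketched as an exhaustive grid search. Everything specific here is a placeholder: `forward_model` is a hypothetical stand-in for the Equation (25) evaluation plus the MODTRAN call (it maps a set of droplet parameters to a sampled spectrum), `band_average` performs the convolution with hypothetical sensor spectral response functions sampled on the same wavelength grid, and RMSE is used as the distance metric only because the text does not name one. The toy forward model in the tests is synthetic.

```python
import itertools
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Params = Dict[str, float]


def band_average(spectrum: Sequence[float], srfs: Sequence[Sequence[float]]) -> List[float]:
    """Convolve a sampled spectrum with each band's spectral response function."""
    bands = []
    for srf in srfs:
        norm = sum(srf)
        bands.append(sum(s * w for s, w in zip(spectrum, srf)) / norm)
    return bands


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def atmospheric_search(forward_model: Callable[[Params], Sequence[float]],
                       grid: Dict[str, Sequence[float]],
                       srfs: Sequence[Sequence[float]],
                       ground_truth: Sequence[float]) -> Tuple[Optional[Params], float]:
    """Return (best_params, best_distance) over the Cartesian product of the grid."""
    names = list(grid)
    best_params: Optional[Params] = None
    best_dist = math.inf
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        simulated = band_average(forward_model(params), srfs)
        dist = rmse(simulated, ground_truth)
        if dist < best_dist:
            best_params, best_dist = params, dist
    return best_params, best_dist
```

An exhaustive grid is the simplest way to realise "systematic variation" of the droplet parameters; its cost grows multiplicatively with each parameter, so a coarse grid followed by local refinement is a natural extension.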

In this section, we discussed the adjacency models included in CHIMES. In short, BOAEM is a simple adjacency model that evaluates the upwelling by using a single spectral reflectance; other simulators, such as CameoSim, employ a similar model for adjacency. TIAEM, in contrast, is a more comprehensive adjacency model that evaluates the local adjacency contribution to the upwelling radiance within several small ROIs across the entire scene.