Figure 1.

Images from the DREAM-B data set. Panels (a–d) are sample images from the cities of Paris, Vienna, Tokyo, and Los Angeles. All samples have a size of .

Figure 2.

Geospatial distribution of the DREAM-B data set. Each point in this figure represents an image tile. The DREAM-B data set contains 626 tiles in total and covers 100 cities across several continents.

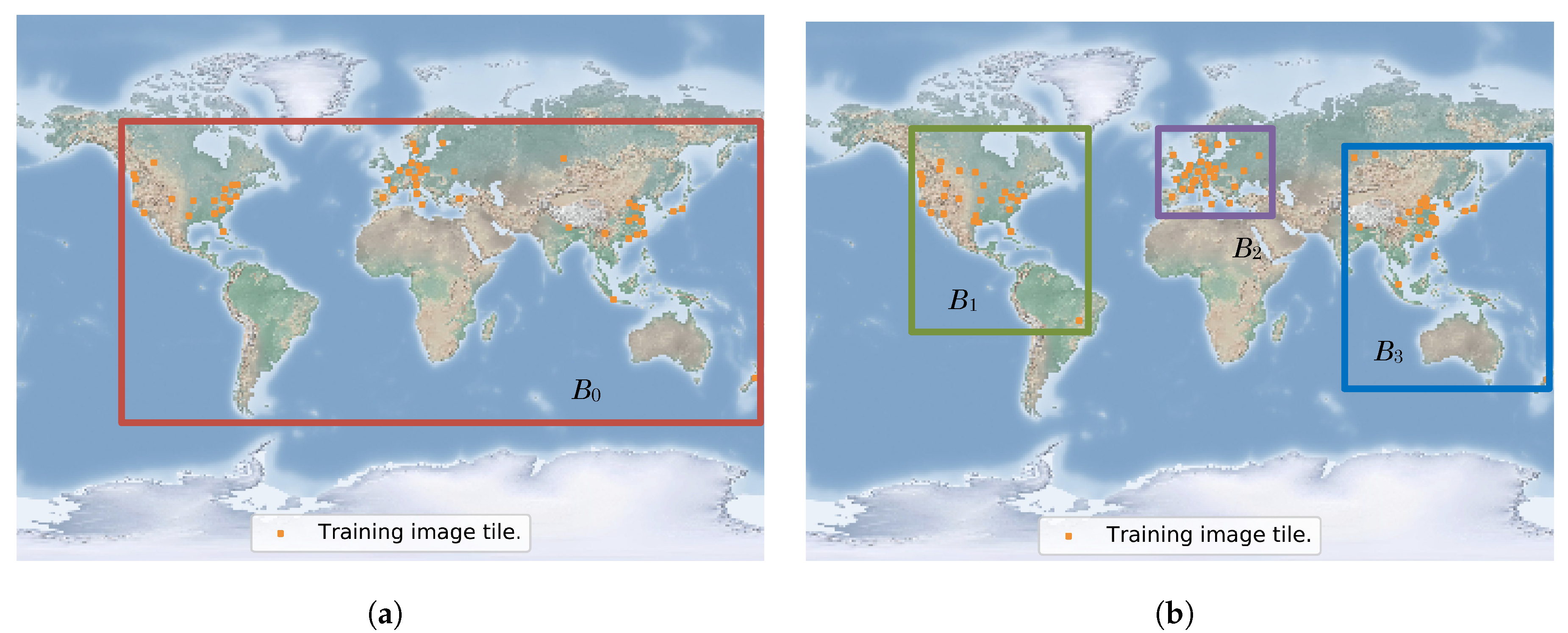

Figure 3.

Geospatial distribution of data sets in different areas. Panel (a) shows the bounding box of the data group , and panel (b) shows the bounding boxes of the data groups , , and . The groups are divided so that each contains the same number of image tiles, and the corresponding colored boxes show their coverage range. The bounding boxes of data groups , , , and are , , , and colored with red, green, purple, and blue, respectively.

Figure 4.

The architecture of U-NASNetMobile. Normal Cells and Reduction Cells are the structures obtained via neural architecture search [39]. The yellow circles are concatenation layers.

Figure 5.

Comparison of the model with pre-training and with random initialization. The figure plots the learning curves of the base model . We can observe that with pre-training on converges faster and leads to better performance.

Figure 6.

Accuracy variation of the GeoBoost models. The base learner of boosting is the U-NASNetMobile model for (a) and the U-Net model for (b).

Figure 7.

Semantic segmentation results of GeoBoost from the cities of Chicago, Vienna, and Shanghai. Panels (a,d,g), colored in red, show the ground truth. Panels (b,e,h), colored in green, show the results of , and panels (c,f,i) show the results of . The yellow circles indicate notable learning progress of GeoBoost. Each image in this figure has a size of .

Figure 8.

Semantic segmentation results of different models from the cities of Chicago, Berlin, and Shanghai. Panels (a,e,i), colored in red, show the ground truth, and panels (b,f,j), colored in green, show the predictions of a single model. Panels (c,g,k) show the predictions of end-to-end gradient boosting, and panels (d,h,l) show the predictions of GeoBoost. Yellow circles highlight some notable discrepancies among the prediction results. Each image in this figure has a size of .

Figure 9.

Samples from the cities of Toronto, Chicago, and Los Angeles in area . Panels (a,f,k) are the sample images, and panels (b,g,l), colored in red, are the ground truth. Panels (c,h,m), colored in green, are the predictions of GeoBoost. Panels (d,i,n) are center crops of the labels, and panels (e,j,o) are center crops of GeoBoost’s predictions. The images in the first three columns have a size of , and the last two columns are center crops of the large images, shown for observing details, with a size of .

Figure 10.

Samples from the cities of Berlin, Paris, and Vienna in area . Panels (a,f,k) are the sample images, and panels (b,g,l), colored in red, are the ground truth. Panels (c,h,m), colored in green, are the predictions of GeoBoost. Panels (d,i,n) are center crops of the labels, and panels (e,j,o) are center crops of GeoBoost’s predictions. The images in the first three columns have a size of , and the last two columns are center crops of the large images, shown for observing details, with a size of .

Figure 11.

Samples from the cities of Seoul, Shanghai, and Tokyo in area . Panels (a,f,k) are the sample images, and panels (b,g,l), colored in red, are the ground truth. Panels (c,h,m), colored in green, are the predictions of GeoBoost. Panels (d,i,n) are center crops of the labels, and panels (e,j,o) are center crops of GeoBoost’s predictions. The images in the first three columns have a size of , and the last two columns are center crops of the large images, shown for observing details, with a size of .

Figure 12.

Distributions of building size for the DREAM-B data set.

Figure 13.

A sample from the city of Shanghai, cropped from Figure 11g,h. Panel (a) is the label of the sample, and panel (b) is the prediction result. Both panels have a size of .

Table 1.

Results for GeoBoost with different learning rates.

| Learning Rate | Validation IoU |
|---|---|
| | |
| | |
| | |
| | |
| | |

Table 2.

Results for the comparison of different methods. The result of a single model is obtained by training a net with all the available training data , , , and from four areas. EGB is short for the end-to-end gradient boosting method.

| Method | IoU | Precision | Recall | Overall Accuracy | Kappa |
|---|---|---|---|---|---|
| A single model (U-Net) | | | | | |
| A single model (U-NASNetMobile) | | | | | |
| EGB (U-Net) | | | | | |
| GeoBoost (U-Net) | 0.6164 | | | | |
| EGB (U-NASNetMobile) | | | | | |
| GeoBoost (U-NASNetMobile) | 0.6372 | | | | |
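
The column headings of Table 2 name five common evaluation metrics for binary segmentation. The sketch below shows how they are conventionally computed from a predicted mask and a ground-truth mask. It is an illustration only: it assumes the standard confusion-matrix definitions and a positive class of 1, and the function name `binary_segmentation_metrics` is hypothetical. It does not reproduce the evaluation code behind the reported numbers.

```python
import numpy as np


def binary_segmentation_metrics(pred, truth):
    """Standard metrics for binary masks (assumed: 1 = positive class)."""
    pred = np.asarray(pred, dtype=bool).ravel()
    truth = np.asarray(truth, dtype=bool).ravel()

    # Confusion-matrix counts.
    tp = float(np.sum(pred & truth))
    fp = float(np.sum(pred & ~truth))
    fn = float(np.sum(~pred & truth))
    tn = float(np.sum(~pred & ~truth))
    n = tp + fp + fn + tn

    def safe_div(a, b):
        # Return 0.0 instead of dividing by zero on degenerate inputs.
        return a / b if b > 0 else 0.0

    overall_accuracy = safe_div(tp + tn, n)
    # Expected agreement by chance, used by Cohen's kappa.
    chance = safe_div((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp), n * n)

    return {
        "iou": safe_div(tp, tp + fp + fn),
        "precision": safe_div(tp, tp + fp),
        "recall": safe_div(tp, tp + fn),
        "overall_accuracy": overall_accuracy,
        "kappa": safe_div(overall_accuracy - chance, 1.0 - chance),
    }


if __name__ == "__main__":
    prediction = [[1, 1], [0, 0]]
    label = [[1, 0], [0, 0]]
    print(binary_segmentation_metrics(prediction, label))
```

Returning 0.0 for an undefined ratio is a design choice made for this sketch; an evaluation pipeline might instead skip empty tiles or accumulate the counts over the whole test set before dividing.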

Table 3.

Results for the comparison of different areas on the test set. The coverage of the different areas is the same as the bounding boxes of , , and in Figure 3.

| Area | Testing IoU |
|---|---|
| America () | |
| Europe () | |
| East Asia () | |