Quantifying Uncertainty and Bridging the Scaling Gap in the Retrieval of Leaf Area Index by Coupling Sentinel-2 and UAV Observations

Abstract

1. Introduction

- How do maps of red-edge VIs compare between high-resolution UAV imagery and coarser-spatial-resolution Sentinel-2 data throughout the winter wheat season?

- How do observation scale and scale mismatch (i.e., between in-situ data and satellite resolution) influence the accuracy of wheat LAI estimates?

- To what extent can models calibrated with high-spatial-resolution UAV-derived winter wheat LAI estimates reduce uncertainty and bias in the retrieval of LAI from Sentinel-2 data?

2. Materials and Methods

2.1. Field Campaigns and Measurements

2.1.1. Field Sites

2.1.2. Ground Measurements

2.2. Image Data Collection and Processing

2.2.1. UAV Platform and Imagery

2.2.2. UAV Imagery Post-Processing

2.2.3. Sentinel-2 Data

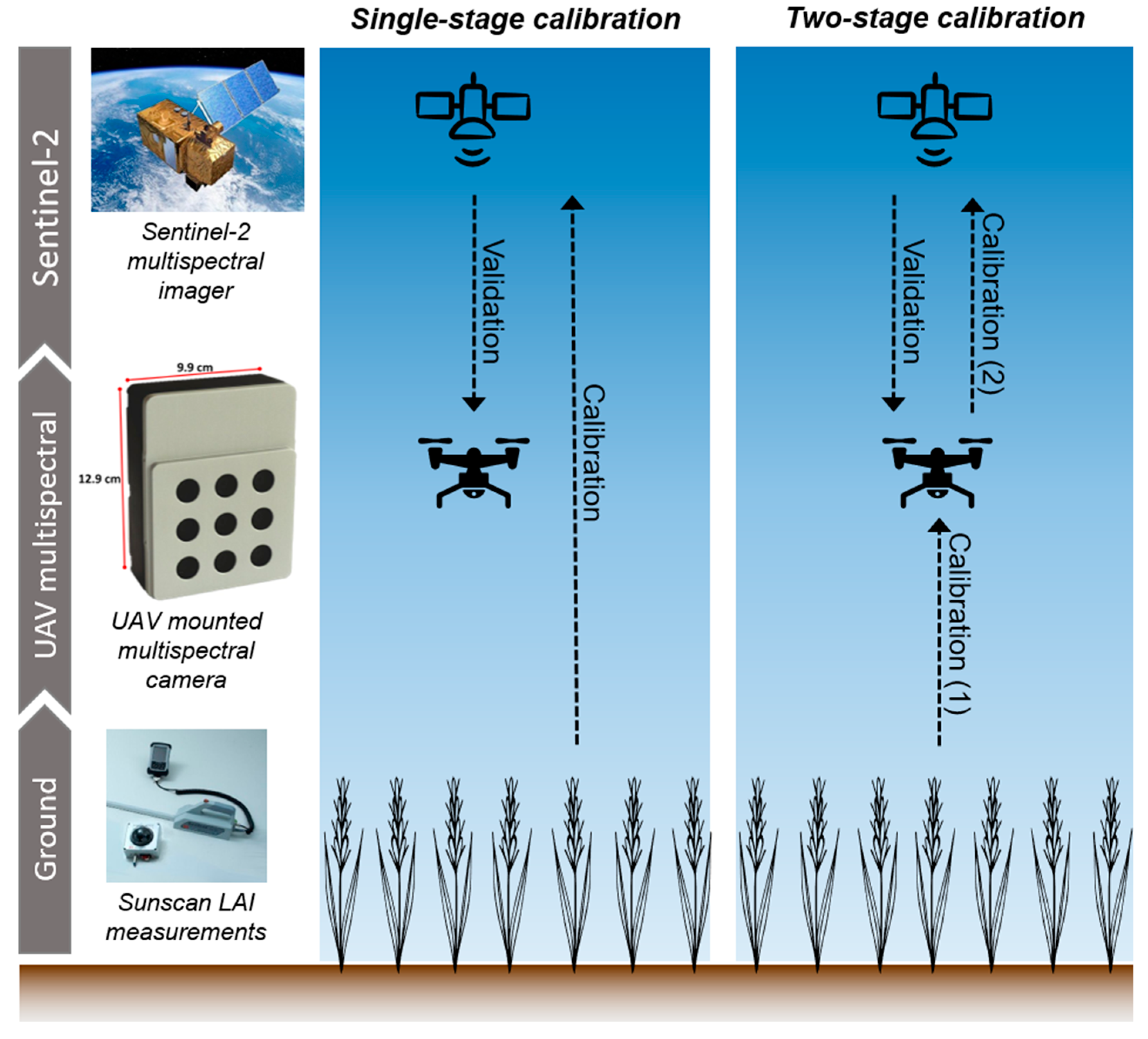

2.3. Experimental Design

2.3.1. Characterising Sentinel-2 Sub-Pixel Variability

2.3.2. Impacts of Scale on LAI Retrievals

2.3.3. Sentinel-2 LAI Retrieval Approaches

3. Results

3.1. Multiscale Chlorophyll Index Inter-Comparisons

3.2. Linking UAV LAI Retrieval Accuracies to Spatial Scale

3.3. Sentinel-2 LAI Retrieval Calibration Approaches

4. Discussion

4.1. How Do UAV and Sentinel-2 Chlorophyll Index Maps Compare across Multiple Growth Stages?

4.2. Canopy Heterogeneity and Impact of Scale on LAI Retrieval Accuracies

4.3. UAV Observations Reduced Uncertainty and Biases in Sentinel-2 LAI Retrievals

4.4. Research Implications and Limitations

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Farm | UAV/Ground Observation Date (2018) | Growth Stage (Description) | Sentinel-2 Observation Date (+/− UAV Day) |
|---|---|---|---|
| Farm 1 | 24th May | 39 (Stem elongation, late) | 25th May (+1) |
| Farm 1 | 5th June | 51 (Ear emergence) | 7th June (+2) |
| Farm 1 | 28th June | 69 (Flowering completed) | 29th June (+1) |
| Farm 2 | 4th May | 31 (Stem elongation, early) | 5th May (+1) |
| Farm 2 | 23rd May | 39 (Stem elongation, late) | 28th May (+5) |
| Farm 2 | 3rd July | 77 (Milk development) | 4th July (+1) |

| | | Sentinel-2 CIred-edge (20 m) | | | | UAV CIred-edge (0.05 m) | | | | Sentinel-2/UAV Inter-Comparisons | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Farm | GS | Number of Pixels | Mean | SD | CV (%) | Number of Pixels | Mean | SD | CV (%) | R² | NRMSE (%) |
| Farm 1 | 39 | 80 | 7.46 | 0.81 | 11 | 5,491,483 | 8.88 | 1.18 | 13 | 0.37 | 28 |
| Farm 1 | 51 | 80 | 7.07 | 0.92 | 13 | 5,491,483 | 9.62 | 1.10 | 11 | 0.75 | 45 |
| Farm 1 | 68 | 80 | 5.94 | 0.51 | 9 | 5,491,483 | 6.14 | 0.47 | 8 | 0.65 | 14 |
| Farm 2 | 31 | 161 | 1.84 | 0.42 | 23 | 11,051,610 | 2.49 | 0.76 | 31 | 0.32 | 32 |
| Farm 2 | 39 | 161 | 8.59 | 0.75 | 9 | 11,051,610 | 9.15 | 2.09 | 23 | 0.58 | 19 |
| Farm 2 | 78 | 161 | 4.29 | 0.45 | 10 | 11,051,610 | 5.57 | 0.82 | 15 | 0.71 | 23 |
| Average | | | | 0.64 | 12 | | | 1.07 | 17 | 0.56 | 27 |
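The CV (%) columns and the Average row in the table above can be reproduced from the tabulated means and standard deviations. The following is a minimal sketch, assuming CV is the standard deviation expressed as a percentage of the mean; the variable and function names are illustrative and are not taken from the authors' processing chain.

```python
# (mean, SD) of CIred-edge per farm and growth stage, transcribed from the
# table above in row order (Farm 1: three dates, then Farm 2: three dates).
sentinel2 = [(7.46, 0.81), (7.07, 0.92), (5.94, 0.51),
             (1.84, 0.42), (8.59, 0.75), (4.29, 0.45)]
uav = [(8.88, 1.18), (9.62, 1.10), (6.14, 0.47),
       (2.49, 0.76), (9.15, 2.09), (5.57, 0.82)]


def cv_percent(mean, sd):
    """Coefficient of variation: SD as a percentage of the mean (assumed definition)."""
    return 100.0 * sd / mean


def column_mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


# Per-row CV, rounded to whole percent as in the table.
s2_cv = [round(cv_percent(m, s)) for m, s in sentinel2]
uav_cv = [round(cv_percent(m, s)) for m, s in uav]

# Column averages for the "Average" row.
s2_sd_avg = round(column_mean([s for _, s in sentinel2]), 2)
uav_sd_avg = round(column_mean([s for _, s in uav]), 2)
s2_cv_avg = round(column_mean([cv_percent(m, s) for m, s in sentinel2]))
uav_cv_avg = round(column_mean([cv_percent(m, s) for m, s in uav]))

print("Sentinel-2 CV (%):", s2_cv, "| mean SD:", s2_sd_avg, "| mean CV:", s2_cv_avg)
print("UAV CV (%):       ", uav_cv, "| mean SD:", uav_sd_avg, "| mean CV:", uav_cv_avg)
```

Under this definition the averaged CV is higher for the 0.05 m UAV maps than for the 20 m Sentinel-2 pixels, which is consistent with finer-scale canopy variability being smoothed out at the coarser resolution.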

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Revill, A.; Florence, A.; MacArthur, A.; Hoad, S.; Rees, R.; Williams, M. Quantifying Uncertainty and Bridging the Scaling Gap in the Retrieval of Leaf Area Index by Coupling Sentinel-2 and UAV Observations. Remote Sens. 2020, 12, 1843. https://doi.org/10.3390/rs12111843
