1. Introduction

The study of the evolutionary dynamics of environmental landscapes is typically performed by analysing archival aerial photographs (AAPs). From such photographs, a wide range of details about areas of interest can be extracted at different times, supporting many fields of study, such as analyses of changes in land use [1,2,3,4,5], urban areas [6], glacier volume and lake surface [7], and landslides [8,9,10]. Despite the informative potential of AAPs, their use has so far been limited owing to the processing difficulties caused by missing key information (e.g., external and internal camera parameters [11]). Fortunately, a recently introduced technique, structure from motion, has been shown to overcome the limitations of classic photogrammetry [7], opening up possibilities for a wider exploitation of this kind of information source. In general, to be compared with current images [12], AAPs must be (i) converted from analog to digital; (ii) georeferenced; and then (iii) imported into a geographic information system (GIS).

The key stage of this process is georeferencing, which consists of producing an absolute orientation of the image in a specific geographic reference system. To carry out this stage successfully, however, the processing of the digital image must be guided by a set of ground-referenced information. The most common way of obtaining suitable ground references is to identify ground control points (GCPs), namely points whose coordinates are known with maximal accuracy. According to the technical literature, GCPs can be surveyed directly in the field by means of the global positioning system (GPS) or identified manually (visually) on ancillary orthoimages or digital terrain models (DTMs) in GIS environments [13,14,15]. GCPs are then introduced into the structure from motion (SfM) process at the stage termed block bundle adjustment (BBA).

At present, some issues concerning the outcomes of the georeferencing stage remain open: (i) the definition of an effective accuracy descriptor for the generated orthophoto [16]; (ii) the determination of the optimal number of GCPs in relation to the size of the study area or, alternatively, to the number of available images [17]; and (iii) the assessment of the relationship, if any, between the spatial distribution of GCPs and georeferencing accuracy [18].

The accuracy of georeferencing is typically assessed by computing the root mean square error (RMSE) [4,7,19,20,21]. This index is an average measure of the difference between the GCP coordinates measured in the field and those obtained after georeferencing [6]. The index has been criticised, for instance, by [22] and [23], who highlighted that, owing to its averaging effect, RMSE can mask relevant local errors. Consequently, a georeferenced orthophoto could contain sub-areas with different degrees of reliability. This consideration suggests that a theoretical framework able to handle local errors could be more appropriate than a single global index for reliably assessing the accuracy of a georeferenced orthophoto.
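To illustrate the averaging effect criticised in [22] and [23], the following minimal Python sketch computes the RMSE along one axis for a hypothetical set of residuals in which a single point carries a large local error. The residual values are invented for illustration: with 19 errors of 1 m and one of 12 m, the RMSE is about 2.85 m, comfortably below a 10 m threshold, even though one location clearly exceeds it.

```python
import numpy as np

def rmse(measured, estimated):
    """Root mean square error between reference (field) and georeferenced coordinates."""
    residuals = np.asarray(estimated, dtype=float) - np.asarray(measured, dtype=float)
    return float(np.sqrt(np.mean(residuals ** 2)))

# Hypothetical residuals (m) along one axis: 19 small errors and one large local error.
residuals = np.array([1.0] * 19 + [12.0])
measured = np.zeros_like(residuals)
estimated = measured + residuals

print(f"RMSE        = {rmse(measured, estimated):.2f} m")   # 2.85 m
print(f"max |error| = {np.max(np.abs(residuals)):.2f} m")   # 12.00 m
```

A global index alone would accept this result, while the local error at one location is more than four times the RMSE.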

The literature does not provide a univocal answer regarding the number of GCPs required to achieve a given accuracy [4,7,14,24]. Depending on the desired accuracy, the resolution of the final orthophotograph, and the size of the study area, that number can range from a few units to hundreds, as reported in Table 11 [21,25,26]. In addition, during the digitization of AAPs [24], deformations and noise can be introduced into the images, which makes it more difficult to find a large number of reliable GCPs visually [17,26]. Therefore, a parsimonious approach to GCP searching should be considered.

Although there is a large body of literature on the number of GCPs and its relationship with final orthophoto accuracy, few studies address their ideal spatial placement for achieving fine georeferencing with a minimal number of GCPs [18,27]. Nevertheless, studies comparing the georeferencing accuracy obtained with GCP sets of different sizes and spatial distributions showed that, for configurations of the same size, changes in the spatial distribution of the GCPs can have a significant impact on georeferencing accuracy [17,18,26,27].

In summary, the best strategy for selecting GCPs over the study area should be based on three concepts: local accuracy improvement, exploitation of GCP positions, and parsimony in their identification.

Considering the effort that researchers have devoted to optimizing the number and placement of GCPs over the study area, a methodology addressing this issue could be a valuable tool. To find an appropriate theoretical framework for modelling the concepts involved, two points should be clarified: (i) the conditions leading to low georeferencing accuracy and (ii) the main characteristics of georeferencing errors.

GCPs play a crucial role both in bringing the coordinates of the original orthoimage grid into the desired coordinate system and, alongside check points (CPs), in assessing the final accuracy of georeferencing. In fact, accuracy is assessed by determining the mismatch between GCP coordinates before and after the georeferencing stage. During that stage, misalignments propagate from GCPs to nearby locations, favouring the onset of areas characterized by low accuracy (weak areas) [16]. The similarity of error values at neighbouring locations reveals the spatially autocorrelated nature of coordinate errors. Hence, geostatistics [28,29] appears to be the most suitable theoretical framework for effectively modelling those errors [30].
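Spatial autocorrelation of this kind is usually quantified with the empirical semivariogram, γ(h) = (1 / 2N(h)) Σ [z(x_i) − z(x_j)]², where the sum runs over the N(h) pairs of points separated by a distance in the lag class h. The sketch below implements this classical (Matheron) estimator in Python with NumPy; the function name and the lag binning are illustrative assumptions, not the software used in this study.

```python
import numpy as np

def empirical_semivariogram(coords, values, bin_edges):
    """Matheron estimator: gamma(h) = sum((z_i - z_j)^2) / (2 N(h)) for all pairs
    whose separation distance falls in [bin_edges[k], bin_edges[k+1])."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    # Upper-triangle indices so that each pair of points is counted once.
    i, j = np.triu_indices(len(values), k=1)
    dist = np.linalg.norm(coords[i] - coords[j], axis=1)
    sqdiff = (values[i] - values[j]) ** 2
    lags, gammas, counts = [], [], []
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        in_bin = (dist >= lo) & (dist < hi)
        n = int(in_bin.sum())
        if n == 0:
            continue  # skip empty lag classes
        lags.append(dist[in_bin].mean())
        gammas.append(sqdiff[in_bin].sum() / (2 * n))
        counts.append(n)
    return np.array(lags), np.array(gammas), np.array(counts)

# Hypothetical errors (m) increasing along a transect of four points 1000 m apart.
lags, gammas, counts = empirical_semivariogram(
    [(0, 0), (1000, 0), (2000, 0), (3000, 0)], [0.0, 1.0, 2.0, 3.0],
    [500, 1500, 2500, 3500])
print(lags, gammas, counts)
```

For these toy data the semivariance rises from 0.5 to 4.5 as the lag grows from 1000 m to 3000 m, the signature of a spatially structured error; a purely random (white noise) error would instead give a roughly flat semivariogram.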

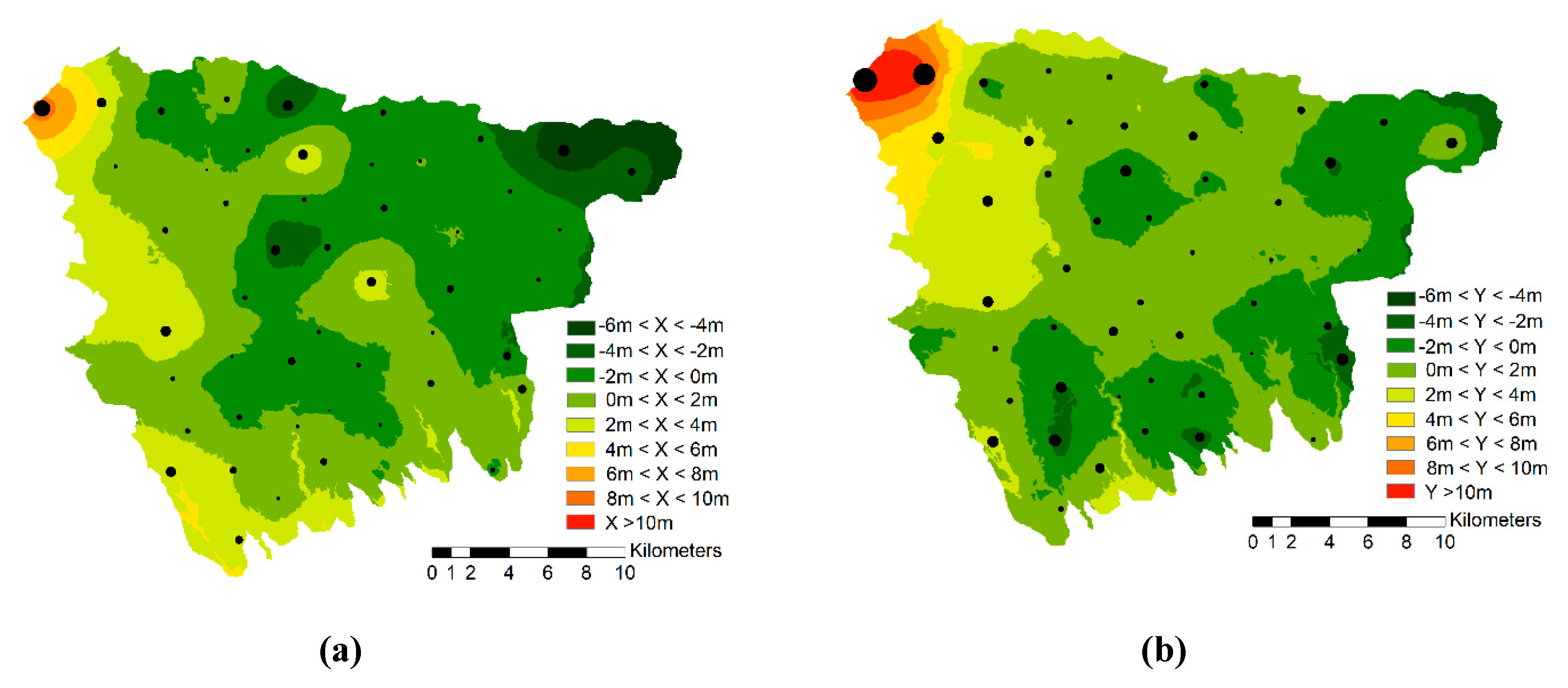

The rationale of the proposed methodology is as follows. At the first stage, a deliberately gross version of the orthomosaic is produced using a small number of GCPs randomly spread over the study area. The local errors at the GCPs, assessed by means of the Pix4D software, are then spatialized using the kriging interpolator. The result is an error raster map in which sub-areas characterized by high local errors can easily be identified. The initial set is then supplemented with new GCPs placed within or near such sub-areas. The spatial distribution of GCPs is therefore stratified: the first stratum is random, and the second is preferential. The georeferencing procedure is then re-run, and the accuracy of the resulting orthomosaic is assessed again; if that accuracy is deemed unsatisfactory, the GCP errors are re-modelled to re-define the weak areas where new GCPs are placed. This procedure can be repeated as many times as desired until large errors are completely filtered out [31]. In summary, the randomly distributed stratum is defined once and for all at the first stage, whereas the second stratum can be recursively expanded until the desired accuracy is obtained. The effectiveness of the proposed error model is confirmed by [27], which reports that errors are not indefinitely compressible: there is a threshold in the number of GCPs beyond which accuracy does not improve further. This finding is not particularly surprising, because it is inherent in the considered theoretical framework: only the spatially structured component of the error can be reduced by adding GCPs, whereas the uncorrelated (white noise) component remains. In conclusion, it is shown that the proposed procedure addresses all three open issues mentioned above.
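The spatialization step can be illustrated with a compact ordinary kriging sketch in Python/NumPy. It assumes an exponential variogram model with user-supplied nugget, partial sill, and practical range (the model and parameters actually fitted in this study are not given here), interpolates the errors observed at the GCPs onto a regular grid, and flags as weak the cells whose absolute estimated error exceeds the threshold. All names, coordinates, and error values are hypothetical.

```python
import numpy as np

def exponential_variogram(h, nugget, psill, vrange):
    """Exponential variogram with practical range `vrange`; gamma(0) = 0 by definition."""
    h = np.asarray(h, dtype=float)
    gamma = nugget + psill * (1.0 - np.exp(-3.0 * h / vrange))
    return np.where(h > 0, gamma, 0.0)

def ordinary_kriging(coords, values, targets, nugget, psill, vrange):
    """Ordinary kriging estimates and variances at `targets` from point observations."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    targets = np.asarray(targets, dtype=float)
    n = len(values)
    # Left-hand side: variogram between observations, bordered by ones so that the
    # weights sum to 1 (unbiasedness); the last unknown is a Lagrange multiplier.
    d_obs = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=2)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exponential_variogram(d_obs, nugget, psill, vrange)
    lhs[n, n] = 0.0
    # Right-hand sides: one column per target location.
    d_tgt = np.linalg.norm(coords[:, None, :] - targets[None, :, :], axis=2)  # (n, m)
    rhs = np.ones((n + 1, len(targets)))
    rhs[:n, :] = exponential_variogram(d_tgt, nugget, psill, vrange)
    sol = np.linalg.solve(lhs, rhs)                      # shape (n + 1, m)
    weights, mu = sol[:n, :], sol[n, :]
    estimates = weights.T @ values
    variances = np.sum(weights * rhs[:n, :], axis=0) + mu  # kriging variance
    return estimates, variances

def weak_area_mask(estimates, threshold):
    """Cells whose absolute estimated error exceeds the accuracy threshold."""
    return np.abs(estimates) > threshold

# Hypothetical GCP positions (m) and X errors (m) from a gross first run.
gcp_xy = np.array([[0, 0], [8000, 500], [2000, 7000], [9000, 9000], [5000, 4000]])
err_x = np.array([3.0, 14.0, 2.0, 11.0, 6.0])

gx, gy = np.meshgrid(np.linspace(0, 10000, 51), np.linspace(0, 10000, 51))
grid = np.column_stack([gx.ravel(), gy.ravel()])
est, var = ordinary_kriging(gcp_xy, err_x, grid, nugget=0.0, psill=25.0, vrange=6000.0)
weak = weak_area_mask(est, threshold=10.0).reshape(gx.shape)
print(f"{weak.mean():.1%} of the grid flagged as weak")
```

With a zero nugget, ordinary kriging honours the data exactly at the GCP locations; the kriging variance returned alongside the estimates could additionally indicate areas poorly constrained by GCPs. In practice, a dedicated geostatistics library or a GIS kriging tool would normally replace this hand-written solver.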

The presented case study concerns the georeferencing of 67 AAPs dating back to the 1950s, with a desired error threshold of 10 m, which is typical in forestry for large-scale studies. Compared with similar works on the georeferencing of archival photographs, the results showed better performance, achieving a final orthomosaic with the same RMSE at a lower information cost, measured as the number of GCPs per unit area (nGCPs/km²) [18,27,32].

The present study is a follow-up to the QUALIGOUV project (IG-MED 08-392), financed by the European Regional Development Fund and aimed at improving the governance and quality of forest management in protected Mediterranean areas. The proposed methodology was also developed for the ‘Alta Murgia’ National Park (Southern Italy) in order to study the evolution of the reforestations owned by the Puglia region and managed by the A.R.I.F. (Regional Agency for Irrigation and Forestry).

2. Study Area and Data Description

The study area covers approximately 55,000 ha and is located on the border between two regions, Puglia and Basilicata, in Southern Italy. It lies within the ‘Murge’, a low limestone plateau dominating the landscape of the central part of the Puglia region. The study area was delineated according to the hydrographic basins of the streams crossing the western part of the Regional Natural Park ‘Terra delle Gravine’.

The area can be divided into three longitudinal strips that differ in altitude, morphology, land cover, and presence of forest vegetation (Figure 1a).

The northern zone, between 380 and 500 m a.s.l., is characterized by hills generally facing south and south-east, where forests of Quercus trojana Webb. occur. The central zone, between 220 and 400 m a.s.l., consists of a gently undulating plain used almost everywhere for agricultural crops [33]. The southern zone, between 160 and 350 m a.s.l., is morphologically more complex owing to a series of deep incisions resembling North American canyons [34], termed ‘gravine’ (Figure 1b). Its vegetation mainly comprises forest communities characterized by marked physiognomic and compositional heterogeneity [33]. The transects reported in Figure 2 show the inhomogeneity of the study area and the different degrees of morphological complexity of the three zones shown in Figure 1.

The present study was based on 67 black-and-white AAPs captured between 1954 and 1955 during the first Italian National Planimetric Survey. The photographs (23 cm × 23 cm) were taken with metric cameras from a flying height of 6000 m at a scale of 1:34,000. The analogue AAPs had been digitized with an Epson Expression 1640 XL non-photogrammetric scanner at a resolution of 800 dpi (equivalent to a pixel size of 31.75 µm) and were purchased in digital form from the IAGO/IGM (Italian Army Geographical Office/Istituto Geografico Militare). The scanned images had different orientations relative to the original images, and adjacent images overlapped by approximately 60%.
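The acquisition figures above can be cross-checked with simple arithmetic. The sketch below converts the scanning resolution to a pixel pitch (25.4 mm per inch / 800 dpi = 31.75 µm) and projects it to the ground at the nominal 1:34,000 scale, giving a ground pixel of roughly 1.08 m and a photo footprint of about 7.8 km × 7.8 km. These are nominal values that ignore terrain relief (roughly 160 to 500 m a.s.l. in this area) and camera tilt, both of which make the actual scale vary across each photograph; the function names are illustrative.

```python
MM_PER_INCH = 25.4

def scan_pixel_um(dpi: float) -> float:
    """Scanner pixel pitch in micrometres for a resolution given in dots per inch."""
    return MM_PER_INCH / dpi * 1000.0

def ground_pixel_m(pixel_um: float, scale_denominator: float) -> float:
    """Nominal ground size of one scanned pixel at photo scale 1:scale_denominator."""
    return pixel_um * 1e-6 * scale_denominator

def footprint_km(photo_side_cm: float, scale_denominator: float) -> float:
    """Nominal ground length covered by one side of the photograph."""
    return photo_side_cm * 1e-2 * scale_denominator / 1000.0

pix = scan_pixel_um(800)            # 31.75 µm
gsd = ground_pixel_m(pix, 34_000)   # roughly 1.08 m on the ground
side = footprint_km(23, 34_000)     # roughly 7.82 km per photo side
print(f"pixel {pix:.2f} µm, ground pixel {gsd:.2f} m, footprint {side:.2f} km")
```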

Furthermore, a digital terrain model (DTM) was obtained by merging the DTMs of the Puglia and Basilicata regions, which have resolutions of 8 m and 5 m, respectively. Digital orthoimages from the 2016 flight for the Puglia region and from the 2013 flight for the Basilicata region were used. Both orthophotographs have a scale of 1:5000 and a pixel resolution of 50 cm.

5. Discussion

Figure 15a shows the variation in the mean values of the three errors (X, Y, and Z) before and after the application of the proposed methodology. First, the mean values were reduced and tend towards zero. Second, the mean value is positive after the application of the methodology, which suggests a tendency to overestimate the error values during kriging. Concerning Figure 15b, a decrease in the standard deviation values is apparent. One interpretation of this result is that the error values tend towards equalization; that is, the structural error vanishes and the white noise remains, as expected. In fact, the three error distributions become increasingly similar to that expected for Gaussian white noise, centred on zero.
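The link between these statistics and the RMSE can be made explicit: for any set of errors, the squared RMSE equals the squared mean (bias) plus the population variance, RMSE² = mean² + σ². Driving the mean towards zero and shrinking the standard deviation therefore both lower the RMSE, and once only zero-mean white noise remains, σ sets the floor. The following Python check uses hypothetical error values.

```python
import numpy as np

def error_summary(errors):
    """Mean (bias), population standard deviation, and RMSE of a set of errors."""
    e = np.asarray(errors, dtype=float)
    mean = float(e.mean())
    std = float(e.std())                    # ddof=0, so RMSE**2 == mean**2 + std**2
    rmse = float(np.sqrt(np.mean(e ** 2)))
    return mean, std, rmse

# Hypothetical errors (m) at a handful of check locations.
mean, std, rmse = error_summary([-2.0, 1.0, 3.0, 0.0, 4.0])
print(f"mean={mean:.2f}  std={std:.2f}  rmse={rmse:.2f}")
print(f"mean^2 + std^2 = {mean**2 + std**2:.2f}, rmse^2 = {rmse**2:.2f}")
```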

To judge the appropriateness of this methodology, the achieved results must be compared with the existing literature. A review showed that authors typically lower RMSE values and achieve fine georeferencing by using a high information rate per unit area (the ratio between the number of GCPs and the size of the study area), measured in nGCPs/km² [18,32] (see Table 11). Hence, there is a general tendency to neglect the positions of GCPs in favour of their number.
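The information rate is a simple ratio, sketched below in Python. The study area of about 55,000 ha equals 550 km²; the GCP count of 75 is an assumption taken as the upper bound of the optimal range 50 < opt ≤ 75 reported in this section, and the comparison study (20 GCPs over 2 km²) is purely hypothetical. The `relative_rate` helper expresses how many times larger one rate is than another, which is the form used when comparing the studies in Table 11.

```python
def ha_to_km2(hectares: float) -> float:
    """Convert hectares to square kilometres (1 km² = 100 ha)."""
    return hectares / 100.0

def information_rate(n_gcps: int, area_km2: float) -> float:
    """Number of GCPs per square kilometre (nGCPs/km²)."""
    return n_gcps / area_km2

def relative_rate(other_rate: float, reference_rate: float) -> float:
    """How many times larger `other_rate` is than `reference_rate`."""
    return other_rate / reference_rate

area_km2 = ha_to_km2(55_000)               # study area: 550 km²
rate = information_rate(75, area_km2)      # assumed GCP count (upper bound of the range)
print(f"{rate:.3f} GCPs/km²")              # 0.136 GCPs/km²

other = information_rate(20, 2.0)          # hypothetical comparison study
print(f"{relative_rate(other, rate):.1f} times larger")
```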

The issue of optimally minimizing the number of GCPs has been addressed by many authors.

Table 11 reports the results of a set of papers whose contributions can be grouped into three main categories: (i) the use of archival aerial photogrammetry to quantify landscape change [1,25]; (ii) the application of SfM for the orientation, orthorectification, and mosaicking of historical aerial images [7,21]; and (iii) the optimal distribution [18] and number [27] of GCPs for optimizing the accuracy of georeferencing in unmanned aerial vehicle (UAV) photogrammetry.

Table 11 clearly shows that, compared with the results in the scientific literature, the same RMSE values can be reached with a reduced information rate per unit area. This was achieved by selecting GCPs in the areas highlighted by the geostatistical analysis (weak areas).

Therefore, this finding demonstrates that GCP position can be as important as GCP number for achieving fine georeferencing. In Table 11, the studies reporting better results than the present study are [25,27] and [18]. However, it should be highlighted that their nGCPs/km² values are approximately 65, 10, and 871 times larger than that obtained here, and their study areas are substantially smaller than the present one. It is possible that similar results could have been achieved here with a much smaller increase in the information rate, but such an analysis was beyond the objective of the present study.

Another important result is that the optimal (minimal) number of GCPs required to achieve the study’s main objective lies within the range 50 < opt ≤ 75. Furthermore, the RMSE of error Z improved markedly, even though the GCPs were selected with respect to errors X and Y only. This suggests possible inter-relationships between the three errors and that an improvement along a single direction probably has a positive impact on the remaining errors. Therefore, applying the methodology along a single direction could achieve similar accuracy while significantly simplifying the overall procedure.

From a computational standpoint, the proposed methodology converged after a single run for the presented case study. This is not surprising, because simulation trials showed that at most a couple of runs are sufficient to reach the desired accuracy. The methodology is therefore generally rapid.
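The iterative procedure (a fixed random stratum, then preferential GCPs added in weak areas until the threshold is met) can be summarised as a loop. The Python sketch below is a hypothetical outline, not the authors’ implementation: `local_error` stands in for re-running the georeferencing (performed with Pix4D in this study) and reading the kriged error map, and the batch size, run limit, and largest-error-first ordering are assumptions made for illustration.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]

def stratified_gcp_refinement(
    candidates: Sequence[Point],
    n_random: int,
    local_error: Callable[[List[Point], Point], float],
    threshold: float,
    batch_size: int = 5,
    max_runs: int = 5,
    seed: int = 0,
) -> Tuple[List[Point], List[Tuple[int, float]]]:
    """Hypothetical random + preferential (stratified) GCP selection loop.

    local_error(gcps, p) stands in for georeferencing with `gcps` and reading the
    kriged error map at candidate location p. Returns the final GCP list and a
    history of (number of GCPs, largest error among remaining candidates).
    """
    rng = random.Random(seed)
    pool = list(candidates)
    rng.shuffle(pool)
    gcps = pool[:n_random]            # stratum 1: random, fixed once and for all
    remaining = pool[n_random:]
    history: List[Tuple[int, float]] = []
    for run in range(max_runs + 1):
        errors = {p: local_error(gcps, p) for p in remaining}
        worst = max(errors.values(), default=0.0)
        history.append((len(gcps), worst))
        if worst <= threshold or run == max_runs:
            break  # target reached, or refinement budget exhausted
        # Stratum 2: preferential, candidates in weak areas, largest errors first.
        weak = sorted((p for p in remaining if errors[p] > threshold),
                      key=lambda p: errors[p], reverse=True)
        added = weak[:batch_size]
        gcps.extend(added)
        added_set = set(added)
        remaining = [p for p in remaining if p not in added_set]
    return gcps, history

# Toy usage (hypothetical error model): candidates on a 1 km grid, and the error
# grows with the distance (km) to the nearest GCP.
candidates = [(float(x), float(y)) for x in range(10) for y in range(10)]

def toy_error(gcps: List[Point], p: Point) -> float:
    return 3.0 * min(math.dist(p, g) for g in gcps)

gcps, history = stratified_gcp_refinement(candidates, n_random=5,
                                          local_error=toy_error, threshold=4.0)
print(history)
```

The run limit only guards against cases in which the structured error cannot be reduced further; in the presented case study, a single refinement run was sufficient.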

6. Conclusions

This research has presented a geostatistically based methodology aimed at (i) assessing and mapping the local accuracy of a grossly georeferenced archival orthomosaic and (ii) improving that accuracy to meet a given error threshold (10 m) by selecting GCPs within or close to the areas affected by large errors [16], as identified on error maps.

After the application of the methodology, (i) the target error was reached along all three axes, and (ii) it was reached with a minimal number of GCPs. Point (ii) was not achieved by chance, but as an effect of restricting the areas within which GCPs were sought.

To compare GCP configurations over different areas, the information rate per unit area, measured in nGCPs/km², can be an effective index.

The review reported in Table 11 shows that the information rate achieved here was substantially lower than those of the studies considered. To test the proposed methodology, a wide and morphologically complex study area was considered. The methodology converged to a satisfactory solution after only one run. The results showed a significant improvement for error X, which decreased far below the given threshold; in fact, the largest local error was approximately 5 m, half of the required target. A similarly large positive impact was found for error Z, even though the GCP selection strategy was not driven by this variable. At first sight, judging from the behaviour of its largest errors, error Y might appear to be less influenced by the proposed procedure, but this impression is incorrect, because its RMSE improved by approximately 32%. In addition, as emerged from the geostatistical analysis, the structural error was not completely filtered out [31], indicating potential room for further improvement. However, because the largest errors decreased below the given threshold (albeit only slightly) and the local errors converged towards a uniform value over the entire study area, the procedure was stopped.

Therefore, the procedure can be considered validated and its application fully successful. Regarding future developments, we believe that the topic deserves further investigation. In particular, research into error propagation during the georeferencing process is in progress. The initial results are interesting and likely to be of high significance.