Abstract

Haze removal is an ill-posed problem that has attracted much scientific interest due to its various practical applications. Existing methods are usually founded upon various priors; consequently, they demonstrate poor performance in circumstances in which the priors do not hold. By examining hazy and haze-free images, we determined that haze density is highly correlated with image features such as contrast energy, entropy, and sharpness. Then, we proposed an iterative algorithm to accurately estimate the extinction coefficient of the transmission medium via direct optimization of the objective function taking into account all of the features. Furthermore, to address the heterogeneity of the lightness, we devised adaptive atmospheric light to replace the homogeneous light generally used in haze removal. A comparative evaluation against other state-of-the-art approaches demonstrated the superiority of the proposed method. The source code and data sets used in this paper are made publicly available to facilitate further research.

1. Introduction

Haze is a general term that indicates the presence of natural and artificial aerosols in the atmosphere. These aerosols scatter light, which reduces the visibility of captured images. In vision-based intelligent systems, such as surveillance cameras and autonomous vehicles, reduced visibility causes a sharp drop in performance, because the algorithms used in these systems are primarily designed for clear weather conditions. Thus, haze removal plays a crucial role as a pre-processing step that improves the visibility of recorded scenes before they are passed to other functional modules. In addition, haze removal methods can also remove the undesirable visual effects of yellow dust, which is a topical issue in East Asian countries due to rapid industrialization. This is possible because yellow dust particles and haze particles have similar diameters and therefore scatter light in a similar way.

Researchers generally approach the haze removal problem from two perspectives: image enhancement and image restoration. The former focuses on enhancing an image's low-level features, such as contrast, sharpness, and edges, to improve visibility. Low-light stretch, unsharp masking, histogram equalization, and homomorphic filtering are cases in point [1,2,3,4,5]. Although these methods can recover faded distant objects, haze remains in the image; thus, they are only applicable to thin haze. In image restoration, the haze formation process is described by a physical model, and various assumptions are imposed to address the ill-posed nature of haze removal. A recovered image with improved visibility is then obtained by inverting the model. It is worth noting that most contemporary dehazing algorithms are developed in this manner.

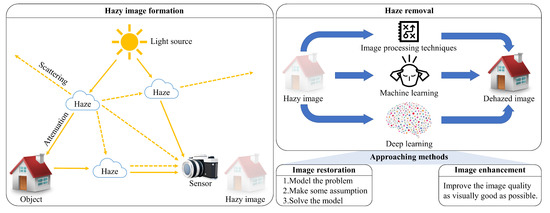

Image-restoration-based haze removal methods can be further categorized into single-image and multiple-image techniques. The latter usually outperform the former owing to the additional external information. However, due to the difficulty of collecting multiple images of the same scene, there is increasing interest in single-image haze removal, which can be divided into three categories: image processing, machine learning, and deep learning-based techniques, as depicted in Figure 1.

Figure 1.

General introduction to the haze removal problem.

In the image processing category, the dark channel prior (DCP) proposed by He et al. [6] is one of the most famous single-image dehazing methods. DCP assumes that, in most local non-sky patches of a haze-free image, at least one color channel contains some pixels with very low intensity. This is a strong prior that leads to high performance for indoor dehazing [7]. However, DCP behaves unpredictably in sky regions and is time-consuming. DCP has since been improved in many directions [8,9,10,11]. Observing that the high computational cost of DCP is largely due to the use of the soft-matting method [12], Kim et al. [13] used an edge-preserving filter called the modified hybrid median filter to accelerate processing, albeit with slight background noise. In addition, their algorithm was implemented as a real-time intellectual property (IP) core by Ngo et al. [14].
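To make the prior concrete, the following minimal NumPy sketch computes a dark channel: a per-pixel minimum over the R, G, and B channels followed by a local minimum filter. The function names and the default patch radius of 7 (a 15 × 15 window) are illustrative assumptions rather than values taken from this paper, and images are assumed to be floats in [0, 1].

```python
import numpy as np


def min_filter(img2d: np.ndarray, radius: int) -> np.ndarray:
    """Local minimum over a (2*radius+1) x (2*radius+1) window (edge padding)."""
    h, w = img2d.shape
    padded = np.pad(img2d, radius, mode="edge")
    out = np.full((h, w), np.inf)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out


def dark_channel(img: np.ndarray, radius: int = 7) -> np.ndarray:
    """Dark channel of an (H, W, 3) image: min over channels, then over a patch."""
    per_pixel_min = img.min(axis=2)  # darkest of R, G, B at each pixel
    return min_filter(per_pixel_min, radius)


# A colorful haze-free patch (one channel near zero) versus a bright, hazy one.
clear = np.dstack([np.full((20, 20), 0.7), np.full((20, 20), 0.5), np.zeros((20, 20))])
hazy = np.full((20, 20, 3), 0.8)
dc_clear, dc_hazy = dark_channel(clear), dark_channel(hazy)
```

The bright, nearly achromatic patch has a high dark channel, which is also why sky regions, where the prior does not hold, are misread as dense haze.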

In the machine learning category, the color attenuation prior (CAP) proposed by Zhu et al. [15] is a prime example of a machine learning-based haze removal algorithm. A linear model describing the relationship between scene depth, saturation, and brightness is constructed, and its parameters are estimated via maximum likelihood estimation in a supervised learning manner. CAP is efficient, performs well in most cases, and has a considerably high processing speed. The recently proposed improved color attenuation prior (ICAP) [16] addressed the shortcomings of CAP, such as color distortion and background noise, and resolved them by means of adaptive weighting and low-pass filtering. Tang et al. [17] used another machine learning technique, random forest regression, to estimate the haze distribution from various image features, such as the dark channel, hue disparity, contrast, and saturation. Despite its good dehazing ability, its slow processing rate prevents it from being widely deployed.

Finally, in the deep learning category, DehazeNet, developed by Cai et al. [18], is a shallow but efficient convolutional neural network (CNN) that learns the mapping between a hazy image and its corresponding haze distribution map from synthetic training data. The cascaded CNN proposed by Li et al. [19] is a deeper network with improved performance. Enlarging the receptive field, via either deeper networks or dilated convolution, and developing more efficient loss functions are further ways of improving haze removal performance; these approaches have been utilized by Golts et al. [20] and Ren et al. [21]. Recently, Yeh et al. [22] presented an interesting approach to haze removal based on deep CNNs. Because haze distribution is generally smooth except at discontinuities, the input image is decomposed into base and detail components. Haze removal is then performed solely on the base component via a multi-scale CNN, while the detail component is enhanced by another CNN. However, the lack of real data sets comprising hazy images and their corresponding haze-free ground truth images limits the performance of deep learning-based approaches. To cope with this problem, Ignatov et al. [23] proposed a weakly supervised learning method that exploits an inverse generative network to partially relax the strict requirement for hazy/haze-free pairs of the same scene in the training data set. In another attempt, Shao et al. [24] employed domain adaptation to improve the performance of a deep CNN trained on synthetic images. Ngo et al. [16] and Li et al. [25] provided comprehensive reviews of haze removal algorithms.

In this paper, we present a machine-learning-based method called robust single-image haze removal via optimal transmission map and adaptive atmospheric light (OTM-AAL). The main contributions of this method are summarized as follows:

- Because haze reduces an image’s contrast, sharpness, and therefore, the information conveyed therein, an objective function quantifying this type of adverse effect is developed and then optimized to achieve an accurate estimate of the atmospheric extinction coefficient. For ease of computation, it is assumed that haze is locally homogeneous. The guided image filter is then employed to suppress block artifacts arising from the imposed assumption.

- Because atmospheric light does not vary significantly within the captured scene, it is assumed to be constant in virtually all haze removal algorithms. However, the use of global atmospheric light may cause the color shift problem in some image regions. Therefore, we also provide an adaptive estimate of the image’s lightness in an attempt to improve the perceived image quality.

The remainder of this paper is structured as follows. Section 2 provides a brief introduction to the atmospheric scattering model, a physical model that is the foundation of haze removal algorithms. Section 3 describes the proposed method by explaining haze-related features and the estimation of the optimal transmission map as well as adaptive atmospheric light. Section 4 presents the results of quantitative and qualitative assessments, while Section 5 concludes the paper and discusses future development.

2. Atmospheric Scattering Model

The atmospheric scattering model was proposed by McCartney [26] to describe the formation of a hazy image due to light scattering in the atmosphere, and it can be expressed as follows:

$$I(x) = J(x)\,t(x) + A\,[1 - t(x)] \tag{1}$$

where $x$ denotes the two-dimensional coordinates of image pixels; $I$ denotes the hazy image captured by the camera, which is the only known quantity in this model; $J$ denotes the haze-free image that haze removal algorithms aim to recover; $t$ denotes the transmission map, which is governed by the atmospheric extinction coefficient (Section 3.2); and $A$ denotes the global atmospheric light. Given an N-bit red-green-blue (RGB) image, $I$, $J$, and $A$ take values in $[0, 2^N - 1]$, while $t$ varies within $[0, 1]$. The multiplicative term $J(x)\,t(x)$ describes the direct attenuation of the scene radiance in the transmission medium. The remaining term, $A\,[1 - t(x)]$, represents the scattered light (airlight) that enters the aperture and causes additive distortion of the scene radiance.
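Equation (1) can be simulated directly. The sketch below is a minimal NumPy rendering of it, assuming intensities normalized to [0, 1] rather than [0, 2^N − 1]; the function name is hypothetical. Because A is broadcast against J, passing an (H, W, 3) array for A also covers a spatially varying atmospheric light.

```python
import numpy as np


def hazy_image(J: np.ndarray, t: np.ndarray, A: np.ndarray) -> np.ndarray:
    """Atmospheric scattering model: I = J * t + A * (1 - t).

    J: haze-free image, shape (H, W, 3), values in [0, 1].
    t: transmission map, shape (H, W), values in [0, 1].
    A: atmospheric light, shape (3,) (global) or (H, W, 3) (spatially varying).
    """
    t3 = t[..., None]            # broadcast t over the color channels
    direct = J * t3              # direct attenuation of the scene radiance
    airlight = A * (1.0 - t3)    # scattered light entering the aperture
    return direct + airlight


rng = np.random.default_rng(0)
J = rng.random((4, 4, 3))
A = np.array([0.9, 0.9, 0.95])
# As t -> 0 (very distant scene points), the observed color tends to A.
I_far = hazy_image(J, np.zeros((4, 4)), A)
I_near = hazy_image(J, np.ones((4, 4)), A)
```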

In this paper, Equation (1) is slightly modified to take the adaptive atmospheric light into consideration (i.e., $A(x)$ instead of $A$):

$$I(x) = J(x)\,t(x) + A(x)\,[1 - t(x)] \tag{2}$$

All variables in Equation (2) are thus spatially varying, and the pixel coordinates $x$ are omitted in the main text for ease of expression.

Equation (2) is clearly under-constrained: only $I$ is known, and both $t$ and $A$ must be estimated before the haze-free image $J$ can be recovered. Thus, haze removal is considered an ill-posed problem, and a strong prior is necessary to estimate the transmission map and atmospheric light precisely. Given estimates $\hat{t}$ and $\hat{A}$ of $t$ and $A$, Equation (2) can be inverted to obtain the haze-free image as follows:

$$\hat{J} = \frac{I - \hat{A}}{\hat{t} + \epsilon} + \hat{A} \tag{3}$$

where the small constant $\epsilon$ is added to prevent instability when $\hat{t}$ is close to zero.
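Equation (3) translates into a one-line inversion. The NumPy sketch below assumes normalized intensities, an illustrative default ε = 10⁻³, and a final clip to [0, 1], which is our addition to keep the output displayable; the function name is hypothetical. A round trip through the forward model recovers J up to the error introduced by ε.

```python
import numpy as np


def recover_scene(I: np.ndarray, t_hat: np.ndarray, A_hat: np.ndarray,
                  eps: float = 1e-3) -> np.ndarray:
    """Invert the scattering model: J = (I - A) / (t + eps) + A.

    eps keeps the division stable where t_hat is close to zero.
    A_hat may be global, shape (3,), or spatially varying, shape (H, W, 3).
    """
    J = (I - A_hat) / (t_hat[..., None] + eps) + A_hat
    return np.clip(J, 0.0, 1.0)  # keep the result in the valid intensity range


rng = np.random.default_rng(1)
J_true = rng.random((8, 8, 3))
t_true = rng.uniform(0.3, 1.0, size=(8, 8))
A = np.array([0.85, 0.9, 0.95])
I = J_true * t_true[..., None] + A * (1.0 - t_true[..., None])  # forward model
J_rec = recover_scene(I, t_true, A, eps=1e-9)
```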

It is evident that the performance of haze removal algorithms largely depends on how $\hat{t}$ and $\hat{A}$ are estimated. To obtain an accurate estimate $\hat{t}$, we first investigate image features that are pertinent to the distribution of haze in the captured scene. Then, an objective function taking all of these features into account is devised, and the Nelder–Mead direct search algorithm [27] is used to optimize it and obtain $\hat{t}$. For the adaptive atmospheric light, the quad-decomposition algorithm proposed in [28] provides the initial estimate. In addition, another objective function is developed to ensure that the lightness is mainly smooth and does not deviate significantly from the initial estimate. Locating the minimum of this function yields the desired estimate $\hat{A}$.

3. Proposed Algorithm

3.1. Haze-Related Features

Due to the presence of haze, direct attenuation and additive distortion of the scene radiance mainly result in the loss of contrast and image details. The degradation degree of the hazy image is numerically expressed by the transmission map, in which values close to zero denote pixels heavily obscured by haze, while values close to one denote clear pixels. Thus, there is likely a mapping between image features representing the image contrast and details and the haze distribution. In this paper, we use six image features, including three for contrast energy, one for entropy, and two for sharpness, to devise an objective function for identifying the optimal transmission map. Although the DCP discussed in [6] is an effective descriptor quantifying the amount of haze in a scene, it is omitted because it easily fails in outdoor scenes with a large sky region.

The contrast energy ($CE$), which quantifies the perceived local contrast of natural images [29], is employed as the first haze-related feature. Following [30], a bank of Gaussian second-order derivative filters modeling the receptive fields of cortical neurons is used to decompose the hazy image $I$. The filter responses are then rectified and normalized to mimic nonlinear contrast gain control in the visual cortex. Thereafter, a simple global thresholding step is performed to remove noise. $CE$ is computed independently on each color component (grayscale, yellow-blue, and red-green) of the opponent color space as follows:

$$CE_c = \frac{\alpha\, Z_c}{Z_c + \alpha\,\kappa} - \tau_c \tag{4}$$

$$Z_c = \sqrt{(I_c \ast h_h)^2 + (I_c \ast h_v)^2} \tag{5}$$

where $c \in \{\mathrm{gray}, \mathrm{yb}, \mathrm{rg}\}$ denotes the grayscale, yellow-blue, and red-green channels of $I$; $\alpha$ denotes the maximum value of $Z_c$; $\kappa$ denotes the constant contrast gain; and $\tau_c$ denotes the noise threshold of each color channel. The symbol $\ast$ represents convolution, while $h_h$ and $h_v$ are the kernels of the horizontal and vertical Gaussian second-order derivative filters, respectively.
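The contrast-energy computation described above can be sketched in NumPy as follows. Several details are assumptions for illustration only: the opponent-color definitions (gray = 0.299R + 0.587G + 0.114B, yb = 0.5(R + G) − B, rg = R − G), the filter scale σ = 3.25, the gain κ = 0.1, and the per-channel thresholds in the hypothetical `TAU` table are placeholder values, not values confirmed by this paper. The kernels are made zero-mean so that flat regions give a zero response.

```python
import numpy as np


def second_derivative_kernels(sigma: float = 3.25):
    """Horizontal/vertical Gaussian second-order derivative kernels (zero-mean)."""
    r = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    g = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    h_h = (x ** 2 / sigma ** 4 - 1 / sigma ** 2) * g  # d^2 G / dx^2
    h_v = (y ** 2 / sigma ** 4 - 1 / sigma ** 2) * g  # d^2 G / dy^2
    return h_h - h_h.mean(), h_v - h_v.mean()


def filter2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2-D filtering with edge padding. The kernels above are
    symmetric, so correlation and convolution give the same result."""
    r = kernel.shape[0] // 2
    h, w = img.shape
    padded = np.pad(img, r, mode="edge")
    out = np.zeros((h, w))
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def opponent_channels(rgb: np.ndarray) -> dict:
    """Assumed opponent color space: grayscale, yellow-blue, red-green."""
    R, G, B = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return {"gray": 0.299 * R + 0.587 * G + 0.114 * B,
            "yb": 0.5 * (R + G) - B,
            "rg": R - G}


# Placeholder noise thresholds per opponent channel (illustrative only).
TAU = {"gray": 0.2353, "yb": 0.2287, "rg": 0.0528}


def contrast_energy(rgb: np.ndarray, kappa: float = 0.1, sigma: float = 3.25) -> dict:
    """Per-pixel CE_c = alpha * Z_c / (Z_c + alpha * kappa) - tau_c."""
    h_h, h_v = second_derivative_kernels(sigma)
    ce = {}
    for name, chan in opponent_channels(rgb).items():
        z = np.sqrt(filter2d(chan, h_h) ** 2 + filter2d(chan, h_v) ** 2)
        alpha = z.max()                      # maximum filter response
        denom = z + alpha * kappa
        gain = np.divide(alpha * z, denom, out=np.zeros_like(z), where=denom > 0)
        ce[name] = gain - TAU[name]          # subtract the noise threshold
    return ce
```

Averaging each channel's map over a region then gives one contrast-energy value per channel.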

To quantify image details, the image entropy ($H$), which measures the average amount of information conveyed by an image, is used as the second haze-related feature. As with the entropy concept widely used in information theory, high image entropy signifies that the image contains many details, whereas low image entropy signifies that it contains few details. In this context, image details are reflected in the distribution of intensity levels in the grayscale channel $I_{gray}$. We compute $H$ as follows, where $p(i)$ denotes the histogram probability of pixel intensity $i$:

$$H = -\sum_{i=0}^{2^N - 1} p(i)\,\log_2 p(i) \tag{6}$$
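The entropy formula is a histogram computation. The sketch below assumes an integer-valued grayscale image with N = 8 bits by default; the function name is illustrative. It sums p·log2(1/p), which equals −p·log2(p) and avoids returning −0.0 for a constant image.

```python
import numpy as np


def image_entropy(gray: np.ndarray, n_bits: int = 8) -> float:
    """Shannon entropy (bits) of an integer image with 2**n_bits levels."""
    levels = 2 ** n_bits
    hist = np.bincount(gray.ravel().astype(np.int64), minlength=levels)
    p = hist / hist.sum()              # histogram probabilities p(i)
    p = p[p > 0]                       # treat 0 * log2(0) as 0
    return float(np.sum(p * np.log2(1.0 / p)))


flat = np.full((16, 16), 128)                 # a single intensity level
two_level = np.array([[0, 255], [255, 0]])    # two equally likely levels
ramp = np.arange(256).reshape(16, 16)         # every level exactly once
```

A constant image carries no information, whereas an image using all 256 levels equally reaches the maximum of 8 bits.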

Furthermore, we also use statistical image features, namely the local standard deviation ($\sigma$) and the normalized dispersion ($D$) [29], to quantify image details. These are computed as follows:

$$\sigma(x) = \sqrt{\sum_{y \in \Omega_x} w(y)\,[I(y) - \mu(x)]^2} \tag{7}$$

$$\mu(x) = \sum_{y \in \Omega_x} w(y)\, I(y) \tag{8}$$

$$D(x) = \frac{\sigma(x)}{\mu(x)} \tag{9}$$

where $\mu$ is the local mean, $w$ denotes the local Gaussian weighting kernel, and $y$ denotes the coordinates in the local patch $\Omega_x$ centered at $x$. The local standard deviation is highly correlated with the structural information that quantifies local sharpness; however, because $\sigma$ generally varies with the local mean $\mu$, the normalized dispersion is also used.
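The local mean, standard deviation, and normalized dispersion can be evaluated for every pixel with weighted local sums, using the identity Σ w(y)[I(y) − μ(x)]² = Σ w(y)I(y)² − μ(x)², which holds when the weights sum to one. In this sketch, the 7 × 7 window with σ = 7/6 and the small constant added to μ are assumptions for illustration; the function names are hypothetical.

```python
import numpy as np


def gaussian_window(radius: int = 3, sigma: float = 7 / 6) -> np.ndarray:
    """Normalized (sum = 1) Gaussian weighting kernel w."""
    ax = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2 * sigma ** 2))
    return g / g.sum()


def weighted_local_sum(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """sum_y w(y) * img(x + y) for every pixel x (edge padding)."""
    r = kernel.shape[0] // 2
    h, wd = img.shape
    padded = np.pad(img, r, mode="edge")
    out = np.zeros((h, wd))
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + wd]
    return out


def local_statistics(gray: np.ndarray, eps: float = 1e-6):
    """Return the local mean, local standard deviation, and normalized dispersion."""
    w = gaussian_window()
    mu = weighted_local_sum(gray, w)
    var = weighted_local_sum(gray ** 2, w) - mu ** 2   # = sum w (I - mu)^2
    sigma = np.sqrt(np.maximum(var, 0.0))              # clamp tiny negatives
    dispersion = sigma / (mu + eps)                    # sigma normalized by mu
    return mu, sigma, dispersion
```

Scaling an image by a constant scales σ by the same factor but leaves D essentially unchanged, which is the reason for normalizing by μ.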

3.2. Optimal Transmission Map Estimation

Before estimating the optimal transmission map, we assume that the atmosphere is homogeneous, which gives rise to the following equation:

$$t(x) = e^{-\beta\, d(x)} \tag{10}$$

where $\beta$ is a positive constant representing the extinction coefficient of the atmosphere, and $d$ is the scene depth. Equation (10) indicates that the transmission decreases exponentially with depth; that is, more distant objects are more heavily obscured by haze. This relationship underlies our second assumption, which states that the transmission map is uniform within local patches. This assumption is justified because the scene depth is mainly smooth except at discontinuities, such as object outlines and image edges.
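Equation (10) and the locally uniform assumption are easy to check numerically. The sketch below, with hypothetical function names and image dimensions assumed to be multiples of the patch size, converts a smooth depth map into transmission and replaces it by per-patch averages; for smooth depth, the block-wise approximation stays close to the exact map.

```python
import numpy as np


def transmission_from_depth(depth: np.ndarray, beta: float) -> np.ndarray:
    """Homogeneous atmosphere: t = exp(-beta * d)."""
    return np.exp(-beta * depth)


def patchwise_uniform(t: np.ndarray, patch: int) -> np.ndarray:
    """Replace t by its mean over non-overlapping patch x patch blocks.
    Assumes both image dimensions are multiples of `patch`."""
    h, w = t.shape
    blocks = t.reshape(h // patch, patch, w // patch, patch)
    means = blocks.mean(axis=(1, 3))
    return np.repeat(np.repeat(means, patch, axis=0), patch, axis=1)


# Depth decreasing smoothly from the top (far) to the bottom (near) of the frame.
depth = np.tile(np.linspace(1.0, 0.0, 64)[:, None], (1, 64))
t = transmission_from_depth(depth, beta=1.0)
t_blocks = patchwise_uniform(t, patch=8)
max_error = np.abs(t - t_blocks).max()
```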

Following our second assumption, the hazy image is decomposed into non-overlapping square patches. Then, a four-step iterative algorithm is employed to estimate the optimal transmission of each image patch. For the $i$-th patch, we first initialize the transmission value $t_i$ and then calculate the dehazed patch $J_i$ using Equation (3), assuming that the atmospheric light has been estimated in advance. The objective function is devised from the haze-related features discussed in Section 3.1 as follows:

$$f(t_i) = \overline{CE}(J_i)\cdot H(J_i)\cdot \sigma(J_i)\cdot D(J_i) \tag{11}$$

where $\overline{CE}$ represents the contrast energy over the three opponent color channels. To obtain one value per patch, we use the average value of $CE$, whereas $H$, $\sigma$, and $D$ are each calculated directly on the patch as a single value. Because the adverse effects of haze include contrast reduction and loss of image details, maximizing the objective function yields the optimal transmission value $\hat{t}_i$ of the patch.
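The per-patch search can be sketched as below. This is a simplified illustration under several assumptions: the contrast-energy term is replaced by a mean-gradient-magnitude proxy, the standard deviation and dispersion are computed once per patch, the four terms are multiplied together, and a dense grid over t ∈ [0.05, 1] stands in for the Nelder–Mead search so that the example needs only NumPy. All names and constants are hypothetical.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114])


def patch_objective(J: np.ndarray) -> float:
    """Hypothetical stand-in objective: product of a contrast proxy, entropy,
    standard deviation, and dispersion of a dehazed RGB patch."""
    gray = J @ LUMA
    gy, gx = np.gradient(gray)
    contrast = np.mean(np.hypot(gx, gy))            # proxy for contrast energy
    levels = np.clip(np.round(gray * 255), 0, 255).astype(np.int64)
    hist = np.bincount(levels.ravel(), minlength=256)
    p = hist[hist > 0] / gray.size
    entropy = np.sum(p * np.log2(1.0 / p))          # patch entropy in bits
    std = gray.std()                                # one sigma per patch
    dispersion = std / (gray.mean() + 1e-6)         # one D per patch
    return float(contrast * entropy * std * dispersion)


def estimate_patch_transmission(I: np.ndarray, A: np.ndarray, patch: int = 16,
                                t_grid=None, eps: float = 1e-3) -> np.ndarray:
    """Per-patch transmission maximizing the objective (grid-search stand-in
    for Nelder-Mead). Returns an (H // patch, W // patch) array."""
    if t_grid is None:
        t_grid = np.linspace(0.05, 1.0, 96)
    h, w, _ = I.shape
    t_map = np.zeros((h // patch, w // patch))
    for i in range(h // patch):
        for j in range(w // patch):
            block = I[i * patch:(i + 1) * patch, j * patch:(j + 1) * patch]
            # Dehaze the patch with each candidate t (Equation (3)) and score it.
            scores = [patch_objective(np.clip((block - A) / (t + eps) + A, 0.0, 1.0))
                      for t in t_grid]
            t_map[i, j] = t_grid[int(np.argmax(scores))]
    return t_map
```

Clipping the dehazed patch to [0, 1] is what keeps the maximum away from t = 0: very small t values saturate most pixels, which lowers the entropy and the product.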

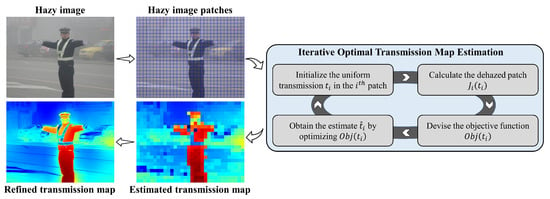

In this paper, we use the well-known Nelder–Mead direct search algorithm for the optimization; a detailed description is provided in [27]. However, because the transmission is not always uniform within image patches, the estimated transmission map suffers from block artifacts. Thus, the fast and efficient guided image filter [31] is used in a refinement step to address this problem. The entire procedure is illustrated in Figure 2, which shows that the estimated transmission map provides a good description of the haze distribution in the scene and that the refined map further improves it by following the outlines of the objects.

Figure 2.

Proposed iterative algorithm for optimal transmission map estimation.
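For the refinement step, a compact grayscale version of the guided image filter [31] is sketched below. The box mean uses an integral image with edge padding, which is a simplification of the border handling in [31]; the radius `r` and regularization `eps` are illustrative defaults. In the refinement described above, the guide would be a grayscale version of the hazy image and the input the block-wise transmission map.

```python
import numpy as np


def box_mean(x: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window via an integral image (edge padding)."""
    k = 2 * r + 1
    p = np.pad(x, r, mode="edge")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int = 8,
                  eps: float = 1e-3) -> np.ndarray:
    """Grayscale guided filter: q = mean(a) * guide + mean(b), where a and b are
    the per-window linear coefficients fitted from guide to src."""
    mean_I = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_Ip = box_mean(guide * src, r) - mean_I * mean_p
    var_I = box_mean(guide * guide, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)      # eps penalizes large slopes (more smoothing)
    b = mean_p - a * mean_I
    return box_mean(a, r) * guide + box_mean(b, r)
```

Because the output is locally a linear function of the guide, edges in the hazy image are transferred to the refined transmission map while block boundaries that have no counterpart in the image are smoothed out.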

3.3. Adaptive Atmospheric Light Estimation

Thus far, we have assumed that the atmospheric light is known. In this subsection, atmospheric light estimation (ALE) methods are discussed, and an approach called quad-decomposition [28] is presented. Through extensive examination, we observed that the techniques used to estimate the atmospheric light in existing haze removal algorithms usually face two main challenges: high computational cost and failure in scenes containing large white objects. The ALE method used in DCP and CAP is an example. The atmospheric light is taken as the brightest pixel among a predetermined percentage of pixels located in the most haze-opaque region. This approach requires sorting all pixels and then searching over the selected ones. Moreover, the estimated atmospheric light may belong to a white object, such as a light bulb, if the percentage is not chosen carefully.
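The sorting-based selection described above can be sketched as follows. The 0.1% default fraction, the use of the RGB sum as "brightness", and the function name are assumptions for illustration; the haze-opacity map could be, for example, a dark channel or an estimated depth map.

```python
import numpy as np


def sorted_atmospheric_light(img: np.ndarray, haze_map: np.ndarray,
                             fraction: float = 0.001) -> np.ndarray:
    """Brightest pixel among the `fraction` most haze-opaque pixels.

    img: (H, W, 3) image in [0, 1].
    haze_map: (H, W) map where larger values mean more haze.
    """
    flat_haze = haze_map.ravel()
    n = max(1, int(round(fraction * flat_haze.size)))
    candidates = np.argsort(flat_haze)[-n:]        # sorts *all* pixels
    pixels = img.reshape(-1, 3)[candidates]
    brightest = candidates[int(np.argmax(pixels.sum(axis=1)))]
    return img.reshape(-1, 3)[brightest]


rng = np.random.default_rng(0)
img = np.full((100, 100, 3), 0.6)
haze = rng.random((100, 100))
r, c = np.unravel_index(np.argmax(haze), haze.shape)
img[r, c] = 0.95                                   # the "true" airlight pixel

# A white object (e.g., a light bulb) that lands in the candidate set wins instead.
img_bulb = img.copy()
img_bulb.reshape(-1, 3)[np.argsort(haze.ravel())[-5]] = 1.0
```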

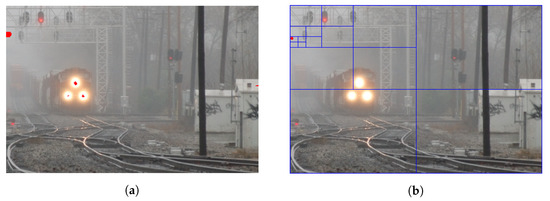

In contrast, the quad-decomposition algorithm avoids these undesirable effects. The input image is first preprocessed by a minimum filter to reduce the intensity of white objects. Then, the image is decomposed into quarters, and the quarter with the largest average luminance is selected. The decomposition is repeated on the selected quarter until its size is less than a predetermined value. Within the final quarter, the pixel with the smallest Euclidean distance to the white point in the RGB color space is selected as the atmospheric light. Because white objects mainly appear in regions with high perceived contrast, they are successfully eliminated by the iterative decomposition based on the average luminance. In Figure 3a, the pixels marked in red are among the farthest pixels in the depth map, and the selected atmospheric light is a pixel within the train's headlight. In contrast, Figure 3b shows that quad-decomposition efficiently excludes the headlight from the estimation process, yielding a more accurate estimate located in the top left of the scene.

Figure 3.

Atmospheric light estimation: (a) color attenuation prior method and (b) quad-decomposition method.
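The quad-decomposition procedure described above can be sketched as follows. The minimum-filter radius, the stopping size, the luminance weights, and the rule of stopping once a further split would fall below `min_size` are illustrative assumptions; the function name is hypothetical, and odd remainders are simply ignored when splitting.

```python
import numpy as np


def quad_decomposition_airlight(img: np.ndarray, min_size: int = 16,
                                radius: int = 3) -> np.ndarray:
    """Quad-decomposition atmospheric light for an (H, W, 3) image in [0, 1]."""
    h, w, _ = img.shape
    # 1) Per-channel minimum filter to suppress small bright (white) objects.
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    filtered = np.full_like(img, np.inf, dtype=float)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            filtered = np.minimum(filtered, padded[dy:dy + h, dx:dx + w])
    lum = filtered @ np.array([0.299, 0.587, 0.114])

    # 2) Keep the brightest quarter until a further split would go below min_size.
    top, left, rh, rw = 0, 0, h, w
    while rh // 2 >= min_size and rw // 2 >= min_size:
        hh, hw = rh // 2, rw // 2
        quarters = [(top, left), (top, left + hw),
                    (top + hh, left), (top + hh, left + hw)]
        means = [lum[y:y + hh, x:x + hw].mean() for y, x in quarters]
        top, left = quarters[int(np.argmax(means))]
        rh, rw = hh, hw

    # 3) Original pixel closest (Euclidean) to white within the selected region.
    region = img[top:top + rh, left:left + rw].reshape(-1, 3)
    dist = np.linalg.norm(region - 1.0, axis=1)
    return region[int(np.argmin(dist))]


# Bright hazy sky in the top-left quadrant plus a small white "headlight" elsewhere.
scene = np.full((128, 128, 3), 0.2)
scene[:64, :64] = 0.8
scene[100:103, 100:103] = 1.0
A0 = quad_decomposition_airlight(scene)
```

The 3 × 3 white spot disappears after the minimum filter, so the search converges on the bright, hazy region rather than the spot.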

As mentioned in Section 2, the atmospheric light returned by the quad-decomposition algorithm is used as the initial estimate for determining the adaptive lightness, which is generally assumed to be smooth except in special image regions, such as dark areas. The objective function $E$ is developed with the following two constraints: (i) the luminance of the recovered image must not decrease significantly, to prevent loss of details in low-light regions, and (ii) the adaptive atmospheric light $A$ should vary around the initial estimate $A_0$. In Equation (12), $Y_I$ and $Y_J$ denote the luminance channels of the input hazy image and the recovered image, respectively; $\lambda$ is the regularization parameter; and $\Psi$ is the smoothness regularization function.

With the smoothness regularization term present, the function becomes nonlinear, and optimizing it directly is difficult. It is therefore more convenient to approximate the optimization by excluding the regularization term. The remaining function is easily optimized, and the guided image filter is then applied to the resulting minimizer to achieve the effect of smoothness regularization. By exploiting the linearity of the atmospheric scattering model, this function and its derivatives are expressed as follows:

where . It is evident that because the second derivative is always positive, setting Equation (14) to zero results in the minimum of . Denoting the guided image filter as , the adaptive atmospheric light is presented in Equation (16) as follows:

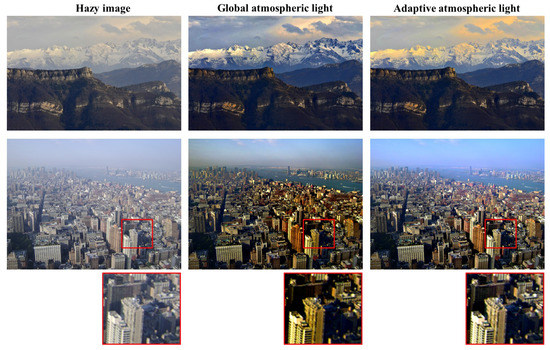

Figure 4 illustrates two real hazy scenes in which the use of the adaptive atmospheric light produces a noticeable improvement in perceived image quality. In the first row (mountain scenes), using the global atmospheric light causes the sky region to turn bluish. In contrast, the adaptive atmospheric light successfully prevents such a color shift. In the second row (city scenes), the proposed adaptive atmospheric light successfully resolves the visual artifact near the horizon as well as the loss of dark details in the building behind the white building in the cropped red region. The value of is set to 4 for the dehazed images in Figure 4, and this value is used for all results presented hereinafter.

Figure 4.

Visual comparison of the use of global atmospheric light and adaptive atmospheric light.
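The relax-then-smooth strategy of this subsection (drop the smoothness term, set the first derivative to zero, then smooth the minimizer) can be illustrated with a hypothetical instance. The sketch below does not reproduce Equations (12)–(16). It assumes a simple per-pixel quadratic objective, (Y_I − Y_J)² + (A − A0)², with Y_J given by the scattering model, whose minimizer has a closed form, and it uses a plain box filter as a stand-in for the guided filter. All names are hypothetical.

```python
import numpy as np


def box_mean(x: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window via an integral image (edge padding)."""
    k = 2 * r + 1
    p = np.pad(x, r, mode="edge")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)


def adaptive_airlight_sketch(Y_I: np.ndarray, t: np.ndarray, A0: float,
                             r: int = 8) -> np.ndarray:
    """Closed-form minimizer of a hypothetical relaxed objective, then smoothing.

    Assumed per-pixel objective (smoothness term dropped):
        E(A) = (Y_I - Y_J)**2 + (A - A0)**2,  Y_J = (Y_I - A) / t + A.
    With k = 1 - 1/t, Y_I - Y_J = k * (Y_I - A), so
        dE/dA   = -2 k**2 (Y_I - A) + 2 (A - A0),
        d2E/dA2 = 2 k**2 + 2 > 0  (convex: the stationary point is the minimum),
    giving A* = (k**2 * Y_I + A0) / (1 + k**2).
    """
    k = 1.0 - 1.0 / t
    a_star = (k ** 2 * Y_I + A0) / (1.0 + k ** 2)
    return box_mean(a_star, r)   # stand-in for the guided filter G


rng = np.random.default_rng(0)
Y = rng.random((16, 16))
A_clear = adaptive_airlight_sketch(Y, np.ones((16, 16)), 0.9, r=2)
A_hazy = adaptive_airlight_sketch(Y, np.full((16, 16), 0.5), 0.9, r=2)
A_dark = adaptive_airlight_sketch(np.full((8, 8), 0.1), np.full((8, 8), 0.5), 0.9, r=1)
```

Under this assumed objective, dark pixels pull the local atmospheric light below A0, which is the qualitative behavior that protects dark details. Where t = 1, the estimate stays at A0.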

3.4. Scene Radiance Recovery

With the transmission map and adaptive atmospheric light, the scene radiance can be recovered according to Equation (3). However, even in clear weather, aerosols are inevitably present in the atmosphere, and haze is observed when viewing distant objects. This phenomenon, known as aerial perspective, is a fundamental cue for human depth perception [6]. If haze is completely removed, the recovered image may appear unnatural, and the sense of depth may be lost. Accordingly, we raise the transmission map to the power of a constant parameter $\omega$ to control the dehazing power, because the amount of haze removed is inversely related to the transmission. The value of $\omega$ is determined empirically and fixed for all results presented in this paper. Equation (3) can then be rewritten as follows:

$$\hat{J} = \frac{I - \hat{A}}{\hat{t}^{\,\omega} + \epsilon} + \hat{A} \tag{17}$$

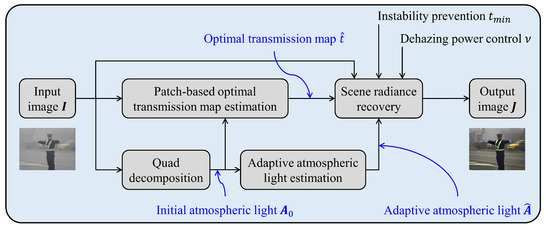

Overall, the input image $I$ first undergoes the quad-decomposition process to produce the initial atmospheric light $A_0$, which is a prerequisite for the patch-based optimal transmission map estimation. To obtain the output image $\hat{J}$ with improved visibility, the adaptive atmospheric light $\hat{A}$ is calculated and substituted into Equation (17), together with the optimal transmission map $\hat{t}$ and the user-defined parameters. A block diagram of the proposed algorithm is presented in Figure 5.

Figure 5.

Block diagram of proposed haze removal algorithm.
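Equation (17) differs from Equation (3) only in the exponent on the transmission map. The sketch below assumes normalized intensities, an illustrative ε = 10⁻³, and a final clip to [0, 1]. The ω values used in the example (1.0 and 0.5) are for illustration only and are not the empirically chosen value; the function name is hypothetical.

```python
import numpy as np


def recover_with_power(I: np.ndarray, t_hat: np.ndarray, A_hat: np.ndarray,
                       omega: float, eps: float = 1e-3) -> np.ndarray:
    """J = (I - A) / (t**omega + eps) + A. For t in (0, 1], omega < 1 raises the
    effective transmission, so less haze is removed (aerial perspective kept)."""
    t_eff = (t_hat ** omega)[..., None]
    return np.clip((I - A_hat) / (t_eff + eps) + A_hat, 0.0, 1.0)


rng = np.random.default_rng(0)
I = rng.random((8, 8, 3))
t = rng.uniform(0.2, 1.0, size=(8, 8))
A = np.array([0.9, 0.9, 0.9])
J_full = recover_with_power(I, t, A, omega=1.0)   # identical to Equation (3)
J_mild = recover_with_power(I, t, A, omega=0.5)   # weaker dehazing
```

Because t^ω ≥ t when ω ≤ 1, each output pixel of the milder setting lies between the hazy input and the full-strength result.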

4. Evaluation

This section quantitatively and qualitatively assesses the performance of the proposed algorithm against four state-of-the-art methods: DCP, CAP, DehazeNet, and ICAP. In addition, we provide the dehazed results when the scene visibility is obscured by yellow dust in lieu of haze to demonstrate that our method can function properly under different circumstances.

4.1. Quantitative Evaluation

To perform a thorough assessment, we used both synthetic and real image data sets. Foggy road image database 2 (FRIDA2) [32] is a synthetic data set designed for advanced driver-assistance systems (ADAS). It consists of 66 computer-generated haze-free road scenes. Each road scene has four associated hazy images generated under four different haze conditions: homogeneous, heterogeneous, cloudy homogeneous, and cloudy heterogeneous. In addition, O-HAZE [33] and I-HAZE [34] are used to evaluate the dehazing performance on hazy images of real outdoor and indoor scenes, respectively. O-HAZE consists of 45 pairs of haze-free and hazy images taken outdoors, while I-HAZE consists of 30 pairs of haze-free and hazy images taken indoors. Ancuti et al. [33,34] used a specialized vapor generator to create the haze in these scenes.

Because FRIDA2, O-HAZE, and I-HAZE contain ground-truth references, the full-reference metrics structural similarity (SSIM) [35] and feature similarity extended to color images (FSIMc) [36] were used, together with the no-reference fog aware density evaluator (FADE) [29]. SSIM operates on the luminance channel of images and assesses the degree of similarity in structural information. SSIM takes values in [−1, 1], where a higher score indicates that the compared image more closely resembles the reference image. FSIMc is based on the premise that low-level features, such as phase congruency, gradient magnitude, and chrominance similarity, play an important role in human image perception. Its value varies between 0 and 1, where a higher score is better. FADE is a statistically robust metric that estimates the haze density in the scene radiance; a smaller FADE value is therefore desirable in haze removal tasks. However, it is worth noting that FADE solely assesses the haze density in the recovered scene and provides no information about image details or contrast. Thus, darker images resulting from excessive haze removal obtain smaller FADE scores. In our evaluation, FADE was used together with SSIM and FSIMc to assess the dehazing power of the five algorithms.
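For intuition about SSIM scores, the sketch below evaluates the SSIM expression once over a whole image using global means, variances, and covariance. This is a simplification: the standard SSIM index [35] computes the same expression in local windows (typically 11 × 11 Gaussian-weighted) and averages the resulting map. The constants K1 = 0.01 and K2 = 0.03 are the usual defaults, and the function name is hypothetical.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM expression evaluated once over whole images (no sliding window)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = np.mean((x - mx) * (y - my))       # covariance of x and y
    num = (2 * mx * my + c1) * (2 * cxy + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


rng = np.random.default_rng(0)
ref = rng.random((32, 32))
same = global_ssim(ref, ref)                  # identical images
inverted = global_ssim(ref, 1.0 - ref)        # anti-correlated structure
washed_out = global_ssim(ref, 0.5 * ref + 0.25)  # reduced contrast, as haze does
```

Anti-correlated structure drives the score below zero, which is why the SSIM range extends to −1, while a contrast-reduced (haze-like) version scores below 1.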

Table 1 and Table 2 list the average SSIM, FSIMc, and FADE results on the FRIDA2 data set, with the best results marked in bold. The proposed algorithm achieved the best SSIM and FSIMc scores. This can be attributed to the maximization of an objective function that accounts for image features quantifying image details and contrast. In addition, the use of the adaptive atmospheric light to prevent the loss of dark details also contributed to the high SSIM and FSIMc scores. Regarding FADE, the metric itself is not a reliable indicator of dehazing performance, and the high FADE scores of OTM-AAL can be interpreted as follows. Because lightness is not homogeneously distributed throughout the captured scene, applying the global atmospheric light is very likely to cause loss of image details in dark areas. This can be observed in the building located behind the white building in the cropped red region in Figure 4: its windows fade when the global atmospheric light is used, and the problem is alleviated by the proposed adaptive atmospheric light. FADE misinterprets such loss of dark details as the disappearance of haze, producing low FADE scores for DCP, CAP, DehazeNet, and ICAP. In contrast, the proposed algorithm preserves dark details, resulting in higher FADE scores. Therefore, when FADE is considered in combination with SSIM and FSIMc, the proposed algorithm outperforms the four benchmark methods.

Table 1.

Average structural similarity (SSIM) and feature similarity extended to color images (FSIMc) scores on FRIDA2 data set.

Table 2.

Average fog aware density evaluator (FADE) score on FRIDA2 data set.

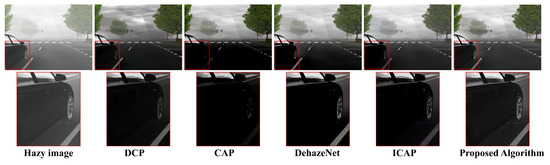

Figure 6 presents the dehazed results of the five algorithms to support the above interpretation of the high FADE scores of the proposed method. All four benchmark approaches, notably CAP, suffer from the loss of dark details (e.g., door handles and tires) to varying degrees. This is due to the use of the global atmospheric light, and FADE misinterprets this type of loss as the desired removal of haze. Accordingly, CAP exhibits the lowest FADE scores even though its results are generally too dark for object recognition or lane-marking extraction. DehazeNet likewise loses details at the door handles and tires because, although it learns the transmission map with a CNN, it still uses the global atmospheric light. In contrast, the proposed OTM-AAL method uses the adaptive atmospheric light to prevent the loss of dark details. As Figure 6 shows, the result of OTM-AAL is the most visually satisfactory, as all details of the car are preserved while the haze is efficiently removed.

Figure 6.

Dehazed images from FRIDA2 data set for interpreting high FADE scores of the proposed algorithm.

Table 3 and Table 4 present the average SSIM and FSIMc scores and the average FADE scores, respectively, on the O-HAZE and I-HAZE data sets, with the best results displayed in bold. The proposed algorithm performs well: it achieves the best performance on the I-HAZE data set and the second-best performance on the O-HAZE data set. As with FRIDA2, the high SSIM and FSIMc scores are attributed to the optimal transmission map and adaptive atmospheric light, and the high FADE scores can be interpreted in the same manner.

Table 3.

Average SSIM and FSIMc scores on O-HAZE and I-HAZE data sets.

Table 4.

Average FADE score on O-HAZE and I-HAZE data sets.

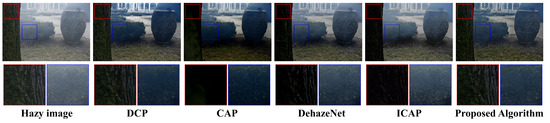

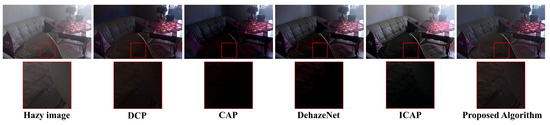

Figure 7 and Figure 8 present the dehazed results for the O-HAZE and I-HAZE data sets, demonstrating the advantage of the proposed adaptive lightness. Although the global atmospheric light assumption generally suffices for haze removal, it is prone to the loss of dark details when the lightness is heterogeneous, as illustrated in Figure 7 and Figure 8. By taking the heterogeneity of the atmospheric light into account, the proposed OTM-AAL method successfully overcomes this visually unpleasant effect (i.e., the loss of dark details).

Figure 7.

Dehazed images from O-HAZE data set for interpreting high FADE scores of the proposed algorithm.

Figure 8.

Dehazed images from I-HAZE data set for interpreting high FADE scores of the proposed algorithm.

4.2. Qualitative Evaluation

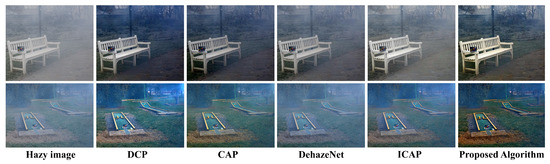

Figure 9 presents real outdoor hazy scenes for visually evaluating the performance of the proposed algorithm and the four benchmark methods. Although all five approaches produce satisfactory results, the results of the proposed method are superior. In the first row, DCP, CAP, DehazeNet, and ICAP remove haze only in the near region, while the distant house and trees remain obscured. In particular, DehazeNet exhibits weaker dehazing power than the other methods because its CNN was trained on synthetic images, which limits its performance on real scenes. The proposed algorithm, in contrast, performs well in both near and distant regions. A similar observation can be made for the images in the second row of Figure 9.

Figure 9.

Qualitative assessment of five algorithms on real hazy scenes.

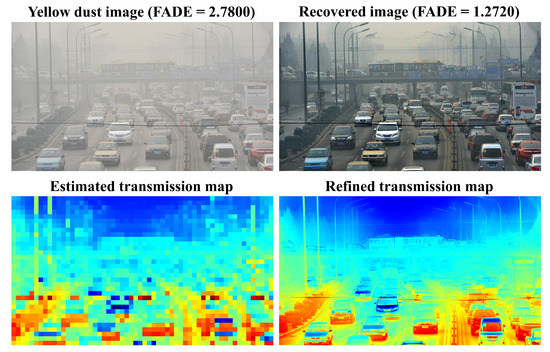

Figure 10 presents an image in which visibility is obscured by yellow dust (more results can be found online). The proposed OTM-AAL method produces an impressive result, improving the scene visibility to a large degree. The estimated transmission map accurately describes the degradation of the captured scene, and the guided image filter successfully recovers the object outlines. Because this real image has no ground-truth reference, only the FADE score can be measured, and it indicates that a large amount of yellow dust has been removed. As a result, the recovered image is clearer, which benefits subsequent algorithms such as object detection and traffic sign recognition.

Figure 10.

Result of applying proposed algorithm to a scene obscured by yellow dust.

5. Conclusions

In this paper, an effective algorithm for robust single-image haze removal is proposed. Through extensive observations of hazy and haze-free images, we determined that image features conveying image details and contrast are highly correlated with the distribution of haze in the scene radiance. Therefore, maximizing an objective function that combines all of these features in a multiplicative manner yields an accurately estimated transmission map, which is then refined by a guided image filter to produce the optimal transmission map. The other unknown quantity, the atmospheric light, is estimated adaptively using the simple quad-decomposition algorithm and a second objective function. The adaptive atmospheric light and optimal transmission map are then substituted into the atmospheric scattering model to recover a haze-free image with improved visibility.

However, a major drawback of our algorithm is its high computational cost. Although the optimization is performed on non-overlapping patches, the proposed algorithm is relatively slow compared with the four benchmark approaches. In addition, the lack of a closed-form solution to the first optimization problem, which yields the optimal transmission map, hinders the application of the proposed algorithm to real-time processing systems. These problems are left for future research.
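To illustrate why a patch-wise optimization without a closed-form solution is costly, consider the following minimal sketch. It is not the authors' implementation: entropy, mean gradient magnitude, and standard deviation are hypothetical stand-ins for the entropy, sharpness, and contrast-energy features; a single scalar atmospheric light `A` is assumed; and a brute-force search over candidate transmission values of each patch replaces the iterative optimizer over the extinction coefficient. Every patch requires one full feature evaluation per candidate, which is where the cost accumulates.

```python
import numpy as np


def patch_features(patch):
    """Hypothetical proxies (entropy, sharpness, contrast) for a gray patch in [0, 1]."""
    hist, _ = np.histogram(patch, bins=256, range=(0.0, 1.0))
    p = hist[hist > 0] / patch.size
    entropy = -np.sum(p * np.log2(p))        # Shannon entropy in bits
    gy, gx = np.gradient(patch)
    sharpness = np.mean(np.hypot(gx, gy))    # mean gradient magnitude
    contrast = np.std(patch)                 # simple contrast proxy
    return entropy, sharpness, contrast


def objective(patch, t, A):
    """Multiplicative score of the patch after dehazing it with transmission t."""
    restored = np.clip((patch - A) / t + A, 0.0, 1.0)
    entropy, sharpness, contrast = patch_features(restored)
    return entropy * sharpness * contrast


def estimate_transmission(gray, A, patch=16, candidates=None):
    """For each non-overlapping patch, keep the candidate t with the highest score."""
    if candidates is None:
        # Descending order: on ties (e.g. flat patches) argmax keeps t = 1 (no dehazing).
        candidates = np.linspace(1.0, 0.05, 96)
    h, w = gray.shape
    t_map = np.ones((h, w))
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            block = gray[y:y + patch, x:x + patch]
            if min(block.shape) < 2:
                continue  # too small for np.gradient; keep t = 1
            scores = [objective(block, t, A) for t in candidates]
            t_map[y:y + patch, x:x + patch] = candidates[int(np.argmax(scores))]
    return t_map
```

Clipping to [0, 1] is what gives the product an interior maximum: decreasing t stretches contrast and sharpness, but too small a t saturates pixels and collapses entropy. The resulting blocky map would then be refined, for example by a guided filter.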

Author Contributions

Conceptualization, B.K.; software, D.N.; validation, D.N. and S.L.; data curation, D.N. and S.L.; writing—original draft preparation, D.N.; writing—review and editing, B.K., D.N., and S.L.; supervision, B.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by research funds from Dong-A University, Busan, South Korea.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Deng, G. A Generalized Unsharp Masking Algorithm. IEEE Trans. Image Process. 2011, 20, 1249–1261.

- Ngo, D.; Kang, B. Image Detail Enhancement via Constant-Time Unsharp Masking. In Proceedings of the 2019 IEEE 21st Electronics Packaging Technology Conference (EPTC), Singapore, 4–6 December 2019; pp. 743–746.

- Ngo, D.; Lee, S.; Kang, B. Nonlinear Unsharp Masking Algorithm. In Proceedings of the 2020 International Conference on Electronics, Information, and Communication (ICEIC), Barcelona, Spain, 19–22 January 2020; pp. 1–6.

- Chang, Y.; Jung, C.; Ke, P.; Song, H.; Hwang, J. Automatic Contrast-Limited Adaptive Histogram Equalization With Dual Gamma Correction. IEEE Access 2018, 6, 11782–11792.

- Mustafa, W.A.; Khairunizam, W.; Yazid, H.; Ibrahim, Z.; Shahriman, A.; Razlan, Z.M. Image Correction Based on Homomorphic Filtering Approaches: A Study. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications (ICASSDA), Kuching, Malaysia, 15–17 August 2018; pp. 1–5.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Ancuti, C.; Ancuti, C.O.; De Vleeschouwer, C. D-HAZY: A dataset to evaluate quantitatively dehazing algorithms. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2226–2230.

- Lee, S.; Yun, S.; Nam, J.H.; Won, C.S.; Jung, S.W. A review on dark channel prior based image dehazing algorithms. J. Image Video Proc. 2016, 2016, 4.

- Park, Y.; Kim, T.H. Fast Execution Schemes for Dark-Channel-Prior-Based Outdoor Video Dehazing. IEEE Access 2018, 6, 10003–10014.

- Tufail, Z.; Khurshid, K.; Salman, A.; Fareed Nizami, I.; Khurshid, K.; Jeon, B. Improved Dark Channel Prior for Image Defogging Using RGB and YCbCr Color Space. IEEE Access 2018, 6, 32576–32587.

- Zhu, Y.; Tang, G.; Zhang, X.; Jiang, J.; Tian, Q. Haze removal method for natural restoration of images with sky. Neurocomputing 2018, 275, 499–510.

- Levin, A.; Lischinski, D.; Weiss, Y. A Closed-Form Solution to Natural Image Matting. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 228–242.

- Kim, G.J.; Lee, S.; Kang, B. Single Image Haze Removal Using Hazy Particle Maps. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2018, E101-A, 1999–2002.

- Ngo, D.; Lee, G.D.; Kang, B. A 4K-Capable FPGA Implementation of Single Image Haze Removal Using Hazy Particle Maps. Appl. Sci. 2019, 9, 3443.

- Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Ngo, D.; Lee, G.D.; Kang, B. Improved Color Attenuation Prior for Single-Image Haze Removal. Appl. Sci. 2019, 9, 4011.

- Tang, K.; Yang, J.; Wang, J. Investigating Haze-Relevant Features in a Learning Framework for Image Dehazing. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2995–3002.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Li, C.; Guo, J.; Porikli, F.; Fu, H.; Pang, Y. A Cascaded Convolutional Neural Network for Single Image Dehazing. IEEE Access 2018, 6, 24877–24887.

- Golts, A.; Freedman, D.; Elad, M. Unsupervised Single Image Dehazing Using Dark Channel Prior Loss. IEEE Trans. Image Process. 2019, 29, 2692–2701.

- Ren, W.; Pan, J.; Zhang, H.; Cao, X.; Yang, M.H. Single Image Dehazing via Multi-scale Convolutional Neural Networks with Holistic Edges. Int. J. Comput. Vis. 2020, 128, 240–259.

- Yeh, C.H.; Huang, C.H.; Kang, L.W. Multi-Scale Deep Residual Learning-Based Single Image Haze Removal via Image Decomposition. IEEE Trans. Image Process. 2019, 29, 3153–3167.

- Ignatov, A.; Kobyshev, N.; Timofte, R.; Vanhoey, K.; Van Gool, L. WESPE: Weakly Supervised Photo Enhancer for Digital Cameras. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 804–80409, ISSN 2160-7516.

- Shao, Y.; Li, L.; Ren, W.; Gao, C.; Sang, N. Domain Adaptation for Image Dehazing. Available online: http://xxx.lanl.gov/abs/2005.04668 (accessed on 3 July 2020).

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking Single-Image Dehazing and Beyond. IEEE Trans. Image Process. 2019, 28, 492–505.

- McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles. Phys. Today 2008, 30, 76.

- Gonçalves-E-Silva, K.; Aloise, D.; Xavier-De-Souza, S.; Mladenović, N. Less is more: Simplified Nelder-Mead method for large unconstrained optimization. Yugosl. J. Oper. Res. 2018, 28, 153–169.

- Park, D.; Park, H.; Han, D.K.; Ko, H. Single image dehazing with image entropy and information fidelity. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4037–4041.

- Choi, L.K.; You, J.; Bovik, A.C. Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901.

- Groen, I.I.; Ghebreab, S.; Prins, H.; Lamme, V.A.; Scholte, H.S. From Image Statistics to Scene Gist: Evoked Neural Activity Reveals Transition from Low-Level Natural Image Structure to Scene Category. J. Neurosci. 2013, 33, 18814–18824.

- He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Tarel, J.P.; Hautiere, N.; Caraffa, L.; Cord, A.; Halmaoui, H.; Gruyer, D. Vision Enhancement in Homogeneous and Heterogeneous Fog. IEEE Intell. Transp. Syst. Mag. 2012, 4, 6–20.

- Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-HAZE: A Dehazing Benchmark with Real Hazy and Haze-Free Outdoor Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 867–8678.

- Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. I-HAZE: A dehazing benchmark with real hazy and haze-free indoor images. arXiv 2018, arXiv:1804.05091.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).