A Survey of Change Detection Methods Based on Remote Sensing Images for Multi-Source and Multi-Objective Scenarios

Abstract

1. Introduction

1.1. Motivation and Problem Statement

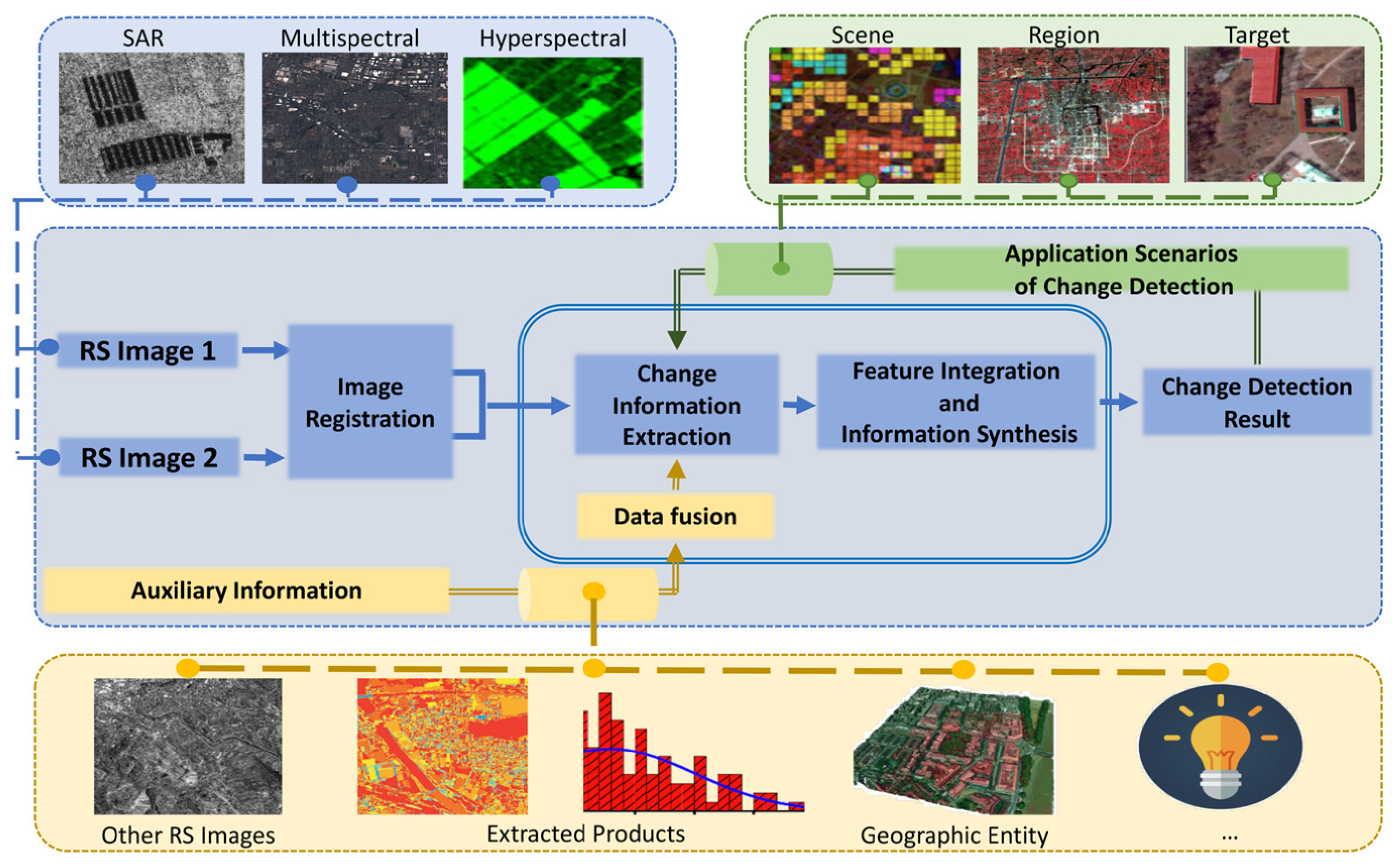

1.2. Change Detection Framework

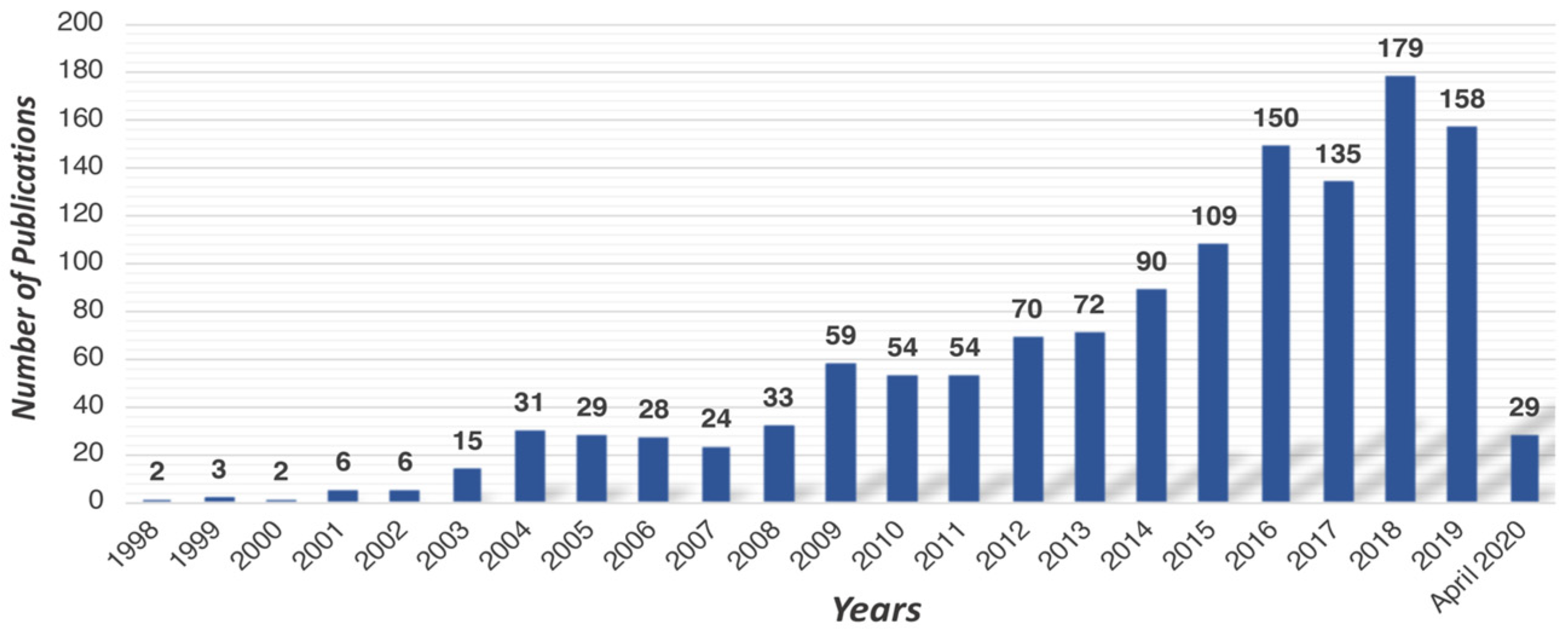

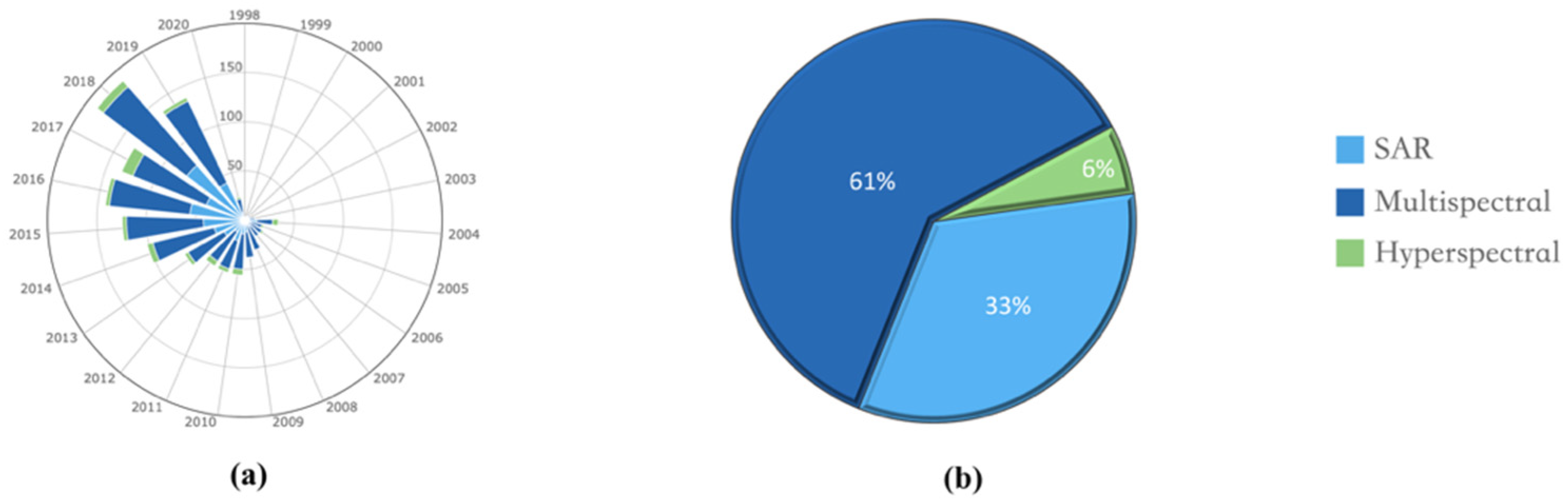

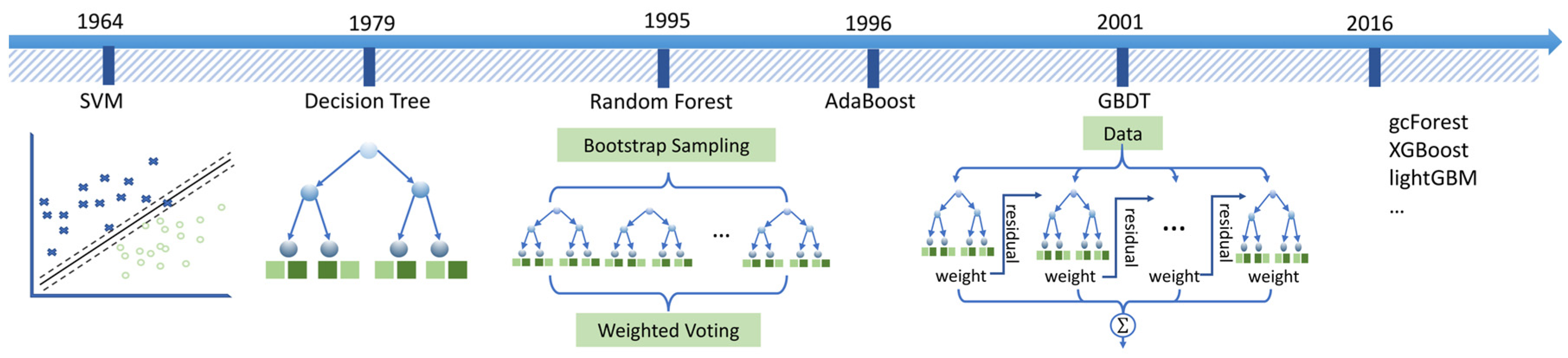

2. Dataset and Analysis

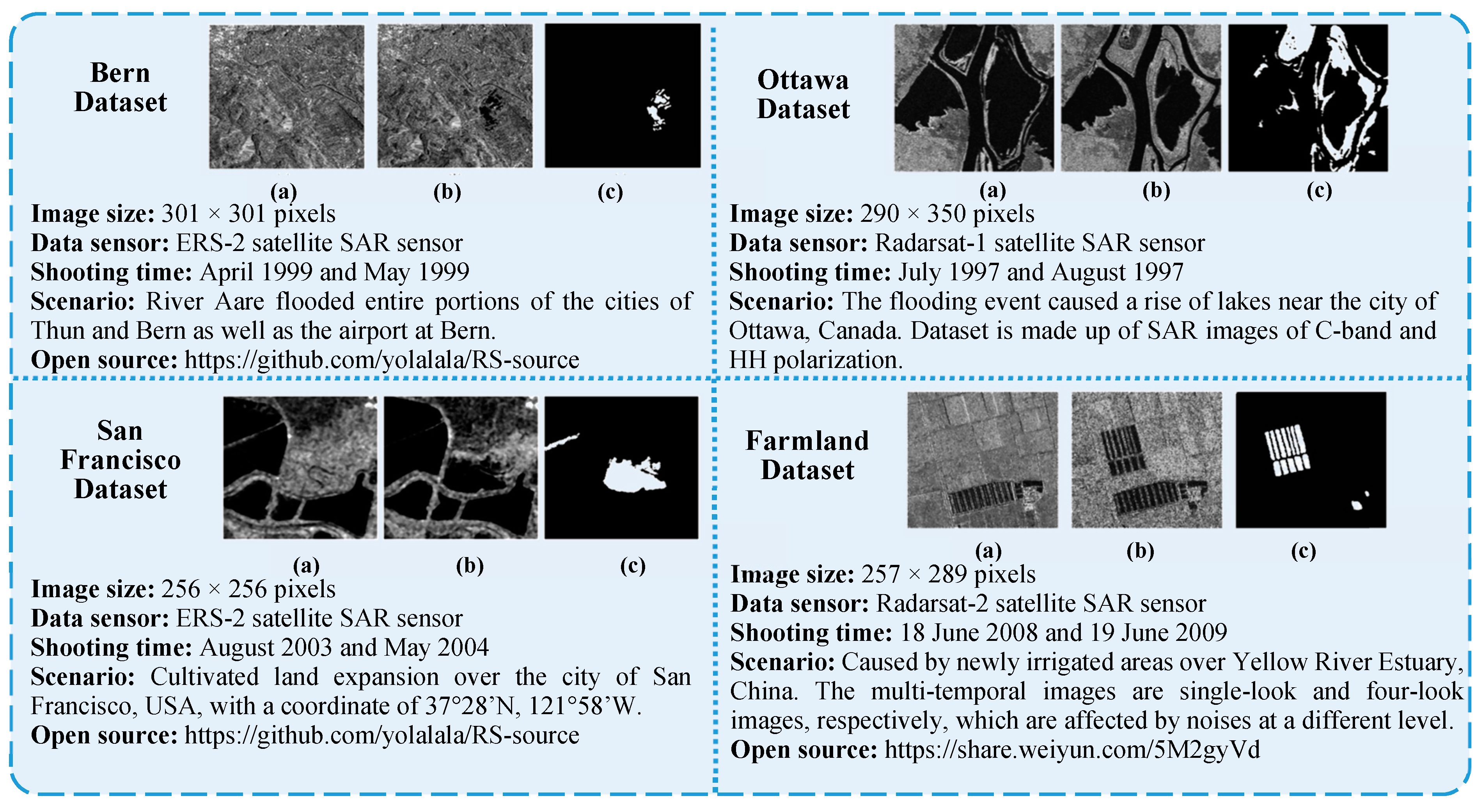

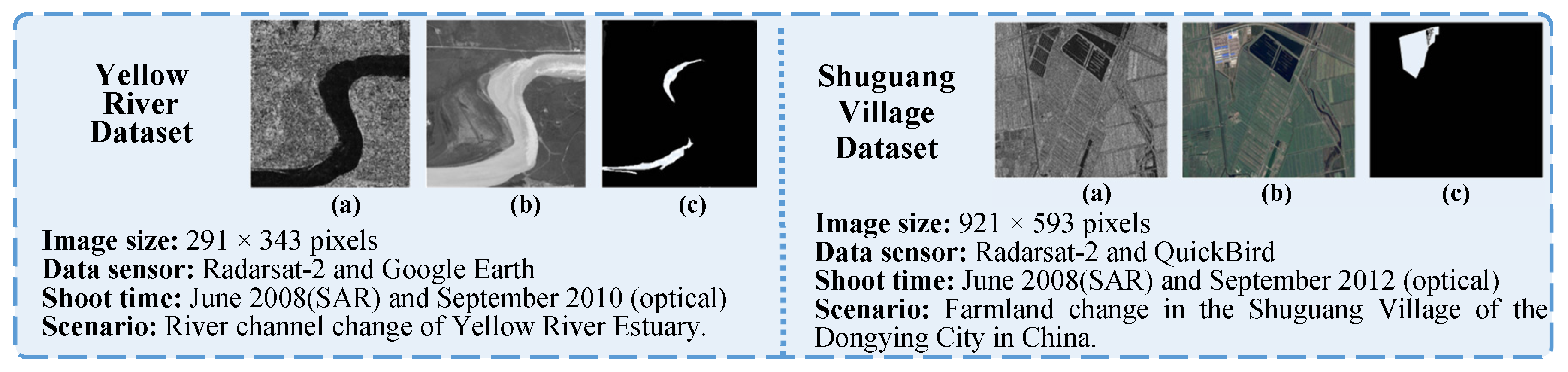

2.1. Synthetic Aperture Radar Images

2.2. Multispectral Images

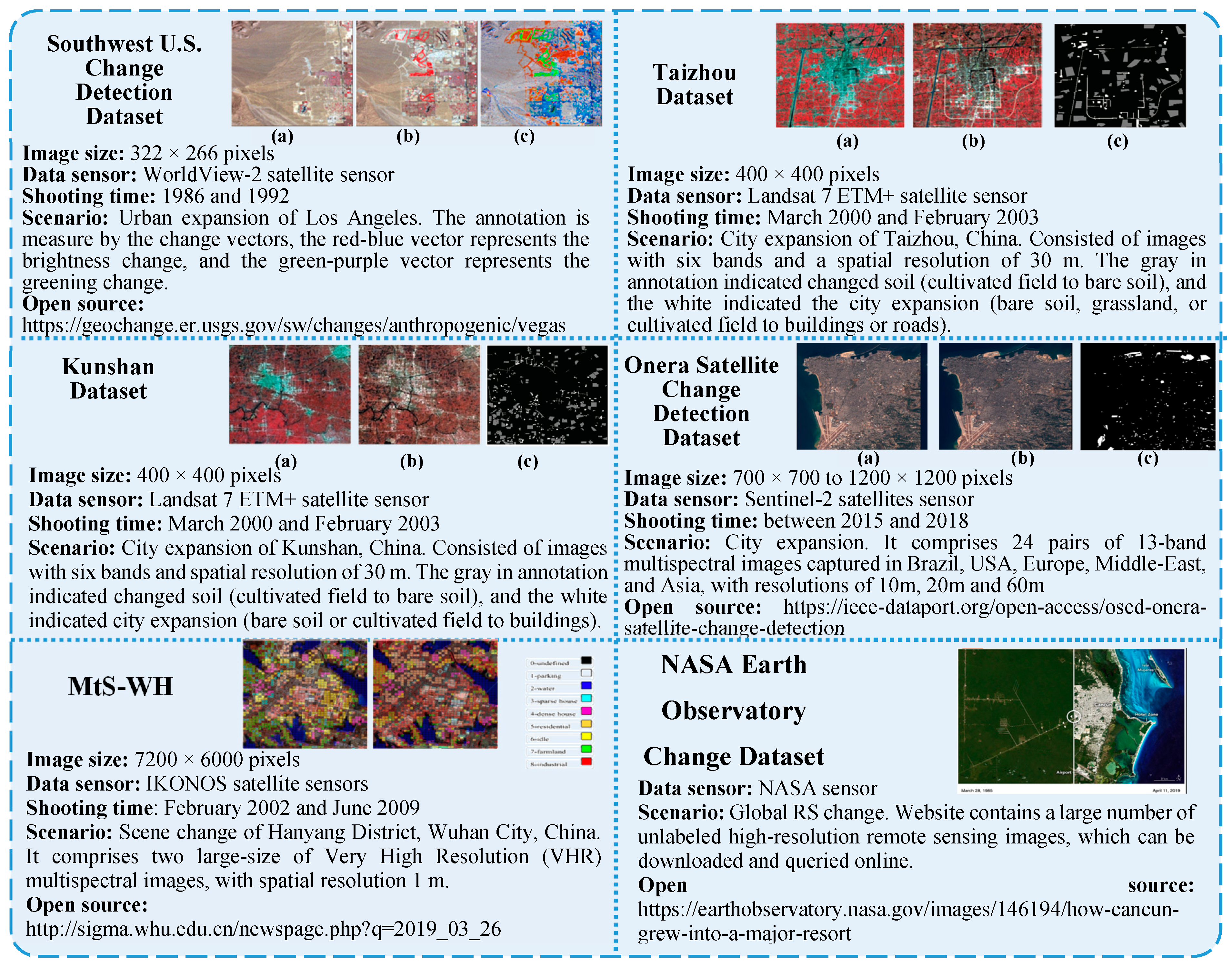

- Wide-area datasets: Wide-area datasets focus on changes across a large coverage area and ignore the detailed changes of sporadic targets. Figure 5 shows the six datasets collected in this category. Because they are not concerned with the internal details of changes, most of these datasets consist of medium-resolution images. The Southwest U.S. Change Detection Images from the EROS Data Center [45] form the first open-source dataset for the change detection task; they use change vectors (CV) to represent changes in greenness and brightness. With the development of feature extraction technology, extraction models can interpret more abstract annotations. Consequently, dataset annotations no longer need to be derived by analyzing each spectral layer; binary reference maps are also sufficient to indicate change locations, as the Taizhou images and the Kunshan dataset show [35]. Furthermore, advances in sensor technology make it possible to acquire wide-area high-resolution images. The Onera Satellite Change Detection Dataset [46] and the Multi-temporal Scene WuHan (MtS-WH) dataset [47] are representative high-resolution datasets for wide-area change detection, annotated from the perspectives of scene-block change and regional detail change, respectively. However, owing to the limited image resolution and the subjectivity of annotators, completely correct annotation cannot be guaranteed, which is problematic for conventional supervised methods. In recent years, the rise of semi-supervised and unsupervised methods has made annotation much less of an obstacle to research [48,49]. Instead, researchers pay more attention to diverse and real-time information acquisition. For example, NASA Earth Observatory captures the most representative multi-temporal RS images of regional changes worldwide, providing a sufficient data basis for multispectral change detection.
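Change vector analysis, as used to encode change in the Southwest U.S. reference in terms of brightness and greenness, can be sketched as follows. This is a minimal illustration, assuming each date is already available as a two-band array of tasseled-cap-style brightness and greenness values; the function name `change_vector_analysis` is hypothetical and not taken from the dataset's documentation.

```python
import numpy as np


def change_vector_analysis(t1: np.ndarray, t2: np.ndarray):
    """Change vector analysis on two co-registered images (hypothetical helper).

    t1, t2: arrays of shape (H, W, 2) holding (brightness, greenness) per pixel;
    this two-component layout is an assumption for illustration.
    Returns the change magnitude and the change direction in degrees [0, 360).
    """
    if t1.shape != t2.shape:
        raise ValueError("images must have the same shape")
    delta = t2.astype(float) - t1.astype(float)  # per-pixel change vector
    magnitude = np.linalg.norm(delta, axis=-1)   # how much changed
    # Direction in the brightness-greenness plane:
    # x-axis = brightness change, y-axis = greenness change.
    direction = np.degrees(np.arctan2(delta[..., 1], delta[..., 0])) % 360.0
    return magnitude, direction
```

A threshold on `magnitude` yields a binary change map, while `direction` indicates whether a change is dominated by brightness or by greenness; the threshold itself is left open here.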

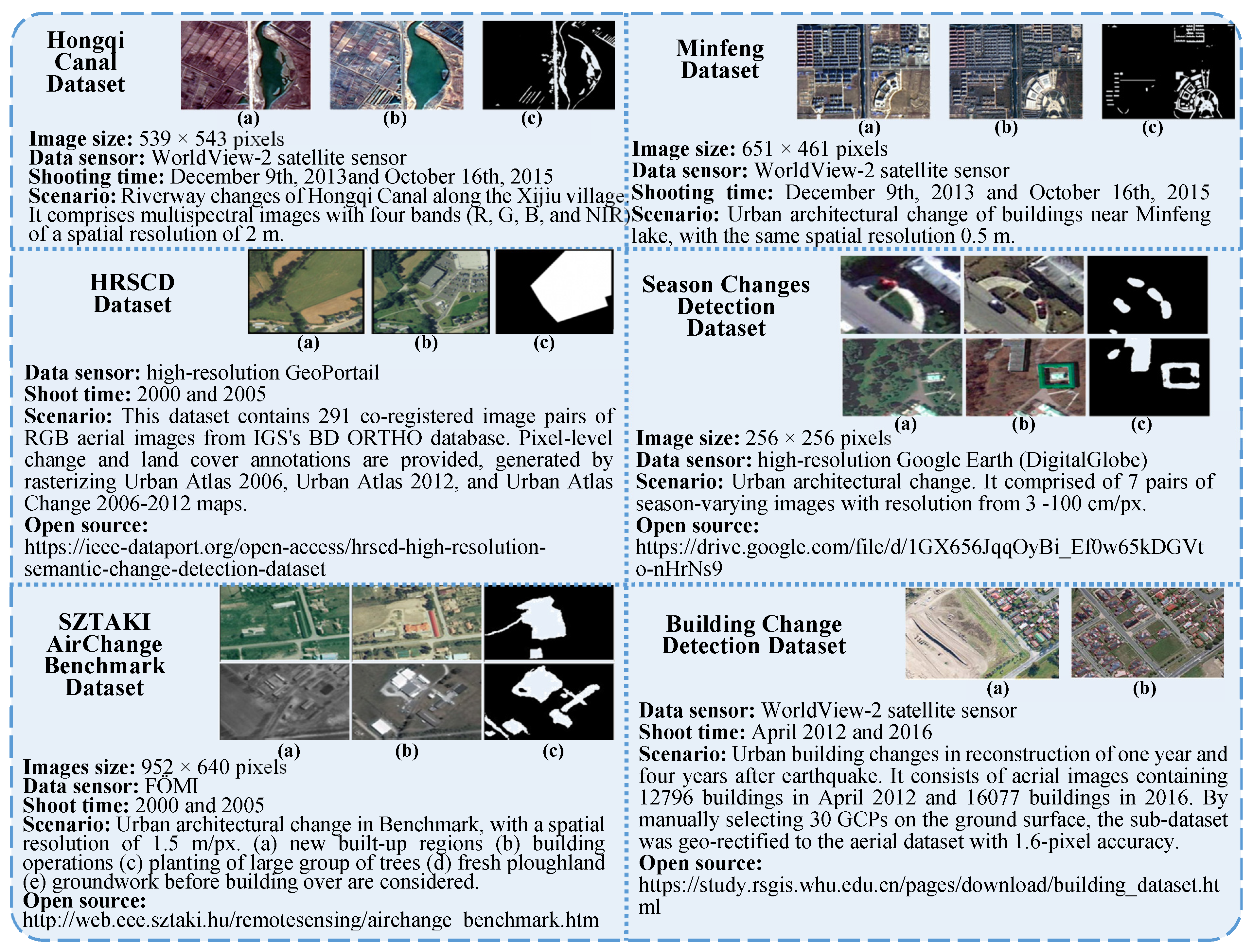

- Local-area datasets: Studying changes of specific targets in urban areas, such as buildings, vegetation, rivers, and roads, is an indispensable task. Accordingly, the Hongqi canal [13], Minfeng [13], HRSCD Dataset [50], Season changes detection [51], SZTAKI Air Change Benchmark [52], and Building change detection [53] datasets are introduced, as shown in Figure 6. To annotate changes of targets and detailed regions, high-resolution RS images are the primary data source. However, while high-resolution RS images provide detailed information for detection, they also contain unavoidable interference, such as widespread shadows and distractors whose spectral properties resemble those of the objects of interest. To some extent, this increases the difficulty of change detection. Nevertheless, the fine details provide precise morphological information: because spectral cues are unreliable, the morphological attributes of targets can in principle be used to distinguish real changes from pseudo-changes.

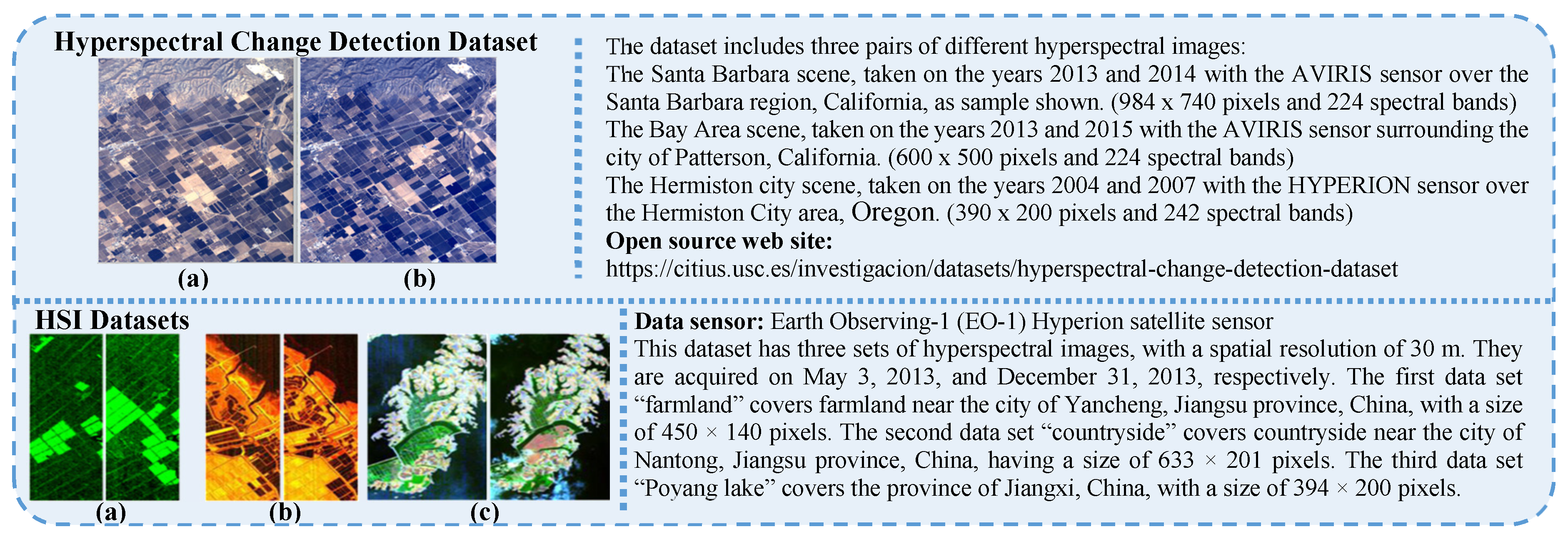

2.3. Hyperspectral Images

2.4. Heterogeneous Images

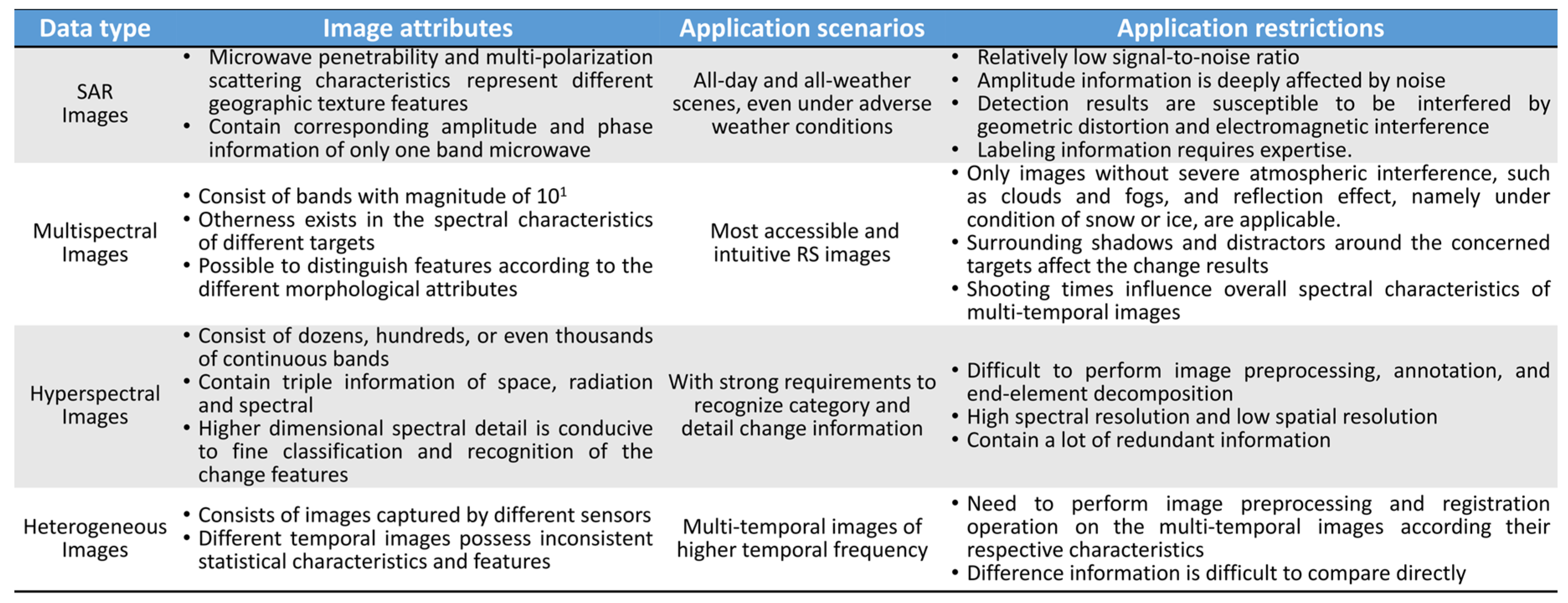

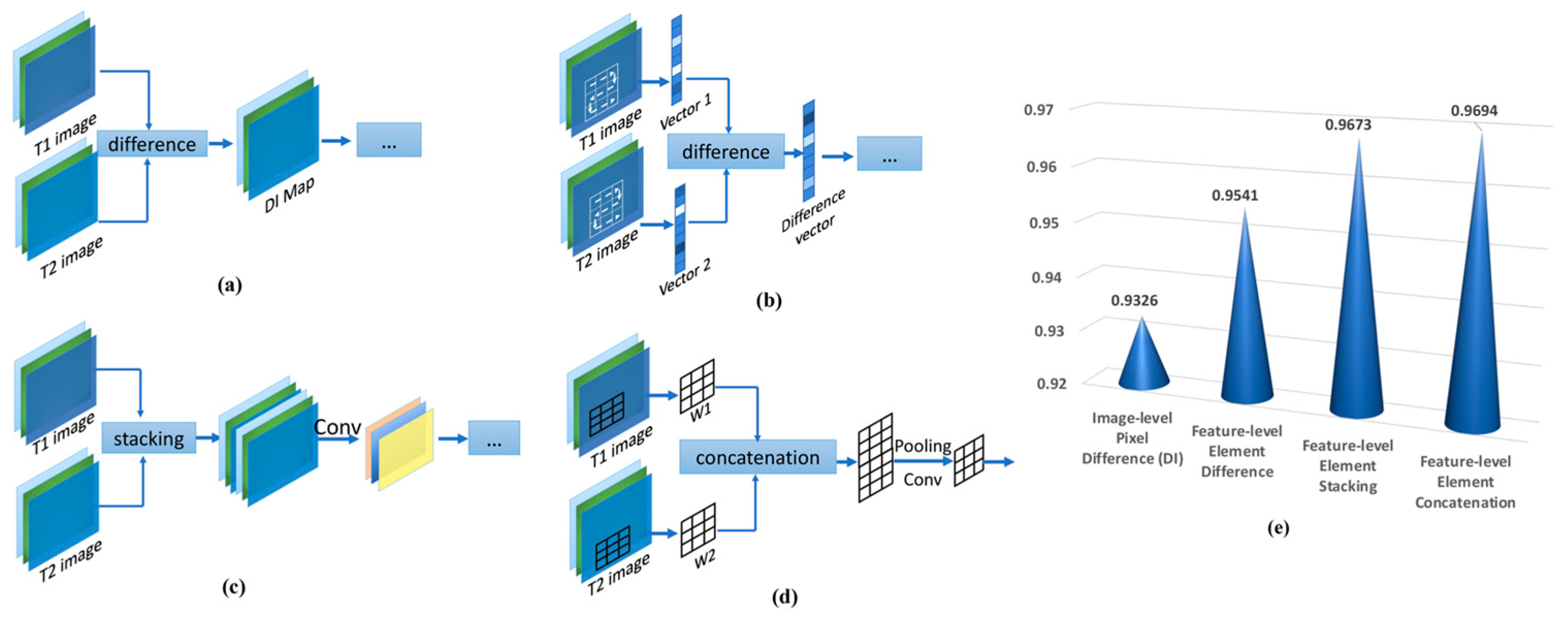

3. Change Information Extraction

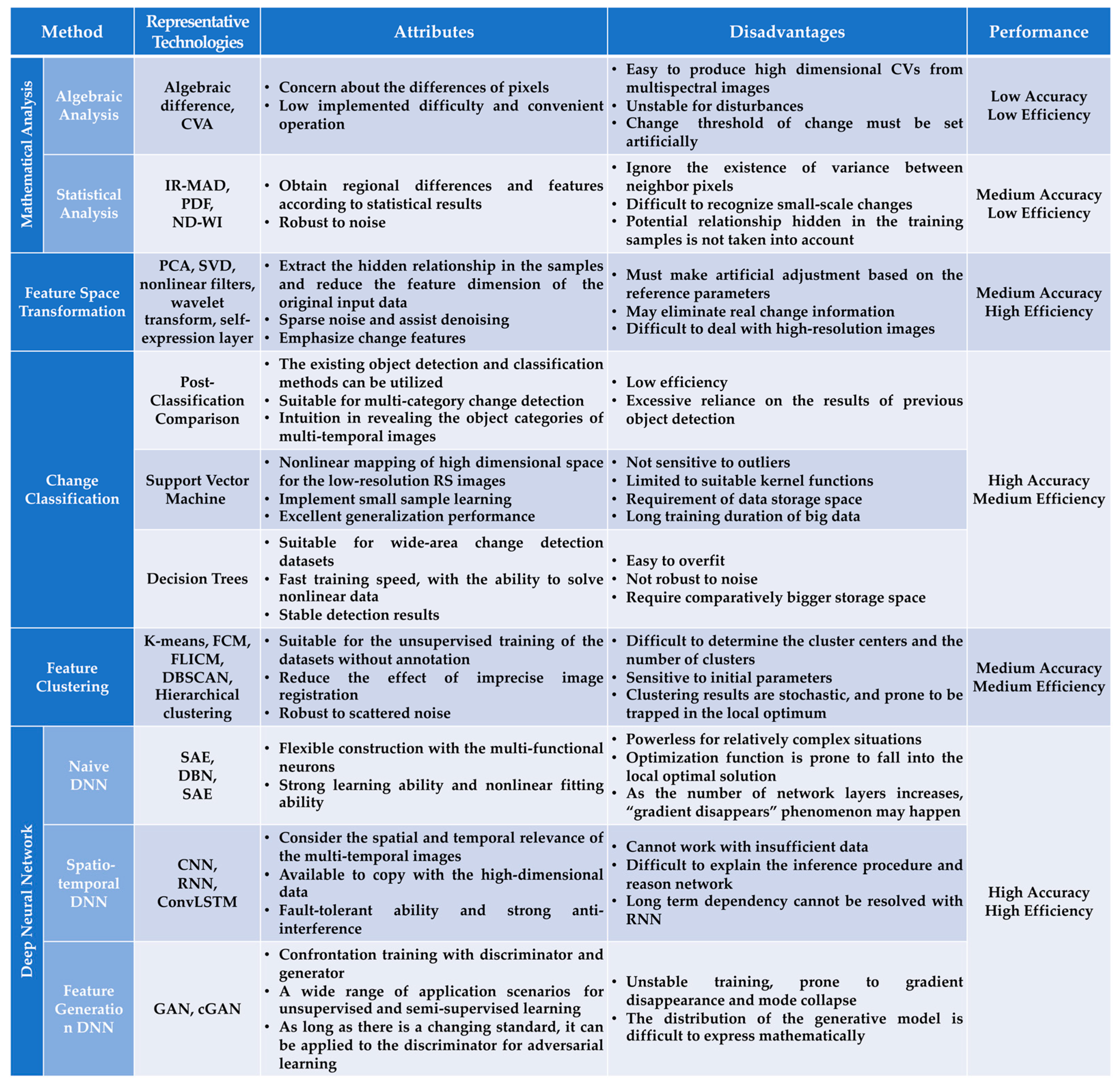

3.1. Methods of Mathematical Analysis

3.1.1. Algebraic Analysis

3.1.2. Statistical Analysis

- Direct calculation: Direct calculation methods compute differences between statistics derived individually from the original multi-temporal images. Even though each image is processed independently, these methods still need to account for the correlation between the multi-temporal images. For example, the iteratively reweighted multivariate alteration detection (IR-MAD) transformation can measure this correlation: it performs an unsupervised, affine-invariant mapping of the multi-temporal images, on which the individual statistics are then computed [62,63]. Other statistical parameters can also measure the spatial correlation of multi-temporal data, e.g., Moran’s index [64], likelihood ratio parameters [8], and even the trend of the spectral histogram [65]. The multi-scale object histogram distance (MOHD) [66] measures “bin-to-bin” change by contrasting the mean values of the red, green, and blue bands of pairwise frequency distribution histograms. To target statistics at the objects of interest, the covariance matrix of MAD can be calculated with weighting functions, i.e., the normalized difference water index (NDWI) [67] for water bodies and the normalized difference built-up index (NDBI) for urban buildings, which play an important role.

- Indirect calculation: Indirect calculation is feasible in two situations. The first is performing change statistics on refined features. Some statistical functions, such as the probability density function (PDF), are difficult to apply in the original data domain of the extracted features; in the dual-tree complex wavelet transform (DTCWT) domain [68], however, the PDF is effective for the probability statistics of image features. The second is performing statistics on the raw difference results of multi-temporal images in order to optimize the change results. Experience shows that it is important to iterate the model and optimize the difference image (DI) with generalized statistical region merging (GSRM), the Gaussian mixture model (GMM), or other optimization techniques [69]. Two points are emphasized here: one is to improve the completeness of change extraction by repeatedly modeling the DI or by repeatedly testing the change with correlation statistics [70]; the other is to increase the separability between changed and unchanged targets in the DI with relevant parameters, such as the object-level homogeneity index (OHI) [71].
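To make the MAD idea concrete, the sketch below implements the plain, non-iterative MAD transform with NumPy, based on canonical correlation analysis between the two dates. It is an illustration under stated assumptions, not the IR-MAD procedure of [62,63]: IR-MAD additionally reweights pixels in each iteration by their no-change probability, which is omitted here, and the function names are hypothetical.

```python
import numpy as np


def _inv_sqrt(cov: np.ndarray) -> np.ndarray:
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    w, v = np.linalg.eigh(cov)
    return v @ np.diag(1.0 / np.sqrt(w)) @ v.T


def mad_transform(x: np.ndarray, y: np.ndarray):
    """Plain (non-iterative) MAD transform; hypothetical helper for illustration.

    x, y: arrays of shape (n_pixels, n_bands) for the two dates.
    Returns (mad, rho, chi2): MAD variates ordered from the least to the most
    correlated canonical pair, the matching canonical correlations, and a
    per-pixel chi-square-like change statistic.
    """
    n = x.shape[0]
    xc = x - x.mean(axis=0)
    yc = y - y.mean(axis=0)
    s_xx = xc.T @ xc / (n - 1)
    s_yy = yc.T @ yc / (n - 1)
    s_xy = xc.T @ yc / (n - 1)
    wx, wy = _inv_sqrt(s_xx), _inv_sqrt(s_yy)
    # Canonical correlation analysis via SVD of the whitened cross-covariance.
    u, rho, vt = np.linalg.svd(wx @ s_xy @ wy)
    a = wx @ u       # canonical weights for date 1 (unit-variance variates)
    b = wy @ vt.T    # canonical weights for date 2
    mad = xc @ a - yc @ b                # each MAD variate has variance 2 * (1 - rho)
    mad, rho = mad[:, ::-1], rho[::-1]   # least correlated pair first
    chi2 = np.sum(mad ** 2 / (2.0 * (1.0 - rho)), axis=1)
    return mad, rho, chi2
```

Large values of `chi2` indicate likely change; for unchanged pixels the statistic is approximately chi-square distributed with as many degrees of freedom as bands, which is the quantity IR-MAD's reweighting builds on.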

3.2. Methods of Feature Space Transformation

- Naive dimensionality reduction: This approach reduces redundancy and improves the recognizability of change by converting the original images into an analyzable feature space. As basic dimensionality reduction operations, principal component analysis (PCA) [72,73] and the mapping onto variable basis vectors in sparse coding [74] are suitable for urban change detection. To avoid dependence on the statistical characteristics of PCA, SDPCANet [29] incorporates context-aware visual saliency detection. In addition, specific filtering operations and frequency-domain transformations have been shown to highlight high-frequency information while weakening low-frequency information. For example, Gabor linear filtering [11], the quaternion Fourier transform (QFT) [75], and the Hilbert transform [76] are supplementary means for localized time- or spatial-frequency analysis. Of these, frequency-domain transformation is comparatively flexible. A common practice is to decompose the multi-source multi-temporal images into high- and low-frequency components and then compute the difference on the results of the inverse wavelet transform [32], or to obtain the DI directly with the wavelet frequency difference (WFD) method [75].

- Noise suppression: Noise interference is an unavoidable problem in image-based detection, especially for SAR images. Singular value decomposition (SVD) [77] and sparse models [28] map the high-dimensional data space to a low-dimensional one while also providing auxiliary denoising. For example, the adapted sparse constrained clustering (ASCC) method [78] integrates sparse features into the clustering process, using the coding representations of meaningful pixels only. Alternatively, based on part-whole relationships, filtering the boundary pixels of a neighborhood to confirm the properties of the center pixel is also effective for noise suppression [79].

- Emphasizing changing features: Instead of removing invariant features or noise through naive dimensionality reduction, enhancing changing features spotlights real changes and strengthens the model’s ability to recognize change. This is generally approached from three angles. The first is to purify the preliminarily extracted features, for example by adding a non-linear orthogonal subspace into the extraction network as a self-expression layer [80]. The second works from the perspective of pixel correlation, iterating over the relationship between the surrounding pixels and the central pixel in feature space [81]. The third is to construct an energy feature space and emphasize targets with a saliency mask on the relevant key points [81].
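A minimal NumPy sketch of PCA-based change extraction, one common variant of the dimensionality reduction described above, is given below: PCA is applied to the per-pixel difference vectors, and the leading components are kept as change features. Assumptions: co-registered images of identical shape; the function name is hypothetical; running PCA on the difference image rather than on the stacked images is one design choice among several and not necessarily the one used in [72,73].

```python
import numpy as np


def pca_change_map(img1: np.ndarray, img2: np.ndarray, n_components: int = 1):
    """Project the multiband difference image onto its leading principal axes.

    Hypothetical helper. img1, img2: co-registered arrays of shape (H, W, B).
    Returns (scores, explained): component scores of shape (H, W, n_components)
    and the fraction of variance explained by each retained component.
    """
    h, w, bands = img1.shape
    diff = (img2.astype(float) - img1.astype(float)).reshape(-1, bands)
    centered = diff - diff.mean(axis=0)
    # SVD of the centered data: the rows of vt are the principal axes.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T
    explained = (s ** 2) / np.sum(s ** 2)
    return scores.reshape(h, w, n_components), explained[:n_components]
```

Thresholding or clustering the absolute scores then yields a change map. The same SVD, truncated to its leading singular values, is also the basis of low-rank reconstruction for noise suppression.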

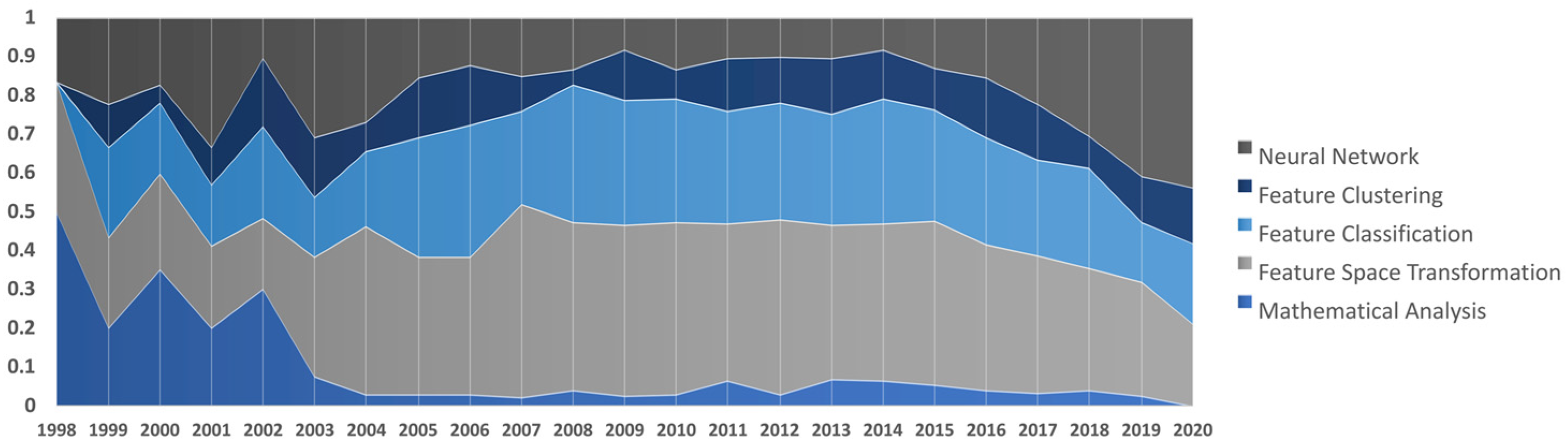

3.3. Methods of Feature Classification

3.4. Methods of Feature Clustering

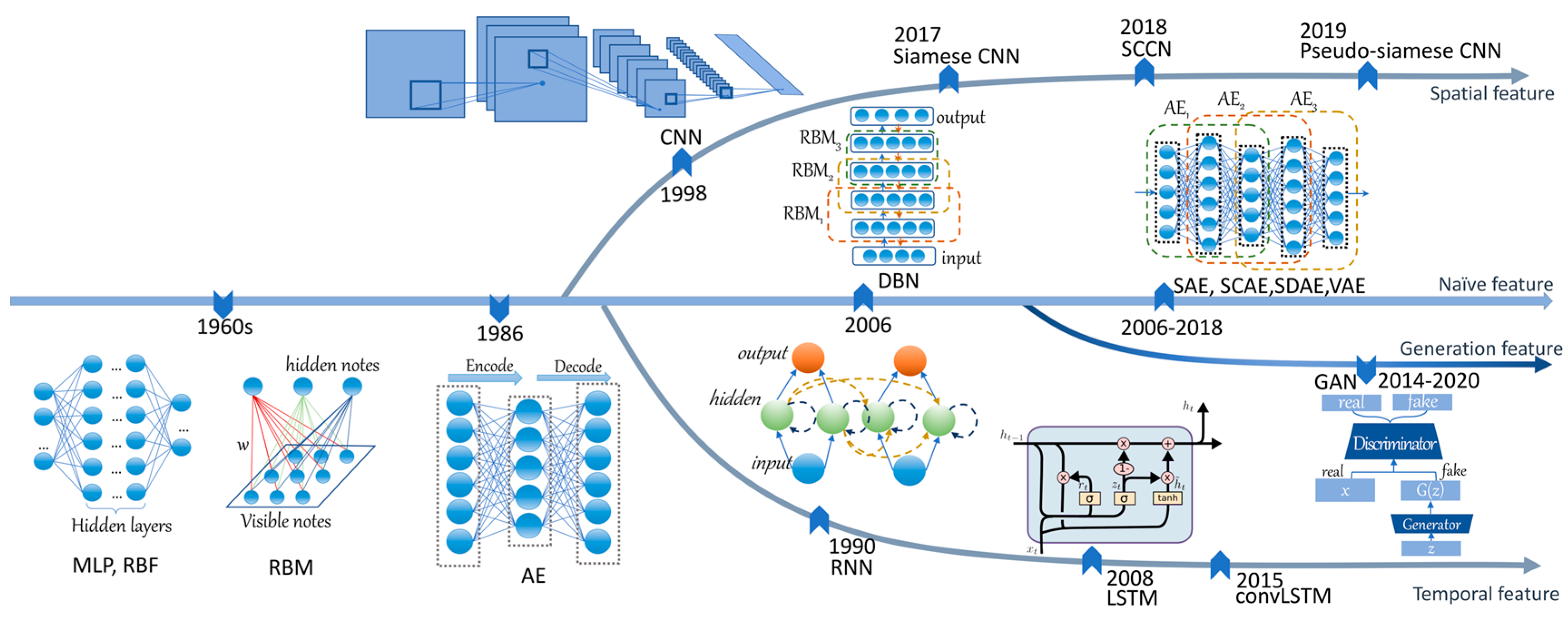

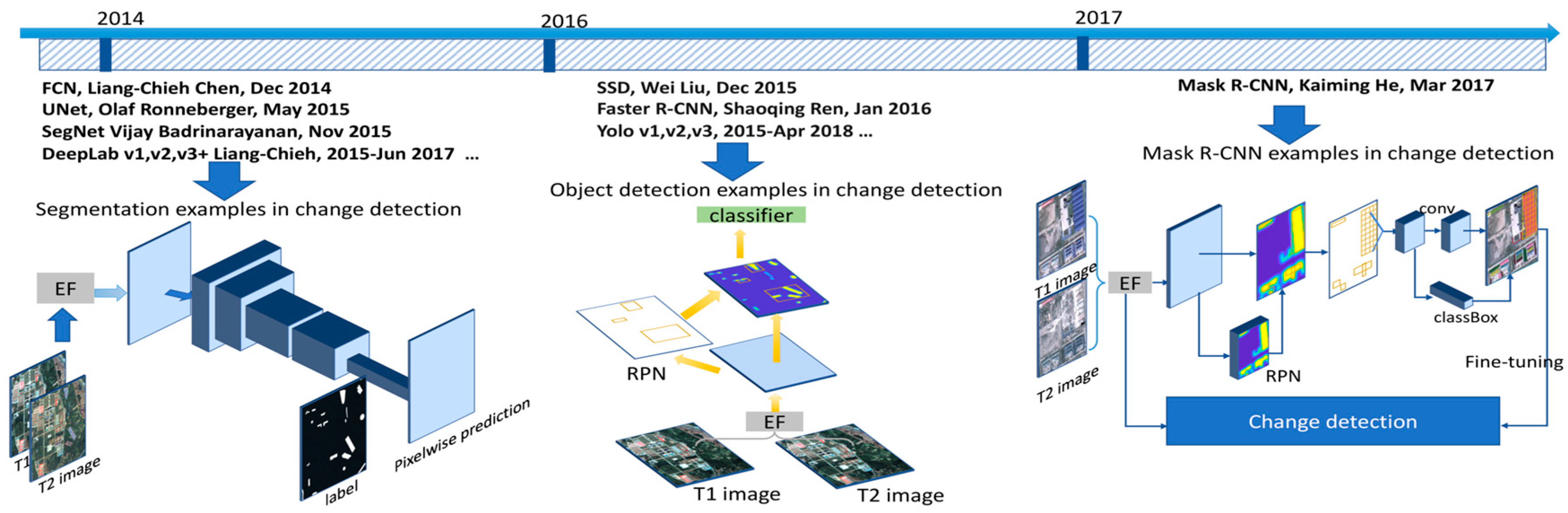

3.5. Methods of Deep Neural Networks

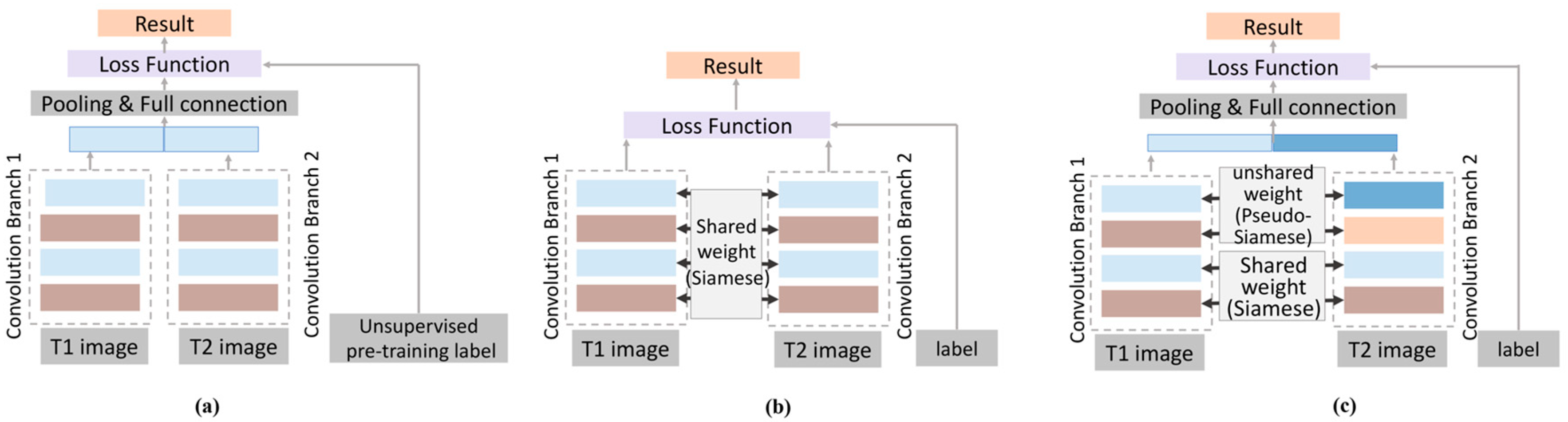

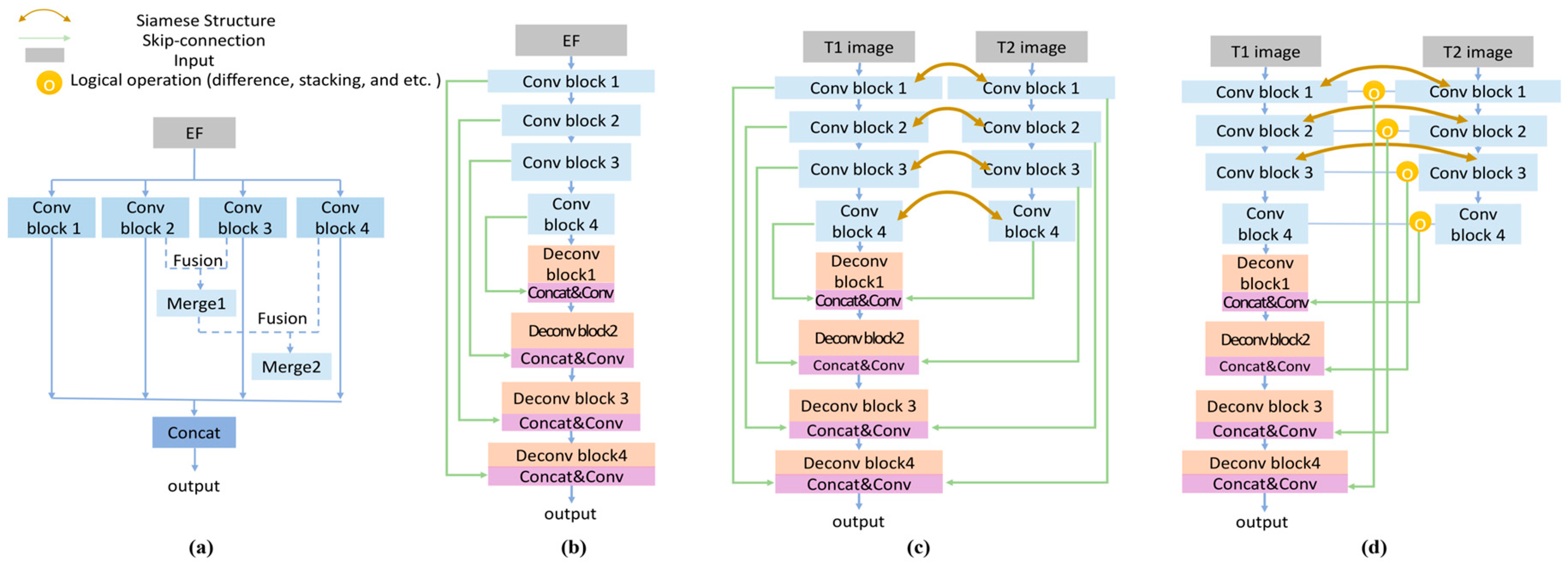

3.5.1. Naive DNN

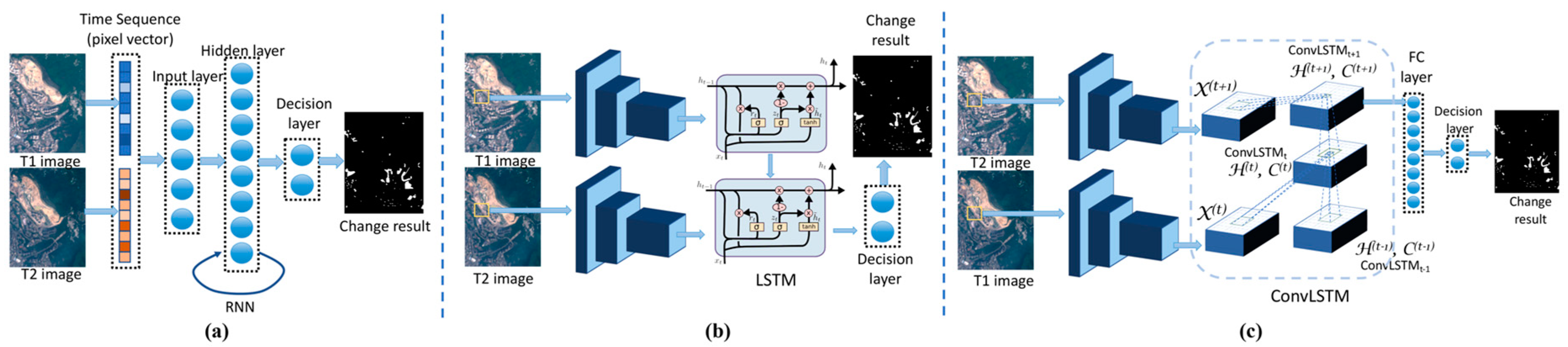

3.5.2. DNN for Spatio-Temporal Features

3.5.3. DNN for Feature Generation

- Noise interference: In addition to annotated SAR data [42], pre-processed difference data can also serve as the criterion for change generation in a GAN [53,158,159]. Experience shows that a GAN can recover the real scene distribution from noisy input. Taking the conditional generative adversarial network (cGAN) as an example, Lebedev [51] proposed introducing artificial noise vectors into the discriminator and generator when generating the pseudo-change map, so that the final results are robust to environmental changes and other random conditions.

- Spectrum synthesis: Hyperspectral images contain informative bands; however, owing to the confusion and complexity of endmembers, little research fully utilizes all available bands. GAN nevertheless offers a good solution, for example by prompting researchers to group related wavebands into sets. After dividing the 13 spectral bands of Sentinel-2 into 4 spectral sets, the quadruple parallel auxiliary classifier GAN transforms each set into a new feature layer and then performs variance-based discrimination on the four generated layers to emphasize change [160].

- Heterogeneous processing: Owing to differences in imaging time, atmospheric conditions, and sensors, heterogeneous images cannot be modeled directly. Researchers have demonstrated that, with the aid of cGAN, non-uniform SAR and optical images can be transformed directly into new optical-SAR noise-enhanced images [161], or multi-temporal images can be compared indirectly with generated cross-domain images [162]. As another indirect way to achieve a consistent observation domain, GAN-based image translation converts one type of image into another, making heterogeneous images homogeneous [121].

3.6. Summary

4. Data Fusion of Multi-Source Data

4.1. Fusion between RS Images

- Fusion between basic RS images: As introduced in Section 2, the grayscale information contained in the multiple bands of multispectral optical images facilitates the identification of features and categories. However, the varying shooting angles of optical sensors produce varied shadows attached to urban targets in optical images, which are an inevitable source of interference. On the other hand, despite their low signal-to-noise ratio, SAR images provide backscattering intensity information that other sensors cannot obtain; this information relates to the medium, water content, and roughness of targets. Currently, pixel algebra, RF regression [163], AE [164], and even NN [165] have proved effective for fusing features of multi-source images acquired in the same time phase. Not satisfied with the fusion capacity and the amount of fused data, researchers have attempted to refine fused features by repeated iteration; for example, Anisha [78] applies sparse coding to the initially fused data. Because of scattering noise in SAR images, other groups have instead turned to the decision level, for example fusing the independent detection results of multi-source images with Dempster–Shafer (D-S) theory [89].
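Decision-level fusion with D-S theory relies on Dempster's rule of combination. The sketch below applies the rule for a single pixel to two sources, for example an optical and a SAR detector, over the frame {changed, unchanged}. How each detector's output is converted into masses is an assumption left to the user, and the names `dempster_combine`, `C`, `U`, and `THETA` are hypothetical.

```python
from itertools import product

# Hypothetical names. Focal elements are frozensets over the frame {"C", "U"}
# (changed, unchanged); THETA = {"C", "U"} carries uncommitted mass (ignorance).
C, U = frozenset({"C"}), frozenset({"U"})
THETA = C | U


def dempster_combine(m1: dict, m2: dict):
    """Combine two mass functions with Dempster's rule.

    Returns (combined masses, conflict K), where K is the mass that the two
    sources assign to contradictory hypotheses.
    """
    combined: dict = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # empty intersection: contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Normalize by 1 - K to redistribute the conflicting mass.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}, conflict
```

With illustrative masses {C: 0.6, U: 0.1, THETA: 0.3} and {C: 0.5, U: 0.3, THETA: 0.2}, the conflict is K = 0.23 and the combined belief in "changed" rises to 0.57 / 0.77, about 0.74, higher than either source alone.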

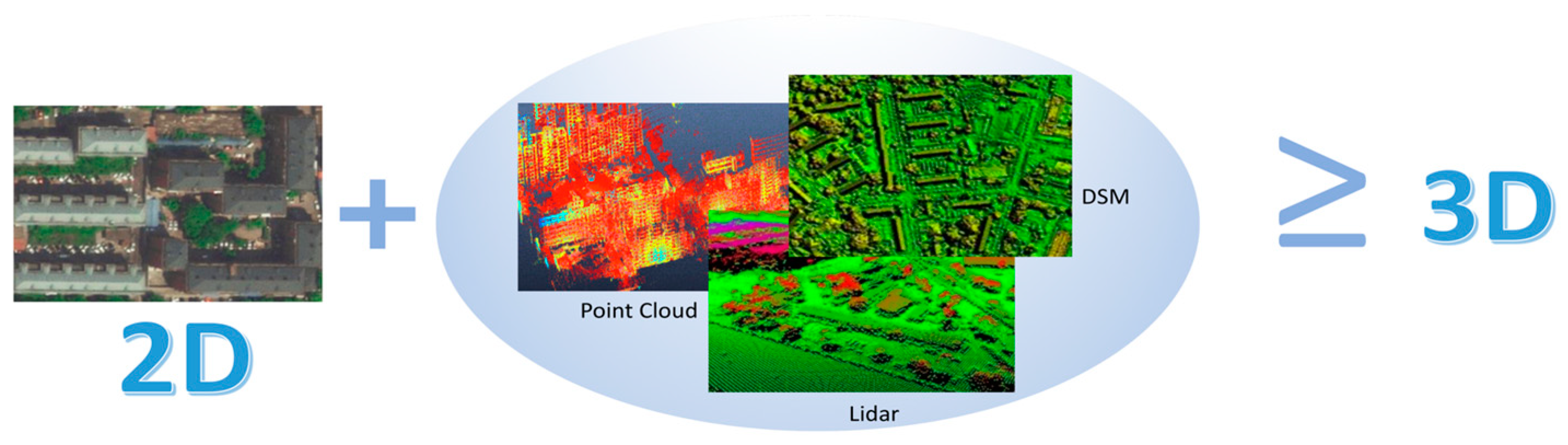

- Fusion between multi-dimensional images: Change detection on conventional two-dimensional (2D) images is susceptible to spectral variability and perspective distortion. Conversely, 3D Lidar data, for example, provide not only comprehensive 3D geometric information but also the roughness and material attributes of ground features. Given the complementary properties of Lidar and optical images, many scholars have studied their fusion [44]. Besides SAR images, 3D tomographic SAR images, which share similar disadvantages such as signal scattering and noise interference, can also reasonably be fused with 2D optical images. Fusion is feasible at the data level, for instance by directly fusing 2D and 3D data through feature transformation [166], or by fusing after converting 2D images into 3D with back-projection tomography [167]. Fusion is not limited to the data level; the decision level is also feasible, for instance fusing detection results from different dimensions according to the significance of targets, such as color and height [168]. In addition, registration remains unavoidable for multi-dimensional, multi-temporal images. Therefore, to eliminate the interference of incomplete registration, Qin [169] proposed that the final change should be determined not only by the fusion products but also by the results generated from the unfused data.

4.2. Fusion between Extracted Products and RS Images

- Fusion of extracted features and original images: On the one hand, enhancing the characteristics of changed objects is the most fundamental need for data fusion in change detection. At present, extracted information such as saliency maps [38] is advocated for emphasizing and indicating the existence of change. On the other hand, improving detection accuracy, including obtaining definite boundaries of changed objects, is even more critical. Considering both effects, Ma [94] proposed combining the probability maps obtained from a well-trained gcForest and mGMM with the original images or with gradient information extracted from the DI. Based on D-S theory, Wu [170] proposed incorporating the original images with the generated edge-derived line-density-based visual saliency (LDVS) feature and the texture-derived built-up presence index (PanTex) feature. In addition, if time efficiency is not a concern, fusing multiple similarity features, such as the grey-level co-occurrence matrix, Gaussian Markov random field, and Gabor features, can add spatial information and improve the accuracy of change detection [171].

- Fusion of extracted results: Multi-temporal RS images can be decomposed into several sub-datasets that emphasize abundant object characteristics and spatial behaviors of changing targets, such as their shapes and distances to the surrounding environment. Given this diversity of data utilization, the sub-results extracted from the sub-datasets can be fused, for example by separately analyzing combinations of certain bands in multispectral images [46], or by fusing the results extracted from multi-squint views, filtered from the poly-directional pulses of SAR images, with the single-look change result [172]. Similarly, not only the results of diverse data but also the results of multiple change detection methods can be fused [173].
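The grey-level co-occurrence matrix named among the fused similarity features can be sketched in a few lines of NumPy. This is a minimal illustration, assuming images are pre-quantized to a small number of grey levels and using a single non-negative pixel offset; the helper names are hypothetical, and this is not the specific fusion scheme of [171].

```python
import numpy as np


def glcm(q: np.ndarray, levels: int, dy: int = 0, dx: int = 1) -> np.ndarray:
    """Symmetric, normalized grey-level co-occurrence matrix (hypothetical helper).

    q: 2D integer image already quantized to values in [0, levels).
    (dy, dx): non-negative offset of the co-occurring neighbor pixel.
    """
    h, w = q.shape
    ref = q[: h - dy, : w - dx].ravel()
    nbr = q[dy:, dx:].ravel()
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (ref, nbr), 1.0)  # count (reference, neighbor) grey-level pairs
    m = m + m.T                    # count each pair in both directions
    return m / m.sum()


def glcm_contrast(p: np.ndarray) -> float:
    """Haralick contrast: sum over i, j of p(i, j) * (i - j)^2."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))
```

For a simple texture-change feature, `glcm_contrast` can be computed on the same window in both dates and the absolute difference taken; other Haralick statistics such as homogeneity, energy, and entropy follow the same pattern.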

4.3. Fusion of Characteristics of Geographic Entity and RS Images

- Wide-area changes: A digital elevation model (DEM) describes the spatial distribution of landforms with data collected from contour lines, while point clouds and digital terrain models (DTM) contain attribute information on other topographic details beyond elevation, such as slope and aspect. For analyzing wide-area urban changes, these data can be incorporated into RS images [175]. In fact, 3D point cloud and DEM information can even be transformed directly into 3D RS images [131,176]. Moreover, because DTM and point cloud data are costly to acquire and label, Chen [24] proposed reconstructing 2D images obtained from unmanned aerial vehicles into 3D RGB-D maps to achieve the same effect. Besides the spatial dimension, the temporal dimension also deserves consideration. For example, Khandelwal [157] proposed projecting the extracted seasonal image features into the temporal features of the corresponding land cover, unifying the semantic information extracted from image characteristics with the semantic information deduced from the time dimension.

- Small-scale changes: For urban subjects such as buildings, their existence and construction state are reflected in height changes in RS data. Unlike the DEM, the digital surface model (DSM) accurately models the shapes of existing targets above the terrain, giving the most realistic representation of ground relief. Combining the height changes derived from DSMs with the texture differences extracted from RS images has been found to remove the ambiguity of conventional 2D change extraction, and even to help observe the demolition and construction of existing buildings [177,178].
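The idea of combining DSM height change with image texture difference can be illustrated by a simple decision rule. The sketch below is a hypothetical rule-based fusion, not the method of [177,178]: a pixel is flagged only when both cues agree, and the sign of the height change separates construction from demolition. The function name and the threshold values (2.5 m, 0.3) are placeholders chosen for illustration.

```python
import numpy as np


def fuse_height_and_texture(dsm1, dsm2, tex_diff, h_thr=2.5, t_thr=0.3):
    """Rule-based fusion of DSM height change with a texture difference.

    Hypothetical helper. dsm1, dsm2: co-registered DSMs in metres;
    tex_diff: per-pixel texture or spectral difference scaled to [0, 1].
    Thresholds are illustrative placeholders, not recommended values.
    Returns an int8 map: 1 = construction (height gain),
    -1 = demolition (height loss), 0 = no change.
    """
    dh = np.asarray(dsm2, dtype=float) - np.asarray(dsm1, dtype=float)
    textured = np.asarray(tex_diff) > t_thr  # image evidence of change
    out = np.zeros(dh.shape, dtype=np.int8)
    # Require both cues so that neither DSM noise alone nor illumination
    # changes alone produce a detection.
    out[(dh > h_thr) & textured] = 1
    out[(dh < -h_thr) & textured] = -1
    return out
```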

4.4. Fusion of Other Information and RS Images

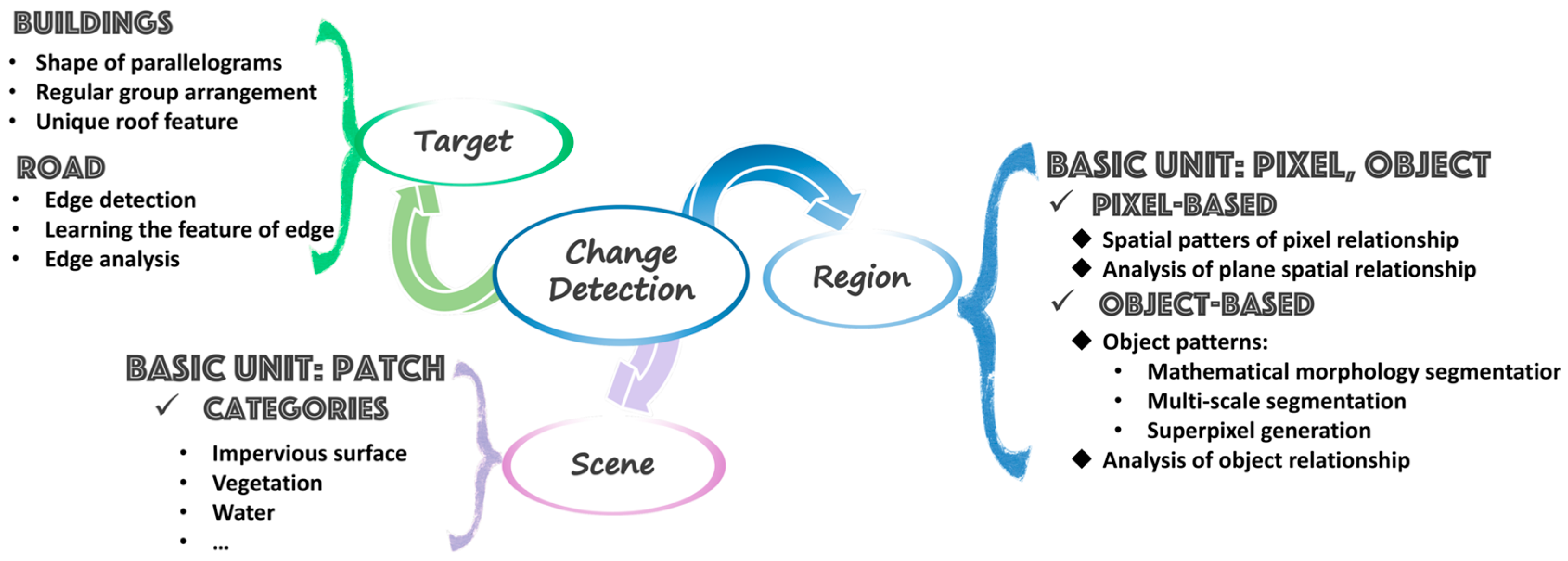

5. Analysis of Multi-Objective Scenarios

5.1. Change Detection Methods for Scene-Level

5.2. Change Detection Methods for Region-Level

5.2.1. Pixel-Based Change Detection

- Spatial patterns of pixel relationships: Regardless of the RS image category, the relationship between adjacent pixels within the same image (layer) receives the most attention, as shown in panel (a) of Figure 20 (the relevant methods are described in the next part). Nevertheless, most RS images, such as RGB multispectral images, consist of several spectral intensity images. Analysis is generally carried out layer by layer [36,134], and the final result is obtained by synthesizing information across layers. However, this ignores the correlation across spectral bands, shown in panel (b) of Figure 20. Recognizing its importance, 3D convolution [14] and the pseudo cross multivariate variogram (PCMV) [183] have been proposed to quantify and standardize the spatio-temporal correlation of multiple spectra. For change detection, besides the internal correlation of the same image, the correlation of pixels between multi-temporal images is indispensable, as shown in panel (c) of Figure 20. Interestingly, not only the correlation between pixels at the same position [8] but also the correlation between pixels at different positions can measure change. For example, Wang [184] proposed connecting the local maximum pixels extracted by stereo graph cuts (SGC) to implicitly measure the pixel difference through an energy function. Many scholars have recognized the decisive effect of these spatial patterns; the most incisive example is the hyperspectral mixed-affinity matrix [15], shown in Figure 21. For each pixel in the multi-temporal images, it converts two one-dimensional pixel vectors into a two-dimensional matrix, mining cross-band gradient features through linear and nonlinear mapping among m endmembers.

- Analysis of plane spatial relationships: It is widely recognized that exploring the spatial relationships of pixels within the same layer (the plane spatial relationship) improves the perception of central pixels and can even eliminate noise interference by exploiting the latent neighborhood relationship. The first step is to determine which sets of pixels should be associated. Besides fixed-size windows [172], feasible basic processing units include adaptive regions obtained by clustering based on spectral correlation [107] or density correlation [185], and iterative neighborhoods around high-confidence changed or unchanged pixels [77]. Extracting the correlation of pixels within a unit is another challenge. In early algebraic methods, the log-likelihood ratio (LLR) was used to represent the difference between adjacent pixels, while the log-ratio (LR), mean-ratio (MR), and even log-mean-ratio (LMR) values [186] indicate the difference at a single pixel. Practice has shown that using the LLR as weights in a weighted vote of the central pixel with single-pixel algebraic indicators is effective [103]. Similarly to the LLR, neighborhood intensity features can also contribute to the center through a Gaussian-weighted Euclidean distance [127]. In addition, misclassified pixels can be corrected through a reasonable analysis of pixel correlation; for instance, center points with high credibility can be used to verify and correct neighborhood features. To some extent, this meets the need for accurate boundaries and complete results without hole noise. For example, in [187], to distinguish real from pseudo changes, a background dictionary of local neighborhood pixels is constructed through the joint sparse representation of random confidence center points. The aim is to perform a secondary representation of the unchanged regions and then correct, in real time, the pixels whose representations are inconsistent.
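The log-ratio (LR) and mean-ratio (MR) operators mentioned above are commonly defined for SAR intensity images as LR = |log(X2 / X1)| at a single pixel and MR = 1 - min(m1 / m2, m2 / m1), where m1 and m2 are local means around the pixel. The sketch below uses these textbook forms with a square window and a small `eps` to avoid division by zero; the window radius, `eps`, and helper names are assumptions, and the LLR-weighted voting of [103] is not reproduced.

```python
import numpy as np


def local_mean(img: np.ndarray, radius: int = 1) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window with edge padding (hypothetical helper)."""
    padded = np.pad(img.astype(float), radius, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            acc += padded[dy:dy + h, dx:dx + w]  # add each shifted copy
    return acc / (2 * radius + 1) ** 2


def log_ratio(x1, x2, eps=1e-6):
    """Single-pixel log-ratio difference |log((x2 + eps) / (x1 + eps))|."""
    return np.abs(np.log((x2 + eps) / (x1 + eps)))


def mean_ratio(x1, x2, radius=1, eps=1e-6):
    """Mean-ratio operator 1 - min(m1/m2, m2/m1) computed on local means."""
    m1 = local_mean(x1, radius) + eps
    m2 = local_mean(x2, radius) + eps
    return 1.0 - np.minimum(m1 / m2, m2 / m1)
```

Because MR averages over a neighborhood before taking the ratio, it is less sensitive to isolated speckle than the single-pixel LR, at the cost of some blurring near change boundaries.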

5.2.2. Object-Based Change Detection

- Object patterns: Multi-scale objects can be obtained straightforwardly by the watershed transform [61] or by iterative region growing from random seed pixels [65]. Refining the watershed transform, Xing [86] combines the scale-invariant feature transform with maximally stable extremal regions (MSER) to obtain multi-scale connected regions. Following the idea of region growing, the fractal net evolution approach (FNEA) [36,66] merges pixels into objects with heterogeneous shapes within a user-defined scale range. Going beyond these mathematical morphology methods, segmentation techniques automatically acquire objects of diverse shapes and scales in a “global to local” refinement process. Representatively, multi-scale segmentation [100,170,188,189] merges pixels or existing objects into a series of homogeneous objects according to scale, color gradient weight, or morphological compactness weight. In addition, superpixel generation [120,122], which produces homogeneous, highly compact, and tightly distributed objects that adhere to the boundaries of image contents, achieves effects similar to segmentation. At present, superpixels are usually generated by the simple linear iterative clustering (SLIC) algorithm [81,113,178]. Multi-level object generation, in turn, increases the granularity of the generated objects layer by layer, which benefits feature extraction for multi-scale changing targets. Notably, all of the above methods can generate objects at multiple scale levels, and the combined results of multi-scale generation methods with different performance can also be treated as a multi-level object set [190].

- Analysis of object relationships: The relationship patterns of objects are similar to those of pixel-based methods; here we take only the association of adjacent multi-scale objects as an example, as shown in panel (a) of Figure 22. Zheng [60] proposed determining the properties of a central object through a weighted vote on the change results of its surrounding objects. Facing diverse RS change scenarios, and to prevent the scales of multi-scale objects from always falling within a single interval, it is necessary to consider the relationships among objects generated at multiple scale levels. Applying a majority voting rule with object features on multi-level object layers remains advisable [90]. In addition, unlike multi-scale object generation on a single image, in change detection the objects generated from multi-temporal images are often completely different. To avoid this problem, all time phases (images) can be stacked into a single layer and segmented synchronously [17], as in panel (d) of Figure 22. However, not only are the spectral, texture, spatial, and other change features extracted from object pairs informative, but the morphological differences of objects across time phases also directly indicate the occurrence of change [13]. Therefore, to exploit this advantage, scholars have proposed assigning [190] and overlaying [191] strategies to address the diverse challenges of multi-temporal objects, as shown in panels (e) and (f) of Figure 22. Experiments demonstrate that it is worthwhile to consider multi-level theory and morphological characteristics jointly; in other words, for multi-level results, richer fused information yields more accurate results, as shown in panel (c) of Figure 22.
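A basic form of the object-level voting discussed above lets each segmented object (for instance, a SLIC superpixel or a multi-scale segment) take the majority label of a pixel-level change map. The sketch assumes the segmentation has been computed beforehand, e.g., on the stacked bi-temporal images; the function name and the 0.5 threshold are hypothetical, and the weighted vote over surrounding objects in [60] would additionally need an object adjacency graph.

```python
import numpy as np


def object_majority_vote(labels: np.ndarray, change: np.ndarray,
                         min_frac: float = 0.5) -> np.ndarray:
    """Assign each object the majority change label of its pixels.

    Hypothetical helper. labels: 2D array of non-negative integer object IDs;
    change: 2D binary pixel-level change map of the same shape.
    An object is marked changed when more than `min_frac` of its pixels changed.
    """
    ids = labels.ravel()
    n_changed = np.bincount(ids, weights=change.ravel().astype(float))
    n_total = np.bincount(ids)
    # Fraction of changed pixels per object ID (IDs with no pixels get 0).
    frac = np.divide(n_changed, n_total,
                     out=np.zeros_like(n_changed), where=n_total > 0)
    return (frac[labels] > min_frac).astype(np.uint8)
```

Running the same vote on several segmentation levels and then voting again across levels gives a simple multi-level variant.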

5.3. Change Detection Methods for Target-Level

5.3.1. Building Change Detection

- From a bird’s-eye view, building roofs are mostly parallelograms or combinations of parallelograms. Three strategies exploit this shape prior: (i) Generate only objects of the corresponding shape, for example obtaining rectangular outputs with object detection [151]. (ii) Exclude objects with inconsistent shapes. Extended multi-attribute profiles (EMAP) are applied in [192], providing a set of morphological filters with different thresholds to screen out erroneous objects. Pang [178] proposed further refining the generated rectangle-like superpixels with a CNN-based semantic segmentation method. (iii) Optimize the extracted features or generated results toward rectangular shapes, or enhance the representation of boundaries. Besides the statistics-based histogram of oriented gradients (HOG) and local binary pattern (LBP) [82] for extracting linear information, Gong [193] integrated corner and edge information through a phase congruency model and optimized the boundary with a conditional random field (CRF). Wu [194] proposed extracting the edge probability map of the generated self-region and optimizing the map with vectorized morphology. A distinctive approach appears in [177]: it adjusts the integration of the generated change results through the attraction effect of the firefly algorithm, and uses ant colony optimization to find the shortest path between corner pixels along the rectangular direction.

- Buildings generally appear in groups with regular arrangements. To address the low separability between buildings and other classes, [85] proposed focusing on the neighborhood relationship between training samples and other objects with the same label, that is, considering the arrangement pattern of buildings in the image. Relationship learning (RL) and distance adjustment are then used to improve the ability to distinguish changing features.

- Unique roof features and building shadows provide auxiliary information for change detection. In some cases, roof features are hard to learn, especially when the targets occupy a small proportion of the dataset. In such cases, the binary cross-entropy loss can be replaced with a categorical cross-entropy loss to emphasize the attribute differences between buildings and other categories [127].
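Among the hand-crafted descriptors listed for building boundaries, the local binary pattern (LBP) is simple enough to sketch directly. The version below is the basic 3 × 3, 8-neighbor LBP in NumPy, an assumption-level illustration rather than the exact configuration used in [82]; the helper name is hypothetical. In practice, histograms of the codes over windows or objects serve as texture features for roof-like regions.

```python
import numpy as np

# Offsets of the 8 neighbors, clockwise from the top-left pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_3x3(img: np.ndarray) -> np.ndarray:
    """Basic 8-neighbor local binary pattern codes (hypothetical helper).

    Each neighbor >= center sets one bit; the result covers interior pixels
    only, has shape (H-2, W-2), and takes values in [0, 255].
    """
    img = img.astype(float)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int32)
    for bit, (dy, dx) in enumerate(_OFFSETS):
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]  # shifted view
        codes |= (neighbor >= center).astype(np.int32) << bit
    return codes
```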

5.3.2. Road Change Detection

5.4. Summary

6. Conclusions and Future Trends

- Heterogeneous data. Whether in spectral response or electromagnetic scattering, the consistency of multi-temporal data is necessary but difficult to maintain for the change detection task. Data inconsistency affects the detection of homogeneous images and disturbs heterogeneous images even more. In the future, more attention should be paid to resolving the heterogeneity of multi-temporal images within end-to-end systems, for example through feature comparison based on key-point mapping.

- Multi-resolution images. To obtain multi-temporal images with shorter temporal intervals, in practice, images of the same location acquired by sensors with different resolutions have to be used. However, few studies have explored change analysis between multi-scale or multi-resolution images.

- Global information of high-resolution and large-scale images. Owing to limited computing memory and time, high-resolution, large-scale images are usually cut into patches that are then fed into the model in random order. Even if a certain overlap rate is guaranteed during image slicing and patch stitching, the model may predict significant pseudo-change regions within wide unchanged areas, because only the local features of each patch are used in each prediction and the interrelations between patches are ignored. It is therefore worthwhile to set a global correlation criterion for all patches according to their positional relationships, or to attend to both global and local context with a pyramid model during image processing.

- Comprehensive knowledge base for change detection. Given the diversity of data sources and requirements, constructing a change detection knowledge base, namely a comprehensive change interpreter, can improve the generalization ability of the model. In the future, scholars should therefore try to disassemble changing features into a pixel algebra layer, feature statistics layer, visual primitive layer, object layer, scene layer, change explanation layer, and multivariate data synthesis layer. Profitable knowledge can then be extracted from unknown inputs by integrating the logical mechanisms of each layer.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- White, L.; Brisco, B.; Dabboor, M.; Schmitt, A.; Pratt, A. A Collection of SAR Methodologies for Monitoring Wetlands. Remote Sens. 2015, 7, 7615–7645.

- Muro, J.; Canty, M.; Conradsen, K.; Hüttich, C.; Nielsen, A.; Skriver, H.; Remy, F.; Strauch, A.; Thonfeld, F.; Menz, G. Short-Term Change Detection in Wetlands Using Sentinel-1 Time Series. Remote Sens. 2016, 8, 795.

- Bioresita, F.; Puissant, A.; Stumpf, A.; Malet, J.-P. A Method for Automatic and Rapid Mapping of Water Surfaces from Sentinel-1 Imagery. Remote Sens. 2018, 10, 217.

- Huang, F.; Chen, L.; Yin, K.; Huang, J.; Gui, L. Object-oriented change detection and damage assessment using high-resolution remote sensing images, Tangjiao Landslide, Three Gorges Reservoir, China. Environ. Earth Sci. 2018, 77, 183.

- Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-Based Change Detection in Urban Areas: The Effects of Segmentation Strategy, Scale, and Feature Space on Unsupervised Methods. Remote Sens. 2016, 8, 761.

- Wen, D.; Huang, X.; Zhang, L.; Benediktsson, J.A. A Novel Automatic Change Detection Method for Urban High-Resolution Remotely Sensed Imagery Based on Multiindex Scene Representation. IEEE Trans. Geosci. Remote Sens. 2015, 54, 609–625.

- Liu, W.; Yang, J.; Zhao, J.; Yang, L. A Novel Method of Unsupervised Change Detection Using Multi-Temporal PolSAR Images. Remote Sens. 2017, 9, 1135.

- Hou, B.; Wang, Y.; Liu, Q. A Saliency Guided Semi-Supervised Building Change Detection Method for High Resolution Remote Sensing Images. Sensors 2016, 16, 1377.

- Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Mura, M.D. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149.

- Sumaiya, M.N.; Shantha Selva Kumari, R. Gabor filter based change detection in SAR images by KI thresholding. Optik 2017, 130, 114–122.

- Shang, R.; Yuan, Y.; Jiao, L.; Meng, Y.; Ghalamzan, A.M. A self-paced learning algorithm for change detection in synthetic aperture radar images. Signal Process. 2018, 142, 375–387.

- Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-Based Difference Representation Learning for Change Detection in Multispectral Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673.

- Song, A.; Choi, J.; Han, Y.; Kim, Y. Change detection in hyperspectral images using recurrent 3D fully convolutional networks. Remote Sens. 2018, 10.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

- Zhang, X.; Xiao, P.; Feng, X.; Yuan, M. Separate segmentation of multi-temporal high-resolution remote sensing images for object-based change detection in urban area. Remote Sens. Environ. 2017, 201, 243–255.

- Zhou, Z.; Ma, L.; Fu, T.; Zhang, G.; Yao, M.; Li, M. Change Detection in Coral Reef Environment Using High-Resolution Images: Comparison of Object-Based and Pixel-Based Paradigms. ISPRS Int. J. Geo-Inf. 2018, 7, 441.

- Wan, X.; Liu, J.; Li, S.; Dawson, J.; Yan, H. An Illumination-Invariant Change Detection Method Based on Disparity Saliency Map for Multitemporal Optical Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1311–1324.

- Dai, X.; Khorram, S. The effects of image misregistration on the accuracy of remotely sensed change detection. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1566–1577.

- Yu, L.; Zhang, D.; Holden, E.-J. A fast and fully automatic registration approach based on point features for multi-source remote-sensing images. Comput. Geosci. 2008, 34, 838–848.

- Fortun, D.; Bouthemy, P.; Kervrann, C. Optical flow modeling and computation: A survey. Comput. Vis. Image Underst. 2015, 134, 1–21.

- Song, F.; Yang, Z.; Gao, X.; Dan, T.; Yang, Y.; Zhao, W.; Yu, R. Multi-Scale Feature Based Land Cover Change Detection in Mountainous Terrain Using Multi-Temporal and Multi-Sensor Remote Sensing Images. IEEE Access 2018, 6, 77494–77508.

- Gong, M.; Zhao, S.; Jiao, L.; Tian, D.; Wang, S. A Novel Coarse-to-Fine Scheme for Automatic Image Registration Based on SIFT and Mutual Information. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4328–4338.

- Chen, B.; Chen, Z.; Deng, L.; Duan, Y.; Zhou, J. Building change detection with RGB-D map generated from UAV images. Neurocomputing 2016, 208, 350–364.

- Yu, L.; Wang, Y.; Wu, Y.; Jia, Y. Deep Stereo Matching with Explicit Cost Aggregation Sub-Architecture. arXiv 2018, arXiv:1801.04065.

- Zhang, P.; Gong, M.; Su, L.; Liu, J.; Li, Z. Change detection based on deep feature representation and mapping transformation for multi-spatial-resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 116, 24–41.

- Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Wang, R.; Chen, J.-W.; Jiao, L.; Wang, M. How Can Despeckling and Structural Features Benefit to Change Detection on Bitemporal SAR Images? Remote Sens. 2019, 11, 421.

- Li, M.; Li, M.; Zhang, P.; Wu, Y.; Song, W.; An, L. SAR Image Change Detection Using PCANet Guided by Saliency Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 402–406.

- Solano-Correa, Y.; Bovolo, F.; Bruzzone, L. An Approach for Unsupervised Change Detection in Multitemporal VHR Images Acquired by Different Multispectral Sensors. Remote Sens. 2018, 10, 533.

- Ye, S.; Chen, D.; Yu, J. A targeted change-detection procedure by combining change vector analysis and post-classification approach. ISPRS J. Photogramm. Remote Sens. 2016, 114, 115–124.

- Yan, W.; Shi, S.; Pan, L.; Zhang, G.; Wang, L. Unsupervised change detection in SAR images based on frequency difference and a modified fuzzy c-means clustering. Int. J. Remote Sens. 2018, 39, 3055–3075.

- Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change Detection in Synthetic Aperture Radar Images Based on Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change Detection Based on Deep Siamese Convolutional Network for Optical Aerial Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

- Lyu, H.; Lu, H.; Mou, L. Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection. Remote Sens. 2016, 8, 506.

- Tan, K.; Zhang, Y.; Wang, X.; Chen, Y. Object-Based Change Detection Using Multiple Classifiers and Multi-Scale Uncertainty Analysis. Remote Sens. 2019, 11, 359.

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

- Wang, B.; Choi, J.; Choi, S.; Lee, S.; Wu, P.; Gao, Y. Image Fusion-Based Land Cover Change Detection Using Multi-Temporal High-Resolution Satellite Images. Remote Sens. 2017, 9, 804.

- Tian, J.; Cui, S.; Reinartz, P. Building Change Detection Based on Satellite Stereo Imagery and Digital Surface Models. IEEE Trans. Geosci. Remote Sens. 2014, 52, 406–417.

- Gong, M.; Zhang, P.; Su, L.; Liu, J. Coupled Dictionary Learning for Change Detection from Multisource Data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7077–7091.

- Bamler, R.; Hartl, P. Synthetic aperture radar interferometry. Inverse Probl. 1998, 14, R1.

- Wang, X.; Jia, Z.; Yang, J.; Kasabov, N. Change detection in SAR images based on the logarithmic transformation and total variation denoising method. Remote Sens. Lett. 2017, 8, 214–223.

- Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic Change Detection in Synthetic Aperture Radar Images Based on PCANet. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1792–1796.

- Liu, J.; Gong, M.; Qin, K.; Zhang, P. A Deep Convolutional Coupling Network for Change Detection Based on Heterogeneous Optical and Radar Images. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 545–559.

- Dwyer, J.L.; Sayler, K.L.; Zylstra, G.J. Landsat Pathfinder data sets for landscape change analysis. In Proceedings of the 1996 International Geoscience and Remote Sensing Symposium (IGARSS ’96), Lincoln, NE, USA, 31 May 1996; Volume 1, pp. 547–550.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks; IEEE: Valencia, Spain, 2018; pp. 2115–2118.

- Wu, C.; Zhang, L.; Du, B. Kernel Slow Feature Analysis for Scene Change Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2367–2384.

- Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

- Yin, S.; Qian, Y.; Gong, M. Unsupervised hierarchical image segmentation through fuzzy entropy maximization. Pattern Recognit. 2017, 68, 245–259.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

- Lebedev, M.A.; Vizilter, Y.V.; Vygolov, O.V.; Knyaz, V.A.; Rubis, A.Y. Change Detection in Remote Sensing Images Using Conditional Adversarial Networks. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII–2, 565–571.

- Benedek, C.; Sziranyi, T. Change Detection in Optical Aerial Images by a Multilayer Conditional Mixed Markov Model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430.

- Chen, Y.; Ouyang, X.; Agam, G. ChangeNet: Learning to Detect Changes in Satellite Images; ACM Press: Chicago, IL, USA, 2019; pp. 24–31.

- López-Fandiño, J.; Garea, A.S.; Heras, D.B.; Argüello, F. Stacked Autoencoders for Multiclass Change Detection in Hyperspectral Images; IEEE: Valencia, Spain, 2018; pp. 1906–1909.

- Liu, G.; Gousseau, Y.; Tupin, F. A Contrario Comparison of Local Descriptors for Change Detection in Very High Spatial Resolution Satellite Images of Urban Areas. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3904–3918.

- Quan, S.; Xiong, B.; Xiang, D.; Zhao, L.; Zhang, S.; Kuang, G. Eigenvalue-Based Urban Area Extraction Using Polarimetric SAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 458–471.

- Bovolo, F.; Bruzzone, L. A Theoretical Framework for Unsupervised Change Detection Based on Change Vector Analysis in the Polar Domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236.

- Chen, Q.; Chen, Y. Multi-Feature Object-Based Change Detection Using Self-Adaptive Weight Change Vector Analysis. Remote Sens. 2016, 8, 549.

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

- Zheng, Z.; Cao, J.; Lv, Z.; Benediktsson, J.A. Spatial–Spectral Feature Fusion Coupled with Multi-Scale Segmentation Voting Decision for Detecting Land Cover Change with VHR Remote Sensing Images. Remote Sens. 2019, 11, 1903.

- Sun, H.; Zhou, W.; Zhang, Y.; Cai, C.; Chen, Q. Integrating spectral and textural attributes to measure magnitude in object-based change vector analysis. Int. J. Remote Sens. 2019, 40, 5749–5767.

- Tahraoui, A.; Kheddam, R.; Bouakache, A.; Belhadj-Aissa, A. Multivariate alteration detection and ChiMerge thresholding method for change detection in bitemporal and multispectral images. In Proceedings of the 2017 5th International Conference on Electrical Engineering-Boumerdes (ICEE-B), Boumerdes, Algeria, 29–31 October 2017; pp. 1–6.

- Du, B.; Wang, Y.; Wu, C.; Zhang, L. Unsupervised Scene Change Detection via Latent Dirichlet Allocation and Multivariate Alteration Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4676–4689.

- Das, M.; Ghosh, S.K. Measuring Moran’s I in a Cost-Efficient Manner to Describe a Land-Cover Change Pattern in Large-Scale Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2631–2639.

- Lv, Z.Y.; Liu, T.F.; Zhang, P.; Benediktsson, J.A.; Lei, T.; Zhang, X. Novel Adaptive Histogram Trend Similarity Approach for Land Cover Change Detection by Using Bitemporal Very-High-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 1–21.

- Lv, Z.; Liu, T.; Atli Benediktsson, J.; Lei, T.; Wan, Y. Multi-Scale Object Histogram Distance for LCCD Using Bi-Temporal Very-High-Resolution Remote Sensing Images. Remote Sens. 2018, 10, 1809.

- Byun, Y.; Han, D. Relative radiometric normalization of bitemporal very high-resolution satellite images for flood change detection. J. Appl. Remote Sens. 2018, 12, 026021.

- Sumaiya, M.N.; Shantha Selva Kumari, R. Unsupervised change detection of flood affected areas in SAR images using Rayleigh-based Bayesian thresholding. IET Radar Sonar Navig. 2018, 12, 515–522.

- Liu, W.; Yang, J.; Zhao, J.; Shi, H.; Yang, L. An Unsupervised Change Detection Method Using Time-Series of PolSAR Images from Radarsat-2 and GaoFen-3. Sensors 2018, 18, 559.

- Wu, T.; Luo, J.; Fang, J.; Ma, J.; Song, X. Unsupervised Object-Based Change Detection via a Weibull Mixture Model-Based Binarization for High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 63–67.

- Liu, J.; Li, P. Extraction of Earthquake-Induced Collapsed Buildings from Bi-Temporal VHR Images Using Object-Level Homogeneity Index and Histogram. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2755–2770.

- Deng, J.S.; Wang, K.; Deng, Y.H.; Qi, G.J. PCA-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

- Lou, X.; Jia, Z.; Yang, J.; Kasabov, N. Change Detection in SAR Images Based on the ROF Model Semi-Implicit Denoising Method. Sensors 2019, 19, 1179.

- Ran, Q.; Li, W.; Du, Q. Kernel one-class weighted sparse representation classification for change detection. Remote Sens. Lett. 2018, 9, 597–606.

- Wang, C.; Zhang, Y.; Wang, X. Coarse-to-fine SAR image change detection method. Remote Sens. Lett. 2019, 10, 1153–1162.

- Padrón-Hidalgo, J.A.; Laparra, V.; Longbotham, N.; Camps-Valls, G. Kernel Anomalous Change Detection for Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7743–7755.

- Gao, F.; Liu, X.; Dong, J.; Zhong, G.; Jian, M. Change Detection in SAR Images Based on Deep Semi-NMF and SVD Networks. Remote Sens. 2017, 9, 435.

- Lal, A.M.; Anouncia, S.M. Modernizing the multi-temporal multispectral remotely sensed image change detection for global maxima through binary particle swarm optimization. J. King Saud Univ.-Comput. Inf. Sci. 2018.

- Zhuang, H.; Fan, H.; Deng, K.; Yu, Y. An improved neighborhood-based ratio approach for change detection in SAR images. Eur. J. Remote Sens. 2018, 51, 723–738.

- Luo, B.; Hu, C.; Su, X.; Wang, Y. Differentially Deep Subspace Representation for Unsupervised Change Detection of SAR Images. Remote Sens. 2019, 11, 2740.

- Yang, S.; Liu, Z.; Gao, Q.; Gao, Y.; Feng, Z. Extreme Self-Paced Learning Machine for On-Orbit SAR Images Change Detection. IEEE Access 2019, 7, 116413–116423.

- Konstantinidis, D.; Stathaki, T.; Argyriou, V.; Grammalidis, N. Building Detection Using Enhanced HOG–LBP Features and Region Refinement Processes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 888–905.

- Lefebvre, A.; Corpetti, T. Monitoring the Morphological Transformation of Beijing Old City Using Remote Sensing Texture Analysis. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 539–548.

- Zakeri, F.; Huang, B.; Saradjian, M.R. Fusion of Change Vector Analysis in Posterior Probability Space and Postclassification Comparison for Change Detection from Multispectral Remote Sensing Data. Remote Sens. 2019, 11, 1511.

- Huo, C.; Chen, K.; Ding, K.; Zhou, Z.; Pan, C. Learning Relationship for Very High Resolution Image Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3384–3394.

- Xing, J.; Sieber, R.; Caelli, T. A scale-invariant change detection method for land use/cover change research. ISPRS J. Photogramm. Remote Sens. 2018, 141, 252–264.

- Azzouzi, S.A.; Vidal-Pantaleoni, A.; Bentounes, H.A. Desertification Monitoring in Biskra, Algeria, with Landsat Imagery by Means of Supervised Classification and Change Detection Methods. IEEE Access 2017, 5, 9065–9072.

- Im, J.; Jensen, J. A change detection model based on neighborhood correlation image analysis and decision tree classification. Remote Sens. Environ. 2005, 99, 326–340.

- Shao, Z.; Fu, H.; Fu, P.; Yin, L. Mapping Urban Impervious Surface by Fusing Optical and SAR Data at the Decision Level. Remote Sens. 2016, 8, 945.

- Feng, W.; Sui, H.; Tu, J.; Huang, W.; Xu, C.; Sun, K. A Novel Change Detection Approach for Multi-Temporal High-Resolution Remote Sensing Images Based on Rotation Forest and Coarse-to-Fine Uncertainty Analyses. Remote Sens. 2018, 10, 1015.

- Zerrouki, N.; Harrou, F.; Sun, Y.; Hocini, L. A Machine Learning-Based Approach for Land Cover Change Detection Using Remote Sensing and Radiometric Measurements. IEEE Sens. J. 2019, 19, 5843–5850.

- Peng, B.; Meng, Z.; Huang, Q.; Wang, C. Patch Similarity Convolutional Neural Network for Urban Flood Extent Mapping Using Bi-Temporal Satellite Multispectral Imagery. Remote Sens. 2019, 11, 2492.

- Zhang, C.; Liu, C.; Zhang, X.; Almpanidis, G. An up-to-date comparison of state-of-the-art classification algorithms. Expert Syst. Appl. 2017, 82, 128–150.

- Ma, W.; Yang, H.; Wu, Y.; Xiong, Y.; Hu, T.; Jiao, L.; Hou, B. Change Detection Based on Multi-Grained Cascade Forest and Multi-Scale Fusion for SAR Images. Remote Sens. 2019, 11, 142.

- Panuju, D.R.; Paull, D.J.; Trisasongko, B.H. Combining Binary and Post-Classification Change Analysis of Augmented ALOS Backscatter for Identifying Subtle Land Cover Changes. Remote Sens. 2019, 11, 100.

- Krishna Moorthy, S.M.; Calders, K.; Vicari, M.B.; Verbeeck, H. Improved Supervised Learning-Based Approach for Leaf and Wood Classification from LiDAR Point Clouds of Forests. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3057–3070.

- Touazi, A.; Bouchaffra, D. A k-Nearest Neighbor approach to improve change detection from remote sensing: Application to optical aerial images. In Proceedings of the 2015 15th International Conference on Intelligent Systems Design and Applications (ISDA), Marrakech, Morocco, 14–16 December 2015; pp. 98–103.

- Ma, W.; Wu, Y.; Gong, M.; Xiong, Y.; Yang, H.; Hu, T. Change detection in SAR images based on matrix factorisation and a Bayes classifier. Int. J. Remote Sens. 2019, 40, 1066–1091.

- Tan, K.; Jin, X.; Plaza, A.; Wang, X.; Xiao, L.; Du, P. Automatic Change Detection in High-Resolution Remote Sensing Images by Using a Multiple Classifier System and Spectral–Spatial Features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3439–3451.

- Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-Based Change Detection in Urban Areas from High Spatial Resolution Images Based on Multiple Features and Ensemble Learning. Remote Sens. 2018, 10, 276.

- Liu, J.; Chen, K.; Xu, G.; Sun, X.; Yan, M.; Diao, W.; Han, H. Convolutional Neural Network-Based Transfer Learning for Optical Aerial Images Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 127–131.

- Liu, L.; Jia, Z.; Yang, J.; Kasabov, N.K. SAR Image Change Detection Based on Mathematical Morphology and the K-Means Clustering Algorithm. IEEE Access 2019, 7, 43970–43978.

- Cui, B.; Zhang, Y.; Yan, L.; Wei, J.; Wu, H. An Unsupervised SAR Change Detection Method Based on Stochastic Subspace Ensemble Learning. Remote Sens. 2019, 11, 1314.

- Li, Z.; Shi, W.; Zhang, H.; Hao, M. Change Detection Based on Gabor Wavelet Features for Very High Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 783–787.

- Zhang, W.; Lu, X.; Li, X. A Coarse-to-Fine Semi-Supervised Change Detection for Multispectral Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3587–3599.

- Liang, S.; Li, H.; Zhu, Y.; Gong, M. Detecting Positive and Negative Changes from SAR Images by an Evolutionary Multi-Objective Approach. IEEE Access 2019, 7, 63638–63649.

- Lv, Z.; Liu, T.; Shi, C.; Benediktsson, J.A.; Du, H. Novel Land Cover Change Detection Method Based on k-Means Clustering and Adaptive Majority Voting Using Bitemporal Remote Sensing Images. IEEE Access 2019, 7, 34425–34437.

- Zhang, P.; Gong, M.; Zhang, H.; Liu, J.; Ban, Y. Unsupervised Difference Representation Learning for Detecting Multiple Types of Changes in Multitemporal Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2277–2289.

- Yuan, J.; Lv, X.; Dou, F.; Yao, J. Change Analysis in Urban Areas Based on Statistical Features and Temporal Clustering Using TerraSAR-X Time-Series Images. Remote Sens. 2019, 11, 926.

- Sharma, A.; Gupta, R.K.; Tiwari, A. Improved Density Based Spatial Clustering of Applications of Noise Clustering Algorithm for Knowledge Discovery in Spatial Data. Math. Probl. Eng. 2016, 2016, 1–9.

- Fan, J.; Lin, K.; Han, M. A Novel Joint Change Detection Approach Based on Weight-Clustering Sparse Autoencoders. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 685–699.

- Marinelli, D.; Bovolo, F.; Bruzzone, L. A Novel Change Detection Method for Multitemporal Hyperspectral Images Based on Binary Hyperspectral Change Vectors. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4913–4928.

- Che, M.; Du, P.; Gamba, P. 2- and 3-D Urban Change Detection with Quad-PolSAR Data. IEEE Geosci. Remote Sens. Lett. 2018, 15, 68–72.

- Yang, G.; Li, H.-C.; Yang, W.; Fu, K.; Sun, Y.-J.; Emery, W.J. Unsupervised Change Detection of SAR Images Based on Variational Multivariate Gaussian Mixture Model and Shannon Entropy. IEEE Geosci. Remote Sens. Lett. 2019, 16, 826–830.

- Erbek, F.S.; Özkan, C.; Taberner, M. Comparison of maximum likelihood classification method with supervised artificial neural network algorithms for land use activities. Int. J. Remote Sens. 2004, 25, 1733–1748.

- Multispectral image change detection with kernel cross-modal factor analysis-based fusion of kernels. J. Appl. Remote Sens. 2018, 12, 1.

- Du, B.; Ru, L.; Wu, C.; Zhang, L. Unsupervised Deep Slow Feature Analysis for Change Detection in Multi-Temporal Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9976–9992.

- Cao, G.; Wang, B.; Xavier, H.-C.; Yang, D.; Southworth, J. A new difference image creation method based on deep neural networks for change detection in remote-sensing images. Int. J. Remote Sens. 2017, 38, 7161–7175.

- Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Multitask Learning for Large-scale Semantic Change Detection. arXiv 2019, arXiv:1810.08452.

- Lv, N.; Chen, C.; Qiu, T.; Sangaiah, A.K. Deep Learning and Superpixel Feature Extraction Based on Contractive Autoencoder for Change Detection in SAR Images. IEEE Trans. Ind. Inform. 2018, 14, 5530–5538.

- Gong, M.; Niu, X.; Zhan, T.; Zhang, M. A coupling translation network for change detection in heterogeneous images. Int. J. Remote Sens. 2019, 40, 3647–3672.

- Lei, Y.; Liu, X.; Shi, J.; Lei, C.; Wang, J. Multiscale Superpixel Segmentation With Deep Features for Change Detection. IEEE Access 2019, 7, 36600–36616.

- Gao, Y.; Gao, F.; Dong, J.; Wang, S. Change Detection from Synthetic Aperture Radar Images Based on Channel Weighting-Based Deep Cascade Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4517–4529.

- Son, H.; Choi, H.; Seong, H.; Kim, C. Detection of construction workers under varying poses and changing background in image sequences via very deep residual networks. Autom. Constr. 2019, 99, 27–38.

- Xiao, R.; Cui, R.; Lin, M.; Chen, L.; Ni, Y.; Lin, X. SOMDNCD: Image Change Detection Based on Self-Organizing Maps and Deep Neural Networks. IEEE Access 2018, 6, 35915–35925.

- Liu, T.; Li, Y.; Cao, Y.; Shen, Q. Change detection in multitemporal synthetic aperture radar images using dual-channel convolutional neural network. J. Appl. Remote Sens. 2017, 11, 042615.

- Li, L.; Wang, C.; Zhang, H.; Zhang, B.; Wu, F. Urban Building Change Detection in SAR Images Using Combined Differential Image and Residual U-Net Network. Remote Sens. 2019, 11, 1091.

- Dong, H.; Ma, W.; Wu, Y.; Gong, M.; Jiao, L. Local Descriptor Learning for Change Detection in Synthetic Aperture Radar Images via Convolutional Neural Networks. IEEE Access 2019, 7, 15389–15403.

- Wiratama, W.; Lee, J.; Park, S.-E.; Sim, D. Dual-Dense Convolution Network for Change Detection of High-Resolution Panchromatic Imagery. Appl. Sci. 2018, 8, 1785.

- Zhang, W.; Lu, X. The Spectral-Spatial Joint Learning for Change Detection in Multispectral Imagery. Remote Sens. 2019, 11, 240.

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Detecting Building Changes between Airborne Laser Scanning and Photogrammetric Data. Remote Sens. 2019, 11, 2417.

- Caye Daudt, R.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. Proc.-Int. Conf. Image Process. ICIP 2018, 4063–4067.

- Nex, F.; Duarte, D.; Tonolo, F.G.; Kerle, N. Structural Building Damage Detection with Deep Learning: Assessment of a State-of-the-Art CNN in Operational Conditions. Remote Sens. 2019, 11, 2765.

- Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

- Wang, M.; Tan, K.; Jia, X.; Wang, X.; Chen, Y. A Deep Siamese Network with Hybrid Convolutional Feature Extraction Module for Change Detection Based on Multi-sensor Remote Sensing Images. Remote Sens. 2020, 12, 205.

- Zhang, M.; Xu, G.; Chen, K.; Yan, M.; Sun, X. Triplet-Based Semantic Relation Learning for Aerial Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 266–270.

- Seydi, S.T.; Hasanlou, M.; Amani, M. A New End-to-End Multi-Dimensional CNN Framework for Land Cover/Land Use Change Detection in Multi-Source Remote Sensing Datasets. Remote Sens. 2020, 12, 2010.

- Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225.

- Zhuang, H.; Deng, K.; Fan, H.; Ma, S. A novel approach based on structural information for change detection in SAR images. Int. J. Remote Sens. 2018, 39, 2341–2365.

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised Deep Noise Modeling for Hyperspectral Image Change Detection. Remote Sens. 2019, 11, 258.

- Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised Deep Change Vector Analysis for Multiple-Change Detection in VHR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3677–3693.

- Liu, R.; Kuffer, M.; Persello, C. The Temporal Dynamics of Slums Employing a CNN-Based Change Detection Approach. Remote Sens. 2019, 11, 2844.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. arXiv 2016, arXiv:1511.00561.

- De Jong, K.L.; Sergeevna Bosman, A. Unsupervised Change Detection in Satellite Images Using Convolutional Neural Networks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2016, arXiv:1512.02325.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Wang, Q.; Zhang, X.; Chen, G.; Dai, F.; Gong, Y.; Zhu, K. Change detection based on Faster R-CNN for high-resolution remote sensing images. Remote Sens. Lett. 2018, 9, 923–932.

- Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building Instance Change Detection from Large-Scale Aerial Images using Convolutional Neural Networks and Simulated Samples. Remote Sens. 2019, 11, 1343.

- Wu, C.; Du, B.; Zhang, L. Slow Feature Analysis for Change Detection in Multispectral Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2858–2874.

- Kong, Y.L.; Huang, Q.; Wang, C.; Chen, J.; Chen, J.; He, D. Long short-term memory neural networks for online disturbance detection in satellite image time series. Remote Sens. 2018, 10.

- Wu, H.; Prasad, S. Convolutional recurrent neural networks for hyperspectral data classification. Remote Sens. 2017, 9, 298.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning Spectral-Spatial-Temporal Features via a Recurrent Convolutional Neural Network for Change Detection in Multispectral Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 924–935.

- Jia, X.; Khandelwal, A.; Nayak, G.; Gerber, J.; Carlson, K.; West, P.; Kumar, V. Incremental Dual-Memory LSTM in Land Cover Prediction; ACM Press: New York, NY, USA, 2017; pp. 867–876.

- Gong, M.; Niu, X.; Zhang, P.; Li, Z. Generative Adversarial Networks for Change Detection in Multispectral Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2310–2314.

- Saha, S.; Bovolo, F.; Bruzzone, L. Building Change Detection in VHR SAR Images via Unsupervised Deep Transcoding. IEEE Trans. Geosci. Remote Sens. 2020, 1–13.

- Saha, S.; Solano-Correa, Y.T.; Bovolo, F.; Bruzzone, L. Unsupervised deep learning based change detection in Sentinel-2 images. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019; pp. 1–4.

- Niu, X.; Gong, M.; Zhan, T.; Yang, Y. A Conditional Adversarial Network for Change Detection in Heterogeneous Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 45–49.

- Fang, B.; Pan, L.; Kou, R. Dual Learning-Based Siamese Framework for Change Detection Using Bi-Temporal VHR Optical Remote Sensing Images. Remote Sens. 2019, 11, 1292.

- Seo, D.K.; Kim, Y.H.; Eo, Y.D.; Lee, M.H.; Park, W.Y. Fusion of SAR and Multispectral Images Using Random Forest Regression for Change Detection. ISPRS Int. J. Geo-Inf. 2018, 7, 401.

- Farahani, M.; Mohammadzadeh, A. Domain adaptation for unsupervised change detection of multisensor multitemporal remote-sensing images. Int. J. Remote Sens. 2020, 41, 3902–3923.

- Benedetti, A.; Picchiani, M.; Del Frate, F. Sentinel-1 and Sentinel-2 Data Fusion for Urban Change Detection. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1962–1965.

- Azzouzi, S.A.; Vidal-Pantaleoni, A.; Bentounes, H.A. Monitoring Desertification in Biskra, Algeria Using Landsat 8 and Sentinel-1A Images. IEEE Access 2018, 6, 30844–30854.

- Méndez Domínguez, E.; Magnard, C.; Meier, E.; Small, D.; Schaepman, M.E.; Henke, D. A Back-Projection Tomographic Framework for VHR SAR Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4470–4484.

- Méndez Domínguez, E.; Small, D.; Henke, D. Synthetic Aperture Radar Tomography for Change Detection Applications. In Proceedings of the 2019 Joint Urban Remote Sensing Event (JURSE), Vannes, France, 22–24 May 2019; pp. 1–4.

- Qin, R.; Huang, X.; Gruen, A.; Schmitt, G. Object-Based 3-D Building Change Detection on Multitemporal Stereo Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2125–2137.

- Wu, T.; Luo, J.; Zhou, X.; Ma, J.; Song, X. Automatic newly increased built-up area extraction from high-resolution remote sensing images using line-density-based visual saliency and PanTex. J. Appl. Remote Sens. 2018, 12, 015016.

- Hao, M.; Shi, W.; Ye, Y.; Zhang, H.; Deng, K. A novel change detection approach for VHR remote sensing images by integrating multi-scale features. Int. J. Remote Sens. 2019, 40, 4910–4933.

- Méndez Domínguez, E.; Meier, E.; Small, D.; Schaepman, M.E.; Bruzzone, L.; Henke, D. A Multisquint Framework for Change Detection in High-Resolution Multitemporal SAR Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3611–3623.

- Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban Change Detection Based on Dempster–Shafer Theory for Multitemporal Very High-Resolution Imagery. Remote Sens. 2018, 10, 980.

- Tamkuan, N.; Nagai, M. Fusion of Multi-Temporal Interferometric Coherence and Optical Image Data for the 2016 Kumamoto Earthquake Damage Assessment. ISPRS Int. J. Geo-Inf. 2017, 6, 188.

- Iino, S.; Ito, R.; Doi, K.; Imaizumi, T.; Hikosaka, S. CNN-based generation of high-accuracy urban distribution maps utilising SAR satellite imagery for short-term change monitoring. Int. J. Image Data Fusion 2018, 9, 302–318.

- Joining multi-epoch archival aerial images in a single SfM block allows 3-D change detection with almost exclusively image information. ISPRS J. Photogramm. Remote Sens. 2018, 146, 495–506.

- Pirasteh, S.; Rashidi, P.; Rastiveis, H.; Huang, S.; Zhu, Q.; Liu, G.; Li, Y.; Li, J.; Seydipour, E. Developing an Algorithm for Buildings Extraction and Determining Changes from Airborne LiDAR, and Comparing with R-CNN Method from Drone Images. Remote Sens. 2019, 11, 1272.

- Pang, S.; Hu, X.; Zhang, M.; Cai, Z.; Liu, F. Co-Segmentation and Superpixel-Based Graph Cuts for Building Change Detection from Bi-Temporal Digital Surface Models and Aerial Images. Remote Sens. 2019, 11, 729.

- Xu, H.; Wang, Y.; Guan, H.; Shi, T.; Hu, X. Detecting Ecological Changes with a Remote Sensing Based Ecological Index (RSEI) Produced Time Series and Change Vector Analysis. Remote Sens. 2019, 11, 2345.

- Che, M.; Gamba, P. Intra-Urban Change Analysis Using Sentinel-1 and Nighttime Light Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1134–1142.

- Zhu, B.; Gao, H.; Wang, X.; Xu, M.; Zhu, X. Change Detection Based on the Combination of Improved SegNet Neural Network and Morphology. In Proceedings of the 2018 3rd IEEE International Conference on Image, Vision and Computing (ICIVC), 2018; pp. 55–59.

- Ru, L.; Wu, C.; Du, B.; Zhang, L. Deep Canonical Correlation Analysis Network for Scene Change Detection of Multi-Temporal VHR Imagery. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019; pp. 1–4.

- Feng, X.; Li, P. Urban Built-up Area Change Detection Using Multi-Band Temporal Texture and One-Class Random Forest. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019; pp. 1–4.

- Wang, J.; Yang, X.; Jia, L.; Yang, X.; Dong, Z. Pointwise SAR image change detection using stereo-graph cuts with spatio-temporal information. Remote Sens. Lett. 2019, 10, 421–429.

- Zhou, J.; Yu, B.; Qin, J. Multi-Level Spatial Analysis for Change Detection of Urban Vegetation at Individual Tree Scale. Remote Sens. 2014, 6, 9086–9103.

- Cui, B.; Zhang, Y.; Yan, L.; Wei, J.; Huang, Q. A SAR change detection method based on the consistency of single-pixel difference and neighbourhood difference. Remote Sens. Lett. 2019, 10, 488–495.

- Wu, C.; Du, B.; Zhang, L. Hyperspectral anomalous change detection based on joint sparse representation. ISPRS J. Photogramm. Remote Sens. 2018, 146, 137–150.

- Luo, H.; Wang, L.; Wu, C.; Zhang, L. An Improved Method for Impervious Surface Mapping Incorporating LiDAR Data and High-Resolution Imagery at Different Acquisition Times. Remote Sens. 2018, 10, 1349.

- Zhang, X.; Shi, W.; Lv, Z.; Peng, F. Land Cover Change Detection from High-Resolution Remote Sensing Imagery Using Multitemporal Deep Feature Collaborative Learning and a Semi-supervised Chan–Vese Model. Remote Sens. 2019, 11, 2787.

- Wan, L.; Xiang, Y.; You, H. An Object-Based Hierarchical Compound Classification Method for Change Detection in Heterogeneous Optical and SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9941–9959.

- Huang, J.; Liu, Y.; Wang, M.; Zheng, Y.; Wang, J.; Ming, D. Change Detection of High Spatial Resolution Images Based on Region-Line Primitive Association Analysis and Evidence Fusion. Remote Sens. 2019, 11, 2484.

- Kwan, C.; Ayhan, B.; Larkin, J.; Kwan, L.; Bernabé, S.; Plaza, A. Performance of Change Detection Algorithms Using Heterogeneous Images and Extended Multi-attribute Profiles (EMAPs). Remote Sens. 2019, 11, 2377.

- Gong, J.; Hu, X.; Pang, S.; Li, K. Patch Matching and Dense CRF-Based Co-Refinement for Building Change Detection from Bi-Temporal Aerial Images. Sensors 2019, 19, 1557.

- Wu, T.; Luo, J.; Zhou, Y.; Wang, C.; Xi, J.; Fang, J. Geo-Object-Based Land Cover Map Update for High-Spatial-Resolution Remote Sensing Images via Change Detection and Label Transfer. Remote Sens. 2020, 12, 174.

- Cheng, D.; Meng, G.; Cheng, G.; Pan, C. SeNet: Structured Edge Network for Sea–Land Segmentation. IEEE Geosci. Remote Sens. Lett. 2017, 14, 247–251.

| Method | Dataset | Changed (%) | Unchanged (%) | OA (%) | Kappa | AUC | F1 |
|---|---|---|---|---|---|---|---|
| CVA⁴ | Taizhou | 27.10 | 97.38 | 83.82 | 0.32 | -- | -- |
| PCA⁴ | Taizhou | 74.51 | 99.79 | 94.63 | 0.82 | -- | -- |
| MAD⁴ | Taizhou | 78.52 | 98.47 | 94.62 | 0.82 | -- | -- |
| IRMAD⁵ | Hongqi Canal | -- | -- | 82.63 | 0.31 | 0.8563 | 0.3988 |
| Wavelet Transformation² | Farmland | 98.96 | 98.45 | 97.41 | 0.76 | -- | -- |
| gcForest² | Farmland | 82.96 | 99.82 | 99.09 | 0.91 | -- | -- |
| FCM¹ | Farmland | 40.53 | 99.17 | 96.66 | 0.75 | -- | -- |
| FLICM¹ | Farmland | 84.80 | 98.63 | 98.24 | 0.84 | -- | -- |
| PCC³ | Unpublished | 80.58 | 96.69 | 96.31 | 0.49 | -- | -- |
| SAE³ | Unpublished | 64.73 | 99.52 | 97.29 | 0.56 | -- | -- |
| IR-MAD+VAE⁵ | Hongqi Canal | -- | -- | 93.05 | 0.58 | 0.9396 | 0.6183 |
| DBN² | Farmland | 79.07 | 99.00 | 98.27 | 0.84 | -- | -- |
| SCCN² | Farmland | 80.62 | 98.90 | 98.26 | 0.84 | -- | -- |
| MFRCNN³ | Unpublished | 72.62 | 98.80 | 98.20 | 0.64 | -- | -- |
| SFRCNN³ | Unpublished | 66.74 | 99.55 | 98.80 | 0.71 | -- | -- |
| RNN⁴ | Taizhou | 91.96 | 97.58 | 96.50 | 0.89 | -- | -- |
| ReCNN-LSTM⁴ | Taizhou | 96.77 | 99.20 | 98.73 | 0.96 | -- | -- |
| IR-MAD+GAN⁵ | Hongqi Canal | -- | -- | 94.76 | 0.73 | 0.9793 | 0.7539 |
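The table compares methods by overall accuracy (OA), the Kappa coefficient and F1. As a minimal sketch, assuming the Changed and Unchanged columns report per-class accuracy on truly changed and truly unchanged pixels, the Python function below derives these quantities from the four cells of a binary change/no-change confusion matrix. The function name `change_detection_metrics` and the example pixel counts are hypothetical illustrations, not values from the surveyed papers; AUC is left out because it needs continuous change scores rather than a single binary map.

```python
def change_detection_metrics(tp, fp, fn, tn):
    """Accuracy measures for a binary change map (hypothetical helper).

    tp: changed pixels detected as changed
    fp: unchanged pixels wrongly detected as changed (false alarms)
    fn: changed pixels missed by the detector
    tn: unchanged pixels correctly left as unchanged
    Assumes every denominator below is non-zero.
    """
    n = tp + fp + fn + tn
    changed = tp / (tp + fn)        # accuracy on truly changed pixels (recall)
    unchanged = tn / (tn + fp)      # accuracy on truly unchanged pixels
    oa = (tp + tn) / n              # overall accuracy
    # Chance agreement from the predicted and reference marginals (Cohen's kappa)
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    precision = tp / (tp + fp)
    f1 = 2 * precision * changed / (precision + changed)
    return {"changed": changed, "unchanged": unchanged,
            "oa": oa, "kappa": kappa, "f1": f1}


m = change_detection_metrics(tp=80, fp=20, fn=20, tn=880)
print(round(m["oa"], 4), round(m["kappa"], 4))  # 0.96 0.7778
```

Because Kappa subtracts the agreement expected by chance, a scene dominated by unchanged pixels can give a high OA together with a low Kappa, which is the pattern visible in the CVA row on Taizhou.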

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


You, Y.; Cao, J.; Zhou, W. A Survey of Change Detection Methods Based on Remote Sensing Images for Multi-Source and Multi-Objective Scenarios. Remote Sens. 2020, 12, 2460. https://doi.org/10.3390/rs12152460
