Evaluating the Quality of TLS Point Cloud Colorization

Abstract

1. Introduction

2. Materials and Methods

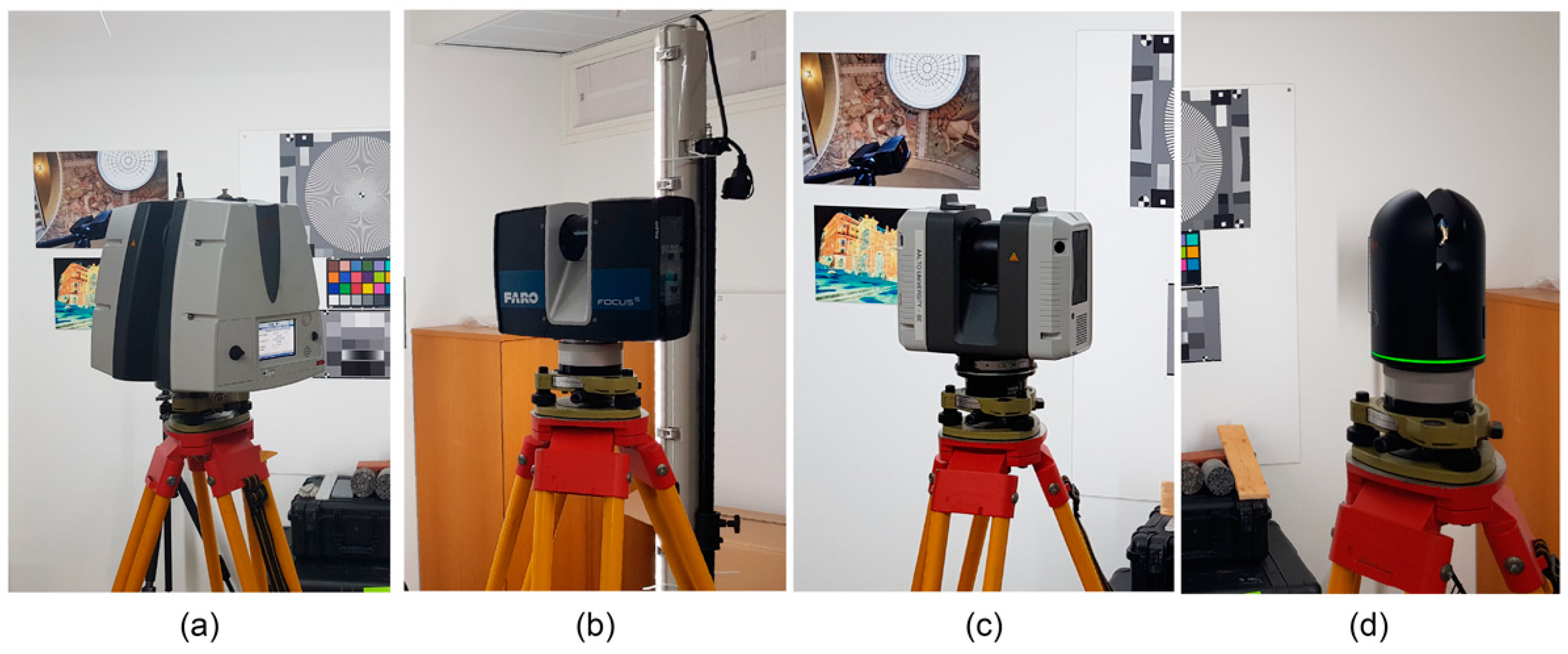

2.1. Tested Instruments

2.2. Test Environment

2.3. Data Acquisition

2.4. Developed Method in Brief

3. The Proposed Method for Evaluating the Colorization Quality of TLS-Derived 3D Point Clouds

3.1. Image Quality

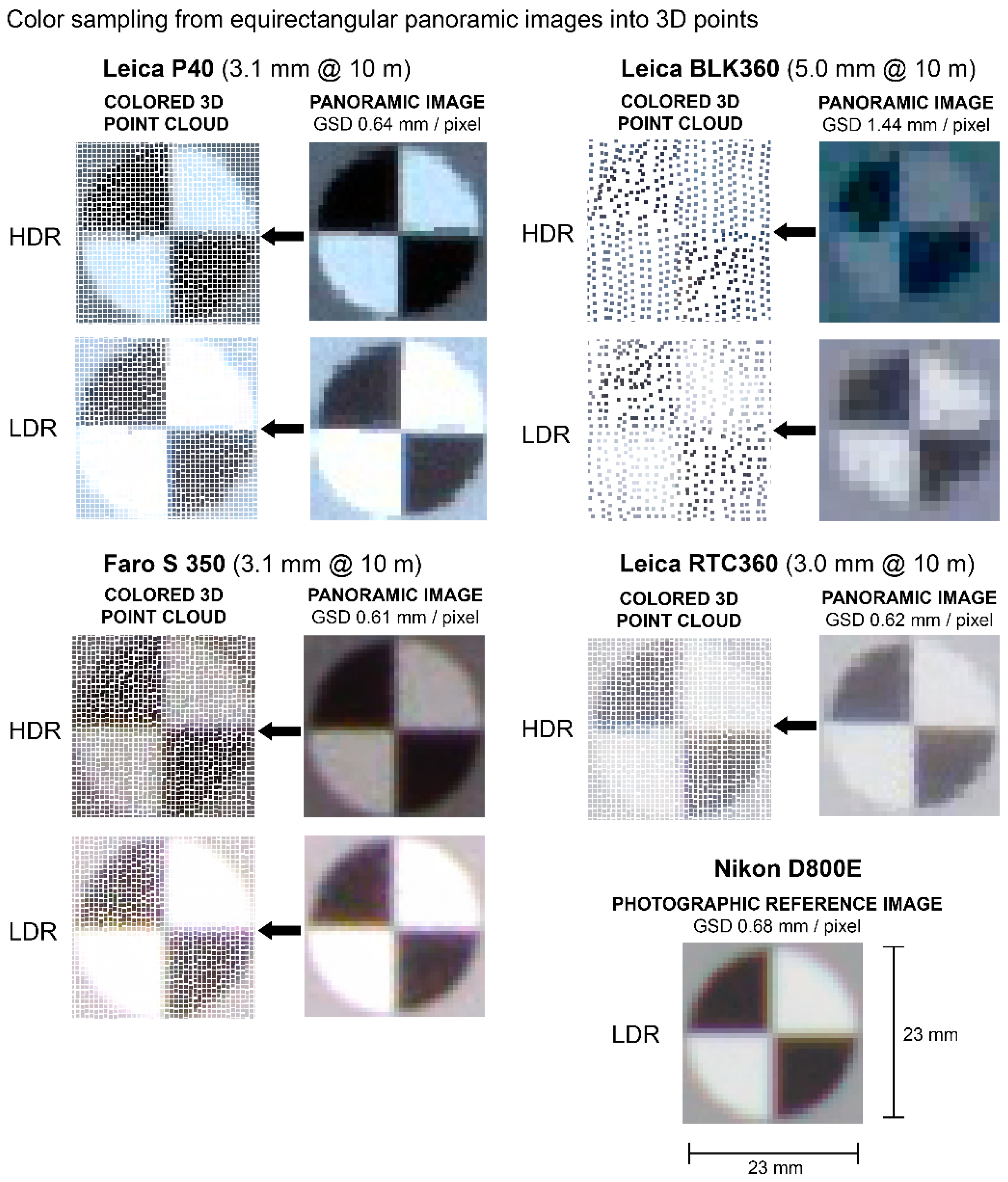

3.2. Point Cloud Pre-Processing and Colorization

3.3. Point Cloud Preparation for Image Quality Analysis

1. 3D points representing each test chart were manually segmented from the point clouds.

2a. The points representing the ColorChecker were rendered as point clouds using rectangular points, with the point size set to the minimum at which no holes were visible inside the charts. This was done to limit any interpolation of the color data before the color-related quality measurements.

2b. Alternatively, for the points representing the Siemens star chart and the simplified ISO 15739 digital camera noise test chart, a Delaunay triangulation was performed to create mesh models (with vertex colors) of the charts. This was done to fill all potential gaps in the point cloud and to remove the effect of point size from the detail reproduction-related quality measurements.

3. The segmented test charts were rendered using an orthographic projection at an equal zoom level for all charts and exported as 2D image files in PNG format.
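
The rendering described in steps 2a and 3 can be illustrated with a minimal Python sketch. It is not the authors' rendering pipeline. The function names `render_orthographic` and `hole_count`, all parameters, and the assumption that the chart plane is described by an origin and two orthonormal in-plane axes are hypothetical and introduced only for illustration. The sketch shows why the point size matters: if it is too small, pixels stay empty (holes); if it is large enough, every pixel receives an unmodified point color without interpolation.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]
Image = List[List[Optional[Color]]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def render_orthographic(
    points: List[Point],
    colors: List[Color],
    origin: Point,          # assumed: a corner of the chart plane
    u_axis: Point,          # assumed: unit vector along image columns
    v_axis: Point,          # assumed: unit vector along image rows
    pixel_size: float,      # metric size of one pixel (the "zoom level")
    width: int,
    height: int,
    point_size: int = 1,    # side length of each square point, in pixels
) -> Image:
    """Rasterize colored points onto a plane with an orthographic projection.

    Each point is dropped perpendicularly onto the plane, so its depth along
    the plane normal has no effect on where it lands. Colors are copied, never
    blended. Later points overwrite earlier ones (no depth test), which is
    acceptable only for a single-layer, roughly planar chart.
    """
    image: Image = [[None] * width for _ in range(height)]
    for p, c in zip(points, colors):
        rel = (p[0] - origin[0], p[1] - origin[1], p[2] - origin[2])
        col = int(dot(rel, u_axis) // pixel_size)
        row = int(dot(rel, v_axis) // pixel_size)
        # Paint a point_size x point_size square anchored around (row, col).
        for r in range(row - (point_size - 1) // 2, row + point_size // 2 + 1):
            for k in range(col - (point_size - 1) // 2, col + point_size // 2 + 1):
                if 0 <= r < height and 0 <= k < width:
                    image[r][k] = c
    return image


def hole_count(image: Image) -> int:
    """Number of pixels that received no point."""
    return sum(px is None for row in image for px in row)
```

In this sketch, using the same `pixel_size` for every chart corresponds to the equal zoom level in step 3, and increasing `point_size` until `hole_count` reaches zero corresponds to the minimum hole-free point size in step 2a.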

3.4. Image Quality Analysis

3.4.1. Color Reproduction

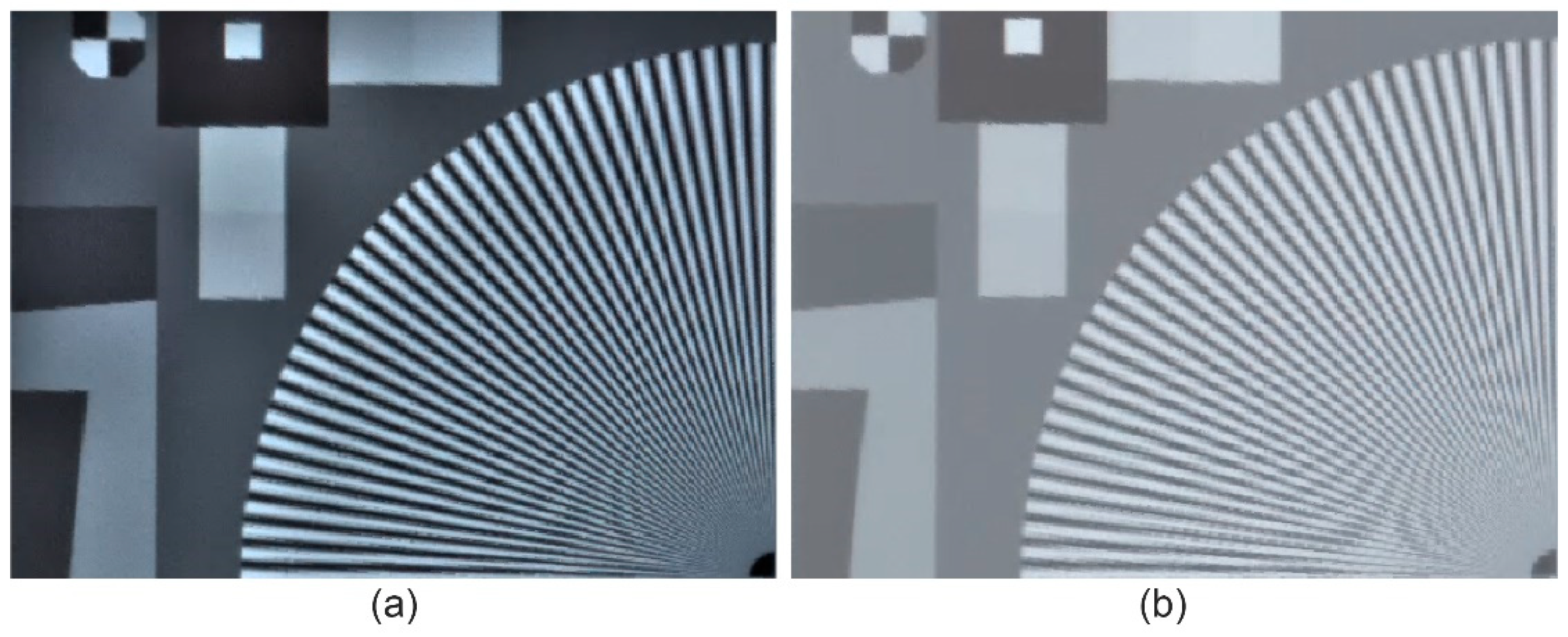

3.4.2. Detail Reproduction

3.5. Combining Metrics for a Quality Score

4. Results

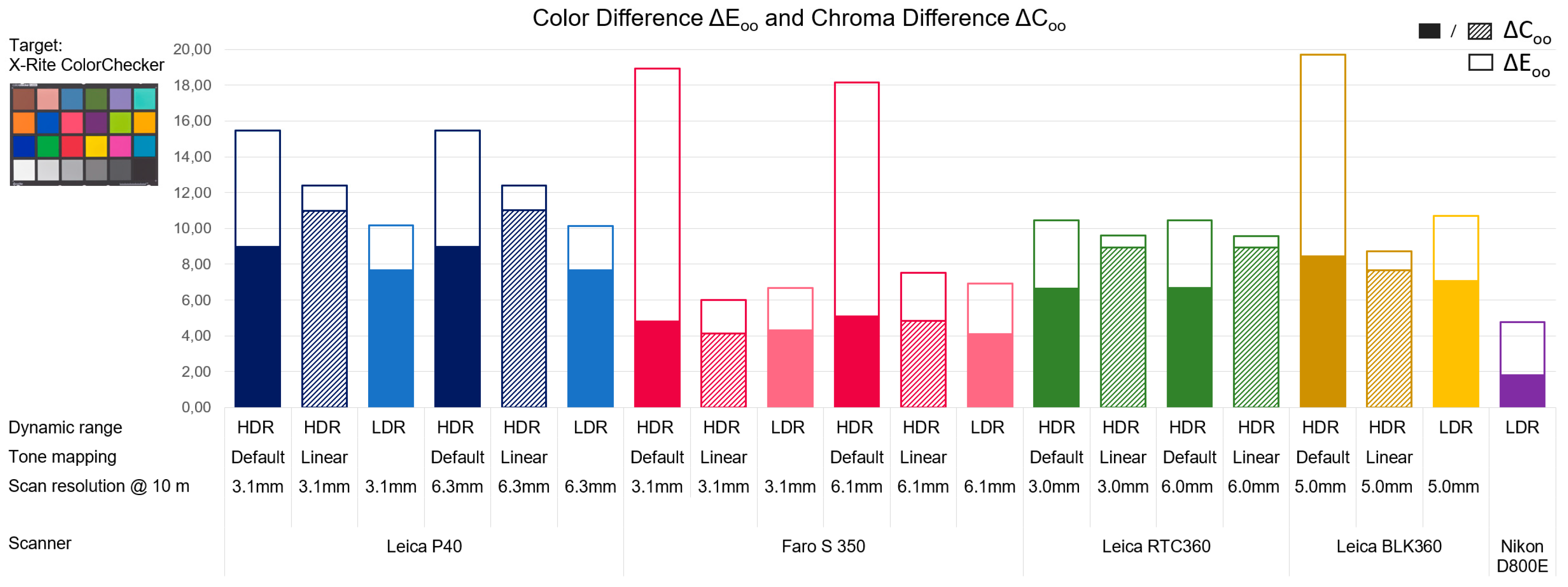

4.1. Color Reproduction

4.2. Detail Reproduction

4.2.1. Sharpness

4.2.2. Information Capacity

4.2.3. Noise

4.3. Quality Score

5. Discussion

5.1. Color Reproduction

5.2. Detail Reproduction

5.3. The Photographic Reference Dataset

5.4. The Effect of Data Collection Speed

5.5. Study Limitations

5.6. Future Research Directions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Scan (Scanner, Dynamic Range, Scan Resolution, Tone Mapping) | Color Difference (Mean ΔE00) | Chroma Difference (Mean ΔC00) | Exposure Error (f-Stops) | White Balance Error (Saturation) |

|---|---|---|---|---|

| Leica P40, HDR, 3.1 mm, Default | 15.48 | 9.04 | −0.89 | 0.19 |

| Leica P40, HDR, 3.1 mm, Linear | 12.39 | 10.98 | −0.45 | 0.08 |

| Leica P40, LDR, 3.1 mm | 10.16 | 7.72 | 0.85 | 0.14 |

| Leica P40, HDR, 6.3 mm, Default | 15.47 | 9.03 | −0.84 | 0.18 |

| Leica P40, HDR, 6.3 mm, Linear | 12.40 | 11.02 | −0.44 | 0.08 |

| Leica P40, LDR, 6.3 mm | 10.14 | 7.70 | 0.85 | 0.14 |

| Faro S 350, HDR, 3.1 mm, Default | 18.94 | 4.87 | −1.22 | 0.05 |

| Faro S 350, HDR, 3.1 mm, Linear | 6.02 | 4.13 | −0.05 | 0.02 |

| Faro S 350, LDR, 3.1 mm | 6.68 | 4.35 | 0.41 | 0.05 |

| Faro S 350, HDR, 6.1 mm, Default | 18.16 | 5.15 | −1.03 | 0.05 |

| Faro S 350, HDR, 6.1 mm, Linear | 7.53 | 4.84 | 0.04 | 0.02 |

| Faro S 350, LDR, 6.1 mm | 6.92 | 4.15 | 0.6 | 0.04 |

| Leica RTC360, HDR, 3.0 mm, Default | 10.44 | 6.68 | 0.03 | 0.06 |

| Leica RTC360, HDR, 3.0 mm, Linear | 9.59 | 8.93 | −0.10 | 0.08 |

| Leica RTC360, HDR, 6.0 mm, Default | 10.46 | 6.73 | 0.02 | 0.06 |

| Leica RTC360, HDR, 6.0 mm, Linear | 9.58 | 8.93 | −0.16 | 0.08 |

| Leica BLK360, HDR, 5.0 mm, Default | 19.69 | 8.48 | −1.71 | 0.33 |

| Leica BLK360, HDR, 5.0 mm, Linear | 8.72 | 7.66 | −0.38 | 0.14 |

| Leica BLK360, LDR, 5.0 mm | 10.69 | 7.11 | −0.50 | 0.17 |

| Nikon D800E photographic reference | 4.77 | 1.86 | −0.11 | 0.05 |

| Leica P40 HDR (default tone mapping) 3.1 mm @ 10 m | Leica P40 HDR (linear tone mapping) 3.1 mm @ 10 m | Leica P40 LDR 3.1 mm @ 10 m |

| Faro S 350 HDR (default tone mapping) 3.1 mm @ 10 m | Faro S 350 HDR (linear tone mapping) 3.1 mm @ 10 m | Faro S 350 LDR 3.1 mm @ 10 m |

| Leica RTC360 HDR (default tone mapping) 3.0 mm @ 10 m | Leica RTC360 HDR (linear tone mapping) 3.0 mm @ 10 m | LDR setting not available |

| Leica BLK360 HDR (default tone mapping) 5.0 mm @ 10 m | Leica BLK360 HDR (linear tone mapping) 5.0 mm @ 10 m | Leica BLK360 LDR 5.0 mm @ 10 m |

| Scan (Scanner, Dynamic Range, Scan Resolution, Tone Mapping) | MTF50P (Cycles/Pixel) | MTF10P (Cycles/Pixel) | Shannon Information Capacity (Bits/Pixel) | ISO 15739 SNR (dB) |

|---|---|---|---|---|

| Leica P40 HDR 3.1 mm Default | 0.199 | 0.4 | 1.32 | 27.4 |

| Leica P40 HDR 3.1 mm Linear | 0.191 | 0.357 | 0.83 | 46.4 |

| Leica P40 LDR 3.1 mm | 0.255 | 0.468 | 1.32 | 15.6 |

| Leica P40 HDR 6.3 mm Default | 0.167 | 0.316 | 1.07 | 27.2 |

| Leica P40 HDR 6.3 mm Linear | 0.173 | 0.299 | 0.81 | 47.5 |

| Leica P40 LDR 6.3 mm | 0.201 | 0.353 | 1.04 | 15.6 |

| Faro S 350 HDR 3.1 mm Default | 0.237 | 0.419 | 2.21 | 26.8 |

| Faro S 350 HDR 3.1 mm Linear | 0.237 | 0.417 | 2.31 | 38.5 |

| Faro S 350 LDR 3.1 mm | 0.225 | 0.406 | 2.41 | 28.5 |

| Faro S 350 HDR 6.1 mm Default | 0.191 | 0.336 | 1.34 | 27.8 |

| Faro S 350 HDR 6.1 mm Linear | 0.196 | 0.339 | 1.38 | 40.1 |

| Faro S 350 LDR 6.1 mm | 0.190 | 0.328 | 1.45 | 30.4 |

| Leica RTC360 HDR 3.0 mm Default | 0.222 | 0.373 | 1.15 | 40.0 |

| Leica RTC360 HDR 3.0 mm Linear | 0.217 | 0.377 | 1.16 | 32.5 |

| Leica RTC360 HDR 6.0 mm Default | 0.188 | 0.312 | 0.97 | 41.0 |

| Leica RTC360 HDR 6.0 mm Linear | 0.182 | 0.31 | 0.83 | 34.0 |

| Leica BLK360 HDR 5.0 mm Default | 0.135 | 0.226 | 1.08 | 33.3 |

| Leica BLK360 HDR 5.0 mm Linear | 0.136 | 0.227 | 0.76 | 39.5 |

| Leica BLK360 LDR 5.0 mm | 0.129 | 0.206 | 0.81 | 31.5 |

| Nikon D800E photographic reference | 0.212 | 0.357 | 2.89 | 34.0 |

| Leica P40 HDR (default tone mapping) 3.1 mm @ 10 m | Leica P40 HDR (linear tone mapping) 3.1 mm @ 10 m | Leica P40 LDR 3.1 mm @ 10 m |

| Faro S 350 HDR (default tone mapping) 3.1 mm @ 10 m | Faro S 350 HDR (linear tone mapping) 3.1 mm @ 10 m | Faro S 350 LDR 3.1 mm @ 10 m |

| Leica RTC360 HDR (default tone mapping) 3.0 mm @ 10 m | Leica RTC360 HDR (linear tone mapping) 3.0 mm @ 10 m | LDR setting not available |

| Leica BLK360 HDR (default tone mapping) 5.0 mm @ 10 m | Leica BLK360 HDR (linear tone mapping) 5.0 mm @ 10 m | Leica BLK360 LDR 5.0 mm @ 10 m |

| Leica P40 HDR (default tone mapping) 3.1 mm @ 10 m | Leica P40 HDR (linear tone mapping) 3.1 mm @ 10 m | Leica P40 LDR 3.1 mm @ 10 m |

| Faro S 350 HDR (default tone mapping) 3.1 mm @ 10 m | Faro S 350 HDR (linear tone mapping) 3.1 mm @ 10 m | Faro S 350 LDR 3.1 mm @ 10 m |

| Leica RTC360 HDR (default tone mapping) 3.0 mm @ 10 m | Leica RTC360 HDR (linear tone mapping) 3.0 mm @ 10 m | LDR setting not available |

| Leica BLK360 HDR (default tone mapping) 5.0 mm @ 10 m | Leica BLK360 HDR (linear tone mapping) 5.0 mm @ 10 m | Leica BLK360 LDR 5.0 mm @ 10 m |

References

- Lensch, H.P.; Kautz, J.; Goesele, M.; Heidrich, W.; Seidel, H.P. Image-based reconstruction of spatial appearance and geometric detail. ACM Trans. Graph. 2003, 22, 234–257.

- Gaiani, M.; Apollonio, F.I.; Ballabeni, A.; Remondino, F. Securing color fidelity in 3D architectural heritage scenarios. Sensors 2017, 17, 2437.

- A13.1-Scheme for the Identification of Piping Systems-ASME. Available online: https://www.asme.org/codes-standards/find-codes-standards/a13-1-scheme-identification-piping-systems (accessed on 25 June 2020).

- Virtanen, J.-P.; Daniel, S.; Turppa, T.; Zhu, L.; Julin, A.; Hyyppä, H.; Hyyppä, J. Interactive dense point clouds in a game engine. ISPRS J. Photogramm. Remote Sens. 2020, 163, 375–389.

- Statham, N. Use of photogrammetry in video games: A historical overview. Games Cult. 2018, 15, 289–307.

- Pepe, M.; Ackermann, S.; Fregonese, L.; Achille, C. 3D Point cloud model color adjustment by combining terrestrial laser scanner and close range photogrammetry datasets. In Proceedings of the ICDH 2016: 18th International Conference on Digital Heritage, London, UK, 24–25 November 2016; pp. 1942–1948.

- Gómez-Moreno, H.; Maldonado-Bascón, S.; Gil-Jiménez, P.; Lafuente-Arroyo, S. Goal evaluation of segmentation algorithms for traffic sign recognition. IEEE Trans. Intell. Transp. Syst. 2010, 11, 917–930.

- Wang, Q.; Cheng, J.C.; Sohn, H. Automated Estimation of Reinforced Precast Concrete Rebar Positions Using Colored Laser Scan Data. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 787–802.

- Yuan, L.; Guo, J.; Wang, Q. Automatic classification of common building materials from 3D terrestrial laser scan data. Automat. Constr. 2020, 110.

- Valero, E.; Forster, A.; Bosché, F.; Hyslop, E.; Wilson, L.; Turmel, A. Automated defect detection and classification in ashlar masonry walls using machine learning. Automat. Constr. 2019, 106, 1–30.

- Tutzauer, P.; Haala, N. Façade reconstruction using geometric and radiometric point cloud information. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2015, 40, 247–252.

- Men, H.; Gebre, B.; Pochiraju, K. Color point cloud registration with 4D ICP algorithm. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; IEEE: Piscataway, NJ, USA; pp. 1511–1516.

- Łępicka, M.; Kornuta, T.; Stefańczyk, M. Utilization of colour in ICP-based point cloud registration. In Proceedings of the 9th International Conference on Computer Recognition Systems CORES 2015, Wroclaw, Poland, 25–27 May 2015; Springer: Berlin, Germany, 2015; pp. 821–830.

- Park, J.; Zhou, Q.Y.; Koltun, V. Colored point cloud registration revisited. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshop (ICCVW), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA; pp. 143–152.

- Zhan, Q.; Liang, Y.; Xiao, Y. Color-based segmentation of point clouds. In Proceedings of the ISPRS Workshop Laserscanning ‘09, Paris, France, 1–2 September 2009; Bretar, F., Pierrot-Deseilligny, M., Vosselman, G., Eds.; pp. 155–161.

- Strom, J.; Richardson, A.; Olson, E. Graph-based segmentation for colored 3D laser point clouds. In Proceedings of the IROS 2010: IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; IEEE: Piscataway, NJ, USA; pp. 2131–2136.

- Verdoja, F.; Thomas, D.; Sugimoto, A. Fast 3D point cloud segmentation using supervoxels with geometry and color for 3D scene understanding. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; IEEE: Piscataway, NJ, USA; pp. 1285–1290.

- Leica RTC360 3D Laser Scanner. Available online: https://leica-geosystems.com/products/laser-scanners/scanners/leica-rtc360 (accessed on 23 April 2020).

- FARO FOCUS LASER SCANNERS. Available online: https://www.faro.com/products/construction-bim/faro-focus/ (accessed on 23 April 2020).

- Trimble TX8 3D Laser Scanner. Available online: https://geospatial.trimble.com/products-and-solutions/trimble-tx8 (accessed on 23 April 2020).

- Z+F IMAGER 5016, 3D Laser Scanner. Available online: https://www.zf-laser.com/Z-F-IMAGER-R-5016.184.0.html?&L=1 (accessed on 23 April 2020).

- Pourreza-Shahri, R.; Kehtarnavaz, N. Exposure bracketing via automatic exposure selection. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; IEEE: Piscataway, NJ, USA; pp. 2487–2494.

- Trimble X7 3D Scanning System. Available online: https://geospatial.trimble.com/node/2650 (accessed on 23 April 2020).

- Gordon, S.; Lichti, D.D.; Stewart, M.P.; Tsakiri, M. Metric performance of a high-resolution laser scanner. Proc. SPIE 2000, 4309, 174–184.

- Lichti, D.; Stewart, M.P.; Tsakiri, M.; Snow, A.J. Calibration and testing of a terrestrial laser scanner. Int. Arch. Photogramm. 2000, 33, 485–492.

- Boehler, W.; Vicent, M.B.; Marbs, A. Investigating laser scanner accuracy. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2003, 34, 696–701.

- Staiger, R. Terrestrial laser scanning technology, systems and applications. In Proceedings of the 2nd FIG Regional Conference, Marrakech, Morocco, 2–5 December 2003; pp. 1–10.

- Mechelke, K.; Kersten, T.P.; Lindstaedt, M. Comparative investigations into the accuracy behaviour of the new generation of terrestrial laser scanning systems. In Proceedings of the 8th Conference on the Optical 3-D Measurement Techniques, Zurich, Switzerland, 9–12 July 2007; Gruen, A., Kahmen, H., Eds.; Volume 3, pp. 319–327.

- Pfeifer, N.; Briese, C. Geometrical aspects of airborne laser scanning and terrestrial laser scanning. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2007, 36, 311–319.

- Wunderlich, T.; Wasmeier, P.; Ohlmann-Lauber, J.; Schäfer, T.; Reidl, F. Objective Specifications of Terrestrial Laserscanners—A Contribution of the Geodetic Laboratory at the Technische Universität München; Technische Universität München; Chair of Geodesy: Munich, Germany, 2013; pp. 1–38.

- Schmitz, B.; Holst, C.; Medic, T.; Lichti, D.D.; Kuhlmann, H. How to Efficiently Determine the Range Precision of 3D Terrestrial Laser Scanners. Sensors 2019, 19, 1466.

- Lichti, D.D.; Jamtsho, S. Angular resolution of terrestrial laser scanners. Photogramm. Rec. 2006, 21, 141–160.

- Ling, Z.; Yuqing, M.; Ruoming, S. Study on the resolution of laser scanning point cloud. In Proceedings of the 2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 8–11 July 2008; IEEE: Piscataway, NJ, USA; Volume 2, pp. 1136–1139.

- Pesci, A.; Teza, G.; Bonali, E. Terrestrial laser scanner resolution: Numerical simulations and experiments on spatial sampling optimization. Remote Sens. 2011, 3, 167–184.

- Clark, J.; Robson, S. Accuracy of measurements made with a Cyrax 2500 laser scanner against surfaces of known colour. Surv. Rev. 2004, 37, 626–638.

- Kersten, T.P.; Sternberg, H.; Mechelke, K. Investigations into the accuracy behaviour of the terrestrial laser scanning system Mensi GS100. In Proceedings of the 7th Conference on the Optical 3-D Measurement Techniques, Vienna, Austria, 3–5 October 2005; Gruen, A., Kahmen, H., Eds.; Volume 1, pp. 122–131.

- Soudarissanane, S.; Van Ree, J.; Bucksch, A.; Lindenbergh, R. Error budget of terrestrial laser scanning: Influence of the incidence angle on the scan quality. In Proceedings of the 3D-NordOst 2007, Berlin, Germany, 6–7 December 2007; pp. 1–8.

- Voegtle, T.; Schwab, I.; Landes, T. Influences of different materials on the measurements of a terrestrial laser scanner (TLS). Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2008, 37, 1061–1066.

- Soudarissanane, S.; Lindenbergh, R.; Menenti, M.; Teunissen, P. Scanning geometry: Influencing factor on the quality of terrestrial laser scanning points. ISPRS J. Photogramm. Remote Sens. 2011, 66, 389–399.

- Kawashima, K.; Yamanishi, S.; Kanai, S.; Date, H. Finding the next-best scanner position for as-built modeling of piping systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 313–320.

- Borah, D.K.; Voelz, D.G. Estimation of laser beam pointing parameters in the presence of atmospheric turbulence. Appl. Opt. 2007, 46, 6010–6018.

- Bucksch, A.; Lindenbergh, R.; van Ree, J. Error budget of Terrestrial Laser Scanning: Influence of the intensity remission on the scan quality. In Proceedings of the Geo-Siberia 2007, Novosibirsk, Russia, 25–27 April 2007; pp. 113–122.

- Pfeifer, N.; Dorninger, P.; Haring, A.; Fan, H. Investigating Terrestrial Laser Scanning Intensity Data: Quality and Functional Relations. In Proceedings of the 8th Conference on Optical 3-D Measurement Techniques, Zurich, Switzerland, 9–12 July 2007; pp. 328–337.

- Kukko, A.; Kaasalainen, S.; Litkey, P. Effect of incidence angle on laser scanner intensity and surface data. Appl. Opt. 2008, 47, 986–992.

- Kaasalainen, S.; Krooks, A.; Kukko, A.; Kaartinen, H. Radiometric calibration of terrestrial laser scanners with external reference targets. Remote Sens. 2009, 1, 144–158.

- Krooks, A.; Kaasalainen, S.; Hakala, T.; Nevalainen, O. Correction of intensity incidence angle effect in terrestrial laser scanning. In Proceedings of the ISPRS Workshop Laser Scanning 2013, Antalya, Turkey, 11–13 November 2013; pp. 145–150.

- Tan, K.; Cheng, X. Intensity data correction based on incidence angle and distance for terrestrial laser scanner. J. Appl. Remote Sens. 2015, 9.

- Balaguer-Puig, M.; Molada-Tebar, A.; Marqués-Mateu, A.; Lerma, J.L. Characterisation of intensity values on terrestrial laser scanning for recording enhancement. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2017, XLII-2/W5, 49–55.

- Hassan, M.U.; Akcamete-Gungor, A.; Meral, C. Investigation of terrestrial laser scanning reflectance intensity and RGB distributions to assist construction material identification. In Proceedings of the Joint Conference on Computing in Construction (JC3), Heraklion, Greece, 4–7 July 2017; pp. 507–515.

- Abdelhafiz, A.; Riedel, B.; Niemeier, W. Towards a 3D true colored space by the fusion of laser scanner point cloud and digital photos. In Proceedings of the ISPRS WG V/4 3D-ARCH 2005: Virtual Reconstruction and Visualization of Complex Architectures, Mestre-Venice, Italy, 22–24 August 2005; El-Hakim, S., Remondino, F., Gonzo, L., Eds.; Volume XXXVI-5/W17.

- Forkuo, E.K.; King, B. Automatic fusion of photogrammetric imagery and laser scanner point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 35, 921–926.

- Stal, C.; De Maeyer, P.; De Ryck, M.; De Wulf, A.; Goossens, R.; Nuttens, T. Comparison of geometric and radiometric information from photogrammetry and color-enriched laser scanning. In Proceedings of the FIG Working Week 2011: Bridging the gap between cultures, Marrakech, Morocco, 18–22 May 2011; International Federation of Surveyors (FIG): Copenhagen, Denmark; pp. 1–14.

- Moussa, W.; Abdel-Wahab, M.; Fritsch, D. An automatic procedure for combining digital images and laser scanner data. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2012, 39, 229–234.

- Crombez, N.; Caron, G.; Mouaddib, E. 3D point cloud model colorization by dense registration of digital images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 123–130.

- Pleskacz, M.; Rzonca, A. Design of a testing method to assess the correctness of a point cloud colorization algorithm. Arch. Fotogram. Kartogr. i Teledetekcji 2016, 28, 91–104.

- Gašparović, M.; Malarić, I. Increase of readability and accuracy of 3D models using fusion of close range photogrammetry and laser scanning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 93–98.

- Valero, E.; Forster, A.; Bosché, F.; Wilson, L.; Leslie, A. Comparison of 3D Reality Capture Technologies for the Survey of Stone Walls. In Proceedings of the 8th International Congress on Archaeology, Computer Graphics, Cultural Heritage and Innovation ‘ARQUEOLÓGICA 2.0’, Valencia, Spain, 4–5 September 2016; pp. 14–23.

- Julin, A.; Jaalama, K.; Virtanen, J.P.; Maksimainen, M.; Kurkela, M.; Hyyppä, J.; Hyyppä, H. Automated multi-sensor 3D reconstruction for the web. ISPRS Int. J. Geo-Inf. 2019, 8, 221.

- Loebich, C.; Wueller, D. Three years of practical experience in using ISO standards for testing digital cameras. In Proceedings of the PICS 2001: Image Processing, Image Quality, Image Capture Systems Conference, Montreal, QC, Canada, 22–25 April 2001; IS&T-The Society for Imaging Science and Technology: Springfield, VA, USA; pp. 257–261.

- Wueller, D. Evaluating digital cameras. Proc. SPIE 2006, 6069.

- Jin, E.W. Image quality quantification in camera phone applications. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; IEEE: Piscataway, NJ, USA; pp. 5336–5339.

- Peltoketo, V.T. Mobile phone camera benchmarking: Combination of camera speed and image quality. Proc. SPIE 2014, 9016.

- Peltoketo, V.T. Presence capture cameras-a new challenge to the image quality. Proc. SPIE 2016, 9896.

- Yang, L.; Tan, Z.; Huang, Z.; Cheung, G. A content-aware metric for stitched panoramic image quality assessment. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshop (ICCVW), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA; pp. 2487–2494.

- Honkavaara, E.; Peltoniemi, J.; Ahokas, E.; Kuittinen, R.; Hyyppä, J.; Jaakkola, J.; Kaartinen, H.; Markelin, L.; Nurminen, K.; Suomalainen, J. A permanent test field for digital photogrammetric systems. Photogramm. Eng. Remote Sens. 2008, 74, 95–106.

- Dąbrowski, R.; Jenerowicz, A. Portable imagery quality assessment test field for UAV sensors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 117–122.

- Orych, A. Review of methods for determining the spatial resolution of UAV sensors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 391–395.

- Leica ScanStation P40/P30-High-Definition 3D Laser Scanning Solution. Available online: https://leica-geosystems.com/products/laser-scanners/scanners/leica-scanstation-p40--p30 (accessed on 23 April 2020).

- Leica BLK360 Imaging Laser Scanner. Available online: https://leica-geosystems.com/products/laser-scanners/scanners/blk360 (accessed on 23 April 2020).

- Walsh, G. Leica ScanStation White Paper; Leica Geosystems AG: Heerbrugg, Switzerland, 2015; pp. 1–9.

- Ramos, A.P. Leica P40 Scan Colourisation with iSTAR HDR Images; NCTech: Edinburgh, UK, 2015; pp. 1–8.

- Pascale, D. RGB Coordinates of the Macbeth ColorChecker; The BabelColor Company: Montreal, QC, Canada, 2006; pp. 1–16.

- Loebich, C.; Wueller, D.; Klingen, B.; Jaeger, A. Digital camera resolution measurements using sinusoidal Siemens stars. In Digital Photography III, Proceedings of Electronic Imaging 2007, San Jose, CA, USA, 28 January–1 February 2007; International Society for Optics and Photonics: Bellingham, WA, USA.

- ISO 12233:2017 Photography—Electronic still picture imaging—Resolution and Spatial Frequency Responses. Available online: https://www.iso.org/standard/71696.html (accessed on 25 June 2020).

- ISO 15739:2017 Photography—Electronic Still-Picture Imaging—Noise Measurements. Available online: https://www.iso.org/standard/72361.html (accessed on 25 June 2020).

- ISO 11664-2:2007 Colorimetry—Part 2: CIE Standard Illuminants. Available online: https://www.iso.org/standard/52496.html (accessed on 25 June 2020).

- IEC 61966-2-1:1999 Multimedia Systems and Equipment-Colour Measurement and Management—Part 2-1: Colour Management-Default RGB Colour Space-sRGB. Available online: https://webstore.iec.ch/publication/6169 (accessed on 25 June 2020).

- Direct3D. Available online: https://docs.microsoft.com/en-us/windows/win32/direct3d (accessed on 25 June 2020).

- OpenGL—The Industry’s Foundation for High Performance Graphics. Available online: https://opengl.org/ (accessed on 25 June 2020).

- WebGL Overview. Available online: https://www.khronos.org/webgl/ (accessed on 25 June 2020).

- Wang, Z.; Bovik, A.C. Modern image quality assessment. In Synthesis Lectures on Image, Video, and Multimedia Processing, 1st ed.; Morgan & Claypool Publishers: San Rafael, CA, USA, 2006; pp. 1–156.

- Imatest Master. Available online: https://www.imatest.com/products/imatest-master/ (accessed on 12 May 2020).

- iQ-Analyzer. Available online: https://www.image-engineering.de/products/software/376-iq-analyzer (accessed on 12 May 2020).

- Peltoketo, V.T. Benchmarking of Mobile Phone Cameras. Doctoral Thesis, University of Vaasa, Vaasa, Finland, 2016; pp. 1–168.

- Leica Cyclone REGISTER 360-3D Laser Scanning Point Cloud Registration Software. Available online: https://leica-geosystems.com/products/laser-scanners/software/leica-cyclone/leica-cyclone-register-360 (accessed on 25 June 2020).

- FARO SCENE SOFTWARE. Available online: https://www.faro.com/products/construction-bim/faro-scene/ (accessed on 25 June 2020).

- Darktable. Available online: https://www.darktable.org/ (accessed on 25 June 2020).

- Banterle, F.; Ledda, P.; Debattista, K.; Chalmers, A. Inverse tone mapping. In GRAPHITE ‘06, Proceedings of the 4th International Conference on Computer Graphics and Interactive Techniques in Australasia and Southeast Asia, Kuala Lumpur, Malaysia, 29 November–2 December 2006; ACM: New York, NY, USA, 2006; pp. 349–356.

- Mantiuk, R.; Seidel, H.P. Modeling a generic tone-mapping operator. In Computer Graphics Forum, Proceedings of the Eurographics 2008, Crete, Greece, 14–18 April 2008; European Association for Computer Graphics: Aire-la-Ville, Switzerland, 2008; pp. 699–708.

- CloudCompare. Available online: http://www.cloudcompare.org/ (accessed on 25 June 2020).

- Color/Tone & eSFR ISO Noise Measurements. Available online: https://www.imatest.com/docs/color-tone-esfriso-noise/ (accessed on 12 May 2020).

- Color/Tone and Colorcheck Appendix. Available online: https://www.imatest.com/docs/colorcheck_ref/ (accessed on 12 May 2020).

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 2005, 30, 21–30.

- ISO/CIE 11664-6:2014 Colorimetry—Part 6: CIEDE2000 Colour-Difference Formula. Available online: https://www.iso.org/standard/63731.html (accessed on 25 June 2020).

- Habekost, M. Which color differencing equation should be used. Int. Circ. Graph. Educ. Res. 2013, 6, 20–33.

- Mokrzycki, W.S.; Tatol, M. Colour difference ∆E-A survey. Mach. Graph. Vis. 2011, 20, 383–411.

- Star Chart, 2020 Star Chart. Available online: https://www.imatest.com/docs/starchart/ (accessed on 12 May 2020).

- Koren, N.L. Correcting Misleading Image Quality Measurements. In Proceedings of the 2020 IS&T International Symposium on Electronic Imaging, Burlingame, CA, USA, 26–30 January 2020; Society for Imaging Science and Technology: Springfield, VA, USA; pp. 1–9.

- Shannon Information Capacity. Available online: https://www.imatest.com/docs/shannon/ (accessed on 12 May 2020).

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Koren, N.L. Measuring camera Shannon Information Capacity with a Siemens Star Image. In Proceedings of the 2020 IS&T International Symposium on Electronic Imaging, Burlingame, CA, USA, 26–30 January 2020; Society for Imaging Science and Technology: Springfield, VA, USA; pp. 1–9.

- ISO 15739—Noise Measurements. Available online: https://www.imatest.com/solutions/iso-15739/ (accessed on 19 May 2020).

- Fleming, P.J.; Wallace, J.J. How not to lie with statistics: The correct way to summarize benchmark results. Commun. ACM 1986, 29, 218–221.

- Phillips, J.B.; Eliasson, H. Camera Image Quality Benchmarking, 1st ed.; John Wiley & Sons: Hoboken, NJ, USA, 2018; pp. 1–396.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Vaaja, M.T.; Kurkela, M.; Virtanen, J.-P.; Maksimainen, M.; Hyyppä, H.; Hyyppä, J.; Tetri, E. Luminance-Corrected 3D Point Clouds for Road and Street Environments. Remote Sens. 2015, 7, 11389–11402.

- Unreal Engine. Available online: https://www.unrealengine.com/en-US/ (accessed on 25 June 2020).

- Schütz, M. Potree: Rendering large point clouds in web browsers. Master’s Thesis, Technische Universität Wien, Vienna, Austria, 2016; pp. 1–84.

- Kurkela, M.; Maksimainen, M.; Vaaja, M.T.; Virtanen, J.P.; Kukko, A.; Hyyppä, J.; Hyyppä, H. Camera preparation and performance for 3D luminance mapping of road environments. Photogramm. J. Finl. 2017, 25, 1–23.

| TLS System | Scan Rate | Ranging Method | Range Accuracy | Max Range | Wavelength | Beam Divergence | Weight (Incl. Battery) |

|---|---|---|---|---|---|---|---|

| Leica ScanStation P40 | 1,000,000 pts/s | Time-of-flight | 1.2 mm + 10 ppm | 270 m | 1550 nm | <0.23 mrad (FWHM) | 12.65 kg |

| Faro Focus S 350 | 976,000 pts/s | Phase based | 1.0 mm | 350 m | 1550 nm | 0.3 mrad (1/e) | 4.2 kg |

| Leica RTC360 | 2,000,000 pts/s | Time-of-flight | 1.0 mm + 10 ppm | 130 m | 1550 nm | 0.5 mrad (1/e², full angle) | 5.64 kg |

| Leica BLK360 | 360,000 pts/s | Time-of-flight | 4 mm @ 10 m | 60 m | 830 nm | 0.4 mrad (FWHM) | 1 kg |

| TLS System | No. of Camera Sensors | Camera Configuration | Camera Sensor Resolution (Pixels) | No. of Photos Used for Equirectangular Panorama | Est. Total Raw Pixel Count for Single Exposure (Megapixels) | No. of Exposure Brackets for HDR Imaging |

|---|---|---|---|---|---|---|

| Leica ScanStation P40 | 1 | Mounted coaxially with laser | 1920 × 1920 ¹ | 260 [70] | 958 ³ | 3 [71] |

| Faro Focus S 350 | 1 | Mounted coaxially with laser | 3264 × 2448 ² | 66 ³ | 527 ³ | 2, 3, or 5 |

| Leica RTC360 | 3 | Mounted to scanner body | 4000 × 3000 ¹ | 12 per camera ¹ | 432 ¹ | 5 |

| Leica BLK360 | 3 | Mounted to scanner body | 2592 × 1944 ¹ | 10 per camera ¹ | 150 ¹ | 2, 3, 4, or 5 |
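
The estimated total raw pixel counts in the table above appear consistent with multiplying the sensor resolution by the number of photos per camera and the number of cameras. This is an inference from the listed values, not a formula stated in the source, and the helper name `estimated_megapixels` is hypothetical. A short Python sketch of that arithmetic:

```python
def estimated_megapixels(width_px: int, height_px: int,
                         photos_per_camera: int, cameras: int = 1) -> float:
    """Total raw pixels of one panorama exposure, in megapixels.

    Assumes every photo uses the full sensor and ignores image overlap.
    """
    return width_px * height_px * photos_per_camera * cameras / 1e6


# (width, height, photos per camera, number of cameras), taken from the table.
scanners = {
    "Leica ScanStation P40": (1920, 1920, 260, 1),
    "Faro Focus S 350": (3264, 2448, 66, 1),
    "Leica RTC360": (4000, 3000, 12, 3),
    "Leica BLK360": (2592, 1944, 10, 3),
}

for name, spec in scanners.items():
    print(f"{name}: {estimated_megapixels(*spec):.1f} MP")
```

Under these assumptions the first three rows match the table after rounding (about 958, 527, and 432 megapixels). The BLK360 comes out near 151 megapixels, slightly above the listed 150, which suggests the table value was rounded or estimated differently.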

| TLS System | Scan Resolution | Dynamic Range | Scan Time (min:s) | Imaging Time (min:s) | Total Time (min:s) |

|---|---|---|---|---|---|

| Leica ScanStation P40 | 3.1 mm @ 10 m | HDR | 3:30 | 10:19 | 13:49 |

| Leica ScanStation P40 | 3.1 mm @ 10 m | LDR | 3:30 | 7:22 | 10:52 |

| Leica ScanStation P40 | 6.3 mm @ 10 m | HDR | 1:49 | 10:19 | 12:08 |

| Leica ScanStation P40 | 6.3 mm @ 10 m | LDR | 1:49 | 7:22 | 9:11 |

| Faro Focus S 350 | 3.1 mm @ 10 m | HDR | 15:19 | 11:25 | 26:44 |

| Faro Focus S 350 | 3.1 mm @ 10 m | LDR | 15:19 | 2:07 | 17:26 |

| Faro Focus S 350 | 6.1 mm @ 10 m | HDR | 4:35 | 11:25 | 16:00 |

| Faro Focus S 350 | 6.1 mm @ 10 m | LDR | 4:35 | 2:07 | 6:42 |

| Leica RTC360 | 3.0 mm @ 10 m | HDR | 1:42 | 1:00 | 2:42 |

| Leica RTC360 | 6.0 mm @ 10 m | HDR | 0:51 | 1:00 | 1:51 |

| Leica BLK360 | 5.0 mm @ 10 m | HDR | 3:40 | 1:40 | 5:20 |

| Leica BLK360 | 5.0 mm @ 10 m | LDR | 3:40 | 1:00 | 4:40 |
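
The total times in the table are the sum of the scan time and the imaging time. A small Python sketch of that min:s arithmetic follows; the helper names `to_seconds`, `to_min_s`, and `total_time` are illustrative and not part of the source.

```python
def to_seconds(min_s: str) -> int:
    """Convert a 'min:s' string such as '10:19' to seconds."""
    minutes, seconds = min_s.split(":")
    return int(minutes) * 60 + int(seconds)


def to_min_s(total_seconds: int) -> str:
    """Convert seconds back to a 'min:s' string with two-digit seconds."""
    return f"{total_seconds // 60}:{total_seconds % 60:02d}"


def total_time(scan: str, imaging: str) -> str:
    """Total acquisition time as scan time plus imaging time."""
    return to_min_s(to_seconds(scan) + to_seconds(imaging))


# Leica ScanStation P40, 3.1 mm @ 10 m, HDR: 3:30 scan + 10:19 imaging.
print(total_time("3:30", "10:19"))
```

The same helpers can express the imaging share of a scan, for example `to_seconds("10:19") / to_seconds("13:49")` for the P40 HDR scan, where imaging accounts for roughly three quarters of the total time.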

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Julin, A.; Kurkela, M.; Rantanen, T.; Virtanen, J.-P.; Maksimainen, M.; Kukko, A.; Kaartinen, H.; Vaaja, M.T.; Hyyppä, J.; Hyyppä, H. Evaluating the Quality of TLS Point Cloud Colorization. Remote Sens. 2020, 12, 2748. https://doi.org/10.3390/rs12172748
