RGB Image-Derived Indicators for Spatial Assessment of the Impact of Broadleaf Weeds on Wheat Biomass

Abstract

1. Introduction

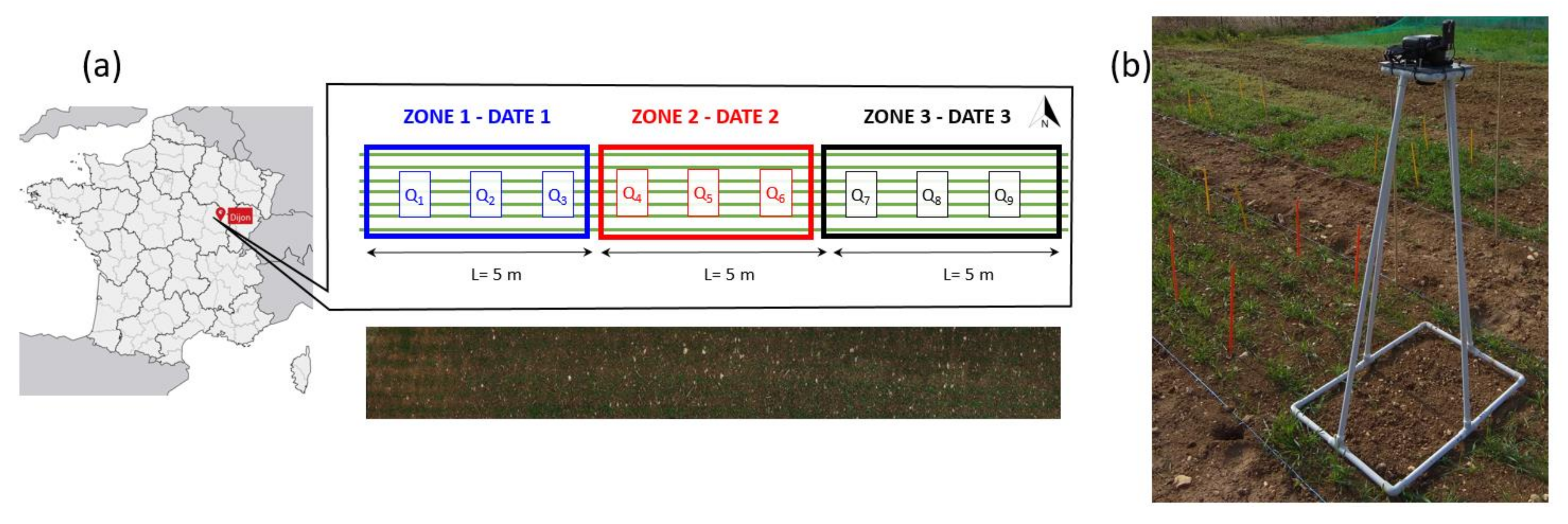

2. Materials and Methods

2.1. Study Site and Data Acquisition

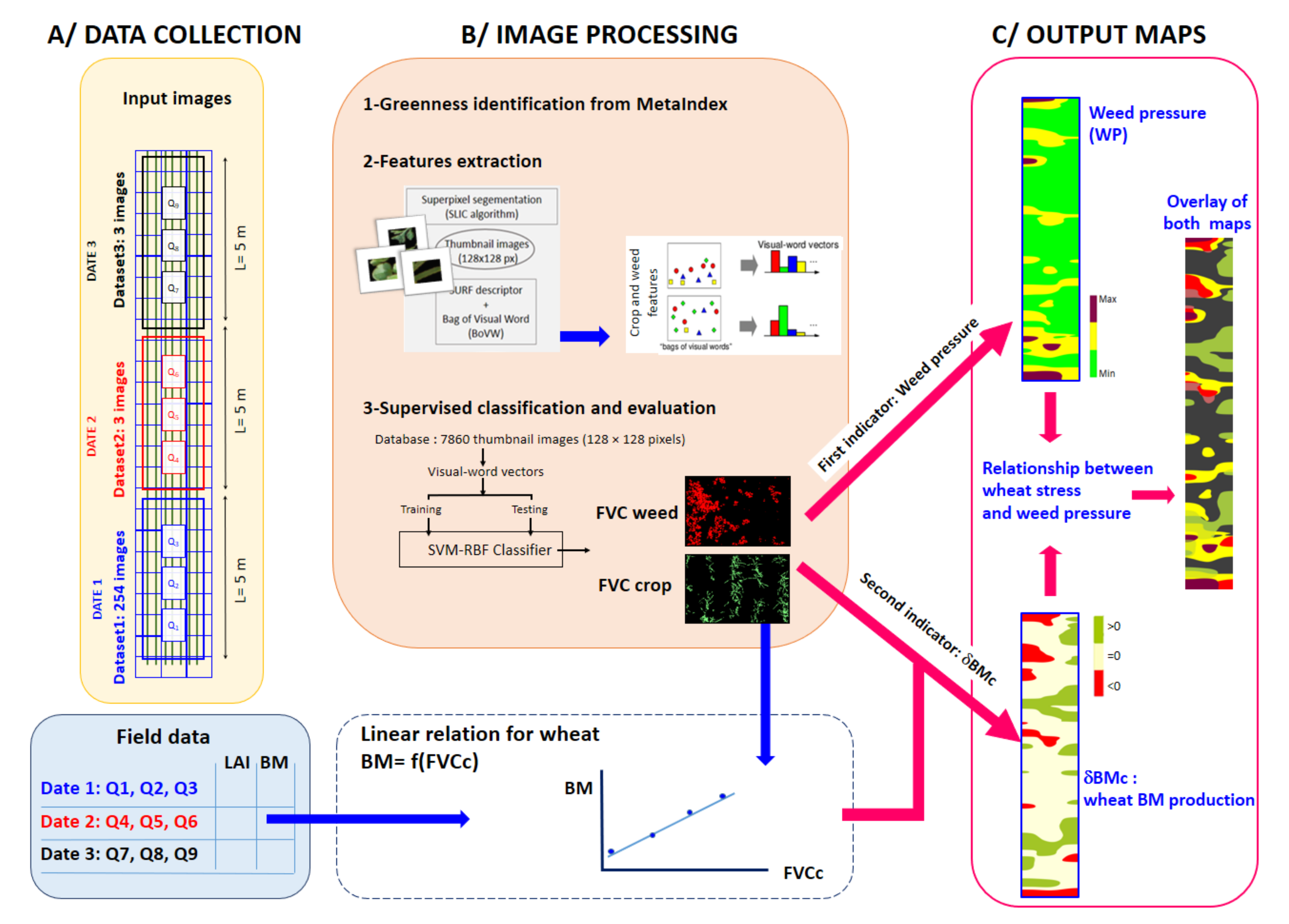

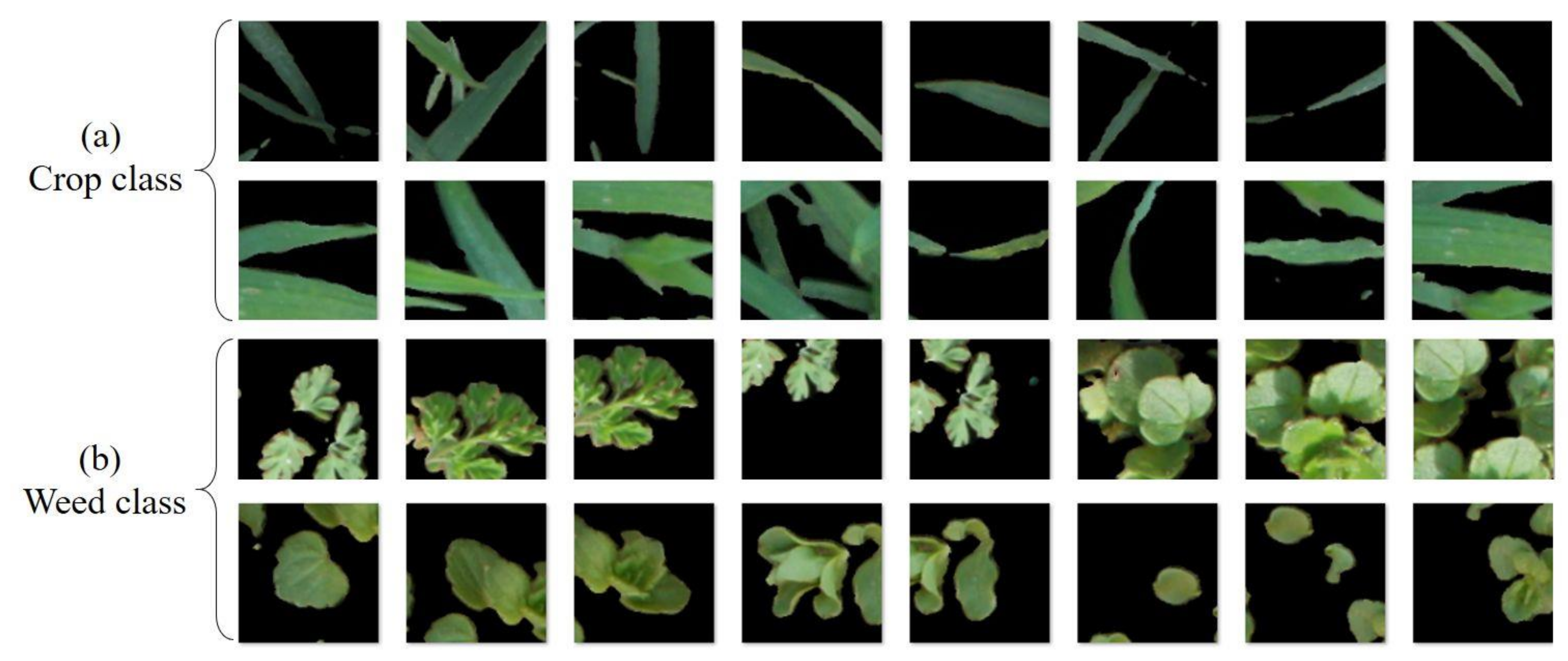

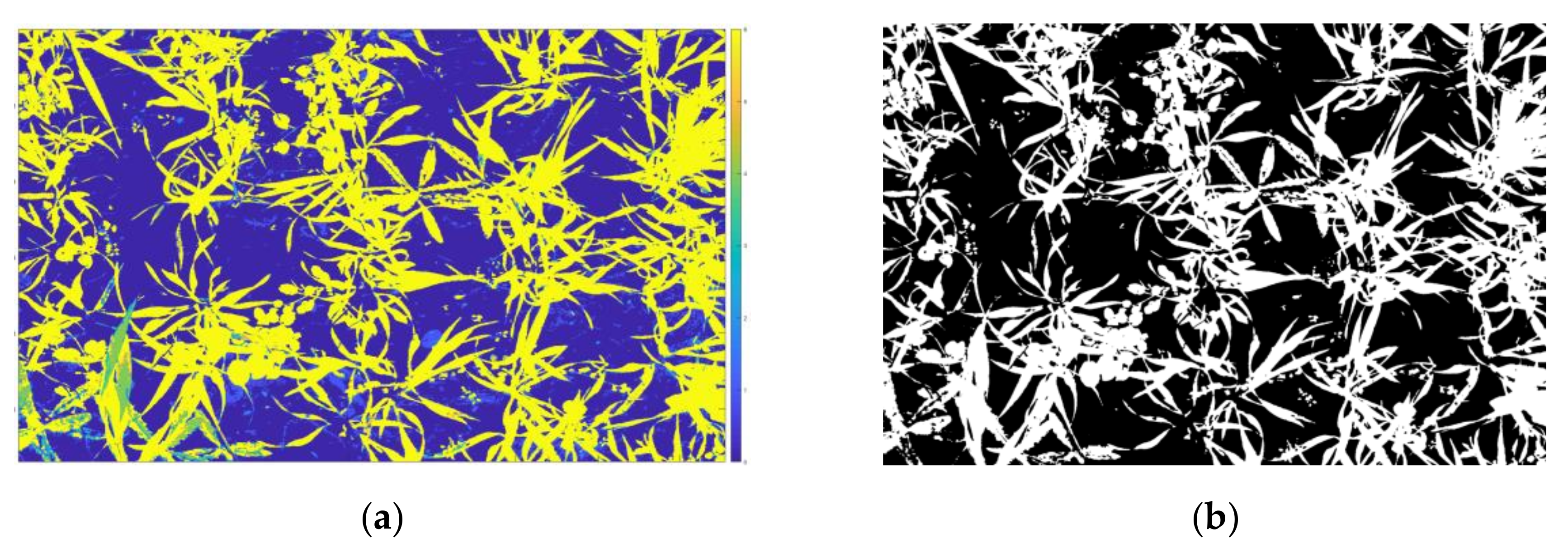

2.2. Image Processing

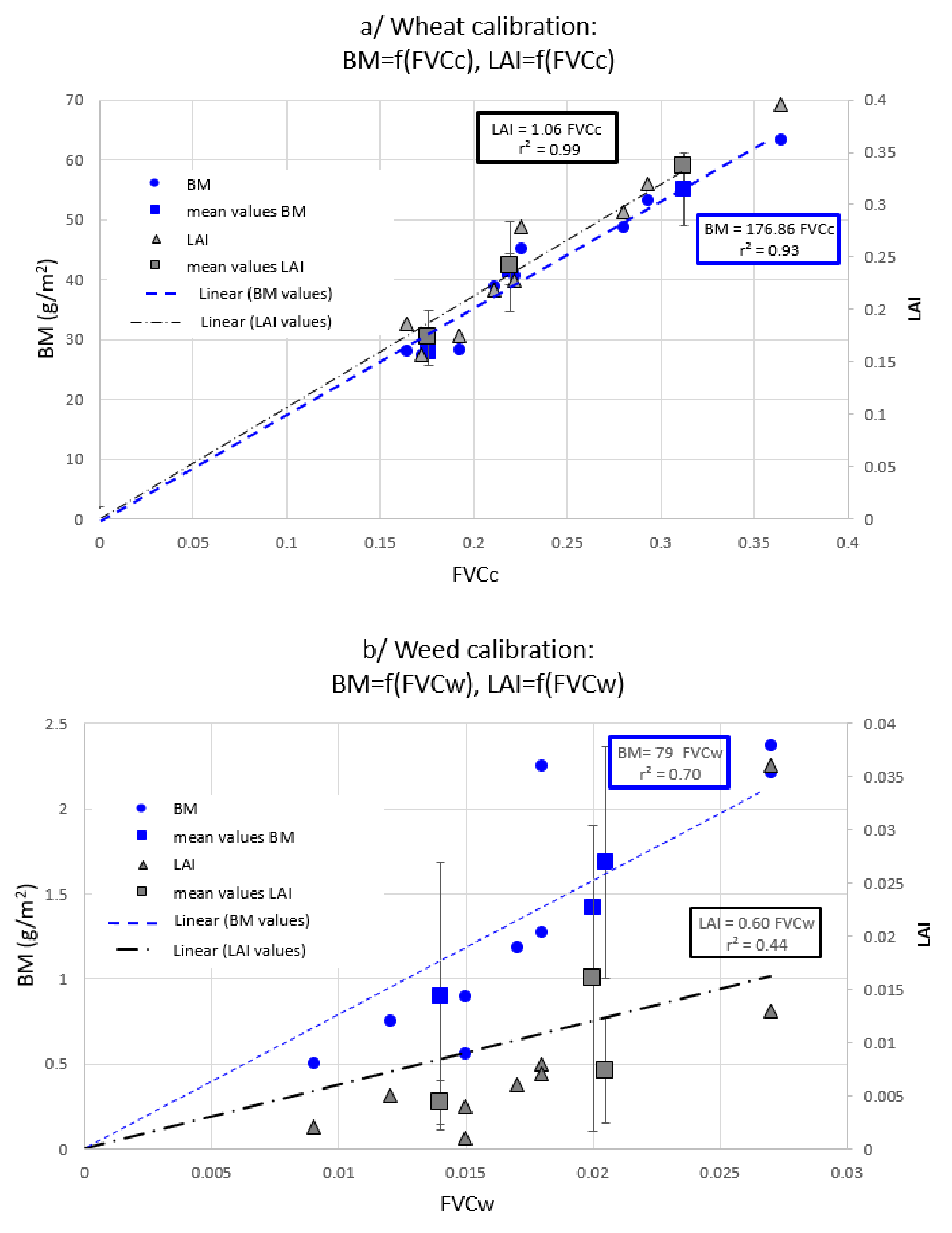

2.3. Two Non-Destructive Indicators for a Crop-Weed Competition

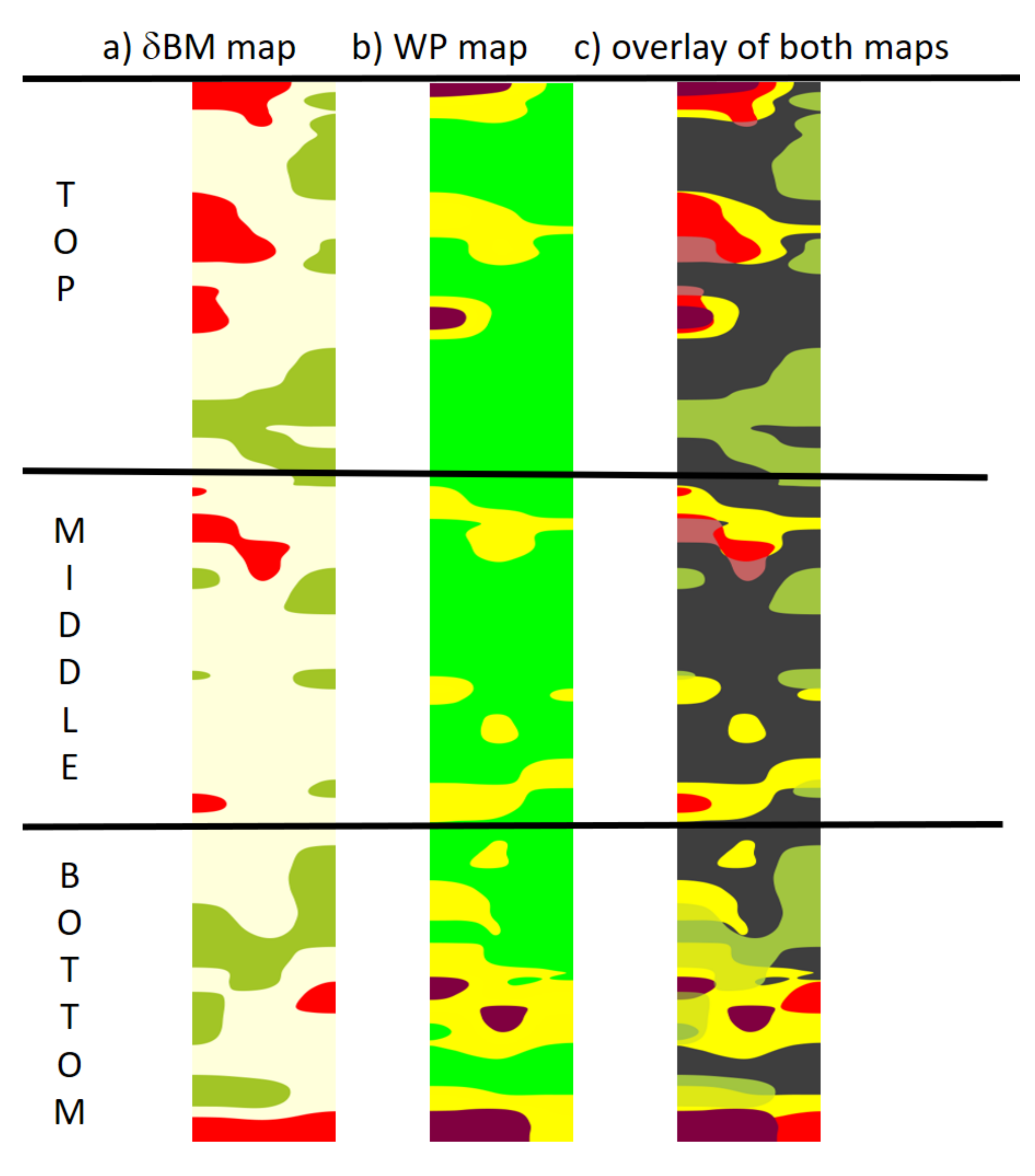

- The weed pressure (WP) is expressed as a percentage and defined as

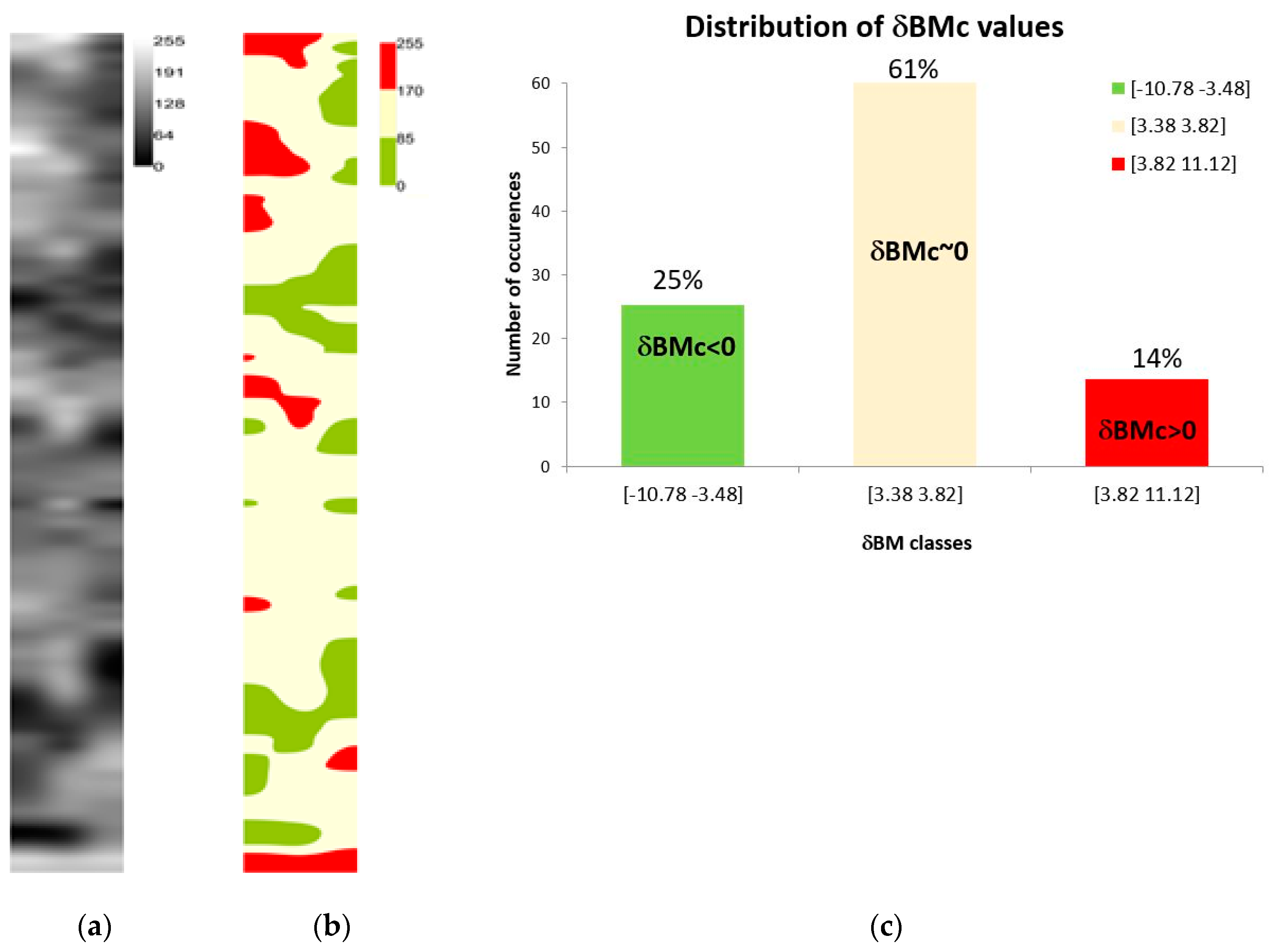

- Evaluation of the local wheat above-ground biomass production: δBMc
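The first indicator can be illustrated with a minimal sketch. It assumes the crop/weed map is available as a 2-D grid of integer labels; the encoding (0 = soil, 1 = crop, 2 = weed) and the function names are hypothetical and chosen only for illustration. Following the definitions in the Abbreviations table, FVCc and FVCw are pixel fractions and WP is their ratio, expressed as a percentage. Plain Python lists keep the sketch dependency-free.

```python
SOIL, CROP, WEED = 0, 1, 2  # hypothetical label encoding, not taken from the paper


def fractional_cover(label_map, label):
    """Fraction of pixels in a 2-D label map that carry the given label."""
    total = sum(len(row) for row in label_map)
    hits = sum(row.count(label) for row in label_map)
    return hits / total


def weed_pressure(label_map):
    """WP = FVCw / FVCc, expressed as a percentage."""
    fvc_c = fractional_cover(label_map, CROP)
    fvc_w = fractional_cover(label_map, WEED)
    if fvc_c == 0:
        raise ValueError("WP is undefined where no crop pixel is present")
    return 100.0 * fvc_w / fvc_c


# Toy 4 x 4 map: 5 crop pixels, 1 weed pixel, 10 soil pixels.
demo_map = [
    [0, 1, 1, 0],
    [0, 1, 2, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

print(f"FVCc = {fractional_cover(demo_map, CROP):.4f}")
print(f"FVCw = {fractional_cover(demo_map, WEED):.4f}")
print(f"WP   = {weed_pressure(demo_map):.1f} %")
```

Because WP is a ratio, it is undefined where no crop pixel is present, so the sketch raises an error in that case rather than returning an arbitrary value.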

3. Results

3.1. Crop/Weed Map from SVM Classifier and Classification Performance

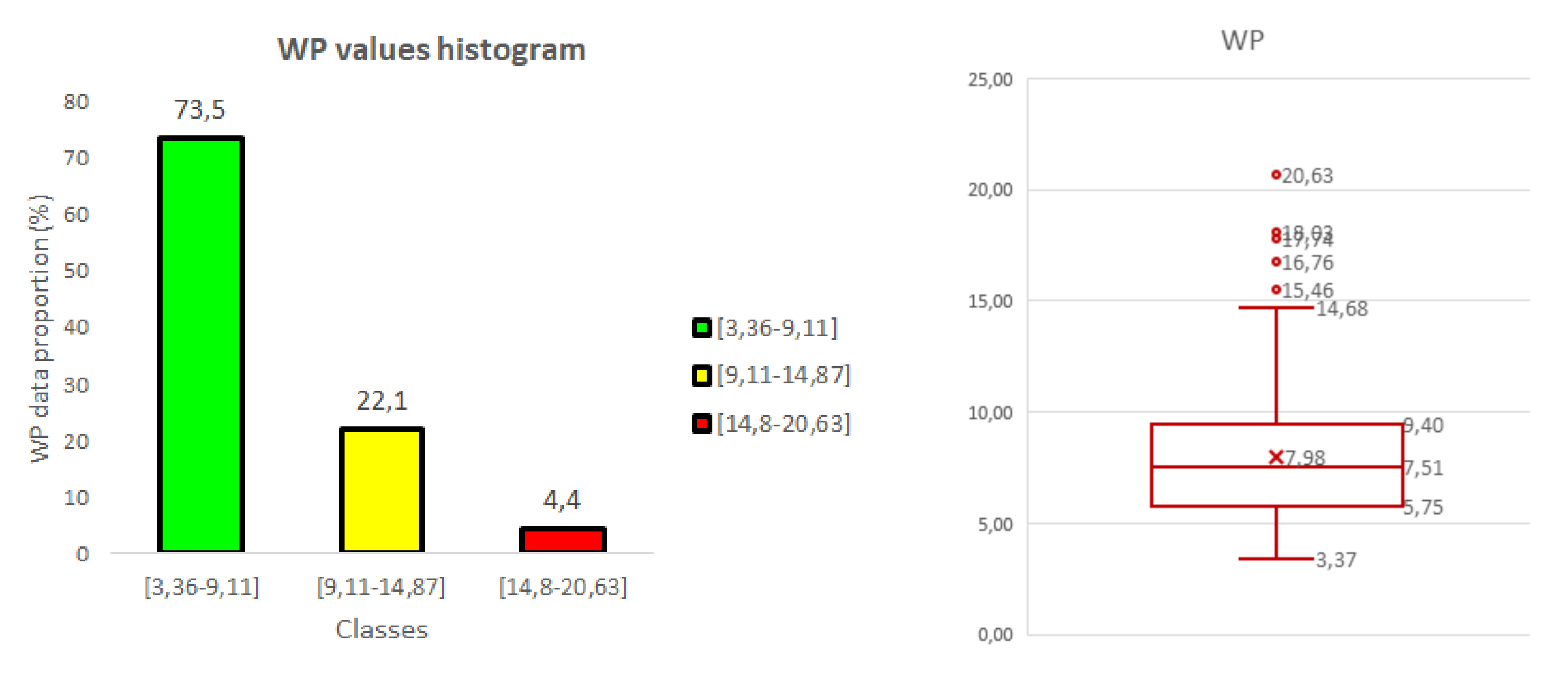

3.2. Weed Pressure (WP)

3.3. A Non-Destructive Indicator of Wheat Crop Growth: δBMc

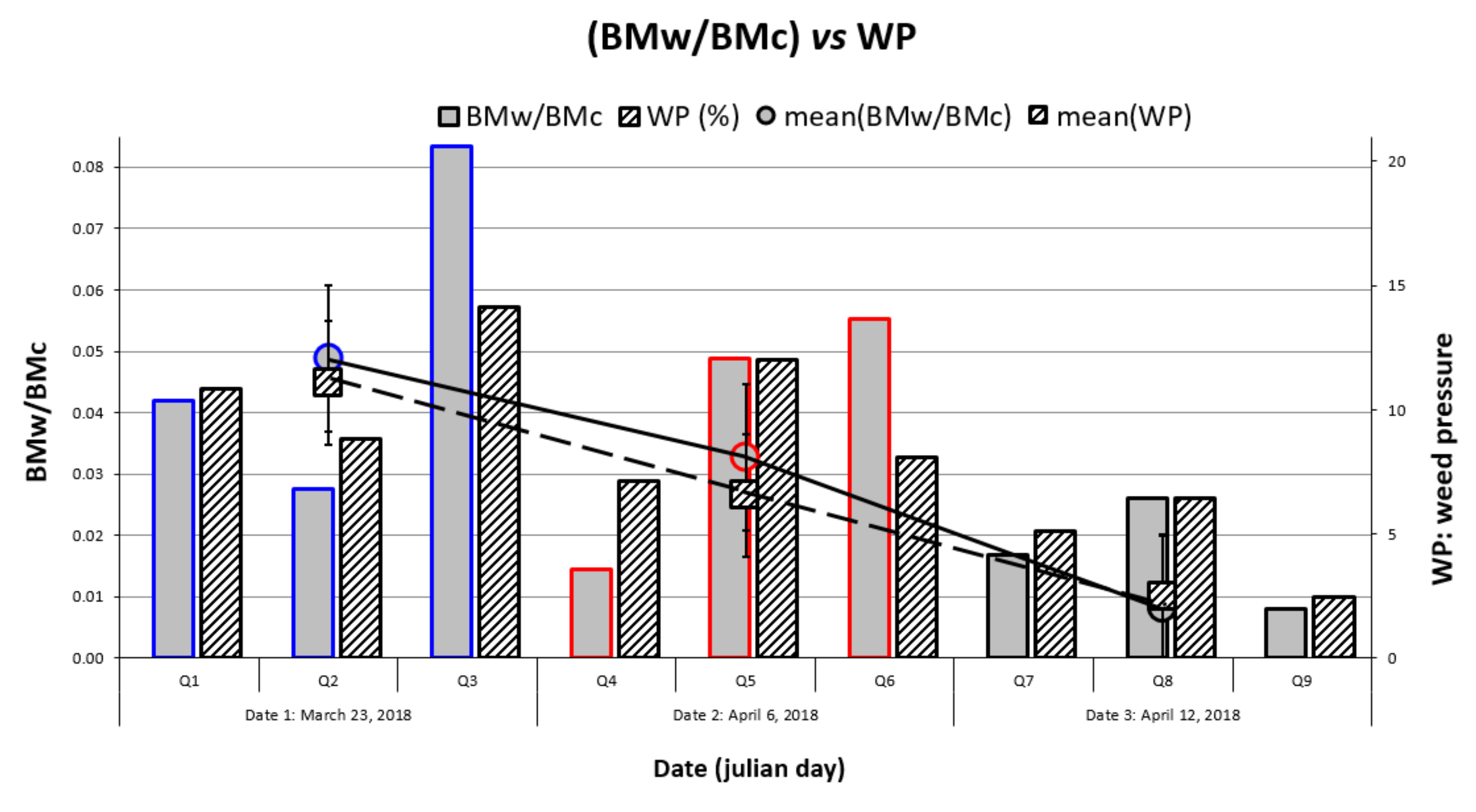

3.4. Comparison of δBMc and WP Maps

4. Discussion

4.1. Relationship between Wheat Stress and Weed Pressure

4.2. Temporal Evolution of Weed Harmfulness

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Description |
|---|---|
| RGB image | Red Green Blue image: visible image. |
| LAI | The Leaf Area Index (LAI, expressed in m2.m−2) is defined as the total area of the upper surfaces of the leaves contained in a volume above one square metre of soil. It is determined destructively using a planimeter. It is a key variable in physiological and functional plant models and in large-scale remote sensing models. |
| Above-ground BM | The dry matter biomass of aerial plant parts (BM, expressed in g.m−2) is obtained by weighing plants after oven drying at 80 °C for 48 h. It is a key parameter for vegetation growth models, playing a major role in photosynthesis and ecosystem functioning. |
| BMw/BMc | The ratio between weed and crop biomass, derived from destructive measurements, is one of the closest indicators to the concept of direct primary harmfulness. |
| FVC, FVCc, FVCw | Fractional Vegetation Cover is a parameter derived from the image. It corresponds to a vertical projection of plant foliar area and is the ratio of the number of vegetation pixels to the total number of pixels in the image. FVCc and FVCw are the fractions of the soil covered by ‘crop’ and ‘weed’ type vegetation, respectively. Capturing vertical images allows the FVC to be compared with the LAI and BM at early plant growth stages. |
| WP indicator | Weed Pressure, defined as the FVCw/FVCc ratio. It is derived from image parameters and represents the balance of power between crop and weeds. |
| δBMc indicator | It is defined as the difference between , the mean value of wheat above-ground biomass in the field, and . It is an evaluation of the local wheat above-ground biomass production. A local excess of wheat above-ground biomass is observed when whereas a stress is observed when |
| SVM-RBF classifier | Support Vector Machine with a Radial Basis Function kernel. It can classify data that are not linearly separable. A two-class classification (crop and weeds) is used, and the classifier input data are the BoVW vectors containing the main features of each class (i.e., crop, weed). |
| SLIC algorithm | Simple Linear Iterative Clustering algorithm. It is a fast and robust algorithm that segments an image by clustering pixels based on their color similarity and proximity in the image. It thus generates superpixels that are more meaningful and easier to analyze. In our study, the superpixels of vegetation (128 px × 128 px) are called ‘thumbnails’ and are used to create the training data set (5000 labelled thumbnails per class). This technique increases the number of labelled images in the training set. |
| SURF algorithm | Speeded-Up Robust Features algorithm. It is a fast descriptor algorithm used for object detection and recognition, and it is invariant to scale and in-plane rotation. SURF descriptors are used to recognize vegetation features. Thousands of features of each stand (crop and weeds) are extracted to construct 500-dimensional BoVW vectors. |
| BoVW model | The Bag of Visual Words (BoVW) model treats image features as words. In image classification, the bag of visual words is a frequency vector that counts how many features of an image are matched to each word of the visual dictionary. The visual dictionary (500 words) is generated by aggregating the extracted features. |
| TP/FP/TN/FN | Parameters derived from the confusion matrix to evaluate the performance of the supervised learning classifier (SVM-RBF). TP: true positive; FP: false positive; TN: true negative; FN: false negative. |
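The BoVW entry above can be made concrete with a short sketch of how a frequency vector is built once a visual dictionary exists. This is an illustration under stated assumptions, not the authors' implementation: the toy descriptors are 2-D and the dictionary has three words, whereas the study uses SURF descriptors (typically 64-dimensional) and a 500-word dictionary. The function names are hypothetical.

```python
def nearest_word(descriptor, dictionary):
    """Index of the dictionary word closest to the descriptor (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(dictionary)), key=lambda k: sq_dist(descriptor, dictionary[k]))


def bovw_histogram(descriptors, dictionary):
    """Normalized frequency vector: share of descriptors assigned to each visual word."""
    counts = [0] * len(dictionary)
    for descriptor in descriptors:
        counts[nearest_word(descriptor, dictionary)] += 1
    n = len(descriptors)
    return [c / n for c in counts] if n else [0.0] * len(dictionary)


# Toy data (hypothetical): three visual words and four descriptors from one thumbnail.
toy_dictionary = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
toy_descriptors = [(0.1, 0.0), (0.9, 0.1), (0.8, -0.1), (0.1, 0.9)]

hist = bovw_histogram(toy_descriptors, toy_dictionary)
print(hist)  # [0.25, 0.5, 0.25]
```

Each thumbnail is thereby reduced to a fixed-length vector regardless of how many features it contains, which is what allows a classifier such as the SVM-RBF to take it as input.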

Appendix A

| Specification | Value |
|---|---|
| Geometric resolution (px) | 4272 × 2848 |
| CMOS sensor size (mm) | 22.2 × 14.8 |
| Megapixels | 12.2 |
| Focal length (mm) | 35 |

| Date (2018) | Quadrat | FVCc (image approach) | FVCw (image approach) | Wheat LAI (destructive) | Wheat BMc (g.m−2) (destructive) | Weed LAI (destructive) | Weed BMw (g.m−2) (destructive) | Weed density (plants.m−2) |
|---|---|---|---|---|---|---|---|---|
| March 23 | Quadrat 1 | 0.157 | 0.034 | 0.187 | 28.2 | 0.006 | 1.184 | 67 |
| March 23 | Quadrat 2 | 0.17 | 0.015 | 0.157 | 27.4 | 0.005 | 0.754 | 23 |
| March 23 | Quadrat 3 | 0.184 | 0.026 | 0.175 | 28.4 | 0.036 | 2.369 | 76 |
| April 6 | Quadrat 4 | 0.211 | 0.014 | 0.218 | 39 | 0.001 | 0.564 | 44 |
| April 6 | Quadrat 5 | 0.225 | 0.027 | 0.279 | 45.2 | 0.013 | 2.211 | 58 |
| April 6 | Quadrat 6 | 0.222 | 0.017 | 0.227 | 10.8 | 0.008 | 2.251 | 35 |
| April 12 | Quadrat 7 | 0.293 | 0.015 | 0.32 | 53.3 | 0.004 | 0.898 | 84 |
| April 12 | Quadrat 8 | 0.28 | 0.018 | 0.293 | 48.7 | 0.007 | 1.272 | 70 |
| April 12 | Quadrat 9 | 0.364 | 0.009 | 0.395 | 63.28 | 0.002 | 0.503 | 61 |
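The quadrat measurements above lend themselves to two quick checks: how closely the image-derived FVCc tracks the destructively measured wheat LAI, and what WP (FVCw/FVCc, in percent) each quadrat yields. The sketch below copies the nine values from the table; the variable and function names are hypothetical, and the Pearson coefficient is only one possible way to quantify the agreement, not necessarily the statistic used by the authors.

```python
from math import sqrt

# Values copied from the quadrat table (Quadrats 1 to 9).
fvc_c = [0.157, 0.17, 0.184, 0.211, 0.225, 0.222, 0.293, 0.28, 0.364]
fvc_w = [0.034, 0.015, 0.026, 0.014, 0.027, 0.017, 0.015, 0.018, 0.009]
lai_c = [0.187, 0.157, 0.175, 0.218, 0.279, 0.227, 0.32, 0.293, 0.395]


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)


# Weed pressure per quadrat, in percent.
wp_percent = [100.0 * w / c for w, c in zip(fvc_w, fvc_c)]

print(f"Pearson r (FVCc vs wheat LAI): {pearson_r(fvc_c, lai_c):.3f}")
for quadrat, wp in enumerate(wp_percent, start=1):
    print(f"Quadrat {quadrat}: WP = {wp:.1f} %")
```

With only nine quadrats the coefficient is indicative at best, and it should not be read as a calibration of FVCc against LAI.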

References

1. Christensen, S.; Heisel, T.; Walter, A.M.; Graglia, E. A decision algorithm for patch spraying. Weed Res. 2003, 43, 276–284.

2. Maes, W.H.; Steppe, K. Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 2019, 24, 152–164.

3. Bàrberi, P. Weed management in organic agriculture: Are we addressing the right issues. Weed Res. 2002, 42, 177–193.

4. Preston, C. Plant biotic stress: Weeds. Encycl. Agric. Food Syst. 2014, 343–348.

5. Winifred, E.; Brenchley, W.E. The effect of weeds upon cereal crops. New Phytol. 1917, 16, 53–76.

6. López-Granados, F. Weed detection for site-specific weed management: Mapping and real-time approaches. Weed Res. 2011, 51, 1–11.

7. Bawden, O.; Kulk, J.; Russell, R.; McCool, C.; English, A.; Dayoub, F.; Perez, T. Robot for weed species plant-specific management. J. Field Robot. 2017, 34, 1179–1199.

8. Pflanz, M.; Nordmeyer, H.; Schirrmann, M. Weed Mapping with UAS Imagery and a Bag of Visual Words Based Image Classifier. Remote Sens. 2018, 10, 1530.

9. Peña, J.M.; Torres-Sánchez, J.; de Castro, A.I.; Kelly, M.; López-Granados, F. Weed mapping in early-season Maize fields using object-based analysis of unmanned aerial vehicle (UAV) images. PLoS ONE 2013, 8, e77151.

10. Louargant, M.; Jones, G.; Faroux, R.; Maillot, T.; Gée, C.; Villette, S. Unsupervised classification algorithm for early weed detection in row-crops by combining spatial and spectral information. Remote Sens. 2018, 10, 761.

11. De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

12. Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

13. López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; De Castro, A.I.; Mesas-Carrascosa, F.J.; Peña, J.-M. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199.

14. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

15. Torres-Sánchez, J.; Peña, J.; de Castro, A.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

16. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

17. Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. In Third Earth Resources Technology Satellite-1 Symposium—Volume I: Technical Presentations; NASA: Washington, DC, USA, 1974; p. 309.

18. Tang, L.; Tian, L.; Steward, B.L. Classification of broadleaf and grass weeds using Gabor wavelets and an artificial neural network. Trans. ASAE 2003, 46, 1247–1254.

19. Gée, C.; Guillemin, J.P.; Bonvarlet, L.; Magnin-Robert, J.B. Weeds classification based on spectral properties. In Proceedings of the 7th International Conference on Precision Agriculture and Others Resources Management, Minneapolis, MN, USA, 24–28 July 2004.

20. Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. Selecting patterns and features for between- and within- crop-row weed mapping using UAV imagery. Expert Syst. Appl. 2016, 47, 85–94.

21. Akbarzadeh, S.; Paap, A.; Ahderom, S.; Apopei, B.; Alameh, K. Plant discrimination by Support Vector Machine classifier based on spectral reflectance. Comput. Electron. Agric. 2018, 148, 250–258.

22. Henrique Yano, I. Weed identification in sugarcane plantation through images taken from remotely piloted aircraft (RPA) and kNN classifier. J. Food Nutr. Sci. 2017, 5, 211.

23. Guerrero, J.; Pajares, G.; Montalvo, M.; Romeo, J.; Guijarro, M. Support vector machines for crop/weeds identification in maize fields. Expert Syst. Appl. 2012, 39, 11149–11155.

24. Garcia-Ruiz, F.J.; Wulfsohn, D.; Rasmussen, J. Sugar beet (Beta vulgaris L.) and thistle (Cirsium arvensis L.) discrimination based on field spectral data. Biosyst. Eng. 2015, 139, 1–15.

25. Pérez-Ortiz, M.; Pena, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. J. 2015, 37, 533–544.

26. Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with unsupervised data labeling for weeds detection on UAV images. Remote Sens. 2018, 10, 1690.

27. Suh, H.K.; Hofstee, J.W.; IJsselmuiden, J.; Van Henten, E.J. Sugar beet and volunteer potato classification using Bag-of-Visual-Words model, Scale-Invariant Feature Transform, or Speeded Up Robust Feature descriptors and crop row information. Biosyst. Eng. 2018, 166, 210–226.

28. Castillejo-González, I.L.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Mesas-Carrascosa, F.J.; López-Granados, F. Evaluation of pixel- and object-based approaches for mapping wild oat (Avena sterilis) weed patches in wheat fields using QuickBird imagery for site-specific management. Eur. J. Agron. 2014, 59, 57–66.

29. Baio, F.H.R.; Neves, D.C.; Souza, H.B.; Leal, A.J.F.; Leite, R.C.; Molin, J.P.; Silva, S.P. Variable rate spraying application on cotton using an electronic flow controller. Precis. Agric. 2018, 19, 912–928.

30. Pandey, P.; Irulappan, V.; Bagavathiannan, M.V.; Senthil-Kumar, M. Impact of combined abiotic and biotic stresses on plant growth and avenues for crop improvement by exploiting physio-morphological Traits. Front. Plant Sci. 2017, 8, 537.

31. Buhler, D.D. Development of Alternative Weed Management Strategies. J. Prod. Agric. 1996, 9, 501–505.

32. Van Evert, F.K.; Fountas, S.; Jakovetic, D.; Crnojrvic, V.; Travlos, I.; Kempenaar, C. Big Data for weed control and crop protection. Weed Res. 2017, 57, 218–233.

33. Chason, J.W.; Baldocchi, D.D.; Huston, M.A. A comparison of direct and indirect methods for estimating forest canopy leaf area. Agric. For. Meteorol. 1991, 57, 107–128.

34. Fuentes, S.; Palmer, A.R.; Taylor, D.; Zeppel, M.; Whitley, R.; Eamus, D. An automated procedure for estimating the leaf area index (LAI) of woodland ecosystems using digital imagery, MATLAB programming and its application to an examination of the relationship between remotely sensed and field measurements of LAI. Funct. Plant. Biol. 2008, 35, 1070–1079.

35. Pekin, B.; Macfarlane, C. Measurement of crown cover and leaf area index using digital cover photography and its application to remote sensing. Remote Sens. 2009, 1, 1298–1320.

36. Colbach, N.; Cordeau, S. Reduced herbicide use does not increase crop yield loss if it is compensated by alternative preventive and curative measures. Eur. J. Agron. 2018, 94, 67–78.

37. Lotz, L.A.P.; Kropff, M.J.; Wallinga, J.; Bos, H.J.; Groeneveld, R.M.W. Techniques to estimate relative leaf area and cover of weeds in crops for yield loss prediction. Weed Res. 1994, 34, 167–175.

38. Rasmussen, J.; Norremark, M.; Bibby, B.M. Assessment of leaf cover and crop soil cover in weed harrowing research using digital images. Weed Res. 2017, 47, 199–310.

39. Casadesús, J.; Villegas, D. Conventional digital cameras as a tool for assessing leaf area index and biomass for cereal breeding. J. Integr. Plant. Biol. 2014, 56, 7–14.

40. Huete, A.R. A soil vegetation adjusted index (SAVI). Int J. Remote Sens. 1988, 25, 295–309.

41. Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173.

42. Carlson, T.N.; Ripley, D.A. On the relation between NDVI, fractional vegetation cover, and leaf area index. Remote Sens. Environ. 1997, 62, 241–252.

43. Royo, C.; Villegas, D. Field Measurements of Canopy Spectra for Biomass Assessment of Small-Grain Cereals. In Production and Usage; Matovic, D., Ed.; Biomass: Rijeka, Croatia, 2011.

44. Casadesús, J.; Villegas, D. Simple digital photography for assessing biomass and leaf area index in cereals. Bio Protoc. 2015, 5, e1488.

45. Beniaich, A.; Naves Silva, M.L.; Pomar Avalos, F.A.; de Duarte Menezes, M.; Moreira Cândido, B. Determination of vegetation cover index under different soil management systems of cover plants by using an unmanned aerial vehicle with an onboard digital photographic camera. Semina Ciencias Agrar. 2019, 40, 49–66.

46. Guo, W.; Rage, U.K.; Ninomiya, S. Illumination invariant segmentation of vegetation for time series wheat images based on decision tree model. Comput. Electron. Agric. 2013, 96, 58–66.

47. Yang, W.; Wang, S.; Zhao, X.; Zhang, J.; Feng, J. Greenness identification based on HSV decision tree. Inf. Process. Agric. 2015, 149–160.

48. Burgos-Artizzu, X.P.; Ribeiro, A.; Guijarro, M.; Pajares, G. Real-time image processing for crop/weed discrimination in maize fields. Comput. Electron. Agric. 2011, 75, 337–346.

49. Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the Advanced Intelligent Mechatronics, Kobe, Japan, 20–24 July 2003; pp. 1079–1083.

50. Marchant, J.A.; Onyango, C.M. Shadow invariant classification for scenes illuminated by daylight. J. Opt. Soc. Am. 2000, 17, 1952–1996.

51. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Und. 2008, 10, 346–359.

52. Csurka, G.; Dance, C.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Workshop on Statistical Learning in Computer Vision; ECCV: Prague, Czech Republic, 2004; pp. 1–22.

53. Ma, J.; Ma, Z.; Kang, B.; Lu, K. A Method of Protein Model Classification and Retrieval Using Bag-of-Visual-Features. Comput. Math. Methods Med. 2014, 2014, 269394.

54. Vapnik, V.; Chervonenkis, A. On the uniform convergence of relative frequencies of events to their probabilities. Probab. Appl. 1971, 16, 264–280.

55. Ahmed, F.; Al-Mamun, H.A.; Bari, A.S.M.H.; Hossain, E.; Kwan, P. Classification of crops and weeds from digital images: A support vector machine approach. Crop. Prot. 2012, 40, 98–104.

56. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

57. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 27–46.

58. Merienne, J.; Larmure, A.; Gée, C. Digital tools for a biomass prediction from a plant-growth model. Application to a Weed Control in Wheat Crop. In Proceedings of the European Conference on Precision Agriculture, Montpellier, France, 8–12 July 2019; Stafford, J.V., Ed.; Wageningen Academic: Montpellier, France, 2019; pp. 597–603.

59. Cousens, R. Theory and reality of weed control thresholds. Plant. Prot. Quart. 1987, 2, 13–20.

60. Gherekhloo, J.; Noroozi, S.; Mazaheri, D.; Ghanbari, A.; Ghannadha, M.R.; Vidal, R.A.; de Prado, R.V. Multispecies weed competition and their economic threshold on the wheat crop. Planta Daninha 2017, 28, 239–246.

61. O’Donovan, J.T. Quack grass (Elytrigia repens) interference in Canola (Brassica compestris). Weed Sci. 1991, 39, 397–401.

62. O’Donovan, J.T.; Blackhaw, R.E. Effect of volunteer barley (Hordeum vulgare L.) interference on field pea (Pisum sativum L.) yield and profitability. Weed Sci. 1997, 42, 249–255.

63. Wells, G.J. Annual weed competition in wheat crops: The effect of weed density and applied nitrogen. Weed Res. 1979, 19, 185–191.

64. Jeuffroy, M.H.; Recous, S. Azodyn: A simple model simulating the date of nitrogen deficiency for decision support in wheat fertilization. Eur. J. Agron. 1999, 10, 129–144.

65. Aase, J.K. Relationship between leaf area and dry matter in winter wheat. Agron. J. 1978, 70, 563–565.

66. Golzarian, M.R.; Frick, R.A.; Rajendran, K.; Berger, B.; Roy, S.; Tester, M.; Lun, S.D. Accurate inference of shoot biomass from high-throughput images of cereal plants. Plant. Methods 2011, 7, 2.

67. Neilson, E.H.; Edwards, A.M.; Blomstedt, C.K.; Berger, B.; Moler, B.M.; Gleadow, R.M. Utilization of a high-throughput shoot imaging system to examine the dynamic phenotypic responses of a C4 cereal crop plant to nitrogen and water deficiency over time. J. Exp. Bot. 2015, 66, 1817–1832.

68. Chen, D.; Shi, R.; Pape, J.M.; Neumann, K.; Arend, D.; Graner, A.; Chen, M.; Klukas, C. Predicting plant biomass accumulation from image-derived parameters. Giga Sci. 2018, 7, 1–13.

69. Valantin-Morison, M.; Guichard, L.; Jeuffroy, M.H. Comment maîtriser la flore adventice des grandes cultures à travers les éléments de l’itinéraire technique. Innov. Agron. 2008, 3, 27–41.

70. Welbank, P.J. A comparison of competitive effects of some common weed species. Ann. Appl. Biol. 1963, 51, 107–125.

71. Gansberger, M.; Montgomery, L.F.R.; Liebhard, P. Botanical characteristics, crop management and potential of Silphium perfoliatum L. as a renewable resource for biogas production: A review. Ind. Crop. Prod. 2015, 63, 362–372.

72. Colbach, N.; Collard, A.; Guyot, S.H.M.; Mézière, D.; Munier-Jolain, N. Assessing innovative sowing patterns for integrated weed management with a 3D crop: Weed competition model. Eur. J. Agron. 2014, 53, 74–89.

73. Kropff, M.J.; Spitters, C.J.T. A simple model of crop loss by weed competition from early observations on relative leaf area of weeds. Weed Res. 1991, 31, 97–105.

74. Thompson, J.F.; Stafford, J.V.; Miller, P.C.H. Potential for automatic weed detection and selective herbicide application. Crop. Prot. 1991, 10, 254–259.

75. Lotz, L.A.P.; Kropff, M.J.; Wallinga, H.J.B. Prediction of yield loss based on relative leaf cover of weeds. In Proceedings of the First International Weed Control Congress, Melbourne, Australia, 17–21 February 1993; pp. 290–292.

76. Lutman, P.J.W. Prediction of the competitive effects of weeds on the yields of several spring-sown arable crops. In Proceedings of the IXeme Colloque International sur la Biologie des Mauvaises, ANPP, Paris, France, 16–18 September 1992; pp. 337–345.

77. Christensen, S. Crop weed competition and herbicide performance in cereal species and varieties. Weed Res. 1994, 34, 29–36.

78. Caussanel, J.P. Nuisibilité et seuils de nuisibilité des mauvaises herbes dans une culture annuelle: Situation de concurrence bispécifique. Agron. J. 1989, 9, 219–240.

79. Florez Fernandez, J.A.; Fischer, A.J.; Ramirez, H.; Duque, M.C. Predicting Rice Yield Losses Caused by Multispecies Weed Competition. Agron. J. 1999, 1, 87–92.

80. Milberg, P.; Hallgren, E. Yield loss due to weeds in cereals and its large-scale variability in Sweden. Field Crop. Res. 2004, 86, 199–209.

| Date | Zadoks Growth Stage and Development Phase (3-Leaf Stage) | RGB Images | Destructive Measurements (Plant Identification, LAI and Dry Biomass for Crop and Weed) | Comments |
|---|---|---|---|---|
| 23 March 2018 | GS22: leaf and tiller development (middle-tillering) | 254 images on 3 quadrats (Q1, Q2, Q3) | 3 quadrats: Q1, Q2, Q3 | Critical period for weed-crop competition |
| 6 April 2018 | GS24: leaf and tiller development (end-tillering) | 3 images on 3 quadrats (Q4, Q5, Q6) | 3 quadrats: Q4, Q5, Q6 | |
| 12 April 2018 | GS30: stem extension (stem-elongation) | 3 images on 3 quadrats (Q7, Q8, Q9) | 3 quadrats: Q7, Q8, Q9 | Good nutrient and water supply determine yield potential |

| Vegetation Index | Formula |
|---|---|
| ExG: Excess Green [14,45] | ExG = |
| MExG: Modified Excess Green [47,48] | MExG = |
| ExR: Excess Red [16,47] | ExR = |
| CIVE: color index of vegetation extraction [47,49] | CIVE = |
| VEG: vegetative index [45,50] | VEG = |
| HSVDT: HSV (Hue Saturation Value) decision tree [47] | Set the hue value to zero if it is less than 50 or greater than 150: H((H < 50)\|(H > 150)) = 0; then use T = 49 as a threshold |
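To illustrate how a color index separates vegetation from soil, the sketch below uses the form of Excess Green most commonly cited in the literature, ExG = 2g − r − b, where r, g and b are the chromatic coordinates (each channel divided by R + G + B). This is an assumption: the formula column of the table above is not preserved in this text, so the exact expression used by the authors may differ. The threshold value, the function names and the toy pixels are likewise hypothetical.

```python
def excess_green(red, green, blue):
    """ExG = 2g - r - b on chromatic coordinates (commonly cited form, assumed here)."""
    total = red + green + blue
    if total == 0:
        return 0.0  # black pixel: no color information
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


def vegetation_mask(image, threshold=0.1):
    """True where ExG exceeds the (hypothetical) threshold."""
    return [[excess_green(*pixel) > threshold for pixel in row] for row in image]


def fractional_vegetation_cover(mask):
    """Share of True pixels in a 2-D boolean mask."""
    flat = [value for row in mask for value in row]
    return sum(flat) / len(flat)


# Toy 2 x 2 RGB image: two greenish pixels, one brownish, one grey.
demo_image = [
    [(60, 140, 50), (120, 100, 80)],
    [(90, 90, 90), (40, 160, 30)],
]

mask = vegetation_mask(demo_image)
print(mask)
print(f"FVC = {fractional_vegetation_cover(mask):.2f}")
```

In practice the threshold is usually chosen per image, for example with an automatic method, rather than fixed in advance.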

| Data | Class | Training Thumbnails Subset (85%) | Test Subset (15%) | Total |
|---|---|---|---|---|
| 9 images | Crop | 3264 | 577 | 3841 |
| 9 images | Weed | 3264 | 577 | 3841 |
| 18 images | All | 6528 | 1154 | 7682 |
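The subset sizes in the table above are consistent with an 85%/15% split applied per class. A minimal sketch of such a split follows; the shuffling, the seed, the rounding rule (truncation) and the thumbnail identifiers are assumptions made for illustration, since the source does not describe how the thumbnails were drawn.

```python
import random


def split_thumbnails(items, train_fraction=0.85, seed=0):
    """Shuffle a copy of the items and cut it into training and test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(train_fraction * len(shuffled))  # truncation is an assumed rounding rule
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical identifiers for the 3841 thumbnails of one class.
crop_ids = [f"crop_{i:04d}" for i in range(3841)]
train_ids, test_ids = split_thumbnails(crop_ids)

print(len(train_ids), len(test_ids))  # 3264 577
```

Splitting each class separately keeps the two classes balanced in both subsets, as in the table.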

| Predicted \ Actual | Wheat | Weed | Total |
|---|---|---|---|
| Wheat | 543 | 47 | 590 |
| Weed | 34 | 530 | 564 |
| Total | 577 | 577 | 1154 |
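The usual performance measures follow directly from the confusion matrix above. The sketch below treats wheat as the positive class, which is an assumption made for illustration (the TP/FP/TN/FN assignment would swap if weed were taken as positive). The formulas are the standard definitions of accuracy, precision, recall, F1 score and Cohen's kappa.

```python
# Counts read from the confusion matrix (rows: predicted, columns: actual).
tp, fp = 543, 47   # predicted wheat: actually wheat / actually weed
fn, tn = 34, 530   # predicted weed:  actually wheat / actually weed

total = tp + fp + fn + tn

accuracy = (tp + tn) / total
precision = tp / (tp + fp)   # share of predicted wheat that is wheat
recall = tp / (tp + fn)      # share of actual wheat that is found
f1 = 2 * precision * recall / (precision + recall)

# Cohen's kappa: agreement corrected for the agreement expected by chance.
expected_agreement = (
    (tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)
) / total ** 2
kappa = (accuracy - expected_agreement) / (1 - expected_agreement)

print(f"accuracy  = {accuracy:.4f}")
print(f"precision = {precision:.4f}")
print(f"recall    = {recall:.4f}")
print(f"F1        = {f1:.4f}")
print(f"kappa     = {kappa:.4f}")
```

Because the test subset is balanced (577 thumbnails per class), the chance agreement is exactly 0.5 and kappa reduces to 2 × accuracy − 1.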

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gée, C.; Denimal, E. RGB Image-Derived Indicators for Spatial Assessment of the Impact of Broadleaf Weeds on Wheat Biomass. Remote Sens. 2020, 12, 2982. https://doi.org/10.3390/rs12182982
