Optimal Temporal Window Selection for Winter Wheat and Rapeseed Mapping with Sentinel-2 Images: A Case Study of Zhongxiang in China

Abstract

1. Introduction

- (1) The variable importance (VI) is calculated within the random forest (RF) framework using the mean decrease in accuracy (MDA) method, in order to assess the importance of the spectral-temporal features at each image acquisition time.

- (2) An evaluation method is proposed that jointly considers spatial accuracy and statistical accuracy, ensuring that the final mapping results are of practical value.

- (3) Zhongxiang City, whose cloudy and rainy winter weather is typical of south-central China, is taken as the study area. It provides a reference for selecting the optimal temporal window for crop mapping when winter remote sensing imagery in south-central China is limited.
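
The MDA measure in contribution (1) can be illustrated with a short sketch. It is a simplified, model-agnostic variant. Breiman's random forest permutes each variable in the out-of-bag samples of every tree. This version instead permutes one feature column of a held-out set and measures the drop in accuracy of any `predict` function. Summing feature-level scores by acquisition date is an assumption about the aggregation. The names `mda_importance_by_date` and `feature_dates`, and the toy classifier, are hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted label equals the reference label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mda_importance_by_date(
    predict: Callable[[List[List[float]]], List[int]],
    X: List[List[float]],
    y: List[int],
    feature_dates: List[str],
    n_repeats: int = 10,
    seed: int = 0,
) -> Dict[str, float]:
    """Permutation-based mean decrease in accuracy (MDA), summed per acquisition date.

    feature_dates[j] is the date mark (e.g. "A" to "F") of feature column j.
    """
    rng = random.Random(seed)
    baseline = accuracy(y, predict(X))
    per_date: Dict[str, float] = defaultdict(float)
    for j, date in enumerate(feature_dates):
        total_drop = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total_drop += baseline - accuracy(y, predict(X_perm))
        per_date[date] += total_drop / n_repeats  # mean drop for feature j
    return dict(per_date)


# Toy usage: a stand-in classifier that only looks at column 0 (date "D").
def predict(rows: List[List[float]]) -> List[int]:
    return [1 if r[0] > 0.5 else 0 for r in rows]


X = [[0.1, 0.3], [0.9, 0.2], [0.2, 0.8], [0.8, 0.5], [0.3, 0.1], [0.7, 0.9]]
y = [0, 1, 0, 1, 0, 1]
scores = mda_importance_by_date(predict, X, y, feature_dates=["D", "A"])
```

With a real RF, `predict` would be the trained forest's prediction method, and each row would hold the spectral features of every date in the combination. A larger mean drop for a date suggests that its features carry more of the classification signal.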

2. Study Area and Datasets

2.1. Study Area

2.2. Reference Data

2.3. Sentinel-2 Data Collection and Preprocessing

3. Method

3.1. Random Forest Classifier

3.2. Selecting the Optimal Temporal Window

3.3. Validation

3.3.1. Sample-Based Accuracy Assessment

3.3.2. Statistics-Based Area Accuracy Assessment

4. Results and Analysis

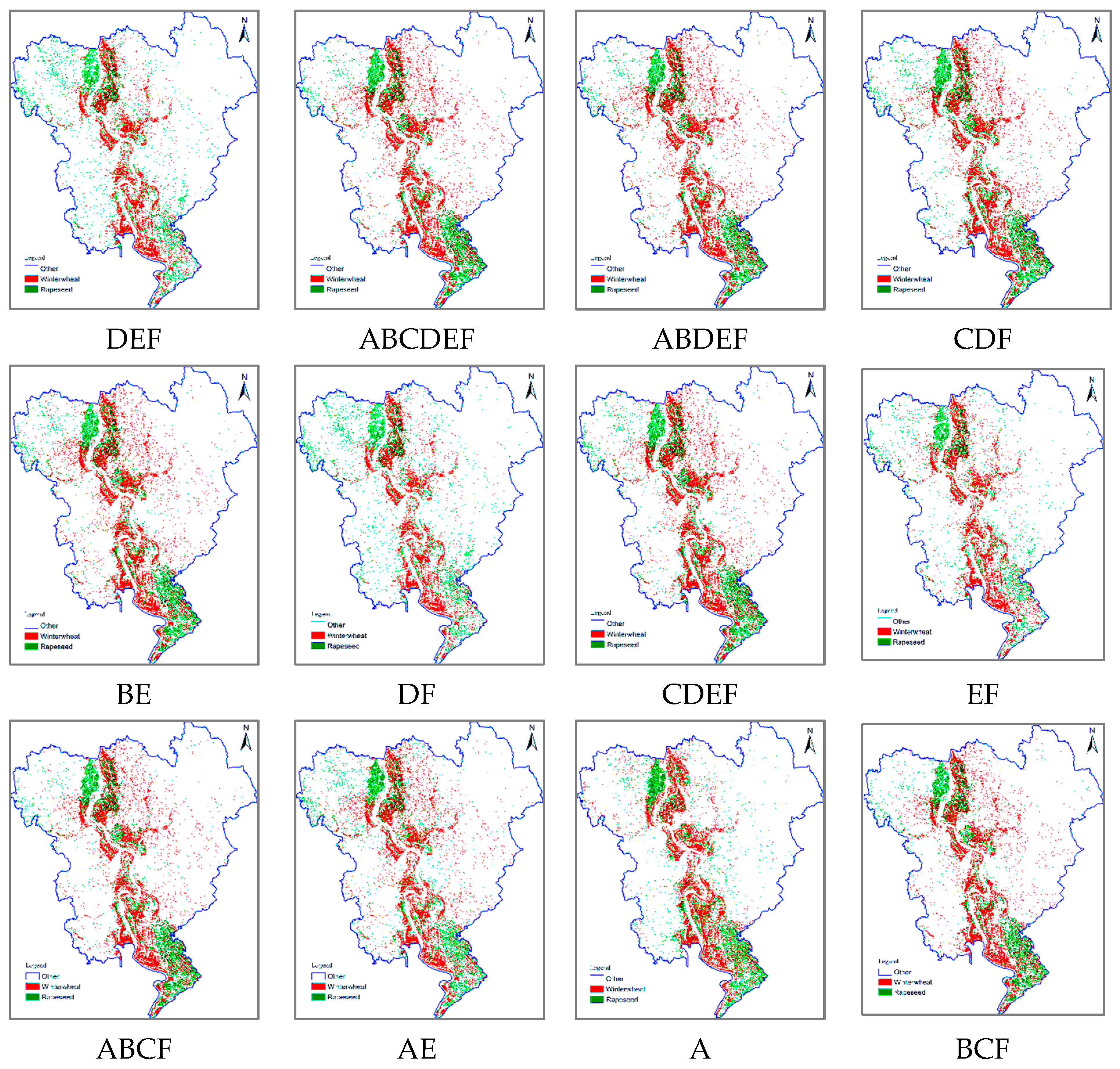

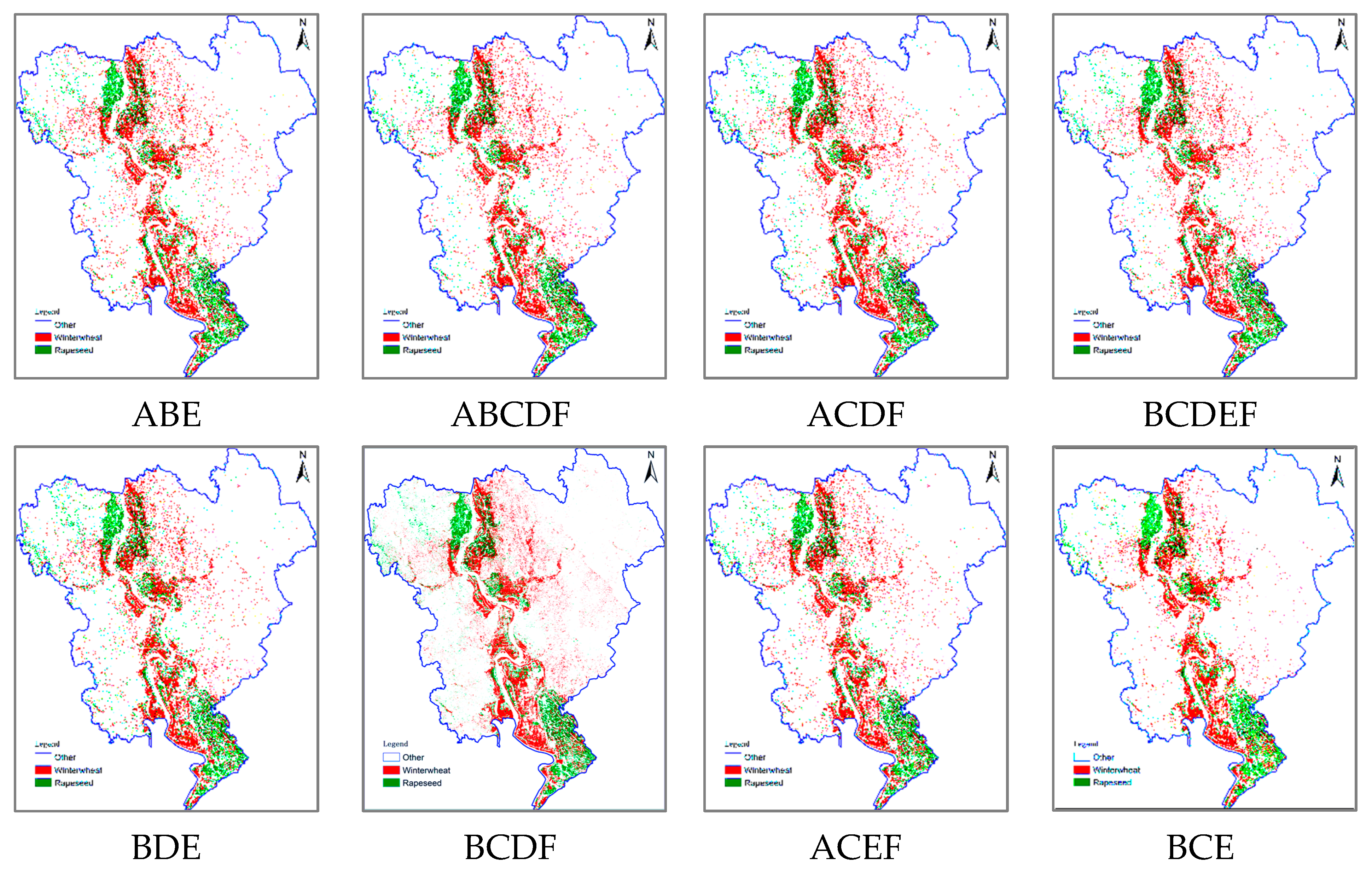

4.1. Classification Results

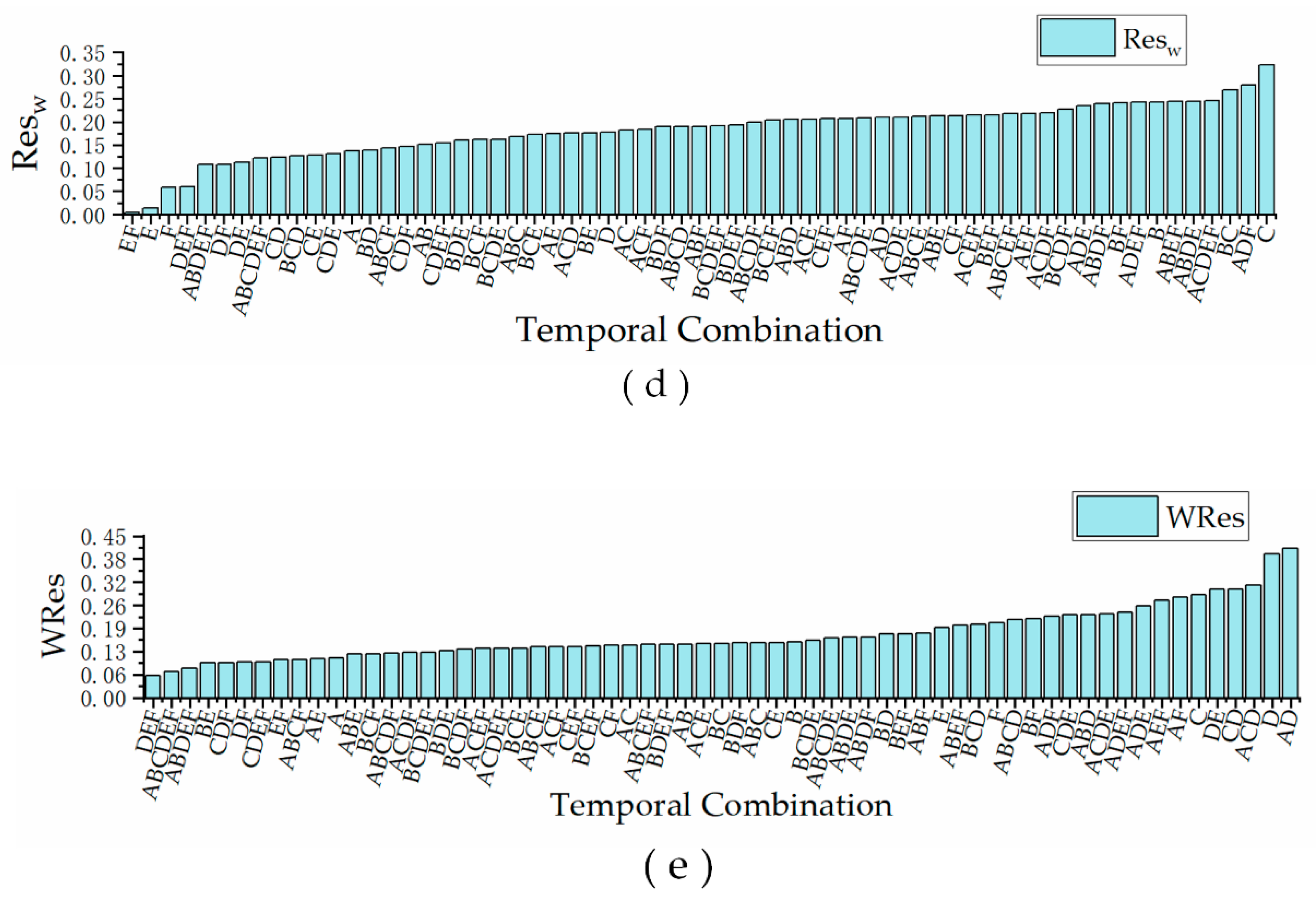

4.2. Optimal Temporal Window Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Class Name | Training | Validation |
|---|---|---|
| Winter wheat | 1919 | 720 |
| Rapeseed | 1395 | 466 |
| Others | 4883 | 1890 |
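
The OA and Kappa columns in the ranking table are the usual confusion-matrix statistics for a sample-based assessment. The sketch below is a minimal example. It assumes the validation samples listed above are cross-tabulated into a matrix whose rows are reference classes (Winter wheat, Rapeseed, Others) and whose columns are predicted classes. The function name is hypothetical, and the example matrices are toy values, not results from this study.

```python
from typing import List, Tuple


def oa_and_kappa(cm: List[List[int]]) -> Tuple[float, float]:
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    cm[i][j] counts validation samples of reference class i predicted as class j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(k)) / n  # overall accuracy (OA)
    row_totals = [sum(cm[i]) for i in range(k)]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    # Agreement expected by chance from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Toy three-class matrix (not from this study).
oa, kappa = oa_and_kappa([[8, 1, 1], [1, 8, 1], [1, 1, 8]])
```

Kappa discounts the agreement that the class proportions alone would produce. This is why a combination can have a high OA but a noticeably lower Kappa.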

| Crop Type | 2017 | 2016 |
|---|---|---|
| Winter wheat | 37,184.00 ha | 36,184.00 ha |
| Rapeseed | 29,495.26 ha | 30,001.53 ha |
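
For the statistics-based area assessment, a mapped crop area can be compared with the official statistics. The sketch below rests on three assumptions, none of which is stated in this excerpt:

- the 2017 figures are the reference;
- the error is the absolute relative difference;
- the map uses a 10 m grid, so one pixel covers 0.01 ha.

The helper names and the mapped areas in the example are hypothetical.

```python
from typing import Dict

# Statistical areas (ha) from the 2017 column of the table above.
STATISTICAL_AREA_2017_HA: Dict[str, float] = {
    "Winter wheat": 37_184.00,
    "Rapeseed": 29_495.26,
}


def area_from_pixels(n_pixels: int, pixel_size_m: float = 10.0) -> float:
    """Area in hectares covered by n_pixels square pixels (1 ha = 10,000 m^2)."""
    return n_pixels * pixel_size_m ** 2 / 10_000


def relative_area_errors(
    mapped_ha: Dict[str, float],
    reference_ha: Dict[str, float] = STATISTICAL_AREA_2017_HA,
) -> Dict[str, float]:
    """|mapped - statistical| / statistical for every crop in the reference."""
    return {crop: abs(mapped_ha[crop] - ref) / ref for crop, ref in reference_ha.items()}


# Hypothetical mapped areas, e.g. 4,000,000 wheat pixels on a 10 m grid.
mapped = {"Winter wheat": area_from_pixels(4_000_000), "Rapeseed": 29_495.26}
errors = relative_area_errors(mapped)
```

Converting each class's pixel count with `area_from_pixels` and passing the totals to `relative_area_errors` gives one error per crop. A lower value means the map agrees more closely with the statistics.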

| Image Acquisition Date | Mark |
|---|---|
| 30 October 2017 | A |
| 9 December 2017 | B |
| 24 December 2017 | C |
| 3 April 2018 | D |
| 8 April 2018 | E |
| 18 April 2018 | F |
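
Each row of the ranking table labels one subset of these six dates with their marks. For example, DEF is 3, 8 and 18 April 2018. The sketch below enumerates candidate subsets. Whether the study evaluated all 2^6 - 1 = 63 non-empty subsets or only some of them is an assumption, and the helper names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List

# Acquisition-date marks from the table above.
DATES: Dict[str, str] = {
    "A": "30 October 2017",
    "B": "9 December 2017",
    "C": "24 December 2017",
    "D": "3 April 2018",
    "E": "8 April 2018",
    "F": "18 April 2018",
}


def temporal_combinations(marks: str = "ABCDEF", min_size: int = 1) -> List[str]:
    """Every subset of date marks with at least min_size members, e.g. 'DEF'."""
    subsets: List[str] = []
    for size in range(min_size, len(marks) + 1):
        # combinations() keeps the input order, so labels read like the table's.
        subsets.extend("".join(c) for c in combinations(marks, size))
    return subsets


def describe(combo: str) -> str:
    """Expand a label such as 'DEF' into its acquisition dates."""
    return ", ".join(DATES[m] for m in combo)


all_combos = temporal_combinations()
```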

| Ranking | Temporal Images | OA | Kappa | | | |
|---|---|---|---|---|---|---|
| 1 | DEF | 0.935 | 0.914 | 0.061 | 0.061 | 0.060 |
| 2 | ABCDEF | 0.949 | 0.933 | 0.121 | 0.013 | 0.072 |
| 3 | ABDEF | 0.943 | 0.929 | 0.108 | 0.047 | 0.081 |
| 4 | CDF | 0.942 | 0.923 | 0.147 | 0.037 | 0.097 |
| 5 | BE | 0.935 | 0.915 | 0.176 | 0.002 | 0.097 |
| 6 | DF | 0.934 | 0.914 | 0.108 | 0.087 | 0.098 |
| 7 | CDEF | 0.944 | 0.927 | 0.154 | 0.033 | 0.099 |
| 8 | EF | 0.933 | 0.913 | 0.004 | 0.229 | 0.104 |
| 9 | ABCF | 0.951 | 0.935 | 0.144 | 0.058 | 0.105 |
| 10 | AE | 0.928 | 0.906 | 0.175 | 0.025 | 0.108 |
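
The table is ordered by its last column in ascending order (0.060 to 0.108), not by OA. For example, ABCF has the highest OA (0.951) yet ranks 9th. The sketch below reproduces that ordering from the listed values. The last three columns are unlabelled in this excerpt, so the score is treated as an opaque number. Breaking the tie between CDF and BE (both 0.097) by higher OA is an assumption that happens to match the table.

```python
from typing import List, NamedTuple


class Row(NamedTuple):
    images: str   # date-combination label, e.g. "DEF"
    oa: float     # overall accuracy
    kappa: float  # Cohen's kappa
    score: float  # last (unlabelled) column of the table


def rank(rows: List[Row]) -> List[Row]:
    """Sort by the last column ascending; ties go to the higher OA (assumed rule)."""
    return sorted(rows, key=lambda r: (r.score, -r.oa))


# Values copied from the table above, deliberately listed out of order.
ROWS = [
    Row("AE", 0.928, 0.906, 0.108),
    Row("BE", 0.935, 0.915, 0.097),
    Row("ABCF", 0.951, 0.935, 0.105),
    Row("DEF", 0.935, 0.914, 0.060),
    Row("CDF", 0.942, 0.923, 0.097),
    Row("EF", 0.933, 0.913, 0.104),
    Row("ABDEF", 0.943, 0.929, 0.081),
    Row("DF", 0.934, 0.914, 0.098),
    Row("ABCDEF", 0.949, 0.933, 0.072),
    Row("CDEF", 0.944, 0.927, 0.099),
]

ranked = rank(ROWS)
```

Ranking on the combined score rather than on OA alone is what lets a three-date combination (DEF) come first ahead of the full six-date set.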

| Image Acquisition Date Mark | Frequency of Occurrence |
|---|---|
| A | 3 |
| B | 4 |
| C | 4 |
| D | 6 |
| E | 7 |
| F | 8 |
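
The frequency table counts how often each acquisition date appears in a set of top-ranked combinations. The sketch below shows the counting step only. This excerpt does not specify which ranked list and cutoff the counts were drawn from, so the input list is an assumption and the example uses toy labels.

```python
from collections import Counter
from typing import Dict, Iterable


def mark_frequency(top_combinations: Iterable[str], marks: str = "ABCDEF") -> Dict[str, int]:
    """Count in how many of the given combinations each date mark appears."""
    # set(combo) ensures a mark is counted at most once per combination.
    counts = Counter(m for combo in top_combinations for m in set(combo))
    return {m: counts.get(m, 0) for m in marks}


freq = mark_frequency(["DEF", "DF", "EF"])
```

Dates that recur across many well-ranked combinations, rather than in a single best one, are the more robust candidates for the optimal temporal window.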

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Meng, S.; Zhong, Y.; Luo, C.; Hu, X.; Wang, X.; Huang, S. Optimal Temporal Window Selection for Winter Wheat and Rapeseed Mapping with Sentinel-2 Images: A Case Study of Zhongxiang in China. Remote Sens. 2020, 12, 226. https://doi.org/10.3390/rs12020226