UAV-Based Terrain Modeling under Vegetation in the Chinese Loess Plateau: A Deep Learning and Terrain Correction Ensemble Framework

Abstract

1. Introduction

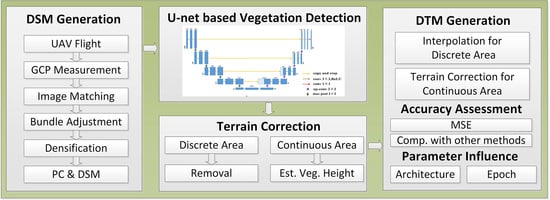

2. Materials and Methods

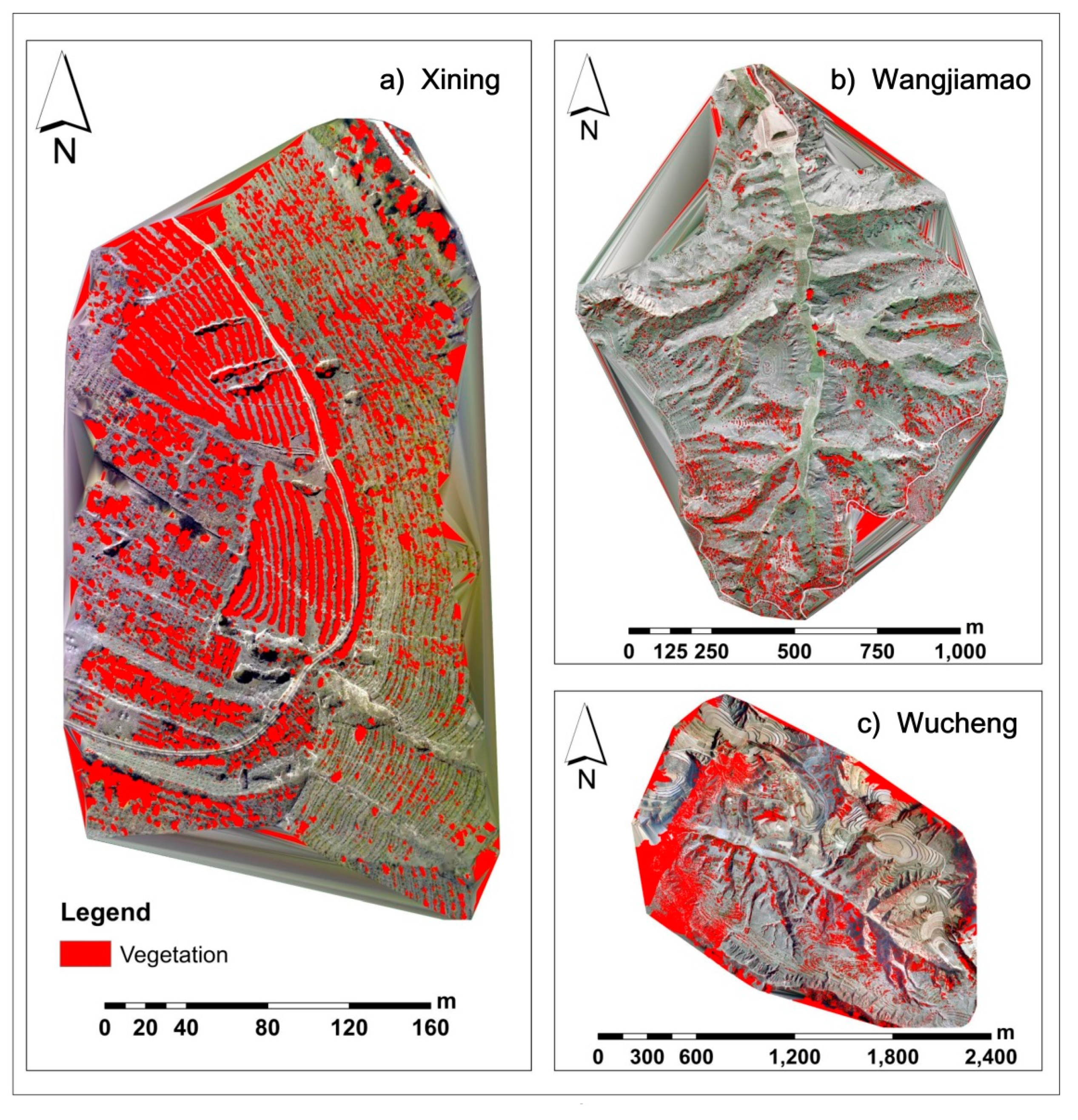

2.1. Study Site

2.2. Unmanned Aerial Vehicle (UAV) and Global Navigation Satellite System (GNSS) Field Data Collection

2.3. Deep Learning (DL)-Based Vegetation Detection

2.3.1. Training Data Generation

2.3.2. Feature Selection

2.3.3. Design of the U-Net Network

2.3.4. Vegetation Detection Accuracy Assessment

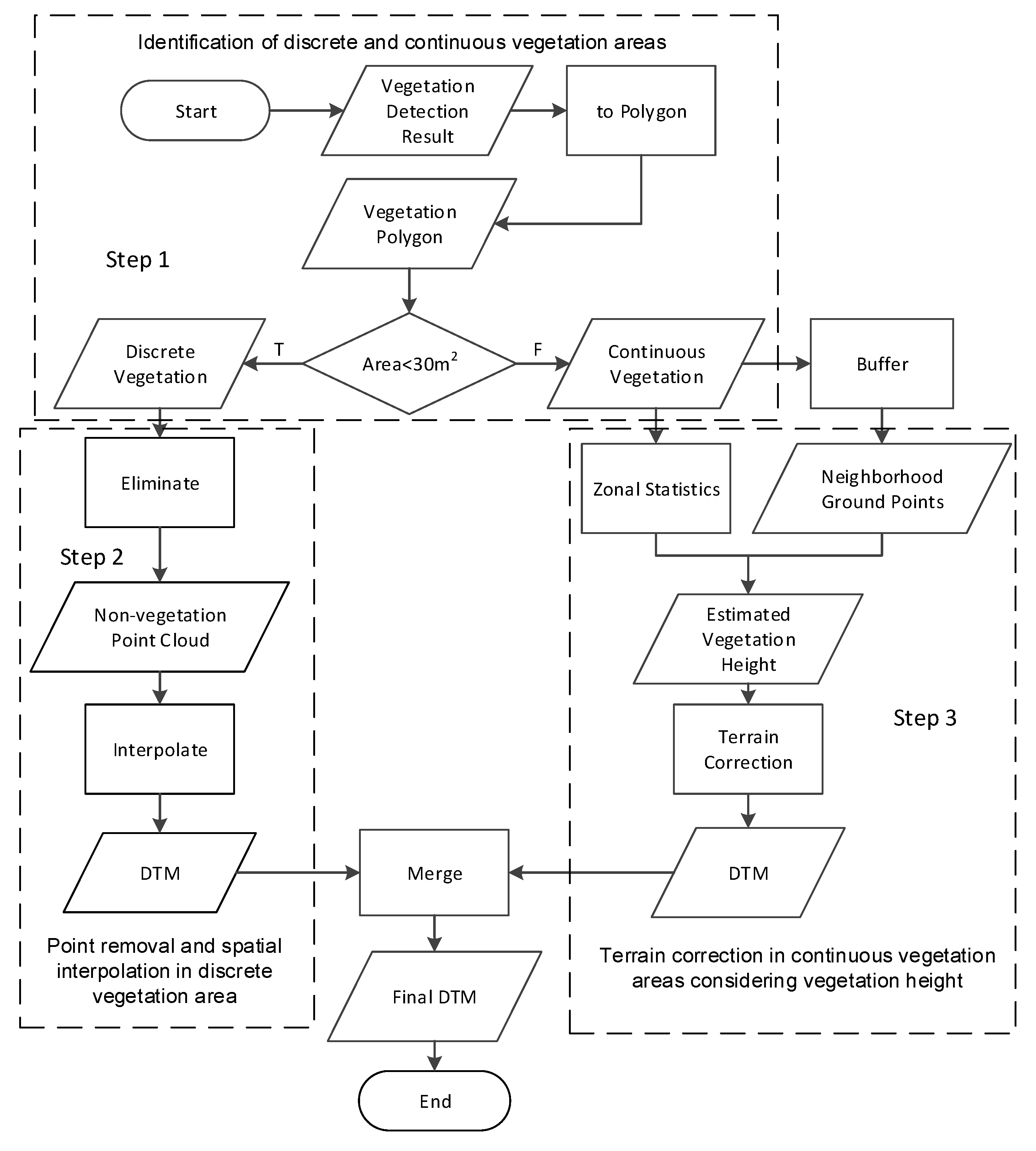

2.4. Terrain Correction

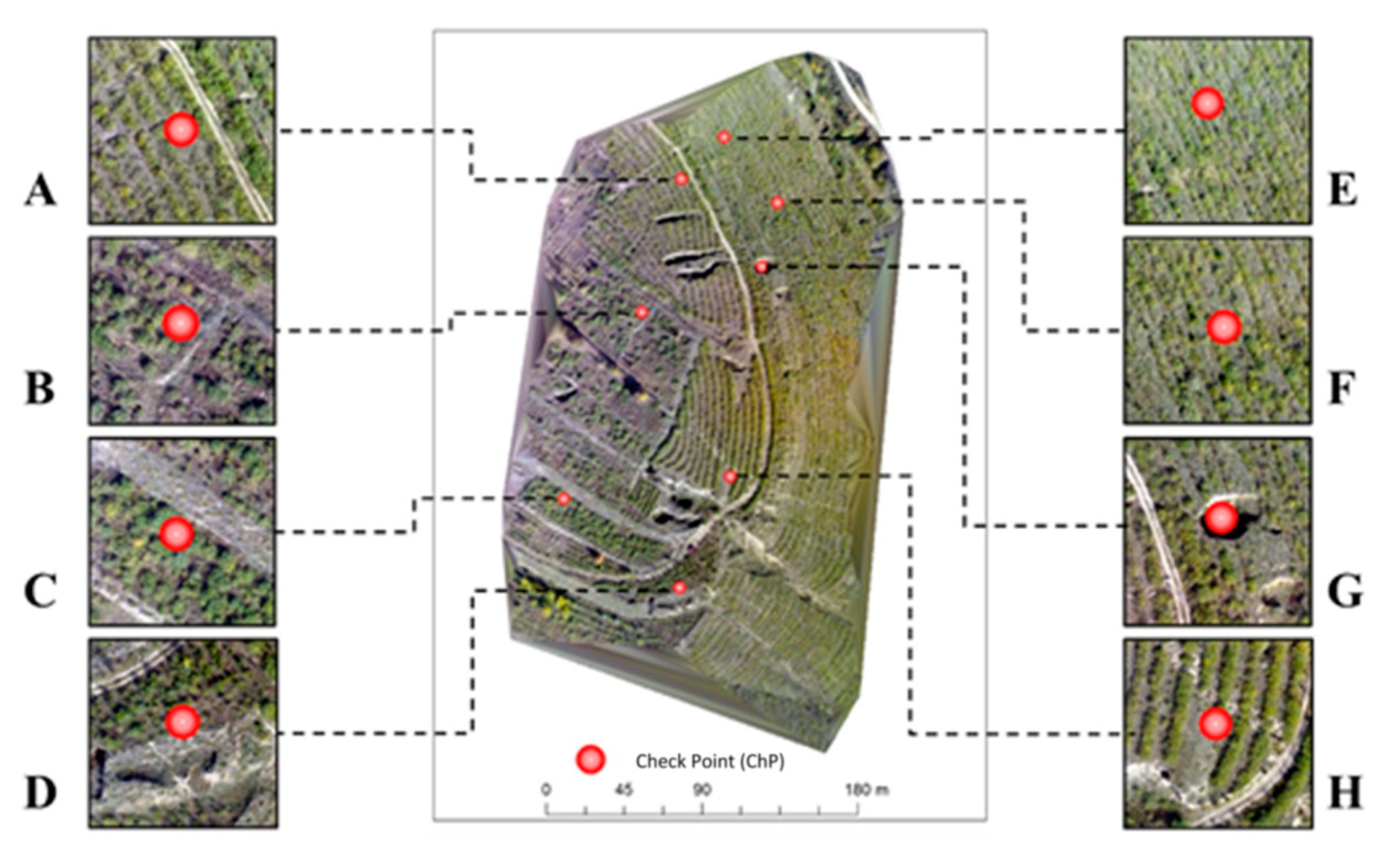

2.5. Terrain Modeling Result Validation

3. Results

3.1. Vegetation Detection Results

3.2. Vegetation Identification Results

3.3. Terrain Correction Results

3.4. Terrain Modeling Result Validation with Field Measurement Data

4. Discussion

4.1. U-Net Hyper-Parameter Influence on Vegetation Detection Performance

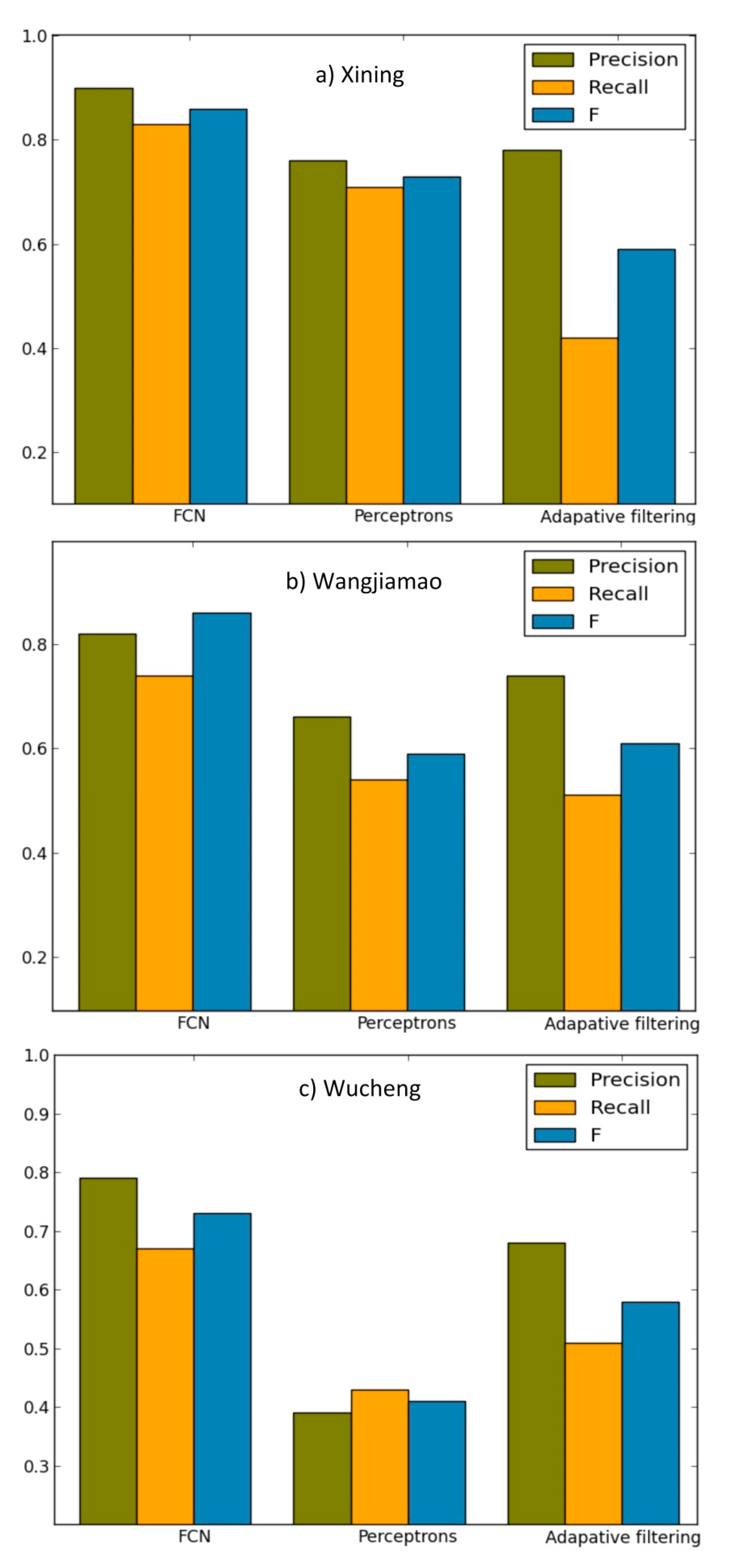

4.2. Comparison of Vegetation Detection Performance with Other Methods

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | Xining (SA1) | Wangjiamao (SA2) | Wucheng (SA3) |
|---|---|---|---|
| Location | 36°39′N, 101°43′E | 37°34′20″N to 37°35′10″N, 110°21′50″E to 110°22′40″E | 39°15′51″N to 39°16′57″N, 111°33′21″E to 111°34′48″E |
| Area | 0.07 km² | 2.21 km² | 3.17 km² |
| Elevation | 2266–2348 m | 1011–1195 m | 1238–1448 m |
| Landform | Loess hill and gully | Loess hill | Loess valley |
| Climate | Semi-arid (BSh) | Semi-arid (BSh) | Semi-arid (BSh) |
| Annual temperature | 6.5 °C | 9.7 °C | 8 °C |
| Precipitation | 327 mm/y | 486 mm/y | ~450 mm/y |
| Vegetation | Weed | Shrub | Arbor |
| Main vegetation type | Rhamnus erythroxylon, Artemisia | Haloxylon ammodendron, Ziziphus jujuba | Hippophae, Malus domestica |
| Vegetation height | 0.5–2 m | 0.5–6 m | 0.5–6 m |

| | Xining | Wangjiamao | Wucheng |
|---|---|---|---|
| Flight date | 2017-10-24 | 2019-08-20 | 2018-04-26 |
| Flight height | 50 m | 150 m | 200 m |
| Total photos acquired | 80 | 420 | 680 |
| Forward overlap | 80% | 80% | 80% |
| Side overlap | 70% | 70% | 70% |
| Ground sampling distance | 2.31 cm | 4.36 cm | 8.06 cm |
| Total ground control points (GCPs) | 7 | 18 | 19 |
| Mean RMS of GCPs | 0.011 m | 0.014 m | 0.018 m |
| Number of points from dense matching | 832,341 | 7,917,617 | 9,956,200 |

| Network | A | B | C |
|---|---|---|---|
| Layers | 5 | 6 | 10 |
| Down-sampling | 3 × 3 × 64 (×128, ×256, ×512, ×512) | 3 × 3 × 64 (×128, ×256, ×512, ×1024, ×1024) | Double B |
| Up-sampling | 3 × 3 × 256 (×128, ×64) | 3 × 3 × 512 (×256, ×128, ×64) | Double B |
| Pooling | (2 × 2) × 3 | (2 × 2) × 4 | (2 × 2) × 4 |
| Skip connections | 3 | 4 | 4 |
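The pooling row above fixes how much each network shrinks its input before up-sampling. As a minimal sketch, assuming each pooling step is a stride-2, 2 × 2 max pool (the usual U-Net choice, not stated in the table) and a hypothetical 256-pixel training tile, the reduction factor and the smallest feature-map size can be worked out as follows:

```python
# Number of 2 x 2 pooling steps per network, taken from the table above.
POOL_STEPS = {"A": 3, "B": 4, "C": 4}


def downsampling_factor(num_pools: int, pool_size: int = 2) -> int:
    """Total spatial reduction after num_pools pooling steps (assumed stride = pool_size)."""
    return pool_size ** num_pools


def bottleneck_size(tile_size: int, num_pools: int, pool_size: int = 2) -> int:
    """Side length of the smallest feature map for a square input tile.

    Raises ValueError when the tile cannot be halved cleanly at every step,
    which would misalign the skip connections during up-sampling.
    """
    factor = downsampling_factor(num_pools, pool_size)
    if tile_size % factor != 0:
        raise ValueError(f"tile size {tile_size} is not divisible by {factor}")
    return tile_size // factor


if __name__ == "__main__":
    tile = 256  # hypothetical tile size, not taken from the article
    for name, pools in POOL_STEPS.items():
        print(name, downsampling_factor(pools), bottleneck_size(tile, pools))
```

Under these assumptions network A reduces each tile side by a factor of 8 and networks B and C by a factor of 16, so input tiles should have side lengths that are multiples of those factors.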

| Reference class (counts in cells) | Xining: detected as ground | Xining: detected as vegetation | Wangjiamao: detected as ground | Wangjiamao: detected as vegetation | Wuchenggou: detected as ground | Wuchenggou: detected as vegetation |
|---|---|---|---|---|---|---|
| Ground | 4,457,886 (62.3%) | 225,949 (3.1%) | 127,645,071 (90.0%) | 2,049,627 (1.4%) | 2,095,418 (69.4%) | 135,462 (4.5%) |
| Vegetation | 425,710 (6.0%) | 2,039,464 (28.6%) | 3,181,941 (2.2%) | 9,075,223 (6.4%) | 252,464 (8.3%) | 535,952 (17.8%) |
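The cell counts in the confusion matrix above are enough to derive the usual summary scores. The sketch below is illustrative only: the function name and the choice of metrics (overall accuracy, plus precision, recall and F1 for the vegetation class) are assumptions, not necessarily the measures reported by the authors.

```python
# Cell counts transcribed from the confusion matrix above.
# Order per site: (reference ground -> detected ground,
#                  reference ground -> detected vegetation,
#                  reference vegetation -> detected ground,
#                  reference vegetation -> detected vegetation)
CONFUSION = {
    "Xining": (4_457_886, 225_949, 425_710, 2_039_464),
    "Wangjiamao": (127_645_071, 2_049_627, 3_181_941, 9_075_223),
    "Wuchenggou": (2_095_418, 135_462, 252_464, 535_952),
}


def vegetation_metrics(gg: int, gv: int, vg: int, vv: int) -> dict:
    """Summary scores for a 2 x 2 ground/vegetation confusion matrix."""
    total = gg + gv + vg + vv
    precision = vv / (gv + vv)  # share of detected vegetation that is truly vegetation
    recall = vv / (vg + vv)     # share of reference vegetation that was detected
    return {
        "overall_accuracy": (gg + vv) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


if __name__ == "__main__":
    for site, counts in CONFUSION.items():
        scores = vegetation_metrics(*counts)
        print(site, {name: round(value, 3) for name, value in scores.items()})
```

With these counts the overall accuracy comes out at roughly 0.91 for Xining, 0.96 for Wangjiamao and 0.87 for Wuchenggou, which matches the sum of the diagonal percentages in the table.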

| Sample | Reference (m) | Result (m) | Error (m) |
|---|---|---|---|
| A | 2343.246 | 2343.518 | 0.272 |
| B | 2343.283 | 2343.424 | 0.141 |
| C | 2339.275 | 2338.772 | −0.497 |
| D | 2335.718 | 2335.739 | 0.021 |
| E | 2328.019 | 2327.967 | −0.948 |
| F | 2317.586 | 2317.699 | 0.113 |
| G | 2331.099 | 2332.197 | 1.098 |
| H | 2340.806 | 2340.800 | −0.006 |
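The error column above can be checked against the two elevation columns. The sketch below assumes the error is defined as result minus reference (consistent with most rows) and uses RMSE as the summary statistic; both the helper names and the choice of RMSE are illustrative assumptions rather than the authors' stated procedure.

```python
import math

# (sample, reference elevation in m, modeled elevation in m, tabulated error in m),
# transcribed from the table above.
CHECKPOINTS = [
    ("A", 2343.246, 2343.518, 0.272),
    ("B", 2343.283, 2343.424, 0.141),
    ("C", 2339.275, 2338.772, -0.497),
    ("D", 2335.718, 2335.739, 0.021),
    ("E", 2328.019, 2327.967, -0.948),
    ("F", 2317.586, 2317.699, 0.113),
    ("G", 2331.099, 2332.197, 1.098),
    ("H", 2340.806, 2340.800, -0.006),
]


def recomputed_errors(rows):
    """Error per sample, assuming error = result - reference."""
    return {name: round(result - reference, 3) for name, reference, result, _ in rows}


def mismatches(rows, tol=0.0005):
    """Samples whose tabulated error differs from result - reference by more than tol."""
    return [name for name, reference, result, tabulated in rows
            if abs((result - reference) - tabulated) > tol]


def rmse(errors):
    """Root-mean-square of a collection of errors."""
    errors = list(errors)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    print("recomputed:", recomputed_errors(CHECKPOINTS))
    print("rows to double-check:", mismatches(CHECKPOINTS))
    print("RMSE of tabulated errors:", round(rmse(row[3] for row in CHECKPOINTS), 3))
```

Run as written, the check flags samples C and E, where the tabulated error differs from result minus reference. Whether the discrepancy lies in the elevations or in the error column cannot be determined from the table alone, so any summary statistic should be read with that caveat.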

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Na, J.; Xue, K.; Xiong, L.; Tang, G.; Ding, H.; Strobl, J.; Pfeifer, N. UAV-Based Terrain Modeling under Vegetation in the Chinese Loess Plateau: A Deep Learning and Terrain Correction Ensemble Framework. Remote Sens. 2020, 12, 3318. https://doi.org/10.3390/rs12203318
