A Novel Image Fusion Method of Multi-Spectral and SAR Images for Land Cover Classification

Abstract

1. Introduction

2. Related Works

2.1. Image Fusion Methods

2.2. Classification Methods

3. Material

3.1. Datasets

3.2. Data Preprocessing

3.3. Evaluation Methods

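- Standard deviation (SD): It measures the contrast of the image. The larger the standard deviation, the more dispersed the gray-level distribution, which means that more information is available:
  $$\mathrm{SD}=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl(F(i,j)-\mu\bigr)^{2}}$$
  where $F(i,j)$ is the gray value of the $M\times N$ fused image at pixel $(i,j)$ and $\mu$ is the average gray value of the fused image.

- Average gradient (AG): It reflects the image's ability to express small-detail contrast and texture changes, as well as the sharpness of the image. Higher values are desirable:
  $$\mathrm{AG}=\frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\Delta F_x^{2}(i,j)+\Delta F_y^{2}(i,j)}{2}}$$
  where $\Delta F_x(i,j)=F(i+1,j)-F(i,j)$ and $\Delta F_y(i,j)=F(i,j+1)-F(i,j)$.

- Spatial frequency (SF): It measures the overall activity level of the image; fused images with high spatial frequency are preferred. SF combines the row frequency (RF) and the column frequency (CF):
  $$\mathrm{SF}=\sqrt{\mathrm{RF}^{2}+\mathrm{CF}^{2}}$$
  where RF and CF are given by
  $$\mathrm{RF}=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\bigl(F(i,j)-F(i,j-1)\bigr)^{2}},\qquad\mathrm{CF}=\sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\bigl(F(i,j)-F(i-1,j)\bigr)^{2}}$$

- Peak signal-to-noise ratio (PSNR): It measures the distortion of the fused image relative to the reference image; a large PSNR indicates that the distortion of the fused image is small:
  $$\mathrm{PSNR}=10\log_{10}\frac{L^{2}}{\mathrm{MSE}},\qquad\mathrm{MSE}=\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl(R(i,j)-F(i,j)\bigr)^{2}$$
  where $L$ is the maximum pixel value of the image, $R(i,j)$ is the reference image, and MSE is the mean squared error between the reference and fused images.

- Correlation coefficient (CC): It measures the correlation between the reference image and the fused image:
  $$\mathrm{CC}=\frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl(R(i,j)-\bar{R}\bigr)\bigl(F(i,j)-\mu\bigr)}{\sqrt{\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl(R(i,j)-\bar{R}\bigr)^{2}\,\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl(F(i,j)-\mu\bigr)^{2}}}$$
  where $\bar{R}$ is the mean value of the reference image.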

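- Overall accuracy (OA): It represents the proportion of correctly classified samples among all test samples:
  $$\mathrm{OA}=\frac{1}{N}\sum_{x=1}^{C}n_{xx}$$
  where $N$ is the total number of test samples, $C$ is the number of classes, and $n_{xx}$ is the $x$-th diagonal entry of the confusion matrix.

- Kappa statistic: It measures the agreement between the classification result and the reference data after removing the agreement expected by chance:
  $$\kappa=\frac{N\sum_{x=1}^{C}n_{xx}-\sum_{x=1}^{C}n_{x+}\,n_{+x}}{N^{2}-\sum_{x=1}^{C}n_{x+}\,n_{+x}}$$
  where $n_{x+}$ and $n_{+x}$ represent the sums of the $x$-th row and the $x$-th column of the confusion matrix, respectively.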

- McNemar’s test: It is used to determine whether one learning algorithm outperforms another on a particular task while keeping the probability of falsely detecting a difference when there is none acceptably low. Suppose there are two algorithms, A and B:
  $$\chi^{2}=\frac{\bigl(\lvert n_{AB}-n_{BA}\rvert-1\bigr)^{2}}{n_{AB}+n_{BA}}$$
  where $n_{AB}$ is the number of samples misclassified by algorithm A but not by B, and $n_{BA}$ is the number of samples misclassified by algorithm B but not by A. The p-value of this statistic is obtained from the chi-squared distribution with one degree of freedom. Since a small p-value indicates that the null hypothesis (A and B have the same error rate) is unlikely to hold, we reject the null hypothesis if the p-value is less than 0.05 (equivalently, if $\chi^{2} > 3.841$).
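The indicators listed above can be computed directly from image arrays and a confusion matrix. The following NumPy sketch uses the standard textbook forms of these indicators, assuming co-registered single-band 2-D arrays. The function names are hypothetical, `peak` in `psnr` must be set to the data's maximum pixel value, and the McNemar statistic uses the continuity-corrected chi-squared form with one degree of freedom, which is an assumption about the exact variant used in the paper.

```python
import math

import numpy as np


def sd(f):
    """Standard deviation of the fused image around its mean gray value."""
    f = np.asarray(f, dtype=float)
    return float(np.sqrt(np.mean((f - f.mean()) ** 2)))


def ag(f):
    """Average gradient from forward differences on the (M-1) x (N-1) grid."""
    f = np.asarray(f, dtype=float)
    dx = f[1:, :-1] - f[:-1, :-1]  # F(i+1, j) - F(i, j)
    dy = f[:-1, 1:] - f[:-1, :-1]  # F(i, j+1) - F(i, j)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def sf(f):
    """Spatial frequency: combines row frequency (RF) and column frequency (CF)."""
    f = np.asarray(f, dtype=float)
    m, n = f.shape
    rf = np.sqrt(np.sum((f[:, 1:] - f[:, :-1]) ** 2) / (m * n))
    cf = np.sqrt(np.sum((f[1:, :] - f[:-1, :]) ** 2) / (m * n))
    return float(np.sqrt(rf ** 2 + cf ** 2))


def psnr(ref, f, peak):
    """PSNR in dB; `peak` is the maximum possible pixel value of the data."""
    mse = np.mean((np.asarray(ref, float) - np.asarray(f, float)) ** 2)
    return math.inf if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def cc(ref, f):
    """Pearson correlation coefficient between reference and fused images."""
    r = np.asarray(ref, float) - np.mean(ref)
    g = np.asarray(f, float) - np.mean(f)
    return float(np.sum(r * g) / np.sqrt(np.sum(r ** 2) * np.sum(g ** 2)))


def oa_kappa(cm):
    """Overall accuracy and kappa from a C x C confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    correct = np.trace(cm)
    chance = np.sum(cm.sum(axis=1) * cm.sum(axis=0))  # sum of n_{x+} * n_{+x}
    return float(correct / n), float((n * correct - chance) / (n ** 2 - chance))


def mcnemar(n_ab, n_ba):
    """Continuity-corrected McNemar chi-squared and its p-value (1 degree of freedom)."""
    chi2 = (abs(n_ab - n_ba) - 1) ** 2 / (n_ab + n_ba)
    p = math.erfc(math.sqrt(chi2 / 2.0))  # P(X > chi2) for X ~ chi-squared(1)
    return chi2, p
```

For example, `oa_kappa([[50, 10], [5, 35]])` returns an OA of 0.85 and a kappa of about 0.694 for this hypothetical two-class confusion matrix. The p-value is computed with `math.erfc` to avoid a SciPy dependency: a chi-squared variable with one degree of freedom is the square of a standard normal variable.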

3.4. Parameter Setting

4. The Proposed Method

4.1. Weighted Median Filter

4.2. Gram–Schmidt Transform (GS)

- $E$, $F$: number of wavelengths (spectral bands) available in Sentinel-2A and in GF-3, respectively.

- $N_e$, $N_f$: total number of pixels in Sentinel-2A and in GF-3, respectively.

- Matrix related to the GF-3 image.

- Matrix related to the Sentinel-2A image.

- Matrix related to the Gram–Schmidt-transformed image.

- $Q$ is an orthogonal matrix, i.e., $Q^{\mathsf{T}}Q = I$, where $I$ is the identity matrix.

- $R$ is an upper triangular matrix whose inverse is also upper triangular.
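The orthogonal matrix Q and the upper triangular matrix R above arise because Gram–Schmidt orthogonalization of a matrix's columns is a QR factorization. The sketch below uses modified Gram–Schmidt and checks the stated properties. Treating each column as one spectral band flattened over all pixels is an assumption for illustration, and `gram_schmidt_qr` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def gram_schmidt_qr(a):
    """Modified Gram-Schmidt: factor an n x k matrix A (independent columns) as A = QR."""
    q = np.array(a, dtype=float)  # copy; columns are orthonormalized in place
    k = q.shape[1]
    r = np.zeros((k, k))
    for j in range(k):
        r[j, j] = np.linalg.norm(q[:, j])
        q[:, j] /= r[j, j]
        # Remove the component along q_j from every later column.
        for l in range(j + 1, k):
            r[j, l] = q[:, j] @ q[:, l]
            q[:, l] -= r[j, l] * q[:, j]
    return q, r


# Hypothetical data: 4 bands, each flattened over 100 pixels, stored as columns.
rng = np.random.default_rng(0)
bands = rng.random((100, 4))
q, r = gram_schmidt_qr(bands)
print(np.allclose(q.T @ q, np.eye(4)), np.allclose(q @ r, bands))
```

Modified Gram–Schmidt is used instead of the classical variant because it loses orthogonality more slowly in floating point; `np.linalg.qr` yields the same factorization up to the signs of the columns of Q (and the corresponding rows of R).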

5. Experimental Results

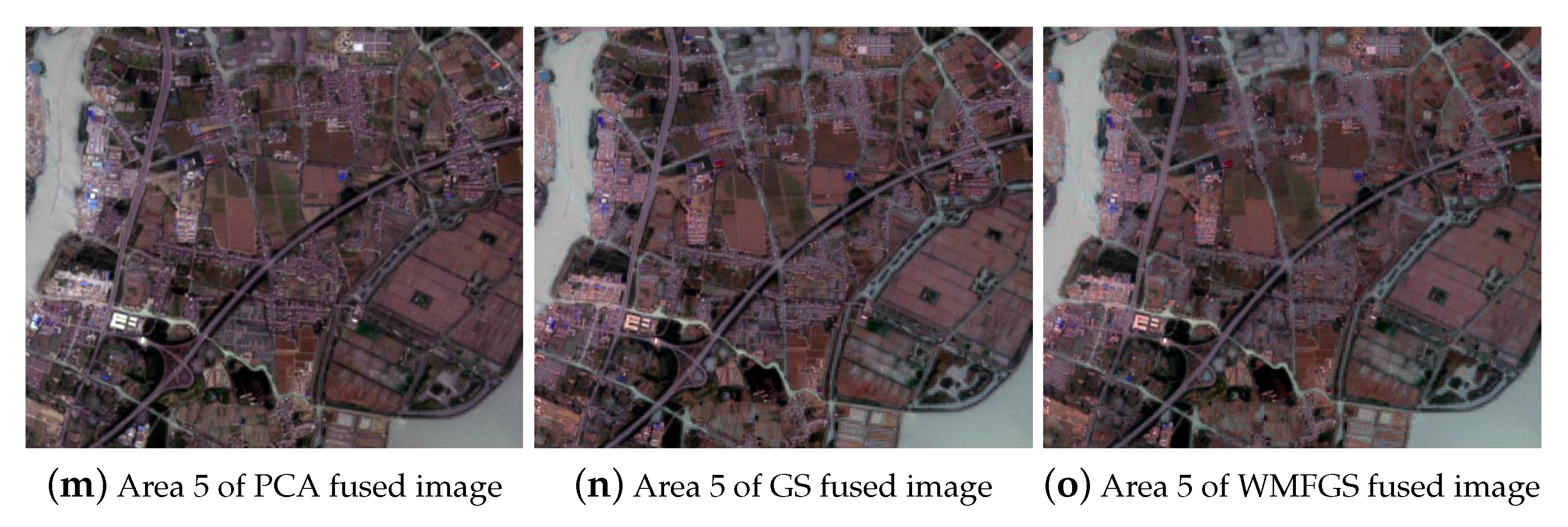

5.1. Visual Comparison

| Algorithm 1. Weighted Median Filter-Based Gram–Schmidt Image Fusion Method (WMFGS) |
|---|
| **Input:** |
| **F**: GF-3 image |
| **E**: Sentinel-2A image |
| $F$: number of wavelengths available in GF-3 |
| $E$: number of wavelengths available in Sentinel-2A |
| $N_f$: total number of pixels in GF-3 |
| $N_e$: total number of pixels in Sentinel-2A |
| 1: **for** $i = 1 : N_e$ **do** |
| 2: Compute $w_i$ with (13) and (14) |
| 3: Compute $P_{F_k}(B)$ using $P_u(v)$ with (19)–(21) |
| 4: **end for** |
| 5: **for** $j = 1 : N_f$ **do** |
| 6: Compute the quantity in (23) |
| 7: Compute $F'$ with (24) |
| 8: Compute $S$ using $\Sigma'$ and $F'$ with (25) |
| 9: **end for** |
| 10: **for** $i = 1 : N_e$ **do** |
| 11: Compute $S'$ using $S$ and $W$ with (26) |
| 12: Compute the quantity in (27) |
| 13: **end for** |
| **Output:** fused image |
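Algorithm 1 relies on a weighted median filter whose weights $w_i$ come from (13) and (14). As a rough illustration of the filtering idea only, the brute-force sketch below implements a generic guided weighted median filter: each output pixel is the weighted median of its square window, with Gaussian weights on the difference between guide-image values. The Gaussian weight, `radius`, `sigma`, and the function names are assumptions, and this is not the fast algorithm of Zhang et al. listed in the references.

```python
import numpy as np


def weighted_median(values, weights):
    """Lower weighted median: first sorted value whose cumulative weight reaches half the total."""
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    order = np.argsort(values, kind="stable")
    cum = np.cumsum(weights[order])
    idx = int(np.searchsorted(cum, 0.5 * cum[-1]))
    return float(values[order][idx])


def weighted_median_filter(img, guide, radius=2, sigma=0.1):
    """Brute-force WMF; weights are Gaussian affinities between guide pixels (assumption)."""
    img = np.asarray(img, dtype=float)
    guide = np.asarray(guide, dtype=float)
    m, n = img.shape
    out = np.empty_like(img)
    for i in range(m):
        for j in range(n):
            # Square window clipped at the image border.
            i0, i1 = max(0, i - radius), min(m, i + radius + 1)
            j0, j1 = max(0, j - radius), min(n, j + radius + 1)
            vals = img[i0:i1, j0:j1].ravel()
            g = guide[i0:i1, j0:j1].ravel()
            w = np.exp(-((g - guide[i, j]) ** 2) / (2.0 * sigma ** 2))
            out[i, j] = weighted_median(vals, w)
    return out
```

With a uniform guide every weight is 1 and the filter reduces to an ordinary median filter, which removes isolated impulse noise. With the image itself as guide, pixels across a strong edge receive near-zero weight, so the edge is preserved.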

5.2. Image Histogram

5.3. Quantitative Analysis

5.4. Random Forest Classification Results

6. Discussion

- (1) In this study, a weighted median filter is combined with the Gram–Schmidt transform. The results in Table 3 show that, compared with the traditional GS fusion method, the weighted median filter improves the image quality. This is because WMF removes the noise in the Sentinel image while preserving image details. As described in [59], WMF can extract sparse salient structures, such as perceptually important edges and contours, while minimizing the color differences within image regions. Because WMF identifies and filters noise while preserving important structures, the classification map of WMFGS has higher OA and Kappa values.

- (2) Comparing the fused images with the Sentinel image in Figures 1 and 3, the color contrast between different classes is clearly visible in the fused images. In Areas 3 and 4, the GS and WMFGS fused images separate urban areas from roads relatively well, whereas the PCA fused images show no obvious difference between them. In addition, the RF classification maps show that the PCA fused images do not outperform the Sentinel images for land cover classification. This is because part of the spectral information of the original image is lost during PCA fusion. In the details shown in Figure 5, the Sentinel image yields a coarser classification map because of its lower resolution. Although the resolution of the PCA fused images is markedly improved, many small areas are misclassified. Water bodies have distinctive spectral characteristics and are easier to distinguish from other classes in land cover classification; however, rivers inside the city remain difficult to distinguish. In the classification map of the WMFGS fused image, small rivers are also classified with relatively high accuracy.

- (3) In this study, five indicators (standard deviation, average gradient, spatial frequency, peak signal-to-noise ratio, and correlation coefficient) are used to evaluate the quality of the fused images. As shown in Table 1, the WMFGS fused images have the best quality in most areas, both in terms of their intrinsic image quality and their correlation with the source image. In future work, more indicators will be used to evaluate the fused images.

- (4) In this paper, RF is used for land cover classification. The OA and Kappa coefficients for the five study areas are given in Tables 2 and 3, respectively. Compared with the Sentinel images, the WMFGS fused images give better classification performance in all study areas: OA improves by 1.82 to 5.36 percentage points and the Kappa coefficient by 0.0235 to 0.0700. The classification performance of the GS fused images also improves in Areas 1, 2 and 5. This is mainly due to the addition of GF-3 image information. The classification results indicate that the fused images are very helpful for land cover classification.

- (5) Many other researchers have also used GF-3 or Sentinel-2 data for land cover classification. For example, Fang et al. propose a fast super-pixel segmentation method to classify GF-3 polarimetric data, which performs excellently in land cover classification [60]. However, their method also requires pre-classification based on optical data. In [61], Sentinel-2 imagery is used in a land cover study of the Red River Delta in Vietnam, and both balanced and imbalanced datasets are analyzed, but only the 10 band images with 20 m resolution are used for classification. Unlike the pixel-level algorithm proposed in this paper, Gao et al. use a feature-level fusion algorithm to fuse the covariance matrix of GF-3 with the spectral bands of Sentinel-2 [20].

- (6) The proposed method provides a feasible option for generating images with high spatial resolution and rich spectral information, thereby extending the application of Earth observation (EO) data. The method is suitable for the efficient fusion of registered SAR and MS images. It makes full use of existing SAR and MS satellite data to generate products with different spatial and spectral resolutions, thereby improving the results of land cover classification.
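As a rough illustration of Gram–Schmidt fusion of registered SAR and MS images, the sketch below uses the generic injection form of Gram–Schmidt component substitution known from the pan-sharpening literature (e.g., Laben and Brower; Aiazzi et al., both in the reference list), with the co-registered SAR band taking the role of the panchromatic band. It is not the authors' WMFGS implementation: the function name, the band mean as the simulated low-resolution component, and the mean/standard-deviation matching are assumptions, and the weighted median filtering of the Sentinel-2A bands described in the discussion is omitted.

```python
import numpy as np


def gs_injection_fusion(ms, hr):
    """Generic GS component substitution in injection form (illustrative, not WMFGS).

    ms: (K, M, N) multispectral bands already resampled to the high-resolution grid.
    hr: (M, N) co-registered high-resolution band (here, the SAR image).
    """
    ms = np.asarray(ms, dtype=float)
    hr = np.asarray(hr, dtype=float)
    intensity = ms.mean(axis=0)  # simulated low-resolution band (first GS component)
    # Match the mean and standard deviation of the high-resolution band to the intensity.
    matched = (hr - hr.mean()) * intensity.std() / hr.std() + intensity.mean()
    detail = matched - intensity  # spatial detail to inject into every band
    ic = intensity - intensity.mean()
    fused = np.empty_like(ms)
    for k in range(ms.shape[0]):
        # Injection gain of band k: cov(MS_k, I) / var(I).
        gain = np.mean((ms[k] - ms[k].mean()) * ic) / np.mean(ic ** 2)
        fused[k] = ms[k] + gain * detail
    return fused
```

Called as `gs_injection_fusion(ms, sar)`, it returns an array with the same shape as `ms`. Because the gains average to 1 when the intensity is the band mean, the band mean of the output equals the matched SAR band, and if the SAR band carried no extra detail (it equals the intensity) the MS bands are returned unchanged.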

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Shiraishi, T.; Motohka, T.; Thapa, R.B.; Watanabe, M.; Shimada, M. Comparative Assessment of Supervised Classifiers for Land Use Land Cover Classification in a Tropical Region Using Time-Series PALSAR Mosaic Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1186–1199.

- [2] Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of Multispectral Images to High Spatial Resolution: A Critical Review of Fusion Methods Based on Remote Sensing Physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

- [3] Cheng, M.; Wang, C.; Li, J. Sparse Representation Based Pansharpening Using Trained Dictionary. Geosci. Remote Sens. Lett. 2014, 11, 293–297.

- [4] Wang, Q.; Shi, W.; Li, Z.; Atkinson, P.M. Fusion of Sentinel-2 images. Remote Sens. Environ. 2016, 187, 241–252.

- [5] Shi, W.; Zhu, C.; Tian, Y.; Nichol, J. Wavelet-based image fusion and quality assessment. Int. J. Appl. Earth Obs. Geoinf. 2005, 6, 241–251.

- [6] Ma, X.; Wu, P.; Wu, Y.; Shen, H. A Review on Recent Developments in Fully Polarimetric SAR Image Despeckling. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 743–758.

- [7] Qingjun, Z. System design and key technologies of the GF-3 satellite. Acta Geod. Cartogr. Sin. 2017, 46, 269.

- [8] Jiao, N.; Wang, F.; You, H. A New RD-RFM Stereo Geolocation Model for 3D Geo-Information Reconstruction of SAR-Optical Satellite Image Pairs. IEEE Access 2020, 8, 94654–94664.

- [9] Wang, J.; Chen, J.; Wang, Q. Fusion of POLSAR and Multispectral Satellite Images: A New Insight for Image Fusion. In Proceedings of the 2020 IEEE International Conference on Computational Electromagnetics (ICCEM), Singapore, 24–26 August 2020; pp. 83–84.

- [10] Zhu, Y.; Liu, K.; W Myint, S.; Du, Z.; Li, Y.; Cao, J.; Liu, L.; Wu, Z. Integration of GF2 Optical, GF3 SAR, and UAV Data for Estimating Aboveground Biomass of China’s Largest Artificially Planted Mangroves. Remote Sens. 2020, 12, 2039.

- [11] Silveira, M.; Heleno, S. Separation Between Water and Land in SAR Images Using Region-Based Level Sets. IEEE Geosci. Remote Sens. Lett. 2009, 6, 471–475.

- [12] Fernandez-Beltran, R.; Haut, J.M.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Pla, F. Multimodal Probabilistic Latent Semantic Analysis for Sentinel-1 and Sentinel-2 Image Fusion. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1347–1351.

- [13] Ye, Z.; Prasad, S.; Li, W.; Fowler, J.E.; He, M. Classification Based on 3-D DWT and Decision Fusion for Hyperspectral Image Analysis. IEEE Geosci. Remote Sens. Lett. 2014, 11, 173–177.

- [14] Vohra, R.; Tiwari, K.C. Comparative Analysis of SVM and ANN Classifiers using Multilevel Fusion of Multi-Sensor Data in Urban Land Classification. Sens. Imaging 2020, 21, 17.

- [15] Taha, L.G.E.d.; Elbeih, S.F. Investigation of fusion of SAR and Landsat data for shoreline super resolution mapping: The northeastern Mediterranean Sea coast in Egypt. Appl. Geomat. 2010, 2, 177–186.

- [16] Byun, Y.; Choi, J.; Han, Y. An Area-Based Image Fusion Scheme for the Integration of SAR and Optical Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2212–2220.

- [17] Montgomery, J.; Brisco, B.; Chasmer, L.; Devito, K.; Cobbaert, D.; Hopkinson, C. SAR and Lidar Temporal Data Fusion Approaches to Boreal Wetland Ecosystem Monitoring. Remote Sens. 2019, 11, 161.

- [18] Iervolino, P.; Guida, R.; Riccio, D.; Rea, R. A Novel Multispectral, Panchromatic and SAR Data Fusion for Land Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3966–3979.

- [19] Kulkarni, S.C.; Rege, P.P. Pixel level fusion techniques for SAR and optical images: A review. Inf. Fusion 2020, 59, 13–29.

- [20] Gao, H.; Wang, C.; Wang, G.; Zhu, J.; Tang, Y.; Shen, P.; Zhu, Z. A crop classification method integrating GF-3 PolSAR and Sentinel-2A optical data in the Dongting Lake Basin. Sensors 2018, 18, 3139.

- [21] Rodriguez-Galiano, V.; Chica-Olmo, M.; Abarca-Hernandez, F.; Atkinson, P.; Jeganathan, C. Random Forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

- [22] Zhang, R.; Tang, X.; You, S.; Duan, K.; Xiang, H.; Luo, H. A Novel Feature-Level Fusion Framework Using Optical and SAR Remote Sensing Images for Land Use/Land Cover (LULC) Classification in Cloudy Mountainous Area. Appl. Sci. 2020, 10, 2928.

- [23] Feng, W.; Huang, W.; Ren, J. Class Imbalance Ensemble Learning Based on the Margin Theory. Appl. Sci. 2018, 8, 815.

- [24] Feng, W.; Dauphin, G.; Huang, W.; Quan, Y.; Bao, W.; Wu, M.; Li, Q. Dynamic Synthetic Minority Over-Sampling Technique-Based Rotation Forest for the Classification of Imbalanced Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2159–2169.

- [25] Gamba, P. Human Settlements: A Global Challenge for EO Data Processing and Interpretation. Proc. IEEE 2013, 101, 570–581.

- [26] Rasaei, Z.; Bogaert, P. Spatial filtering and Bayesian data fusion for mapping soil properties: A case study combining legacy and remotely sensed data in Iran. Geoderma 2019, 344, 50–62.

- [27] Puttinaovarat, S.; Horkaew, P. Urban areas extraction from multi sensor data based on machine learning and data fusion. Pattern Recognit. Image Anal. 2017, 27, 326–337.

- [28] Zhang, J. Multi-source remote sensing data fusion: Status and trends. Int. J. Image Data Fusion 2010, 1, 5–24.

- [29] Pohl, C.; van Genderen, J. Multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

- [30] Wenbo, W.; Jing, Y.; Tingjun, K. Study of remote sensing image fusion and its application in image classification. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1141–1146.

- [31] Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

- [32] Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- [33] Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images. II. Channel ratio and chromaticity transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.

- [34] Gonzalez-Audicana, M.; Saleta, J.L.; Catalan, R.G.; Garcia, R. Fusion of multispectral and panchromatic images using improved IHS and PCA mergers based on wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1291–1299.

- [35] Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Model-Based Fusion of Multi- and Hyperspectral Images Using PCA and Wavelets. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2652–2663.

- [36] Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- [37] Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening Through Multivariate Regression of MS + Pan Data. Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- [38] Chen, C.M.; Hepner, G.; Forster, R. Fusion of hyperspectral and radar data using the IHS transformation to enhance urban surface features. ISPRS J. Photogramm. Remote Sens. 2003, 58, 19–30.

- [39] Shao, Z.; Wu, W.; Guo, S. IHS-GTF: A Fusion Method for Optical and Synthetic Aperture Radar Data. Remote Sens. 2020, 12, 2796.

- [40] Wang, Z.; Ziou, D.; Armenakis, C.; Li, D.; Li, Q. A comparative analysis of image fusion methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1391–1402.

- [41] Singh, D.; Garg, D.; Pannu, H.S. Efficient Landsat image fusion using fuzzy and stationary discrete wavelet transform. Imaging Sci. J. 2017, 65, 108–114.

- [42] Kwarteng, P.; Chavez, A. Extracting spectral contrast in Landsat Thematic Mapper image data using selective principal component analysis. Photogramm. Eng. Remote Sens. 1989, 55, 1.

- [43] Ma, J.; Gong, M.; Zhou, Z. Wavelet Fusion on Ratio Images for Change Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2012, 9, 1122–1126.

- [44] Salentinig, A.; Gamba, P. A General Framework for Urban Area Extraction Exploiting Multiresolution SAR Data Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2009–2018.

- [45] Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- [46] Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- [47] Foody, G.M. Thematic map comparison. Photogramm. Eng. Remote Sens. 2004, 70, 627–633.

- [48] Feng, W.; Dauphin, G.; Huang, W.; Quan, Y.; Liao, W. New margin-based subsampling iterative technique in modified random forests for classification. Knowl. Based Syst. 2019, 182, 104845.

- [49] Feng, W.; Huang, W.; Dauphin, G.; Xia, J.; Quan, Y.; Ye, H.; Dong, Y. Ensemble Margin Based Semi-Supervised Random Forest for the Classification of Hyperspectral Image with Limited Training Data. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 971–974.

- [50] Feng, W.; Huang, W.; Bao, W. Imbalanced Hyperspectral Image Classification With an Adaptive Ensemble Method Based on SMOTE and Rotation Forest With Differentiated Sampling Rates. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1879–1883.

- [51] Quan, Y.; Zhong, X.; Feng, W.; Dauphin, G.; Gao, L.; Xing, M. A Novel Feature Extension Method for the Forest Disaster Monitoring Using Multispectral Data. Remote Sens. 2020, 12, 2261.

- [52] Li, Q.; Feng, W.; Quan, Y. Trend and forecasting of the COVID-19 outbreak in China. J. Infect. 2020, 80, 469–496.

- [53] Tian, S.; Zhang, X.; Tian, J.; Sun, Q. Random forest classification of wetland landcovers from multi-sensor data in the arid region of Xinjiang, China. Remote Sens. 2016, 8, 954.

- [54] Xu, Z.; Chen, J.; Xia, J.; Du, P.; Zheng, H.; Gan, L. Multisource Earth Observation Data for Land-Cover Classification Using Random Forest. IEEE Geosci. Remote Sens. Lett. 2018, 15, 789–793.

- [55] Wu, Q.; Zhong, R.; Zhao, W.; Song, K.; Du, L. Land-cover classification using GF-2 images and airborne lidar data based on Random Forest. Geosci. Remote Sens. Lett. 2019, 40, 2410–2426.

- [56] Dietterich, T.G. Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms. Neural Comput. 1998, 10, 1895–1923.

- [57] Zhang, Q.; Xu, L.; Jia, J. 100+ Times Faster Weighted Median Filter (WMF). In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2830–2837.

- [58] Du, J.; Li, W.; Xiao, B.; Nawaz, Q. Union Laplacian pyramid with multiple features for medical image fusion. Neurocomputing 2016, 194, 326–339.

- [59] Fan, Q.; Yang, J.; Hua, G.; Chen, B.; Wipf, D. A Generic Deep Architecture for Single Image Reflection Removal and Image Smoothing. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- [60] Fang, Y.; Zhang, H.; Mao, Q.; Li, Z. Land cover classification with GF-3 polarimetric synthetic aperture radar data by random forest classifier and fast super-pixel segmentation. Sensors 2018, 18, 2014.

- [61] Thanh Noi, P.; Kappas, M. Comparison of random forest, k-nearest neighbor, and support vector machine classifiers for land cover classification using Sentinel-2 imagery. Sensors 2018, 18, 18.

- [62] Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Moghaddam, S.H.A.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S.; et al. Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5326–5350.

- [63] Stromann, O.; Nascetti, A.; Yousif, O.; Ban, Y. Dimensionality Reduction and Feature Selection for Object-Based Land Cover Classification based on Sentinel-1 and Sentinel-2 Time Series Using Google Earth Engine. Remote Sens. 2020, 12, 76.

Table 1. Quality indicators of the PCA, GS and WMFGS fused images in the five study areas.

| Area | Fused image | SD | AG | SF | PSNR (dB) | CC |
|---|---|---|---|---|---|---|
| Area 1 | PCA | 301.3385 | 8.4444 | 59.0375 | 89.6709 | 0.6908 |
| Area 1 | GS | 362.5207 | 8.5403 | 132.8691 | 94.5200 | 0.7078 |
| Area 1 | WMFGS | 365.7824 | 9.4150 | 133.5344 | 94.5325 | 0.7107 |
| Area 2 | PCA | 288.3498 | 9.5105 | 78.5329 | 90.0781 | 0.6040 |
| Area 2 | GS | 324.7555 | 9.5290 | 177.7829 | 92.0075 | 0.6077 |
| Area 2 | WMFGS | 329.9461 | 10.4368 | 178.6462 | 92.0360 | 0.6360 |
| Area 3 | PCA | 620.3025 | 10.2363 | 131.9687 | 114.0561 | 0.7434 |
| Area 3 | GS | 646.8171 | 10.7526 | 132.6416 | 95.5631 | 0.7313 |
| Area 3 | WMFGS | 657.6024 | 9.9434 | 120.6161 | 95.6224 | 0.7344 |
| Area 4 | PCA | 497.7268 | 9.1275 | 48.9170 | 76.2159 | 0.6041 |
| Area 4 | GS | 453.9458 | 9.9560 | 70.0637 | 83.0082 | 0.6278 |
| Area 4 | WMFGS | 641.7992 | 9.9691 | 71.6464 | 82.9793 | 0.6303 |
| Area 5 | PCA | 274.4397 | 9.2216 | 80.3165 | 94.1552 | 0.7368 |
| Area 5 | GS | 277.0106 | 9.2422 | 109.9329 | 97.1569 | 0.7775 |
| Area 5 | WMFGS | 282.7229 | 9.4953 | 110.7430 | 98.8695 | 0.7810 |

Table 2. Overall accuracy (OA) of the RF classification for the five study areas.

| Area | Sentinel | PCA | GS | WMFGS |
|---|---|---|---|---|
| Area 1 | 83.97% | 79.58% | 85.64% | 89.33% |
| Area 2 | 88.71% | 86.50% | 91.78% | 92.96% |
| Area 3 | 93.63% | 92.76% | 92.21% | 95.45% |
| Area 4 | 86.32% | 82.87% | 83.78% | 89.13% |
| Area 5 | 90.97% | 88.08% | 94.55% | 95.42% |

Table 3. Kappa coefficients of the RF classification for the five study areas.

| Area | Sentinel | PCA | GS | WMFGS |
|---|---|---|---|---|
| Area 1 | 0.7910 | 0.7334 | 0.8136 | 0.8610 |
| Area 2 | 0.8524 | 0.8236 | 0.8923 | 0.9076 |
| Area 3 | 0.9168 | 0.9049 | 0.8977 | 0.9403 |
| Area 4 | 0.8205 | 0.7755 | 0.7867 | 0.8558 |
| Area 5 | 0.8835 | 0.8442 | 0.9292 | 0.9406 |

McNemar's test statistics comparing the classification of the Sentinel image with that of each fused image.

| Comparison | Area 1 | Area 2 | Area 3 | Area 4 | Area 5 |
|---|---|---|---|---|---|
| Sentinel & PCA | 3.725523 | 2.426208 | 2.110579 | 2.114182 | 3.323549 |
| Sentinel & GS | 4.058187 | 14.41599 | 8.181650 | 4.221159 | 7.939799 |
| Sentinel & WMFGS | 2.994345 | 13.69692 | 9.777861 | 6.246950 | 7.559289 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Quan, Y.; Tong, Y.; Feng, W.; Dauphin, G.; Huang, W.; Xing, M. A Novel Image Fusion Method of Multi-Spectral and SAR Images for Land Cover Classification. Remote Sens. 2020, 12, 3801. https://doi.org/10.3390/rs12223801
