3.2. Two-class Vegetation Classification Results

We now recast the vegetation detection problem as a two-class classification problem, in which all vegetation classes are grouped into vegetation and the remaining classes into non-vegetation. The three vegetation land covers in Table 1 (healthy grass, stressed grass, and trees) form the vegetation class, and the other 12 classes (synthetic grass, soil, water, residential, commercial, road, highway, railway, parking lot 1, parking lot 2, tennis court, and running track) form the non-vegetation class. It should be noted that synthetic grass has the same appearance as real grass in terms of color and texture but was not considered vegetation. The OA and AA results for two-class classification are shown in Table 5 and Table 6. For some datasets, the accuracies of all methods rise sharply, with some standard methods (ASD, MSD, RXD) nearing OA values of 95%. The kernel methods also see drastic improvements, with a majority reaching 90% accuracy or higher for those datasets (DS-44, DS-55, DS-144, DS-145), as can be seen in Table 5. The best results in the two groups are MSD, whose 55-band version returns 94.77% accuracy, and KMSD, whose 44-band case returns 99.40%, missing only 78 pixels out of 12,197.
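The grouping into two classes and the two accuracy measures can be sketched as follows. This is a minimal illustration rather than the code used in the experiments: the class-name strings and the helper names `VEGETATION`, `to_binary`, and `overall_and_average_accuracy` are assumptions, and OA and AA are taken in their usual sense (fraction of correctly labeled pixels, and mean of the per-class accuracies).

```python
# Hypothetical class names; the three vegetation classes follow the grouping in the text.
VEGETATION = {"healthy grass", "stressed grass", "trees"}


def to_binary(label):
    """Map a 15-class land cover label to 1 (vegetation) or 0 (non-vegetation)."""
    return 1 if label in VEGETATION else 0


def overall_and_average_accuracy(y_true, y_pred):
    """Return (OA, AA) for two lists of class labels of equal length.

    OA is the fraction of correctly labeled pixels; AA is the mean of the
    per-class accuracies, so small classes weigh as much as large ones.
    """
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    oa = correct / len(y_true)
    per_class = []
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        per_class.append(sum(y_pred[i] == c for i in idx) / len(idx))
    aa = sum(per_class) / len(per_class)
    return oa, aa


# Toy example: a tree predicted as grass still counts as a correct vegetation
# detection, while synthetic grass predicted as healthy grass is a false alarm.
truth = ["trees", "healthy grass", "synthetic grass", "road", "soil"]
pred = ["healthy grass", "healthy grass", "healthy grass", "road", "soil"]
y_true = [to_binary(x) for x in truth]
y_pred = [to_binary(x) for x in pred]
oa, aa = overall_and_average_accuracy(y_true, y_pred)
```

In this toy case, confusions inside the vegetation group disappear after the mapping, which is why two-class accuracies can be much higher than the corresponding 15-class accuracies.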

The main objective of our investigation was to compare the performance of all the investigated methods with that of the NDVI and EVI techniques. In this setting, SVM using only the RGB and NIR bands (DS-4: four bands) without EMAP surprisingly provided an overall accuracy of 99.54%, whereas the overall accuracy of the NDVI technique was 93.74%. SVM with four bands also outperformed SVM with all 144 bands, which achieved 96.66%. In the two-class setting, we also observed that EMAP improved the accuracies of several methods, but SVM was not among them.

The LiDAR band also slightly helped the SR performance, which reached 98.29%, but the gain is only about 0.77%. For other methods, the effect of the LiDAR band is larger but not always positive: for KMSD, the improvement is a more substantial 7.08%, whereas for MSD the accuracy drops by 8.88%.

For two-class classification, CNN did not yield the best performance. We think that this is because CNN has good intra-class classification performance (separating classes within the vegetation group or within the non-vegetation group), but not necessarily good inter-class classification performance (separating vegetation from non-vegetation). Further explanations are given in Section 3.3.

For completeness, 2 × 2 confusion matrices of vegetation and non-vegetation of all the nine conventional algorithms and six datasets are shown in Table 7, Table 8 and Table 9. Table 10 contains the results for the deep learning method, CNN. Because these results are much more compact than 15 × 15 confusion matrices, they can easily be included in the body of this paper.

The confusion matrices for the vegetation detection results (vegetation vs. non-vegetation) in Table 7, Table 8, Table 9 and Table 10 for the 10 algorithms can now be compared with the NDVI and EVI results.

Table 11 shows the results for NDVI. Given that these index-based methods are fast to compute and do not need any training data, we conclude that the resulting percentages for NDVI are quite good. With an overall accuracy of 93.74%, NDVI beats 23 of the 45 scenarios, where one of the best overall accuracies, 99.54%, comes from the SVM case using four bands. However, NDVI does have limitations: it can only differentiate between vegetation and non-vegetation and cannot classify different types of vegetation. In some cases, such as construction surveying, differentiating trees from grass is important. The EVI results are shown in Table 12. The detection results for EVI are not good for this dataset, even though EVI may perform better than NDVI in other scenarios [11].
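For reference, the two indices can be computed per pixel as in the sketch below. It uses the standard NDVI definition and the commonly used MODIS-style EVI coefficients (gain 2.5, 6, 7.5, and 1), which were designed for reflectance values. The decision threshold, the toy pixel values, and the function names are assumptions made for illustration; they are not the settings used in the experiments.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel."""
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, l_adj=1.0):
    """Enhanced vegetation index with the commonly used MODIS-style coefficients."""
    denom = nir + c1 * red - c2 * blue + l_adj
    return 0.0 if denom == 0 else gain * (nir - red) / denom


def detect_vegetation(index_values, threshold):
    """Label a pixel as vegetation (1) when its index exceeds the threshold."""
    return [1 if v > threshold else 0 for v in index_values]


# Toy pixels as (nir, red, blue) values scaled to [0, 1]; illustrative only.
pixels = [
    (0.50, 0.08, 0.04),  # vegetation-like: high NIR, low red
    (0.30, 0.25, 0.20),  # pavement-like: nearly flat spectrum
]
ndvi_values = [ndvi(n, r) for n, r, _ in pixels]
evi_values = [evi(n, r, b) for n, r, b in pixels]
mask = detect_vegetation(ndvi_values, threshold=0.3)  # threshold is an assumption
```

Neither index needs training data: the only free parameter in this sketch is the threshold, which would have to be chosen for the data at hand.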

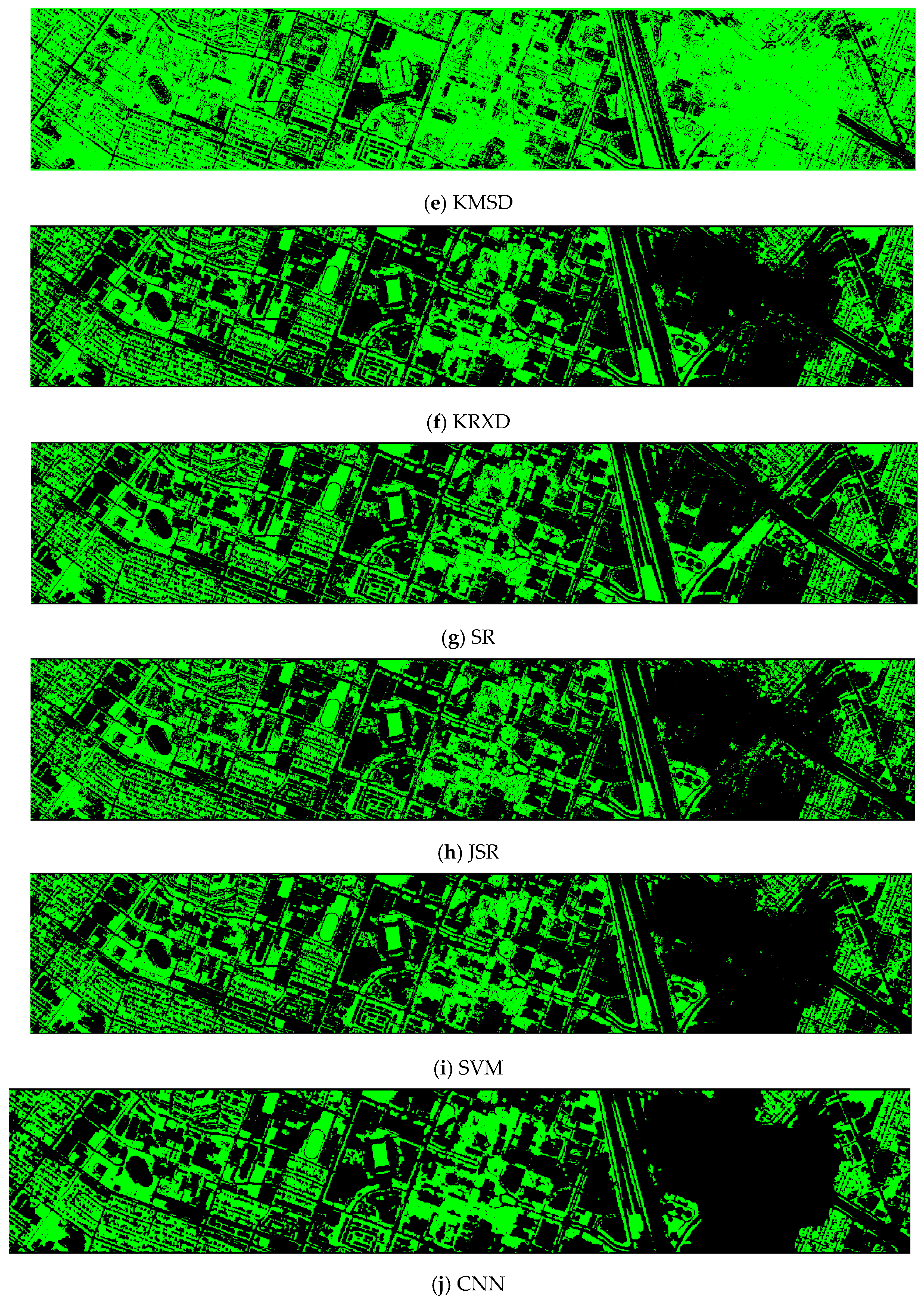

Finally, we include some vegetation detection maps obtained with different methods. Figure 7 shows the detection maps for the 4-band case. There are twelve maps: ASD, MSD, RXD, KASD, KMSD, KRXD, SR, JSR, SVM, CNN, NDVI, and EVI. It can be seen that the ASD, MSD, KASD, and KRXD maps are not good. EVI has more false positives and NDVI has more missed detections. SR, JSR, SVM, and CNN have comparable performance. Vegetation maps for DS-5, DS-44, DS-45, DS-144, and DS-145 can be found in Figure A1, Figure A2, Figure A3, Figure A4 and Figure A5, respectively, in Appendix A.

3.3. Discussion

Here, we summarize some observations and offer some plausible explanations for them.

In Section 1, we raised a few important research questions. The first is whether the vegetation detection performance will be better if HS data are used. From Table 5 and Table 6, we can see that the detection performance using HS data is not always better than that using only four bands. There are two possible reasons. First, researchers have observed that land cover classification using HS data does not necessarily yield better results than using fewer bands; see the results in [17,25,40]. This could be due to the curse of dimensionality. It has also been suggested that redundant bands in the HS data may degrade the classification performance. Second, for vegetation detection, many bands in the HS data do not contribute to the detection, because only bands close to the red and NIR bands have a strong influence on vegetation detection.

The second research question raised in Section 1 concerns whether EMAP can help the vegetation detection performance. Based on our experiments, the answer is positive. In the 15-class land cover classification experiments, we observed that the synthetic bands improved the overall classification performance in both OA and AA (see Table 3 and Table 4). In the two-class vegetation detection scenario, we see a similar trend, except in the SVM and JSR cases, where the performance difference between DS-4 and DS-44 is about 2%. In short, synthetic bands help more for low-performing methods such as ASD and MSD.

In Section 1, we also raised the question (Question 3) of whether there exist simple and high-performing land cover classification methods for vegetation detection that may perform better than NDVI, as well as a fourth question about quantifying the performance gain if such methods do exist. Based on our experiments, SVM performs better than the other methods and achieved 99% accurate vegetation detection. The performance gain over NDVI is about 6 percentage points (99.54% versus 93.74%).

The last question raised in Section 1 is whether LiDAR can help vegetation detection. Based on our experiments, the performance gain from using LiDAR is small. This is mainly because most vegetation pixels are grass, a land cover for which height information presumably adds little.

Although EVI has shown more promising vegetation detection than NDVI in some cases, EVI has inferior performance in this application. Since it was mentioned in [11] that EVI is sensitive to topographic changes, we speculate that the poor performance of EVI here is due to topographic variations in the IEEE dataset, given that the University of Houston area is primarily urban and EVI is most responsive to the canopy variations of completely forested areas. Before we carried out our experiments, we anticipated that EVI might perform better than NDVI. However, this turned out not to be the case, which shows that one should not jump to conclusions before carrying out an actual investigation.

Initially, we were puzzled by the SVM results in the 4-band (DS-4) case. From Table 3 and Table 5, we observe that the OA of SVM in the 15-class case is 70.43%. However, the OA of SVM in the two-class case jumps to 99.52%. Why is there such a big jump? This is easiest to explain using the confusion matrices. Table 13 shows the confusion matrix of the 15-class case using 4 bands. The red and blue numbers indicate the vegetation and non-vegetation classes, respectively. We can see that there are over 300 off-diagonal numbers in the red group, indicating that there are mis-classifications among the vegetation classes. Similarly, there are also many off-diagonal blue numbers in the non-vegetation classes. When we aggregate the vegetation and non-vegetation classes in Table 13 into the two-class confusion matrix shown in Table 14, the off-diagonal numbers become much smaller. This means that even though there are many intra-class mis-classifications (confusions within the vegetation group or within the non-vegetation group), there are few inter-class mis-classifications (confusions between the two groups). In other words, SVM did a good job of keeping the mis-classifications between vegetation and non-vegetation classes low.
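The aggregation of a multi-class confusion matrix into a two-class one can be illustrated with a small sketch. The 4 × 4 matrix below is made up for illustration and is not taken from Table 13; the function names and the convention that rows are ground truth and columns are predictions are assumptions.

```python
def collapse_confusion(cm, veg_idx):
    """Collapse a K x K confusion matrix (rows: truth, columns: prediction)
    into a 2 x 2 matrix ordered as [vegetation, non-vegetation]."""
    veg = set(veg_idx)
    out = [[0, 0], [0, 0]]
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            out[0 if i in veg else 1][0 if j in veg else 1] += count
    return out


def overall_accuracy(cm):
    """Fraction of samples on the diagonal of a confusion matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


# Made-up 4-class example: classes 0 and 1 are vegetation, 2 and 3 are not.
cm4 = [
    [60, 35, 5, 0],
    [30, 65, 0, 5],
    [0, 5, 70, 25],
    [0, 0, 40, 60],
]
cm2 = collapse_confusion(cm4, veg_idx=[0, 1])
oa4 = overall_accuracy(cm4)  # 255 / 400
oa2 = overall_accuracy(cm2)  # 385 / 400
```

In this toy example, most errors are confusions inside one group, so they move onto the diagonal after aggregation and the overall accuracy rises from 63.75% to 96.25%. The same mechanism is consistent with the jump observed for SVM.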

It can be seen from Table 3 and Table 4 that CNN outperforms the conventional methods for 15-class classification. However, from Table 5 and Table 6, we observe that the CNN results are not as good as those of SVM. This can be explained by the same analysis as for SVM above. CNN may have better intra-class classification performance, but it may have slightly inferior inter-class classification performance due to the lack of training data.

It is interesting to compare our results with some other deep learning-based approaches. The OAs of three CNN-based methods (CNN, EMAP CNN, and Gabor CNN) in Table 10 of Paoletti et al.’s paper [33] are 82.75, 84.04, and 84.12, respectively. These numbers are slightly better than ours. However, we would like to emphasize that we only used the RGB and NIR bands, whereas those CNN methods use all the hyperspectral bands.

One reviewer brought up an important issue about imbalanced data in Table 1. It is true that imbalanced data can lower the classification accuracies of classes with fewer samples. We did try to augment the classes with fewer samples. For instance, we introduced duplicated samples, added slightly noisy samples, and randomly downsampled the classes with more samples. However, the performance metrics did not change much. In some cases, the accuracies were actually worse than without augmentation. We believe that the key limiting factor in improving the CNN is that the training sample size is too small: there are only 2832 samples for training and 12,197 samples for testing (see Table 1). For deep learning methods to flourish, it is necessary to have a lot of training data. Unfortunately, due to the lack of training data in the Houston data, the power of CNN cannot be fully realized. Indeed, for other CNN methods [33] on the same dataset, the overall accuracy is only slightly above 80%. This implies that the lack of training data is the key limiting factor for the CNN performance.
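The three balancing strategies mentioned above (duplication, small noise perturbation, and random downsampling) can be combined as in the following sketch. It is only an illustration under stated assumptions: the function name `rebalance`, the target class size, the noise level, and the toy pixel values are all hypothetical and do not reproduce the settings used in our experiments.

```python
import random


def rebalance(samples_by_class, target, noise_std=0.01, seed=0):
    """Bring every class to `target` samples.

    Classes with more samples are randomly downsampled; classes with fewer
    samples are padded with duplicates perturbed by small Gaussian noise.
    """
    rng = random.Random(seed)
    balanced = {}
    for label, samples in samples_by_class.items():
        if len(samples) >= target:
            balanced[label] = rng.sample(samples, target)
        else:
            extra = []
            for _ in range(target - len(samples)):
                base = rng.choice(samples)
                extra.append([v + rng.gauss(0.0, noise_std) for v in base])
            balanced[label] = list(samples) + extra
    return balanced


# Toy 4-band pixels; the class sizes are illustrative only.
data = {
    "trees": [[0.1, 0.2, 0.1, 0.6]] * 5,
    "road": [[0.3, 0.3, 0.3, 0.3]] * 50,
}
balanced = rebalance(data, target=20)
```

Note that duplicated and lightly perturbed samples add no genuinely new spectral information, which is one plausible reason why such balancing did not change the metrics much.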

One reviewer pointed out that NDVI and EVI should be calculated using reflectance data. However, the Houston dataset contains only radiance data, and transforming radiance to reflectance requires atmospheric compensation tools, which is outside the scope of our research. Moreover, according to a discussion thread on ResearchGate [41], it is still possible to use radiance to compute NDVI or EVI. One contributor to that thread, Dr. Fernando Camacho de Coca, who is a physicist, argued that “Even it, in general, is much convenient to use reflectance at the top of the canopy to better normalize atmospheric effects, it is not always needed. It could depend on what is the use of the NDVI. For instance, if you want to make a regression with ground information using high resolution data (e.g., Landsat), to use a NDVI_rad is perfectly valid. However, if you want to use NDVI for multi-temporal studies to assess for instances changes in the vegetation canopy, you must use NDVI_ref to assure that observed changes are no affected by atmospheric effects.” Since our application is not about “instances changes in the vegetation canopy”, the use of radiance to compute NDVI or EVI is valid.

We would also like to argue that the hyperspectral imager was onboard a helicopter, which flew at a very low altitude above the ground. Consequently, the atmospheric effects between the imager and the ground were almost negligible and can be ignored. In other words, the Houston hyperspectral dataset was not collected at the “top of the atmosphere (TOA)”. In addition, we observed that the NDVI results are quite accurate (Table 11), meaning that the use of radiance is valid in this special case of low-flying airborne data acquisition.

The nine land cover classification algorithms vary a lot in computational complexity. In our earlier papers, we compared them and found that SVM was the most efficient. Using an Intel® Core™ i7-4790 CPU and a GeForce GTX TITAN Black GPU, it took about 5 min for SVM to process the DS-4 dataset. Details can be found in [17,19] and are omitted here for brevity. The CNN method requires a GPU, so it is not fair to compare CNN’s computational times with those of the conventional methods.