Crop Classification Using Multi-Temporal Sentinel-2 Data in the Shiyang River Basin of China

Abstract

1. Introduction

- (1) explore what degree of accuracy can be achieved for crop mapping when using multi-temporal, multi-spectral Sentinel-2 images and a random forest model in the Shiyang River Basin;

- (2) identify the influence of the spectral and temporal information of Sentinel-2 on crop classification, and the suitable feature selection strategies;

- (3) explore how early in the growing season the crops can be classified with acceptable accuracy.

2. Study Area and Data

2.1. Study Area

2.2. Data

2.2.1. Remote Sensing Data

2.2.2. Ground Truth Dataset

2.2.3. Pre-Extraction of Cropland

3. Crop Classification Methods

3.1. Crop Classification Model and the Accuracy Assessment

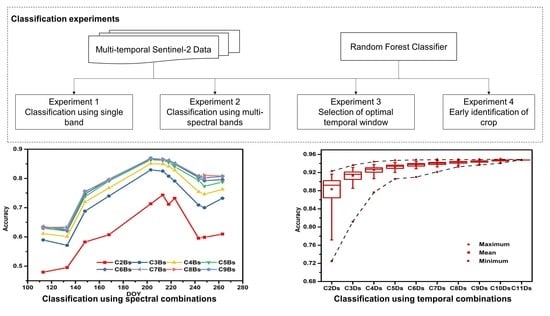

3.2. Experiment Design

3.2.1. Classification Using Single Band

3.2.2. Classification Using Multi-Spectral Bands

3.2.3. Selection of the Optimal Temporal Window

3.2.4. Early Identification of Crops

4. Results

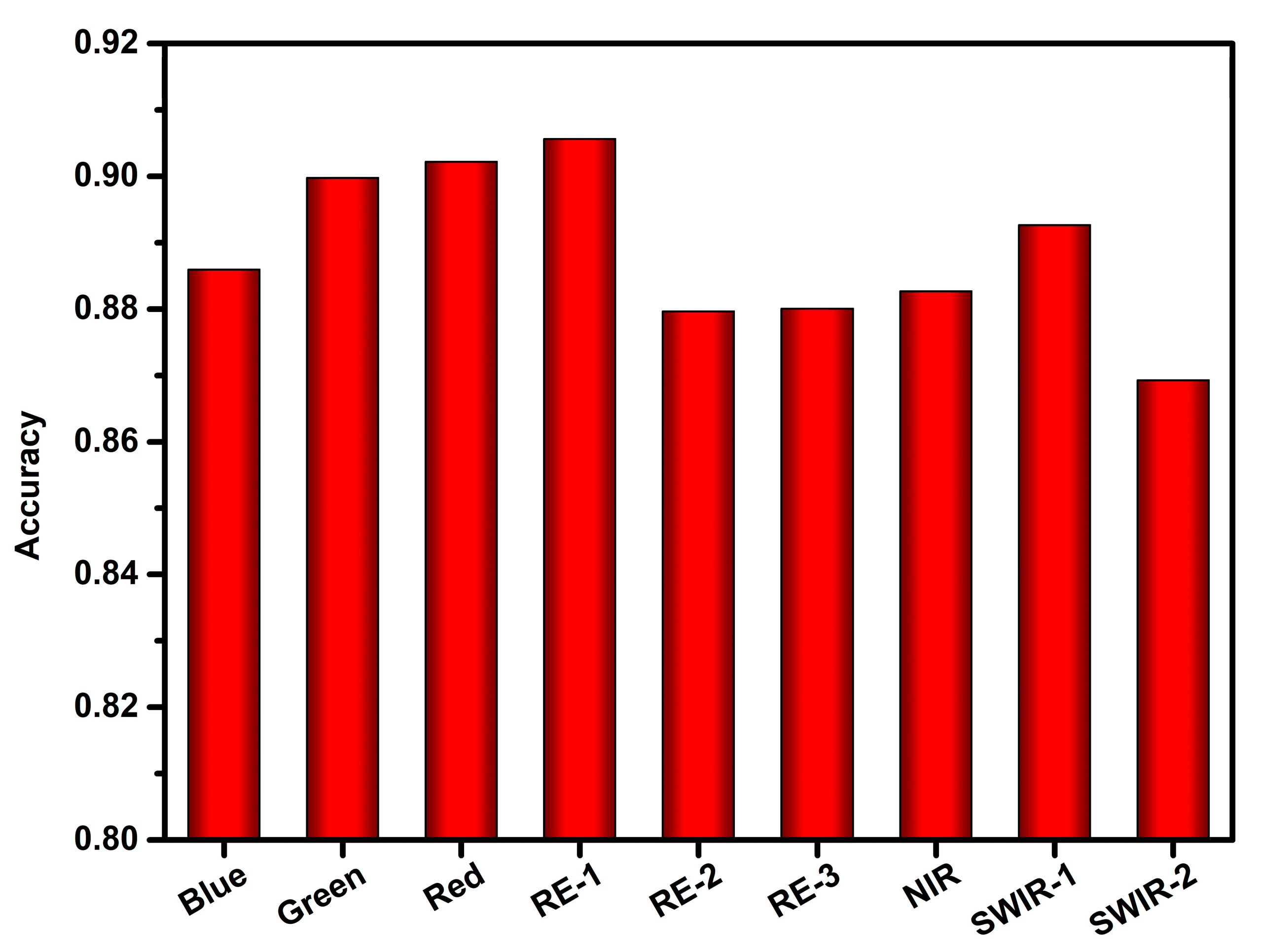

4.1. Crop Classification Accuracy Using Single Band

4.2. Crop Classification Accuracy Using Multi-Spectral Bands

4.2.1. Spectral Combinations on Single Date

4.2.2. Spectral Combinations with Multi-Temporal Information

4.3. Selecting Optimal Temporal Window

4.4. Early Identification of Crop Types

4.5. Basin-Scale Crop Classification Mapping

5. Discussion

5.1. The Impact of Multi-Spectral Information on Crop Classification

5.2. The Impact of Temporal Information on Crop Classification

5.3. The Selection Strategy of Spectral-Temporal Features

5.4. Limitations

6. Conclusions

- (1) A reasonable choice of spectral band combinations can effectively improve crop classification accuracy. The RE-1 and SWIR-1 bands of Sentinel-2 are more effective for identifying crops than the other bands in the Shiyang River Basin.

- (2) In single-date crop classification, images from the middle of the growing season are the most important.

- (3) Images covering the early, middle and late stages of the growing season are indispensable for optimal crop classification performance. In this study, four images from the key temporal window gave the best trade-off between classification accuracy and the number of images used.

- (4) Sentinel-2 data combined with the RF method have potential for the early detection of crops. In the Shiyang River Basin, in-season classification could be advanced to late July (DOY 210), with the overall accuracy reaching 0.9. Wheat could be identified accurately as early as mid-June (one month before harvest). Alfalfa could be mapped as early as mid-June (the first harvest). Sunflower, Melon2, fennel and corn could be recognized as early as early August (one month before harvest).

Author Contributions

Funding

Conflicts of Interest


| Crop Type | Code | Sowing Window | Peak Greenness | Harvest Window |
|---|---|---|---|---|
| Wheat | WH | Early April | Mid-June | Mid-July |
| Corn | CO | Late April | Mid-July | Mid-September |
| Melon1 | M1 | Late April | Mid-July | Late August |
| Melon2 | M2 | May | Early August | September |
| Fennel | FN | Late April | Mid-July | Late August |
| Sunflower | SF | Late April | July | Late August |
| Sunflower & Melon | SM | April–May | Early August | September |
| Alfalfa | AL | Three harvests from March to October | | |

| Acquisition Date | Day of Year (DOY) | Number of Images |
|---|---|---|
| 23 April 2019 | 113 | 11 |
| 13 May 2019 | 133 | 11 |
| 28 May 2019 | 148 | 11 |
| 17 June 2019 | 168 | 11 |
| 22 July 2019 | 203 | 11 |
| 1 August 2019 | 213 | 11 |
| 6 August 2019 | 218 | 11 |
| 11 August 2019 | 223 | 11 |
| 31 August 2019 | 243 | 11 |
| 5 September 2019 | 248 | 11 |
| 20 September 2019 | 263 | 11 |
| Total | | 121 |
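
The DOY values in the acquisition table can be converted back to calendar dates as a consistency check. The following is a minimal sketch using only the Python standard library; the helper name `doy_to_date` is hypothetical and not part of the original study.

```python
from datetime import date, timedelta

def doy_to_date(year: int, doy: int) -> date:
    """Convert a 1-based day of year into a calendar date."""
    return date(year, 1, 1) + timedelta(days=doy - 1)

# DOY values copied from the acquisition table (all in 2019).
DOYS = [113, 133, 148, 168, 203, 213, 218, 223, 243, 248, 263]

dates = [doy_to_date(2019, doy) for doy in DOYS]

for doy, d in zip(DOYS, dates):
    # Prints one "DOY  ISO date" pair per acquisition.
    print(f"{doy:3d}  {d.isoformat()}")
```

In a non-leap year such as 2019, DOY 213 falls on 1 August, so DOY 223 falls ten days later, on 11 August.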

| Band | Name | Central Wavelength (nm) | Bandwidth (nm) | Spatial Resolution (m) |
|---|---|---|---|---|
| 2 | Blue | 490 | 65 | 10 |
| 3 | Green | 560 | 35 | 10 |
| 4 | Red | 665 | 30 | 10 |
| 5 | RE-1 | 705 | 15 | 20 |
| 6 | RE-2 | 740 | 15 | 20 |
| 7 | RE-3 | 783 | 20 | 20 |
| 8 | NIR | 842 | 115 | 10 |
| 11 | SWIR-1 | 1610 | 90 | 20 |
| 12 | SWIR-2 | 2190 | 180 | 20 |

| Class | Training Fields | Testing Fields | Training Pixels | Testing Pixels |
|---|---|---|---|---|
| Wheat | 20 | 9 | 1973 | 663 |
| Corn | 40 | 22 | 2118 | 740 |
| Melon1 | 18 | 7 | 943 | 140 |
| Melon2 | 19 | 8 | 916 | 372 |
| Fennel | 20 | 10 | 954 | 595 |
| Sunflower | 23 | 16 | 1494 | 1028 |
| Sunflower and Melon | 16 | 10 | 813 | 378 |
| Alfalfa | 20 | 10 | 1900 | 1009 |

Spectral Bands and Overall Accuracy (OA) of Best Combinations

| DOY | C2Bs | OA | C3Bs | OA | C4Bs | OA | C5Bs | OA |
|---|---|---|---|---|---|---|---|---|
| 203 | B5, B11 | 0.71 | B4, B5, B11 | 0.83 | B4, B6, B11, B12 | 0.85 | B4, B6, B8, B11, B12 | 0.86 |
| 213 | B5, B11 | 0.74 | B5, B11, B12 | 0.83 | B4, B5, B8, B11 | 0.85 | B4, B5, B8, B11, B12 | 0.86 |
| 218 | B5, B11 | 0.71 | B5, B6, B11 | 0.81 | B5, B8, B11, B12 | 0.84 | B2, B5, B8, B11, B12 | 0.85 |
| 223 | B5, B11 | 0.73 | B5, B6, B11 | 0.80 | B5, B8, B11, B12 | 0.83 | B4, B5, B8, B11, B12 | 0.84 |

| Combination | Sentinel-2 Bands | Overall Accuracy |
|---|---|---|
| C2Bs | RE-1, SWIR-1 | 0.94 |
| C3Bs | RE-1, NIR, SWIR-1 | 0.95 |
| C4Bs | Green, RE-1, NIR, SWIR-1 | 0.95 |
| C5Bs | Blue, Red, RE-1, NIR, SWIR-1 | 0.95 |
| C6Bs | Blue, Green, Red, RE-1, NIR, SWIR-1 | 0.95 |
| C7Bs | All bands except Red and SWIR-2 | 0.95 |
| C8Bs | All bands except Red | 0.95 |
| C9Bs | All bands | 0.95 |

| Combination | DOY | Overall Accuracy |
|---|---|---|
| C2Ds | 213, 243 | 0.92 |
| C3Ds | 148, 213, 248 | 0.94 |
| C4Ds | 148, 168, 213, 243 | 0.95 |
| C5Ds | 148, 168, 203, 213, 243 | 0.95 |
| C6Ds | 148, 168, 203, 213, 218, 248 | 0.95 |
| C7Ds | 133, 148, 168, 203, 213, 223, 248 | 0.95 |
| C8Ds | 133, 148, 168, 203, 213, 218, 223, 248 | 0.95 |
| C9Ds | 113, 148, 168, 203, 213, 218, 223, 248, 263 | 0.95 |
| C10Ds | 113, 148, 168, 203, 213, 218, 223, 243, 248, 263 | 0.95 |
| C11Ds | 113, 133, 148, 168, 203, 213, 218, 223, 243, 248, 263 | 0.95 |
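
The trade-off between accuracy and number of images in the date-combination table can be read off programmatically. The sketch below assumes a simple selection rule, "the fewest dates that reach the highest reported OA"; this rule and the function name `smallest_best_combination` are illustrative assumptions, not the authors' stated procedure. The values are copied from the table.

```python
# Best date combination per size, copied from the table: size -> (DOYs, OA).
DATE_COMBINATIONS = {
    2: ([213, 243], 0.92),
    3: ([148, 213, 248], 0.94),
    4: ([148, 168, 213, 243], 0.95),
    5: ([148, 168, 203, 213, 243], 0.95),
    6: ([148, 168, 203, 213, 218, 248], 0.95),
    7: ([133, 148, 168, 203, 213, 223, 248], 0.95),
    8: ([133, 148, 168, 203, 213, 218, 223, 248], 0.95),
    9: ([113, 148, 168, 203, 213, 218, 223, 248, 263], 0.95),
    10: ([113, 148, 168, 203, 213, 218, 223, 243, 248, 263], 0.95),
    11: ([113, 133, 148, 168, 203, 213, 218, 223, 243, 248, 263], 0.95),
}

def smallest_best_combination(results):
    """Return (size, doys, oa) for the smallest combination reaching the top OA.

    Assumed rule (hypothetical): prefer fewer images when accuracy is tied.
    """
    best_oa = max(oa for _, oa in results.values())
    for size in sorted(results):
        doys, oa = results[size]
        if oa == best_oa:
            return size, doys, oa
    raise ValueError("results must not be empty")

size, doys, oa = smallest_best_combination(DATE_COMBINATIONS)
print(size, doys, oa)
```

Under this rule the four-date combination is selected, which agrees with the conclusion that four images give the best trade-off.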

| Actual Types | Classified | Total | PA | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| M1 | FN | SF | SM | AL | M2 | WH | CO | |||

| M1 | 115 | 2 | 1 | 2 | 1 | 19 | 140 | 0.82 | ||

| FN | 1 | 523 | 56 | 1 | 14 | 595 | 0.88 | |||

| SF | 19 | 13 | 979 | 1 | 16 | 1028 | 0.95 | |||

| SM | 1 | 354 | 5 | 7 | 11 | 378 | 0.94 | |||

| AL | 1 | 2 | 999 | 3 | 1 | 3 | 1009 | 0.99 | ||

| M2 | 47 | 1 | 322 | 0 | 2 | 372 | 0.87 | |||

| WH | 4 | 1 | 658 | 663 | 0.99 | |||||

| CO | 1 | 5 | 15 | 41 | 2 | 4 | 672 | 740 | 0.90 | |

| Total | 137 | 548 | 1100 | 398 | 1007 | 331 | 667 | 737 | 4925 | |

| UA | 0.84 | 0.95 | 0.89 | 0.89 | 0.99 | 0.97 | 0.99 | 0.91 | ||

| OA = 0.94 | Kappa = 0.93 | |||||||||
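
The summary statistics of the confusion matrix above can be recomputed from its diagonal and its row and column totals alone. The sketch below uses the standard definitions of producer's accuracy (PA), user's accuracy (UA), overall accuracy (OA) and Cohen's kappa; the variable names are illustrative, and the numbers are copied from the matrix.

```python
# Class order as in the matrix header.
CLASSES = ["M1", "FN", "SF", "SM", "AL", "M2", "WH", "CO"]
# Correctly classified pixels (matrix diagonal).
DIAGONAL = [115, 523, 979, 354, 999, 322, 658, 672]
# Reference pixels per class ("Total" column).
ROW_TOTALS = [140, 595, 1028, 378, 1009, 372, 663, 740]
# Pixels assigned to each class ("Total" row).
COL_TOTALS = [137, 548, 1100, 398, 1007, 331, 667, 737]

n = sum(ROW_TOTALS)  # total number of test pixels

# PA = correct / reference total; UA = correct / classified total.
pa = {c: d / r for c, d, r in zip(CLASSES, DIAGONAL, ROW_TOTALS)}
ua = {c: d / k for c, d, k in zip(CLASSES, DIAGONAL, COL_TOTALS)}

# OA is the observed agreement p_o.
oa = sum(DIAGONAL) / n

# Chance agreement p_e from the marginals, then kappa = (p_o - p_e) / (1 - p_e).
p_e = sum(r * k for r, k in zip(ROW_TOTALS, COL_TOTALS)) / (n * n)
kappa = (oa - p_e) / (1 - p_e)

print(f"OA = {oa:.2f}, Kappa = {kappa:.2f}")
for c in CLASSES:
    print(f"{c}: PA = {pa[c]:.2f}, UA = {ua[c]:.2f}")
```

Because kappa depends only on the diagonal and the marginals, the check does not require the off-diagonal cells.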

| Actual Types | Classified | Total | PA | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| M1 | FN | SF | SM | AL | M2 | WH | CO | |||

| M1 | 120 | 5 | 15 | 140 | 0.86 | |||||

| FN | 0 | 561 | 20 | 14 | 595 | 0.94 | ||||

| SF | 26 | 10 | 985 | 7 | 1028 | 0.96 | ||||

| SM | 357 | 11 | 2 | 8 | 378 | 0.94 | ||||

| AL | 2 | 1 | 999 | 2 | 0 | 5 | 1009 | 0.99 | ||

| M2 | 1 | 51 | 0 | 320 | 0 | 372 | 0.86 | |||

| WH | 4 | 659 | 663 | 0.99 | ||||||

| CO | 1 | 1 | 12 | 49 | 1 | 2 | 674 | 740 | 0.91 | |

| Total | 147 | 577 | 1070 | 407 | 1011 | 329 | 661 | 723 | 4925 | |

| UA | 0.82 | 0.97 | 0.92 | 0.88 | 0.99 | 0.97 | 0.99 | 0.93 | ||

| OA = 0.95 | Kappa = 0.94 | |||||||||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yi, Z.; Jia, L.; Chen, Q. Crop Classification Using Multi-Temporal Sentinel-2 Data in the Shiyang River Basin of China. Remote Sens. 2020, 12, 4052. https://doi.org/10.3390/rs12244052
