Mapping Burn Extent of Large Wildland Fires from Satellite Imagery Using Machine Learning Trained from Localized Hyperspatial Imagery

Abstract

1. Introduction

1.1. Background

1.1.1. Wildland Fire Severity and Extent

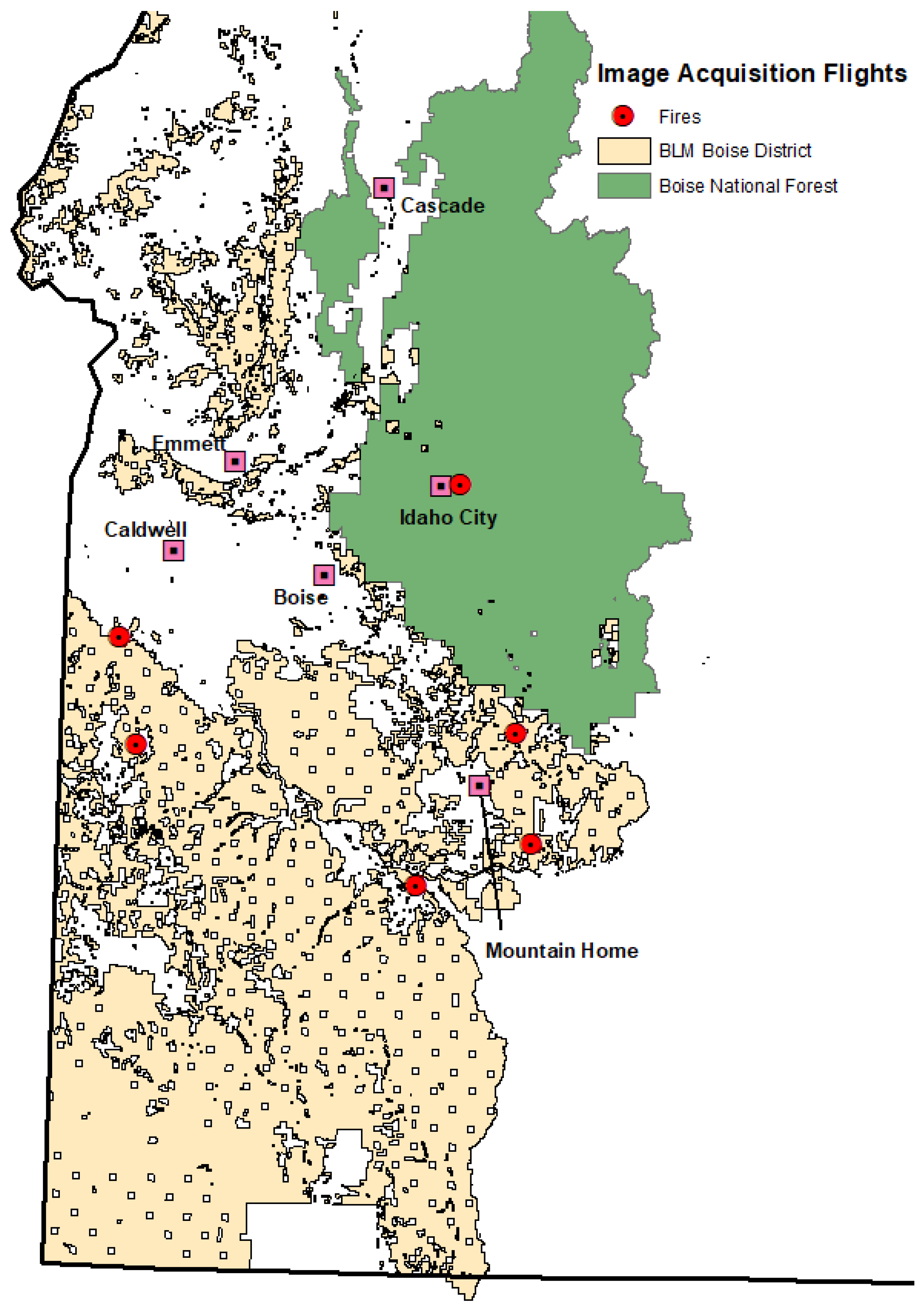

1.1.2. On Origins of Fires Used in This Study

1.1.3. Utilization of sUAS for Mapping Wildland Fire

1.1.4. Thirty Meter Burn Severity Mapping Analytics Trained with Hyperspatial Classification

- The hyperspatial (5 cm) orthomosaics were resampled to a medium resolution of 30 m, equivalent to the spatial resolution of Landsat imagery. The spatial reference of the 30 m imagery was derived from the spatial reference of the 5 cm orthomosaic.

- Labeling 30 m training pixels using fuzzy logic by:

    - Calculating the density of each 5 cm burn severity class within each 30 m pixel, where density is the percentage of the 5 cm pixels inside the containing 30 m pixel that belong to that class.

    - Applying fuzzification, in which the post-fire class density determines where fuzzy set membership transitions from 0 to 1 over a range of values. Burn extent transitioned between unburned and burned when 35 to 65 percent of the 5 cm pixels were unburned, as shown in Figure 3. Burn severity transitioned between low and high biomass consumption when 33% to 50% of the burned pixels were classified as white ash [25], as shown in Figure 4.

    - Activating fuzzy rules. When a series of fuzzy if statements is evaluated, as shown in Figure 5, the data are defuzzified by activating the action associated with the if expression that has the highest value, where a fuzzy AND evaluates to the minimum of its operands.

- Each 30 m pixel was assigned a training label, and the SVM was trained on 70 percent of the labeled 30 m pixels. The remaining 30 percent were withheld to validate the SVM.
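The resampling and spatial-reference step can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a GDAL-style six-element geotransform for a north-up raster, keeps the 5 cm orthomosaic's upper-left origin for the 30 m grid, drops partial blocks at the raster edge, and aggregates pixel values by block mean (the resampling kernel is not stated in the text). All function names are hypothetical. At 5 cm to 30 m, each coarse pixel spans 600 × 600 = 360,000 fine pixels.

```python
import math


def block_factor(fine_res, target_res):
    """Fine pixels along one side of a coarse pixel (600 for 5 cm -> 30 m)."""
    factor = target_res / fine_res
    rounded = round(factor)
    if not math.isclose(factor, rounded):
        raise ValueError("target resolution must be an integer multiple of the fine resolution")
    return rounded


def coarse_geotransform(fine_gt, target_res):
    """Derive the 30 m grid's geotransform from the 5 cm geotransform.

    Assumes a GDAL-style tuple (origin_x, pixel_width, row_rotation,
    origin_y, column_rotation, pixel_height) for a north-up raster.
    The upper-left origin is kept so each coarse pixel covers whole fine blocks.
    """
    origin_x, _px_w, rot_r, origin_y, rot_c, _px_h = fine_gt
    if rot_r != 0 or rot_c != 0:
        raise ValueError("this sketch only handles north-up rasters")
    return (origin_x, float(target_res), 0.0, origin_y, 0.0, -float(target_res))


def coarse_shape(fine_rows, fine_cols, factor):
    """Whole coarse pixels that fit in the fine raster (partial edge blocks dropped)."""
    return fine_rows // factor, fine_cols // factor


def block_mean(band, factor):
    """Aggregate one band (2-D list) to the coarse grid by block mean (assumed kernel)."""
    rows, cols = coarse_shape(len(band), len(band[0]), factor)
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [
                band[i][j]
                for i in range(r * factor, (r + 1) * factor)
                for j in range(c * factor, (c + 1) * factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out
```

For example, `coarse_geotransform((500000.0, 0.05, 0.0, 4800000.0, 0.0, -0.05), 30)` keeps the origin and returns a 30 m pixel size, so the coarse grid stays registered to the orthomosaic.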
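The class-density calculation can be sketched the same way. The function names and the class names `unburned`, `black_ash`, and `white_ash` are hypothetical placeholders for the hyperspatial classification's labels. Extent density is taken over all 5 cm pixels in the 30 m pixel, whereas the severity input is the white-ash share of only the burned 5 cm pixels, matching the two denominators described in the fuzzification step.

```python
from collections import Counter


def class_density(labels, factor):
    """Percentage of fine (5 cm) pixels of each class inside every coarse (30 m) pixel.

    labels: 2-D list of class names from the hyperspatial classification.
    factor: fine pixels per coarse pixel side (600 for 5 cm -> 30 m).
    Returns a 2-D list of {class_name: percent} dictionaries.
    """
    rows, cols = len(labels) // factor, len(labels[0]) // factor
    block_size = factor * factor
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            counts = Counter(
                labels[i][j]
                for i in range(r * factor, (r + 1) * factor)
                for j in range(c * factor, (c + 1) * factor)
            )
            row.append({cls: 100.0 * n / block_size for cls, n in counts.items()})
        grid.append(row)
    return grid


def white_ash_percent(density, white="white_ash", unburned="unburned"):
    """Share of burned fine pixels classified as white ash (hypothetical class names)."""
    burned = 100.0 - density.get(unburned, 0.0)
    return 0.0 if burned == 0 else 100.0 * density.get(white, 0.0) / burned
```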
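Fuzzification with the thresholds given above (35 to 65 percent unburned for extent; 33% to 50% white ash for severity) can be sketched as follows. The transition shape inside each range is assumed to be a linear ramp, since Figures 3 and 4 are not reproduced here, and the complementary memberships (unburned = 1 − burned, low = 1 − high) are likewise assumptions. The function names are hypothetical.

```python
def ramp(x, lo, hi):
    """Linear membership: 0 at or below lo, 1 at or above hi (assumed shape)."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def extent_memberships(pct_unburned):
    """Fuzzify burn extent from the percentage of 5 cm pixels that are unburned.

    Membership moves between burned and unburned over 35-65 % unburned.
    """
    unburned = ramp(pct_unburned, 35.0, 65.0)
    return {"unburned": unburned, "burned": 1.0 - unburned}


def severity_memberships(pct_white_ash):
    """Fuzzify severity from the percentage of burned pixels classified as white ash.

    Membership moves between low and high biomass consumption over 33-50 % white ash.
    """
    high = ramp(pct_white_ash, 33.0, 50.0)
    return {"low": 1.0 - high, "high": high}
```

A 30 m pixel that is 50 percent unburned sits exactly halfway through the extent transition, so both extent memberships are 0.5.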
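Rule activation and defuzzification can be sketched with a fuzzy AND implemented as `min` and the winning action chosen by the largest rule activation. The three-rule base below is a hypothetical stand-in for the rules in Figure 5, which are not reproduced here; the inputs are dictionaries of membership degrees keyed `unburned`/`burned` and `low`/`high` (hypothetical names).

```python
def fuzzy_and(*memberships):
    """Fuzzy AND: the minimum of the operands."""
    return min(memberships)


def activate_rules(extent, severity):
    """Evaluate a hypothetical rule base and defuzzify by the strongest rule.

    extent:   {"unburned": m, "burned": m}
    severity: {"low": m, "high": m}
    Returns the winning label and every rule's activation.
    """
    activations = {
        # IF unburned THEN label unburned
        "unburned": extent["unburned"],
        # IF burned AND low consumption THEN label low biomass consumption
        "low_consumption": fuzzy_and(extent["burned"], severity["low"]),
        # IF burned AND high consumption THEN label high biomass consumption
        "high_consumption": fuzzy_and(extent["burned"], severity["high"]),
    }
    # Defuzzify: select the action whose if expression has the highest value.
    label = max(activations, key=activations.get)
    return label, activations
```

With `extent = {"unburned": 0.2, "burned": 0.8}` and `severity = {"low": 0.3, "high": 0.7}`, the activations are 0.2, 0.3, and 0.7, so the pixel is labeled `high_consumption`. Ties resolve to the first rule listed, because `max` returns the first maximal key in insertion order.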
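The 70/30 hold-out split can be sketched with the standard library. The random, unstratified shuffle and the fixed seed are assumptions, since the text does not say how the validation pixels were drawn, and `holdout_split` is a hypothetical name. scikit-learn's `train_test_split` with `test_size=0.3` is a common alternative that also supports stratification by class.

```python
import random


def holdout_split(samples, train_fraction=0.7, seed=0):
    """Randomly split labeled 30 m pixels into training and validation sets."""
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed gives a reproducible split
    cut = round(train_fraction * len(items))
    return items[:cut], items[cut:]
```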

2. Materials and Methods

2.1. Assembling Spatial Extent Burn Indicator

2.1.1. Coregistering Hyperspatial and Medium Resolution Images

2.1.2. Creating Hyperspatial Training Data

2.1.3. Resampling Training Data to 30 m Using Fuzzy Logic

2.1.4. Running the SVM on the Satellite Scene

2.1.5. Collecting Results

3. Results

4. Discussion

5. Conclusions

Implications for Local Management

6. Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Wildland Fire Leadership Council. Available online: https://www.forestsandrangelands.gov/documents/strategy/strategy/CSPhaseIIINationalStrategyApr2014.pdf (accessed on 30 July 2020).

2. Hoover, K.; Hanson, L.A. Wildfire Statistics. Congressional Research Service. October 2019. Available online: https://crsreports.congress.gov/product/pdf/IF/IF10244 (accessed on 14 May 2020).

3. National Interagency Fire Center (NIFC). Suppression Costs. 2020. Available online: https://www.nifc.gov/fireInfo/fireInfo_documents/SuppCosts.pdf (accessed on 18 May 2020).

4. National Interagency Fire Center (NIFC). Wildland Fire Fatalities by Year. 2020. Available online: https://www.nifc.gov/safety/safety_documents/Fatalities-by-Year.pdf (accessed on 18 May 2020).

5. Zhou, G.; Li, C.; Cheng, P. Unmanned aerial vehicle (UAV) real-time video registration for forest fire monitoring. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 29 July 2005.

6. Insurance Information Institute. Facts + Statistics: Wildfires. Available online: https://www.iii.org/fact-statistic/facts-statistics-wildfires (accessed on 18 May 2020).

7. Eidenshink, J.C.; Schwind, B.; Brewer, K.; Zhu, Z.-L.; Quayle, B.; Howard, S.M. A project for monitoring trends in burn severity. Fire Ecol. 2007, 3, 3–21.

8. Keeley, J.E. Fire intensity, fire severity and burn severity: A brief review and suggested usage. Int. J. Wildland Fire 2009, 18, 116–126.

9. Key, C.H.; Benson, N.C. Landscape Assessment (LA). In FIREMON: Fire Effects Monitoring and Inventory System. Gen. Tech. Rep. RMRS-GTR-164-CD; U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station: Fort Collins, CO, USA, 2016; Volume 164, p. 155.

10. Hamilton, D.; Bowerman, M.; Colwell, J.; Donahoe, G.; Myers, B. A Spectroscopic Analysis for Mapping Wildland Fire Effects from Remotely Sensed Imagery. J. Unmanned Veh. Syst. 2017.

11. Lentile, L.B.; Holden, Z.A.; Smith, A.M.; Falkowski, M.J.; Hudak, A.T.; Morgan, P.; Benson, N.C. Remote sensing techniques to assess active fire characteristics and post-fire effects. Int. J. Wildland Fire 2006, 15.

12. Morgan, P.; Hardy, C.; Swetnam, T.; Rollins, M.; Long, D. Mapping fire regimes across time and space: Understanding coarse and fine-scale fire patterns. Int. J. Wildland Fire 2001, 10, 329–342.

13. Kolden, C.A.; Weisberg, P.J. Assessing Accuracy of Manually-mapped Wildfire Perimeters in Topographically Dissected Areas. Fire Ecol. 2007, 3, 22–31.

14. Escuin, S.; Navarro, R.; Fernandez, P. Fire severity assessment by using NBR (Normalized Burn Ratio) and NDVI (Normalized Difference Vegetation Index) derived from LANDSAT TM/ETM images. Int. J. Remote Sens. 2008, 29, 1053–1073.

15. USDA Forest Service Geospatial Technology and Applications Center (GTAC). Monitoring Trends in Burn Severity. Monit. Trends Burn Sev. 2020. Available online: https://www.mtbs.gov/ (accessed on 20 May 2020).

16. Sparks, A.M.; Boschetti, L.; Smith, A.M.; Tinkham, W.T.; Lannom, K.O.; Newingham, B.A. An accuracy assessment of the MTBS burned area product for shrub–steppe fires in the northern Great Basin, United States. Int. J. Wildland Fire 2015, 24, 70–78.

17. Federal Aviation Administration (FAA). Frequently Asked Questions. Unmanned Aircr. Syst. Freq. Asked Quest. 2020. Available online: https://www.faa.gov/uas/resources/faqs/ (accessed on 20 May 2020).

18. Lebourgeois, V.; Bégué, A.; Labbé, S.; Mallavan, B.; Prévot, L.; Roux, B. Can commercial digital cameras be used as multispectral sensors? A crop monitoring test. Sensors 2008, 8, 7300–7322.

19. Hamilton, D.; Hamilton, N.; Myers, B. Evaluation of Image Spatial Resolution for Machine Learning Mapping of Wildland Fire Effects. In Proceedings of the SAI Intelligent Systems Conference, London, UK, 5–6 September 2019; pp. 400–415.

20. Rango, A.; Laliberte, A.; Herrick, J.E.; Winters, C.; Havstad, K.; Steele, C.; Browning, D. Unmanned aerial vehicle-based remote sensing for rangeland assessment, monitoring, and management. J. Appl. Remote Sens. 2009, 3, 033542.

21. Laliberte, A.S.; Herrick, J.E.; Rango, A.; Winters, C. Acquisition, orthorectification, and object-based classification of unmanned aerial vehicle (UAV) imagery for rangeland monitoring. Photogramm. Eng. Remote Sens. 2010, 76, 661–672.

22. Hamilton, D. Improving Mapping Accuracy of Wildland Fire Effects from Hyperspatial Imagery Using Machine Learning; The University of Idaho: Moscow, ID, USA, 2018.

23. Goodwin, J.; Hamilton, D. Archaeological Imagery Acquisition and Mapping Analytics Development; Boise National Forest: Boise, ID, USA, 2019.

24. National Wildfire Coordinating Group (NWCG). Size Class of Fire. 2020. Available online: www.nwcg.gov/term/glossary/size-class-of-fire (accessed on 20 May 2020).

25. Lewis, S.; Robichaud, P.; Frazier, B.; Wu, J.; Laes, D. Using hyperspectral imagery to predict post-wildfire soil water repellency. Geomorphology 2008, 95, 192–205.

26. Gonzalez, R.; Woods, R. Digital Image Processing; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2008.

27. Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2010.

28. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann: Boston, MA, USA, 2012.

29. National Aeronautics and Space Administration (NASA). Landsat 8 Bands. 21 July 2020. Available online: https://landsat.gsfc.nasa.gov/landsat-8/landsat-8-bands/ (accessed on 22 July 2020).

30. Hamilton, D.; Hann, W. Mapping landscape fire frequency for fire regime condition class. In Proceedings of the Large Fire Conference, Missoula, MT, USA, 19–23 May 2014.

| Fire | 5 cm Extent | 30 m Extent Results | 5 cm Severity | 30 m Severity Results |
|---|---|---|---|---|
| Elephant | 98.5 | 58.52 | 98.67 | 95.30 |
| MM106 | 86.58 | 71.5 | 98.68 | 100 |
| Hoodoo | 99.29 | 73.92 | 95.22 | 41.50 |
| Immigrant | 98.34 | 77.67 | 97.97 | 100 |
| Jack | 94.74 | 57.09 | 96.97 | 19.06 |
| Owyhee | 87.65 | 85.40 | 87.42 | 100 |
| Mean | 93.32 | 73.116 | 95.252 | 72.112 |

| Fire | 30 m Color Extent | 30 m IR Extent | 30 m Color Severity | 30 m IR Severity |
|---|---|---|---|---|
| Elephant | 58.52 | 55.95 | 95.30 | 97.83 |
| MM106 | 71.5 | 69.1 | 100 | 100 |
| Hoodoo | 73.92 | 71.75 | 41.50 | 36.48 |
| Immigrant | 77.67 | 78.19 | 100 | 100 |
| Jack | 57.09 | 56.68 | 19.06 | 17.40 |
| Owyhee | 85.40 | 56.61 | 100 | N/A¹ |
| Mean | 70.68 | 64.71 | 75.98 | 70.34 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hamilton, D.; Levandovsky, E.; Hamilton, N. Mapping Burn Extent of Large Wildland Fires from Satellite Imagery Using Machine Learning Trained from Localized Hyperspatial Imagery. Remote Sens. 2020, 12, 4097. https://doi.org/10.3390/rs12244097
