GNSS/INS-Assisted Structure from Motion Strategies for UAV-Based Imagery over Mechanized Agricultural Fields

Abstract

1. Introduction

- Image matching: Rather than conducting a traditional exhaustive search among the feature descriptors within the images of a stereo-pair, GNSS/INS information is used to reduce the search space. This can mitigate some of the matching ambiguity problems caused by repetitive patterns.

- Relative orientation parameter estimation: GNSS/INS-based ROPs are used as initial values in an iterative ROP estimation and outlier removal procedure.

- Exterior orientation parameter recovery: For this step, two strategies, denoted as partially GNSS/INS-assisted SfM and fully GNSS/INS-assisted SfM, are employed. The first strategy implements a traditional incremental EOP recovery, which derives the image EOPs in a local coordinate system while removing matching outliers. In the second strategy, however, as the georeferencing parameters can be directly derived from the GNSS/INS information, the EOP recovery step is removed from the SfM framework.

- Bundle adjustment: A GNSS/INS-assisted bundle adjustment is conducted to refine the derived object points, camera position and orientation parameters, and/or system calibration parameters. Also, in the fully GNSS/INS-assisted SfM framework, the bundle adjustment step is augmented with a preceding RANSAC strategy for removing matching outliers that were not detected through prior steps.
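To make the GNSS/INS-assisted matching and ROP steps listed above more concrete, the following minimal Python sketch derives relative orientation parameters for a stereo-pair from two sets of GNSS/INS-based EOPs and evaluates the point-to-epipolar-line distance of a candidate match. It is an illustration under stated assumptions, not the implementation used in this study: all function names are hypothetical, each rotation matrix is assumed to map camera coordinates to the mapping frame, the camera is assumed to look along +z, and image points are written as (x, y, f) in pixels relative to the principal point with lens distortion ignored.

```python
import numpy as np


def skew(t):
    """Return the 3x3 skew-symmetric matrix [t]x so that skew(t) @ v == cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def relative_orientation(R1, c1, R2, c2):
    """ROPs of camera 2 relative to camera 1 from two EOP sets.

    R1, R2: camera-to-mapping-frame rotations (assumed convention).
    c1, c2: perspective centre positions in the mapping frame.
    Returns (R12, t12) such that x1_cam = R12 @ x2_cam + t12.
    """
    R12 = R1.T @ R2
    t12 = R1.T @ (np.asarray(c2, float) - np.asarray(c1, float))
    return R12, t12


def essential_from_rop(R12, t12):
    """Essential matrix E satisfying x1^T E x2 = 0 for conjugate points."""
    return skew(t12) @ R12


def epipolar_distance(E, x1, x2):
    """Distance (in the units of x and y) of x2 from the epipolar line of x1 in image 2."""
    line = E.T @ np.asarray(x1, float)
    return abs(line @ np.asarray(x2, float)) / np.hypot(line[0], line[1])


# Toy stereo-pair: identical attitude, 1 m baseline along x, focal length 1000 px.
R1 = np.eye(3)
R2 = np.eye(3)
c1 = np.array([0.0, 0.0, 0.0])
c2 = np.array([1.0, 0.0, 0.0])
f = 1000.0

R12, t12 = relative_orientation(R1, c1, R2, c2)
E = essential_from_rop(R12, t12)

# Project one object point into both images.
X = np.array([0.5, 0.2, 10.0])
p1 = R1.T @ (X - c1)
p2 = R2.T @ (X - c2)
x1 = f * p1 / p1[2]            # (x, y, f) in image 1
x2 = f * p2 / p2[2]            # (x, y, f) in image 2
x2_off = x2 + np.array([0.0, 5.0, 0.0])   # candidate shifted 5 px off the line

d_true = epipolar_distance(E, x1, x2)
d_off = epipolar_distance(E, x1, x2_off)
print(d_true, d_off)
```

A candidate would be kept only if its distance falls below the chosen point-to-epipolar-line threshold, which is how the prior trajectory information shrinks the search space compared with an exhaustive descriptor search.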

2. Related Works

2.1. Image Matching

2.2. Estimation of Relative Orientation Parameters

- The elements of the essential matrix can be only determined up to a scale,

- The essential matrix should have a rank of two, thus the determinant of the matrix should be zero, and

- Two trace constraints [46], as presented in Equation (3), should be satisfied.
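The three properties above can be checked numerically. The sketch below is a hedged illustration: Equation (3) is not reproduced in this section, so the commonly used cubic form of the trace constraint, 2EEᵀE − trace(EEᵀ)E = 0, is assumed here, and the helper names are hypothetical. It builds an essential matrix from an arbitrary rotation and baseline and evaluates the determinant, the trace-constraint residual, and the singular values.

```python
import numpy as np


def skew(t):
    """Skew-symmetric matrix [t]x of a 3-vector."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def essential_residuals(E):
    """Return (determinant, max |trace-constraint residual|, singular values) of E."""
    EEt = E @ E.T
    trace_residual = 2.0 * EEt @ E - np.trace(EEt) * E   # assumed form of the constraint
    singular_values = np.linalg.svd(E, compute_uv=False)
    return np.linalg.det(E), np.abs(trace_residual).max(), singular_values


# Arbitrary test geometry: rotation of 0.1 rad about z and a general baseline.
a = 0.1
R = np.array([[np.cos(a), -np.sin(a), 0.0],
              [np.sin(a), np.cos(a), 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([1.0, 0.2, -0.1])
E = skew(t) @ R

det_E, trace_res, sv = essential_residuals(E)
# Scaling E leaves both constraints satisfied, which is the "up to a scale" property.
det_3E, trace_res_3E, _ = essential_residuals(3.0 * E)
print(det_E, trace_res, sv)
```

For a valid essential matrix the two non-zero singular values are equal and the third is zero, which is another way of stating the rank-two condition together with the trace constraint.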

2.3. Estimation of Exterior Orientation Parameters

3. Data Acquisition System Specifications and Configurations of Case Studies

3.1. Data Acquisition System

3.2. Dataset Description

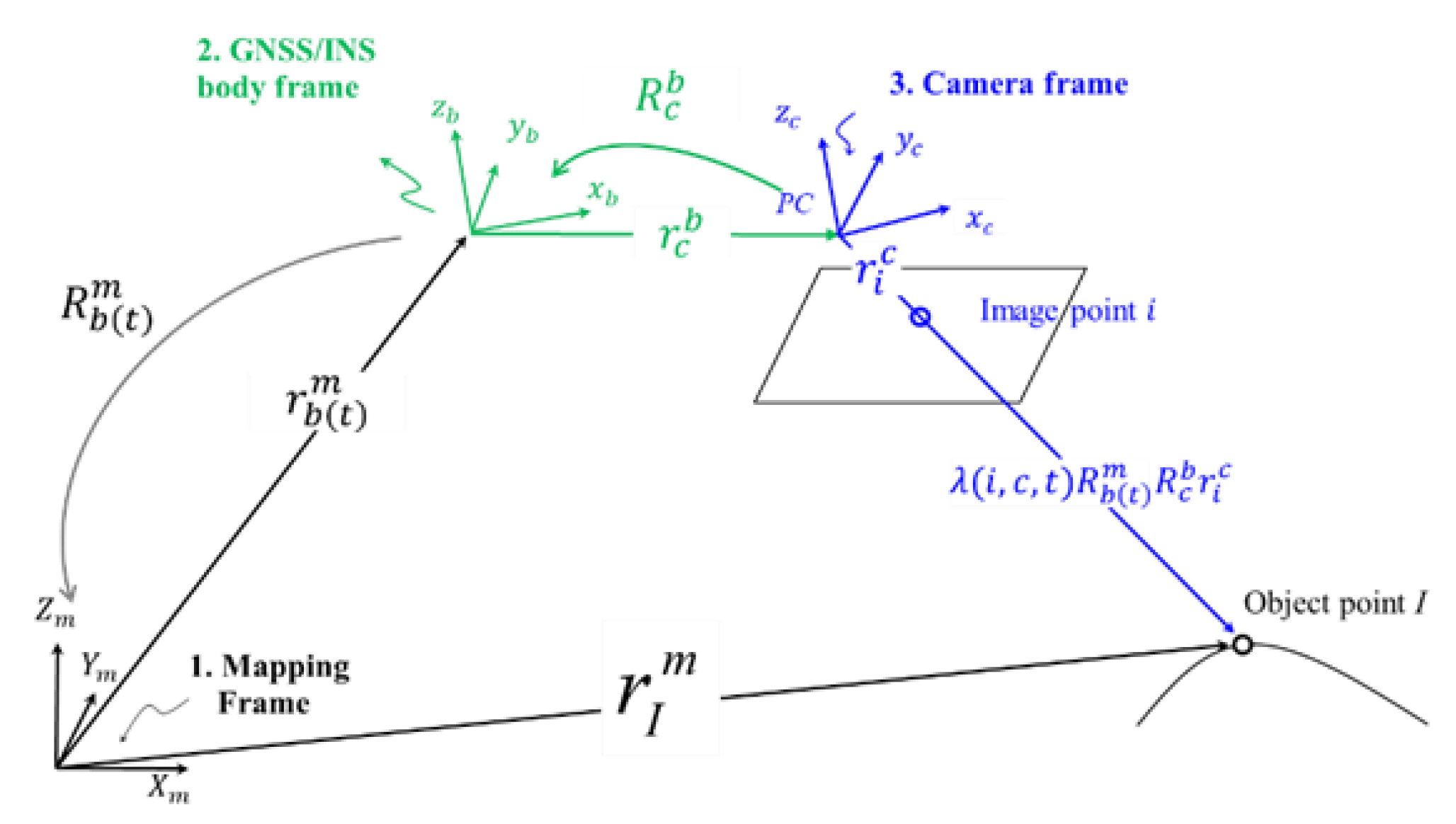

4. Methodology

4.1. Stereo Image Matching

4.2. Automated Relative Orientation

4.3. GNSS/INS-Assisted Bundle Adjustment

4.3.1. Bundle Adjustment when Adopting Partially GNSS/INS-Assisted SfM

4.3.2. Bundle Adjustment when Adopting Fully GNSS/INS-Assisted SfM

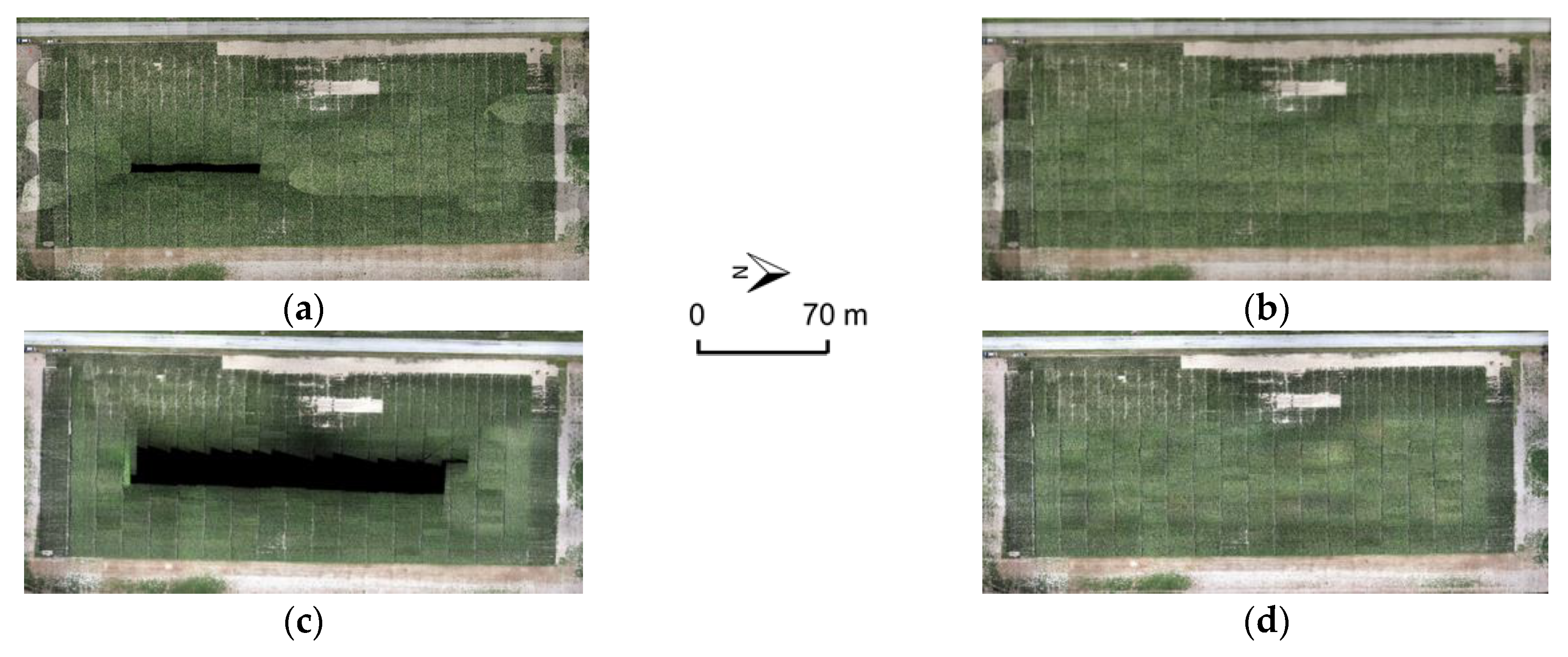

5. Experimental Results and Discussion

5.1. SfM Results for Datasets with Check Points

- Number of BA-input images: This refers to the number of images surviving the preprocessing steps up to bundle adjustment. This criterion indicates the ability of the SfM framework to establish enough conjugate features among the involved imagery. More specifically, for the incremental EOP recovery adopted in the traditional and partially GNSS/INS-assisted SfM approaches, this number counts all the images for which the EOPs have been successfully estimated. For the fully GNSS/INS-assisted SfM framework, on the other hand, this criterion pertains to the number of images with more than N SIFT-based tie points (N = 20 in this study).

- Number of reconstructed object points: This criterion represents the sparsity/density of the SfM-based point cloud.

- Square root of the a-posteriori variance factor resulting from the bundle adjustment process ($\hat{\sigma}_0$): This value illustrates the quality of fit between observations and unknowns as represented by the mathematical model of the modified collinearity equations. Small values of $\hat{\sigma}_0$ indicate small image residuals from the back-projection process using the estimated unknowns.

- RMSE of the check point coordinates: The root-mean-square error (RMSE) values of the differences between the bundle adjustment-derived and surveyed coordinates of the check points reflect the accuracy of the 3D reconstruction.

- Image matching and ROP estimation: This process includes the SIFT detector and descriptor evaluation, feature matching, and ROP estimation while removing some matching outliers. One should note that the partially GNSS/INS-assisted and fully GNSS/INS-assisted frameworks share the same implementations for those steps. Therefore, both frameworks have the same time consumption for these steps.

- BA preparation: When using the partially GNSS/INS-assisted and traditional frameworks, the BA preparation pertains to the incremental approach for EOP recovery, feature tracking, and 3D similarity transformation to bring the derived 3D points from the local frame to the GNSS/INS trajectory reference frame. Given that the traditional and partially GNSS/INS-assisted frameworks deal with a different number of images and object points, the time consumption for these approaches is not expected to be identical. For fully GNSS/INS-assisted SfM, the BA preparation refers to the feature tracking and RANSAC procedure for removing matching outliers.

- BA: This process refers to the implemented GNSS/INS-assisted bundle adjustment process.
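The two accuracy criteria listed above (the square root of the a-posteriori variance factor and the check point RMSE) follow from standard least-squares definitions. The sketch below is a minimal illustration, assuming a diagonal weight matrix and hypothetical function names; in an actual GNSS/INS-assisted bundle adjustment the redundancy would also have to account for the prior trajectory observations.

```python
import numpy as np


def sigma0_hat(residuals, weights, n_unknowns):
    """Square root of the a-posteriori variance factor, sqrt(v^T P v / (n - u)).

    residuals: observation residuals v (e.g., image residuals in pixels).
    weights:   diagonal of the weight matrix P (assumed diagonal here).
    n_unknowns: number of estimated unknowns u.
    """
    v = np.asarray(residuals, dtype=float)
    p = np.asarray(weights, dtype=float)
    redundancy = v.size - n_unknowns
    if redundancy <= 0:
        raise ValueError("redundancy must be positive")
    return np.sqrt(np.sum(p * v * v) / redundancy)


def checkpoint_rmse(estimated_xyz, surveyed_xyz):
    """Per-axis RMSE between adjusted and surveyed check point coordinates."""
    diff = np.asarray(estimated_xyz, dtype=float) - np.asarray(surveyed_xyz, dtype=float)
    return np.sqrt(np.mean(diff ** 2, axis=0))


# Toy numbers only; they are not taken from the experiments.
s0 = sigma0_hat([1.0, -1.0, 2.0, -2.0], [1.0, 1.0, 1.0, 1.0], n_unknowns=2)

surveyed = np.array([[100.00, 200.00, 50.00],
                     [110.00, 210.00, 51.00]])
estimated = surveyed + np.array([[0.03, 0.00, -0.04],
                                 [-0.03, 0.00, 0.04]])
rmse_xyz = checkpoint_rmse(estimated, surveyed)
print(s0, rmse_xyz)
```

Reporting the RMSE per axis keeps planimetric and vertical accuracy separate, which matters for UAV imagery where the vertical component is typically the weakest.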

5.2. SfM Results for Datasets without Check Points

5.3. Comparison with Pix4D Mapper Pro

6. Conclusions and Recommendations for Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Gomiero, T.; Pimentel, D.; Paoletti, M.G. Is there a need for a more sustainable agriculture? Crit. Rev. Plant Sci. 2011, 30, 6–23. [Google Scholar] [CrossRef]

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Toulmin, C. Food security: The challenge of feeding 9 billion people. Science 2010, 327, 812–818. [Google Scholar] [CrossRef]

- Sakschewski, B.; Von Bloh, W.; Huber, V.; Müller, C.; Bondeau, A. Feeding 10 billion people under climate change: How large is the production gap of current agricultural systems? Ecol. Model. 2014, 288, 103–111. [Google Scholar] [CrossRef]

- Wolfert, S.; Ge, L.; Verdouw, C.; Bogaardt, M.J. Big data in smart farming—A review. Agric. Syst. 2017, 153, 69–80. [Google Scholar] [CrossRef]

- Sedaghat, A.; Alizadeh Naeini, A. DEM orientation based on local feature correspondence with global DEMs. GISci. Remote Sens. 2018, 55, 110–129. [Google Scholar] [CrossRef]

- Aixia, D.; Zongjin, M.; Shusong, H.; Xiaoqing, W. Building Damage Extraction from Post-earthquake Airborne LiDAR Data. Acta Geol. Sin. Engl. Ed. 2016, 90, 1481–1489. [Google Scholar] [CrossRef]

- Mohammadi, M.E.; Watson, D.P.; Wood, R.L. Deep Learning-Based Damage Detection from Aerial SfM Point Clouds. Drones 2019, 3, 68. [Google Scholar] [CrossRef]

- Grenzdörffer, G.J.; Engel, A.; Teichert, B. The photogrammetric potential of low-cost UAVs in forestry and agriculture. Int. Arch. Photogramm. Sens. Spat. Inf. Sci. 2008, 31, 1207–1214. [Google Scholar]

- Ravi, R.; Hasheminasab, S.M.; Zhou, T.; Masjedi, A.; Quijano, K.; Flatt, J.E.; Habib, A. UAV-based multi-sensor multi-platform integration for high throughput phenotyping. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV; International Society for Optics and Photonics: Baltimore, MD, USA, 2019; Volume 11008, p. 110080E. [Google Scholar]

- Shi, Y.; Thomasson, J.A.; Murray, S.C.; Pugh, N.A.; Rooney, W.L.; Shafian, S.; Rana, A. Unmanned aerial vehicles for high-throughput phenotyping and agronomic research. PLoS ONE 2016, 11, e0159781. [Google Scholar] [CrossRef] [PubMed]

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Zhang, R. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef] [PubMed]

- Johansen, K.; Morton, M.J.; Malbeteau, Y.M.; Aragon, B.; Al-Mashharawi, S.K.; Ziliani, M.G.; Tester, M.A. Unmanned Aerial Vehicle-Based Phenotyping Using Morphometric and Spectral Analysis Can Quantify Responses of Wild Tomato Plants to Salinity Stress. Front. Plant Sci. 2019, 10, 370. [Google Scholar] [CrossRef] [PubMed]

- Santini, F.; Kefauver, S.C.; Resco de Dios, V.; Araus, J.L.; Voltas, J. Using unmanned aerial vehicle-based multispectral, RGB and thermal imagery for phenotyping of forest genetic trials: A case study in Pinus halepensis. Ann. Appl. Biol. 2019, 174, 262–276. [Google Scholar] [CrossRef]

- Lelong, C.; Burger, P.; Jubelin, G.; Roux, B.; Labbé, S.; Baret, F. Assessment of unmanned aerial vehicles imagery for quantitative monitoring of wheat crop in small plots. Sensors 2008, 8, 3557–3585. [Google Scholar] [CrossRef] [PubMed]

- Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738. [Google Scholar] [CrossRef]

- Hunt, E.R.; Hively, W.D.; Fujikawa, S.; Linden, D.; Daughtry, C.S.; McCarty, G. Acquisition of NIR-green-blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2010, 2, 290–305. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, X.; Gao, C.; Qiu, X.; Tian, Y.; Zhu, Y.; Cao, W. Rapid Mosaicking of Unmanned Aerial Vehicle (UAV) Images for Crop Growth Monitoring Using the SIFT Algorithm. Remote Sens. 2019, 11, 1226. [Google Scholar] [CrossRef]

- Masjedi, A.; Carpenter, N.R.; Crawford, M.M.; Tuinstra, M.R. Prediction of Sorghum Biomass Using Uav Time Series Data and Recurrent Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Zhang, X.; Zhao, J.; Yang, G.; Liu, J.; Cao, J.; Li, C.; Gai, J. Establishment of Plot-Yield Prediction Models in Soybean Breeding Programs Using UAV-based Hyperspectral Remote Sensing. Remote Sens. 2019, 11, 2752. [Google Scholar] [CrossRef]

- Masjedi, A.; Zhao, J.; Thompson, A.M.; Yang, K.W.; Flatt, J.E.; Crawford, M.M.; Chapman, S. Sorghum Biomass Prediction Using Uav-Based Remote Sensing Data and Crop Model Simulation. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7719–7722. [Google Scholar]

- Ravi, R.; Lin, Y.J.; Shamseldin, T.; Elbahnasawy, M.; Masjedi, A.; Crawford, M.; Habib, A. Wheel-Based Lidar Data for Plant Height and Canopy Cover Evaluation to Aid Biomass Prediction. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 3242–3245. [Google Scholar]

- Su, W.; Zhang, M.; Bian, D.; Liu, Z.; Huang, J.; Wang, W.; Guo, H. Phenotyping of Corn Plants Using Unmanned Aerial Vehicle (UAV) Images. Remote Sens. 2019, 11, 2021. [Google Scholar] [CrossRef]

- Kitano, B.T.; Mendes, C.C.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn Plant Counting Using Deep Learning and UAV Images. IEEE Geosci. Remote Sens. Lett. 2019, 1–5. [Google Scholar] [CrossRef]

- Malambo, L.; Popescu, S.; Ku, N.W.; Rooney, W.; Zhou, T.; Moore, S. A Deep Learning Semantic Segmentation-Based Approach for Field-Level Sorghum Panicle Counting. Remote Sens. 2019, 11, 2939. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Ravi, R.; Lin, Y.J.; Elbahnasawy, M.; Shamseldin, T.; Habib, A. Simultaneous System Calibration of a Multi-LiDAR Multicamera Mobile Mapping Platform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1694–1714. [Google Scholar] [CrossRef]

- Habib, A.; Zhou, T.; Masjedi, A.; Zhang, Z.; Flatt, J.E.; Crawford, M. Boresight Calibration of GNSS/INS-Assisted Push-Broom Hyperspectral Scanners on UAV Platforms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1734–1749. [Google Scholar] [CrossRef]

- Khoramshahi, E.; Campos, M.B.; Tommaselli, A.M.G.; Vilijanen, N.; Mielonen, T.; Kaartinen, H.; Kukko, A. Accurate Calibration Scheme for a Multi-Camera Mobile Mapping System. Remote Sens. 2019, 11, 2778. [Google Scholar] [CrossRef]

- LaForest, L.; Hasheminasab, S.M.; Zhou, T.; Flatt, J.E.; Habib, A. New Strategies for Time Delay Estimation during System Calibration for UAV-based GNSS/INS-Assisted Imaging Systems. Remote Sens. 2019, 11, 1811. [Google Scholar] [CrossRef]

- Gabrlik, P.; Cour-Harbo, A.L.; Kalvodova, P.; Zalud, L.; Janata, P. Calibration and accuracy assessment in a direct georeferencing system for UAS photogrammetry. Int. J. Remote Sens. 2018, 39, 4931–4959. [Google Scholar] [CrossRef]

- He, F.; Zhou, T.; Xiong, W.; Hasheminasab, S.; Habib, A. Automated aerial triangulation for UAV-Based mapping. Remote Sens. 2018, 10, 1952. [Google Scholar] [CrossRef]

- Fritz, A.; Kattenborn, T.; Koch, B. UAV-based photogrammetric point clouds-tree stem mapping in open stands in comparison to terrestrial laser scanner point clouds. In Proceedings of the ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Rostock, Germany, 4–6 September 2013; pp. 141–146. [Google Scholar]

- Turner, D.; Lucieer, A.; Watson, C. An automated technique for generating georectified mosaics from ultra-high resolution unmanned aerial vehicle (UAV) imagery, based on structure from motion (SfM) point clouds. Remote Sens. 2012, 4, 1392–1410. [Google Scholar] [CrossRef]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372. [Google Scholar]

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443. [Google Scholar]

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630. [Google Scholar] [CrossRef]

- Schmid, C.; Mohr, R.; Bauckhage, C. Evaluation of interest point detectors. Int. J. Comput. Vis. 2000, 37, 151–172. [Google Scholar] [CrossRef]

- Karami, E.; Prasad, S.; Shehata, M. Image matching using SIFT, SURF, BRIEF and ORB: Performance comparison for distorted images. arXiv 2017, arXiv:1710.02726. [Google Scholar]

- Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. Lift: Learned invariant feature transform. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 467–483. [Google Scholar]

- Choy, C.B.; Gwak, J.; Savarese, S.; Chandraker, M. Universal correspondence network. In Advances in Neural Information Processing Systems; The MIT Press: Barcelona, Spain, 2016; pp. 2414–2422. [Google Scholar]

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236. [Google Scholar]

- Heymann, S.; Müller, K.; Smolic, A.; Froehlich, B.; Wiegand, T. SIFT implementation and optimization for general-purpose GPU. In Proceedings of the 15th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision, Plzen, Czech Republic, 29 January–1 February 2007. [Google Scholar]

- Wu, C. SiftGPU: A GPU Implementation of Scale Invariant Feature Transform (SIFT) Method. Available online: http://cs.unc.edu/~ccwu/siftgpu (accessed on 1 July 2019).

- Horn, B.K. Relative orientation. Int. J. Comput. Vis. 1990, 4, 59–78. [Google Scholar] [CrossRef]

- Longuet-Higgins, H.C. A computer algorithm for reconstructing a scene from two projections. Nature 1981, 293, 133. [Google Scholar] [CrossRef]

- Hartley, R.I. In defense of the eight-point algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 580–593. [Google Scholar] [CrossRef]

- Zhang, Z. Determining the epipolar geometry and its uncertainty: A review. Int. J. Comput. Vis. 1998, 27, 161–195. [Google Scholar] [CrossRef]

- Luong, Q.T.; Deriche, R.; Faugeras, O.; Papadopoulo, T. On Determining the Fundamental Matrix: Analysis of Different Methods and Experimental Results; Unité de Recherche INRIA Sophia-Antipolis: Valbonne, France, 1993. [Google Scholar]

- Nistér, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–777. [Google Scholar] [CrossRef]

- Li, H.; Hartley, R. Five-point motion estimation made easy. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 1, pp. 630–633. [Google Scholar]

- Cox, D.A.; Little, J.; O'Shea, D. Using Algebraic Geometry; Springer Science & Business Media: New York, NY, USA, 2006; Volume 185. [Google Scholar]

- He, F.; Habib, A. Three-point-based solution for automated motion parameter estimation of a multi-camera indoor mapping system with planar motion constraint. ISPRS J. Photogramm. Remote Sens. 2018, 142, 278–291. [Google Scholar] [CrossRef]

- Ortin, D.; Montiel, J.M.M. Indoor robot motion based on monocular images. Robotica 2001, 19, 331–342. [Google Scholar] [CrossRef]

- Scaramuzza, D.; Fraundorfer, F.; Siegwart, R. Real-time monocular visual odometry for on-road vehicles with 1-point ransac. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 4293–4299. [Google Scholar]

- Hoang, V.D.; Hernández, D.C.; Jo, K.H. Combining edge and one-point ransac algorithm to estimate visual odometry. In International Conference on Intelligent Computing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 556–565. [Google Scholar]

- He, F.; Habib, A. Automated relative orientation of UAV-based imagery in the presence of prior information for the flight trajectory. Photogramm. Eng. Remote Sens. 2016, 82, 879–891. [Google Scholar] [CrossRef]

- Snavely, N.; Seitz, S.M.; Szeliski, R. Photo tourism: Exploring photo collections in 3D. In ACM Transactions on Graphics (TOG); ACM: New York, NY, USA, 2006; Volume 25, pp. 835–846. [Google Scholar]

- Dunn, E.; Frahm, J.M. Next Best View Planning for Active Model Improvement. In BMVC; The British Machine Vision Association: Oxford, UK, 2009; pp. 1–11. [Google Scholar]

- Hartley, R.; Trumpf, J.; Dai, Y.; Li, H. Rotation averaging. Int. J. Comput. Vis. 2013, 103, 267–305. [Google Scholar] [CrossRef]

- Martinec, D.; Pajdla, T. Robust rotation and translation estimation in multiview reconstruction. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8. [Google Scholar]

- Fitzgibbon, A.W.; Zisserman, A. Automatic camera recovery for closed or open image sequences. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 1998; pp. 311–326. [Google Scholar]

- Haner, S.; Heyden, A. Covariance propagation and next best view planning for 3d reconstruction. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 545–556. [Google Scholar]

- Cornelis, K.; Verbiest, F.; Van Gool, L. Drift detection and removal for sequential structure from motion algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1249–1259. [Google Scholar] [CrossRef] [PubMed]

- Govindu, V.M. Combining two-view constraints for motion estimation. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 2, p. II. [Google Scholar]

- Chatterjee, A.; Madhav Govindu, V. Efficient and robust large-scale rotation averaging. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 521–528. [Google Scholar]

- Sinha, S.N.; Steedly, D.; Szeliski, R. A multi-stage linear approach to structure from motion. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2010; pp. 267–281. [Google Scholar]

- Arie-Nachimson, M.; Kovalsky, S.Z.; Kemelmacher-Shlizerman, I.; Singer, A.; Basri, R. Global motion estimation from point matches. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; pp. 81–88. [Google Scholar]

- Cui, Z.; Jiang, N.; Tang, C.; Tan, P. Linear global translation estimation with feature tracks. arXiv 2015, arXiv:1503.01832. [Google Scholar]

- He, F.; Habib, A. Target-based and Feature-based Calibration of Low-cost Digital Cameras with Large Field-of-view. In Proceedings of the ASPRS 2015 Annual Conference, Tampa, FL, USA, 4–8 May 2015. [Google Scholar]

- Habib, A.; Xiong, W.; He, F.; Yang, H.L.; Crawford, M. Improving orthorectification of UAV-based push-broom scanner imagery using derived orthophotos from frame cameras. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 262–276. [Google Scholar] [CrossRef]

- Lin, Y.C.; Cheng, Y.T.; Zhou, T.; Ravi, R.; Hasheminasab, S.M.; Flatt, J.E.; Habib, A. Evaluation of UAV LiDAR for Mapping Coastal Environments. Remote Sens. 2019, 11, 2893. [Google Scholar] [CrossRef]

- Alcantarilla, P.F.; Solutions, T. Fast explicit diffusion for accelerated features in nonlinear scale spaces. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 1281–1298. [Google Scholar]

| Acquisition Date | Field | Crop | Flying Height (m) | Ground Speed (m/s) | Lateral Distance (m) | GSD (cm) | Overlap/Side-Lap (%) | # of Images |
|---|---|---|---|---|---|---|---|---|
| 20190810 | ACRE-42 | Sorghum | 47 | 5.0 | 13.0 | 0.76 | 80/78 | 562 |
| 20190905 | ACRE-42 | Sorghum | 47 | 5.0 | 13.0 | 0.76 | 80/78 | 555 |
| 20190823 | ACRE-21C | Popcorn | 47 | 5.0 | 8.5 | 0.76 | 80/85 | 215 |
| 20190904 | ACRE-21C | Popcorn | 44 | 4.1 | 9.0 | 0.71 | 84/84 | 217 |
| 20190724 | Romney | Popcorn | 45 | 6.0 | 14.0 | 0.72 | 76/76 | 846 |
| 20190718 | Windfall | Maize | 45 | 6.5 | 14.0 | 0.72 | 75/76 | 414 |
| 20190802 | Atlanta 1 | Maize | 45 | 5.5 | 13.0 | 0.72 | 78/77 | 254 |
| 20190802 | Atlanta 2 | Maize Soybeans | 45 | 5.5 | 13.0 | 0.72 | 78/77 | 355 |

| Framework | Matching Search Space | ROP Estimation | EOP Recovery | Bundle Adjustment |
|---|---|---|---|---|
| Traditional | Exhaustive search | Two-point + iterative five-point | Incremental strategy | GNSS/INS-assisted BA |
| Partially GNSS/INS-assisted | GNSS/INS-assisted, reduced search space | GNSS/INS-based ROP estimation + iterative five-point | Incremental strategy | GNSS/INS-assisted BA |
| Fully GNSS/INS-assisted | GNSS/INS-assisted, reduced search space | GNSS/INS-based ROP estimation + iterative five-point | N/A | GNSS/INS-assisted BA |

| Threshold Description | Threshold Value |
|---|---|
| K parameter for finding neighboring images as candidate stereo-pairs | 20 |
| Threshold for SIFT feature matching | 0.7 |
| Search window size for GNSS/INS matching | 500 × 500 pixels |
| Threshold for point-to-epipolar line distance for GNSS/INS matching | 40.0 pixels |
| y-parallax threshold for removing matching outliers in the iterative five-point approach | 20.0 pixels |
| Minimum number of tracked features for an object point | 3 |
| RANSAC normal distance threshold | 0.2 m |
| N threshold for minimum number of SIFT-based tie points in an image for it to be considered in the BA process | 20 |
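As an illustration of how two of the thresholds above might enter the pipeline, the sketch below selects candidate stereo-pairs from the K nearest images and filters descriptor matches with a ratio test. Both interpretations are assumptions rather than statements about the actual implementation: the table does not say how neighbors are ranked (Euclidean distance between GNSS/INS-derived camera positions is assumed), nor what the 0.7 matching threshold measures (a nearest to second-nearest descriptor distance ratio is assumed). The function names and toy data are hypothetical.

```python
import numpy as np


def candidate_stereo_pairs(positions, k=20):
    """Pair every image with its k nearest neighbors by camera position (assumed criterion)."""
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)          # an image is not its own neighbor
    k = min(k, len(pos) - 1)
    pairs = set()
    for i in range(len(pos)):
        for j in np.argsort(dist[i], kind="stable")[:k]:
            pairs.add((min(i, int(j)), max(i, int(j))))
    return sorted(pairs)


def ratio_test_matches(desc1, desc2, ratio=0.7):
    """Keep a match only if the best distance is below ratio * second-best distance.

    desc2 must contain at least two descriptors.
    """
    d1 = np.asarray(desc1, dtype=float)
    d2 = np.asarray(desc2, dtype=float)
    dist = np.linalg.norm(d1[:, None, :] - d2[None, :, :], axis=2)
    order = np.argsort(dist, axis=1, kind="stable")
    rows = np.arange(len(d1))
    best, second = order[:, 0], order[:, 1]
    keep = dist[rows, best] < ratio * dist[rows, second]
    return [(int(i), int(best[i])) for i in rows[keep]]


# Toy camera positions along a flight line (metres) and k = 1 for readability.
positions = [[0.0, 0.0, 45.0], [1.0, 0.0, 45.0], [2.5, 0.0, 45.0], [4.5, 0.0, 45.0]]
pairs = candidate_stereo_pairs(positions, k=1)

# Toy 2-D "descriptors"; the third query is ambiguous and should be rejected.
desc_a = [[0.0, 0.0], [10.0, 10.0], [2.5, 2.5]]
desc_b = [[0.0, 0.1], [5.0, 5.0], [10.0, 10.1]]
matches = ratio_test_matches(desc_a, desc_b, ratio=0.7)
print(pairs, matches)
```

A smaller ratio rejects more ambiguous matches, which is relevant for repetitive crop-row patterns, at the cost of fewer tie points per image.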

| Dataset | Total # of Images | SfM Technique | # of BA-Input Images | # of Object Points | $\hat{\sigma}_0$ (pixel) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|
| ACRE-42 20190810 | 562 | Traditional | 517 | 107,000 | 1.63 | 0.03 | 0.02 | 0.04 |
| ACRE-42 20190810 | 562 | Partially | 562 | 214,000 | 2.31 | 0.02 | 0.03 | 0.03 |
| ACRE-42 20190810 | 562 | Fully | 562 | 352,000 | 4.78 | 0.03 | 0.05 | 0.03 |
| ACRE-42 20190905 | 555 | Traditional | 525 | 123,000 | 1.56 | 0.04 | 0.03 | 0.04 |
| ACRE-42 20190905 | 555 | Partially | 555 | 331,000 | 2.47 | 0.04 | 0.03 | 0.02 |
| ACRE-42 20190905 | 555 | Fully | 555 | 464,000 | 4.47 | 0.04 | 0.03 | 0.03 |
| ACRE-21C 20190823 | 215 | Traditional | 202 | 55,000 | 1.77 | 0.02 | 0.02 | 0.03 |
| ACRE-21C 20190823 | 215 | Partially | 215 | 101,000 | 2.36 | 0.02 | 0.02 | 0.02 |
| ACRE-21C 20190823 | 215 | Fully | 215 | 175,000 | 4.97 | 0.02 | 0.03 | 0.03 |
| ACRE-21C 20190904 | 217 | Traditional | 198 | 74,000 | 2.01 | 0.03 | 0.01 | 0.03 |
| ACRE-21C 20190904 | 217 | Partially | 217 | 149,000 | 2.99 | 0.03 | 0.02 | 0.03 |
| ACRE-21C 20190904 | 217 | Fully | 217 | 210,000 | 4.87 | 0.03 | 0.01 | 0.05 |

| Dataset | SfM Technique | Image Matching and ROP Estimation (min) | BA Preprocessing (min) | BA (min) | Total (min) |
|---|---|---|---|---|---|
| ACRE-42 20190810 | Traditional | 42.2 | 16.9 | 6.1 | 65.2 |
| ACRE-42 20190810 | Partially | 56.1 | 50.2 | 11.1 | 117.4 |
| ACRE-42 20190810 | Fully | 56.1 | 14.5 | 18.8 | 89.4 |
| ACRE-42 20190905 | Traditional | 42.2 | 24.7 | 12.4 | 79.3 |
| ACRE-42 20190905 | Partially | 71.6 | 52.3 | 21.5 | 145.4 |
| ACRE-42 20190905 | Fully | 71.6 | 17.0 | 31.4 | 120.0 |
| ACRE-21C 20190823 | Traditional | 14.4 | 3.1 | 3.3 | 20.8 |
| ACRE-21C 20190823 | Partially | 26.9 | 13.6 | 5.3 | 45.8 |
| ACRE-21C 20190823 | Fully | 26.9 | 6.2 | 11.1 | 44.2 |
| ACRE-21C 20190904 | Traditional | 25.0 | 4.4 | 4.2 | 33.6 |
| ACRE-21C 20190904 | Partially | 28.5 | 14.7 | 7.4 | 50.6 |
| ACRE-21C 20190904 | Fully | 28.5 | 7.5 | 11.1 | 47.1 |

| Dataset | SfM Technique | | | |
|---|---|---|---|---|
| ACRE-42 20190810 | Traditional | −0.06 | 0.07 | 0.09 |
| ACRE-42 20190810 | Partially | −0.03 | 0.09 | 0.09 |
| ACRE-42 20190810 | Fully | −0.02 | 0.10 | 0.10 |
| ACRE-42 20190905 | Traditional | −0.06 | 0.06 | 0.08 |
| ACRE-42 20190905 | Partially | −0.03 | 0.08 | 0.09 |
| ACRE-42 20190905 | Fully | −0.03 | 0.09 | 0.09 |
| ACRE-21C 20190823 | Traditional | −0.04 | 0.07 | 0.08 |
| ACRE-21C 20190823 | Partially | −0.02 | 0.08 | 0.08 |
| ACRE-21C 20190823 | Fully | −0.02 | 0.09 | 0.09 |
| ACRE-21C 20190904 | Traditional | −0.04 | 0.08 | 0.09 |
| ACRE-21C 20190904 | Partially | −0.02 | 0.09 | 0.09 |
| ACRE-21C 20190904 | Fully | −0.03 | 0.10 | 0.10 |

| Dataset | SfM Technique | Total # of Images | # of Object Points | $\hat{\sigma}_0$ (pixel) | (m) | (m) | (m) |
|---|---|---|---|---|---|---|---|
| Romney | Traditional | 846 | 53,000 | 1.81 | −0.01 | 0.07 | 0.08 |
| Romney | Partially | 846 | 428,000 | 2.80 | −0.01 | 0.12 | 0.12 |
| Romney | Fully | 846 | 731,000 | 4.31 | 0.01 | 0.10 | 0.10 |
| Windfall | Traditional | 441 | 39,000 | 1.69 | 0.01 | 0.11 | 0.11 |
| Windfall | Partially | 441 | 286,000 | 3.02 | 0.01 | 0.09 | 0.10 |
| Windfall | Fully | 441 | 351,000 | 4.65 | 0.01 | 0.10 | 0.10 |
| Atlanta-1 | Traditional | 254 | 33,000 | 1.60 | −0.01 | 0.07 | 0.08 |
| Atlanta-1 | Partially | 254 | 93,000 | 2.04 | −0.05 | 0.08 | 0.09 |
| Atlanta-1 | Fully | 254 | 152,000 | 4.25 | −0.04 | 0.09 | 0.09 |
| Atlanta-2 | Traditional | 355 | 125,000 | 1.00 | 0.02 | 0.08 | 0.09 |
| Atlanta-2 | Partially | 355 | 261,000 | 1.89 | −0.04 | 0.07 | 0.08 |
| Atlanta-2 | Fully | 355 | 300,000 | 3.07 | −0.04 | 0.08 | 0.09 |

| Dataset | Total # of Images | BA-Input Images (Traditional) | BA-Input Images (Partially) | BA-Input Images (Fully) | BA-Input Images (Pix4D-1) | BA-Input Images (Pix4D-2) |
|---|---|---|---|---|---|---|
| Romney | 846 | 720 | 846 | 846 | 294 | 784 |
| Windfall | 441 | 409 | 441 | 441 | 263 | 441 |
| Atlanta-1 | 254 | 196 | 254 | 254 | 173 | 251 |
| Atlanta-2 | 355 | 316 | 355 | 355 | 328 | 350 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hasheminasab, S.M.; Zhou, T.; Habib, A. GNSS/INS-Assisted Structure from Motion Strategies for UAV-Based Imagery over Mechanized Agricultural Fields. Remote Sens. 2020, 12, 351. https://doi.org/10.3390/rs12030351
