Adaptive Network Detector for Radar Target in Changing Scenes

Abstract

1. Introduction

2. Methods

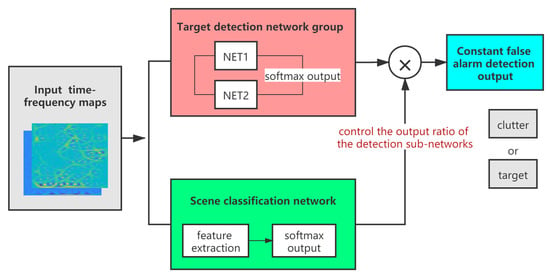

2.1. The Detector Framework

2.2. The Training Strategy

3. Experimental Setup

3.1. Experimental Dataset

3.2. Data Preprocessing

3.3. Training Details

4. Results and Discussion

4.1. Result Analysis

4.2. Comparison with Classical Detectors

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Content |
|---|---|
| Measurement time and place | Meixian, China, 2017 to 2019 |
| Waveform | Linear frequency modulation (LFM) |
| Carrier frequency | 35 GHz |
| Pulse repetition frequency (PRF) | 32 kHz |
| Data format | Two-dimensional time-domain pulse sequence |
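
As a rough aid to reading the parameters above, the following sketch derives a few standard pulsed-radar quantities from the listed carrier frequency and PRF. It is not taken from the paper: the function name `derived_radar_quantities` is hypothetical, and the textbook relations used (wavelength λ = c/f, maximum unambiguous range c/(2·PRF), maximum unambiguous radial velocity ±λ·PRF/4) assume a monostatic pulse-Doppler configuration, which the table does not state explicitly.

```python
# Speed of light in vacuum, in m/s.
C = 299_792_458.0


def derived_radar_quantities(carrier_hz: float, prf_hz: float) -> dict:
    """Textbook pulse-Doppler relations (assumed, not stated in the paper)."""
    wavelength = C / carrier_hz
    return {
        # Wavelength of the carrier.
        "wavelength_m": wavelength,
        # Echoes from beyond this range overlap with the next pulse.
        "max_unambiguous_range_m": C / (2.0 * prf_hz),
        # Radial speeds beyond +/- this value alias in Doppler.
        "max_unambiguous_velocity_mps": wavelength * prf_hz / 4.0,
    }


# Values from the table: 35 GHz carrier, 32 kHz PRF.
params = derived_radar_quantities(carrier_hz=35e9, prf_hz=32e3)
for name, value in params.items():
    print(f"{name}: {value:.4g}")
```

Under these assumptions the wavelength is about 8.6 mm, the unambiguous range about 4.7 km, and the unambiguous radial velocity about ±68.5 m/s.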

| Network | Loss Value | Accuracy (%) | Epochs | Training Time (min) |
|---|---|---|---|---|
| Scene classification network | 0.3628 | 85.8 | 10 | 27.42 |
| NET1 | 0.0287 | 97.9 | 8 | 1.43 |
| NET2 | 0.0336 | 98.4 | 8 | 1.45 |
| The adaptive network | 0.0307 | 97.3 | 8 | 24.35 |

| Network | Ground Observation Data | Sea Observation Data |
|---|---|---|
| NET1 | 98.70% | 87.02% |
| NET2 | 55.40% | 99.53% |
| The adaptive network | 94.29% | 99.77% |

| Filename | Sea State Level | Wave Height (m) | Target Unit |
|---|---|---|---|
| 19931107_135603_starea_17 | 4 | 2.1 | 9 |
| 19931108_213827_starea_25 | 3 | 1 | 7 |

| Network | Level 4 Sea State Dataset | Level 3 Sea State Dataset |
|---|---|---|
| NET1 (level 4 sea state) | 99.74% | 72.41% |
| NET2 (level 3 sea state) | 76.59% | 98.10% |
| The adaptive network | 92.38% | 97.25% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jing, H.; Cheng, Y.; Wu, H.; Wang, H. Adaptive Network Detector for Radar Target in Changing Scenes. Remote Sens. 2021, 13, 3743. https://doi.org/10.3390/rs13183743
