1. Introduction

The earthmoving excavator is the key piece of construction equipment used in earthmoving projects. Monitoring the construction productivity of earthmoving excavators involves measuring, analyzing, and improving the operational efficiency and performance of the equipment. This is an important task in the management and successful completion of earthmoving projects [1]. An important aspect of construction productivity assessment for earthmoving excavators is counting the number of work cycles completed per unit of time [2]. A work cycle describes the working status of an earthmoving excavator: it is one pass of the soil-moving process, which is repeated until the earthmoving project is complete. The basic operational flow usually involves digging, rotating, unloading, and rotating. Three fundamental steps are generally involved in the recognition of work cycles [2,3,4]: (1) recognizing the atomic actions of earthmoving excavators; (2) associating atomic actions to recognize work cycles; and (3) counting the number of work cycles. The atomic actions in steps (1) and (2) are the various postures that earthmoving excavators assume during work cycles as the operation proceeds [2,3,4].

Current methods of work cycle recognition for earthmoving excavators fall into four types: manual recognition, recognition based on onboard sensors, recognition based on fixed-carrier video monitoring, and recognition based on UAV remote monitoring. The third and fourth types both apply remote sensing technology and are classified as recognition methods based on remote sensing data, while the first two types do not use remote sensing data and are classified as non-remote-sensing methods.

Manual recognition requires construction managers to visually determine atomic action categories based on the posture of the earthmoving excavator and then associate the atomic actions into work cycles [3]. An advantage of this method is that construction managers can make project-related decisions, such as construction equipment scheduling and construction site planning, at any time on the construction site based on the results [4]. However, construction managers need to personally observe and record the operation of each earthmoving excavator on site [5]. This work is labor-intensive and error-prone, and the method is time-consuming and costly [6].

Recognition based on onboard sensors requires the installation of sensors, such as Global Positioning System (GPS) [7], Radio Frequency Identification (RFID) [8], or Inertial Measurement Unit (IMU) [5] sensors, on earthmoving excavators or on other types of construction vehicles that work in cooperation with excavators, such as loaders and dump trucks. By analyzing the work status data automatically collected by the sensors, the work cycles of earthmoving excavators can be recognized indirectly or directly. For example, Pradhananga et al. [7] installed GPS data loggers on construction vehicles such as excavators and loaders. They used the GPS data to plot the trajectories of the construction vehicles and record the working time. Then, they estimated the completed earth volume based on the trajectories and the time that the loader took to cycle back and forth between the loading and unloading areas. Thus, they indirectly estimated the number of work cycles completed by the earthmoving excavators. Montaser et al. [8] installed RFID tags on dump trucks and used RFID readers installed in the loading and unloading areas to receive RFID signals when the dump trucks entered these two areas. They estimated the completed earthmoving volume based on the cycle time and the number of round trips completed by the dump trucks, thus indirectly estimating the number of work cycles completed by the earthmoving excavators. However, GPS and RFID cannot recognize the atomic actions of earthmoving excavators, which makes it difficult to recognize their work cycles directly and accurately [9]. The results obtained in this way are therefore of questionable reliability [10]. In order to directly determine the number of work cycles of an earthmoving excavator, Kim et al. [5] installed IMU sensors on an earthmoving excavator. The dynamic time warping (DTW) algorithm was used to recognize atomic actions and work cycles based on automatically received velocity and angle data from the movements of the cab and arm components. In this way, different atomic actions can be recognized, and thus, the work cycles of earthmoving excavators can be directly recognized. Nevertheless, the stability of the data received by IMU sensors is easily affected by external factors, which reduces the recognition accuracy of atomic actions [10]. For example, the driver's operation of the control lever may produce oscillations that interfere with the signals [5], and this happens at construction sites from time to time. In addition, recognition based on onboard sensors requires the installation of sensors on each piece of construction equipment, which may not be feasible for rented construction equipment [11] and entails higher costs [6,12].

Fixed-carrier video monitoring is a near-ground remote sensing method that has been described in recent studies [13,14,15,16,17,18,19,20,21]. This type of method uses monitoring cameras installed on fixed carriers of a certain height (usually higher than 3 m), such as towers [3,13,14,15,20,21], poles [4,16], or buildings [19], to continuously monitor changes in the ground or environment. Compared with traditional satellite remote sensing methods, this method is continuous [14,17,19], has a high spatial resolution [13,19], and is low-cost [1,6,17,22]. Fixed-carrier video monitoring also covers much more ground than handheld cameras used at ground level [23]. Furthermore, since a monitoring camera mounted at a high position has a larger viewing angle when capturing the earthmoving excavators on the ground, occlusion of the monitored object can be minimized or avoided [12], thus reducing the negative impact of occlusion on the recognition results [2]. By combining this method with computer vision techniques that more realistically represent human vision and manual reasoning processes, recognition based on fixed-carrier video monitoring is becoming a popular way to measure the work cycles of earthmoving excavators [3]. Representative methods in this field include recognition based on temporal sequences and recognition based on sequential patterns.

Recognition based on temporal sequences constructs a set of atomic actions in work cycles according to the temporal order in which they are recognized, and each such set of temporally ordered atomic actions is classified as a work cycle [3]. Chen et al. [2] classified the atomic actions in the work cycles of an earthmoving excavator into “Digging”, “Swinging”, and “Loading”, and then used three deep learning methods, Faster R-CNN, SORT, and 3D ResNet, to identify atomic actions. Following the time order of atomic action recognition, the recognition of two “Digging” atomic actions is taken as the condition for recognizing a single work cycle. However, based on observations at construction sites, Wu et al. [3] found that abnormal work cycles occur from time to time due to driver misoperation. For example, only one “Digging” atomic action can occur in a normal work cycle, but when an abnormal work cycle is generated, both “Digging” and “Loading” atomic actions may occur again after “Loading”. Thus, the work cycle changes from “Digging → Swinging → Loading” to “Digging → Swinging → Loading → Swinging → Digging → Loading”. It follows that a single abnormal work cycle can easily be incorrectly recognized as two work cycles when recognition is based on temporal sequences.
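The miscount described above can be illustrated with a minimal sketch. It is not the implementation of Chen et al. [2]; it only assumes that a cycle is counted whenever two successive “Digging” actions have been recognized, and the function names and the trailing “Swinging”, “Digging” labels (the start of the next cycle) are hypothetical additions for illustration.

```python
def collapse_runs(labels):
    """Merge consecutive repeats so each atomic action appears once per run."""
    return [lab for i, lab in enumerate(labels) if i == 0 or lab != labels[i - 1]]


def count_cycles_temporal(actions):
    """Count one work cycle for every pair of successive "Digging" runs.

    Assumed rule for illustration only: a cycle is closed each time a new
    "Digging" action is recognized after an earlier one.
    """
    digs = sum(1 for a in collapse_runs(actions) if a == "Digging")
    return max(digs - 1, 0)


# One normal cycle, closed by the "Digging" that starts the next cycle.
normal = ["Digging", "Swinging", "Loading", "Swinging", "Digging"]

# One abnormal cycle (the pattern from the text), closed the same way.
abnormal = ["Digging", "Swinging", "Loading", "Swinging", "Digging",
            "Loading", "Swinging", "Digging"]

print(count_cycles_temporal(normal))    # 1
print(count_cycles_temporal(abnormal))  # 2, although only one cycle occurred
```

Under this assumed rule, the repeated “Digging” inside the abnormal cycle is indistinguishable from the start of a new cycle, which is why the count is inflated.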

The recognition based on the sequential pattern method associates atomic actions in a work cycle with each other according to the actual operation order to achieve work cycle recognition [

3,

4]. This method more closely represents the actual working conditions of earthmoving excavators at a construction site from a design point of view. For example, Kim et al. [

4] constructed a sequential pattern containing four atomic actions, “Digging → Hauling → Dumping → Swinging”, based on the operation flow of earthmoving excavators in a work cycle. They then associate atomic action recognition work cycles in turn. Compared with the recognition based on the temporal sequences method, the use of sequential patterns can allow for the easy recognition of abnormal work cycles because it is not possible to have two instances of “Digging” in a normal work cycle. However, this sequential pattern is not perfect and when there are many similar visual appearances of atomic actions, it is difficult to distinguish them from one another in videos, increasing the difficulty of atomic action recognition [

4]. Wu et al. [

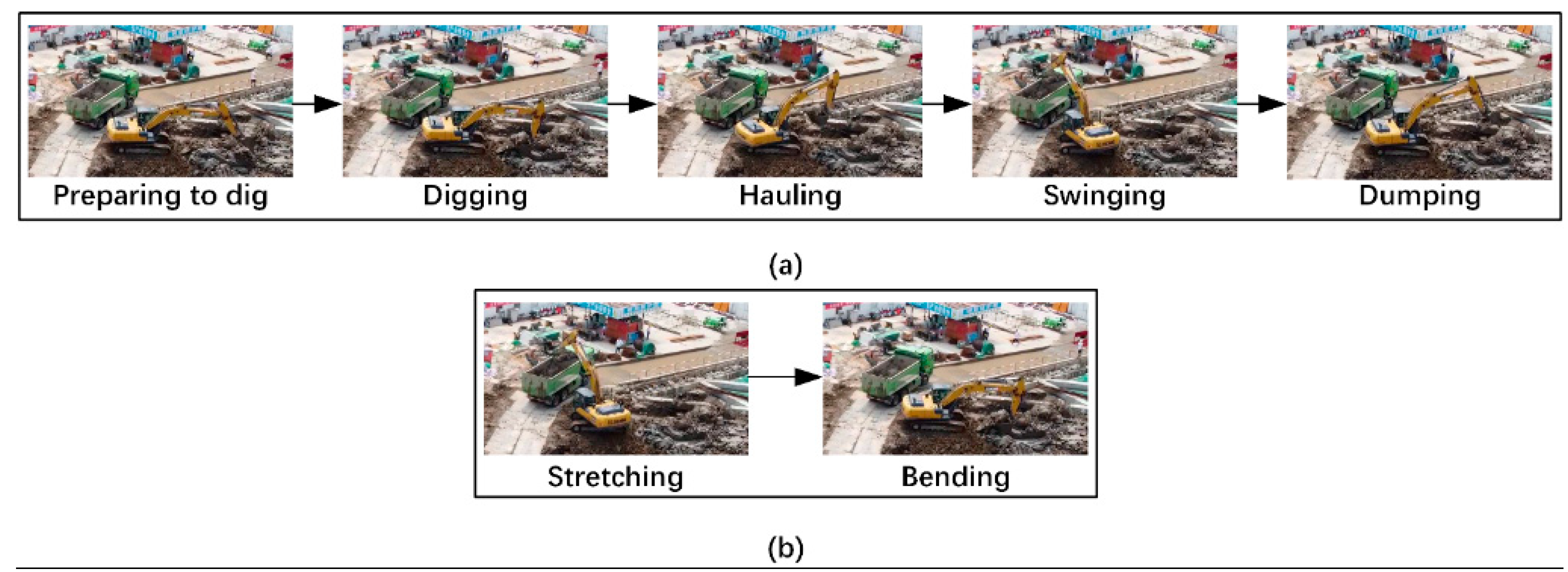

3] improved the sequential pattern with four atomic actions by adding the newly discovered atomic action “Preparing to dig”. This was used for observations at construction sites, and a sequential pattern with five atomic actions, “Preparing to dig → Digging → Hauling → Dumping → Swinging”, was constructed (see

Figure 1a). However, the actions “Preparing to dig”, “Dumping”, and “Swinging” are similar in visual appearance, as all three atomic actions involve a stretched arm. The “Digging” and “Hauling” postures both involve bending arms, so they also have similar visual appearances. Thus, it is easy to misrecognize atomic actions using this method. To solve this problem, Wu et al. [

3] combined “Preparing to dig”, “Dumping”, and “Swinging”, which have similar visual appearances, to form the category “Stretching”, while “Digging” and “Hauling” formed the category “Bending”. Thus, a sequential pattern (see

Figure 1b) consisting of “Stretching” and “Bending” actions with different visual appearances was constructed, i.e., the “Stretching-Bending” sequential pattern (SBSP). Then, atomic actions were associated to recognize work cycles. Combining atomic actions with similar visual appearances reduced the difficulty associated with the recognition of atomic actions and work cycles [

3,

22]. In general, the third method can automate the recognition of work cycles for earthmoving excavators, but its application requires the use of preconstructed installation carriers as monitoring cameras at the construction site as well as installed and commissioned monitoring systems that work in tandem with them. When the installation conditions are not available at construction sites or when the construction time is short, it may be difficult to monitor the work cycles of earthmoving excavators and carry out tasks related to other earthmoving project aspects.
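The grouping of the five atomic actions into “Stretching” and “Bending” can be sketched as follows. This is a minimal illustration under stated assumptions, not the association procedure of Wu et al. [3]: the counting rule (one cycle per “Bending” run that lies between two “Stretching” runs) and all function and variable names are hypothetical.

```python
# Category mapping taken from the text: three stretched-arm actions and
# two bent-arm actions.
CATEGORY = {
    "Preparing to dig": "Stretching",
    "Dumping": "Stretching",
    "Swinging": "Stretching",
    "Digging": "Bending",
    "Hauling": "Bending",
}


def to_sbsp(actions):
    """Map atomic actions to their category and merge consecutive repeats."""
    labels = [CATEGORY[a] for a in actions]
    return [lab for i, lab in enumerate(labels) if i == 0 or lab != labels[i - 1]]


def count_cycles_sbsp(actions):
    """Count "Bending" runs enclosed by "Stretching" runs (assumed rule)."""
    runs = to_sbsp(actions)
    return sum(
        1
        for i in range(1, len(runs) - 1)
        if runs[i] == "Bending"
        and runs[i - 1] == "Stretching"
        and runs[i + 1] == "Stretching"
    )


one_cycle = ["Preparing to dig", "Digging", "Hauling", "Dumping", "Swinging"]

print(to_sbsp(one_cycle))                # ['Stretching', 'Bending', 'Stretching']
print(count_cycles_sbsp(one_cycle))      # 1
print(count_cycles_sbsp(one_cycle * 2))  # 2
```

Because a recognizer only has to separate two visually distinct categories, confusion among, for example, “Dumping” and “Swinging” no longer changes the collapsed sequence.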

Recognition based on UAV remote sensing monitoring is flexible and mobile [24,25]. It makes it possible to monitor construction sites and obtain visual data in areas where surveillance cameras are difficult to install [24,26]. In recent years, this method has been widely used in the fields of engineering surveying and mapping [24], construction safety management [25,26,27,28,29,30], and construction process visualization [31]. However, few studies have applied UAV remote sensing to monitoring the working status of earthmoving excavators. Research in this area is necessary. On the one hand, the application of UAV remote sensing can reduce the impact of occlusion on the recognition of atomic actions and work cycles [32]. On the other hand, earthmoving projects are an important aspect of construction projects and account for about 20% of the total cost [33]. The use of UAV remote sensing to monitor the work cycles of earthmoving excavators is beneficial, as it can reduce construction costs, especially in projects with no monitoring cameras installed or projects with short construction periods, such as post-disaster reconstruction projects with harsh construction environments [34] and emergency projects with short construction periods [35,36]. UAV remote monitoring, as an alternative technology to fixed-carrier video monitoring for onsite proximity monitoring [25], can provide construction managers with a clear understanding of construction site conditions and progress [36]. It can also improve construction productivity by supporting the proper planning and deployment of construction equipment based on monitoring and recording at the operational level [37]. This saves a significant amount of time and reduces costs [2,4,6,38].

The purpose of this study was to investigate the applicability of SBSP-based recognition of work cycles for earthmoving excavators using UAV remote sensing. Unlike in projects using video data from surveillance cameras (referred to as surveillance video), a possible challenge for this study was that the stability of video data acquired by UAV remote sensing (referred to as UAV video) is easily affected by UAV shaking [24]. When this occurs, undesired motion [39] in the videos causes interframe instability in the video images [40]. Thus, higher-level vision tasks [41], such as the recognition of earthmoving excavators’ work cycles, are hindered.

The details of the method used are presented in Section 2. In order to investigate the applicability of the SBSP method using UAV remote sensing, we used a UAV and surveillance cameras to capture video data from earthmoving construction sites at the same location and within the same time interval. These data were then used to conduct applied and comparative experiments, as detailed in Section 3. Section 4 presents the discussion. In the last section, we conclude the study and describe future work.