An Advanced Photogrammetric Solution to Measure Apples

Abstract

1. Introduction

- 5 mm in the case of class extra, I and II apples packed in rows and layers.

- 10 mm in the case of class I apples packed loose or in retail packages.

- facilitate and speed up the field work of farmers and agronomists using a common facility such as a smartphone;

- make apple measurements objective;

- provide a more extensive set of apples measured in the field, enabling the estimation of the harvesting/apple-picking date.

2. State of the Art

2.1. In-Field Inspection

2.2. Off-Tree Inspection

2.3. Machine Learning Approaches

2.4. Harvesting Robots

2.5. Fruit Size Measurement

3. Apple3D Tool for Smart Farming

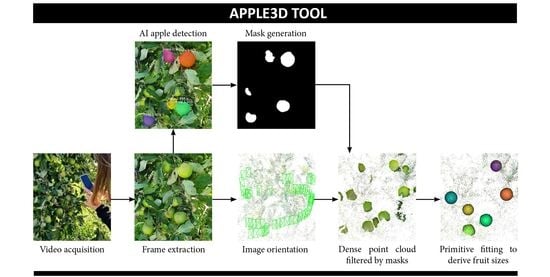

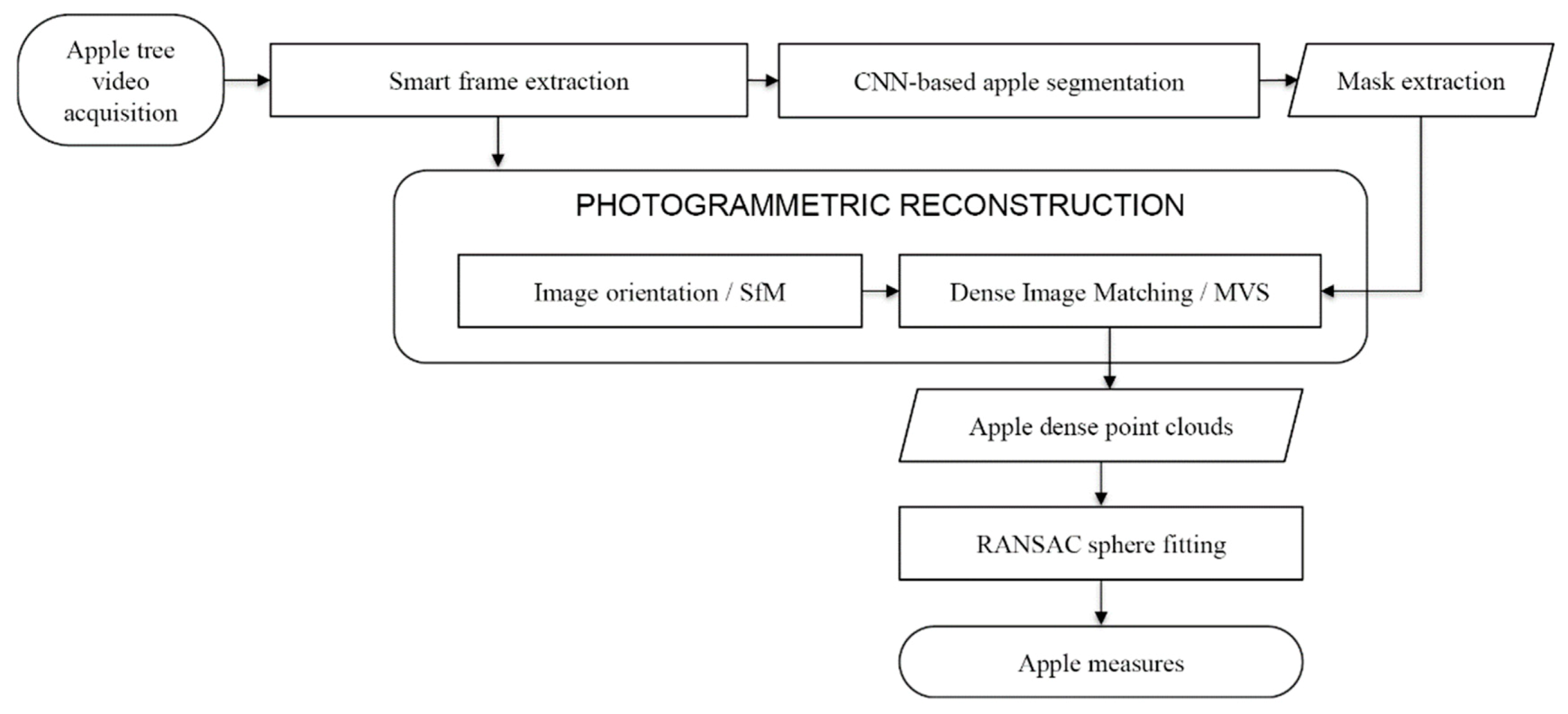

3.1. Apple3D Overall Process

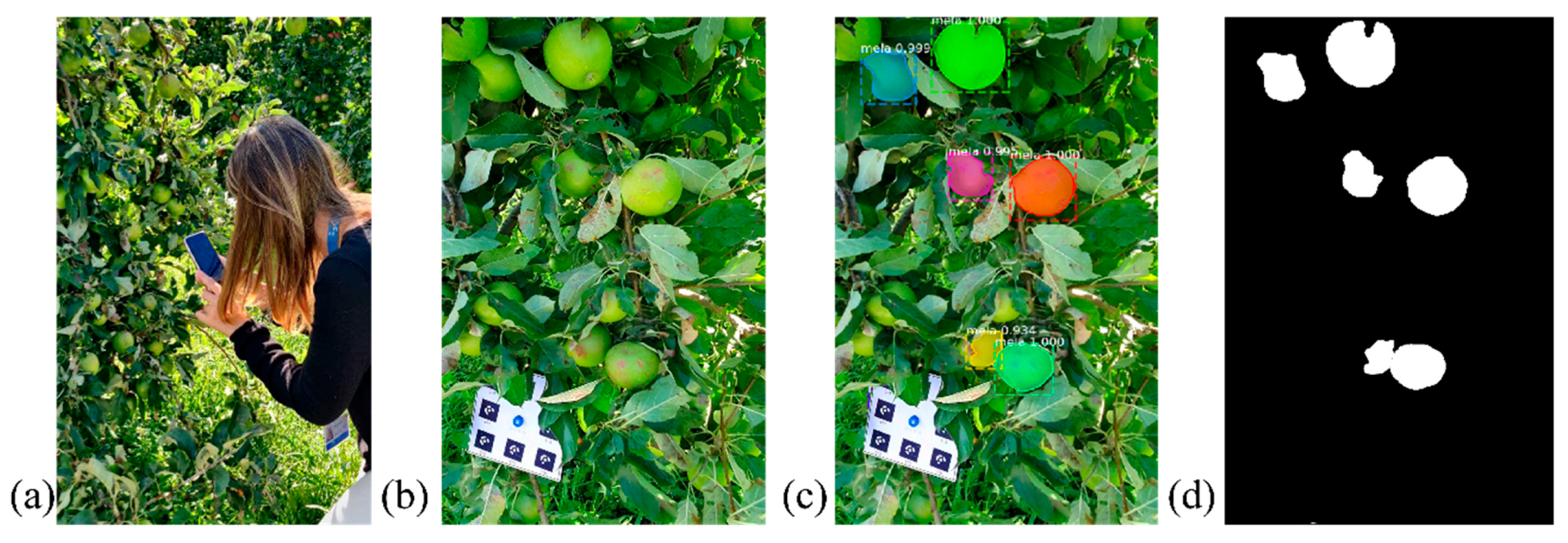

- Data acquisition: a video of an apple tree is recorded with a smartphone (Figure 2a), capturing the apples from multiple positions and angles. Permanent targets should be placed on the plant in order to calibrate the phone’s camera and scale the photogrammetric results.

- Frame extraction: keyframes are extracted from the video acquired with the smartphone so that they can be processed with a photogrammetric method. Numerous keyframe extraction methods exist in the literature. Building on the performance analysis presented in Torresani and Remondino [40], our tool employs a 2D feature-based approach that discards blurred and redundant frames unsuitable for the photogrammetric process.

- Apple segmentation: a pre-trained neural network model performs instance segmentation of the apples on the keyframes (Figure 2c). The AI-based method is described in Section 3.2.2 and compared with a clustering approach (Section 3.2.1).

- Mask extraction: the instance segmentation results are converted into binary masks (Figure 2d) in order to isolate fruits and facilitate a dense point cloud generation within the photogrammetric process.

- Image orientation: the extracted keyframes are used for photogrammetric reconstruction purposes, starting from camera pose estimation and sparse point cloud generation (Figure 3a).

- Dense image matching: using the previously created masks within an MVS (Multi-View Stereo) process, the 3D geometry of the apples is derived (Figure 3b,c). Masking removes all unwanted areas and partly visible apples. The resulting point clouds are the main product on which all measurement experiments are performed.

- Apple size measurement: by fitting spheres to the photogrammetric point cloud, the sizes and number of fruits are derived (Figure 3d). Two different measuring approaches are presented in Section 3.3.
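The mask extraction step listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: each detected apple is assumed to arrive as a boolean row-major grid (a hypothetical format), and the values 255 for fruit and 0 for background follow a common image-mask convention that the source does not specify.

```python
from typing import List

InstanceMask = List[List[bool]]  # hypothetical format: True where one apple instance is present


def instances_to_binary_mask(instances: List[InstanceMask],
                             height: int, width: int) -> List[List[int]]:
    """Merge per-apple instance masks into one binary mask (255 = apple, 0 = background)."""
    binary = [[0] * width for _ in range(height)]
    for inst in instances:
        for r in range(height):
            for c in range(width):
                if inst[r][c]:
                    binary[r][c] = 255  # assumed convention for "keep this pixel"
    return binary


# Two made-up 3x4 instance masks, standing in for the segmentation output of one keyframe.
apple_a = [[True, True, False, False],
           [True, True, False, False],
           [False, False, False, False]]
apple_b = [[False, False, False, False],
           [False, False, False, True],
           [False, False, False, True]]

mask = instances_to_binary_mask([apple_a, apple_b], height=3, width=4)
```

In a real pipeline the merged mask would be saved next to its keyframe so that dense matching only reconstructs pixels marked as fruit.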

3.2. Apple Segmentation

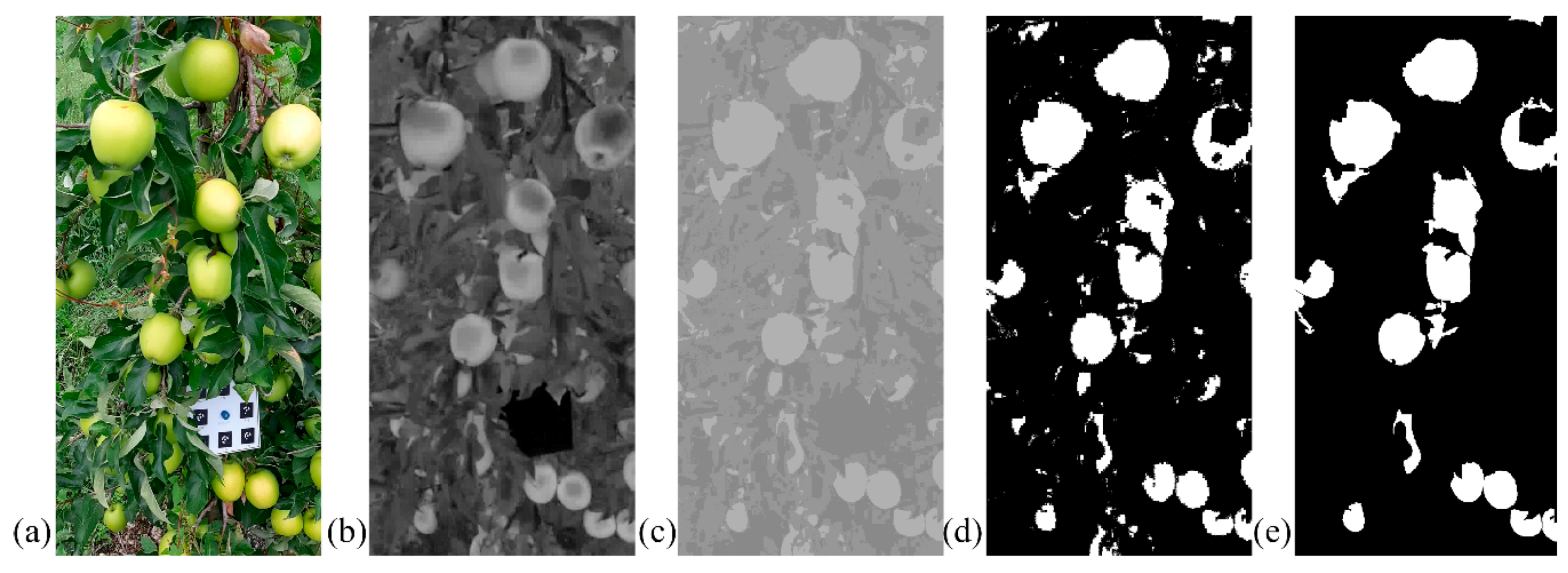

3.2.1. Clustering Approach (K-Means)

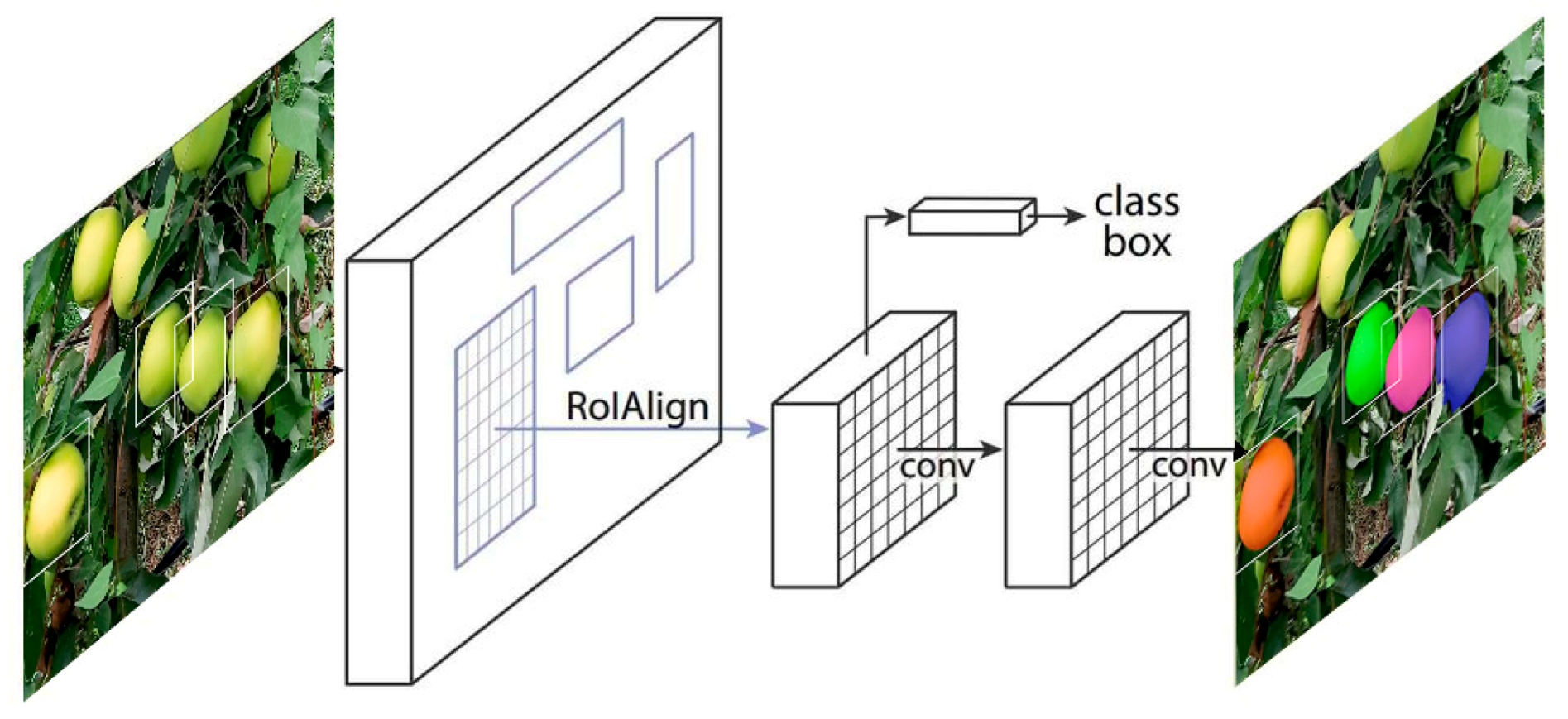

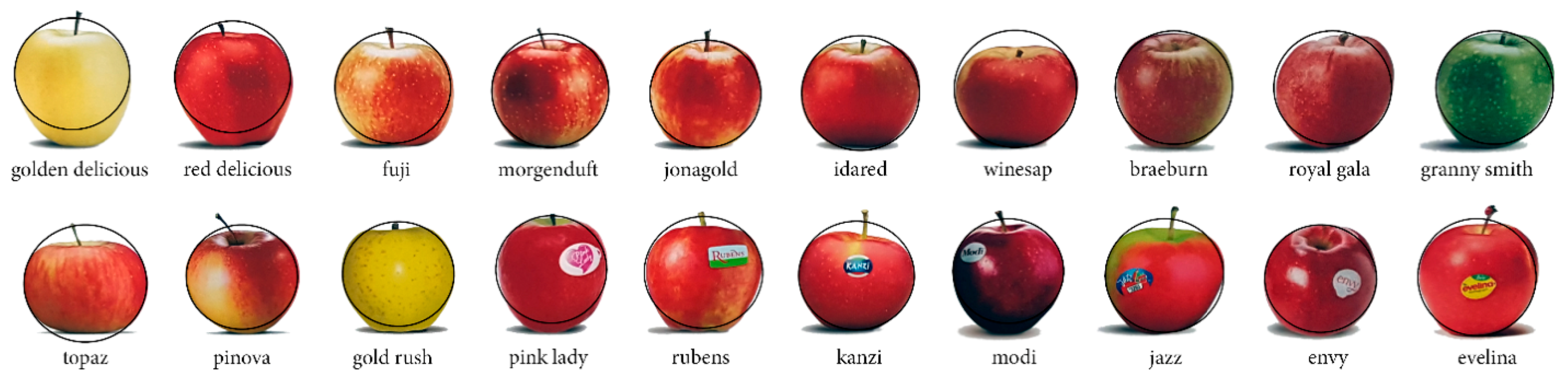
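As a rough illustration of colour clustering for segmentation, the sketch below runs a plain K-means on colour triplets. It is not the authors' implementation: the colour space, the number of clusters, the deterministic initialisation and the rule "the cluster with the highest first channel is the apple" are all assumptions made for this example (the last one only makes sense for red fruit).

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def sq_dist(a: Color, b: Color) -> float:
    """Squared Euclidean distance between two colours."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(pixels: Sequence[Color], k: int, iterations: int = 20) -> Tuple[List[Color], List[int]]:
    """Plain K-means; expects at least k pixels."""
    # Deterministic initialisation (assumption): k evenly spaced pixels as starting centroids.
    step = max(1, len(pixels) // k)
    centroids = [pixels[i * step] for i in range(k)]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel goes to its nearest centroid.
        labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in pixels]
        # Update step: each centroid becomes the mean colour of its members.
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centroids, labels


# Made-up RGB samples: four reddish "apple" pixels followed by four greenish "leaf" pixels.
pixels = [(200, 30, 40), (210, 40, 35), (190, 25, 50), (205, 35, 45),
          (60, 140, 50), (70, 150, 60), (55, 130, 45), (65, 145, 55)]

centroids, labels = kmeans(pixels, k=2)
apple_cluster = max(range(2), key=lambda j: centroids[j][0])  # reddest cluster (assumption)
apple_mask = [lab == apple_cluster for lab in labels]
```

A colour rule of this kind is what makes clustering fragile for green apples, whose colour is close to that of the leaves.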

3.2.2. Neural-Network-Based Approach (Mask R-CNN)

3.3. Apple Size Measuring

3.3.1. Least Square Fitting
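One common way to fit a sphere by least squares is the algebraic (linear) formulation sketched below. It is shown only to make the idea concrete and is not necessarily the formulation used by the authors. It rewrites the sphere equation as x² + y² + z² = 2ax + 2by + 2cz + d, with d = r² − a² − b² − c², and solves the normal equations for (a, b, c, d). The helper names and the synthetic test sphere are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_sphere_lsf(points: Sequence[Point]) -> Tuple[Point, float]:
    """Algebraic least-squares sphere fit; returns (centre, radius)."""
    rows = [(2.0 * x, 2.0 * y, 2.0 * z, 1.0) for x, y, z in points]
    rhs = [x * x + y * y + z * z for x, y, z in points]
    # Normal equations (A^T A) p = A^T f for the unknowns p = (a, b, c, d).
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atf = [sum(r[i] * f for r, f in zip(rows, rhs)) for i in range(4)]
    a, b, c, d = solve_linear(ata, atf)
    return (a, b, c), math.sqrt(d + a * a + b * b + c * c)


def sample_sphere(centre: Point, radius: float, n_lat: int = 6, n_lon: int = 12) -> List[Point]:
    """Noise-free points on a latitude/longitude grid (poles excluded)."""
    cx, cy, cz = centre
    pts = []
    for i in range(1, n_lat):
        theta = math.pi * i / n_lat
        for j in range(n_lon):
            phi = 2.0 * math.pi * j / n_lon
            pts.append((cx + radius * math.sin(theta) * math.cos(phi),
                        cy + radius * math.sin(theta) * math.sin(phi),
                        cz + radius * math.cos(theta)))
    return pts


# Synthetic "apple": a perfect sphere of diameter 70 centred at (10, 20, 30).
centre, radius = fit_sphere_lsf(sample_sphere((10.0, 20.0, 30.0), 35.0))
diameter = 2.0 * radius
```

Because every point contributes to the solution, a fit of this kind has no built-in protection against outliers or strongly incomplete surfaces.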

3.3.2. RANSAC

- (a) maximum distance to primitive;

- (b) sampling resolution;

- (c) minimum support points per primitive.
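To show how two of these parameters act, here is a deliberately basic RANSAC sphere search. It is a sketch, not the efficient variant of Schnabel et al. [53] and not the authors' tool: `max_distance` plays the role of (a), `min_support` the role of (c), and the sampling resolution (b) is not modelled at all. The `iterations` and `seed` arguments and the synthetic data are assumptions for illustration.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def sphere_through(p0: Point, p1: Point, p2: Point, p3: Point) -> Optional[Tuple[Point, float]]:
    """Exact sphere through four points, or None if they are (nearly) coplanar."""
    others = (p1, p2, p3)
    # From |p - c|^2 = r^2 for every point: 2 (p - p0) . c = |p|^2 - |p0|^2.
    a = [[2.0 * (p[i] - p0[i]) for i in range(3)] for p in others]
    b = [sum(p[i] ** 2 - p0[i] ** 2 for i in range(3)) for p in others]
    d = det3(a)
    if abs(d) < 1e-6:
        return None
    coords = []
    for col in range(3):  # Cramer's rule, one coordinate at a time
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        coords.append(det3(m) / d)
    centre = (coords[0], coords[1], coords[2])
    return centre, math.dist(centre, p0)


def ransac_sphere(points: List[Point], max_distance: float, min_support: int,
                  iterations: int = 500, seed: int = 0):
    """Return (centre, radius, inliers) of the best-supported sphere, or None."""
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iterations):
        model = sphere_through(*rng.sample(points, 4))
        if model is None:
            continue
        centre, radius = model
        inliers = [p for p in points if abs(math.dist(p, centre) - radius) <= max_distance]
        if len(inliers) > len(best_inliers):
            best, best_inliers = model, inliers
    if best is None or len(best_inliers) < min_support:
        return None
    return best[0], best[1], best_inliers


# Synthetic half apple: 40 points on the upper hemisphere of a sphere of diameter 60,
# plus 5 far-away stray points standing in for background clutter.
surface = [(30.0 * math.sin(t) * math.cos(f), 30.0 * math.sin(t) * math.sin(f), 30.0 * math.cos(t))
           for t in (math.pi * i / 10 for i in range(1, 6))
           for f in (2.0 * math.pi * j / 8 for j in range(8))]
stray = [(150.0 + i, 150.0, 150.0) for i in range(5)]

result = ransac_sphere(surface + stray, max_distance=0.5, min_support=20)
```

Since the winning model is defined only by the points that agree with it, stray points and missing parts of the surface do not pull the estimated diameter, which is the behaviour the completeness and occlusion tests in Section 4.1 examine.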

4. Experimental Results

- synthetic (Section 4.1);

- laboratory (Section 4.2);

- on-field (Section 4.3).

4.1. Synthetic Datasets

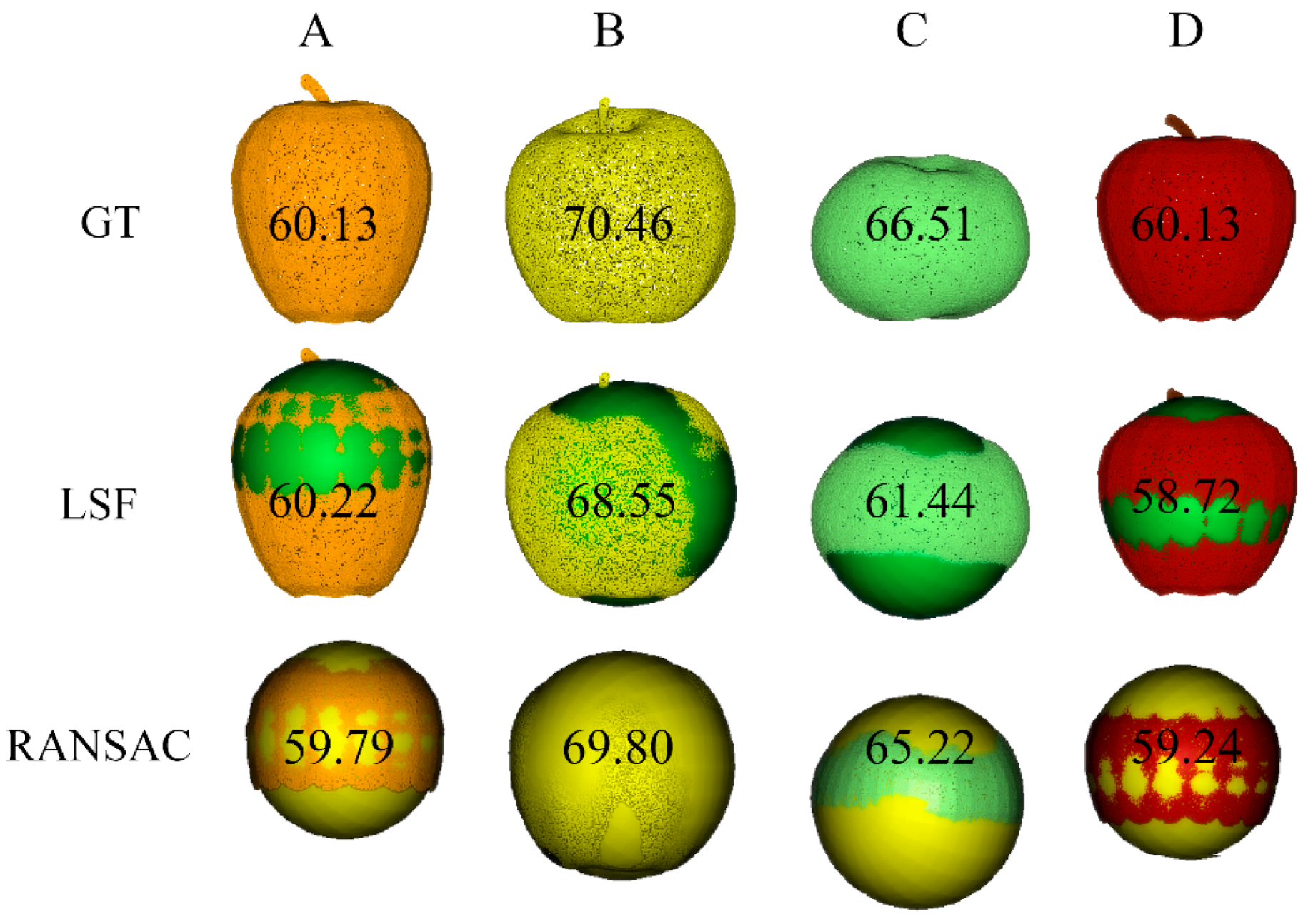

4.1.1. Shape (LSF vs. RANSAC)

4.1.2. Level of Completeness (LSF vs. RANSAC)

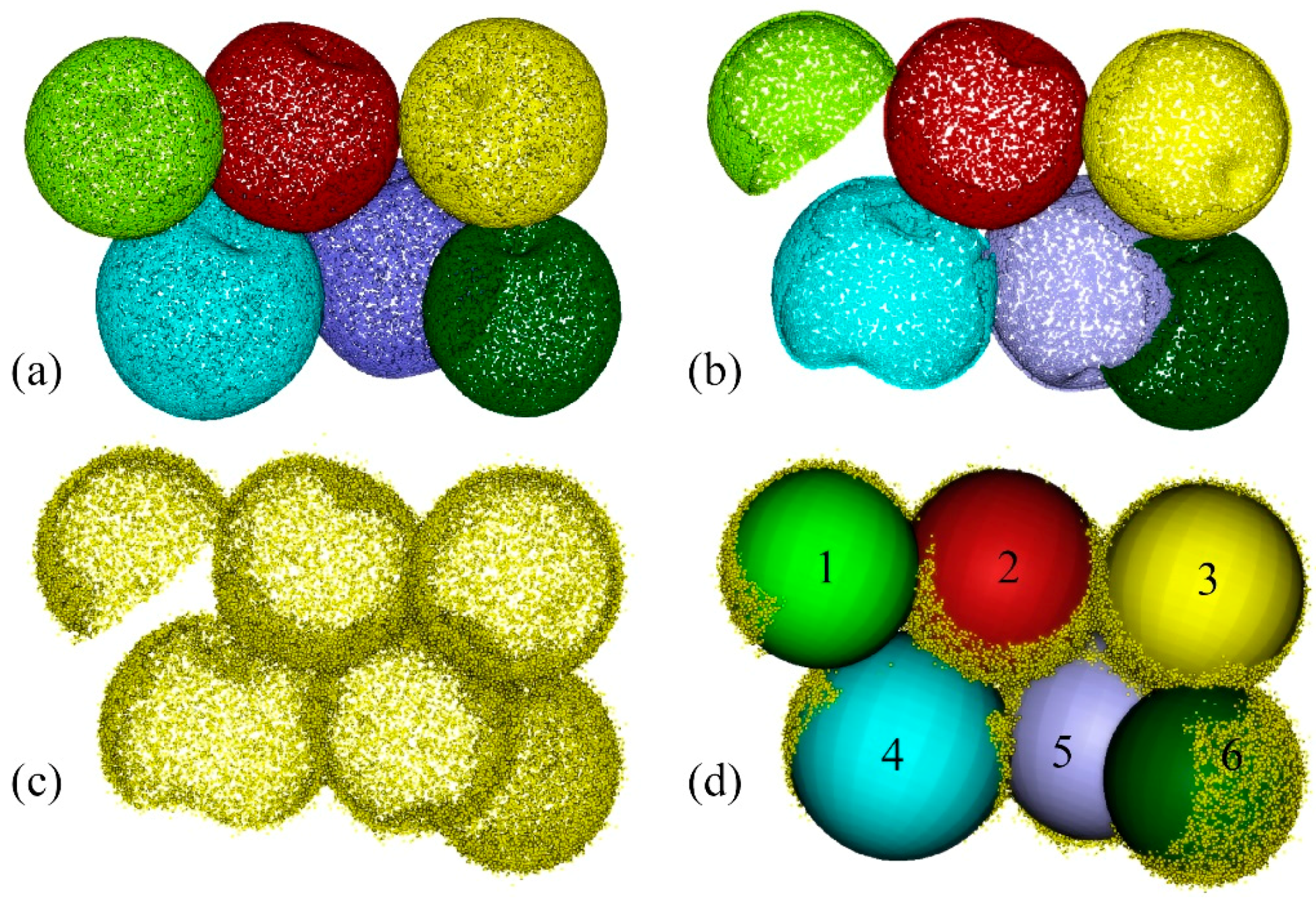

4.1.3. Occlusions and Noise (RANSAC)

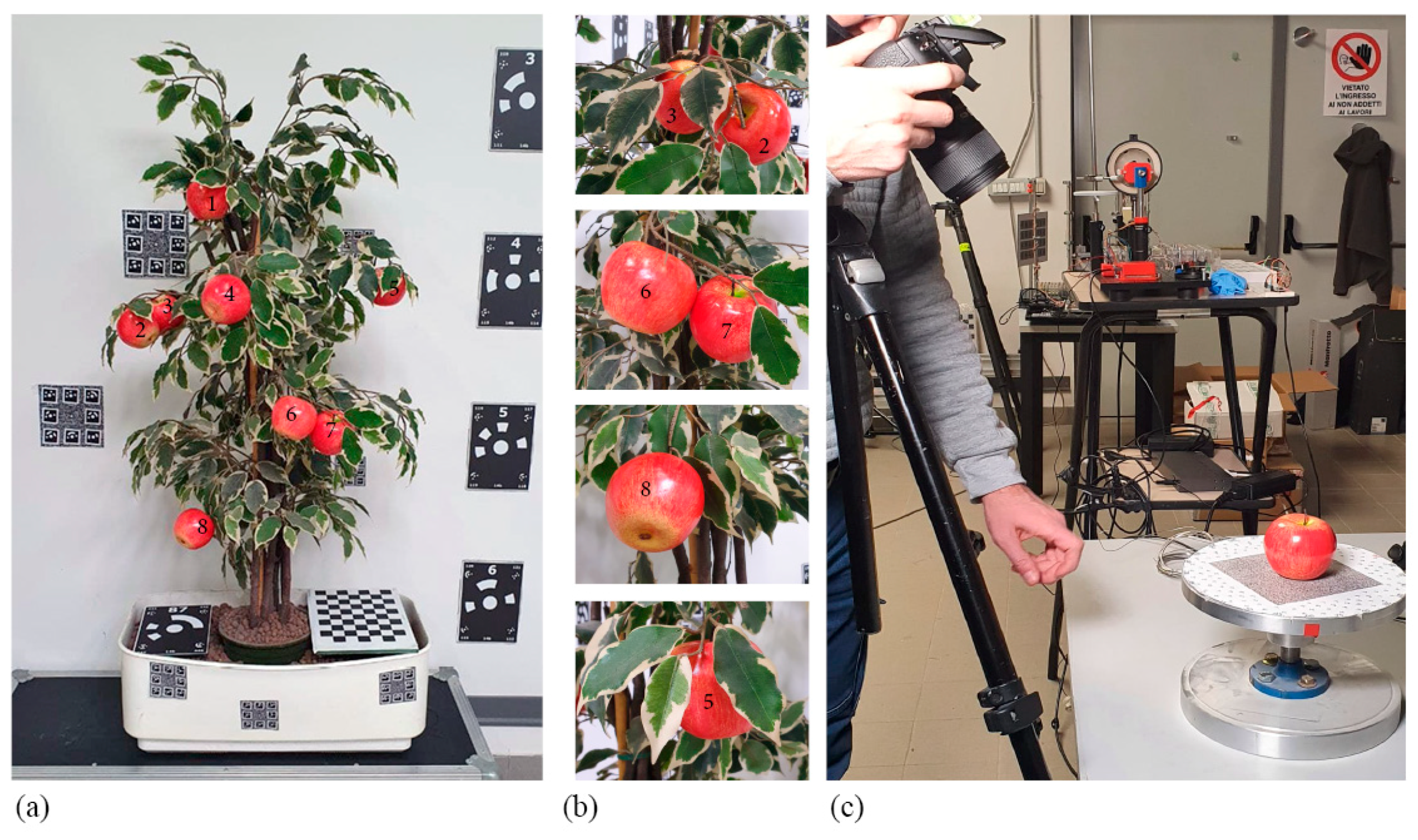

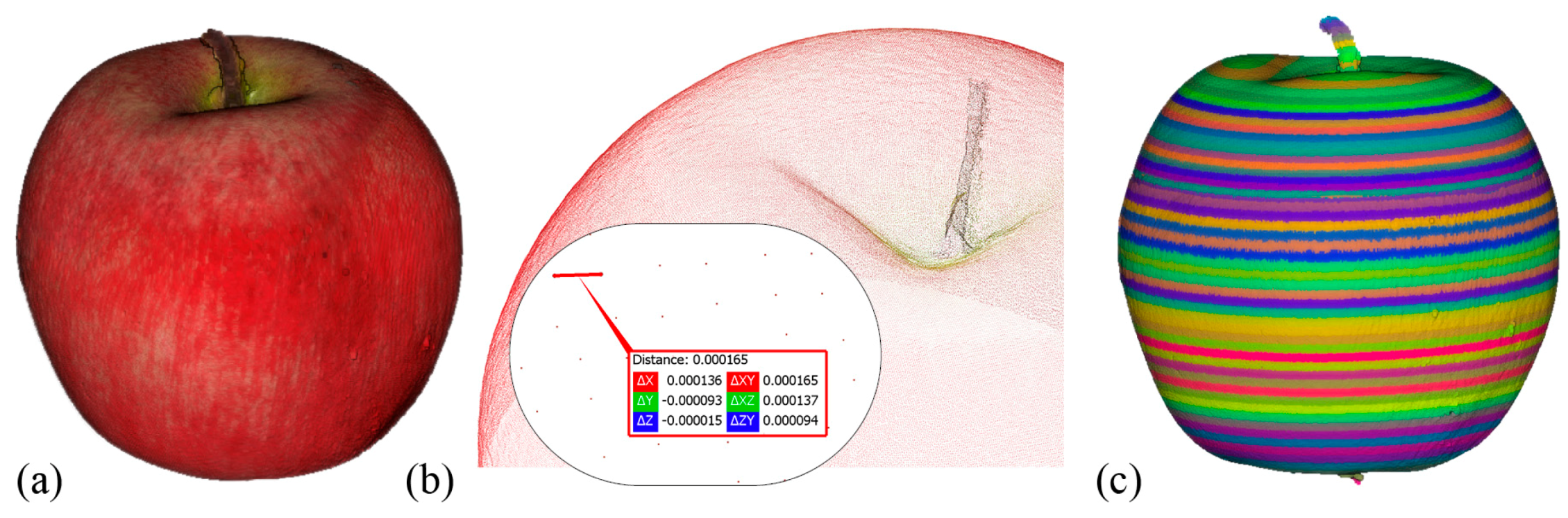

4.2. Laboratory Tests

- with Vernier callipers, taking the average of four measurements for each apple;

4.3. On-Field Tests

5. Discussion and Conclusions

- The achievement of precise measurements using 3D point clouds.

- The use of low-cost and common instruments (smartphones) for fruit surveying.

- The introduction of a less subjective approach for measuring fruits compared to callipers, providing at the same time a more extensive set of apples measured in the field (Figure 16).

- The availability of videos for experiments, masking code, labelled images for re-training, and network weights at 3DOM-FBK-GitHub [56].

- The sensitivity to illumination variations and changes in the apple shapes: when the video is captured around midday or in cloudy conditions, the lighting issues are minimised.

- The need to place a target in the field to derive metric results from the photogrammetric processing: the authors are planning new acquisitions with an in-house developed stereo-vision system [57], which does not require in-field targets to scale the results.

- The reliability in masking green apples, as their colour is very similar to the leaves: the authors are planning to refine the masking method, enriching the neural network with more training images.

- Verifying the application of the method to similar fruits (e.g., pears and kiwis).

- Using colour information, available in the videos, to support analyses related to fruit maturation.

- Adapting the proposed framework to on-field quality control, inspection and detection of damage over the fruit surface due, for example, to bad meteorological conditions.

- Finalising the deployment of the entire framework in the cloud so that users can acquire videos with a smartphone in the field and access cloud resources to derive measurements almost in real time.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- European Commission Website. Available online: https://ec.europa.eu/info/food-farming-fisheries/plants-and-plant-products/fruits-and-vegetables_en (accessed on 1 September 2021).

- Jideani, A.I.; Anyasi, T.; Mchau, G.R.; Udoro, E.O.; Onipe, O.O. Processing and preservation of fresh-cut fruit and vegetable products. Postharvest Handling 2017, 47–73.

- 543/2011/EU: Commission Implementing Regulation (EU) No 543/2011 of 7 June 2011 Laying Down Detailed Rules for the Application of Council Regulation (EC) No 1234/2007 in Respect of the Fruit and Vegetables and Processed Fruit and Vegetables Sectors; European Union: Luxembourg, 2011.

- Marini, R.P.; Schupp, J.R.; Baugher, T.A.; Crassweller, R. Estimating apple fruit size distribution from early-season fruit diameter measurements. HortScience 2019, 54, 1947–1954.

- Navarro, E.; Costa, N.; Pereira, A. A systematic review of iot solutions for smart farming. Sensors 2020, 20, 4231.

- Yousefi, M.R.; Razadari, A.M. Application of GIS and GPS in precision agriculture (A review). Int. J. Adv. Biol Biom Res. 2015, 3, 7–9.

- Pivoto, D.; Waquil, P.D.; Talamini, E.; Finocchio, C.P.S.; Dalla Corte, V.F.; de Vargas Mores, G. Scientific development of smart farming technologies and their application in Brazil. Inf. Process. Agric. 2018, 5, 21–32.

- De Mauro, A.; Greco, M.; Grimaldi, M. A formal definition of Big Data based on its essential features. Libr. Rev. 2016, 65, 122–135.

- Daponte, P.; De Vito, L.; Glielmo, L.; Iannelli, L.; Liuzza, D.; Picariello, F.; Silano, G. A Review on the Use of Drones for Precision Agriculture; IOP Publishing: Bristol, UK, 2019; Volume 275, p. 012022.

- Tsolakis, N.; Bechtsis, D.; Bochtis, D. AgROS: A robot operating system based emulation tool for agricultural robotics. Agronomy 2019, 9, 403.

- Talaviya, T.; Shah, D.; Patel, N.; Yagnik, H.; Shah, M. Implementation of artificial intelligence in agriculture for optimisation of irrigation and application of pesticides and herbicides. Artif. Intell. Agric. 2020, 4, 58–73.

- Linaza, M.; Posada, J.; Bund, J.; Eisert, P.; Quartulli, M.; Döllner, J.; Pagani, A.; Olaizola, I.G.; Barriguinha, A.; Moysiadis, T.; et al. Data-driven artificial intelligence applications for sustainable precision agriculture. Agronomy 2021, 11, 1227.

- Blanpied, G.; Silsby, K. Predicting Harvest Date Windows for Apples; Cornell Coop. Extension: New York, NY, USA, 1992.

- Moreda, G.; Ortiz-Cañavate, J.; Ramos, F.J.G.; Altisent, M.R. Non-destructive technologies for fruit and vegetable size determination—A review. J. Food Eng. 2009, 92, 119–136.

- Zujevs, A.; Osadcuks, V.; Ahrendt, P. Trends in robotic sensor technologies for fruit harvesting: 2010–2015. Procedia Comput. Sci. 2015, 77, 227–233.

- Song, J.; Fan, L.; Forney, C.F.; Mcrae, K.; Jordan, M.A. The relationship between chlorophyll fluorescence and fruit quality indices in “jonagold” and “gloster” apples during ripening. In Proceedings of the 5th International Postharvest Symposium 2005, Verona, Italy, 6–11 June 2004; Volume 682, pp. 1371–1377.

- Das, A.J.; Wahi, A.; Kothari, I.; Raskar, R. Ultra-portable, wireless smartphone spectrometer for rapid, non-destructive testing of fruit ripeness. Sci. Rep. 2016, 6, srep32504.

- Stajnko, D.; Lakota, M.; Hočevar, M. Estimation of number and diameter of apple fruits in an orchard during the growing season by thermal imaging. Comput. Electron. Agric. 2004, 42, 31–42.

- Payne, A.; Walsh, K.; Subedi, P.; Jarvis, D. Estimating mango crop yield using image analysis using fruit at ‘stone hardening’ stage and night time imaging. Comput. Electron. Agric. 2014, 100, 160–167.

- Regunathan, M.; Lee, W.S. Citrus fruit identification and size determination using machine vision and ultrasonic sensors. In Proceedings of the 2005 ASAE Annual Meeting, American Society of Agricultural and Biological Engineers, Tampa, FL, USA, 17–20 July 2005.

- Nguyen, T.T.; Vandevoorde, K.; Wouters, N.; Kayacan, E.; De Baerdemaeker, J.G.; Saeys, W. Detection of red and bicoloured apples on tree with an RGB-D camera. Biosyst. Eng. 2016, 146, 33–44.

- Wang, Z.; Walsh, K.B.; Verma, B. On-tree mango fruit size estimation using RGB-D images. Sensors 2017, 17, 2738.

- Font, D.; Pallejà, T.; Tresanchez, M.; Runcan, D.; Moreno, J.; Martínez, D.; Teixidó, M.; Palacín, J. A proposal for automatic fruit harvesting by combining a low cost stereovision camera and a robotic arm. Sensors 2014, 14, 11557–11579.

- Gongal, A.; Karkee, M.; Amatya, S. Apple fruit size estimation using a 3D machine vision system. Inf. Process. Agric. 2018, 5, 498–503.

- Cubero, S.; Aleixos, N.; Moltó, E.; Gómez-Sanchis, J.; Blasco, J. Advances in machine vision applications for automatic inspection and quality evaluation of fruits and vegetables. Food Bioprocess Technol. 2011, 4, 487–504.

- Saldaña, E.; Siche, R.; Luján, M.; Quevedo, R. Review: Computer vision applied to the inspection and quality control of fruits and vegetables. Braz. J. Food Technol. 2013, 16, 254–272.

- Naik, S.; Patel, B. Machine Vision based Fruit Classification and Grading—A Review. Int. J. Comput. Appl. 2017, 170, 22–34.

- Hung, C.; Nieto, J.; Taylor, Z.; Underwood, J.; Sukkarieh, S. Orchard fruit segmentation using multi-spectral feature learning. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 5314–5320.

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

- Cheng, H.; Damerow, L.; Sun, Y.; Blanke, M. Early yield prediction using image analysis of apple fruit and tree canopy features with neural networks. J. Imaging 2017, 3, 6.

- Hambali, H.A.; Sls, A.; Jamil, N.; Harun, H. Fruit classification using neural network model. J. Telecommun. Electron. Comput Eng. 2017, 9, 43–46.

- Hossain, M.S.; Al-Hammadi, M.H.; Muhammad, G. Automatic fruit classification using deep learning for industrial applications. IEEE Trans. Ind. Inform. 2019, 15, 1027–1034.

- Siddiqi, R. Comparative performance of various deep learning based models in fruit image classification. In Proceedings of the 11th International Conference on Advances in Information Technology, Bangkok, Thailand, 1–3 July 2020; Association for Computing Machinery (ACM): New York, NY, USA, 2020; pp. 1–9.

- Mureşan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae Inform. 2018, 10, 26–42.

- Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

- Tao, Y.; Zhou, J. Automatic apple recognition based on the fusion of color and 3D feature for robotic fruit picking. Comput. Electron. Agric. 2017, 142, 388–396.

- Hua, Y.; Zhang, N.; Yuan, X.; Quan, L.; Yang, J.; Nagasaka, K.; Zhou, X.-G. Recent advances in intelligent automated fruit harvesting robots. Open Agric. J. 2019, 13, 101–106.

- Onishi, Y.; Yoshida, T.; Kurita, H.; Fukao, T.; Arihara, H.; Iwai, A. An automated fruit harvesting robot by using deep learning. Robomech J. 2019, 6, 1–8.

- Tang, Y.; Chen, M.; Wang, C.; Luo, L.; Li, J.; Lian, G.; Zou, X. Recognition and localization methods for vision-based fruit picking robots: A review. Front. Plant Sci. 2020, 11, 510.

- Torresani, A.; Remondino, F. Videogrammetry vs. photogrammetry for heritage 3D reconstruction. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 1157–1162.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F.C. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

- Stathopoulou, E.-K.; Welponer, M.; Remondino, F. Open-source image-based 3D reconstruction pipelines: Review, comparison and evaluation. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W17, 331–338.

- Stathopoulou, E.; Battisti, R.; Cernea, D.; Remondino, F.; Georgopoulos, A. Semantically derived geometric constraints for MVS reconstruction of textureless areas. Remote Sens. 2021, 13, 1053.

- Likas, A.; Vlassis, N.; Verbeek, J.J. The global k-means clustering algorithm. Pattern Recognit. 2003, 36, 451–461.

- Bora, D.J.; Gupta, A.K. A New Approach towards clustering based color image segmentation. Int. J. Comput. Appl. 2014, 107, 23–30.

- Robertson, A.R. The CIE 1976 color-difference formulae. Color Res. Appl. 1977, 2, 7–11.

- Dasiopoulou, S.; Mezaris, V.; Kompatsiaris, I.; Papastathis, V.-K.; Strintzis, M. Knowledge-assisted semantic video object detection. IEEE Trans. Circuits Syst. Video Technol. 2005, 15, 1210–1224.

- Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Griffiths, D.; Boehm, J. A review on deep learning techniques for 3D sensed data classification. Remote Sens. 2019, 11, 1499.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow; GitHub Repository: San Francisco, CA, USA, 2017.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014.

- Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for point-cloud shape detection. Comput. Graph. Forum 2007, 26, 214–226.

- Grilli, E.; Menna, F.; Remondino, F. A review of point clouds segmentation and classification algorithms. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W3, 339–344.

- Community BO. Blender—A 3D Modelling and Rendering Package; Stichting Blender Foundation: Amsterdam, The Netherlands; Available online: http://www.blender.org (accessed on 1 September 2021).

- 3DOM-FBK-GitHub. Available online: https://github.com/3DOM-FBK/Mask_RCNN/tree/master/samples/apples (accessed on 25 September 2021).

- Torresani, A.; Menna, F.; Battisti, R.; Remondino, F. A V-SLAM guided and portable system for photogrammetric applications. Remote Sens. 2021, 13, 2351.

| Completeness | Method | A (GT 60.13) | B (GT 70.46) | C (GT 66.51) | D (GT 60.13) |
|---|---|---|---|---|---|
| 1 (i.e., 100%) | LSF | 60.22 (+0.09) | 68.55 (−1.91) | 61.44 (−5.07) | 58.72 (−1.41) |
| 1 (i.e., 100%) | RANSAC | 59.79 (−0.34) | 69.80 (−0.66) | 65.22 (−1.29) | 59.24 (−0.89) |
| 3/4 | LSF | 60.2 (+0.07) | 68.52 (−1.94) | 61 (−5.51) | 58.36 (−1.77) |
| 3/4 | RANSAC | 59.8 (−0.33) | 69.54 (−0.92) | 68.1 (+1.59) | 59.2 (−0.93) |
| 1/2 | LSF | 58.88 (−1.25) | 64.92 (−5.54) | 57.9 (−8.61) | 58.8 (−1.33) |
| 1/2 | RANSAC | 59.9 (−0.23) | 71.66 (+1.2) | 63.5 (−3.01) | 59.34 (−0.79) |
| 1/4 | LSF | 51.02 (−9.11) | 67.24 (−3.22) | 48.66 (−17.85) | 49.46 (−10.67) |
| 1/4 | RANSAC | 59.54 (−0.59) | 71.84 (+1.38) | 65.78 (−0.73) | 59.74 (−0.39) |

| Apple ID | 1 | 2 | 3 | 4 | 5 | 6 | RMSE |
|---|---|---|---|---|---|---|---|
| GT size | 69.06 | 69.43 | 65.56 | 65.05 | 68.72 | 74.37 | - |
| RANSAC | 68.45 (−0.61) | 68.43 (−1.0) | 65.80 (+0.24) | 65.60 (+0.55) | 70.18 (+1.46) | 75.00 (+0.63) | 0.84 |
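The RMSE in the last column can be checked directly from the six value pairs. The snippet assumes the usual definition, the square root of the mean squared difference between the RANSAC estimate and the ground-truth (GT) size; the variable names are illustrative.

```python
import math

# Values copied from the table above (GT size vs. RANSAC estimate, apples 1 to 6).
gt_sizes = [69.06, 69.43, 65.56, 65.05, 68.72, 74.37]
ransac_sizes = [68.45, 68.43, 65.80, 65.60, 70.18, 75.00]


def rmse(estimates, reference):
    """Root mean square error between paired estimates and reference values."""
    squared = [(e - r) ** 2 for e, r in zip(estimates, reference)]
    return math.sqrt(sum(squared) / len(squared))


error = rmse(ransac_sizes, gt_sizes)
print(round(error, 2))  # 0.84
```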

| Method | Setup | Fitting | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Median | RMSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Callipers | | | 79.64 | 79.01 | 79.55 | 80.14 | 79.69 | 79.25 | 79.42 | 79.56 | 79.55 | - |
| Photogrammetry | Reflex camera + turntable | | 79.69 | 79.86 | 80.28 | 79.41 | 80.08 | 79.59 | 79.84 | 79.23 | 79.75 | 1.53 |
| Photogrammetry | Phone + apple tree | LSF | 79.96 | 74.72 | / | 77.78 | 77.96 | 81.58 | 76.96 | 78.52 | 78.08 | 6.26 |
| Photogrammetry | Phone + apple tree | RANSAC | 79.70 | 78.68 | / | 78.70 | 80.92 | 81.48 | 78.48 | 82.43 | 79.87 | 4.22 |

| Method | Precision % | Recall % | F1-Score % |
|---|---|---|---|
| K-means | 73.97 | 87.30 | 80.08 |
| Mask R-CNN (COCO-based) | 49.30 | 81.16 | 52.20 |
| Mask R-CNN (re-trained) | 95.18 | 78.34 | 85.95 |
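For reference, the F1-score is conventionally the harmonic mean of precision and recall. The short sketch below applies that formula to two rows of the table. Recomputing from rounded table entries need not reproduce the published F1 column exactly, for instance if scores were averaged per image before tabulation; the source does not state how they were aggregated, so that explanation is only an assumption.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (percentages in, percentage out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall copied from the table above.
kmeans_f1 = f1_score(73.97, 87.30)
retrained_f1 = f1_score(95.18, 78.34)
print(round(kmeans_f1, 2))  # 80.08
```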

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Grilli, E.; Battisti, R.; Remondino, F. An Advanced Photogrammetric Solution to Measure Apples. Remote Sens. 2021, 13, 3960. https://doi.org/10.3390/rs13193960