A Novel Bayesian Super-Resolution Method for Radar Forward-Looking Imaging Based on Markov Random Field Model

Abstract

1. Introduction

2. Super-Resolution Echo Model for RASR

3. Methodology

3.1. Bayesian Framework

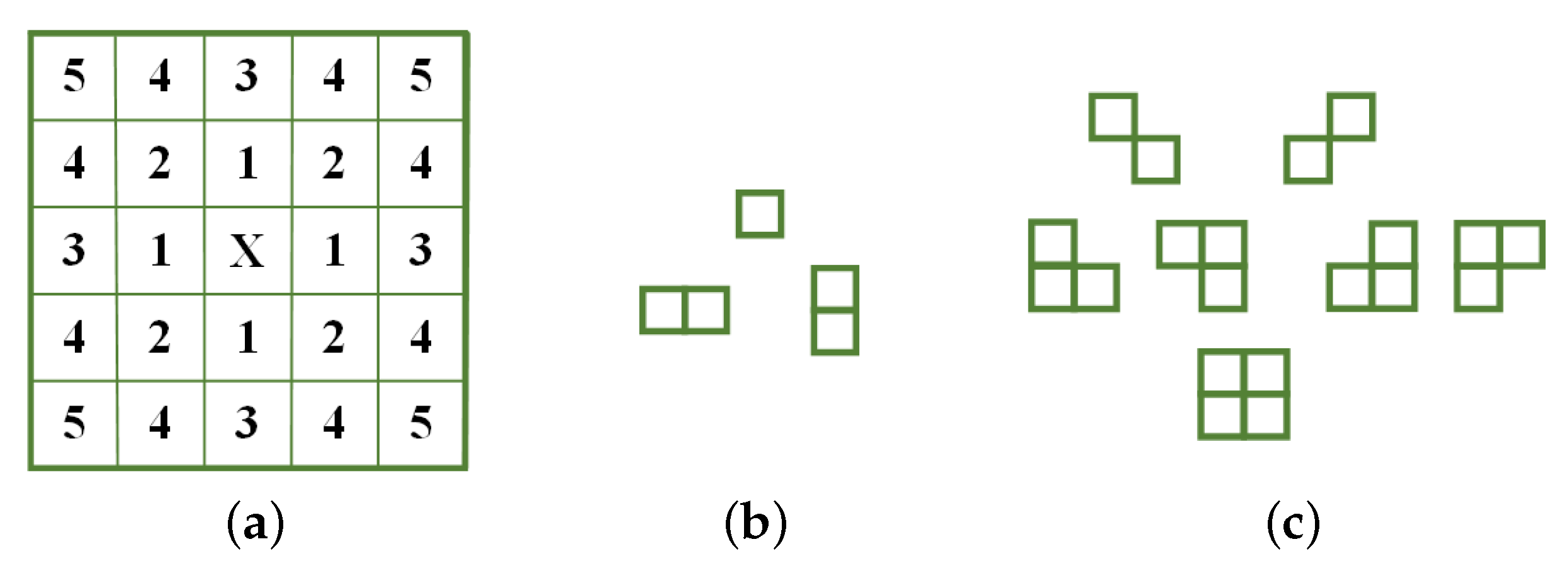

3.2. Markov Random Field Model

3.3. Solution to the Objective Function

| Steps |
|---|
| Input: the parameters , , and |
| Initial Step: Give s for the initial iteration . |
| Calculate the threshold according to Equation (24) |
| Then: Calculate the first two iterative results and with the iteration Equation (29) and the first two iterative vectors and |
| Repeat |
| Compute the extrapolation step size according to Equation (32) |
| Compute the prediction result according to Equation (31) |
| If , calculate the iterative point through the upper iteration formula Equation (28): |
| If , calculate the iterative point through the lower iteration formula Equation (28): |
| Update the iterative vector |
| Update the threshold according to Equation (24) |
| Until (convergence) |
| Export the final value |
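The algorithm table above lists the iteration steps, but the update formulas themselves (Equations (24) and (28) to (32)) are not reproduced in this text. As a hedged illustration of the overall structure only, the sketch below uses a generic iterative shrinkage-thresholding (ISTA) update with an l1 soft-threshold as a stand-in for the MRF-based update of Equation (28), and accelerates it with Biggs-Andrews-style vector extrapolation: two plain iterations supply the first difference vectors, an extrapolation step size is computed from the two latest difference vectors and clipped to [0, 1], and a prediction is formed before each update. This is not the authors' method; the function names, the regularization weight `lam`, the convolution matrix `H`, and the Gaussian beam kernel in the usage example are all hypothetical assumptions.

```python
import numpy as np


def soft_threshold(v, tau):
    """Element-wise soft-thresholding: proximal operator of tau * ||x||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def shrinkage_step(x, H, y, step, lam):
    """One generic ISTA update for 0.5*||y - H x||^2 + lam*||x||_1.

    Hypothetical stand-in for Equation (28): an l1 prior replaces the MRF prior.
    """
    grad = H.T @ (H @ x - y)
    return soft_threshold(x - step * grad, step * lam)


def extrapolated_ista(H, y, lam, n_iter=500, tol=1e-6):
    """ISTA accelerated by Biggs-Andrews-style vector extrapolation (sketch)."""
    step = 1.0 / np.linalg.norm(H, 2) ** 2  # 1/L, L = largest eigenvalue of H^T H
    # First two plain iterations give the first two difference vectors.
    x_prev = shrinkage_step(np.zeros(H.shape[1]), H, y, step, lam)
    g_old = x_prev.copy()  # difference vector x_1 - prediction (prediction = 0)
    x = shrinkage_step(x_prev, H, y, step, lam)
    g = x - x_prev
    for _ in range(n_iter):
        # Extrapolation step size from the two latest difference vectors, clipped to [0, 1].
        denom = g_old @ g_old
        alpha = 0.0 if denom == 0.0 else float(np.clip((g @ g_old) / denom, 0.0, 1.0))
        # Prediction along the most recent update direction.
        pred = x + alpha * (x - x_prev)
        x_new = shrinkage_step(pred, H, y, step, lam)
        g_old, g = g, x_new - pred
        x_prev, x = x, x_new
        # Stop when the relative change between successive iterates is small.
        if np.linalg.norm(x - x_prev) <= tol * max(np.linalg.norm(x_prev), 1e-12):
            break
    return x


# Usage: a hypothetical Gaussian beam blurs two closely spaced point targets.
n = 64
offsets = np.arange(n)[:, None] - np.arange(n)[None, :]
H = np.exp(-0.5 * (offsets / 3.0) ** 2)  # hypothetical antenna-pattern convolution matrix
x_true = np.zeros(n)
x_true[[30, 34]] = 1.0
rng = np.random.default_rng(0)
y = H @ x_true + 0.01 * rng.standard_normal(n)
x_hat = extrapolated_ista(H, y, lam=0.05)
```

Clipping the step size to [0, 1] is the usual safeguard in Biggs-Andrews extrapolation. In the algorithm above, the step size, the prediction, and the threshold come from Equations (32), (31), and (24) respectively, and the threshold is updated every iteration; this sketch keeps the threshold fixed at `step * lam` for simplicity.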

4. Numerical Results

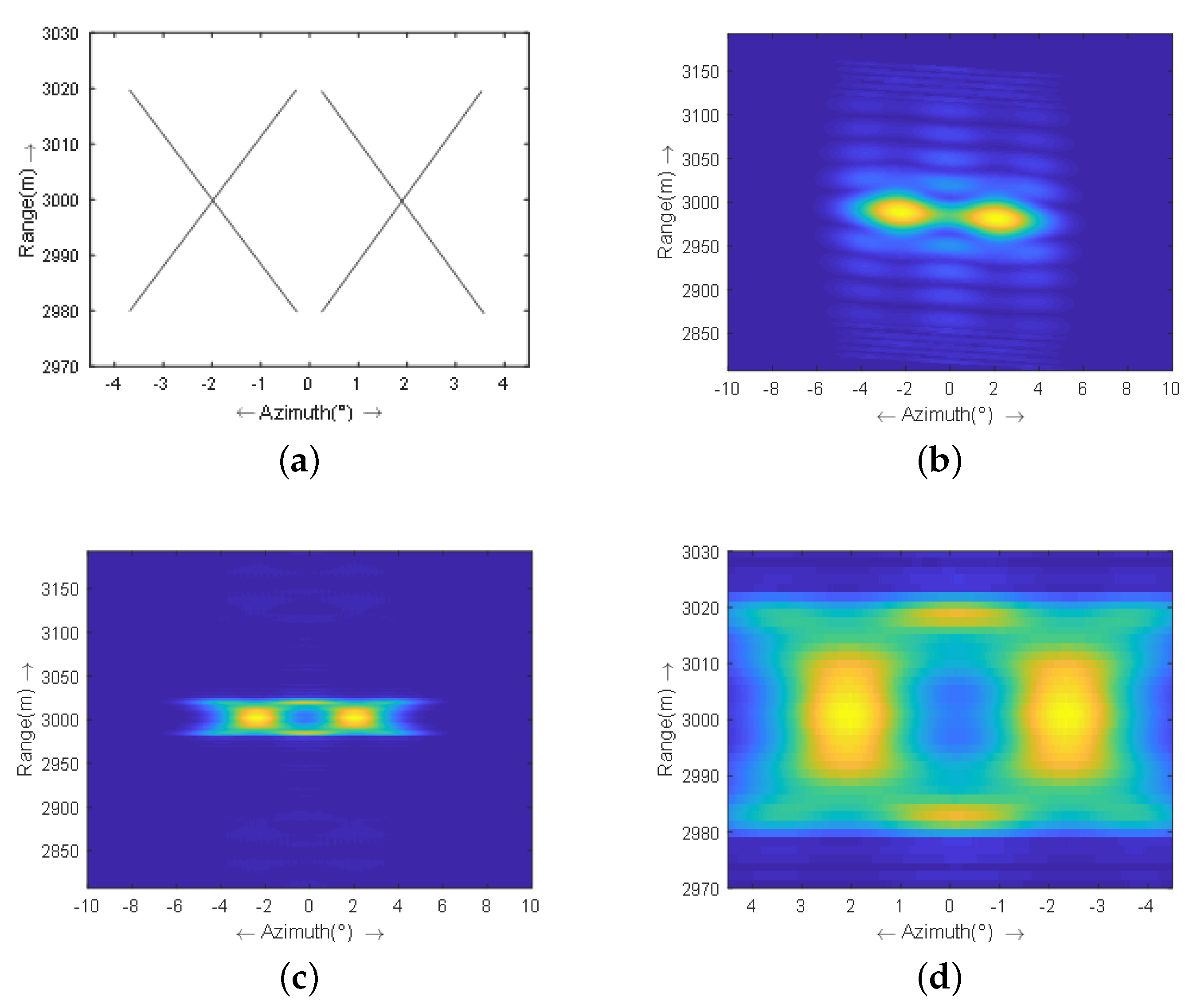

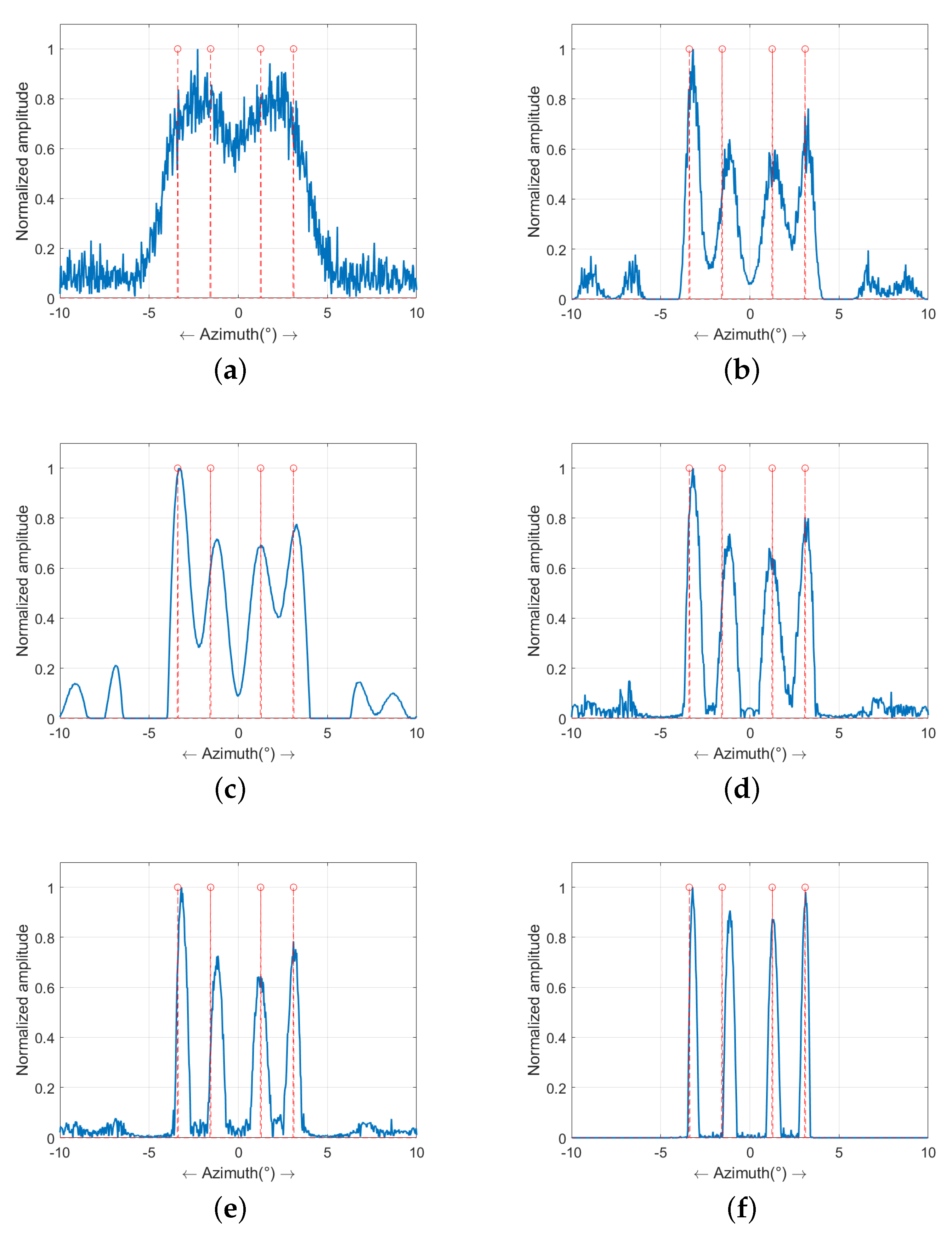

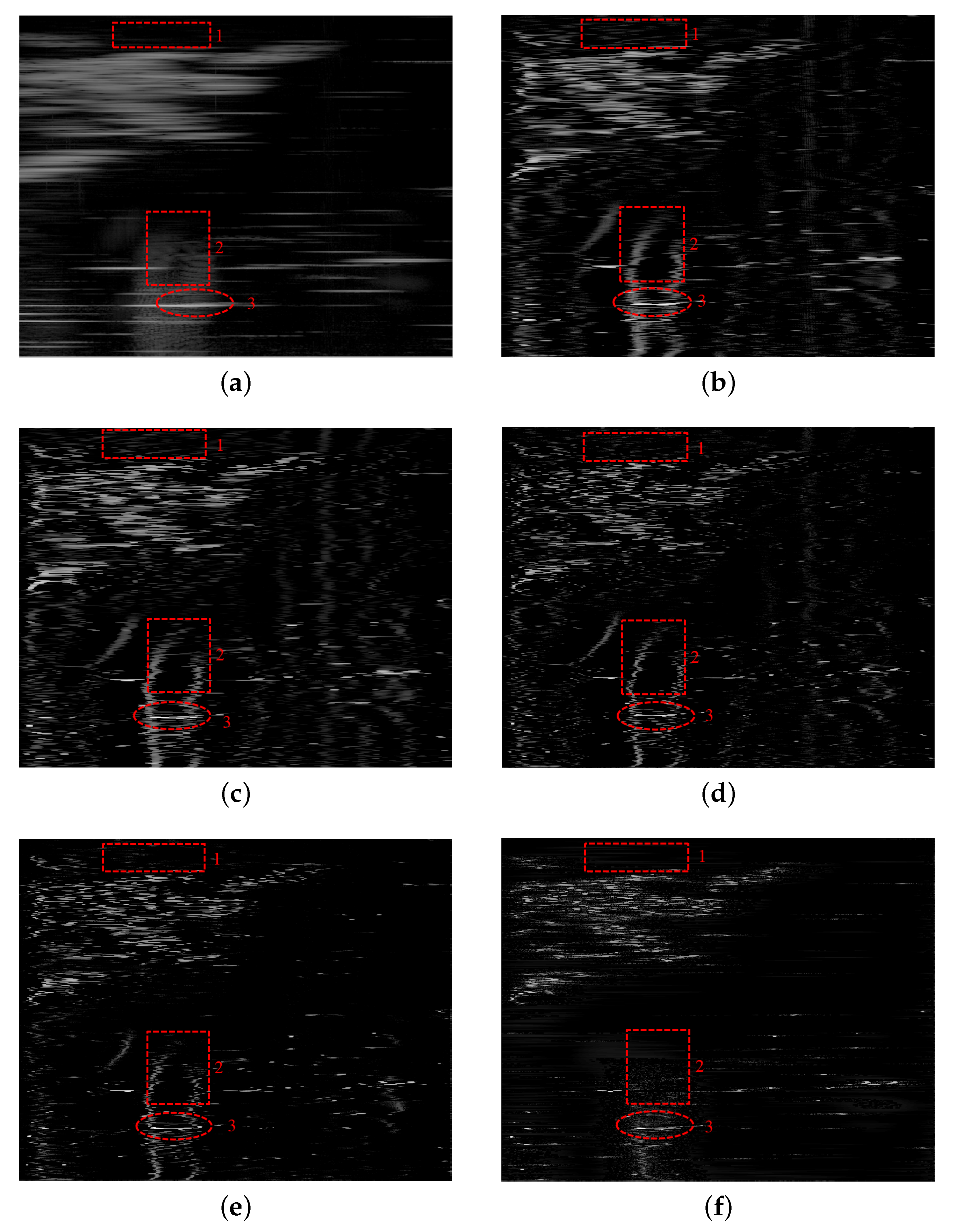

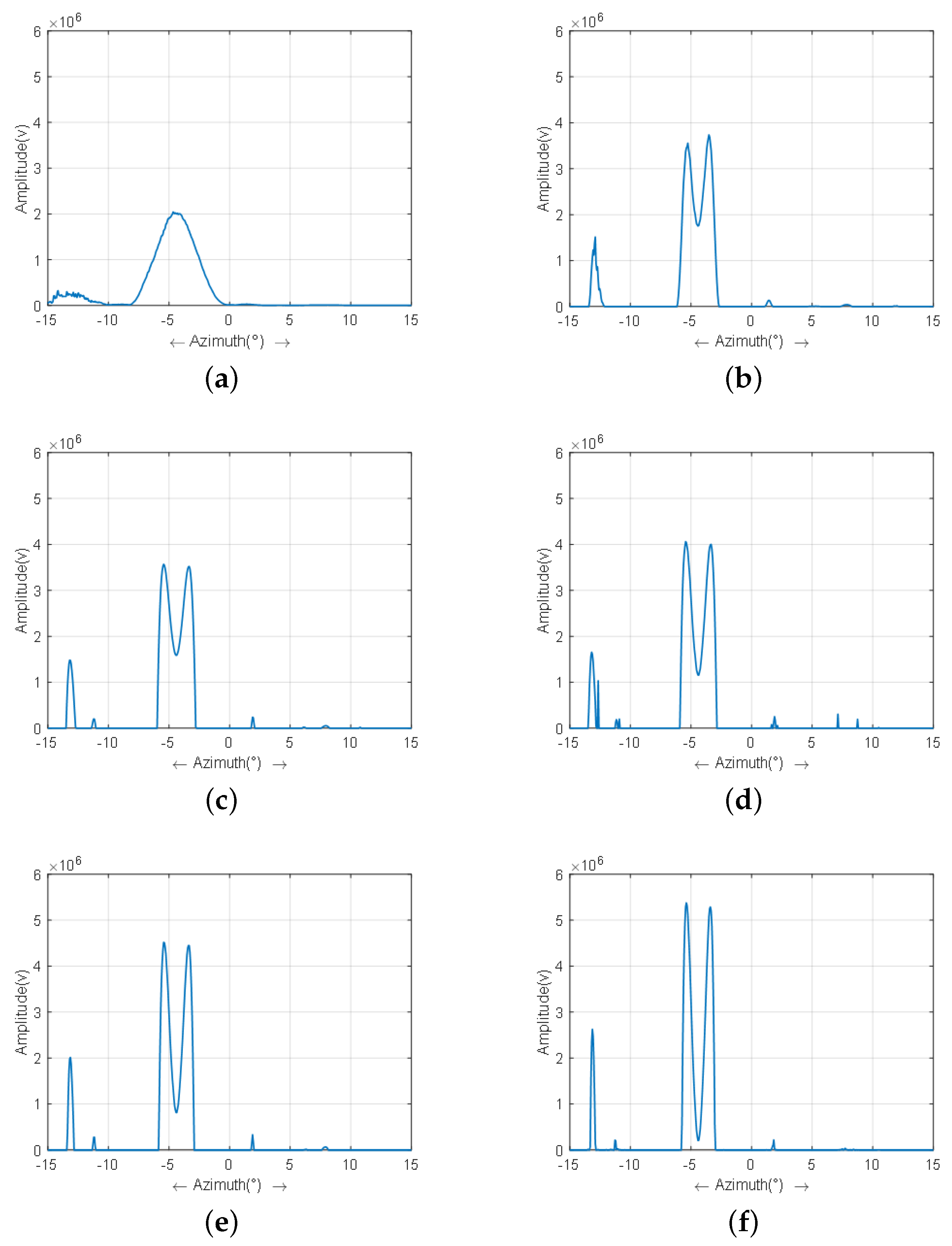

4.1. Experimental Results on Simulated Data

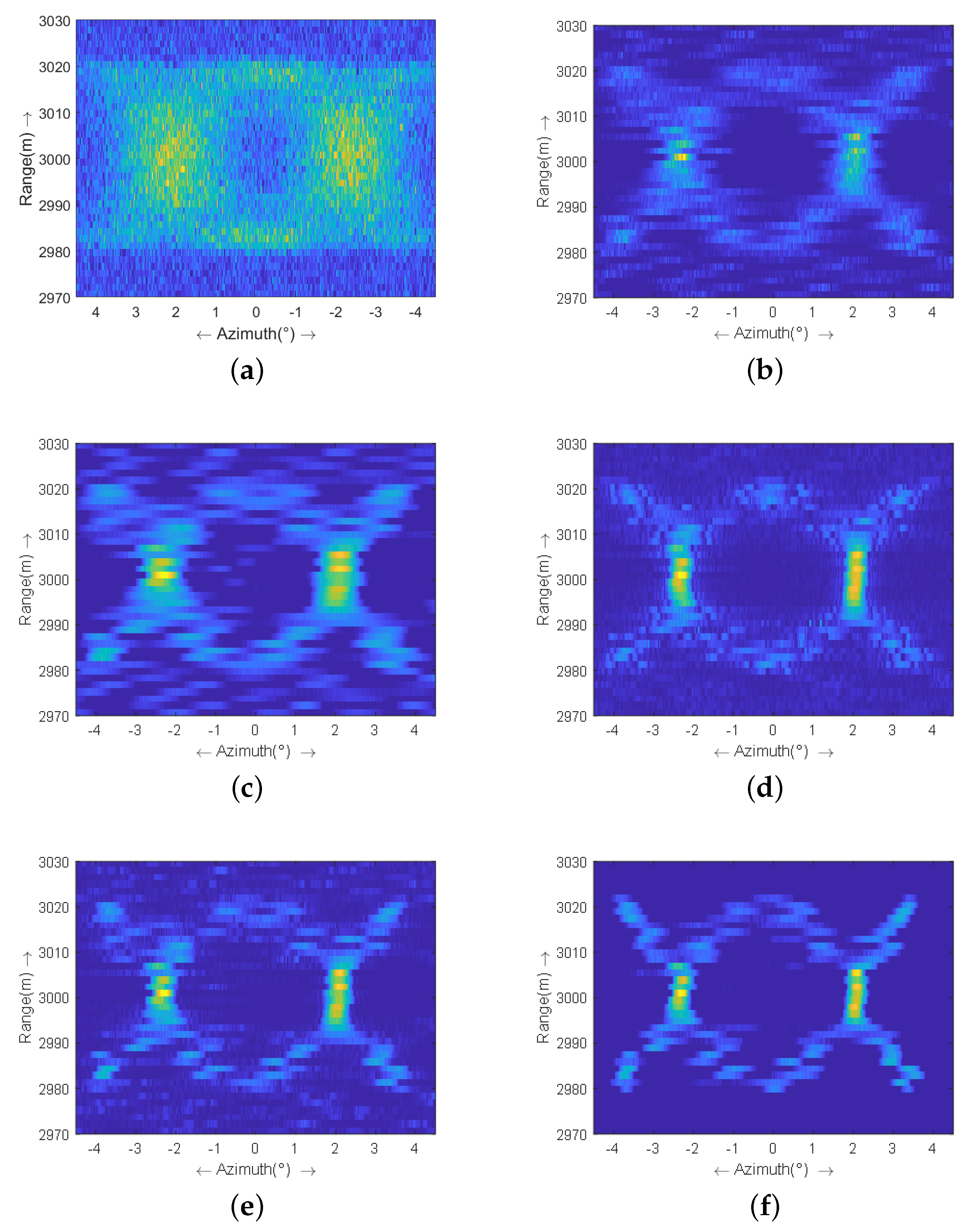

4.2. Experimental Results on Real Radar Data

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


| Parameters | Value |
|---|---|
| Velocity of the platform | 100 m/s |
| Pulse repetition frequency | 2000 Hz |
| Main-lobe beam width | |
| Antenna scanning velocity | |
| Antenna scanning area | |
| Near range | 2.97 km |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tan, K.; Lu, X.; Yang, J.; Su, W.; Gu, H. A Novel Bayesian Super-Resolution Method for Radar Forward-Looking Imaging Based on Markov Random Field Model. Remote Sens. 2021, 13, 4115. https://doi.org/10.3390/rs13204115
