Generation of High-Precision Ground Penetrating Radar Images Using Improved Least Square Generative Adversarial Networks

Abstract

1. Introduction

2. Methodology

2.1. Generative Adversarial Networks (GAN)

2.2. Improved Least Square Generative Adversarial Networks

- (a) The generator is susceptible to collapse during the training process;

- (b) The generator gradient may vanish, so that the generator learns nothing;

- (c) The generated images are not diverse.
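Problem (b) can be illustrated numerically. The sketch below is not taken from the paper: it assumes the original minimax generator loss, log(1 - sigmoid(t)) on a discriminator logit t, and the standard least squares generator loss, 0.5 * (s - c)^2 on a raw discriminator score s with an assumed target label c = 1. The function names are hypothetical, and the improvements proposed in this work are not modelled.

```python
import math


def sigmoid(t):
    """Logistic function used by the original GAN discriminator."""
    return 1.0 / (1.0 + math.exp(-t))


def minimax_generator_grad(logit):
    """Gradient of log(1 - sigmoid(logit)) with respect to the logit.

    Analytically this equals -sigmoid(logit), which tends to zero when the
    discriminator confidently rejects a generated sample (large negative logit).
    """
    return -sigmoid(logit)


def least_squares_generator_grad(score, target=1.0):
    """Gradient of 0.5 * (score - target)**2 with respect to the raw score.

    The target label of 1.0 is an assumption for illustration.
    """
    return score - target


# A generated sample that the discriminator rejects with high confidence.
rejected = -10.0
grad_minimax = minimax_generator_grad(rejected)
grad_least_squares = least_squares_generator_grad(rejected)
print(grad_minimax, grad_least_squares)
```

For this rejected sample the minimax gradient is close to zero, while the least squares gradient stays proportional to the distance between the score and the target, so the generator keeps receiving a usable training signal.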

2.3. Evaluation Index

3. Datasets of GPR Images

3.1. Data Collection

3.2. Data Augmentation Methods

3.3. Results of Other GANs

4. Results

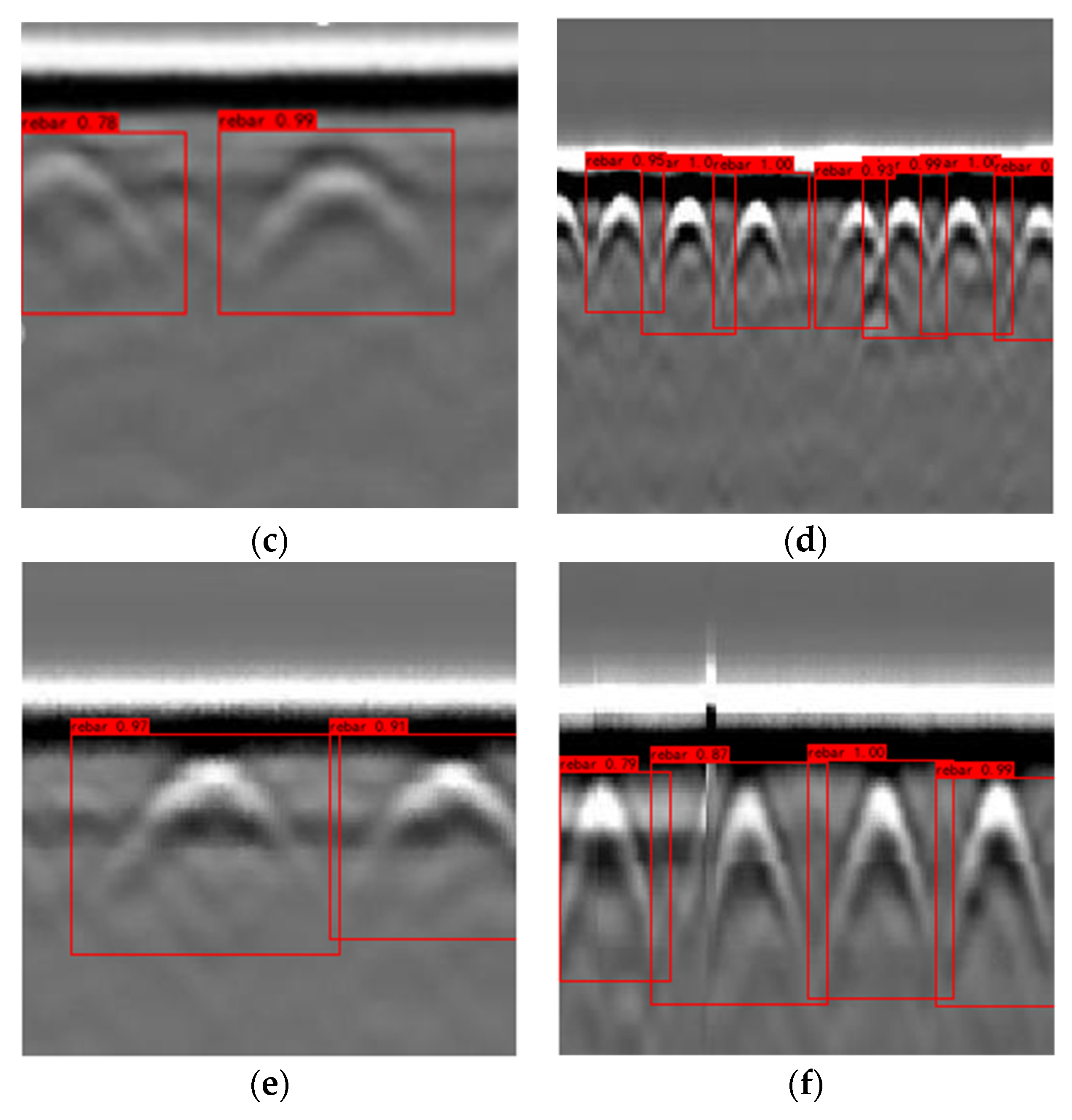

4.1. Pre-Trained YOLOv4

4.2. Testing Results

4.2.1. Training Dataset I and II

4.2.2. Training Dataset III

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type | Layer | Output Shape |
|---|---|---|
| ConvTranspose1 | ConvTranspose2d | [1,4,4,4096] |
| | ReLU | [1,4,4,4096] |
| | ConvTranspose2d | [1,2048,8,8] |
| | BatchNorm2d | [1,2048,8,8] |
| | ReLU | [1,2048,8,8] |
| ConvTranspose2 (Up3) | ConvTranspose2d | [1,1024,16,16] |
| | BatchNorm2d | [1,1024,16,16] |
| | ReLU | [1,1024,16,16] |
| | ConvTranspose2d | [1,512,32,32] |
| | BatchNorm2d | [1,512,32,32] |
| | ReLU | [1,512,32,32] |
| ConvTranspose3 (Up2) | ConvTranspose2d | [1,256,64,64] |
| | BatchNorm2d | [1,256,64,64] |
| | ReLU | [1,256,64,64] |
| | ConvTranspose2d | [1,128,128,128] |
| | BatchNorm2d | [1,128,128,128] |
| | ReLU | [1,128,128,128] |
| ConvTranspose4 (Up1) | ConvTranspose2d | [1,64,256,256] |
| | BatchNorm2d | [1,64,256,256] |
| | ReLU | [1,64,256,256] |
| | ConvTranspose2d | [1,64,256,256] |
| | BatchNorm2d | [1,1,512,512] |
| | ReLU | [1,1,512,512] |
| ResNet | Residual Blocks | [1,1,512,512] |
| Up4 | ConvTranspose2d | [1,64,256,256] |
| | BatchNorm2d | [1,64,256,256] |
| | ReLU | [1,64,256,256] |
| | ConvTranspose2d | [1,64,256,256] |
| | BatchNorm2d | [1,1,512,512] |
| | ReLU | [1,1,512,512] |
| | Tanh | [1,1,512,512] |
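The doubling of the spatial size from 4 to 512 in the table above is what a transposed convolution produces under one common hyperparameter choice. The sketch below assumes kernel size 4, stride 2 and padding 1, none of which the table states, and applies the PyTorch `ConvTranspose2d` output-size formula (with dilation 1) in plain Python. The helper name is hypothetical.

```python
def conv_transpose2d_out(size, kernel=4, stride=2, padding=1, output_padding=0):
    """Output size of a transposed convolution along one spatial axis.

    Follows the PyTorch ConvTranspose2d formula for dilation = 1.
    The default kernel, stride and padding are assumptions, not values
    taken from the table.
    """
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# Start from the 4 x 4 feature map and upsample until 512 x 512 is reached.
generator_sizes = [4]
while generator_sizes[-1] < 512:
    generator_sizes.append(conv_transpose2d_out(generator_sizes[-1]))

print(generator_sizes)
```

Under these assumptions seven stride-2 layers are enough to go from a 4 × 4 feature map to a 512 × 512 image, matching the sequence of spatial sizes listed in the table.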

| Type | Layer | Output Shape |
|---|---|---|
| Conv1 | Conv2d | [1,32,512,512] |
| | LeakyReLU | [1,32,512,512] |
| Conv2 | Conv2d | [1,64,256,256] |
| | BatchNorm2d | [1,64,256,256] |
| | LeakyReLU | [1,64,256,256] |
| | Conv2d | [1,128,128,128] |
| | BatchNorm2d | [1,128,128,128] |
| | LeakyReLU | [1,128,128,128] |
| Conv3 | Conv2d | [1,256,64,64] |
| | BatchNorm2d | [1,256,64,64] |
| | LeakyReLU | [1,256,64,64] |
| | Conv2d | [1,512,32,32] |
| | BatchNorm2d | [1,512,32,32] |
| | LeakyReLU | [1,512,32,32] |
| Conv4 | Conv2d | [1,1024,16,16] |
| | BatchNorm2d | [1,1024,16,16] |
| | LeakyReLU | [1,1024,16,16] |
| | Conv2d | [1,2048,8,8] |
| | BatchNorm2d | [1,2048,8,8] |
| | LeakyReLU | [1,2048,8,8] |
| Conv5 | Conv2d | [1,4096,4,4] |
| | BatchNorm2d | [1,4096,4,4] |
| | LeakyReLU | [1,4096,4,4] |
| | Conv2d | [1,1,1,1] |
| | BatchNorm2d | [1,1,1,1] |
| | LeakyReLU | [1,1,1,1] |
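The spatial sizes in the table above (512, then seven halvings down to 4, then 1) can be reproduced with the PyTorch `Conv2d` output-size formula for dilation 1. The kernel, stride and padding values below are assumptions chosen to match the listed shapes; the table itself does not give them, and the helper name is hypothetical.

```python
def conv2d_out(size, kernel, stride, padding):
    """Output size of a convolution along one spatial axis (dilation = 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Conv1 keeps the resolution: assumed 3 x 3 kernel, stride 1, padding 1.
size = conv2d_out(512, kernel=3, stride=1, padding=1)
discriminator_sizes = [size]

# Seven downsampling layers: assumed 4 x 4 kernel, stride 2, padding 1.
for _ in range(7):
    size = conv2d_out(size, kernel=4, stride=2, padding=1)
    discriminator_sizes.append(size)

# Final layer collapses 4 x 4 to a single score: assumed 4 x 4 kernel, no padding.
discriminator_sizes.append(conv2d_out(size, kernel=4, stride=1, padding=0))

print(discriminator_sizes)
```

Other hyperparameter combinations give the same sizes, so this is only one consistent reading of the table.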

| Parameter | Value |
|---|---|
| Central frequency | 2 GHz |
| Trace interval | 0.04 m |
| Time window | 6 ns |
| Samples | 512 |
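Two derived quantities follow from the acquisition parameters above. The sketch below computes the time sampling interval as the time window divided by the number of samples, and a rough maximum imaging depth from the two-way travel time. The relative permittivity of 6 is a hypothetical value chosen only for illustration and does not come from the paper.

```python
import math

TIME_WINDOW_NS = 6.0       # from the table
SAMPLES_PER_TRACE = 512    # from the table
CENTRAL_FREQ_GHZ = 2.0     # from the table

SPEED_OF_LIGHT_M_PER_NS = 0.299792458
ASSUMED_REL_PERMITTIVITY = 6.0  # hypothetical medium, not from the paper

# Time step between consecutive samples of one trace.
dt_ns = TIME_WINDOW_NS / SAMPLES_PER_TRACE

# Highest frequency representable at this sampling rate (Nyquist), in GHz.
nyquist_ghz = 1.0 / (2.0 * dt_ns)

# Wave velocity in the assumed medium and the depth reached within the
# time window, halved because the recorded time is two-way travel time.
velocity_m_per_ns = SPEED_OF_LIGHT_M_PER_NS / math.sqrt(ASSUMED_REL_PERMITTIVITY)
max_depth_m = velocity_m_per_ns * TIME_WINDOW_NS / 2.0

print(f"dt = {dt_ns * 1000:.2f} ps, Nyquist = {nyquist_ghz:.1f} GHz, "
      f"max depth = {max_depth_m:.2f} m")
```

With these numbers the sampling interval is about 11.7 ps, the Nyquist frequency is far above the 2 GHz central frequency, and the time window corresponds to a depth of roughly 0.37 m in the assumed medium.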

| Training Dataset | I | II | III |
|---|---|---|---|
| Field GPR image | √ | × | √ |
| Improved LSGAN image | × | √ | √ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yue, Y.; Liu, H.; Meng, X.; Li, Y.; Du, Y. Generation of High-Precision Ground Penetrating Radar Images Using Improved Least Square Generative Adversarial Networks. Remote Sens. 2021, 13, 4590. https://doi.org/10.3390/rs13224590
