Efficient and Safe Robotic Autonomous Environment Exploration Using Integrated Frontier Detection and Multiple Path Evaluation

Abstract

1. Introduction

- We propose an integrated frontier detection and maintenance method. Complete exploration of the environment is achieved by detecting frontiers thoroughly and incrementally maintaining the frontiers that are reachable and informative.

- We propose a multiple-path generation method that combines the wavefront propagation of the fast marching method with a carefully designed velocity field to generate safe paths that offer a good view of the environment.

- We propose a multi-objective utility function for frontier evaluation that selects the optimal path and improves exploration efficiency. A path smoothing method with dynamic parameter adjustment then improves the smoothness of the optimal path.

2. Related Works

2.1. Frontier Detection Methods

2.2. Decision-Making Methods

2.3. Path-Planning Methods

3. Problem Statement

4. Proposed Method

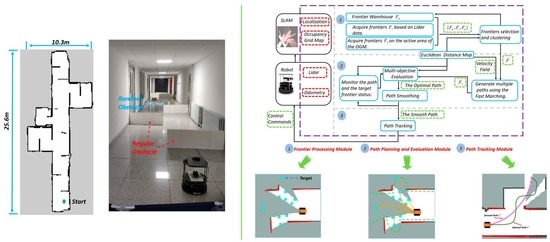

4.1. Method Overview

| Algorithm 1. Autonomous Exploration. | |
|---|---|
| Input: , RobotLocation, LidarData, ← ∅, ExplorationFlag ← True | |
| Output: Complete map of the environment | |
| 1 | while ExplorationFlag = True ∧ (ReplanTrigger = True ∨ TimingTrigger = True) do |
| 2 | DistanceMap ← UpdatingEuclideanDistanceMap(); |
| 3 | ← Frontier Detection and Maintenance(, , DistanceMap, RobotLocation, LidarData); |
| 4 | if = ∅ then |
| 5 | ExplorationFlag = False; |
| 6 | break; |
| 7 | end |
| 8 | OptimalPath ← Multiple paths planning and evaluation(, , DistanceMap, RobotLocation); |
| 9 | SmoothPath ← Smooth the path(, , DistanceMap, OptimalPath); |
| 10 | Publish SmoothPath to the path-tracking module; |
| 11 | end |
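The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch and not the authors' implementation. The five callables (`update_distance_map`, `detect_frontiers`, `plan_optimal_path`, `smooth_path`, `publish`) are hypothetical stand-ins for the modules named in the algorithm. The sketch assumes that the replan or timing trigger has fired before every cycle. `max_cycles` is an added safety bound that does not appear in the original.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Path = List[Point]


def explore(
    update_distance_map: Callable[[], object],
    detect_frontiers: Callable[[object], List[Point]],
    plan_optimal_path: Callable[[List[Point], object], Path],
    smooth_path: Callable[[Path, object], Path],
    publish: Callable[[Path], None],
    max_cycles: int = 10_000,
) -> int:
    """Run exploration cycles until the frontier set is empty.

    All callables are hypothetical stand-ins for the modules of Algorithm 1;
    the frontier warehouse is assumed to live inside `detect_frontiers`.
    Returns the number of cycles executed, including the final empty one.
    """
    cycles = 0
    exploration_flag = True
    while exploration_flag and cycles < max_cycles:
        cycles += 1
        distance_map = update_distance_map()                  # line 2
        frontiers = detect_frontiers(distance_map)            # line 3
        if not frontiers:                                     # lines 4-7: nothing left to explore
            exploration_flag = False
            break
        optimal = plan_optimal_path(frontiers, distance_map)  # line 8
        publish(smooth_path(optimal, distance_map))           # lines 9-10
    return cycles
```

Injecting the modules as callables keeps the loop testable with stubs. This is a design choice of the sketch, not something the paper prescribes.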

4.2. Frontier Detection and Maintenance

| Algorithm 2. Frontier Detection and Maintenance. | |
|---|---|
| Input: , RobotLocation, LidarData, , DistanceMap | |
| Output: | |
| 1 | ← ∅, ← ∅; |
| 2 | ActiveArea ← AcquireActiveArea(, RobotLocation, LidarData); |
| 3 | ← SearchFrontiersOnOGM(ActiveArea); |
| 4 | SetObstacle(RobotLocation, DistanceMap); |
| 5 | foreach frontier f in {, } do |
| 6 | if GetDistance(f) < safe dist ∨ (GetNearestObsCoord(f) = RobotLocation ∧ ‖f − RobotLocation‖ < ) ∨ GetInformationCost < InfoThreshold then |
| 7 | remove f; |
| 8 | end |
| 9 | end |
| 10 | ← AcquireFrontiersUsingLidarData(LidarData, RobotLocation); |
| 11 | foreach frontier f in do |
| 12 | if GetInformationCost < InfoThreshold then |
| 13 | remove f; |
| 14 | end |
| 15 | end |
| 16 | RemoveObstacle(RobotLocation, DistanceMap); |
| 17 | if {, , } = ∅ then |
| 18 | ← ∅; |
| 19 | return; |
| 20 | else |
| 21 | ← Clustering(, , ); |
| 22 | ← ∅, ← ; |
| 23 | end |
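The sketch below makes the filtering step of Algorithm 2 concrete. It first detects frontier cells on a small occupancy grid, using the classic definition of a free cell with an unknown 4-neighbour. It then removes frontiers that lie too close to an obstacle or expose too little unknown space. This is an illustration under stated assumptions, not the paper's implementation:

- Cell values follow the ROS `nav_msgs/OccupancyGrid` convention: -1 unknown, 0 free, 100 occupied.
- Obstacle distance is brute-forced rather than read from an incrementally updated distance map.
- `information_gain` counts unknown cells in a square window. It is a hypothetical proxy for the paper's `GetInformationCost`.
- The robot-location check and the lidar-frontier branch are omitted.

```python
import math
from typing import List, Tuple

UNKNOWN, FREE, OCCUPIED = -1, 0, 100  # assumed ROS-style occupancy values
Cell = Tuple[int, int]
Grid = List[List[int]]


def find_frontier_cells(grid: Grid) -> List[Cell]:
    """Return free cells that have at least one 4-connected unknown neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def obstacle_distance(grid: Grid, cell: Cell) -> float:
    """Brute-force Euclidean distance (in cells) to the nearest occupied cell."""
    r0, c0 = cell
    return min(
        (math.hypot(r - r0, c - c0)
         for r, row in enumerate(grid)
         for c, v in enumerate(row) if v == OCCUPIED),
        default=math.inf,
    )


def information_gain(grid: Grid, cell: Cell, radius: int) -> int:
    """Hypothetical information proxy: unknown cells in a (2*radius+1)^2 window."""
    r0, c0 = cell
    return sum(
        1
        for r in range(max(0, r0 - radius), min(len(grid), r0 + radius + 1))
        for c in range(max(0, c0 - radius), min(len(grid[0]), c0 + radius + 1))
        if grid[r][c] == UNKNOWN
    )


def filter_frontiers(grid: Grid, frontiers: List[Cell], safe_dist: float,
                     info_threshold: int, radius: int = 1) -> List[Cell]:
    """Keep frontiers that are far enough from obstacles and still informative."""
    return [f for f in frontiers
            if obstacle_distance(grid, f) >= safe_dist
            and information_gain(grid, f, radius) >= info_threshold]
```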

4.3. Multiple Paths Planning and Evaluation

4.3.1. Multiple Path Generation Using Fast Marching

4.3.2. Path Evaluation

4.3.3. Path Smoothing and Tracking

| Algorithm 3. Smooth the path. | |
|---|---|
| Input: , , DistanceMap, OptimalPath | |
| Output: SmoothPath | |
| 1 | OptTimes ← 0; |
| 2 | BsplinePath ← GenerateBspline(OptimalPath); |
| 3 | SmoothPath ← BsplinePathOptimization(BsplinePath); |
| 4 | while PathCheck(SmoothPath) ∧ OptTimes < MaxOptTimes do |
| 5 | OptTimes++; |
| 6 | ← (MaxOptTimes − OptTimes)/MaxOptTimes; |
| 7 | SmoothPath ← BsplinePathOptimization(BsplinePath); |
| 8 | end |
| 9 | if OptTimes = MaxOptTimes then |
| 10 | ← 0; |
| 11 | SmoothPath ← BsplinePathOptimization(BsplinePath); |
| 12 | end |
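Algorithm 3 starts from a B-spline through the optimal path. While the optimized spline fails the path check, it re-optimizes with a weight that decays linearly as (MaxOptTimes − OptTimes)/MaxOptTimes. The sketch below shows two ingredients under explicit assumptions:

- evaluation of a uniform cubic B-spline in its matrix form, the representation discussed by Qin;
- the retry loop of lines 1–8.

`optimize` and `path_unsafe` are hypothetical callables standing in for `BsplinePathOptimization` and `PathCheck`. The initial weight of 1.0 is an assumption. The fallback of lines 9–12 is left out.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]

# Uniform cubic B-spline basis matrix: P(t) = [1 t t^2 t^3] * BASIS * [p0 p1 p2 p3]^T / 6.
BASIS = (
    (1.0, 4.0, 1.0, 0.0),
    (-3.0, 0.0, 3.0, 0.0),
    (3.0, -6.0, 3.0, 0.0),
    (-1.0, 3.0, -3.0, 1.0),
)


def blend_weights(t: float) -> List[float]:
    """Weights of the four control points for local parameter t in [0, 1]."""
    powers = (1.0, t, t * t, t * t * t)
    return [sum(powers[i] * BASIS[i][j] for i in range(4)) / 6.0 for j in range(4)]


def bspline_point(ctrl: Sequence[Point], seg: int, t: float) -> Point:
    """Evaluate segment `seg`, which is shaped by ctrl[seg] .. ctrl[seg + 3]."""
    w = blend_weights(t)
    x = sum(w[j] * ctrl[seg + j][0] for j in range(4))
    y = sum(w[j] * ctrl[seg + j][1] for j in range(4))
    return (x, y)


def sample_bspline(ctrl: Sequence[Point], samples_per_seg: int = 10) -> List[Point]:
    """Sample every segment uniformly and append the end point of the curve."""
    n_seg = len(ctrl) - 3
    pts = [bspline_point(ctrl, s, k / samples_per_seg)
           for s in range(n_seg) for k in range(samples_per_seg)]
    pts.append(bspline_point(ctrl, n_seg - 1, 1.0))
    return pts


def optimize_with_decay(ctrl, optimize: Callable, path_unsafe: Callable,
                        max_opt_times: int = 5, initial_weight: float = 1.0):
    """Retry loop of Algorithm 3 (lines 1-8) with a linearly decaying weight."""
    weight = initial_weight
    smooth = optimize(ctrl, weight)
    opt_times = 0
    while path_unsafe(smooth) and opt_times < max_opt_times:
        opt_times += 1
        weight = (max_opt_times - opt_times) / max_opt_times
        smooth = optimize(ctrl, weight)
    return smooth, weight, opt_times
```

The blending weights sum to one for every t, so each curve point is a convex combination of four control points. This is why a cubic B-spline stays close to its control polygon.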

5. Experimental Research and Results

5.1. Experiment Setup

5.2. Performance Comparison in Simulation

5.2.1. The Autonomous Exploration Process

5.2.2. Performance Comparison and Result

5.3. Real-World Experiments

6. Discussion

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- [1] Ghaffari Jadidi, M.; Valls Miro, J.; Dissanayake, G. Sampling-based incremental information gathering with applications to robotic exploration and environmental monitoring. Int. J. Robot. Res. 2019, 38, 658–685.

- [2] Girdhar, Y.; Dudek, G. Modeling curiosity in a mobile robot for long-term autonomous exploration and monitoring. Auton. Robot. 2016, 40, 1267–1278.

- [3] Fentanes, J.P.; Gould, I.; Duckett, T.; Pearson, S.; Cielniak, G. 3-d soil compaction mapping through kriging-based exploration with a mobile robot. IEEE Robot. Autom. Lett. 2018, 3, 3066–3072.

- [4] Niroui, F.; Zhang, K.; Kashino, Z.; Nejat, G. Deep reinforcement learning robot for search and rescue applications: Exploration in unknown cluttered environments. IEEE Robot. Autom. Lett. 2019, 4, 610–617.

- [5] Basilico, N.; Amigoni, F. Exploration strategies based on multi-criteria decision making for searching environments in rescue operations. Auton. Robot. 2011, 31, 401–417.

- [6] Goian, A.; Ashour, R.; Ahmad, U.; Taha, T.; Almoosa, N.; Seneviratne, L. Victim Localization in USAR Scenario Exploiting Multi-Layer Mapping Structure. Remote Sens. 2019, 11, 2704.

- [7] Palomeras, N.; Hurtós, N.; Vidal, E.; Carreras, M. Autonomous exploration of complex underwater environments using a probabilistic next-best-view planner. IEEE Robot. Autom. Lett. 2019, 4, 1619–1625.

- [8] Palomeras, N.; Carreras, M.; Andrade-Cetto, J. Active SLAM for Autonomous Underwater Exploration. Remote Sens. 2019, 11, 2827.

- [9] Tu, Z.; Lou, Y.; Guo, W.; Song, W.; Wang, Y. Design and Validation of a Cascading Vector Tracking Loop in High Dynamic Environments. Remote Sens. 2021, 13, 2000.

- [10] Yang, Z.; Liu, H.; Qian, C.; Shu, B.; Zhang, L.; Xu, X.; Zhang, Y.; Lou, Y. Real-Time Estimation of Low Earth Orbit (LEO) Satellite Clock Based on Ground Tracking Stations. Remote Sens. 2020, 12, 2050.

- [11] Umari, H.; Mukhopadhyay, S. Autonomous Robotic Exploration Based on Multiple Rapidly-Exploring Randomized Trees. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1396–1402.

- [12] Keidar, M.; Kaminka, G.A. Efficient frontier detection for robot exploration. Int. J. Robot. Res. 2014, 33, 215–236.

- [13] Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97. Towards New Computational Principles for Robotics and Automation, Monterey, CA, USA, 10–11 July 1997; pp. 146–151.

- [14] Shapovalov, D.; Pereira, G.A.S. Tangle-Free Exploration with a Tethered Mobile Robot. Remote Sens. 2020, 12, 3858.

- [15] Bai, S.; Wang, J.; Chen, F.; Englot, B. Information-theoretic exploration with Bayesian optimization. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 1816–1822.

- [16] Julian, B.J.; Karaman, S.; Rus, D. On mutual information-based control of range sensing robots for mapping applications. Int. J. Robot. Res. 2014, 33, 1375–1392.

- [17] Wang, C.; Chi, W.; Sun, Y.; Meng, M.Q. Autonomous robotic exploration by incremental road map construction. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1720–1731.

- [18] Oršulić, J.; Miklić, D.; Kovačić, Z. Efficient dense frontier detection for 2-d graph slam based on occupancy grid submaps. IEEE Robot. Autom. Lett. 2019, 4, 3569–3576.

- [19] Senarathne, P.; Wang, D. Incremental algorithms for Safe and Reachable Frontier Detection for robot exploration. Robot. Auton. Syst. 2015, 72, 189–206.

- [20] Stachniss, C.; Grisetti, G.; Burgard, W. Information Gain-based Exploration Using Rao-Blackwellized Particle Filters. Robot. Sci. Syst. 2005, 2, 65–72.

- [21] Li, L.; Zuo, X.; Peng, H.; Yang, F.; Zhu, H.; Li, D.; Liu, J.; Su, F.; Liang, Y.; Zhou, G. Improving Autonomous Exploration Using Reduced Approximated Generalized Voronoi Graphs. J. Intell. Robot. Syst. 2020, 99, 91–113.

- [22] Wang, C.; Ma, H.; Chen, W.; Liu, L.; Meng, M.Q. Efficient Autonomous Exploration With Incrementally Built Topological Map in 3-D Environments. IEEE Trans. Instrum. Meas. 2020, 69, 9853–9865.

- [23] Gao, H.; Zhang, X.; Wen, J.; Yuan, J.; Fang, Y. Autonomous indoor exploration via polygon map construction and graph-based SLAM using directional endpoint features. IEEE Trans. Autom. Sci. Eng. 2018, 16, 1531–1542.

- [24] Sun, Z.; Wu, B.; Xu, C.; Sarma, S.E.; Yang, J.; Kong, H. Frontier Detection and Reachability Analysis for Efficient 2D Graph-SLAM Based Active Exploration. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020.

- [25] Qiao, W.; Fang, Z.; Si, B. A sampling-based multi-tree fusion algorithm for frontier detection. Int. J. Adv. Robot. Syst. 2019, 16, 1737022803.

- [26] LaValle, S.M. Rapidly-Exploring Random Trees: A New Tool for Path Planning; Iowa State University: Ames, IA, USA, 1998.

- [27] Sethian, J.A. Level Set Methods and Fast Marching Methods: Evolving Interfaces in Computational Geometry, Fluid Mechanics, Computer Vision, and Materials Science; Cambridge University Press: Cambridge, UK, 1999.

- [28] Gao, W.; Booker, M.; Adiwahono, A.; Yuan, M.; Wang, J.; Yun, Y.W. An improved frontier-based approach for autonomous exploration. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 292–297.

- [29] Fang, B.; Ding, J.; Wang, Z. Autonomous robotic exploration based on frontier point optimization and multistep path planning. IEEE Access 2019, 7, 46104–46113.

- [30] Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- [31] Lauri, M.; Ritala, R. Planning for robotic exploration based on forward simulation. Robot. Auton. Syst. 2016, 83, 15–31.

- [32] Ding, J.; Fang, Y. Multi-strategy based exploration for 3D mapping in unknown environments using a mobile robot. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 4732–4738.

- [33] Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon “next-best-view” planner for 3d exploration. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1462–1468.

- [34] Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894.

- [35] Pareekutty, N.; James, F.; Ravindran, B.; Shah, S.V. qRRT: Quality-Biased Incremental RRT for Optimal Motion Planning in Non-Holonomic Systems. arXiv 2021, arXiv:2101.02635.

- [36] Lai, T.; Ramos, F.; Francis, G. Balancing global exploration and local-connectivity exploitation with rapidly-exploring random disjointed-trees. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5537–5543.

- [37] Li, X.; Qiu, H.; Jia, S.; Gong, Y. Dynamic algorithm for safe and reachable frontier point generation for robot exploration. In Proceedings of the 2016 IEEE International Conference on Mechatronics and Automation, Harbin, China, 7–10 August 2016; pp. 2088–2093.

- [38] Gómez, J.V.; Lumbier, A.; Garrido, S.; Moreno, L. Planning robot formations with fast marching square including uncertainty conditions. Robot. Auton. Syst. 2013, 61, 137–152.

- [39] Valero-Gomez, A.; Gomez, J.V.; Garrido, S.; Moreno, L. The path to efficiency: Fast marching method for safer, more efficient mobile robot trajectories. IEEE Robot. Autom. Mag. 2013, 20, 111–120.

- [40] Sun, Y.; Zhang, C.; Liu, C. Collision-free and dynamically feasible trajectory planning for omnidirectional mobile robots using a novel B-spline based rapidly exploring random tree. Int. J. Adv. Robot. Syst. 2021, 18, 202721185.

- [41] Garrido, S.; Moreno, L.; Blanco, D. Exploration of 2D and 3D environments using Voronoi transform and fast marching method. J. Intell. Robot. Syst. 2009, 55, 55–80.

- [42] Usenko, V.; Von Stumberg, L.; Pangercic, A.; Cremers, D. Real-time trajectory replanning for MAVs using uniform B-splines and a 3D circular buffer. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 215–222.

- [43] Zhou, B.; Gao, F.; Wang, L.; Liu, C.; Shen, S. Robust and efficient quadrotor trajectory generation for fast autonomous flight. IEEE Robot. Autom. Lett. 2019, 4, 3529–3536.

- [44] Lau, B.; Sprunk, C.; Burgard, W. Improved updating of Euclidean distance maps and Voronoi diagrams. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 281–286.

- [45] Bresenham, J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30.

- [46] Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- [47] LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006.

- [48] Qin, K. General matrix representations for B-splines. Vis. Comput. 2000, 16, 177–186.

- [49] Kong, J.; Pfeiffer, M.; Schildbach, G.; Borrelli, F. Kinematic and dynamic vehicle models for autonomous driving control design. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Korea, 29 June–1 July 2015; pp. 1094–1099.

- [50] Gerkey, B.; Vaughan, R.T.; Howard, A. The player/stage project: Tools for multi-robot and distributed sensor systems. In Proceedings of the 11th International Conference on Advanced Robotics, Coimbra, Portugal, 30 June–3 July 2003; Volume 1, pp. 317–323.

- [51] Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34–46.

| Reference | Map Type: Occupancy Grid Map | Map Type: Topological Map | Map Type: Feature Map | Frontier Detection: Occupancy Grid Map | Frontier Detection: Lidar Data | Decision Making: Lidar Detection Range | Decision Making: Lidar Data Quality | Decision Making: Actual Path | Decision Making: Movement Consistency | Path Planning: Multiple Path Generation | Path Planning: Path Smoothing | Path Planning: Path Clearance | Experimental Scenario: Simulation | Experimental Scenario: Real-World |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [11] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [12] | √ | – | – | √ | √ | √ | – | – | – | – | – | – | √ | – |
| [13] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [15] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [17] | √ | √ | – | √ | – | √ | – | √ | – | √ | √ | – | √ | √ |
| [18] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [19] | √ | – | – | √ | – | √ | – | – | – | – | – | – | √ | – |
| [20] | √ | – | – | √ | – | – | – | √ | – | √ | – | – | √ | √ |
| [21] | √ | √ | – | √ | – | – | – | √ | – | – | – | – | √ | √ |
| [23] | – | – | √ | – | – | – | – | √ | – | √ | – | – | √ | √ |
| [24] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [25] | √ | – | – | √ | – | – | – | – | – | – | – | – | √ | √ |
| [37] | √ | √ | – | – | √ | – | – | √ | – | – | – | – | √ | √ |
| [28] | √ | – | – | √ | – | – | – | – | √ | – | – | – | √ | √ |
| [29] | √ | – | – | √ | – | – | – | – | – | √ | – | – | √ | √ |
| [41] | √ | – | – | √ | – | – | – | – | – | – | – | √ | √ | – |
| Our work | √ | – | – | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ |

| Symbol | Description |
|---|---|
| | Occupancy grid map. |
| | Single frontier. |
| | Frontier warehouse, which stores the frontiers obtained in each exploration cycle. |
| | Frontiers obtained from the active area of the occupancy grid map . |
| | Frontiers acquired from lidar data. |
| | Clustered frontiers. |
| | Frontiers with valid paths. |
| | Maximum angular deviation that can be followed. |
| , , | Coefficients of the utility function, where  depends only on the physical characteristics of the robot. |
| | Maximum lidar range. |
| | Confidence range ratio. |
| , | Path composed of waypoints . |
| | Distance function; returns the distance from any position on the map to the nearest obstacle. |
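The distance function is what the DistanceMap in Algorithms 1–3 holds. The reference list includes an incremental update scheme for Euclidean distance maps (Lau et al.), which presumably backs `UpdatingEuclideanDistanceMap`. The sketch below is a deliberately simple, non-incremental stand-in. It brute-forces the Euclidean distance from every cell to the nearest occupied cell. It assumes ROS-style cell values (100 = occupied) and a hypothetical `resolution` parameter in metres per cell. Its cost is O(cells × obstacles), so it only illustrates what the map contains.

```python
import math
from typing import List

OCCUPIED = 100  # assumed ROS-style value for an occupied cell


def euclidean_distance_map(grid: List[List[int]],
                           resolution: float = 1.0) -> List[List[float]]:
    """Distance from each cell to the nearest obstacle, scaled by `resolution`.

    Brute force, O(cells * obstacles); every cell is infinitely far away
    when the grid contains no obstacle.
    """
    obstacles = [(r, c) for r, row in enumerate(grid)
                 for c, v in enumerate(row) if v == OCCUPIED]
    return [
        [resolution * min((math.hypot(r - orow, c - ocol) for orow, ocol in obstacles),
                          default=math.inf)
         for c in range(len(row))]
        for r, row in enumerate(grid)
    ]
```

Under this representation, a query such as `GetDistance(f)` in Algorithm 2 reduces to indexing the returned array at the frontier's cell.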

| Method | Distance Avg (m) | Distance Std (m) | Distance Max (m) | Distance Min (m) | Time Avg (s) | Time Std (s) | Time Max (s) | Time Min (s) |
|---|---|---|---|---|---|---|---|---|
| Laboratory: 15 m × 15 m; lidar field of view 270°, max range 6 m | | | | | | | | |
| RRT-exploration (Geta = 4) | 86.1 | 6.9 | 97.0 | 75.7 | 346.3 | 27.3 | 410.0 | 313.5 |
| RRT-exploration (Geta = 6) | 80.9 | 5.1 | 90.4 | 75.1 | 366.6 | 71.6 | 515.5 | 293.0 |
| Nearest Frontier | 68.6 | 7.4 | 77.3 | 53.8 | 382.6 | 54.0 | 486.0 | 323.0 |
| Proposed (2,1) | 58.8 | 5.9 | 67.6 | 50.9 | 304.7 | 22.6 | 334.5 | 262.5 |
| Proposed (1,1) | 56.6 | 3.3 | 62.2 | 51.5 | 280.6 | 22.9 | 310.0 | 245.5 |
| Corridor: 20 m × 15 m; lidar field of view 360°, max range 8 m | | | | | | | | |
| RRT-exploration (Geta = 4) | 97.6 | 9.8 | 115.4 | 81.6 | 499.0 | 87.1 | 618.5 | 394.0 |
| RRT-exploration (Geta = 6) | 89.5 | 7.0 | 97.7 | 74.6 | 460.0 | 59.4 | 556.5 | 388.0 |
| Nearest Frontier | 88.2 | 5.6 | 94.7 | 77.3 | 430.8 | 33.4 | 497.5 | 369.0 |
| Proposed (2,1) | 77.9 | 2.4 | 82.6 | 74.1 | 346.2 | 24.2 | 398.5 | 316.0 |
| Proposed (1,1) | 80.9 | 3.3 | 85.6 | 75.0 | 336.6 | 15.9 | 360.0 | 301.0 |
| Office: 20 m × 20 m; lidar field of view 360°, max range 6 m | | | | | | | | |
| RRT-exploration (Geta = 4) | 132.3 | 17.9 | 163.8 | 109.4 | 517.6 | 73.7 | 656.0 | 462.0 |
| RRT-exploration (Geta = 6) | 129.5 | 18.1 | 146.4 | 96.0 | 535.2 | 64.5 | 619.0 | 442.0 |
| Nearest Frontier | 73.5 | 1.8 | 78.1 | 71.9 | 434.9 | 34.9 | 475.5 | 356.5 |
| Proposed (2,1) | 67.6 | 1.7 | 71.1 | 64.9 | 339.2 | 22.1 | 377.5 | 307.0 |
| Proposed (1,1) | 69.6 | 4.1 | 76.3 | 63.4 | 323.8 | 19.4 | 363.5 | 284.5 |
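The averages above can be turned into relative savings. The helper below computes the percentage reduction in average exploration distance and time of Proposed (1,1) relative to the Nearest Frontier baseline. The input values are copied from the table, so the snippet only rearranges reported numbers and adds no new measurements.

```python
from typing import Dict, Tuple

# (average distance in m, average time in s), copied from the simulation table.
NEAREST_FRONTIER = {"Laboratory": (68.6, 382.6), "Corridor": (88.2, 430.8), "Office": (73.5, 434.9)}
PROPOSED_1_1 = {"Laboratory": (56.6, 280.6), "Corridor": (80.9, 336.6), "Office": (69.6, 323.8)}


def reduction_pct(baseline: float, candidate: float) -> float:
    """Percentage by which `candidate` is lower than `baseline`, rounded to 0.1 %."""
    return round(100.0 * (baseline - candidate) / baseline, 1)


def compare(baseline: Dict[str, Tuple[float, float]],
            candidate: Dict[str, Tuple[float, float]]) -> Dict[str, Tuple[float, float]]:
    """Per environment: (distance reduction %, time reduction %)."""
    return {env: (reduction_pct(baseline[env][0], candidate[env][0]),
                  reduction_pct(baseline[env][1], candidate[env][1]))
            for env in baseline}


for env, (d, t) in compare(NEAREST_FRONTIER, PROPOSED_1_1).items():
    print(f"{env}: distance -{d} %, time -{t} %")
```

For the laboratory this gives 17.5 % less distance and 26.7 % less time. The time saving stays above 20 % in all three environments, while the distance saving is smaller in the corridor and the office.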

| Method | Time (ms) | Length (m) | Clearance (m) | Success Rate | Continuity |
|---|---|---|---|---|---|
| 1 | | | | | |
| RRT* | 89.5 | 26.7 | 1.0 | 100% | |
| Proposed Method | 54.9 | 27.0 | 1.2 | 100% | |
| 2 | | | | | |
| RRT* | 181.8 | 37.8 | 1.1 | 100% | |
| Proposed Method | 60.3 | 38.7 | 1.4 | 100% | |
| 3 | | | | | |
| RRT* | 332.7 | 34.0 | 0.9 | 98% | |
| Proposed Method | 56.8 | 34.3 | 1.1 | 100% | |
| 4 | | | | | |
| RRT* | 46.3 | 21.2 | 1.0 | 99% | |
| Proposed Method | 33.0 | 20.7 | 1.2 | 100% | |
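The planning-time column can be read as a speedup of the proposed method over RRT*. The snippet below divides the reported times scenario by scenario. The numbers come from the table, and nothing else is assumed.

```python
from typing import Dict, Tuple

# Planning time in ms per scenario: (RRT*, proposed method), copied from the table.
TIMES_MS: Dict[int, Tuple[float, float]] = {
    1: (89.5, 54.9), 2: (181.8, 60.3), 3: (332.7, 56.8), 4: (46.3, 33.0),
}


def speedups(times: Dict[int, Tuple[float, float]]) -> Dict[int, float]:
    """Ratio of RRT* time to proposed-method time, rounded to two decimals."""
    return {k: round(rrt / ours, 2) for k, (rrt, ours) in times.items()}


print(speedups(TIMES_MS))
```

The proposed method is roughly 1.4 to 5.9 times faster across the four scenarios. The largest gap is in scenario 3, which is also the only scenario where RRT* failed in some trials.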

| Method | Exploration Distance (m) | Exploration Time (s) | Completeness of the Mapping | Smoothness Comparison |
|---|---|---|---|---|
| Proposed (2,1) | | | | |
| 1 | 64.7 | 438.0 | 0.998 | 0.32 |
| 2 | 57.0 | 435.0 | 0.982 | 0.31 |
| 3 | 57.4 | 402.5 | 0.996 | 0.37 |
| Avg | 59.7 | 425.2 | 0.992 | 0.33 |
| Proposed (1,1) | | | | |
| 1 | 54.0 | 394.0 | 0.996 | 0.33 |
| 2 | 63.4 | 454.0 | 1.013 | 0.33 |
| 3 | 59.8 | 408.0 | 0.996 | 0.31 |
| Avg | 59.1 | 418.7 | 0.997 | 0.32 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, Y.; Zhang, C. Efficient and Safe Robotic Autonomous Environment Exploration Using Integrated Frontier Detection and Multiple Path Evaluation. Remote Sens. 2021, 13, 4881. https://doi.org/10.3390/rs13234881

Sun Y, Zhang C. Efficient and Safe Robotic Autonomous Environment Exploration Using Integrated Frontier Detection and Multiple Path Evaluation. Remote Sensing. 2021; 13(23):4881. https://doi.org/10.3390/rs13234881

Chicago/Turabian style: Sun, Yuxi, and Chengrui Zhang. 2021. "Efficient and Safe Robotic Autonomous Environment Exploration Using Integrated Frontier Detection and Multiple Path Evaluation" Remote Sensing 13, no. 23: 4881. https://doi.org/10.3390/rs13234881

APA style: Sun, Y., & Zhang, C. (2021). Efficient and Safe Robotic Autonomous Environment Exploration Using Integrated Frontier Detection and Multiple Path Evaluation. Remote Sensing, 13(23), 4881. https://doi.org/10.3390/rs13234881