Abstract

To pursue the development and validation of coupled fire-atmosphere models, the wildland fire modeling community needs validation data sets with high temporal and spatial resolution, covering scenarios where fire-induced winds influence fire front behavior. Helicopter-borne infrared thermal cameras have the potential to monitor landscape-scale wildland fires at high resolution during experimental burns. Extracting valuable information from these observations requires three image processing steps: (a) orthorectification, to warp raw images onto a fixed coordinate system grid; (b) segmentation, to delineate the fire front location in the orthorectified images; and (c) computation of fire behavior metrics, such as the rate of spread, from the time-evolving fire front location. This work is dedicated to the first step, orthorectification, and presents a series of algorithms designed to process handheld helicopter-borne thermal images collected during savannah experimental burns. The novelty of the approach lies in its recursive design, which does not require fixed ground control points. This relaxes the constraint on field of view coverage and facilitates the acquisition of high-frequency observations. For four burns ranging from four to eight hectares, long-wave and mid-infrared images were collected at 1 and 3 Hz, respectively. They were orthorectified at a high spatial resolution (<1 m), with an absolute error estimated to be below 4 m. Subsequent computation of fire radiative power is discussed and compared with concurrent space-borne measurements.

1. Introduction

High-resolution active fire monitoring and the associated fire behavior metrics are growing needs in the fire science community, in particular for the development of coupled fire-atmosphere systems [1]. To support these efforts, we present a new approach to process Infra Red (IR) observations of landscape-scale (>100 m) experimental fires, specifically helicopter-borne images collected by handheld Long Wave Infra Red (LWIR) and Middle Infra Red (MIR) cameras. This monitoring system makes it possible to extract far more spatial and temporal detail than traditional overpasses by a fixed-wing survey airplane.

2. Background

Global wildfire activity shows signs of a decreasing trend over the last two decades [2]. Its health and societal impact was recently estimated from fine particulate matter emissions [3]. However, its environmental impact is still difficult to estimate because few global data sets are available, and its economic impact lacks the data needed for a quantitative assessment [4]. One clear trend is that wildfire activity has increased over the same period in fire-prone regions such as western North America or south-eastern Australia [2,5]. This increase is caused by severe drought resulting from climate change [6] and by increasing human density in the Wildland-Urban Interface (WUI) [4]. To better mitigate wildfire effects in fire-prone regions, coupled fire-atmosphere systems have been developed and are now intended to become operational [7]. These coupled systems include CAWFE [8], WRF-SFIRE [9,10], and MesoNH-ForeFire [11,12].

To pursue this model validation and development effort, we need data sets that simultaneously monitor three components:

- the fire front (heat release rate, geometry, propagation);
- plume dynamics (convective flux, geometry);
- atmospheric state (ambient profile).

Ideally, these data sets cover a complex scenario where fire-atmosphere interactions influence the fire front dynamics, with a sampling rate fast enough to capture the coupled system dynamics [13]. Several field campaigns have been designed with this intention, but unfortunately they yielded limited data on fire behavior.

In the RxCADRE experiment [14], burns were set in heterogeneous vegetation with complex ignition patterns, which makes them difficult to monitor without fast-return observations. The return time of the fixed-wing airplane operating the thermal camera could be as long as 1 min [15]. According to on-site ground measurements of Rate Of Spread (ROS) [16], the fire could spread by up to 25 m during that time interval (i.e., a ROS of about 0.4 m s⁻¹). The maximum recorded fire front depth was only 3 m [16]. This means that, between two observations, a fire front could spread over a distance more than eight times its depth. If the fire was propagating through heterogeneous vegetation, it would almost certainly look completely different at the new observation time than at the previous one. The need for high-frequency airborne observations is also reported in the detailed analysis of [17] from a pine stand experimental burn campaign.

More recently, in the FireFlux II experiment, a large homogeneous grass fire [18], thermal images were used to monitor the fire front from a low vantage point. Flame distortion effects were therefore too strong to accurately estimate fire front geometry and fire activity over the whole fire duration. The RxCADRE and FireFlux II examples highlight the difficulty of acquiring informative data for the different components of coupled fire-atmosphere models.

Landscape-scale fire behavior monitoring is, however, achievable with a handheld IR thermal imager operated from a hovering helicopter. Such a platform can acquire high-frequency observations from a near-nadir viewpoint at a relatively low cost compared to survey aircraft. Both LWIR [19,20] and MIR [21] imagers have been used to collect IR images of burns of several hectares at high spatial (on the order of 1 m) and temporal (on the order of 1 s) resolution. Such images were used to compute comprehensive fire behavior metrics, e.g., ROS [19,21], Fire Intensity (FI) [19], and fire radiative heat flux (Fire Radiative Power, FRP [21]). Hovering Unmanned Aerial Vehicle (UAV) platforms are also promising for prescribed and experimental burns. However, their use for active burn monitoring is still restricted to small burns (on the order of 10 m) [22,23,24], which makes present UAV applications to landscape-scale monitoring difficult.

To extract fire metrics from the collected IR observations, image processing is required and can be divided into three steps:

- orthorectification, which consists of warping the raw images onto a fixed coordinate system grid to correct for camera lens distortion and for perspective effects induced by camera orientation and terrain;
- segmentation, which involves delineating the fire front location in each orthorectified image;
- computation of fire behavior metrics (e.g., ROS) from consecutive fire front locations.

One main difficulty with images collected by a handheld imager is that the camera is usually not coupled with an inertial measurement unit, as it is in survey aircraft [15]. Therefore, images contain no information on the camera position and orientation (hereafter named camera pose), which makes their orthorectification challenging. For example, in [19,25,26], each image is orthorectified using manually selected pixel locations of known geographic position (i.e., Ground Control Points, GCPs). At least four GCPs are required per image [27]. GCPs are commonly set with fires lit at the corners of the burn plot [19,21,25]; hereafter, these GCPs are named corner fires.

The corner fire approach comes with several constraints. Every image in which fewer than four corner fires are visible or identifiable has to be discarded. This makes high-frequency observation acquisition more challenging, because a camera operated from a hovering helicopter can be difficult to control when the aircraft is subject to atmospheric turbulence, potentially enhanced by the fire. It also implies that the burn plot must be set up with hot corner fires prior to ignition, imposing management constraints on the fire crew.
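The four-GCP minimum follows from the homography model. Each GCP correspondence gives two linear equations, and a homography has eight degrees of freedom. The sketch below is an illustration of this reasoning using the standard Direct Linear Transform (DLT), not the procedure of [19,25,26]. It assumes ideal, distortion-free pixel coordinates, and the function names are hypothetical.

```python
import numpy as np

def homography_from_gcps(pixels, ground):
    """Estimate the 3x3 homography H mapping pixel (u, v) to ground (x, y)
    with the Direct Linear Transform; needs at least four non-collinear GCPs."""
    pixels = np.asarray(pixels, dtype=float)
    ground = np.asarray(ground, dtype=float)
    if len(pixels) < 4 or len(pixels) != len(ground):
        raise ValueError("need at least four matching GCPs")
    rows = []
    for (u, v), (x, y) in zip(pixels, ground):
        # x = (h11 u + h12 v + h13) / (h31 u + h32 v + h33), same for y:
        # each GCP therefore yields two equations linear in the 9 entries of H.
        rows.append([-u, -v, -1, 0, 0, 0, x * u, x * v, x])
        rows.append([0, 0, 0, -u, -v, -1, y * u, y * v, y])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, points):
    """Map an (N, 2) array of points through h, dividing by the homogeneous term."""
    pts = np.asarray(points, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]
```

With exactly four GCPs the system is fully determined, so the corners map back exactly. For real data, coordinate normalization (Hartley) or OpenCV's `cv2.findHomography` would be the more robust choice.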

Developing a methodology to orthorectify (step a) a large number of IR fire images collected at high frequency from a moving platform is a current need of the fire science community [23]. A recent work [28] made a first attempt to stabilize (image-to-image registration) a time series of IR images. However, that methodology has limitations, since it only performs well for images recorded from a stable vantage point (see the drift in all footage of the Supplementary Material of [28]).

3. Objectives

This work is part of an effort to simplify IR monitoring during landscape-scale experimental burns. The objective is to compute fire behavior metrics from MIR and LWIR observations collected by high-frequency imagers that provide no information on camera location and orientation. In continuity with the work of [21], we design and evaluate a method to orthorectify landscape-scale experimental burn observations. The constraint on corner fires is removed: there is no need for fixed GCPs present during the whole fire duration. However, a prepared scene is still required (the final burnt area needs to be delimited), as is a burning plot with constant-slope terrain.

This article is structured as follows. Section 4 presents the available data. Section 5 gives an overview of the orthorectification algorithms and their limitations. Section 6 shows the algorithm results. Finally, Section 7 discusses orthorectification accuracy and an application to compute FRP and its time integral, the Fire Radiative Energy (FRE). The Supplementary Material provides a detailed description of the image processing algorithms. Tables, figures, and equations from the Supplementary Material are referenced with an S prefix (e.g., Figure S4).

4. Experimental Burn Data

Data were collected in late August 2014 during a series of four experimental burns conducted in Kruger National Park (KNP, South Africa) at a time around the peak of the region’s fire season (Table 1).

Table 1.

Summary of the four KNP14 experimental burns. Each burn is named after the string of plots it belongs to and its number within that string. There are 16 strings in KNP, which account for as many replicates in the experimental burn plot trial initiated in 1954 [29]. The table entry “σ terrain elevation” is the standard deviation of the difference between the plane surface approximation and the terrain model from the NASA Shuttle Radar Topography Mission [30]. All plots are shown at the same scale.

Each fire was conducted in one of the long-term experimental burn plots (<8 ha) covered with savannah-type vegetation [31]. The plots are well approximated by a plane surface Digital Elevation Model (DEM). This is shown by the standard deviation of the plane surface approximation to the DEM from the NASA Shuttle Radar Topography Mission [30] (“σ terrain elevation” in Table 1). Following the KNP plot nomenclature, the four fires are named Shabeni1, Shabeni3, Skukuza4, and Skukuza6.

Fuel load and moisture were estimated with pre-fire in situ destructive sampling (Table 1). Whilst there were many standing trees on each plot (e.g., Combretum, Sclerocarya birrea, and Terminalia sericea), the fire appeared to leave them mostly unaffected. Grasses (which can exceed 1 m in height) made up the bulk of the consumed fuel. Further plot descriptions from previous KNP field campaigns can be found in [32], who focused on pre-/post-fire reflectance simulations, and in [33], who developed an emission factor measurement method.

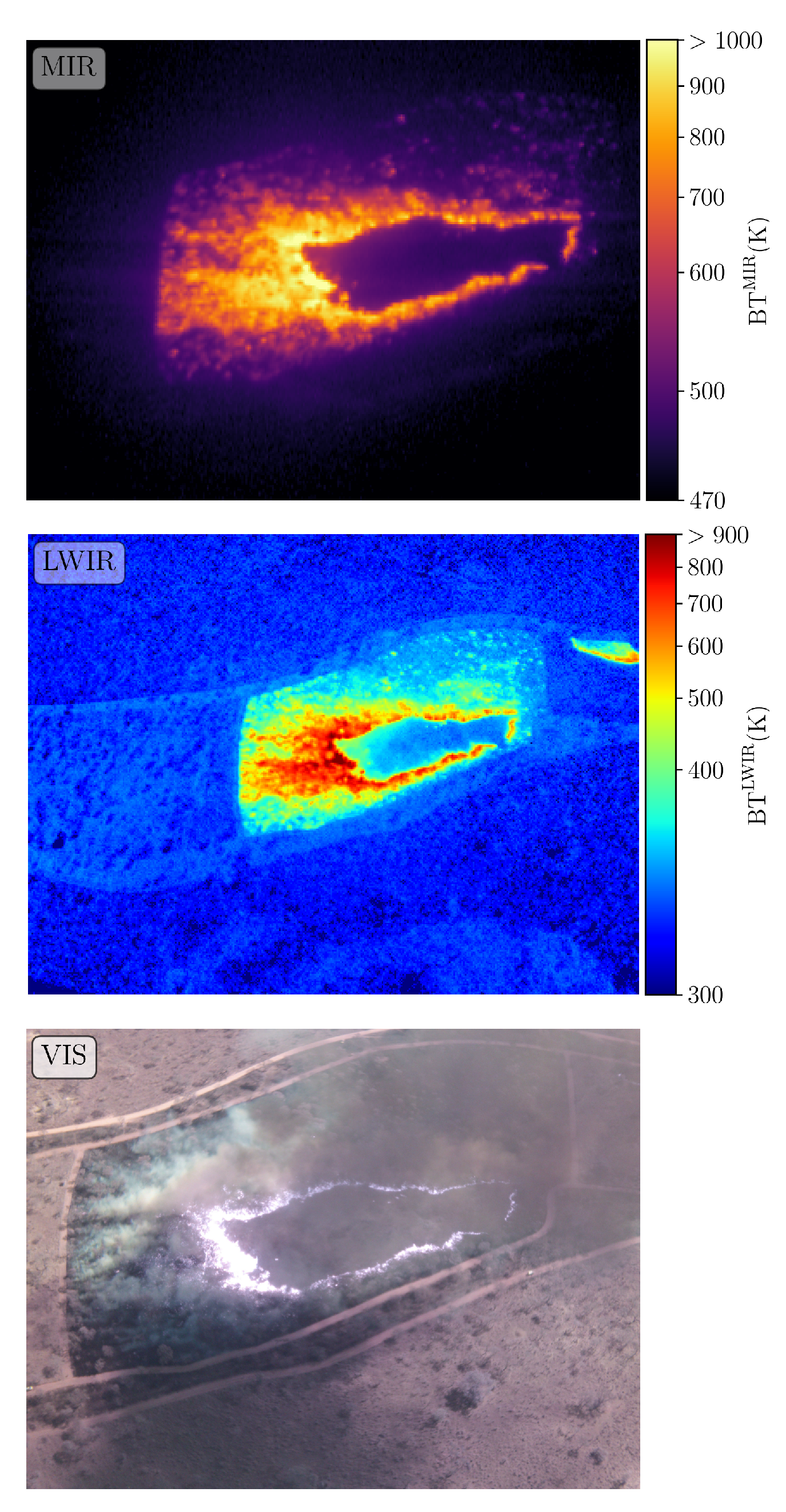

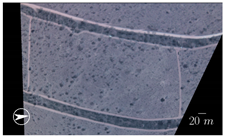

Each burn plot is first ignited with a backfire to create a fire break on the downwind side (see backfire examples in Table 1 for Skukuza4 and Shabeni1). Headfire ignition follows a few minutes later on the upwind side of the plot. During each burn, radiative energy emissions from the burning fuel were assessed using two IR thermal imaging cameras: one operating in the LWIR (Optris PI 400) and the other in the MIR (FLIR Agema 550). A third camera operating in the visible (VIS, GoPro Hero 2 with no IR filter) was set on the same portable mount as the IR cameras. It is used for qualitative purposes only, to link the observed IR fire activity to basic visual features (flame location, plume development). The orthorectification of its images is therefore not discussed in this paper. Figure 1 shows an example of concurrent VIS, LWIR, and MIR camera observations around the peak activity of the Shabeni1 burn.

Figure 1.

Example of raw images captured by the three cameras operated during the KNP14 field campaign: visible (VIS), Long Wave Infra Red (LWIR), and Middle Infra Red (MIR). The concurrent images were collected at s after ignition of the Shabeni1 burn. Camera specifications are reported in Table 2.

An important difference between the two IR cameras is their operating temperature range (Table 2). The Optris PI 400 camera can record LWIR Brightness Temperature (BT) from the background temperature (290 K) up to temperatures typical of a fire scene (600–1000 K). In contrast, the Agema 550 camera, when set up to monitor a temperature range above 600 K, cannot sense the MIR background BT [21].

Table 2.

Specifications of the cameras used to monitor the KNP14 experimental burns. The three cameras were operated simultaneously, handheld on the same mount, from a helicopter hovering above the plot and viewing the fire off-nadir.

The limited plot size (<8 ha) allows for a hovering altitude of 600 m, which makes it possible to orthorectify IR images at 1-m spatial resolution (Section S1.1). Skukuza4, a smaller plot, was monitored from a lower altitude and hence orthorectified at a higher resolution of 50 cm. The data set built up during this field work is named KNP14.
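The link between hovering altitude and achievable resolution can be checked with a flat-terrain pinhole approximation. One pixel's ground footprint is roughly the altitude times the pixel's angular size (IFOV). Off nadir, the footprint along the look direction grows roughly as 1/cos² of the view angle. In the sketch below, the 38° field of view and 382-pixel width are hypothetical example values, not the Table 2 specifications, and the function name is illustrative.

```python
import math

def pixel_footprint_m(altitude_m, fov_deg, n_pixels, off_nadir_deg=0.0):
    """Approximate ground size (m) of one pixel over flat terrain.

    ifov = FOV / n_pixels is the angular size of a pixel (small-angle
    approximation). On flat ground, x = H * tan(theta), so dx/dtheta = H / cos^2(theta):
    the along-look footprint grows as 1/cos^2 of the off-nadir angle."""
    ifov = math.radians(fov_deg) / n_pixels
    return altitude_m * ifov / math.cos(math.radians(off_nadir_deg)) ** 2

# Hypothetical camera: 38 deg horizontal field of view over 382 columns.
for altitude in (600.0, 300.0):
    print(altitude, round(pixel_footprint_m(altitude, 38.0, 382), 3))
```

Under these assumed values, a 600-m hover gives a footprint of about 1 m at nadir, and halving the altitude halves the footprint. This is the trade-off behind the finer grid used for Skukuza4.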

During each burn, the helicopter pilot tried to hover at the same location near the plot edge as much as possible. Meanwhile, the camera operator tried to keep the full extent of the burning plot within the MIR camera field of view (the MIR camera has the smallest field of view of the three cameras, Table 2). The resulting data set consists of three image sequences from the LWIR, MIR, and VIS cameras, collected at different frame rates (1, 3, and 1 Hz, respectively). The three cameras are not time-synchronized, and two of them (LWIR and VIS) had an unstable frame rate. Therefore, warping transformations derived for one camera are not directly applicable to the other cameras. Another constraint of the KNP14 data set is the lack of detailed camera calibration. Using a pinhole model, we only have prior knowledge of the intrinsic camera matrix, not of the associated distortion coefficients. The implications are discussed in Section 6; Section 5 assumes that the camera calibration is known.

For each burn, the ignition time was chosen to match a satellite overpass. This provided observations from fire-dedicated sensors, and in particular FRP measurements (Table 1). Two sensors were targeted:

- the Visible Infrared Imaging Radiometer Suite (VIIRS) aboard the joint NASA/NOAA Suomi National Polar-orbiting Partnership (Suomi NPP) spacecraft, with two active fire products at 375-m and 750-m resolution [34];
- the experimental TET-1 (Technologie Erprobungs Träger-1) micro-satellite developed by DLR, with a 178-m resolution [35].

At the time of the experiment, these two sensors delivered the highest-resolution active fire satellite products. Section 7.3 compares FRP computed from the space-borne observations with FRP derived from the orthorectified helicopter-borne images.

5. Orthorectification Algorithms

This section presents the suite of algorithms designed to orthorectify the LWIR and MIR images (Section 4). Orthorectification is the process of taking an image in its original geometry and warping it onto a coordinate system (i.e., a fixed grid). This process corrects for distortions due to perspective, terrain variations, and camera lens effects. Our approach comes with two main constraints: the terrain is limited to a plane surface, and the camera needs to keep a nearly constant line of sight (see Section S1.1 for more details).

There are three main orthorectification issues with the KNP14 data set:

- camera locations are not fixed;
- relative camera poses are unknown because of the unsynchronized camera frame rates;
- the observed scene is constantly modified as the fire propagates (presence of smoke, and change of vegetation from unburnt to burnt).

These issues imply that each image requires its own warping transformation. They also imply that only images acquired close in time can be matched, with the acceptable time lapse depending on the fire dynamics and/or the ability of the helicopter to hover.

The cameras used in this experiment differ in their ability to sense the background scene. We therefore discuss separately the algorithms developed for the LWIR camera (which senses both the background and the fire) and for the MIR camera (which can only record above 470 K).

5.1. Algorithms for Long Wave Infra Red

To orthorectify time series of near-nadir LWIR images, the following procedure is proposed. First, orthorectify the first image onto a coordinate system using manually selected GCPs, which yields its orthorectification homography. Then recursively align each subsequent image with a reference image picked from the set of previously processed images.

Assume a constant-slope terrain (plane surface DEM) and a pinhole camera model. Under these assumptions, both the initial orthorectification and the image alignment can be formulated as linear matrix transformations, i.e., homography matrices (Section S1.1). A homography fully describes the warping between planar surfaces. It yields one matrix for the orthorectification of the first image and one matrix for the alignment of each subsequent image p with its reference image. Finally, the recursive image alignment propagates the initial orthorectification to the pth image and thereby gives its orthorectification homography.
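The propagation step above amounts to multiplying homographies. Suppose H_r maps an already processed reference image r to the ground grid, and A_p maps image p onto image r. Then H_r A_p maps image p to the ground. The sketch below assumes this notation and the dictionary layout, which are ours, not the paper's. It also shows why misalignments accumulate along the chain: every alignment error enters the product.

```python
import numpy as np

def propagate_orthorectification(h_first, alignments):
    """Chain homographies to orthorectify every image of a sequence.

    h_first:    3x3 homography mapping image 0 pixels to the ground grid.
    alignments: {p: (r, A)} where A maps pixels of image p onto an already
                processed reference image r (r < p).
    Returns {p: H_p}, with H_p mapping image p pixels to the ground grid."""
    ortho = {0: np.asarray(h_first, dtype=float)}
    for p in sorted(alignments):
        r, a = alignments[p]
        h = ortho[r] @ np.asarray(a, dtype=float)   # image p -> image r -> ground
        ortho[p] = h / h[2, 2]                      # fix the projective scale
    return ortho

def translation(dx, dy):
    """Pure pixel translation, used here as a toy alignment homography."""
    return np.array([[1.0, 0.0, dx], [0.0, 1.0, dy], [0.0, 0.0, 1.0]])
```

For example, with a 0.5 m/pixel first orthorectification and two successive 10-pixel and 5-pixel shifts, pixel (0, 0) of image 2 lands 7.5 m from the origin.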

The constantly changing fire scene requires a moving reference image. If the reference image is changed too frequently, the stabilization of moving features may introduce unwanted drift. This is probably why the methodology of [28] shows drift, as its stabilization is performed on consecutive images. Conversely, if the reference image is updated too slowly, image differences caused by fire evolution or view angle changes degrade the alignment performance. The main challenge is therefore to update the moving reference image while limiting the error accumulation inevitably caused by slight image misalignments.

During the fire, three different areas can be identified in the fire scene:

- the flaming area, located just behind the front, where flaming combustion is active;
- the cooling area, located behind the flaming area and made of cooling ground and residual smoldering combustion;
- the plume area, mostly located ahead of the flaming zone but overlapping the flaming area and potentially extending over smoldering spots of the cooling area.

From an image alignment perspective, the flaming and plume areas are problematic. The front and plume can move with only minor changes in appearance between consecutive images, which creates potential outlier features. Conversely, the cooling area is useful because it is composed of fixed features. Their appearance and/or temperature change over a much longer time scale (typically several minutes) than the camera frame interval (1 s or less).

To minimize effects from the plume and the front, the projection is computed in two steps. First, an alignment using a mask over the burning plot focuses on the background scene and provides an initial guess of the projection (Algorithm 1). Second, the projection is optimized using cooling area information (Algorithm 2).

5.1.1. Algorithm 1

Algorithm 1 is summarized below, and the calibration of its control parameters is discussed in Section 6.1. The algorithm relies on the following elements:

- The plot mask P, projected onto the perspective of the current image.
- A mask associated with each image, designed to emphasize steady features and hereafter named the steady area mask. It masks fire pixels using a conservative fixed temperature threshold (420 K), as well as undesirable foreground features such as the helicopter skid (Section S1.2).
- Image similarity, measured with the 2D correlation defined in [36] (Equation (S3)) and with the Structural Similarity Index Metric (SSIM) [37].
- The set of image indices whose correlation with their matching reference image exceeded a threshold, i.e., images aligned with a “good” alignment flag.
- The set of image indices used as reference images.

The rule for updating the reference image (i.e., the first step in the loop of Algorithm 1) is central to the algorithm stability. The update is triggered by the trend of the correlation along the iterations: a new reference image is chosen when the correlation remains below a threshold for four iterations. The new reference is then selected among the images with a “good” flag that satisfy two conditions: their correlation is greater than a second threshold, and they are older than the current image by at least a minimum time lapse (in seconds).

Algorithm 1: Iterative orthorectification using the LWIR image background

1. Orthorectify the first image manually.
2. For each subsequent image:
   1. update the reference image;
   2. apply feature- and area-based alignment (see step 5 in Figure S4);
   3. project onto the current image perspective;
   4. monitor algorithm stability (2D correlation);
   5. compute the orthorectification;
   6. assess performance (SSIM);
   7. if the correlation exceeds the threshold, set the “good” quality flag.
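The reference update rule described above can be sketched as two small functions. This is a hypothetical reading, not the authors' code. The age condition is interpreted relative to the current image, because the original symbol is not recoverable. Choosing the most recent eligible image is an added assumption. The function names and threshold arguments are illustrative.

```python
def should_update_reference(corr_history, threshold, patience=4):
    """True when the last `patience` correlations with the current reference
    all stayed below `threshold` (the four-iteration trigger in the text)."""
    recent = list(corr_history)[-patience:]
    return len(recent) == patience and all(c < threshold for c in recent)


def pick_new_reference(good_images, t_current, corr_min, min_age_s):
    """Choose a new reference among images flagged 'good'.

    good_images: iterable of (index, time_s, corr) tuples.
    Eligible images have corr > corr_min and are at least min_age_s older
    than the current image. Assumption: the most recent eligible image wins.
    Returns None when no image qualifies."""
    eligible = [(t, idx) for idx, t, c in good_images
                if c > corr_min and t_current - t >= min_age_s]
    return max(eligible)[1] if eligible else None
```

Requiring a minimum age keeps the reference from following the stream image by image, which is the drift mechanism discussed for [28].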

Following [38,39], the alignment homography is built by combining two methods. The first is a feature-based method, the pyramidal implementation of the Lucas–Kanade feature tracker (PyLkOpt) [40], which uses the last processed images as templates. The second is a multi-resolution area-based method, the Enhanced Correlation Coefficient (ECC) [36], which uses the reference image as template. See Section S1.2 for more details on these two methods.

According to [41], Normalized Mutual Information (NMI) and 2D correlation are good image similarity metrics for assessing the registration quality of LWIR images in the context of airborne fire observations. In this work, the 2D correlation was selected to monitor algorithm stability, because it is inherent to the area-based alignment method ECC (see Equation (S5)).
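A zero-mean normalized 2D correlation restricted to unmasked pixels is sketched below. This is the quantity that ECC maximizes. The exact form of Equation (S3) is not reproduced in this section, so this standard definition is an assumption, and the function name is illustrative.

```python
import numpy as np

def masked_correlation(img_a, img_b, mask=None):
    """Zero-mean normalized correlation between two equally sized images,
    computed only where mask is True (e.g., with fire pixels masked out).
    Returns a value in [-1, 1]; 1 means identical up to gain and offset."""
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    if mask is None:
        mask = np.ones(a.shape, dtype=bool)
    a = a[mask] - a[mask].mean()
    b = b[mask] - b[mask].mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0
```

Because the metric is invariant to a linear change of brightness, a slowly cooling background does not lower it, as long as the spatial pattern is preserved.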

One additional image similarity metric, SSIM, is introduced to assess orthorectification quality independently of the 2D correlation. Note that the application of SSIM to fire observations has not yet been discussed in the literature [41]. SSIM is chosen here for its ability to estimate local matching between images from a combination of intensity, contrast, and structure within a window around each pixel [37]. Compared with 2D correlation, SSIM has the advantage of not being biased by maximum-to-maximum alignment. SSIM is only computed on orthorectified images, so that every pixel has the same weight in per-image statistics. The metric used to assess orthorectification quality is thus defined as a percentile of the SSIM similarity map. The map uses a neighborhood window 20 m in size, so that it includes the vegetation structure (e.g., trees, bushes). This metric is used for the result analysis in Section 6.
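The quality metric described above can be sketched with plain numpy. The local SSIM map uses the usual constants of Wang et al. (K1 = 0.01, K2 = 0.03). It is computed with a uniform window, whereas the original SSIM uses a Gaussian one. The 20-m window is converted to pixels from the grid resolution, and the map is reduced to a percentile. The uniform window, the default percentile, and all function names are assumptions, since the percentile value used in the paper is not preserved in this section.

```python
import numpy as np

def box_mean(img, w):
    """Mean over a w x w window (w odd), using reflected padding and an integral image."""
    r = w // 2
    p = np.pad(np.asarray(img, dtype=float), r, mode="reflect")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    s = c[w:, w:] - c[:-w, w:] - c[w:, :-w] + c[:-w, :-w]
    return s / (w * w)

def ssim_map(x, y, w, data_range):
    """Local SSIM (luminance, contrast, structure) with a uniform w x w window."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = box_mean(x, w), box_mean(y, w)
    vx = box_mean(x * x, w) - mx * mx
    vy = box_mean(y * y, w) - my * my
    cxy = box_mean(x * y, w) - mx * my
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))

def ortho_quality(x, y, resolution_m, percentile=50.0, window_m=20.0):
    """Percentile of the SSIM map of two orthorectified images on the same grid."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    w = int(round(window_m / resolution_m)) | 1          # window in pixels, forced odd
    data_range = max(x.max(), y.max()) - min(x.min(), y.min()) or 1.0
    return float(np.percentile(ssim_map(x, y, w, data_range), percentile))
```

On a 1-m grid the window is 21 pixels, and on the 50-cm Skukuza4 grid it is 41 pixels, so the same physical neighborhood is compared. The `skimage.metrics.structural_similarity` function offers a tested alternative for the SSIM map itself.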

5.1.2. Algorithm 2

The second optimization step requires a dynamic mask that removes the plume and the front from the alignment procedure. The mask has two components: a fire front delimitation that masks out the flaming and plume areas, and a filter that emphasizes feature contours in the cooling area (Section S2.1).

The fire front delimitation is based on two elements:

- a fire front arrival time map, set with a fixed conservative temperature threshold (the fire reaches a pixel when its BT exceeds the threshold);
- a constant flaming residence time.

Both parameters are adjusted for each burn. The threshold depends on the orthorectified image resolution, and the residence time depends on the front depth variability over the fire duration. At the acquisition time of each image, the mask is then defined by comparing the time elapsed since front arrival with the flaming residence time.

The feature filter applies a local normalization to a BT image to emphasize feature contours in the cooling area (Section S2.1). This filter, hereafter named local normalization, requires a window size as input.
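A minimal sketch of the arrival time map and of the resulting cooling area mask follows. The exact inequality defining the mask is not recoverable from this section. The sketch therefore assumes that a pixel belongs to the cooling area once more than one flaming residence time has elapsed since front arrival. Pixels not yet reached (the plume side) and still-flaming pixels are masked out. The function names and the NaN convention are illustrative.

```python
import numpy as np

def update_arrival_time(arrival, bt_image, t, bt_threshold):
    """Set the arrival time to t for pixels whose BT exceeds bt_threshold for
    the first time. NaN marks pixels the front has not reached yet."""
    arrival = np.array(arrival, dtype=float, copy=True)
    newly_reached = np.isnan(arrival) & (np.asarray(bt_image) > bt_threshold)
    arrival[newly_reached] = t
    return arrival

def cooling_area_mask(arrival, t, residence_time):
    """True where the front passed more than residence_time ago (assumed cooling
    area). Unreached pixels and pixels still within the flaming window are False."""
    reached = ~np.isnan(arrival)
    elapsed = np.where(reached, t - np.nan_to_num(arrival), -np.inf)
    return reached & (elapsed > residence_time)
```

Because the arrival map is updated image by image as Algorithm 2 proceeds, the mask follows the front without any per-image manual input.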

As in Algorithm 1, the projection is optimized recursively with a combination of feature- and area-based alignments (see the alignment function defined in Section S2.1). The 10 previous images are used as reference templates. Algorithm 2 is summarized below.

Algorithm 2: Recursive optimization of LWIR alignment

1. For each image:
   1. update the reference images;
   2. build the cooling area mask;
   3. emphasize cooling area features (local normalization);
   4. align (see steps 4 and 5 in Figure S6);
   5. adjust the projection;
   6. update the arrival time map.

As in Algorithm 1, a steady area mask is defined. It is applied to the orthorectified image. Fire pixels are filtered with a temperature threshold adjusted for each fire to better suit its intensity. The mask also removes the parts of the template image where the previous orthorectification quality was assessed as low. Such low quality can be caused, for example, by the unexpected appearance of the plume or the active fire front. Pixels are masked out when their local SSIM, averaged over the last 20 images, drops below a threshold that also depends on the burn.
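The steady area mask described above combines a fire pixel threshold with a rolling average of local SSIM maps. The sketch below keeps the last 20 maps in a bounded queue. The class name and the ≤ / ≥ conventions at the thresholds are assumptions, and the threshold values are left to the caller since they are burn-specific.

```python
from collections import deque

import numpy as np

class SteadyAreaMask:
    """Rolling steady area mask: drops fire pixels and pixels whose local SSIM,
    averaged over the last n images, falls below a burn-specific threshold."""

    def __init__(self, ssim_threshold, n=20):
        self.ssim_threshold = ssim_threshold
        self.history = deque(maxlen=n)   # oldest SSIM map is discarded automatically

    def update(self, ssim_map):
        """Store the local SSIM map of the latest orthorectified image."""
        self.history.append(np.asarray(ssim_map, dtype=float))

    def mask(self, bt_image, bt_threshold):
        """True for pixels kept for alignment."""
        keep = np.asarray(bt_image, dtype=float) <= bt_threshold        # remove fire pixels
        if self.history:
            mean_ssim = np.mean(np.stack(list(self.history)), axis=0)
            keep &= mean_ssim >= self.ssim_threshold                    # remove poorly aligned areas
        return keep
```

Averaging over several images keeps a single poorly aligned frame from permanently erasing a region of the template.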

This concludes the description of the orthorectification algorithms for LWIR images with a background scene. A final step, filtering outlier images out of the orthorectified time series, is discussed in Section 6.1.2.

5.2. Algorithms for Mid Infra Red Images

The algorithms presented in Section 5.1 cannot be applied to MIR images because of the limited temperature sensitivity of the Agema 550 camera [21]: no fixed background features are available to perform image alignment.

Ref. [21] designed a strategy that tracks four fixed GCPs from image to image to compute the homography. In the present work, MIR image orthorectification relies on the multi-resolution area-based alignment introduced in Algorithm 1 (see the function defined in Section S1.2), within a two-step algorithm. First, MIR images with near-concurrent LWIR images are orthorectified using the full images, including the front and cooling areas (Algorithm 3). Second, the remaining MIR images are orthorectified by cooling area alignment with the closest processed MIR image (Algorithm 4). Concurrent LWIR images are required to observe:

Compared to [21], the new approach is more robust, since it does not rely on tracking specific features, and it has the advantage of not requiring corner fires. However, it requires aligning concurrent (MIR, LWIR) image pairs. These image pairs can show very different patterns, because the emissivity of the different fire elements (e.g., gas, soot particles, smoke) depends strongly on wavelength [42]. To overcome this issue, images are filtered through the normalized gradient of the locally normalized BT. This filter helps render image patterns consistently in the LWIR and the MIR. In this filter, the gradient is applied to an image whose pixel values are set according to the local Cumulative Distribution Function (CDF) of BT intensity (i.e., an adaptive equalization variant of the local normalization). BT differences due to Planck’s law are therefore damped, and the dissimilarity between the filtered LWIR and MIR images is mostly controlled by the local variability of emissivity. Emissivity variations can be large within the flaming front. They are significantly smaller in the cooling area, where emissivity depends less on wavelength because it is controlled by solid material rather than by hot gas or soot particles.
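Replacing each pixel by its rank within a local window (its local empirical CDF value) makes the filtered image invariant to any monotonic change of the BT scale. This is why band-to-band radiometric differences are damped. The sketch below implements this idea with a square window and a gradient magnitude rescaled to [0, 1]. The window shape, the max-rescaling, and the function names are assumptions; the exact filter is given in Section S3.1.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_cdf(img, w):
    """Replace each pixel by its empirical CDF value within a w x w window (w odd)."""
    img = np.asarray(img, dtype=float)
    r = w // 2
    padded = np.pad(img, r, mode="reflect")
    windows = sliding_window_view(padded, (w, w))        # shape (H, W, w, w)
    return (windows <= img[..., None, None]).mean(axis=(-2, -1))

def normalized_gradient(img, w):
    """Gradient magnitude of the locally equalized image, rescaled to [0, 1]."""
    gy, gx = np.gradient(local_cdf(img, w))
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    return magnitude / peak if peak > 0 else magnitude
```

As a check of the invariance, an image and its fourth power (a strictly increasing stand-in for a different radiometric response) produce identical filtered images.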

5.2.1. Algorithm 3

Algorithm 3 is summarized below. (MIR, LWIR) image pairs are aligned on the LWIR perspective using two elements. The first is an a priori warp, which corrects for the fixed perspective transformation induced by the camera orientations on the platform mount. The second is the set of image contours emphasized with the normalized gradient filter (Section S3.1). The MIR image is finally orthorectified using the orthorectification homography computed for the LWIR image in Algorithm 2.

Algorithm 3: Orthorectification of MIR images

1. For each image in the set of LWIR orthorectified images:
   1. select the near-concurrent MIR images;
   2. apply the initial warp to the LWIR perspective;
   3. compute the enhanced cooling area similarity;
   4. apply the area-based alignment (see Figure S7);
   5. compute the orthorectification.

5.2.2. Algorithm 4

A last algorithm, Algorithm 4, orthorectifies the MIR images that have no concurrent LWIR image (Section S3.2). It optimizes the cooling area alignment between neighboring MIR images. MIR images processed with Algorithm 3 are available as templates at the frame rate of the LWIR sensor (1 Hz). With a 3-Hz frame rate for the MIR camera, each unwarped MIR image is never more than 0.5 s away from a MIR template. Over such a short time window, the cooling area is assumed to remain unchanged. Each new MIR image is therefore aligned to the original perspective of the nearest template, using a cooling area mask that combines two components:

- a front mask, set with the arrival time map of Algorithm 2 warped onto the template perspective;
- a fire pixel mask using a fixed MIR BT threshold, which masks out fire pixels within the fire front depth.

Unlike in the LWIR alignment optimization, the flaming residence time is not used to mask fire pixels in MIR images. A MIR BT threshold is preferred because it maximizes the cooling area, which is limited in the MIR. The final orthorectified image is computed using the orthorectification homography computed in Algorithm 3.
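Template selection for Algorithm 4 reduces to a nearest-timestamp search. The sketch below uses `bisect` on sorted template times and returns the time gap, so that the 0.5-s bound stated above can be checked. That bound only holds if no 1-Hz LWIR frame is missing. The function name is illustrative.

```python
import bisect

def nearest_template(t, template_times):
    """Index of the template acquisition time closest to t, and the gap in seconds.
    template_times must be sorted and non-empty."""
    i = bisect.bisect_left(template_times, t)
    neighbors = [j for j in (i - 1, i) if 0 <= j < len(template_times)]
    j = min(neighbors, key=lambda k: abs(template_times[k] - t))
    return j, abs(template_times[j] - t)

templates = [0.0, 1.0, 2.0, 3.0]                  # 1-Hz MIR templates from Algorithm 3
mir_times = [k / 3 for k in range(10)]            # 3-Hz MIR acquisitions
print(max(nearest_template(t, templates)[1] for t in mir_times))
```

Since the LWIR frame rate was unstable (Section 4), it may be worth flagging any image whose returned gap exceeds 0.5 s rather than silently aligning it to a distant template.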

Algorithm 4: Iterative optimization of MIR image orthorectification

1. Input: the set of MIR images orthorectified with Algorithm 3.
2. Run twice:
   1. for each remaining MIR image:
      1. select the reference neighbor image;
      2. compute the enhanced cooling area similarity;
      3. apply the area-based alignment;
      4. compute the orthorectification.

To strengthen the orthorectification of the whole time series, the alignment on neighboring images is coupled with a filter that removes outlier images (Section 6.2). The combination of alignment and filtering is run twice to reduce alignment errors.

6. Application to KNP14 Data Set

The suite of orthorectification algorithms (Section 5) is applied to the KNP14 data set (Section 4). The algorithm parameter calibration and the image outlier filtering are discussed.

6.1. Application of Algorithms 1 and 2 to LWIR Images

6.1.1. Algorithm Parameter Calibration

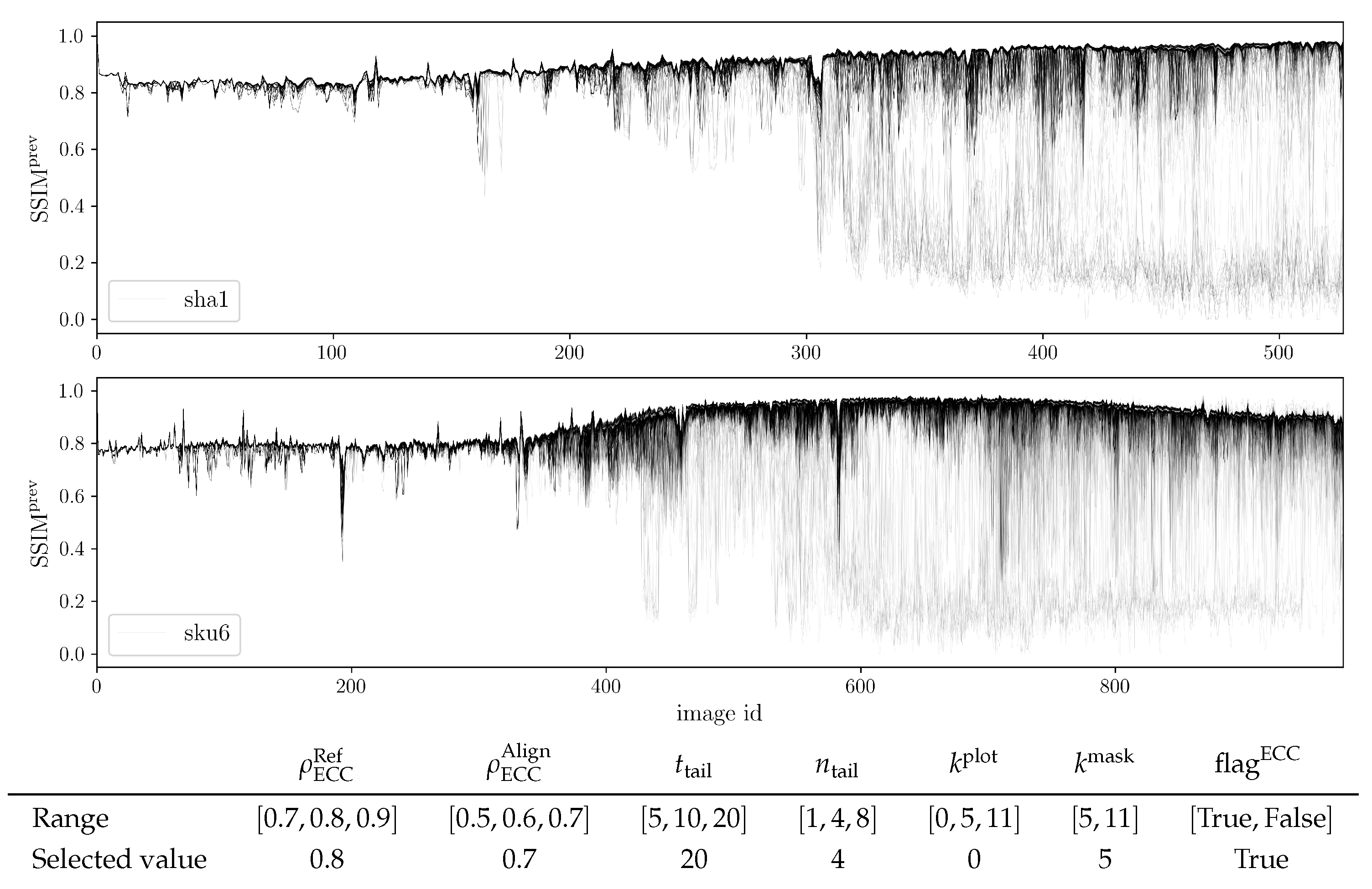

Algorithm 1 performs image orthorectification based on the alignment of the background area (Section 5.1). The following parameters are of primary importance: the thresholds used to monitor the 2D correlation, and the control parameters that adjust the stack of previous images used in the alignment (Section 5.1 and Section S1.2). Further parameters are included in Algorithm 1 to test its robustness: a switch to activate or deactivate the area-based alignment, and a set of kernel sizes to tune the mask size. Two kernels are set to expand the plot mask (P) and the steady area mask; they help control hot pixel effects in the vicinity of burning pixels during alignment. The set of seven parameters is tuned through a simple brute-force sensitivity study over discrete ranges of parameter values (see the table in Figure 2). The performance of a given parameter set is evaluated through the mean value of the image-to-image SSIM metric over the last 20 images, i.e.,

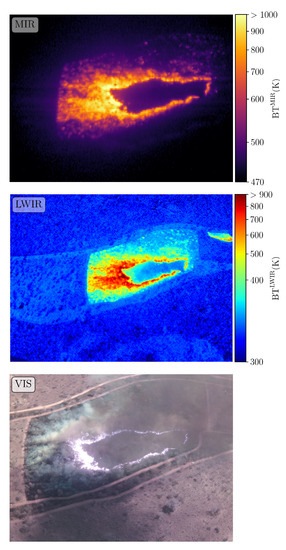

Figure 2.

Sensitivity analysis of the seven input parameters of Algorithm 1. The top and middle panels show the time series of the image-to-image structural similarity index metric for Shabeni1 (sha1) and Skukuza6 (sku6) LWIR observations, respectively. A total of 972 parameter sets are tested, and each line represents one of them. Ideally, a value of 1 is expected. Darker areas show the convergence of the calibration. The bottom panel reports the discrete ranges and the selected values of the parameters.

This sensitivity study is applied to two fires with different fire activity: Skukuza6 and Shabeni1. Figure 2 presents the time series and the parameter values that give the best performance for both burns.

Although some of the selected parameter values lie at the limits of the tested ranges, the sensitivity analysis is not developed further, since Algorithm 1 is only intended to provide a first guess of the orthorectification transformation. As explained in Section S1.1, parallax effects caused by objects in the scene (e.g., trees) and by the moving camera are a critical problem when dealing with image alignment. To mitigate this effect, the methodology detailed in Section 5 imposes a near-constant line of sight between the camera and the burning plot. The sensitivity analysis shows that this constraint can be eased: increasing the number of images (and therefore of views/templates) in the stack available for the feature-based alignment (i.e., varying from 1 to 4) greatly improves the performance of Algorithm 1. Increasing the stack further brings negligible improvement relative to its computational cost.
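The brute-force calibration described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: `run_alignment` is a hypothetical stand-in for Algorithm 1 that returns the image-to-image SSIM time series for one parameter set, and the parameter names in the usage example are placeholders. In a full brute-force search, the number of runs is the product of the sizes of the discrete ranges.

```python
import itertools
from typing import Callable, Dict, Optional, Sequence, Tuple

import numpy as np


def score(ssim_series: Sequence[float], n_last: int = 20) -> float:
    """Mean image-to-image SSIM over the last `n_last` images (1 is ideal)."""
    return float(np.mean(np.asarray(ssim_series, dtype=float)[-n_last:]))


def brute_force_calibration(
    param_ranges: Dict[str, Sequence],
    run_alignment: Callable[[Dict], Sequence[float]],
    n_last: int = 20,
) -> Tuple[Optional[Dict], float, int]:
    """Test every combination of discrete parameter values; keep the best one.

    `run_alignment` is a hypothetical stand-in for Algorithm 1: it takes a
    parameter dict and returns the SSIM time series of the aligned images.
    """
    names = list(param_ranges)
    best_params, best_score, n_tested = None, -np.inf, 0
    for values in itertools.product(*(param_ranges[name] for name in names)):
        params = dict(zip(names, values))
        s = score(run_alignment(params), n_last)
        n_tested += 1
        if s > best_score:  # ties keep the first combination found
            best_params, best_score = params, s
    return best_params, best_score, n_tested


# Usage with a toy alignment whose quality peaks for a 4-image stack.
def toy_alignment(params):
    return [1.0 - 0.05 * abs(params["stack_size"] - 4)] * 30


best, best_score, n = brute_force_calibration(
    {"stack_size": [1, 2, 4, 8], "area_alignment": [True, False]}, toy_alignment
)
```

Because every run of the alignment is independent, the loop body is trivially parallelizable if the number of combinations becomes large.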

Algorithm 2, which recursively optimizes the alignment of the orthorectified images resulting from Algorithm 1, is based on four parameters: , , , and . The values for these parameters are determined for each burn using a trial-and-error approach. Selected parameter values are reported in Section S2.2, together with explanations (e.g., data quality or fire activity) that led to this choice.

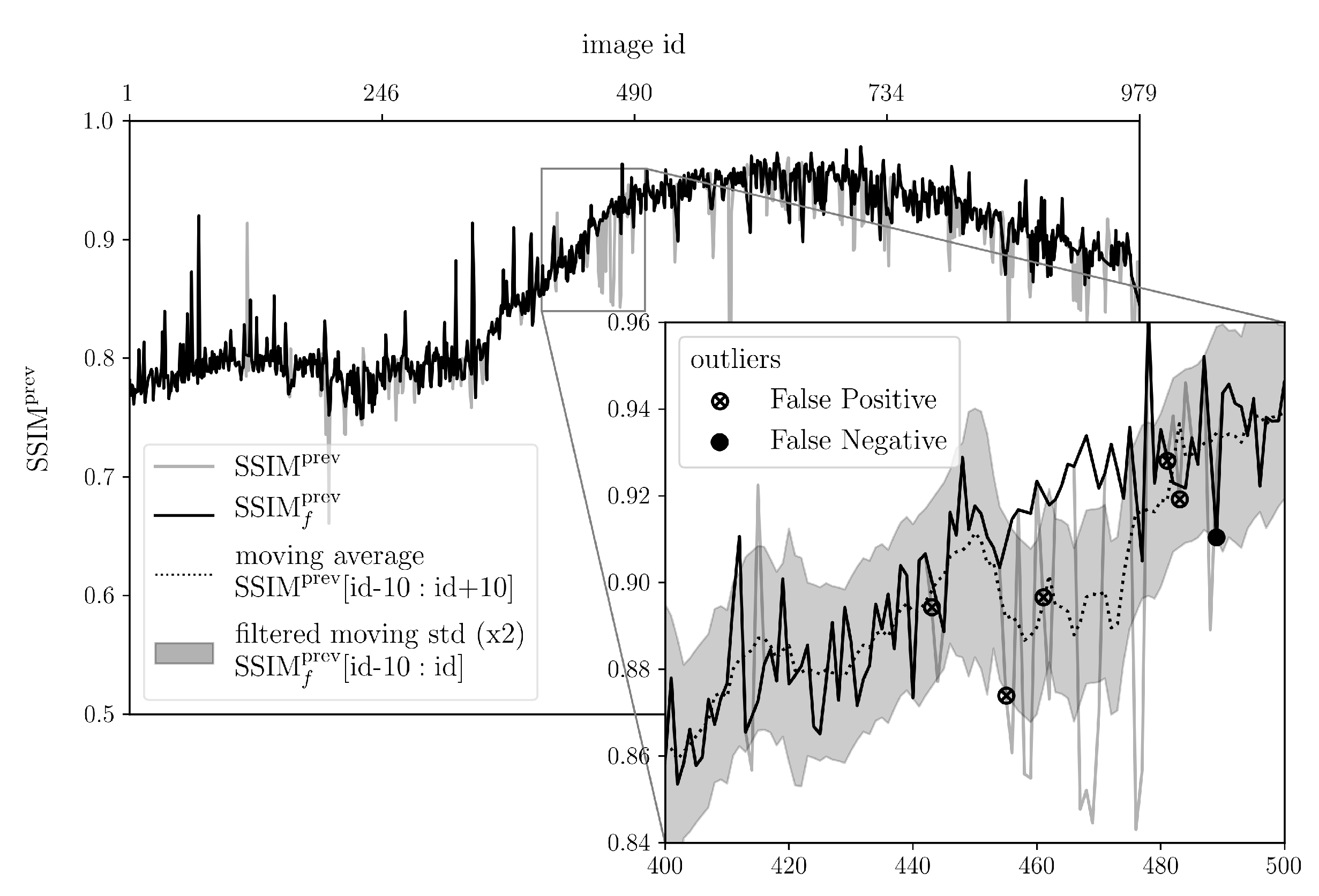

6.1.2. Image Outlier Filtering

To finalize LWIR image orthorectification, a filter () is applied to the image-to-image similarity measure () time series. The objective is to remove potential outliers from the images processed by Algorithm 2. The filter uses the rolling mean and standard deviation of , removing image when:

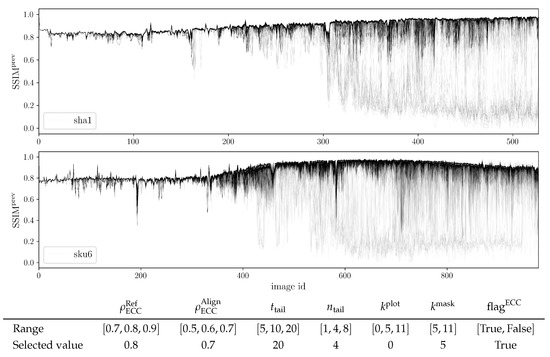

where is calculated using images from the filtered time series. The filter is recursive, as the right-hand term in Equation (2) only depends on previously filtered images. However, to avoid a divergence towards high values, the mean in Equation (2) is computed on the initial image time series using a centered window. Figure 3 shows how the filter improves the time series when applied to Skukuza6 LWIR images.
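A minimal sketch of such a recursive filter is given below. Because the exact form of Equation (2) is not reproduced in this text, the criterion is an assumption consistent with the description above and with Figure 3: image n is removed when its SSIM falls below the centered rolling mean of the initial series minus `k` times the left rolling standard deviation of the already filtered series. The values of `window`, `k`, and `min_history` are hypothetical.

```python
import numpy as np


def recursive_ssim_filter(ssim, window=21, k=2.0, min_history=5):
    """Return a boolean mask of the images kept in an SSIM time series.

    Assumed criterion (hypothetical): image i is removed when
    ssim[i] < mean_centered[i] - k * std_left[i].
    """
    ssim = np.asarray(ssim, dtype=float)
    n = ssim.size
    half = window // 2
    keep = np.ones(n, dtype=bool)
    kept = []  # SSIM values of the images retained so far
    for i in range(n):
        # Centered rolling mean on the *initial* series (avoids upward drift).
        lo, hi = max(0, i - half), min(n, i + half + 1)
        mean_centered = ssim[lo:hi].mean()
        # Left rolling standard deviation on the *filtered* series (recursion).
        history = kept[-window:]
        if len(history) >= min_history:
            std_left = float(np.std(history))
            if ssim[i] < mean_centered - k * std_left:
                keep[i] = False
                continue
        kept.append(ssim[i])
    return keep
```

Computing the mean on the unfiltered series while taking the spread from the filtered one reproduces the asymmetry described above: the reference level cannot creep upwards as low values are progressively discarded.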

Figure 3.

Time series of image-to-image structural similarity index metric () for LWIR observations of Skukuza6. The gray line is for the image time series output by Algorithm 2 (). The black line is for the final filtered image time series (). The dotted line and gray area are the centered rolling average of and the left rolling standard deviation of (Equation (2)). The inset zoom shows the filter action.

The KNP14 data set relies on a theoretical camera calibration that does not correct for distortion (Section 4). This is particularly problematic for the LWIR images, as the Optris camera lens has rather poor optical quality, an effect amplified by its large field of view (larger than that of the other cameras, Table 2). Image distortion therefore penalizes both feature- and area-based alignment, as it alters the local pixel distribution. To improve the stability of the final orthorectified LWIR image time series, a manual check is performed, which consists of evaluating the alignment of each image against its neighbors. The number of manually flagged images, i.e., misaligned images that passed the filter (false positives) and well-aligned images that were filtered out (false negatives), accounts for less than 6% of the initial number of images in the worst case (Skukuza6).

6.2. Application of Algorithms 3 and 4 to MIR Images

Algorithms 3 and 4 are designed to be less dependent on parameters than the two previous algorithms tailored for LWIR images. The only required parameters are the window size of the local normalization used in the filter of Algorithm 3, and the fire BT threshold used in Algorithm 4. As the local normalization is applied to the original image perspective, unlike in Algorithm 2 where it is applied to an orthorectified image, the window size is expressed in pixels. A constant window size of 30 pixels is chosen. This value is relatively large, so as to easily include the vegetation structures that appear in the bottom part of the image. No adjustment for hovering altitude was found to be necessary. The fire BT threshold is, however, tuned on a per-fire basis, leading to for all burns but Skukuza4, where .
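The local normalization of Algorithm 3 is not detailed here. A common form, assumed in the sketch below, subtracts the local mean and divides by the local standard deviation computed over a square window of side w (30 pixels by default, matching the value chosen above). Window sums are computed with an integral image so that the cost does not grow with w; the function names are illustrative.

```python
import numpy as np


def box_mean(img, size):
    """Mean over a size x size window around each pixel (edge-padded)."""
    img = np.asarray(img, dtype=float)
    pad = size // 2
    padded = np.pad(img, ((pad, size - 1 - pad), (pad, size - 1 - pad)), mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = (ii[size:size + h, size:size + w] - ii[:h, size:size + w]
             - ii[size:size + h, :w] + ii[:h, :w])
    return total / (size * size)


def local_normalize(img, size=30, eps=1e-6):
    """Zero local mean, unit local standard deviation (assumed normalization)."""
    img = np.asarray(img, dtype=float)
    mean = box_mean(img, size)
    var = box_mean(img * img, size) - mean ** 2
    std = np.sqrt(np.clip(var, 0.0, None))  # clip tiny negative round-off
    return (img - mean) / (std + eps)
```

Normalizing locally removes slow brightness gradients across the frame, so that the similarity measure responds to vegetation structure rather than to overall radiance level.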

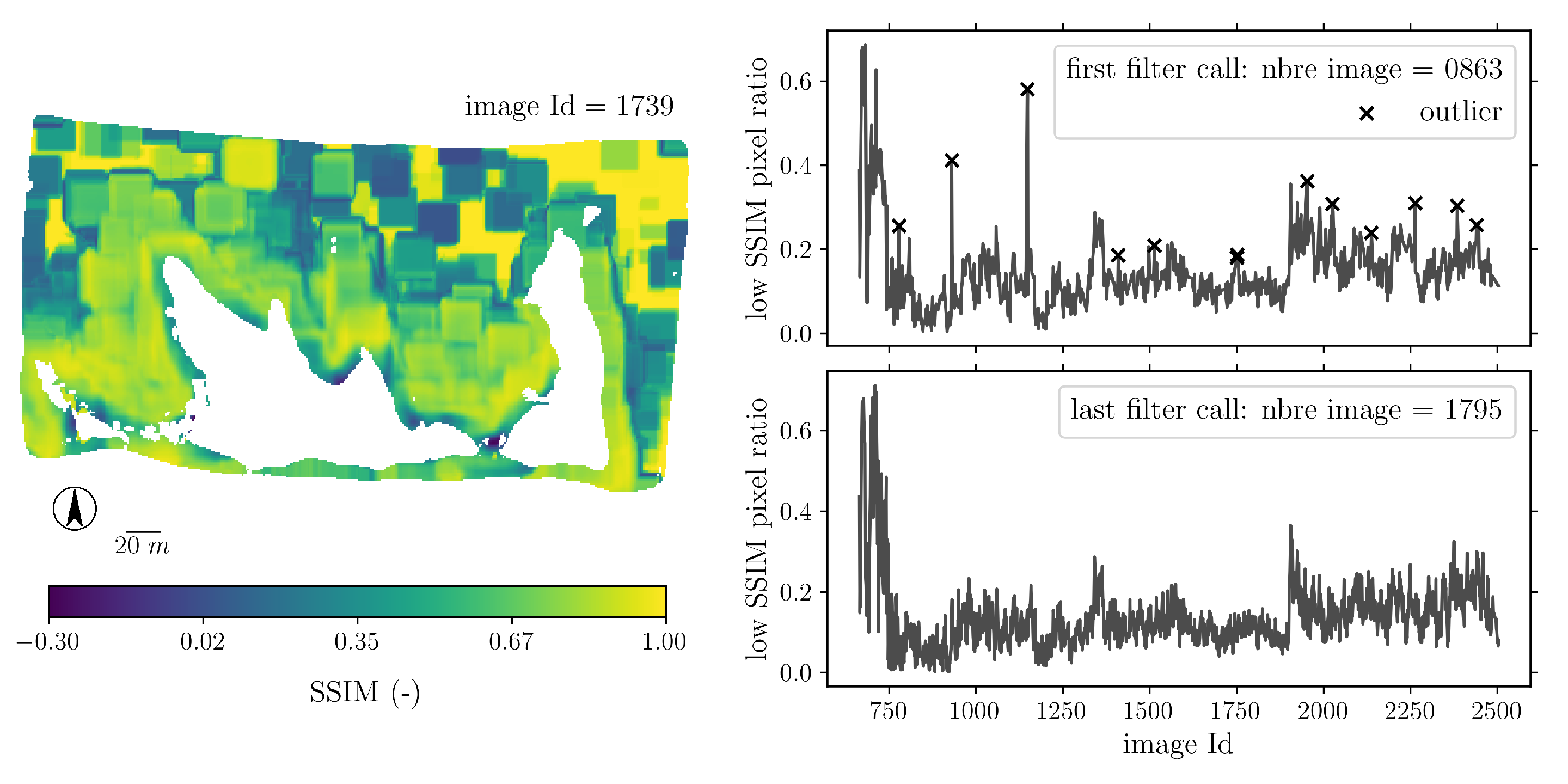

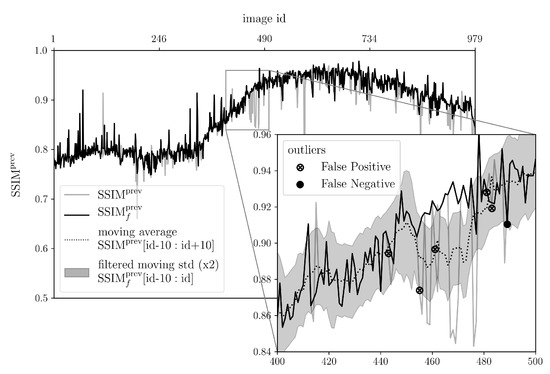

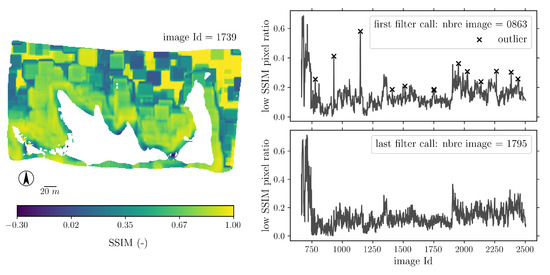

Another difference from Algorithm 2 is that the image outlier filter () is included in Algorithm 4 (Section S3.3). The MIR filter is based on a Hampel filter [43], which runs on a low SSIM cover ratio. Figure 4 shows the application of for Skukuza6, with results from the initial and final filter calls. The total number of images in the MIR time series increases from 863 after Algorithm 3 to 1795 after Algorithm 4, with a low SSIM cover ratio time series showing significantly fewer outliers.

Figure 4.

Overview of used to clean the MIR image time series. Left panel shows the 20th percentile of the SSIM similarity map for image against its 10 closest neighbor images (). Right panels show the time series of the fraction of the cooling area (mask ) with lower than 0.2. This metric is passed through a Hampel filter to flag image outliers. Algorithm 4 is designed as a recursive loop that progressively includes MIR images not synchronized with LWIR images. Top and bottom right panels show the filter application at its first and last calls (Figure S8).
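A Hampel filter flags a sample as an outlier when it deviates from the median of its neighborhood by more than a few scaled median absolute deviations (MAD). The sketch below is a generic implementation that could be run on a low SSIM cover ratio time series; the half-window length and the number of standard deviations are hypothetical, not the values used in Algorithm 4.

```python
import numpy as np


def hampel_flags(x, half_window=5, n_sigmas=3.0):
    """Return a boolean mask of outliers according to a Hampel filter.

    A sample is flagged when it deviates from the median of the window
    [i - half_window, i + half_window] by more than n_sigmas times the
    scaled median absolute deviation (MAD).
    """
    x = np.asarray(x, dtype=float)
    scale = 1.4826  # converts the MAD to a standard deviation for Gaussian data
    flags = np.zeros(x.size, dtype=bool)
    for i in range(x.size):
        lo, hi = max(0, i - half_window), min(x.size, i + half_window + 1)
        window = x[lo:hi]
        med = np.median(window)
        mad = scale * np.median(np.abs(window - med))
        flags[i] = np.abs(x[i] - med) > n_sigmas * mad
    return flags
```

Using the median and MAD instead of the mean and standard deviation keeps a single large spike from inflating the threshold that is supposed to detect it.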

7. Discussion

This section first discusses the orthorectification accuracy of the LWIR and MIR images. Then, the resulting image time series for the four burns are presented. Finally, the potential use of such fire observations is illustrated with an application to the computation of FRP and FRE.

7.1. Orthorectification Accuracy

Estimating orthorectification accuracy is necessary for the computation of several fire behavior metrics that depend on multiple images. For example, ROS, which is computed from the arrival time map [44], is affected by image registration error, in particular when the fire is slow and information from multiple images is used at the same grid point. In this case, a low absolute error is required. The computation of FRE is another example sensitive to absolute registration error, as it is a time integration of FRP over all images.
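To make the link between registration error and ROS concrete, the sketch below computes ROS as the inverse norm of the arrival time gradient, ROS = 1/|∇t_a|. This is a common relation assumed here for illustration, not necessarily the exact method of [44].

```python
import numpy as np


def ros_from_arrival_time(t_arrival, dx):
    """Rate of spread (m/s) from an arrival-time map (s) on a grid of spacing dx (m)."""
    dt_drow, dt_dcol = np.gradient(np.asarray(t_arrival, dtype=float), dx)
    grad_norm = np.hypot(dt_dcol, dt_drow)  # |grad t_a| in s/m
    with np.errstate(divide="ignore"):
        # Where the arrival time is locally flat, ROS is unbounded.
        return np.where(grad_norm > 0, 1.0 / grad_norm, np.inf)


# Usage: a planar front moving along the columns at 0.5 m/s on a 1 m grid.
x = np.arange(10) * 1.0
t = np.tile(x / 0.5, (10, 1))
ros = ros_from_arrival_time(t, 1.0)
```

For a slow fire spreading at a hypothetical 0.05 m/s, the front moves only 0.5 m between two images taken 10 s apart, so a few meters of misregistration between those images would dominate the local arrival time gradient.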

To estimate the absolute accuracy of the orthorectified images, a standard method is to compare the true GCP locations to those retrieved in the images. For example, the road/fire break present at terrain level around all the burn plots would provide good features to use as GCPs. Unfortunately, these features were found impossible to track for both LWIR and MIR cameras. Isolated road features (without vegetation) appear either with too low a contrast in the LWIR images, or not at all in the MIR images. If GCPs are formed by objects above the terrain (e.g., bushes, trees), the parallax effect and the moving camera introduce artificial feature displacement. This displacement increases as the object moves away from the image center, and is further amplified when camera distortion is not corrected.

Due to the lack of ground features that can be identified along the image time series, we propose here to estimate the performance of both LWIR and MIR image orthorectification using GCPs formed by the corner fires present in the Skukuza4 and Skukuza6 burns. Despite the expected artificial displacement, this approach has the advantage of being applicable independently to both LWIR and MIR images. As these corner fires are located on the image edges, they are more likely to be affected by the lack of distortion correction. The methodology discussed below is therefore expected to overestimate the orthorectification error. Tests with a better camera calibration will be investigated in the future.

The corner fires are made of 50-cm tall metallic cylinders filled with burning logs. In both raw LWIR and MIR images, features associated with the corner fires (base and flame/smoke) appear as a vertical structure spanning several pixels. The number of involved pixels depends on the distance of the corner fire to the camera, the view angle, and the corner fire activity.

To identify the corner fires in LWIR and MIR images, we follow the same approach as in [21]. Fire pixels are first labeled with a simple temperature threshold () and clustered. Starting from manually selected initial locations, the corner fire positions are then defined recursively by tracking a fire cluster with a similar temperature distribution and size, and by using the temperature-weighted barycenter of this new cluster as the corner fire location.
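The corner fire tracking step can be sketched as follows. Assumptions: the temperature threshold `t_fire` (500 K) and the size tolerance are hypothetical, clusters are 4-connected, and the "similar temperature distribution" criterion is simplified to a size check plus a nearest-barycenter choice. The location returned is the temperature-weighted barycenter of the selected cluster, in (row, column) pixel coordinates.

```python
from collections import deque

import numpy as np


def label_clusters(mask):
    """4-connected component labelling of a boolean mask (0 = background)."""
    labels = np.zeros(mask.shape, dtype=int)
    n = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        n += 1
        labels[r, c] = n
        queue = deque([(r, c)])
        while queue:
            i, j = queue.popleft()
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                a, b = i + di, j + dj
                if (0 <= a < mask.shape[0] and 0 <= b < mask.shape[1]
                        and mask[a, b] and not labels[a, b]):
                    labels[a, b] = n
                    queue.append((a, b))
    return labels, n


def track_corner_fire(temp, prev_xy, prev_size, t_fire=500.0, max_size_ratio=3.0):
    """Return the new (row, col) location and size of a tracked corner fire."""
    labels, n = label_clusters(temp > t_fire)
    best = None
    for k in range(1, n + 1):
        rows, cols = np.nonzero(labels == k)
        size = rows.size
        if not prev_size / max_size_ratio <= size <= prev_size * max_size_ratio:
            continue  # cluster size too different from the previous detection
        w = temp[rows, cols]  # temperature weights
        xy = (float((rows * w).sum() / w.sum()), float((cols * w).sum() / w.sum()))
        dist = np.hypot(xy[0] - prev_xy[0], xy[1] - prev_xy[1])
        if best is None or dist < best[0]:
            best = (dist, xy, size)
    if best is None:
        return prev_xy, prev_size  # fire missed: keep the previous estimate
    return best[1], best[2]
```

Weighting the barycenter by temperature pulls the location towards the hottest part of the cluster, which is less sensitive to the flickering edge of the flame/smoke structure than an unweighted centroid.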

Note that the corner fires are not included in the alignment processes of Algorithms 1, 2, and 4. They are either removed by the plot mask or a low pass temperature filter. They are only used in the first step of the MIR image alignment in Algorithm 3: Experience shows that they mostly help to start processing MIR images earlier in the time series. When corner fires are not present (i.e., Shabeni1 and Shabeni3), the first MIR image is taken later in the time series, as the algorithm requires at least two edges of the plot to be fully ignited with backfire to have enough fire pixels to start the MIR/LWIR alignment.

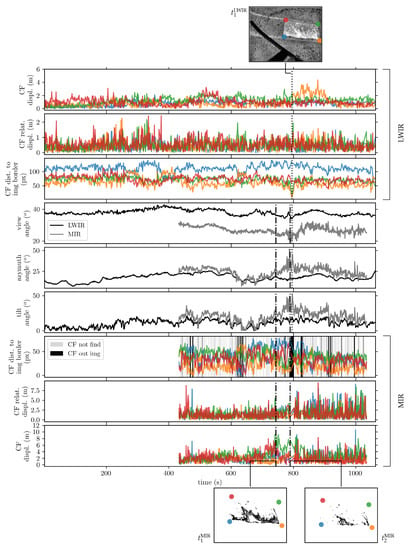

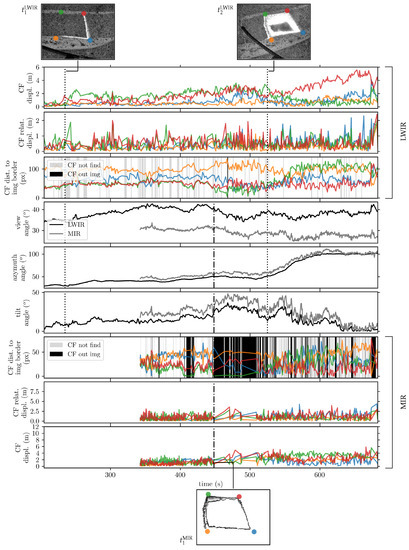

Figure 5 and Figure 6 show corner fire displacement along the image time series for Skukuza6 and Skukuza4, together with the corner fire distance to the original image border and the camera poses (azimuth, tilt, and view angles). Camera poses are computed from the orthorectified images, whether or not corner fires are present in the image, using a priori knowledge of the camera calibration. Despite the use of a priori calibration data, the LWIR and MIR camera angles match rather well, with noticeable biases that most likely originate from the mount, on which the cameras were not aligned.

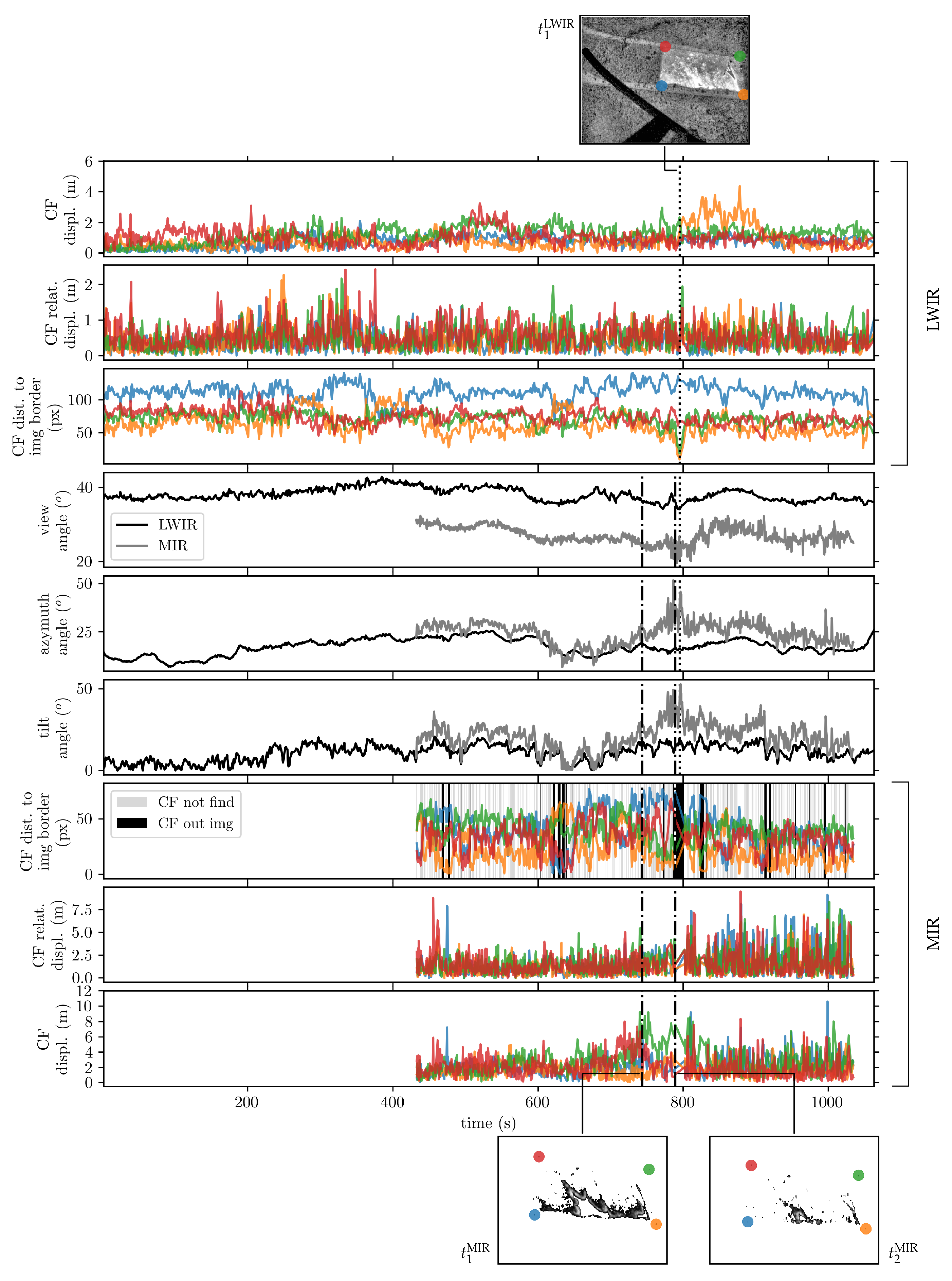

Figure 5.

Overview of LWIR and MIR image orthorectification accuracy for Skukuza6. The three top panels show, for the LWIR camera, the time series of corner fire (i.e., GCP) displacements from their initial positions in the orthorectified image, the same corner fire displacements relative to the previous image, and the corner fire distance (in pixels) to the image edge. The three middle panels show the evolution of the LWIR and MIR camera angles (namely the view, azimuth, and tilt angles). The camera pose is computed from the image alignment using a priori geometrical camera calibration data. The three bottom panels are the same as the top three panels but for the MIR camera. Gray and black vertical lines (in the seventh panel from the top) indicate times when at least one corner fire is missed by the tracking algorithm, and times when at least one corner fire is out of the field of view. Inset images at the top and bottom show, within the raw image, the corner fire locations and the fire stage at specific times.

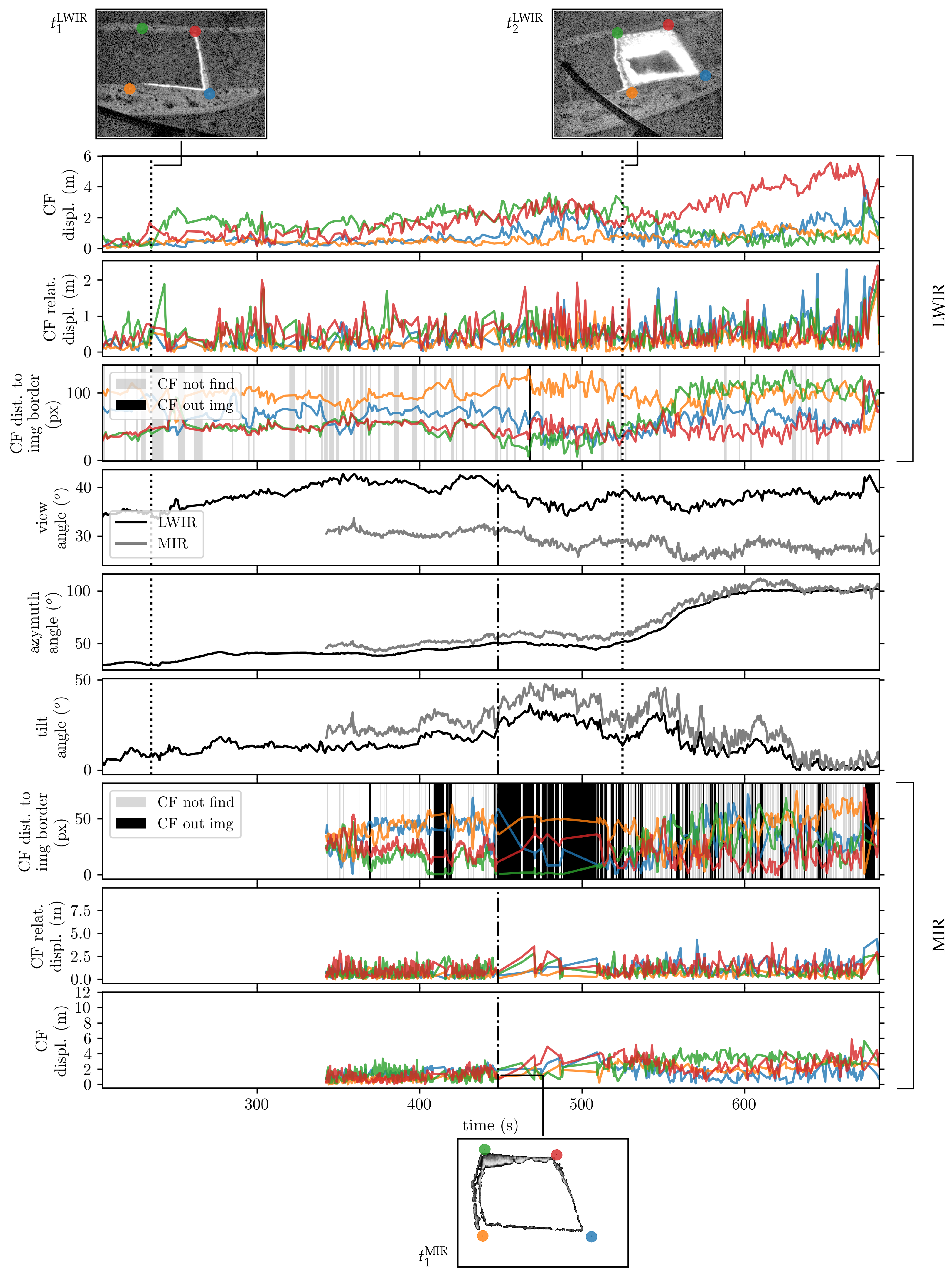

Figure 6.

Same as Figure 5 for Skukuza4.

The top and bottom panels of Figure 5 show that the Skukuza6 orthorectified LWIR and MIR images do not experience drift (Section 5.1) but do have absolute displacements that remain within 3 and 8 m, respectively. Up to the times and (vertical dashed lines in Figure 5), absolute displacements are even lower, remaining within 2 and 5 m, respectively. At these specific times, the operational camera setup breaches some of the assumptions listed in Section 5. In the case of the LWIR images, changes in the camera view angle, coupled with a corner fire getting close to the image border (where distortion is significant, since it is not corrected in the KNP14 data set), make one corner fire diverge from its expected location when compared to the others at the same time. In the case of the MIR images, at time , the upper right corner fire becomes isolated from the bulk of the active fire pixels. As Algorithm 4 is set to better adjust the part of the burning plot covered with active fire pixels, the displacement of the upper right corner fire is less constrained. Note that the misplacement of the green corner fire has a direct effect on the MIR camera pose estimation, which diverges from the LWIR pose. After time , fronts merge and die out, resulting in a more homogeneous distribution of the remaining smoldering fire pixels. Relative displacements increase slightly compared to the fire start, but the absolute displacement improves relative to the situation just after .

Now considering Skukuza4 (orthorectified at 50-cm resolution), Figure 6 shows that corner fire displacements in the LWIR images are similar to those of Skukuza6, remaining below 3 m on average. Effects of camera pose changes are noticeable. At time , a change in view angle penalizes the alignment of the corner fires located at the top of the LWIR image (red and green corner fires). At time , a change in azimuth angle produces a drift of the red corner fire, which is at that time the furthest away from the camera (i.e., with the poorest resolution). Meanwhile, the two corner fires located at the bottom of the image remain within 2 m of their initial position. Corner fire displacements in the MIR images are, however, much smaller than for Skukuza6, remaining below 4 m over the fire duration. Until time , when one corner fire (green) gets close to the image borders and even disappears from the field of view (see black background on third panel from bottom), displacements are even better, remaining around 2 m. After the four corner fires return to the field of view, relative displacements are not much affected; only a small drift (less than 2 m) appears on the upper corner fires (red and green). Unlike for Skukuza6, the MIR camera for Skukuza4 is not equipped with a filter, hence enabling lower temperature detection. This feature, coupled with the higher data resolution, makes the cooling area much better resolved in the MIR images. This is also likely helped by the larger fuel load, which increases the smoldering time compared to Skukuza6.

These results show that the alignment procedure for LWIR and MIR images performs better when the appearance of features in the images is preserved. When changes are introduced (induced by camera pose and/or distortion), features end up looking different from what the algorithms know from the available template images, and alignment quality degrades. This is even more evident for the MIR images, which do not show the background. Results from Skukuza4 show the importance of capturing the cooling area to improve MIR image orthorectification. To this end, the resolution increase (50 cm) helps improve the performance of Algorithms 3 and 4. However, the same resolution increase in LWIR does not provide the same improvement.

Both Skukuza6 and Skukuza4 burns show relative displacements in the LWIR images of about 1.5 m and absolute displacements of about 3 m. For Skukuza6, if we do not consider the times after that are affected by the poorly resolved cooling area, the MIR images show relative and absolute corner fire displacements of 2.5 and 4 m, respectively. This misregistration is of the same order of magnitude as the artificial displacement (parallax effects) from tall shrubs and trees that can potentially occur during the alignment of the background (Algorithm 1) and of the cooling areas (Algorithms 2–4). The similar accuracy of the LWIR orthorectified images from the Skukuza4 and Skukuza6 burns suggests that we are reaching the limit of a single vantage point, and that stereovision would have to be considered to obtain a better alignment [45].
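Absolute and relative corner fire displacements, as plotted in Figures 5 and 6, can be derived from the tracked positions with a few array operations. The sketch below assumes positions are expressed in orthorectified grid pixels (for example 1 m or 0.5 m cells) and that missed detections are stored as NaN, which then propagate to the corresponding displacements.

```python
import numpy as np


def corner_fire_displacements(positions, pixel_size):
    """Absolute (vs. first image) and relative (vs. previous image) displacements.

    positions: array of shape (n_images, n_fires, 2) with tracked (row, col)
    locations in the orthorectified grid; NaN where a corner fire is missed.
    Returns two arrays in metres: absolute (n_images, n_fires) and
    relative (n_images - 1, n_fires).
    """
    p = np.asarray(positions, dtype=float) * pixel_size
    absolute = np.linalg.norm(p - p[0], axis=-1)
    relative = np.linalg.norm(np.diff(p, axis=0), axis=-1)
    return absolute, relative


# Usage: one corner fire on a 0.5 m grid, shifted by (3, 4) pixels after one image.
pos = np.array([[[0.0, 0.0]], [[3.0, 4.0]], [[3.0, 4.0]]])
absolute, relative = corner_fire_displacements(pos, 0.5)
```

Summary values such as the mean absolute displacement can then be obtained with `np.nanmean`, which skips missed detections.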

As discussed earlier, fire front misregistration is important when computing fire behavior metrics. Two sources of misregistration can be identified: orthorectification and segmentation. On the one hand, our methodology provides orthorectified images with an error below 4 m (down to 1.5 m when only neighboring LWIR images are considered). On the other hand, new fire front segmentation methods [46] report errors from 2 to 10 m on similar off-nadir observations of experimental burns. Segmentation in orthorectified images obtained from a homography transformation is affected by the vertical structure of the flames, which is distorted when projected onto the gridded plane. With flames likely higher than 4 m in the active front area and a view angle of about (e.g., Figure 5), the segmentation error could potentially be larger than the orthorectification error. This error accumulation will need to be addressed to make the estimation of high-resolution fire behavior metrics possible. Nevertheless, it is worth noting that the final product delivered by the four algorithms shows good robustness to fire activity. In the four image time series considered here, the peak of fire activity never impacts orthorectification. This is a strong point of the algorithms, since complex fire behavior usually occurs with strong active fire.

7.2. Resulting KNP14 Data Set

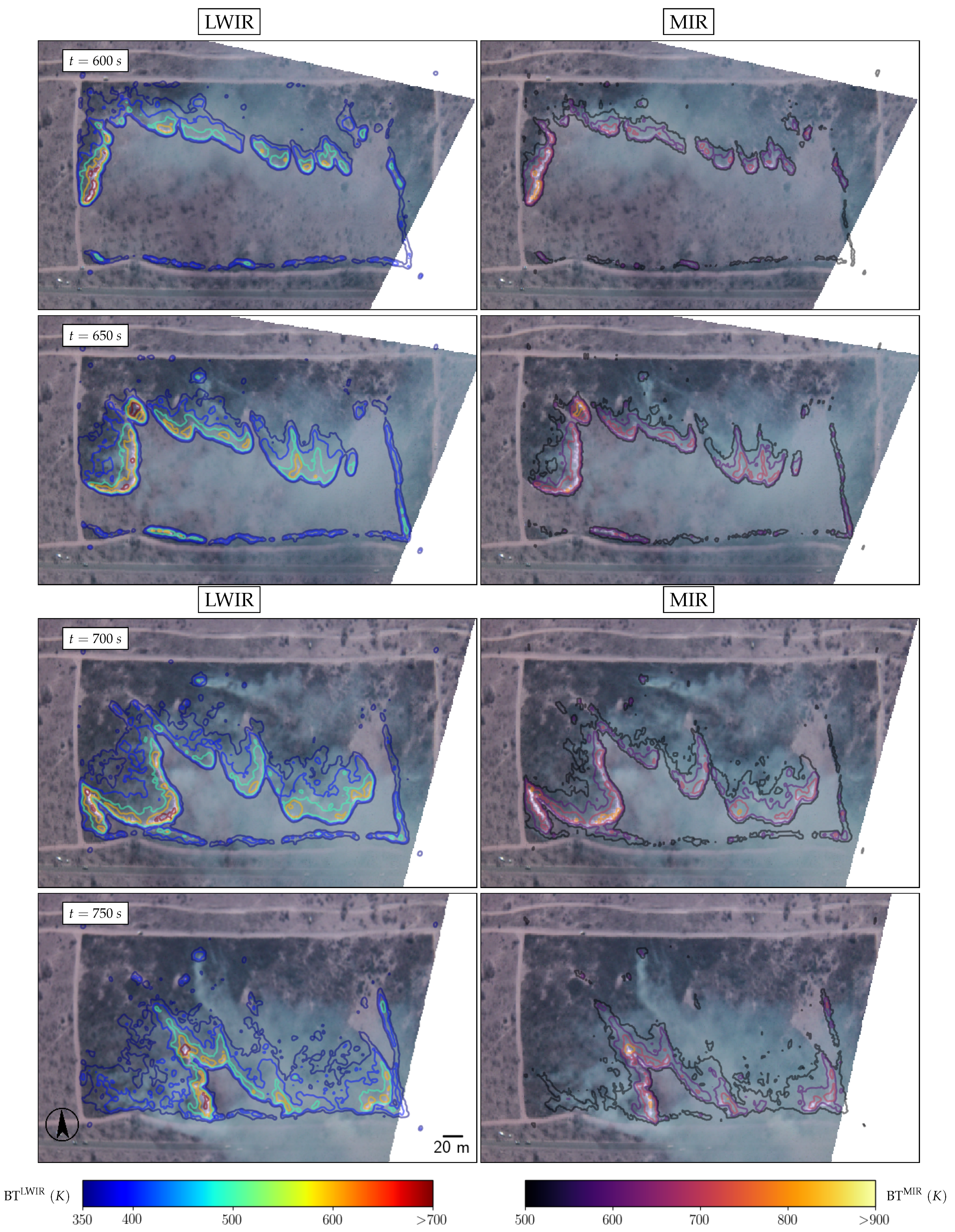

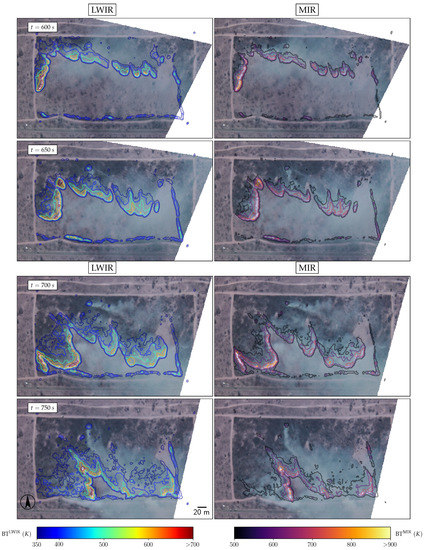

The final product delivered for each burn by the algorithm suite (Section 5) combines the orthorectified image time series of the three cameras: LWIR, MIR, and VIS. Figure 7 shows LWIR and MIR contours overlaid on VIS images for Skukuza6. After orthorectification and outlier filtering, the average frequency of the image time series is between 0.3 and 2 Hz for LWIR images, with large fluctuations among burns, and between 1.5 and 3 Hz for MIR images. Several gaps of up to 10 s exist in both the LWIR and MIR time series, but they never represent more than 5% of the images of a burn. In the worst case, 13.5% of the raw images were discarded (MIR images from Shabeni3). A web page dedicated to the KNP14 data set (https://3dfirelab.eu/knp14, last access date: 24 November 2021) provides an overview of the full data set and an interactive display of the orthorectified images. The VIS images were orthorectified using Algorithm 1 with manually tuned parameters. The overlay of VIS images with concurrent LWIR and MIR images gives good results as long as the plume does not cover too much of the image and/or is not too opaque. As shown on the dedicated web page, the VIS camera (from which the IR filter was removed) is well able to detect the flaming area, even in relatively dense smoke. A smoke mask is, however, necessary to improve the robustness of VIS orthorectification.

Figure 7.

LWIR (left panels) and MIR (right panels) Brightness Temperature (BT) contours overlaid over VIS images for Skukuza6 around the time of the peak fire activity. Contours are extracted from orthorectified images processed with the algorithm suite (Section 5). All maps have the same scale and orientation as reported on the bottom LWIR image.

7.3. Application to Fire Radiative Power Time Series Estimation

To compute metrics associated with front dynamics (e.g., ROS, front depth), fire front segmentation is required (i.e., step b introduced in Section 2). However, spatially integrated metrics such as FRP can already be computed from the MIR image time series using the formulation of [47], to provide insight into the fire activity of the burns.

The FRP emitted by a pixel is then defined by:

where is the Stefan–Boltzmann constant, a is a sensor-specific constant, is the atmospheric transmittance between the fire and the sensor, is the pixel size, and and are the MIR radiances emitted by a pixel containing fire (fire pixel) and by its associated background, respectively. Assuming that, at the resolution of our observations (<1 m), the fire spatial distribution is fully resolved (), and that the atmosphere is transparent in the MIR spectral region at the altitude of the helicopter, Equation (3) simplifies to:

As FRP is a conservative quantity, the perspective warping applied to raw MIR images based on the homography matrix computed in Algorithm 4 is modified to ensure energy conservation. Instead of linearly interpolating radiance values at every mesh point of our fixed grid from the ungridded points provided by the homography transformation (i.e., Equation (S2) in Section S1.1), a pixel-level perspective transformation is developed. The objective is to split each warped pixel of the original MIR image onto the mesh of the assumed flat terrain, thus providing a temporal map of . This is much more time-consuming and is therefore only performed once, using the final optimized homography transformation of Algorithm 4.
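The per-pixel FRP computation and the energy-conserving resampling can be sketched as below. This is an illustration under several assumptions: Equation (3) is taken to have the standard MIR radiance form of [47], FRP = A_pix σ (L_f − L_bg) / (a τ); the coefficient value used in the check is illustrative only; and the exact splitting of each warped pixel onto the grid is replaced by a simpler supersampling approximation. Each raw pixel is divided into n_sub × n_sub sub-pixels that each carry an equal share of its power, so the total power is conserved whenever all sub-pixels land inside the grid. The ground footprint area of each raw pixel, needed for A_pix, is obtained from its four warped corners with the shoelace formula.

```python
import numpy as np

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant (W m-2 K-4)


def pixel_frp(l_fire, l_bg, pixel_area, a_coeff, tau=1.0):
    """Per-pixel FRP (W), assuming the MIR radiance form of Equation (3)."""
    return pixel_area * SIGMA / (a_coeff * tau) * (np.asarray(l_fire, dtype=float) - l_bg)


def apply_homography(H, x, y):
    """Map image coordinates (x = column, y = row) to ground coordinates."""
    x, y = np.asarray(x, dtype=float).ravel(), np.asarray(y, dtype=float).ravel()
    pts = H @ np.vstack([x, y, np.ones_like(x)])
    return pts[0] / pts[2], pts[1] / pts[2]


def footprint_area(H, h, w):
    """Ground area of each raw pixel, from its four warped corners (shoelace formula)."""
    rows, cols = np.mgrid[0:h, 0:w]
    corners = [(0, 0), (1, 0), (1, 1), (0, 1)]  # (dx, dy) offsets of the pixel corners
    warped = [apply_homography(H, cols + dx, rows + dy) for dx, dy in corners]
    area = np.zeros(h * w)
    for k in range(4):
        (x0, y0), (x1, y1) = warped[k], warped[(k + 1) % 4]
        area += x0 * y1 - x1 * y0
    return np.abs(area).reshape(h, w) / 2.0


def split_power_on_grid(power, H, grid_shape, cell_size, n_sub=4):
    """Distribute each raw pixel's power onto a ground grid (supersampling).

    Each sub-pixel centre is mapped through H and deposits power / n_sub**2
    in the grid cell it falls in; sub-pixels outside the grid are dropped.
    """
    power = np.asarray(power, dtype=float)
    h, w = power.shape
    rows, cols = np.mgrid[0:h, 0:w]
    offsets = (np.arange(n_sub) + 0.5) / n_sub  # sub-pixel centres within a pixel
    share = power.ravel() / n_sub**2
    grid = np.zeros(grid_shape)
    for oy in offsets:
        for ox in offsets:
            gx, gy = apply_homography(H, cols + ox, rows + oy)
            ix = np.floor(gx / cell_size).astype(int)
            iy = np.floor(gy / cell_size).astype(int)
            inside = (ix >= 0) & (ix < grid_shape[1]) & (iy >= 0) & (iy < grid_shape[0])
            np.add.at(grid, (iy[inside], ix[inside]), share[inside])
    return grid
```

Unlike interpolating radiance at grid nodes, which can double-count or skip power where the warped pixels are strongly stretched, accumulating power shares with `np.add.at` keeps the grid total equal to the raw image total.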

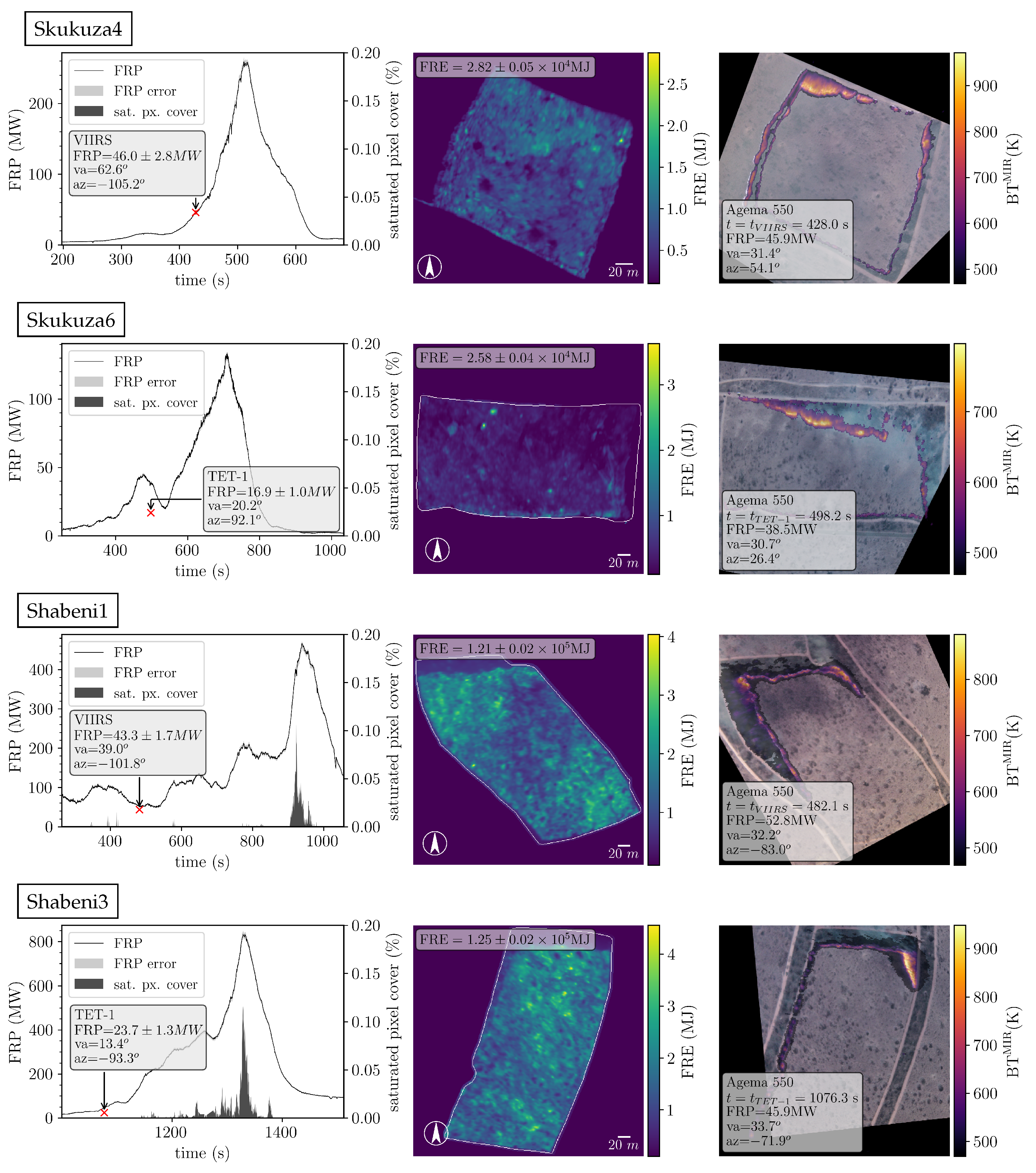

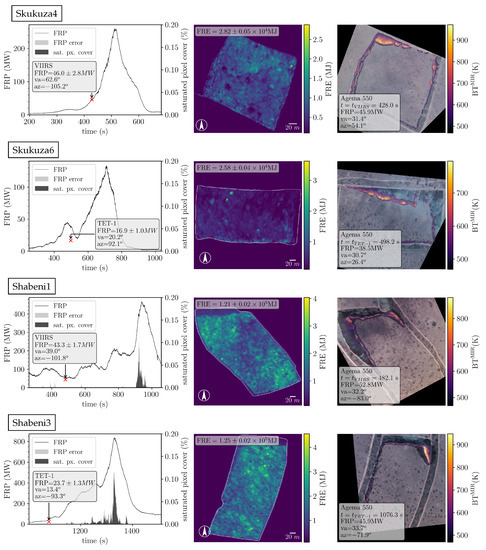

For each burn plot, Figure 8 shows the FRP time series over the experimental burn duration, the time-integrated FRP map (i.e., the FRE), and the MIR image overlaid on the VIS image at the time of the satellite overpass targeted for each burn (Table 1). FRP peak values range from 120 MW for Skukuza6 to 800 MW for Shabeni3. FRP error is also reported in Figure 8. For now, only geometrical effects are considered (see the pixel size term in Equation (4)). The small number of saturated pixels, at most 0.1% of active fire pixels (see Shabeni3 in Figure 8), is neglected: increasing the saturated pixel radiance by 50% only produced marginal FRP changes. The radiance detection threshold of the Agema 550 is also neglected (). This threshold results in an underestimation of FRP that depends on the fire activity. In the case of the Skukuza6 burn, where the camera was operated with a filter (), the underestimation is even larger. In future work, the integration of LWIR data into the calculation of FRP in the cooling trail of the fire will be considered.

Figure 8.

Fire Radiative Power (FRP) time series with the associated MIR saturated pixel fraction (left panel) and Fire Radiative Energy (FRE) map (center panel) for the four KNP14 experimental burns. Information from the FRP products of concurrent satellite overpasses is reported on the time series (va and az are the view and azimuth angles). See the text for details on the estimation of the satellite FRP error bars. Right panels show helicopter-borne MIR and VIS images at the time of the satellite overpass.

Assuming that all burns are observed from similar camera poses, a map of pixel size error is computed using all available MIR images from the Skukuza4 and Skukuza6 burns. For every pixel of every raw image, the relative difference in pixel size between the standard orthorectified output of the algorithm suite and the orthorectified output corrected to match corner fire displacements is calculated. The 10th and 90th percentiles of this relative error at every pixel of the raw image frame are used to estimate error bounds. The relative pixel size errors hence range from −6 to 10%, with spatial averages of −2 and 2% for the lower and upper bounds, respectively. When applied to the FRP calculation of the four burns, this results in a geometrical resampling error in the FRP estimate that never exceeds 8%.
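The error-bound computation can be sketched as follows, assuming the ground pixel areas from the standard and corner-fire-corrected orthorectifications are available as stacks of shape (n_images, h, w). Since FRP scales linearly with pixel size, the bounds are propagated to first order by rescaling each pixel's FRP; the function names and the sign convention of the relative error are assumptions.

```python
import numpy as np


def pixel_size_error_bounds(area_std, area_corr, q_low=10, q_high=90):
    """Per-pixel percentile bounds of the relative pixel-size error.

    area_std: ground pixel areas from the standard orthorectified output;
    area_corr: the same areas after correcting to match the corner fires.
    Both arrays have shape (n_images, h, w) in the raw image frame.
    """
    area_std = np.asarray(area_std, dtype=float)
    area_corr = np.asarray(area_corr, dtype=float)
    rel = (area_std - area_corr) / area_corr
    return np.percentile(rel, q_low, axis=0), np.percentile(rel, q_high, axis=0)


def frp_with_bounds(frp_pixels, low, high):
    """Lower bound, nominal value, and upper bound of the total FRP."""
    frp_pixels = np.asarray(frp_pixels, dtype=float)
    return (
        float((frp_pixels * (1.0 + low)).sum()),
        float(frp_pixels.sum()),
        float((frp_pixels * (1.0 + high)).sum()),
    )
```

Taking percentiles over the time axis, rather than a single mean, gives bounds that capture how often each raw-frame position is affected by large pose-induced errors.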

Note that, as the fire scene is not a Lambertian emitter (e.g., plume absorption, flame tilt), completing the FRP error estimation would also require estimating the effect of the camera pose on the measured radiance. This is, however, beyond the scope of the present work.

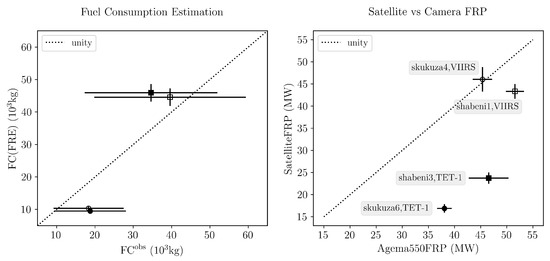

As stated in [48,49], the use of high-resolution IR fire observations would greatly contribute to revisiting the current assumptions made in fire emission estimates based on FRP measurements. FRP-based emission models used in atmospheric models (e.g., [50]) were partly designed from small-scale fire measurements [47], and not enough data are currently available to validate the impact of upscaling [15,33,51]. With only four burns, the KNP14 data set is too small to derive robust statistical conclusions. However, it provides insight into two questions relevant to the use of the FRP product for fire emission estimation: the relationship between FRE and Fuel Consumption (FC) (Figure 9, left panel), and the upscaling of FRP computation from helicopter- to satellite-borne observations (Figure 9, right panel).

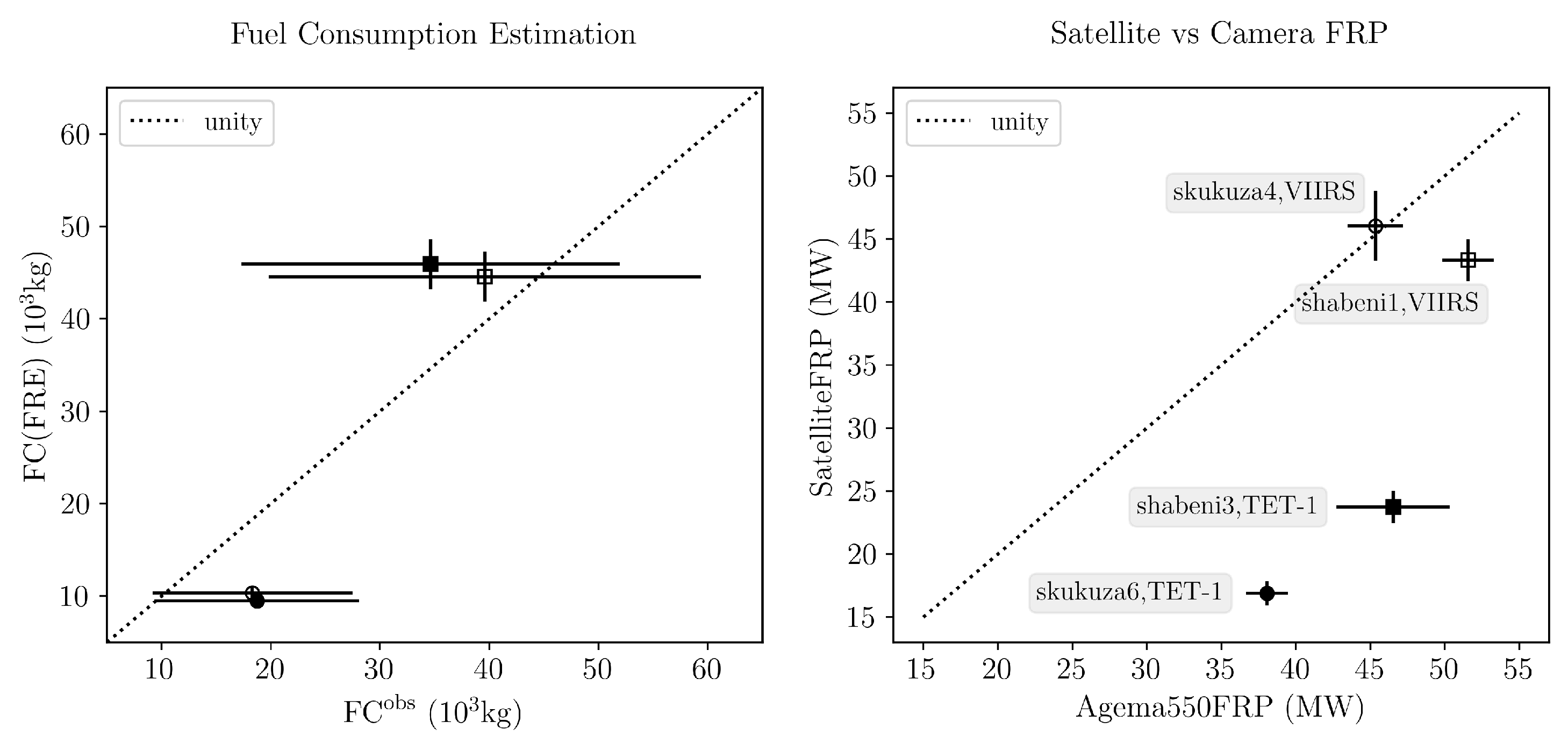

Figure 9.

(Left panel): Comparison between Fuel Consumption (FC) from in-situ measurements (, see Table 1) and FC estimated from Fire Radiative Energy () using the relationship of [47] for the four experimental burns of the KNP14 data set. (Right panel): Fire Radiative Power (FRP) comparison between satellite- and helicopter-borne images for the same four burns. The marker for each burn is identified in the right panel.

FC is calculated assuming full combustion of the grass, whose fuel load was measured using a limited number of pre-fire destructive samples, essentially taken on the plot edges. As the fuel load measurements () do not account for the spatial distribution of fuel across the burn plot (see for example the north-eastern area of Skukuza6 in the visible image presented in Table 1), is associated with a large error, estimated here to be at least 50%. More fires with better FC measurements are required to draw any firm conclusion. Despite this large error and the underestimation of FRE inherent to the Agema 550 temperature threshold, the comparison between and derived from the small-scale experimental estimation of [47] shows good agreement (see Figure 9, left panel).
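For reference, FRE is the time integral of FRP, and the FRE-to-fuel-consumption relationship of [47] is commonly quoted as a coefficient of about 0.368 kg MJ⁻¹. The sketch below assumes that coefficient and uses trapezoidal integration, which linearly interpolates FRP across gaps in the time series (such as the gaps of up to 10 s mentioned in Section 7.2).

```python
import numpy as np

BETA_KG_PER_MJ = 0.368  # assumed FRE-to-fuel-consumption coefficient


def fire_radiative_energy(t_s, frp_w):
    """FRE (MJ) by trapezoidal integration of an FRP time series (W) over time (s)."""
    t = np.asarray(t_s, dtype=float)
    p = np.asarray(frp_w, dtype=float)
    return float(np.sum(0.5 * (p[1:] + p[:-1]) * np.diff(t))) / 1e6


def fuel_consumption_from_fre(fre_mj, area_m2):
    """Fuel consumption per unit burned area (kg m-2) estimated from FRE (MJ)."""
    return BETA_KG_PER_MJ * fre_mj / area_m2


# Usage: a triangular 1 MW FRP pulse lasting 20 s releases 10 MJ.
fre = fire_radiative_energy([0.0, 10.0, 20.0], [0.0, 1e6, 0.0])
```

Any underestimation of FRP, for example from the Agema 550 detection threshold, propagates linearly into both FRE and the estimated fuel consumption.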

The space-borne FRP values of the KNP14 data set are computed using the MIR formulation of [47] (Equation (3)) applied to the 178-m TET-1 and the 750-m VIIRS data. The 375-m VIIRS data were not included in the analysis, as the sensor saturated for the two burns it overpassed (Skukuza4 and Shabeni1). For our four overpasses, in order to limit error from the estimation of the background radiance ( in Equation (3)), the fire pixel mask and were manually estimated. The uncertainty of the surface FRP is therefore essentially controlled by the transmittance error. The error bars of the satellite FRP values in Figure 9 are computed using transmittances () associated with different atmospheric profiles. The Modtranv5 radiative transfer model [52] is used to compute (), and atmospheric profiles representative of the day of the burn are set by (a) varying the ambient CO2 concentration from 360 to 420 ppm and (b) setting the water vapor profile to either the standard mid-latitude summer profile or a profile extracted from the European Centre for Medium-range Weather Forecasts analysis data. The error bars reported for the Agema 550 FRP values in Figure 9 are estimated from the geometrical error mentioned above plus a potential time misregistration of 3 s between the Agema and the satellites. KNP14 cameras were manually time-synchronized using a handheld GPS unit, so a time shift of 3 s is a conservative error range. The comparison between helicopter- and satellite-borne FRP (Figure 9, right panel) shows good agreement between the VIIRS sensor and the Agema 550, even in the case of Skukuza4, which comes with a large VIIRS view angle (>). The TET-1 sensor, however, shows a clear underestimation of FRP by almost a factor of 2. This disagrees with the work of [53], which reported concordance between TET-1 and VIIRS using the same FRP formulation and synchronized overpasses of the same fire. As the TET-1 FRP seems to scale properly between Skukuza6 and Shabeni3, this does not seem to be a saturation problem. The KNP14 fires took place in the early days of TET-1 operation, and a bias might have been present and corrected later; the oldest fire in [53] dates from 2016. Using only the four fires of the KNP14 data set, it is difficult to draw any conclusion on the upscaling of FRP measurements. More concurrent observations covering a wider range of satellite view angles would be necessary. The methodology presented here nevertheless shows that it can provide FRP calculations for burns of several hectares with high geometrical precision, leading to a geometrical FRP error lower than 8%.

8. Conclusions

In this work, we presented a methodology to orthorectify helicopter-borne observations of savannah experimental burns. A suite of algorithms was designed to map high-resolution radiance measurements collected with handheld LWIR and MIR thermal cameras. It was successfully applied to four burn plots ranging in area from four to eight hectares, resulting in explicit maps at a spatial resolution of 1 m (50 cm for the smallest burn plot) and at a frequency close to the imager acquisition frequency. Orthorectification accuracy is estimated to be within the range of errors associated with the parallax effects induced by the moving camera and flickering flames. The main requirements of the methodology are the following: the camera points towards the plot along a near-constant direction (no spinning around the plot), the background scene around the plot is kept in the field of view as much as possible (no zooming inside the plot area), and the plot can be approximated as a planar surface. If these requirements are satisfied, it is possible to orthorectify images without fixed ground control points during the whole fire duration. The iterative structure of the algorithm ensures alignment with the first image, which is orthorectified manually.

The present methodology offers a way to map fire behavior, such as energy release maps or front merging, at a scale and level of detail not yet present in the literature. This provides data that can contribute to current open questions in fire emission and fire model development. In this sense, the comparison between helicopter- and space-borne fire observations points to the need for a larger data set, to better sample the range of satellite observation angles and better validate the upscaling of FRP measurements.

Collecting detailed information on fire front propagation would also contribute to the further development of data assimilation for fire growth modeling [54,55]. The impact of the assimilation time interval on data assimilation performance is currently an open question, which requires access to data with very high temporal resolution. Application of the methodology to new fire scenarios is under way, and is eased by the algorithm parameter definitions presented in this work and the experience gained on the KNP14 data set. In particular, application to fire observations collected from a UAV quadcopter is being investigated. Such a hovering platform would allow a lower view angle and would increase flight/camera pose stability, improving the performance of the algorithms. Another potential development is the improvement of visible image orthorectification, which requires a plume/smoke mask in order to run an optimization similar to that applied to the LWIR images in Algorithm 2. A visible camera with its IR filter removed has the potential to map ROS and flame depth at very low cost for fires with a weak plume.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/rs13234913/s1.

Author Contributions

Conceptualization, R.P.; Data curation, G.R., O.F., E.L., W.S., B.M. and N.G.; Formal analysis, R.P. and J.-B.F.; Methodology, R.P.; Resources, M.J.W., W.E.M., M.C.R. and J.-B.F.; Supervision, M.J.W. and W.E.M.; Visualization, R.P.; Writing—original draft, R.P.; Writing—review & editing, M.J.W., W.E.M. and M.C.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie, grant H2020-MSCA-IF-2019-892463. Parts of this work were also supported by the NERC, grant NE/M017729/1 made by the UK’s Natural Environment Research Council. The KNP14 Fieldwork campaign was also supported by the Ministry of Economy of Bavaria (Az. 20-08-3410.2-05-2012) and a START grant (www.start.org, last access date: 24 November 2021).

Acknowledgments

The authors would like to dedicate this paper to the memory of our co-author Eckehard Lorenz, principal investigator of the FireBird mission, who sadly passed away before the manuscript could be completed. The authors would also like to thank Anja Hoffman, the South African National Parks (SANParks) and the fire team from Kruger National Park for the organization of the experimental burns as well as the various staff and students of the Department of Geography, King’s College London, and other visitors, who graciously assisted with the different aspects of the fieldwork. Thanks also to the Bibliothèque Francois Mitterrand in Paris for opening its door to academics forced to pause.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| IR | Infra Red |
| BT | Brightness Temperature |
| LWIR | Long Wave Infra Red |
| MIR | Middle Infra Red |
| VIS | Visible |
| GCP | Ground Control Point |
| SSIM | Structural Similarity Index Metric |
| ROS | Rate Of Spread |
| FI | Fire Intensity |
| FRP | Fire Radiative Power |
| FRE | Fire Radiative Energy |

References

1. Prichard, S.; Larkin, N.S.; Ottmar, R.; French, N.H.; Baker, K.; Brown, T.; Clements, C.; Dickinson, M.; Hudak, A.; Kochanski, A.; et al. The Fire and Smoke Model Evaluation Experiment—A Plan for Integrated, Large Fire–Atmosphere Field Campaigns. Atmosphere 2019, 10, 66.

2. Andela, N.; Morton, D.C.; Giglio, L.; Chen, Y.; Van Der Werf, G.R.; Kasibhatla, P.S.; DeFries, R.S.; Collatz, G.J.; Hantson, S.; Kloster, S.; et al. A human-driven decline in global burned area. Science 2017, 356, 1356–1362.

3. Roberts, G.; Wooster, M.J. Global impact of landscape fire emissions on surface level PM2.5 concentrations, air quality exposure and population mortality. Atmos. Environ. 2021, 252, 118210.

4. Doerr, S.H.; Santín, C. Global trends in wildfire and its impacts: Perceptions versus realities in a changing world. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 2016, 371.

5. Abram, N.J.; Henley, B.J.; Sen Gupta, A.; Lippmann, T.J.R.; Clarke, H.; Dowdy, A.J.; Sharples, J.J.; Nolan, R.H.; Zhang, T.; Wooster, M.J.; et al. Connections of climate change and variability to large and extreme forest fires in southeast Australia. Commun. Earth Environ. 2021, 2, 1–17.

6. Singleton, M.P.; Thode, A.E.; Sánchez Meador, A.J.; Iniguez, J.M. Increasing trends in high-severity fire in the southwestern USA from 1984 to 2015. For. Ecol. Manag. 2019, 433, 709–719.

7. Kochanski, A.K.; Jenkins, M.A.; Yedinak, K.; Mandel, J.; Beezley, J.; Lamb, B. Toward an integrated system for fire, smoke and air quality simulations. Int. J. Wildland Fire 2016, 25, 534.

8. Coen, J.L. Modeling Wildland Fires: A Description of the Coupled Atmosphere-Wildland Fire Environment Model (CAWFE); Technical Report, NCAR Technical Note NCAR/TN-500+STR; NCAR: Boulder, CO, USA, 2013.

9. Mandel, J.; Beezley, J.D.; Kochanski, A.K. Coupled atmosphere-wildland fire modeling with WRF 3.3 and SFIRE. Geosci. Model Dev. 2011, 4, 591–610.

10. Kochanski, A.K.; Jenkins, M.A.; Mandel, J.; Beezley, J.D.; Krueger, S.K. Real time simulation of 2007 Santa Ana fires. For. Ecol. Manag. 2013, 294, 136–149.

11. Filippi, J.B.; Bosseur, F.; Pialat, X.; Santoni, P.A.; Strada, S.; Mari, C. Simulation of Coupled Fire/Atmosphere Interaction with the MesoNH-ForeFire Models. J. Combust. 2011, 2011, 1–13.

12. Filippi, J.B.; Bosseur, F.; Mari, C.; Lac, C. Simulation of a Large Wildfire in a Coupled Fire-Atmosphere Model. Atmosphere 2018, 9, 218.

13. Liu, Y.; Kochanski, A.; Baker, K.R.; Mell, W.; Linn, R.; Paugam, R.; Mandel, J.; Fournier, A.; Jenkins, M.A.; Goodrick, S.; et al. Fire behaviour and smoke modelling: Model improvement and measurement needs for next-generation smoke research and forecasting systems. Int. J. Wildland Fire 2019, 28, 570.

14. Ottmar, R.D.; Hiers, J.K.; Butler, B.W.; Clements, C.B.; Dickinson, M.B.; Hudak, A.T.; O’Brien, J.J.; Potter, B.E.; Rowell, E.M.; Strand, T.M.; et al. Measurements, datasets and preliminary results from the RxCADRE project—2008, 2011 and 2012. Int. J. Wildland Fire 2016, 25, 1.

15. Hudak, A.T.; Dickinson, M.B.; Bright, B.C.; Kremens, R.L.; Loudermilk, E.L.; O’Brien, J.J.; Hornsby, B.S.; Ottmar, R.D. Measurements relating fire radiative energy density and surface fuel consumption—RxCADRE 2011 and 2012. Int. J. Wildland Fire 2016, 25, 25–37.

16. Butler, B.; Teske, C.; Jimenez, D.; O’Brien, J.; Sopko, P.; Wold, C.; Vosburgh, M.; Hornsby, B.; Loudermilk, E. Observations of energy transport and rate of spreads from low-intensity fires in longleaf pine habitat—RxCADRE 2012. Int. J. Wildland Fire 2016, 25, 76–89.

17. Mueller, E.V.; Skowronski, N.; Clark, K.; Gallagher, M.; Kremens, R.; Thomas, J.C.; El Houssami, M.; Filkov, A.; Hadden, R.M.; Mell, W.; et al. Utilization of remote sensing techniques for the quantification of fire behavior in two pine stands. Fire Saf. J. 2017, 91, 845–854.

18. Clements, C.B.; Kochanski, A.K.; Seto, D.; Davis, B.; Camacho, C.; Lareau, N.P.; Contezac, J.; Restaino, J.; Heilman, W.E.; Krueger, S.K.; et al. The FireFlux II experiment: A model-guided field experiment to improve understanding of fire–atmosphere interactions and fire spread. Int. J. Wildland Fire 2019, 28, 308.

19. McRae, D.J.; Jin, J.Z.; Conard, S.G.; Sukhinin, A.I.; Ivanova, G.A.; Blake, T.W. Infrared characterization of fine-scale variability in behavior of boreal forest fires. Can. J. For. Res. 2005, 35, 2194–2206.

20. Pastor, E.; Planas, E. Infrared imagery on wildfire research. Some examples of sound capabilities and applications. In Proceedings of the 2012 3rd International Conference on Image Processing Theory, Tools and Applications, IPTA 2012, Istanbul, Turkey, 15–18 October 2012; pp. 31–36.

21. Paugam, R.; Wooster, M.J.; Roberts, G. Use of handheld thermal imager data for airborne mapping of fire radiative power and energy and flame front rate of spread. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3385–3399.

22. Zajkowski, T.J.; Dickinson, M.B.; Hiers, J.K.; Holley, W.; Williams, B.W.; Paxton, A.; Martinez, O.; Walker, G.W. Evaluation and use of remotely piloted aircraft systems for operations and research—RxCADRE 2012. Int. J. Wildland Fire 2016, 25, 114.

23. Allison, R.S.; Johnston, J.M.; Craig, G.; Jennings, S. Airborne Optical and Thermal Remote Sensing for Wildfire Detection and Monitoring. Sensors 2016, 16, 1310.

24. Moran, C.J.; Seielstad, C.A.; Cunningham, M.R.; Hoff, V.; Parsons, R.A.; Queen, L.; Sauerbrey, K.; Wallace, T. Deriving Fire Behavior Metrics from UAS Imagery. Fire 2019, 2, 36.

25. Pérez, Y.; Pastor, E.; Planas, E.; Plucinski, M.; Gould, J. Computing forest fires aerial suppression effectiveness by IR monitoring. Fire Saf. J. 2011, 46, 2–8.

26. Rios, O.; Pastor, E.; Valero, M.M.; Planas, E. Short-term fire front spread prediction using inverse modelling and airborne infrared images. Int. J. Wildland Fire 2016, 25, 1033.

27. Pastor, E.; Àgueda, A.; Andrade-Cetto, J.; Muñoz, M.; Pérez, Y.; Planas, E. Computing the rate of spread of linear flame fronts by thermal image processing. Fire Saf. J. 2006, 41, 569–579.

28. Valero, M.M.; Verstockt, S.; Butler, B.; Jimenez, D.; Rios, O.; Mata, C.; Queen, L.; Pastor, E.; Planas, E. Thermal Infrared Video Stabilization for Aerial Monitoring of Active Wildfires. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2817–2832.

29. Biggs, R.; Biggs, H.C.; Dunne, T.T.; Govender, N.; Potgieter, A.L. Experimental burn plot trial in the Kruger National Park: History, experimental design and suggestions for data analysis. Koedoe 2003, 46, 1–15.

30. Farr, T.G.; Rosen, P.A.; Caro, E.; Crippen, R.; Duren, R.; Hensley, S.; Kobrick, M.; Paller, M.; Rodriguez, E.; Roth, L.; et al. The shuttle radar topography mission. Rev. Geophys. 2007, 45.

31. Govender, N.; Trollope, W.S.W.; van Wilgen, B.W. The effect of fire season, fire frequency, rainfall and management on fire intensities in savanna vegetation in South Africa. J. Appl. Ecol. 2006, 43, 748–758.

32. Disney, M.; Lewis, P.; Gomez-Dans, J.; Roy, D.; Wooster, M.; Lajas, D. 3D radiative transfer modelling of fire impacts on a two-layer savanna system. Remote Sens. Environ. 2011, 115, 1866–1881.

33. Wooster, M.J.; Freeborn, P.H.; Archibald, S.; Oppenheimer, C.; Roberts, G.J.; Smith, T.E.; Govender, N.; Burton, M.; Palumbo, I. Field determination of biomass burning emission ratios and factors via open-path FTIR spectroscopy and fire radiative power assessment: Headfire, backfire and residual smouldering combustion in African savannahs. Atmos. Chem. Phys. 2011, 11, 11591–11615.

34. Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I.A. The New VIIRS 375m active fire detection data product: Algorithm description and initial assessment. Remote Sens. Environ. 2014, 143, 85–96.

35. Fischer, C.; Klein, D.; Kerr, G.; Stein, E.; Lorenz, E.; Frauenberger, O.; Borg, E. Data Validation and Case Studies using the TET-1 Thermal Infrared Satellite System. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-7/W3, 1177–1182.

36. Evangelidis, G.D.; Psarakis, E.Z. Parametric image alignment using enhanced correlation coefficient maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1858–1865.

37. Bovik, A.; Wang, Z.; Sheikh, H. Structural Similarity Based Image Quality Assessment. In Signal Processing and Communications; CRC Press: Boca Raton, FL, USA, 2005; Chapter 7; pp. 225–241.

38. Szeliski, R. Computer Vision; Springer: London, UK, 2011; pp. 43–1–43–23.

39. Evangelidis, G.D. IAT: A Matlab Toolbox for Image Alignment. 2013. Available online: https://sites.google.com/site/imagealignment/ (accessed on 24 November 2021).

40. Bouguet, J.Y. Pyramidal Implementation of the Lucas Kanade Feature Tracker Description of the Algorithm; Technical Report; Microprocessor Research Labs, Intel Corporation: Santa Clara, CA, USA, 2001.

41. Valero, M.M.; Verstockt, S.; Mata, C.; Jimenez, D.; Queen, L.; Rios, O.; Pastor, E.; Planas, E. Image Similarity Metrics Suitable for Infrared Video Stabilization during Active Wildfire Monitoring: A Comparative Analysis. Remote Sens. 2020, 12, 540.

42. Wooster, M.J.; Roberts, G.; Smith, A.M.S.; Johnston, J.; Freeborn, P.; Amici, S.; Hudak, A.T. Thermal Remote Sensing of Active Vegetation Fires and Biomass Burning Events. In Remote Sensing and Digital Image Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2013; Volume 17, pp. 347–390.

43. Hampel, F.R. The influence curve and its role in robust estimation. J. Am. Stat. Assoc. 1974, 69, 383–393.

44. Mell, W.E.; Linn, R.R. FIRETEC and WFDS Modeling of Fire Behavior and Smoke in Support of FASMEE—Final Report to the Joint Fire Science Program. 2017. Available online: https://www.fs.usda.gov/pnw/projects/firetec-and-wfds-modeling-fire-behavior-and-smoke-support-fasmee (accessed on 24 November 2021).

45. Ciullo, V.; Rossi, L.; Pieri, A. Experimental Fire Measurement with UAV Multimodal Stereovision. Remote Sens. 2020, 12, 3546.

46. Valero, M.M.; Rios, O.; Planas, E.; Pastor, E. Automated location of active fire perimeters in aerial infrared imaging using unsupervised edge detectors. Int. J. Wildland Fire 2018, 27, 241–256.

47. Wooster, M.J.; Roberts, G.; Perry, G.L.W.; Kaufman, Y.J. Retrieval of biomass combustion rates and totals from fire radiative power observations: FRP derivation and calibration relationships between biomass consumption and fire radiative energy release. J. Geophys. Res. 2005, 110, 24311.

48. Kremens, R.L.; Smith, A.M.; Dickinson, M.B. Fire Metrology: Current and Future Directions in Physics-Based Measurements. Fire Ecol. 2010, 6, 13–35.

49. Dickinson, M.B.; Hudak, A.T.; Zajkowski, T.; Loudermilk, E.L.; Schroeder, W.; Ellison, L.; Kremens, R.L.; Holley, W.; Martinez, O.; Paxton, A.; et al. Measuring radiant emissions from entire prescribed fires with ground, airborne and satellite sensors—RxCADRE 2012. Int. J. Wildland Fire 2016, 25, 48–61.

50. Kaiser, J.W.; Heil, A.; Andreae, M.O.; Benedetti, A.; Chubarova, N.; Jones, L.; Morcrette, J.J.; Razinger, M.; Schultz, M.G.; Suttie, M.; et al. Biomass burning emissions estimated with a global fire assimilation system based on observed fire radiative power. Biogeosciences 2012, 9, 527–554.

51. Schroeder, W.; Ellicott, E.; Ichoku, C.; Ellison, L.; Dickinson, M.B.; Ottmar, R.D.; Clements, C.; Hall, D.; Ambrosia, V.; Kremens, R. Integrated active fire retrievals and biomass burning emissions using complementary near-coincident ground, airborne and spaceborne sensor data. Remote Sens. Environ. 2014, 140, 719–730.

52. Berk, A.; Anderson, G.P.; Acharya, P.K.; Bernstein, L.S.; Muratov, L.; Lee, J.; Fox, M.; Adler-Golden, S.M.; Chetwynd, J.H., Jr.; Hoke, M.L.; et al. MODTRAN5: 2006 update. Proc. SPIE 2006, 6233, 62331F.

53. Nolde, M.; Plank, S.; Richter, R.; Klein, D.; Riedlinger, T. The DLR FireBIRD Small Satellite Mission: Evaluation of Infrared Data for Wildfire Assessment. Remote Sens. 2021, 13, 1459.

54. Rochoux, M.C.; Ricci, S.; Lucor, D.; Cuenot, B.; Trouvé, A. Towards predictive data-driven simulations of wildfire spread—Part I: Reduced-cost ensemble Kalman filter based on a polynomial chaos surrogate model for parameter estimation. Nat. Hazards Earth Syst. Sci. 2014, 14, 2951–2973.

55. Rochoux, M.C.; Emery, C.; Ricci, S.; Cuenot, B.; Trouvé, A. Towards predictive data-driven simulations of wildfire spread—Part II: Ensemble Kalman Filter for the state estimation of a front-tracking simulator of wildfire spread. Nat. Hazards Earth Syst. Sci. 2015, 15, 1721–1739.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).