1. Introduction

Synthetic aperture radar (SAR) has been widely used in various fields due to its ability to acquire high-resolution images nearly all the time and in all weather conditions. With the development of high-resolution spaceborne SAR, high-resolution SAR data are becoming more abundant and easier to acquire. As one of the significant ocean applications of SAR images, ship detection plays an important role in shipwreck rescues, maritime traffic safety, and so on.

Traditional SAR image target detection methods can be divided into four main types: detection algorithms based on statistical features, saliency, shape features, and texture features [1,2]. Among these detection methods, the constant false alarm rate (CFAR) detection algorithm and its improved variants [3,4] are the most widely studied and applied. However, this type of algorithm adapts poorly, and changes in the background often have a great impact on the detection results.
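To make the CFAR idea concrete, the following is a minimal one-dimensional cell-averaging CFAR sketch in Python. It is an illustration only and not the method of [3,4]: the function name `ca_cfar_1d`, the window sizes, the false alarm probability, and the assumption of exponentially distributed clutter power are all illustrative choices, and a practical SAR detector would use a two-dimensional window.

```python
def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1-D sequence of power samples.

    num_train and num_guard are counts per side of the cell under test.
    Returns a list of booleans, True where a detection is declared.
    Assumption: clutter power is exponentially distributed (square-law detector).
    """
    n_total = 2 * num_train  # number of training cells in the noise estimate
    # Threshold multiplier that yields the requested false alarm probability.
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)
    half = num_train + num_guard
    detections = [False] * len(power)
    # Cells too close to either end lack a full window and are left as False.
    for i in range(half, len(power) - half):
        leading = power[i - half : i - num_guard]
        trailing = power[i + num_guard + 1 : i + half + 1]
        noise = (sum(leading) + sum(trailing)) / n_total
        detections[i] = power[i] > alpha * noise
    return detections


# Usage: one strong scatterer in otherwise uniform clutter.
samples = [1.0] * 40
samples[20] = 100.0
hits = ca_cfar_1d(samples)
print([i for i, hit in enumerate(hits) if hit])  # [20]
```

Because the threshold scales with the mean of the training cells, strong returns inside the window (for example, from land) raise the threshold around a nearby target, which is one way to see why background changes affect the result.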

In recent years, convolutional neural networks have achieved great success in the field of computer vision through their powerful capability for automatic feature extraction [5,6,7,8,9]. The introduction of target detection technology based on convolutional neural networks has strongly promoted the development of SAR target detection. However, due to the inherent imaging mechanism of SAR, SAR image target detection still faces many challenges. For example, the speckle noise in SAR images affects the performance of the detector, and the angular scintillation effect of radar scattering makes detection more difficult. Besides, the targets to be detected vary in scale (some are small, occupying only a few pixels), and background and clutter interference further degrade the performance of the detector.

At present, research into SAR ship detection methods based on deep learning has made great progress. In 2017, target detection in SAR images based on deep learning began to become a hotspot [10,11,12,13]. Since 2018, many SAR target detection algorithms have used FPN [7] or its variants [14,15,16] for multiscale fusion to solve multiscale, especially small, target detection problems [17,18,19,20]. Meanwhile, the introduction of the attention mechanism has effectively improved detection performance [18,19,20,21]. In addition, much research has looked into improving the detection speed to achieve real-time detection while maintaining detection accuracy [19,22,23]. However, some problems remain. Currently, ship detection performance in offshore scenes is satisfactory, while there is still much room for improvement in inshore scenes. For ships in port, the buildings on the shore appear very similar to ships in the SAR image, which creates great interference in the detection of nearshore ships. In particular, ships usually have a large length-to-width ratio. The horizontal rectangular box that is usually used therefore makes the detection area contain more ground-object interference, which degrades detection. Cui et al. conducted comparative experiments on the SSDD dataset, and the detection performance on nearshore ships was much lower than that on offshore ships [18]. Sun et al. conducted related experiments on the AIR-SARShip-1.0 dataset, which they released themselves [24]. The experimental results indicated that a large gap remains before the detection of inshore ship targets becomes practical.

In order to detect targets with a large length-to-width ratio, rotating frame detection networks have been proposed. Rotating frame detection was first applied in the field of text detection, in methods such as RRPN, EAST, and R2CNN [25,26,27]. Subsequently, rotating frame detection was introduced into the field of optical remote sensing. For objects that are densely arranged and arbitrarily oriented, the introduction of the rotatable box can effectively improve detection performance [28]. Recently, the rotatable box was introduced into the field of SAR target detection. Jizhou Wang et al. conducted simultaneous ship detection and orientation estimation in SAR images based on the attention module and angle regression [29]. An et al. proposed a one-stage DRBOX-v2, which improved the encoding scheme of the rotatable box [30]. Chen Chen et al. proposed a multiscale adaptive recalibration network (MSARN) to detect multiscale and arbitrarily oriented ships in complex scenarios and modified the rotated non-maximum suppression (RNMS) method to solve the problem of the large overlap ratio of the detection boxes [31]. Shiqi Chen et al. proposed a rotated refined feature alignment detector (R2FA-Det), which balances the quality of bounding box prediction against the high speed of the one-stage framework [32]. However, the datasets used by these researchers either contained a single category or were self-labeled without a unified standard, which makes effective comparison difficult.

Deep learning is data-driven, and the quantity and quality of datasets have a great impact on a model’s performance. However, it is very difficult to label SAR image targets, and this has become one of the limitations on the development of SAR target detection and recognition based on deep learning. At present, researchers have released several public SAR target detection datasets. Among them, SSDD [16] is a SAR ship detection dataset released in 2017, which is currently widely used. However, the resolution of the SSDD dataset is not very high. As the acquisition of high-resolution SAR images has become easier, the SSDD is no longer suitable for ship detection under high-resolution conditions. The SAR-Ship-Dataset [33] is a dataset with many slices released in 2019. Nevertheless, the size of a single slice is only 256 × 256, so the features contained in a single slice are very limited. Consequently, some datasets have been released recently, such as AIR-SARShip-1.0 [24], HRSID [34], and LS-SSDD-v1.0 [35]. These datasets have a higher resolution, and the image size in the datasets is also larger. However, these datasets only contain one category, namely ships, so they do not support multicategory target detection research of the kind that is possible with the Pascal VOC [36] and Microsoft COCO [37] target detection datasets. In addition, most of these datasets are labeled with horizontal boxes. As ships are generally arbitrarily oriented, horizontal boxes inevitably include strong background interference.
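The effect of horizontal boxes on background content can be illustrated with a small calculation. The sketch below, in Python, treats a ship as an ideal rectangle of a given length and width (an assumption made for illustration; the function name and the example dimensions are hypothetical) and computes the fraction of its enclosing horizontal box that the ship itself covers.

```python
import math


def horizontal_box_fill_ratio(length, width, angle_deg):
    """Fraction of the enclosing horizontal box covered by a rotated rectangle.

    The rectangle (length x width) is rotated by angle_deg; its axis-aligned
    bounding box has sides L|cos| + W|sin| and L|sin| + W|cos|.
    """
    theta = math.radians(angle_deg)
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    box_w = length * c + width * s
    box_h = length * s + width * c
    return (length * width) / (box_w * box_h)


# Usage: a hypothetical 100 x 20 pixel ship.
print(round(horizontal_box_fill_ratio(100, 20, 0), 3))   # 1.0
print(round(horizontal_box_fill_ratio(100, 20, 45), 3))  # 0.278
```

Under these assumptions, an elongated target at 45° fills less than a third of its horizontal box, and the rest is background.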

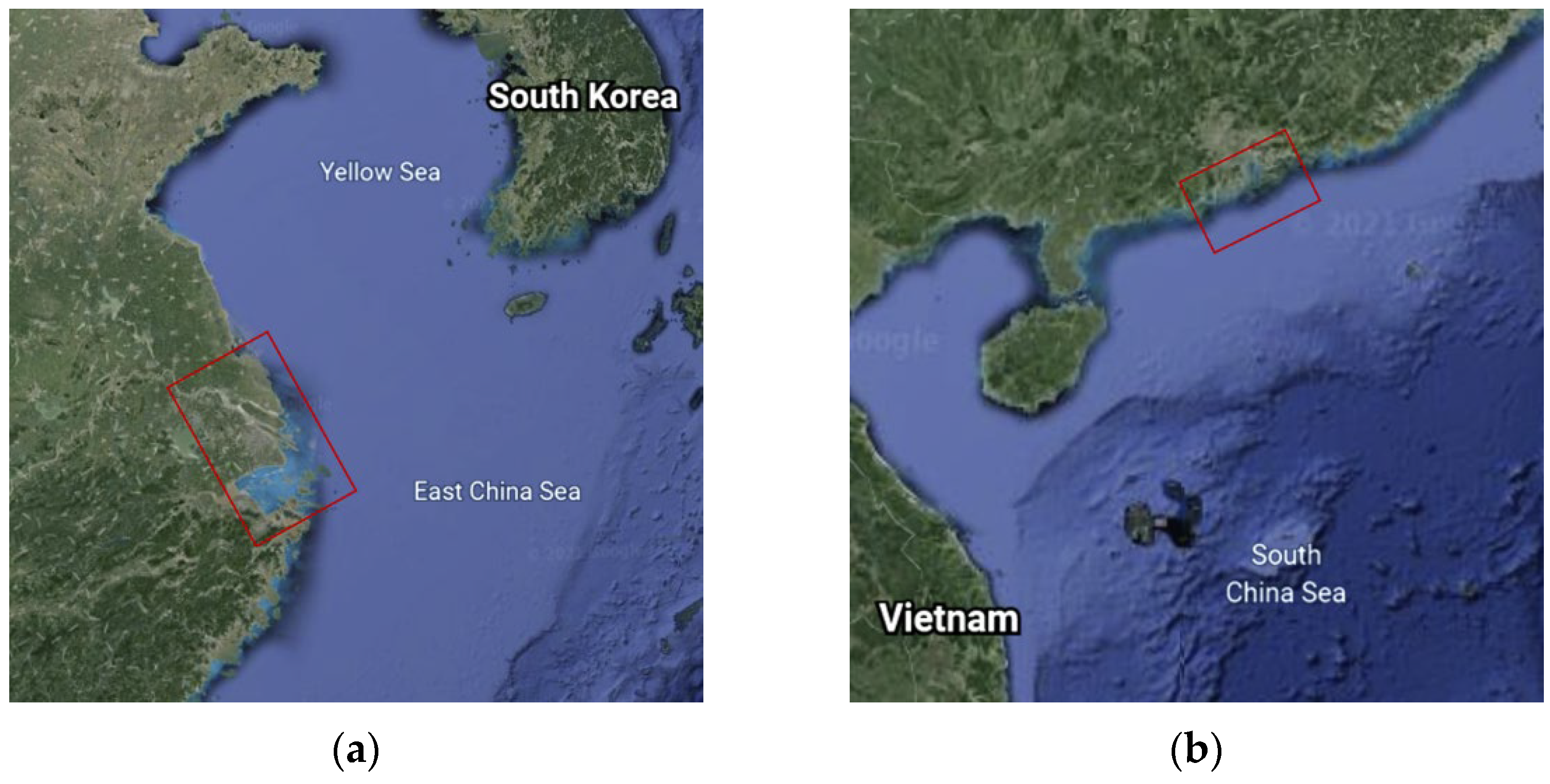

Based on the above considerations, we released the SRSDD-v1.0 dataset. Compared with other existing SAR ship datasets, the unique advantages of our SRSDD-v1.0 dataset are fourfold.

All data in the dataset are from GF-3 Spotlight (SL) mode with a 1-m resolution, and each image has 1024 × 1024 pixels, which is relatively large and contains more abundant information.

The data of inshore scenes occupy a proportion of 63.1%, with complex backgrounds and much interference, making detection more challenging.

We used the rotatable box to label the target, which is helpful for detecting dense targets and effectively excluding interference.

Compared with other existing datasets, the dataset contains multiple categories, with a total of 2884 ships in six categories.
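As an illustration of what a rotatable box label encodes, the sketch below converts a box given as a center, a size, and an angle into its four corner points. The parameterization (cx, cy, w, h, angle), the counter-clockwise angle convention, and the function names are assumptions for illustration; they are not necessarily the annotation format used in SRSDD-v1.0.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Return the four corners of a rotated box as (x, y) tuples.

    Assumed convention: (cx, cy) is the box center, w and h are the side
    lengths, and angle_deg rotates the box counter-clockwise about its center
    in a coordinate system with x to the right and y upward.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    offsets = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    corners = []
    for dx, dy in offsets:
        # Rotate each corner offset, then translate to the box center.
        x = cx + dx * cos_t - dy * sin_t
        y = cy + dx * sin_t + dy * cos_t
        corners.append((x, y))
    return corners


def polygon_area(points):
    """Shoelace formula; used here to check that rotation preserves area."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# Usage: a hypothetical 100 x 20 box centered at (512, 512), rotated by 30 degrees.
corners = rotated_box_corners(512, 512, 100, 20, 30)
print(corners)
print(polygon_area(corners))
```

The rotated box keeps the area of the target rectangle at any angle, whereas the horizontal box that encloses the same corners grows as the target rotates away from the image axes.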

4. Discussion

As is well known, many factors influence detection performance. In the experiments, background interference was a relatively large problem. When there is land in the background, some buildings or mountains on the land usually have similar characteristics to the targets, which creates great difficulties in ship detection. In addition, it is generally difficult to detect ships in shipyards, and the surrounding building structures cause great interference.

As seen from the experimental results, the two-stage detection algorithms performed better on this dataset than the single-stage and anchor-free detectors. In general, it is important to make the best trade-off when choosing a detector. In the experiments, Oriented RCNN achieved the best mAP on the dataset and an acceptable detection speed at the same time.

Given that the dataset is challenging, the detection algorithms inevitably produce some missed detections, false alarms, and false detections. We give some examples in Figure 19 for analysis. As depicted in Figure 19, the left column shows the ground truth while the right column shows the actual detections. As for the false alarm in Figure 19b, the shape of the wharf is very similar to that of a ship, which created interference and caused the false alarm. With respect to the false detections in Figure 19d, some fishing boats were incorrectly detected as bulk cargo ships because there were no obvious distinguishing features between them. As for the missed ship on the left in Figure 19f, the scattering characteristics of the wharf are mixed with those of the ship, causing the missed detection. As for the missed ship on the right, it can be inferred that the mixed scattering characteristics of the adjacent ships interfered with the detection, which caused the missed detection.

We know that there are still some shortcomings in this dataset. For example, the amount of data is not very large, and the categories are somewhat imbalanced. However, for some categories with obvious characteristics, the detection performance is still satisfactory even though the number of samples is small. The experimental results of multiple models also support this point.