Reference Measurements in Developing UAV Systems for Detecting Pests, Weeds, and Diseases

Abstract

1. Introduction

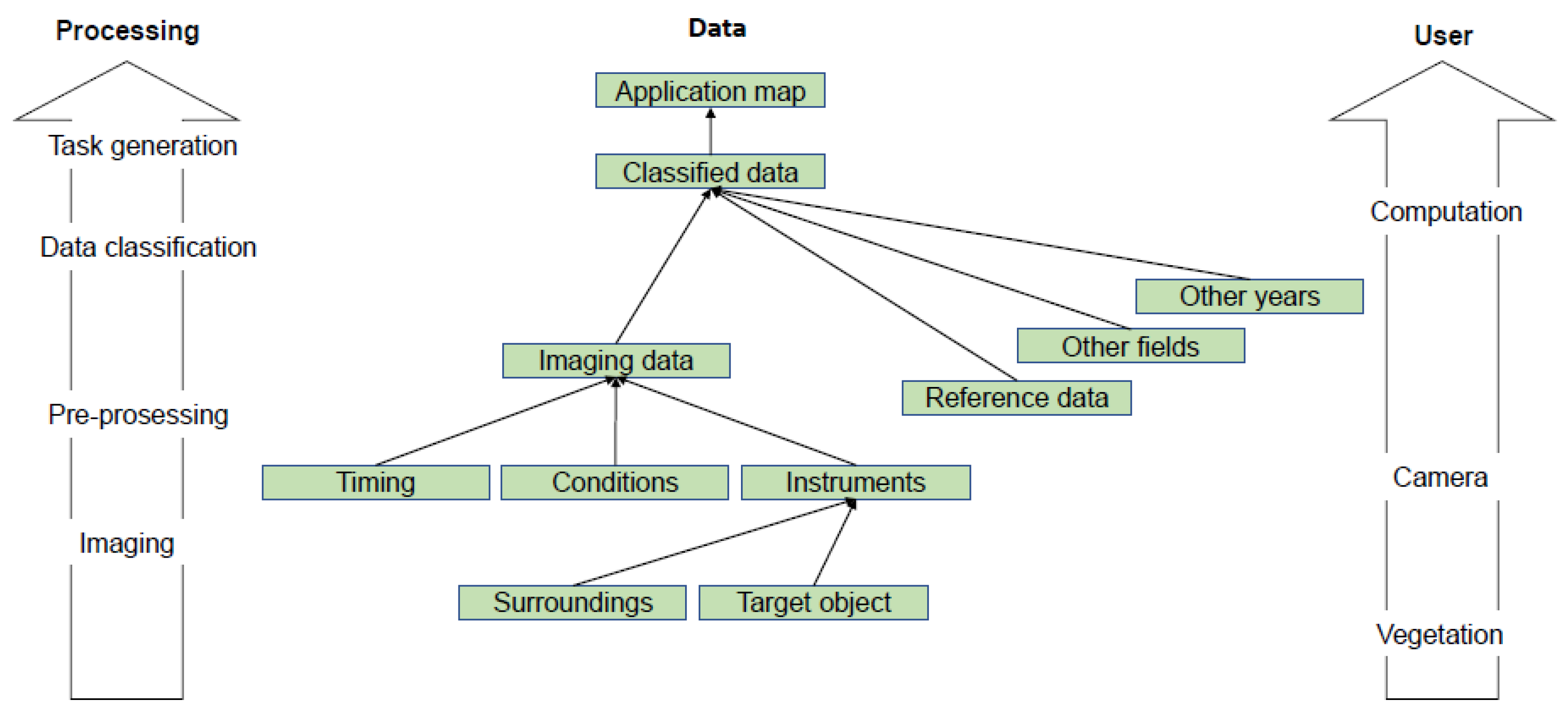

2. Methodology

- What was the research topic, and what were the studied crops and the precision farming scenario that the research targeted?

- What were the tools and parameters for the imaging of the weeds/pests/diseases?

- What reference data were applied, and how were they used?

- How was the operational timing presented?

- What processing and analysis methods were used, including resampling of the data to different resolutions?

- What general procedures can be derived for planning an imaging campaign?

- How did these methods differ from those used in other agricultural imaging applications?
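The resampling question above concerns how detection performance changes when the same scene is available only at a coarser resolution. One generic way to emulate a coarser ground sampling distance (GSD) from existing imagery is to average non-overlapping pixel blocks, so that an integer factor k turns a GSD of g into k·g. The Python sketch below does this for a single band. It is an illustrative assumption, not necessarily the resampling method used in the reviewed studies, and the function names `block_average` and `resampled_gsd` are hypothetical.

```python
from typing import List

Band = List[List[float]]


def block_average(band: Band, factor: int) -> Band:
    """Downsample one image band by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are discarded.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    out_rows = len(band) // factor
    out_cols = len(band[0]) // factor if band else 0
    result: Band = []
    for r in range(out_rows):
        row: List[float] = []
        for c in range(out_cols):
            # Collect every input pixel that falls inside this output pixel.
            block = [
                band[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


def resampled_gsd(gsd_cm: float, factor: int) -> float:
    """GSD after block averaging: each output pixel covers factor x factor input pixels."""
    return gsd_cm * factor


band = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(block_average(band, 2))   # [[3.5, 5.5], [11.5, 13.5]]
print(resampled_gsd(1.5, 4))    # 6.0
```

Block averaging mimics a larger pixel footprint, but not other effects of flying higher, such as changed viewing geometry, so results on resampled data may differ from imagery actually acquired at the coarser GSD.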

3. Reference Measurements

3.1. Study Topics

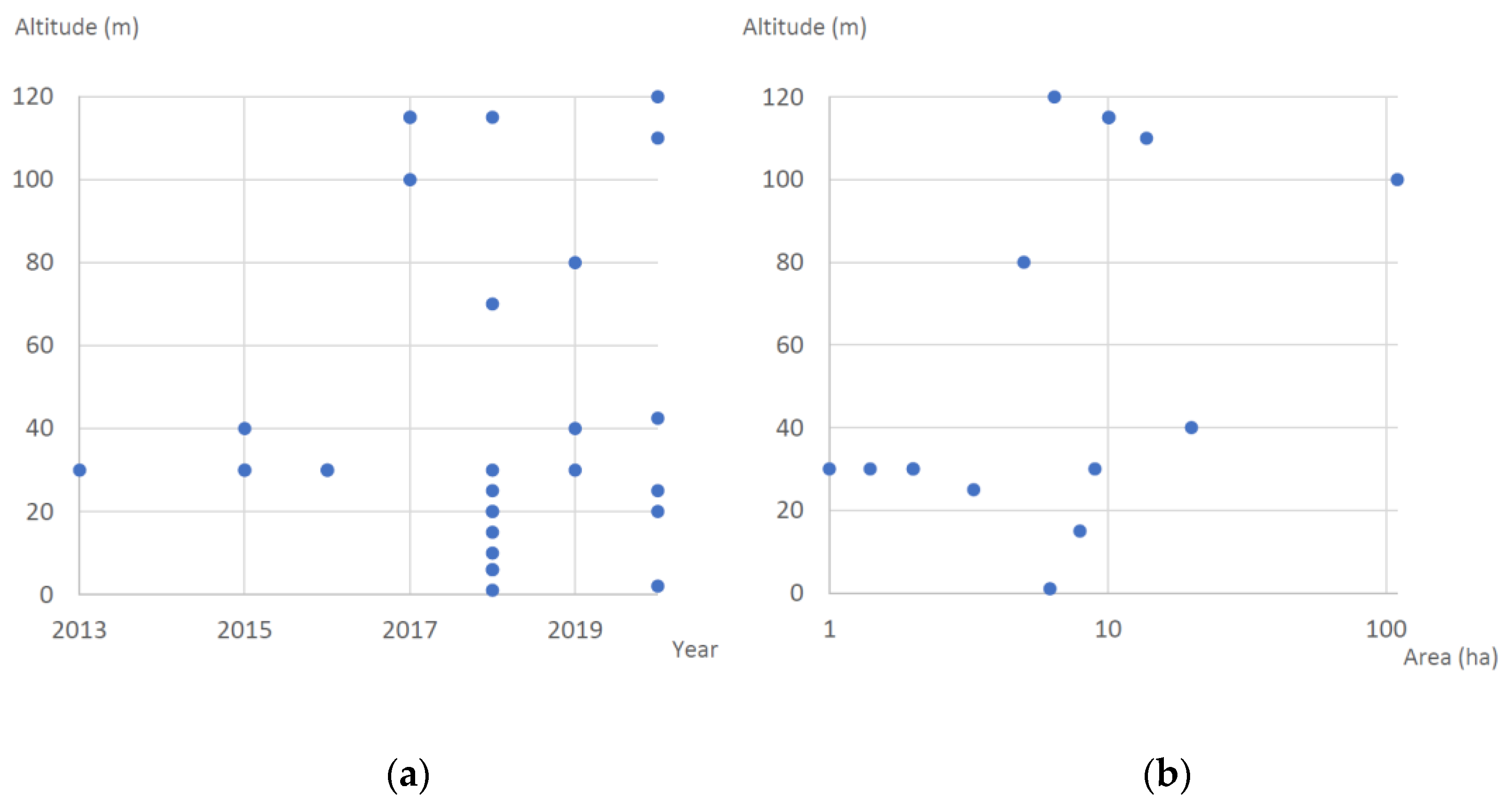

3.2. UAV Imaging Campaigns

3.3. Reference Measurements for Development and Evaluation

- Tree number

- Latitude

- Longitude

- Block number

- Row number

- Panel (group of four to six grapevines)

- Tree variety

- Expert visual vigour assessment

- Digital vigour model (calculated classes 2–5 based on imaging)

- Multispectral-derived indices and bands

- Hyperspectral-derived indices and bands

- Soil conductivity data
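The attributes listed above can be kept together as one typed record per plant, which makes explicit how each reference value is defined and which range it may take. The following is a minimal Python sketch. The class name, the field names, the WGS 84 coordinate assumption, and the soil-conductivity unit are illustrative assumptions, not the schema of the original dataset.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ReferenceRecord:
    """One plant-level reference record (hypothetical field names)."""
    tree_number: int
    latitude: float                  # decimal degrees, assumed WGS 84
    longitude: float                 # decimal degrees, assumed WGS 84
    block_number: int
    row_number: int
    panel: int                       # group of four to six grapevines
    variety: str
    expert_vigour: Optional[int] = None    # expert visual vigour assessment
    digital_vigour: Optional[int] = None   # calculated class 2-5 from imaging
    multispectral: Dict[str, float] = field(default_factory=dict)  # derived indices and bands
    hyperspectral: Dict[str, float] = field(default_factory=dict)  # derived indices and bands
    soil_conductivity: Optional[float] = None  # unit is an assumption, e.g. mS/m

    def __post_init__(self) -> None:
        # Reject values that fall outside the documented ranges.
        if not -90.0 <= self.latitude <= 90.0:
            raise ValueError("latitude must be within [-90, 90]")
        if not -180.0 <= self.longitude <= 180.0:
            raise ValueError("longitude must be within [-180, 180]")
        if self.digital_vigour is not None and not 2 <= self.digital_vigour <= 5:
            raise ValueError("digital vigour class must be within 2-5")


# Hypothetical example record; the values are placeholders, not measurements.
example = ReferenceRecord(
    tree_number=17, latitude=45.0, longitude=7.5, block_number=2,
    row_number=11, panel=3, variety="example_variety",
    expert_vigour=3, digital_vigour=4, multispectral={"NDVI": 0.71},
)
```

Keeping the expert and digital vigour as separate fields preserves the distinction between the visual assessment and the image-derived classes, so the two can be compared rather than conflated.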

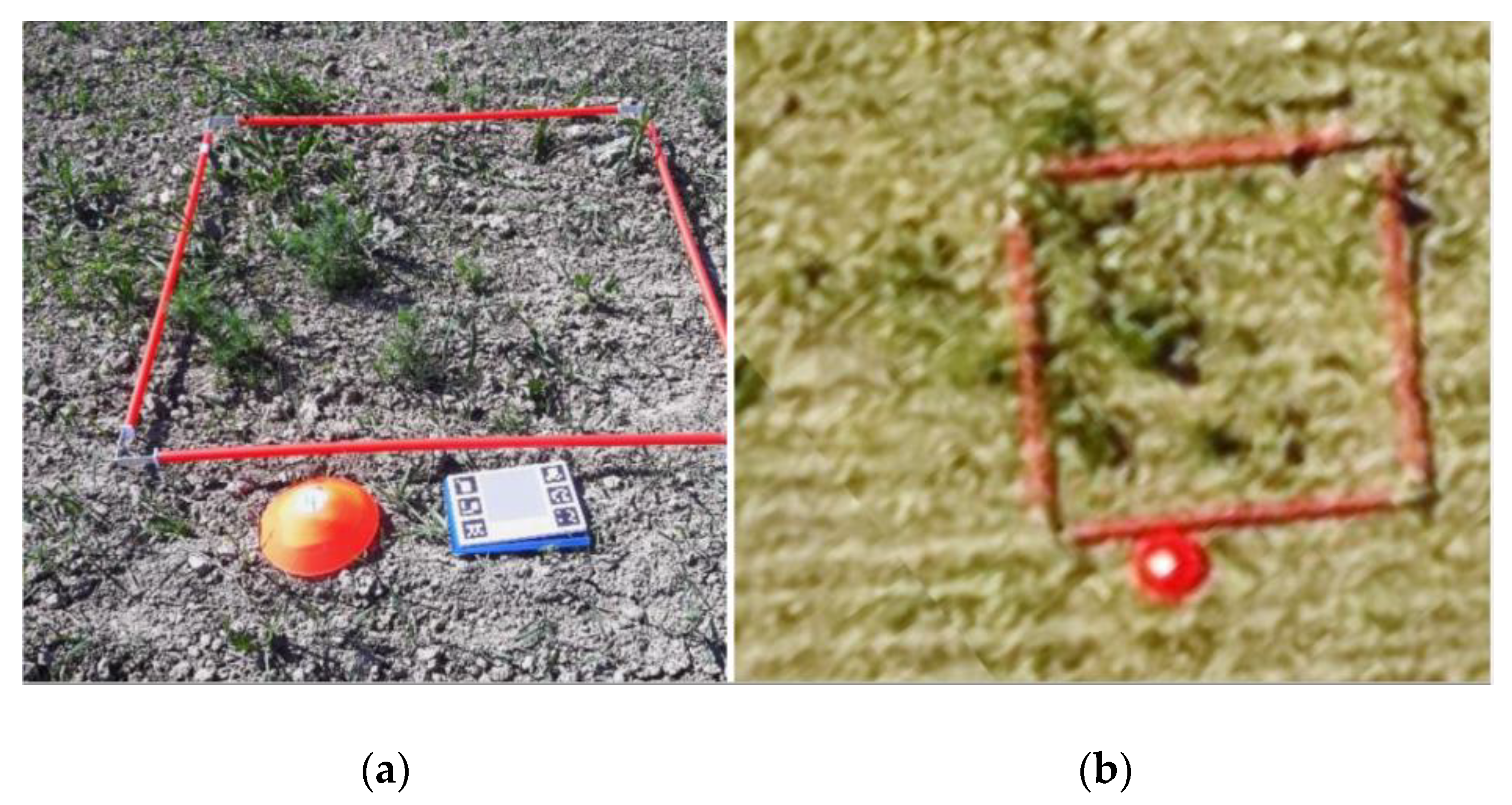

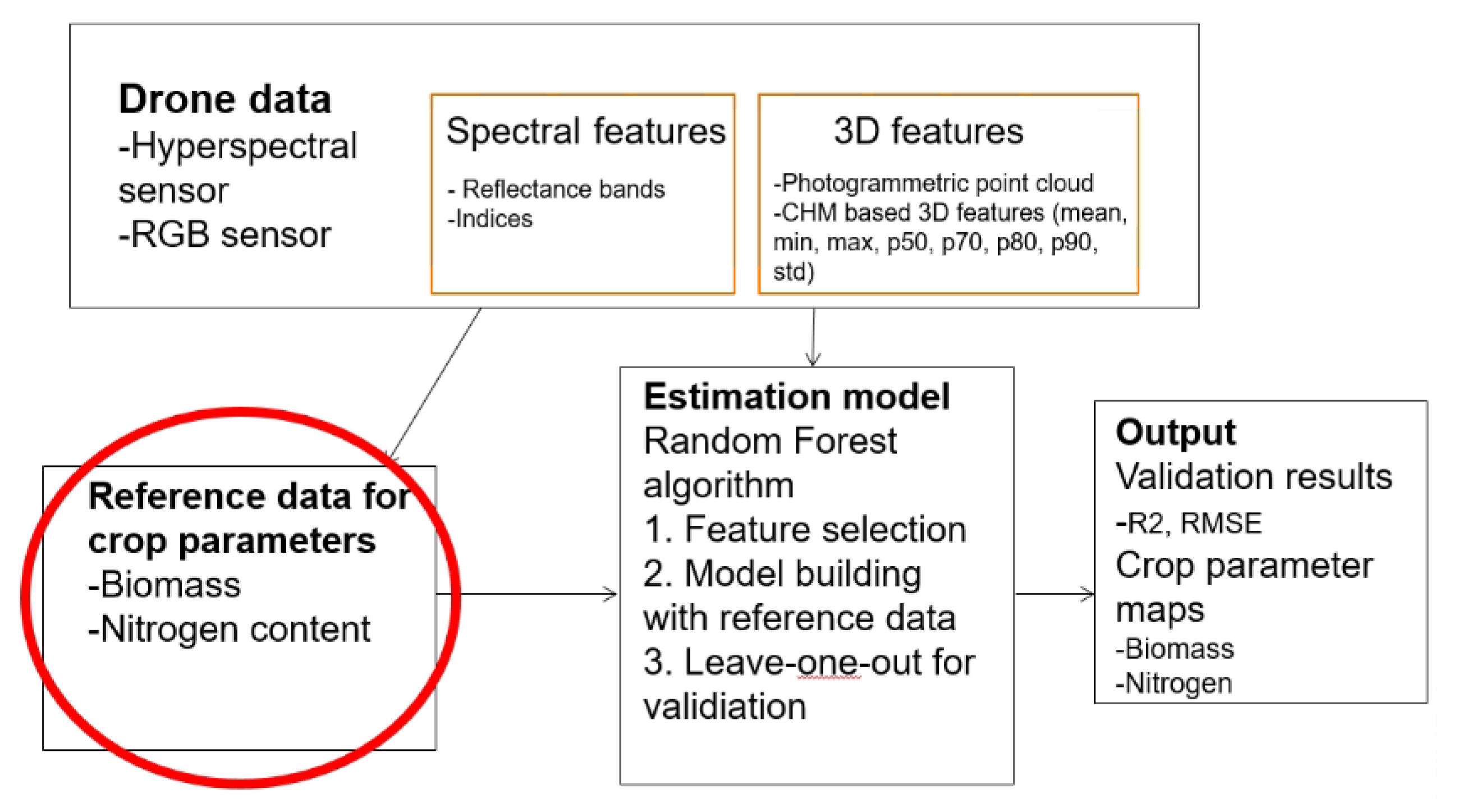

3.3.1. In Situ Measurements

3.3.2. Visual Image Analysis

3.4. Timing of the Imaging

3.5. Data Processing and Analysis Solutions

3.6. General Campaign Planning

3.7. Differences with Other UAV Imaging Applications

4. Discussion

5. Conclusions

- Carefully define the characteristics of the reference data and how they are measured in order to make the process repeatable. The reference data were often not defined accurately.

- Consider other imaging methods, camera directions, campaign timing, and imaging wavelengths to make the target objects more visible. Such alternative imaging options were not considered in the studies.

- Focus on reference data quality and quantity. The studies did not focus on these, and the quantity of reference data was largely dictated by convenience.

- Adjust classification methods to make them suitable for the reference data characteristics. There should be reference data for each class.

- Consider all remote sensing data quality aspects presented in [118]. Due to the nature of feasibility studies, these aspects were not met.

- Interpret the classification results in light of the quality and quantity of the collected reference data. This is very important: a majority of studies reported overwhelmingly positive results because the data involved were so limited.

- Evaluate the possibility of exploiting synthetic data as reference data, at least to some extent. This was not considered in the studies.

- Adapt general study goals and plans to the technology readiness level (TRL) classification. TRLs were not mentioned in the studies, but adopting them can help define the requirements and, in particular, give the customer or end user a realistic framework.
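The recommendations to have reference data for each class and to interpret classification results against the quantity of reference data can be made concrete with two small checks: listing classes that have no reference samples, and reporting a confidence interval for overall accuracy instead of a single value. The Python sketch below uses the Wilson score interval, one standard interval for a binomial proportion. It assumes independent reference samples; spatially clustered field samples often violate this, in which case the interval is likely too narrow. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


def missing_classes(reference_labels: Iterable[str], classes: Iterable[str]) -> List[str]:
    """Return the expected classes that have no reference samples at all."""
    counts = Counter(reference_labels)
    return sorted(c for c in classes if counts[c] == 0)


def wilson_interval(correct: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for overall accuracy = correct / total.

    z = 1.96 gives an approximate 95% interval. Assumes independent samples.
    """
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("need 0 <= correct <= total and total > 0")
    p = correct / total
    z2 = z * z
    denom = 1.0 + z2 / total
    centre = (p + z2 / (2.0 * total)) / denom
    half_width = z * math.sqrt(p * (1.0 - p) / total + z2 / (4.0 * total * total)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# The same 95% accuracy is far less certain with 20 reference samples than with 1000.
small = wilson_interval(19, 20)
large = wilson_interval(950, 1000)
print(f"n=20:   {small[0]:.2f}-{small[1]:.2f}")
print(f"n=1000: {large[0]:.2f}-{large[1]:.2f}")
print(missing_classes(["crop", "weed", "crop"], ["crop", "weed", "soil"]))
```

With 19 of 20 reference samples correctly classified, the 95% interval spans roughly 0.76 to 0.99, whereas 950 of 1000 narrows it to roughly 0.93 to 0.96. This illustrates why a high accuracy obtained on a small reference set says little on its own.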

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kim, J.; Kim, S.; Ju, C.; Son, H. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.

- Hunter, M.; Smith, R.; Schipanski, M.; Atwood, L.; Mortensen, D. Agriculture in 2050: Recalibrating Targets for Sustainable Intensification. Bioscience 2017, 67, 385–390.

- Kruize, J.; Wolfert, J.; Scholten, H.; Verdouw, C.; Kassahun, A.; Beulens, A. A reference architecture for Farm Software Ecosystems. Comput. Electron. Agric. 2016, 125, 12–28.

- Verdouw, C.N.; Kruize, J.W. Twins in farm management: Illustrations from the FIWARE accelerators SmartAgriFood and Fractals. In Proceedings of the PA17—The International Tri-Conference for Precision Agriculture in 2017, Hamilton, New Zealand, 16–18 October 2017.

- Kaivosoja, J. Role of Spatial Data Uncertainty in Execution of Precision Farming Operations; Aalto University Publication Series: Espoo, Finland, 2019; Volume 217, p. 66.

- Mogili, U.R.; Deepak, B. Review on Application of Drone Systems in Precision Agriculture. Procedia Comput. Sci. 2018, 133, 502–509.

- Tsouros, D.; Bibi, S.; Sarigiannidis, P. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

- Zhang, C.; Kovacs, J. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Libran-Embid, F.; Klaus, F.; Tscharntke, T.; Grass, I. Unmanned aerial vehicles for biodiversity-friendly agricultural landscapes - A systematic review. Sci. Total Environ. 2020, 732.

- Chechetka, S.; Yu, Y.; Tange, M.; Miyako, E. Materially Engineered Artificial Pollinators. Chem 2017, 2, 224–239.

- Romero, M.; Luo, Y.; Su, B.; Fuentes, S. Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 2018, 147, 109–117.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Polonen, I.; Hakala, T.; Litkey, P.; Makynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Nasi, R.; Viljanen, N.; Kaivosoja, J.; Alhonoja, K.; Hakala, T.; Markelin, L.; Honkavaara, E. Estimating Biomass and Nitrogen Amount of Barley and Grass Using UAV and Aircraft Based Spectral and Photogrammetric 3D Features. Remote Sens. 2018, 10, 1082.

- Viljanen, N.; Honkavaara, E.; Nasi, R.; Hakala, T.; Niemelainen, O.; Kaivosoja, J. A Novel Machine Learning Method for Estimating Biomass of Grass Swards Using a Photogrammetric Canopy Height Model, Images and Vegetation Indices Captured by a Drone. Agriculture 2018, 8, 70.

- FAO. New Standards to Curb the Global Spread of Plant Pests and Diseases; Food and Agriculture Organization of the United Nations: Roma, Italy, 2019.

- Soltani, N.; Dille, J.; Robinson, D.; Sprague, C.; Morishita, D.; Lawrence, N.; Kniss, A.; Jha, P.; Felix, J.; Nurse, R.; et al. Potential yield loss in sugar beet due to weed interference in the United States and Canada. Weed Technol. 2018, 32, 749–753.

- Zaman-Allah, M.; Vergara, O.; Araus, J.; Tarekegne, A.; Magorokosho, C.; Zarco-Tejada, P.; Hornero, A.; Alba, A.; Das, B.; Craufurd, P.; et al. Unmanned aerial platform-based multi-spectral imaging for field phenotyping of maize. Plant Methods 2015, 11.

- Furbank, R.; Tester, M. Phenomics—Technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Ziska, L.; Blumenthal, D.; Runion, G.; Hunt, E.; Diaz-Soltero, H. Invasive species and climate change: An agronomic perspective. Clim. Chang. 2011, 105, 13–42.

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

- Liang, H.; He, J.; Lei, J.J. Monitoring of Corn Canopy Blight Disease Based on UAV Hyperspectral Method. Spectrosc. Spectr. Anal. 2020, 40, 1965–1972.

- Chivasa, W.; Mutanga, O.; Biradar, C. UAV-Based Multispectral Phenotyping for Disease Resistance to Accelerate Crop Improvement under Changing Climate Conditions. Remote Sens. 2020, 12, 2445.

- Gao, J.F.; Liao, W.Z.; Nuyttens, D.; Lootens, P.; Vangeyte, J.; Pizurica, A.; He, Y.; Pieters, J.G. Fusion of pixel and object-based features for weed mapping using unmanned aerial vehicle imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 67, 43–53.

- Lopez-Granados, F.; Torres-Sanchez, J.; De Castro, A.I.; Serrano-Perez, A.; Mesas-Carrascosa, F.J.; Pena, J.M. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36.

- Pena, J.M.; Torres-Sanchez, J.; de Castro, A.I.; Kelly, M.; Lopez-Granados, F. Weed Mapping in Early-Season Maize Fields Using Object-Based Analysis of Unmanned Aerial Vehicle (UAV) Images. PLoS ONE 2013, 8, e77151.

- Mink, R.; Dutta, A.; Peteinatos, G.G.; Sokefeld, M.; Engels, J.J.; Hahn, M.; Gerhards, R. Multi-Temporal Site-Specific Weed Control of Cirsium arvense (L.) Scop. and Rumex crispus L. in Maize and Sugar Beet Using Unmanned Aerial Vehicle Based Mapping. Agriculture 2018, 8, 65.

- Huang, H.S.; Deng, J.Z.; Lan, Y.B.; Yang, A.Q.; Deng, X.L.; Wen, S.; Zhang, H.H.; Zhang, Y.L. Accurate Weed Mapping and Prescription Map Generation Based on Fully Convolutional Networks Using UAV Imagery. Sensors 2018, 18, 3299.

- Huang, H.S.; Lan, Y.B.; Deng, J.Z.; Yang, A.Q.; Deng, X.L.; Zhang, L.; Wen, S. A Semantic Labeling Approach for Accurate Weed Mapping of High Resolution UAV Imagery. Sensors 2018, 18, 2113.

- Huang, H.S.; Deng, J.Z.; Lan, Y.B.; Yang, A.Q.; Deng, X.L.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multi-spectral UAV data. Int. J. Remote Sens. 2018, 39, 5432–5452.

- Mateen, A.; Zhu, Q.S. Weed detection in wheat crop using UAV for precision agriculture. Pak. J. Agric. Sci. 2019, 56, 809–817.

- Lambert, J.P.T.; Hicks, H.L.; Childs, D.Z.; Freckleton, R.P. Evaluating the potential of Unmanned Aerial Systems for mapping weeds at field scales: A case study with Alopecurus myosuroides. Weed Res. 2018, 58, 35–45.

- Rasmussen, J.; Nielsen, J.; Streibig, J.C.; Jensen, J.E.; Pedersen, K.S.; Olsen, S.I. Pre-harvest weed mapping of Cirsium arvense in wheat and barley with off-the-shelf UAVs. Precis. Agric. 2019, 20, 983–999.

- Pflanz, M.; Nordmeyer, H.; Schirrmann, M. Weed Mapping with UAS Imagery and a Bag of Visual Words Based Image Classifier. Remote Sens. 2018, 10, 1530.

- Rasmussen, J.; Nielsen, J.; Garcia-Ruiz, F.; Christensen, S.; Streibig, J.C. Potential uses of small unmanned aircraft systems (UAS) in weed research. Weed Res. 2013, 53, 242–248.

- Gasparovic, M.; Zrinjski, M.; Barkovic, D.; Radocaj, D. An automatic method for weed mapping in oat fields based on UAV imagery. Comput. Electron. Agric. 2020, 173.

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.D.; Alvarez, M.; Amorim, W.P.; Belete, N.A.D.; da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2020, 17, 903–907.

- Sivakumar, A.N.V.; Li, J.T.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y.Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136.

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote Sens. 2018, 10, 1690.

- Kerkech, M.; Hafiane, A.; Canals, R. Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 2020, 174.

- Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243.

- Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A Novel Methodology for Improving Plant Pest Surveillance in Vineyards and Crops Using UAV-Based Hyperspectral and Spatial Data. Sensors 2018, 18, 260.

- del-Campo-Sanchez, A.; Ballesteros, R.; Hernandez-Lopez, D.; Ortega, J.F.; Moreno, M.A.; Agroforestry Cartography, P. Quantifying the effect of Jacobiasca lybica pest on vineyards with UAVs by combining geometric and computer vision techniques. PLoS ONE 2019, 14, e0215521.

- Yue, J.W.; Lei, T.J.; Li, C.C.; Zhu, J.Q. The Application of Unmanned Aerial Vehicle Remote Sensing in Quickly Monitoring Crop Pests. Intell. Autom. Soft Comput. 2012, 18, 1043–1052.

- de Castro, A.I.; Torres-Sanchez, J.; Pena, J.M.; Jimenez-Brenes, F.M.; Csillik, O.; Lopez-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

- Lopez-Granados, F.; Torres-Sanchez, J.; Serrano-Perez, A.; de Castro, A.I.; Mesas-Carrascosa, F.J.; Pena, J.M. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199.

- Perez-Ortiz, M.; Pena, J.M.; Gutierrez, P.A.; Torres-Sanchez, J.; Hervas-Martinez, C.; Lopez-Granados, F. Selecting patterns and features for between- and within-crop-row weed mapping using UAV-imagery. Expert Syst. Appl. 2016, 47, 85–94.

- Perez-Ortiz, M.; Pena, J.M.; Gutierrez, P.A.; Torres-Sanchez, J.; Hervas-Martinez, C.; Lopez-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. 2015, 37, 533–544.

- Borra-Serrano, I.; Pena, J.M.; Torres-Sanchez, J.; Mesas-Carrascosa, F.J.; Lopez-Granados, F. Spatial Quality Evaluation of Resampled Unmanned Aerial Vehicle-Imagery for Weed Mapping. Sensors 2015, 15, 19688–19708.

- Pena, J.M.; Torres-Sanchez, J.; Serrano-Perez, A.; de Castro, A.I.; Lopez-Granados, F. Quantifying Efficacy and Limits of Unmanned Aerial Vehicle (UAV) Technology for Weed Seedling Detection as Affected by Sensor Resolution. Sensors 2015, 15, 5609–5626.

- Wang, T.Y.; Thomasson, J.A.; Yang, C.H.; Isakeit, T.; Nichols, R.L. Automatic Classification of Cotton Root Rot Disease Based on UAV Remote Sensing. Remote Sens. 2020, 12, 1310.

- Hunter, J.E.; Gannon, T.W.; Richardson, R.J.; Yelverton, F.H.; Leon, R.G. Integration of remote-weed mapping and an autonomous spraying unmanned aerial vehicle for site-specific weed management. Pest Manag. Sci. 2020, 76, 1386–1392.

- Zisi, T.; Alexandridis, T.K.; Kaplanis, S.; Navrozidis, I.; Tamouridou, A.A.; Lagopodi, A.; Moshou, D.; Polychronos, V. Incorporating Surface Elevation Information in UAV Multispectral Images for Mapping Weed Patches. J. Imaging 2018, 4, 132.

- Tamouridou, A.A.; Alexandridis, T.K.; Pantazi, X.E.; Lagopodi, A.L.; Kashefi, J.; Kasampalis, D.; Kontouris, G.; Moshou, D. Application of Multilayer Perceptron with Automatic Relevance Determination on Weed Mapping Using UAV Multispectral Imagery. Sensors 2017, 17, 2307.

- Tamouridou, A.A.; Alexandridis, T.K.; Pantazi, X.E.; Lagopodi, A.L.; Kashefi, J.; Moshou, D. Evaluation of UAV imagery for mapping Silybum marianum weed patches. Int. J. Remote Sens. 2017, 38, 2246–2259.

- Pantazi, X.E.; Tamouridou, A.A.; Alexandridis, T.K.; Lagopodi, A.L.; Kashefi, J.; Moshou, D. Evaluation of hierarchical self-organising maps for weed mapping using UAS multispectral imagery. Comput. Electron. Agric. 2017, 139, 224–230.

- Commission, E. Technology readiness levels (TRL). Horizon 2020—Work programme 2014–2015, General Annexes; Extract from Part 19—Commission Decision C(2014)4995; Commission, E: Berlin, Germany, 2014.

- Rovira-Más, F.; Zhang, Q.; Reid, J.; Will, J. Hough-transform-based vision algorithm for crop row detection of an automated agricultural vehicle. Proc. Inst. of Mech. Eng. Part D J. Automob. Eng. 2005, 219, 999–1010.

- Slaughter, D.; Giles, D.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78.

- Zadoks, J.C.; Chang, T.T.; Konzak, C.F. A decimal code for the growth stages of cereals. Weed Res. 1974, 14, 415–421.

- Hack, H.; Bleiholder, H.; Buhr, L.; Meier, U.; Schnock-Fricke, U.; Stauss, R. Einheitliche Codierung der phänologischen Entwicklungsstadien mono- und dikotyler Pflanzen. Erweiterte BBCH-Skala, Allgemein [Uniform coding of the phenological growth stages of mono- and dicotyledonous plants: Extended BBCH scale, general]. Nachrichtenbl. Deut. Pflanzenschutzd. 1992, 44, 265–270.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Sørensen, R.; Rasmussen, J.; Nielsen, J.; Jørgensen, R. Thistle Detection using Convolutional Neural Networks. In Proceedings of the EFITA WCCA 2017 Conference, Montpellier Supagro, Montpellier, France, 2–6 July 2017; pp. 2–6.

- Li, C.; Wang, J.; Wang, L.; Hu, L.; Gong, P. Comparison of Classification Algorithms and Training Sample Sizes in Urban Land Classification with Landsat Thematic Mapper Imagery. Remote Sens. 2014, 6, 964–983.

- Huang, C.; Davis, L.; Townshend, J. An assessment of support vector machines for land cover classification. Int. J. Remote Sens. 2002, 23, 725–749.

- Hassanein, M.; Lari, Z.; El-Sheimy, N. A New Vegetation Segmentation Approach for Cropped Fields Based on Threshold Detection from Hue Histograms. Sensors 2018, 18, 1253.

- Ali, I.; Cawkwell, F.; Dwyer, E.; Barrett, B.; Green, S. Satellite remote sensing of grasslands: From observation to management. J. Plant Ecol. 2016, 9, 649–671.

- Castle, M. Simple disk instrument for estimating herbage yield. J. Br. Grassl. Soc. 1976, 31, 37–40.

- Oliveira, R.A.; Nasi, R.; Niemelainen, O.; Nyholm, L.; Alhonoja, K.; Kaivosoja, J.; Jauhiainen, L.; Viljanen, N.; Nezami, S.; Markelin, L.; et al. Machine learning estimators for the quantity and quality of grass swards used for silage production using drone-based imaging spectrometry and photogrammetry. Remote Sens. Environ. 2020, 246.

- Jenal, A.; Lussem, U.; Bolten, A.; Gnyp, M.; Schellberg, J.; Jasper, J.; Bongartz, J.; Bareth, G. Investigating the Potential of a Newly Developed UAV-based VNIR/SWIR Imaging System for Forage Mass Monitoring. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2020, 88, 493–507.

- Barnetson, J.; Phinn, S.; Scarth, P. Estimating Plant Pasture Biomass and Quality from UAV Imaging across Queensland’s Rangelands. AgriEngineering 2020, 2, 523–543.

- Michez, A.; Philippe, L.; David, K.; Sebastien, C.; Christian, D.; Bindelle, J. Can Low-Cost Unmanned Aerial Systems Describe the Forage Quality Heterogeneity? Insight from a Timothy Pasture Case Study in Southern Belgium. Remote Sens. 2020, 12, 1650.

- Smith, C.; Karunaratne, S.; Badenhorst, P.; Cogan, N.; Spangenberg, G.; Smith, K. Machine Learning Algorithms to Predict Forage Nutritive Value of In Situ Perennial Ryegrass Plants Using Hyperspectral Canopy Reflectance Data. Remote Sens. 2020, 12, 928.

- Maxwell, A.; Warner, T.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Ebrahimi, M.; Khoshtaghaz, M.; Minaei, S.; Jamshidi, B. Vision-based pest detection based on SVM classification method. Comput. Electron. Agric. 2017, 137, 52–58.

- Pantazi, X.; Moshou, D.; Bravo, C. Active learning system for weed species recognition based on hyperspectral sensing. Biosyst. Eng. 2016, 146, 193–202.

- Pantazi, X.; Tamouridou, A.; Alexandridis, T.; Lagopodi, A.; Kontouris, G.; Moshou, D. Detection of Silybum marianum infection with Microbotryum silybum using VNIR field spectroscopy. Comput. Electron. Agric. 2017, 137, 130–137.

- Pantazi, X.; Moshou, D.; Oberti, R.; West, J.; Mouazen, A.; Bochtis, D. Detection of biotic and abiotic stresses in crops by using hierarchical self organizing classifiers. Precis. Agric. 2017, 18, 383–393.

- Pantazi, X.; Moshou, D.; Tamouridou, A. Automated leaf disease detection in different crop species through image features analysis and One Class Classifiers. Comput. Electron. Agric. 2019, 156, 96–104.

- Iost, F.H.; Heldens, W.B.; Kong, Z.D.; de Lange, E.S. Drones: Innovative Technology for Use in Precision Pest Management. J. Econ. Entomol. 2020, 113, 1–25.

- Zhang, C.; Walters, D.; Kovacs, J. Applications of Low Altitude Remote Sensing in Agriculture upon Farmers’ Requests – A Case Study in Northeastern Ontario, Canada. PLoS ONE 2014, 9.

- Severtson, D.; Callow, N.; Flower, K.; Neuhaus, A.; Olejnik, M.; Nansen, C. Unmanned aerial vehicle canopy reflectance data detects potassium deficiency and green peach aphid susceptibility in canola. Precis. Agric. 2016, 17, 659–677.

- Fry, W.; Thurston, H.; Stevenson, W. Late blight. In Compendium of Potato Diseases, 2nd ed.; Stevenson, W.D., Loria, R., Franc, G.D., Weingartner, D.P., Eds.; The American Phytopathological Society: St. Paul, MN, USA, 2001; pp. 28–30.

- Nebiker, S.; Lack, N.; Abächerli, M.; Läderach, S. Light-Weight Multispectral UAV Sensors and their Capabilities for Predicting Grain Yield and Detecting Plant Diseases; The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences: Prague, Czech Republic, 2016; Volume XLI-B1.

- Sugiura, R.; Tsuda, S.; Tamiya, S.; Itoh, A.; Nishiwaki, K.; Murakami, N.; Shibuya, Y.; Hirafuji, M.; Nuske, S. Field phenotyping system for the assessment of potato late blight resistance using RGB imagery from an unmanned aerial vehicle. Biosyst. Eng. 2016, 148, 1–10.

- Duarte-Carvajalino, J.; Alzate, D.; Ramirez, A.; Santa-Sepulveda, J.; Fajardo-Rojas, A.; Soto-Suarez, M. Evaluating Late Blight Severity in Potato Crops Using Unmanned Aerial Vehicles and Machine Learning Algorithms. Remote Sens. 2018, 10, 1513.

- Franceschini, M.; Bartholomeus, H.; van Apeldoorn, D.; Suomalainen, J.; Kooistra, L. Intercomparison of Unmanned Aerial Vehicle and Ground-Based Narrow Band Spectrometers Applied to Crop Trait Monitoring in Organic Potato Production. Sensors 2017, 17, 1428.

- Franceschini, M.; Bartholomeus, H.; van Apeldoorn, D.; Suomalainen, J.; Kooistra, L. Feasibility of Unmanned Aerial Vehicle Optical Imagery for Early Detection and Severity Assessment of Late Blight in Potato. Remote Sens. 2019, 11, 224.

- Griffel, L.; Delparte, D.; Edwards, J. Using Support Vector Machines classification to differentiate spectral signatures of potato plants infected with Potato Virus Y. Comput. Electron. Agric. 2018, 153, 318–324.

- Polder, G.; Blok, P.; de Villiers, H.; van der Wolf, J.; Kamp, J. Potato Virus Y Detection in Seed Potatoes Using Deep Learning on Hyperspectral Images. Front. Plant Sci. 2019, 10.

- Afonso, M.; Blok, P.; Polder, G.; van der Wolf, J.; Kamp, J. Blackleg Detection in Potato Plants using Convolutional Neural Networks. IFAC-PapersOnLine 2019, 52, 6–11.

- McMullen, M.; Jones, R.; Gallenberg, D. Scab of wheat and barley: A re-emerging disease of devastating impact. Plant Dis. 1997, 81, 1340–1348.

- Khan, M.; Pandey, A.; Athar, T.; Choudhary, S.; Deval, R.; Gezgin, S.; Hamurcu, M.; Topal, A.; Atmaca, E.; Santos, P.; et al. Fusarium head blight in wheat: Contemporary status and molecular approaches. 3 Biotech 2020, 10.

- Kaukoranta, T.; Hietaniemi, V.; Ramo, S.; Koivisto, T.; Parikka, P. Contrasting responses of T-2, HT-2 and DON mycotoxins and Fusarium species in oat to climate, weather, tillage and cereal intensity. Eur. J. Plant Pathol. 2019, 155, 93–110.

- Mesterhazy, A. Updating the Breeding Philosophy of Wheat to Fusarium Head Blight (FHB): Resistance Components, QTL Identification, and Phenotyping—A Review. Plants 2020, 9, 1702.

- Bauriegel, E.; Giebel, A.; Herppich, W. Rapid Fusarium head blight detection on winter wheat ears using chlorophyll fluorescence imaging. J. Appl. Bot. Food Qual. 2010, 83, 196–203.

- Bauriegel, E.; Giebel, A.; Geyer, M.; Schmidt, U.; Herppich, W. Early detection of Fusarium infection in wheat using hyper-spectral imaging. Comput. Electron. Agric. 2011, 75, 304–312.

- Qiu, R.; Yang, C.; Moghimi, A.; Zhang, M.; Steffenson, B.; Hirsch, C. Detection of Fusarium Head Blight in Wheat Using a Deep Neural Network and Color Imaging. Remote Sens. 2019, 11, 2658.

- Liu, L.; Dong, Y.; Huang, W.; Du, X.; Ma, H. Monitoring Wheat Fusarium Head Blight Using Unmanned Aerial Vehicle Hyperspectral Imagery. Remote Sens. 2020, 12, 3811.

- Dammer, K.; Moller, B.; Rodemann, B.; Heppner, D. Detection of head blight (Fusarium ssp.) in winter wheat by color and multispectral image analyses. Crop Prot. 2011, 30, 420–428.

- Wegulo, S.; Baenziger, P.; Nopsa, J.; Bockus, W.; Hallen-Adams, H. Management of Fusarium head blight of wheat and barley. Crop Prot. 2015, 73, 100–107.

- De Wolf, E.; Madden, L.; Lipps, P. Risk assessment models for wheat Fusarium head blight epidemics based on within-season weather data. Phytopathology 2003, 93, 428–435.

- Hautsalo, J.; Jauhiainen, L.; Hannukkala, A.; Manninen, O.; Vetelainen, M.; Pietila, L.; Peltoniemi, K.; Jalli, M. Resistance to Fusarium head blight in oats based on analyses of multiple field and greenhouse studies. Eur. J. Plant Pathol. 2020, 158, 15–33.

- Crusiol, L.; Nanni, M.; Furlanetto, R.; Sibaldelli, R.; Cezar, E.; Mertz-Henning, L.; Nepomuceno, A.; Neumaier, N.; Farias, J. UAV-based thermal imaging in the assessment of water status of soybean plants. Int. J. Remote Sens. 2020, 41, 3243–3265.

- Kratt, C.; Woo, D.; Johnson, K.; Haagsma, M.; Kumar, P.; Selker, J.; Tyler, S. Field trials to detect drainage pipe networks using thermal and RGB data from unmanned aircraft. Agric. Water Manag. 2020, 229.

- Lu, F.; Sun, Y.; Hou, F. Using UAV Visible Images to Estimate the Soil Moisture of Steppe. Water 2020, 12, 2334.

- Hassan-Esfahani, L.; Torres-Rua, A.; Jensen, A.; McKee, M. Assessment of Surface Soil Moisture Using High-Resolution Multi-Spectral Imagery and Artificial Neural Networks. Remote Sens. 2015, 7, 2627–2646.

- Wu, K.; Rodriguez, G.; Zajc, M.; Jacquemin, E.; Clement, M.; De Coster, A.; Lambot, S. A new drone-borne GPR for soil moisture mapping. Remote Sens. Environ. 2019, 235.

- Ore, G.; Alcantara, M.; Goes, J.; Oliveira, L.; Yepes, J.; Teruel, B.; Castro, V.; Bins, L.; Castro, F.; Luebeck, D.; et al. Crop Growth Monitoring with Drone-Borne DInSAR. Remote Sens. 2020, 12, 615.

- Calderon, R.; Navas-Cortes, J.; Lucena, C.; Zarco-Tejada, P. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

- Barbedo, J. A Review on the Use of Unmanned Aerial Vehicles and Imaging Sensors for Monitoring and Assessing Plant Stresses. Drones 2019, 3, 40.

- Abhishek, K.; Truong, J.; Gokaslan, A.; Clegg, A.; Wijmans, E.; Lee, S.; Savva, M.; Chernova, S.; Batra, D. Sim2Real Predictivity: Does Evaluation in Simulation Predict Real-World Performance? IEEE Robot. Autom. Lett. 2020, 5, 6670–6677.

- Toda, Y.; Okura, F.; Ito, J.; Okada, S.; Kinoshita, T.; Tsuji, H.; Saisho, D. Learning from synthetic dataset for crop seed instance segmentation. BioRxiv. 2019.

- Shrivastava, A.; Pfister, T.; Tuzel, O.; Susskind, J.; Wang, W.; Webb, R. Learning from simulated and unsupervised images through adversarial training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2107–2116.

- Goondram, S.; Cosgun, A.; Kulic, D. Strawberry Detection using Mixed Training on Simulated and Real Data. arXiv 2020, arXiv:2008.10236.

- Ward, D.; Moghadam, P.; Hudson, N. Deep leaf segmentation using synthetic data. arXiv 2018, arXiv:1807.10931.

- Verdouw, C.; Tekinerdogan, B.; Beulens, A.; Wolfert, S. Digital Twins in Smart Farming. Agric. Syst. 2021, 189, 103046.

- Batini, C.; Blaschke, T.; Lang, S.; Albrecht, F.; Abdulmutalib, H.; Basri, A.; Szab, O.; Kugler, Z. Data quality in remote sensing. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; ISPRS Geospatial Week: Wuhan, China, 2017; pp. 18–22.

| Category | Reference Data | Data Type | Number/Size of Study Areas | Differentiation between Weed Species | Reference |
|---|---|---|---|---|---|
| Diseases | Visual, digital records | Multispectral images, RGB | 1–3/0.3–33 ha | Not applicable | [21,22,37,40,41,51] |
| Pests | Trap counts, visual | RGB, multi- and hyperspectral images | 1–2/3.2 ha–10 km² | Not applicable | [42,43,44] |
| Weeds: | | | | | |
| Rice | Visual from images | RGB, multispectral images | 1/0.5–2 ha | No | [27,28,29,30] |
| Sunflower | Visual labelling | RGB, CIR | 1–3/1.0–4.2 ha | No | [45,46,47,48,49] |
| Maize | Visual, square frames, manual counting | RGB, CIR, multispectral images | 1–2/0.015–2.1 ha | No | [23,24,25,26] |
| Wheat | Visual from images, field observations | RGB, multispectral images | 1–5/2–3000 ha | Yes [34] | [31,32,33,34] |
| Barley | Visual from images, field observations | RGB, multispectral images | 1–3/0.2–3 ha | No | [33,35] |
| Other crops | Visual from images, field observations | RGB, CIR | 2/Not mentioned | No | [36,38,39,52] |
| Meadows | Visual and in situ polygons, points | CIR | 1/10 ha | No | [53,54,56] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kaivosoja, J.; Hautsalo, J.; Heikkinen, J.; Hiltunen, L.; Ruuttunen, P.; Näsi, R.; Niemeläinen, O.; Lemsalu, M.; Honkavaara, E.; Salonen, J. Reference Measurements in Developing UAV Systems for Detecting Pests, Weeds, and Diseases. Remote Sens. 2021, 13, 1238. https://doi.org/10.3390/rs13071238
