1. Introduction

According to the global cloud cover data provided by the International Satellite Cloud Climatology Project (ISCCP), clouds cover more than 66% of the area above the earth [1]. Cloud, an important member of the climate system, is the most common, active and changeable weather phenomenon. It directly affects the radiation and water cycle of the earth-atmosphere system, and plays an important role in the global energy budget and the distribution of water resources [2,3]. Cloud observation is therefore an essential part of meteorological work. Correctly identifying elements such as cloud shape, cloud amount and cloud height, as well as the distribution and change of clouds, is fundamental for weather forecasting and climate research, and also plays a key role in navigation and positioning, flight support and national economic development [4]. There are four main types of cloud observation: ground-based manual observation, ground-based instrument observation, aircraft or balloon observation, and meteorological satellite observation. Note that ground-based observation and meteorological satellite observation use different cloud classification standards. For ground observation, the cloud type is determined according to the cloud base height and cloud shape. For satellite observation, cloud classification is usually based on the spectral characteristics, texture characteristics and spatio-temporal gradient of the cloud top. Ground-based manual observation relies mainly on meteorologists and is easily restricted by factors such as insufficient observation experience. Ground-based instrument observation suffers from large nighttime errors and a limited observation area. Aircraft or balloon observation is too time-consuming and costly to apply to daily operations. Meteorological satellites are widely used for large-scale, continuous observation of clouds and the earth’s surface. With the continuous development of satellite remote sensing and imaging technology, the quality, spatial resolution and timeliness of cloud images have greatly improved. The new generation of geostationary satellites, such as the Himawari-8 and Himawari-9 satellites [5] in Japan, the GOES-R satellite [6] in the United States, and the FY-4A satellite [7] in China, can meet higher observation requirements. Since satellite cloud images cover a wide area and contain more surface features, they are better suited to describing cloud information and changes over a large range. Cloud recognition based on satellite cloud images has become an important application and research hotspot in the remote sensing field.

In our work, we focus on the overall cloud type distribution in China. Zhuo et al. built a ground-based cloud dataset of Beijing, China, which was collected from August 2010 to May 2011 and annotated by meteorologists from the China Meteorological Administration. It contains eight cloud types and the clear sky [

8]. Zhang et al. used three ground-based cloud datasets, captured in Wuxi, Jiangsu Province and Yangjiang, Guangdong Province, China. The datasets were labeled by experts from the Meteorological Observation Center of China Meteorological Administration, Chinese Academy of Meteorological Sciences and the Institute of Atmospheric Physics, Chinese Academy of Sciences. They all contain six cloud types and the clear sky [

9]. Fang et al. used the standard ground-based cloud dataset provided by Huayunshengda (Beijing) meteorological technology limited liability company, which was labeled as 10 cloud types and the clear sky [

10]. Liu et al. used the multi-modal ground-based cloud dataset (MGCD), the first one composed of ground-based cloud images and multi-modal information. MGCD was annotated by meteorological experts and ground-based cloud-related researchers as six cloud types and the clear sky [

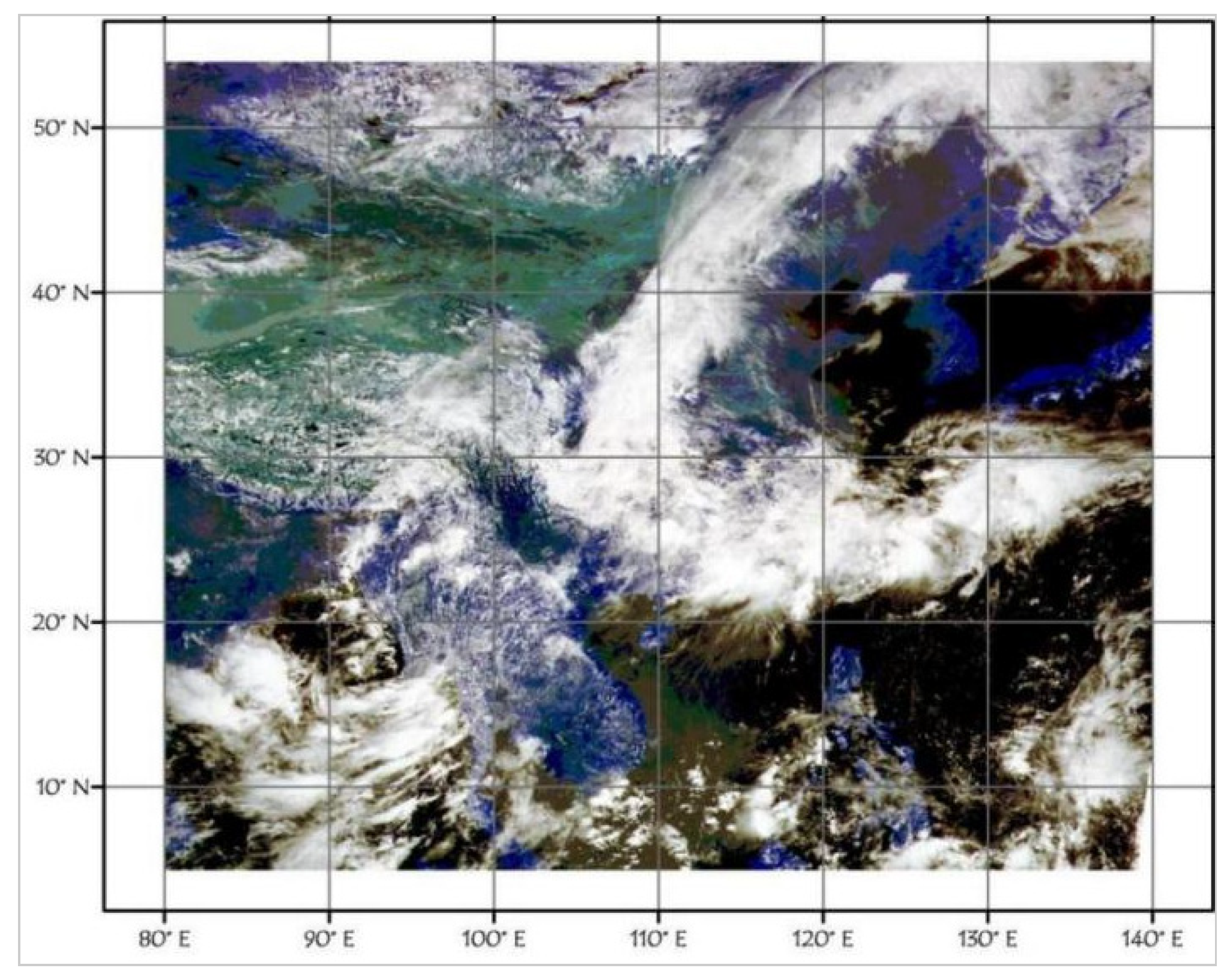

11]. Liu et al. selected the FY-2 satellite cloud image dataset. FY-2 is the first operational geostationary meteorological satellite of China. The experimental dataset was collected from June to August 2007 and annotated by meteorological experts with richly educated and trained experience as six cloud types, ocean and land [

12]. Bai et al. used Gao Fen-1 and Gao Fen-2 satellite cloud image datasets for cloud detection tasks. Gaofen is a series of Chinese high-resolution Earth imaging satellite for the state-sponsored program, China High-resolution Earth Observation System (CHEOS). The images and the manual cloud mask were acquired from the National Disaster Reduction Center of China. The majority of the images contain both cloud and non-cloud regions. Cloud regions include small-, medium-, and large-sized clouds. The backgrounds are common underlying surface environments including mountains, buildings, roads, agriculture, and rivers [

13]. Cai et al. used the FY-2 satellite cloud image dataset, which was labeled as four cloud types and the clear sky, but it is only suitable for image-level classification, not pixel-level classification [

14].

The coverage of a ground-based cloud image is relatively small, so a single image cannot show the overall cloud distribution of China. The existing satellite cloud image datasets for the China region are limited by their small number of cloud types and their lack of pixel-level classification. Therefore, we construct a 4 km resolution meteorological satellite cloud image dataset covering the entire China region and propose a novel deep learning method to accurately identify the cloud type distribution in China. With it, researchers can analyze the temporal and spatial distribution characteristics of cloud amount, cloud water path and cloud optical thickness in China from the perspective of different regions and different cloud types.

Cloud recognition methods mainly include threshold-based methods, traditional machine learning methods, and deep learning methods. Threshold-based methods [15,16,17] determine appropriate thresholds for different sensors on specific channels of the image (reflectivity, brightness temperature, etc.) and identify cloud regions at a fast calculation speed. However, they ignore the structure and texture of the cloud, which makes it difficult to determine an appropriate threshold when many cloud types are present. In contrast, machine learning methods [18,19,20,21,22,23,24,25] are more robust. They first group regions with the same or similar pixels into one class, and then analyze the spectral, spatial and texture information of the image with pixel-based or object-oriented methods. Texture measurements, location information, brightness temperature, reflectance and the normalized difference vegetation index (NDVI) are fed as features into SVM, KNN and AdaBoost algorithms to realize automatic cloud classification. However, traditional machine learning methods also have significant limitations. Most features are extracted manually, and the accuracy is comparatively low when processing high-resolution images, which makes it difficult to distinguish clouds from highly similar objects.
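As a rough illustration of the threshold-based approach described above, the sketch below flags a pixel as cloud when its visible reflectance is high or its infrared brightness temperature is low. The function name and the threshold values (0.3 and 270 K) are hypothetical and chosen only for illustration; operational algorithms tune thresholds per sensor, channel and scene.

```python
def threshold_cloud_mask(reflectance, brightness_temp_k,
                         refl_min=0.3, bt_max_k=270.0):
    """Return a 2D list of booleans: True where a pixel is flagged as cloud.

    reflectance and brightness_temp_k are 2D lists of equal shape.
    The default thresholds are illustrative assumptions, not sensor values.
    """
    mask = []
    for refl_row, bt_row in zip(reflectance, brightness_temp_k):
        mask.append([
            (refl >= refl_min) or (bt <= bt_max_k)
            for refl, bt in zip(refl_row, bt_row)
        ])
    return mask


# Tiny 2 x 2 scene with made-up values.
reflectance = [[0.05, 0.45], [0.10, 0.20]]
brightness_temp_k = [[295.0, 240.0], [265.0, 290.0]]
cloud_mask = threshold_cloud_mask(reflectance, brightness_temp_k)
```

Each pixel is tested in isolation, with no reference to its neighbors, which is why such rules are fast but blind to cloud structure and texture.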

Many studies show that deep learning methods can adaptively learn the deep features of clouds and achieve higher detection accuracy than traditional machine learning methods [26,27,28,29,30,31]. Liu et al. introduced a neural network for satellite cloud detection tasks, and conducted experiments on the FY-2C satellite cloud image dataset. The nadir spatial resolution of FY-2C is 1.25 km for visible channels and 5 km for infrared channels. Their model greatly improved the results not only in pixel-level accuracy but also in cloud patch-level classification by more accurately identifying cloud types such as cumulonimbus, cirrus and clouds at high latitudes [12]. Cai et al. also constructed a convolutional neural network for satellite cloud classification on FY-2C satellite images, which could automatically learn features and obtain better classification results than traditional machine learning methods [14]. Liu et al. presented a novel joint fusion convolutional neural network (JFCNN) to integrate multimodal information. It learns heterogeneous features (visual features and multimodal features) from the cloud data for cloud classification that is robust to environmental factors [32]. Zhang et al. proposed transfer deep local binary patterns (TDLBP) and weighted metric learning (WML). The former handles view shift well, and the latter addresses the imbalance in the number of samples across cloud types [9]. Zhang et al. developed a new convolutional neural network model, CloudNet, to perform cloud recognition tasks on a self-built dataset, Cirrus Cumulus Stratus Nimbus. It can accurately identify 11 cloud types, including one cloud type generated by human activities [33]. Lu et al. proposed two SegNet-based architectures, P_Segnet and NP_Segnet, for the cloud recognition of remote sensing images, and adopted parallel structures in the architectures to improve the accuracy of cloud recognition [34]. Fang et al. applied data augmentation to the cloud image dataset provided by standard weather stations, and trained five network models on the augmented dataset under five transfer configurations by fine-tuning network parameters and freezing the weights of different network layers. Experiments showed that the fine-tuned DenseNet model achieved good results [10]. Liu et al. proposed a novel method named multi-evidence and multi-modal fusion network (MMFN) for cloud recognition, which can learn extended cloud information by fusing heterogeneous features in a unified framework [11]. Zhang et al. presented LCCNet, a lightweight convolutional neural network model, which has fewer parameters and lower computational complexity, stronger representation ability and higher classification accuracy than existing network models [35]. According to the analysis above, the existing research still has two deficiencies: (1) most cloud recognition methods are designed for ground-based cloud images, which can only be used to study local cloud distribution and changes and therefore lack generality; (2) current cloud recognition methods based on deep learning cannot fully capture the context information in images, and their feature extraction ability must be improved.

Context information is the key factor for improving image segmentation performance, and the receptive field roughly determines how much information the network can utilize. Existing deep learning cloud recognition methods are mostly based on convolutional neural networks (CNNs). However, relevant studies [36] show that the actual receptive field of a CNN is much smaller than its theoretical receptive field. The limited receptive field therefore seriously restricts the representation ability of the model. To solve this problem, the Transformer [37] was introduced into semantic segmentation tasks. It keeps the spatial resolution of the input and output unchanged while effectively capturing global context information. Axial-DeepLab [38] is the first stand-alone attention model with large or global receptive fields, which can make good use of location information without increasing the computational cost and can serve as the backbone network for semantic segmentation tasks. However, Axial-DeepLab uses a specially designed axial attention, which scales poorly on standard computing hardware. By comparison, SETR [39] is easier to use because it relies on standard self-attention. It adopts a structure similar to the Vision Transformer (ViT) [40] for feature extraction and combines it with a decoder to restore resolution, achieving good results on segmentation tasks. To balance computation cost and performance, we build a hierarchical transformer in the encoder and divide the input image into windows, so that self-attention is calculated within sub-windows and the computational complexity grows linearly with the size of the input image. We also conduct attention operations along the channel axis between the encoder and decoder, so that the decoder can better integrate the features of the encoder and reduce the semantic gap.
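The linear relationship between window-based self-attention and input size can be checked with a back-of-the-envelope count. The sketch below uses the commonly quoted multiply-accumulate estimates for self-attention over an h × w token grid with C channels: 4hwC² + 2(hw)²C for global attention, and 4hwC² + 2M²hwC when attention is restricted to non-overlapping M × M windows. The function names and the example sizes are illustrative assumptions, not the UATNet configuration.

```python
def global_attention_cost(h, w, c):
    """Approximate cost of global self-attention on an h x w token grid."""
    tokens = h * w
    projections = 4 * tokens * c * c        # Q, K, V and output projections
    attention = 2 * tokens * tokens * c     # QK^T and attention-weighted sum
    return projections + attention


def window_attention_cost(h, w, c, m):
    """Approximate cost when attention is limited to m x m windows."""
    tokens = h * w
    projections = 4 * tokens * c * c
    attention = 2 * (m * m) * tokens * c    # each token attends to m*m tokens
    return projections + attention


c, m = 96, 8  # hypothetical channel width and window size
for side in (64, 128, 256):
    g = global_attention_cost(side, side, c)
    wnd = window_attention_cost(side, side, c, m)
    print(f"{side}x{side} tokens: global={g:.3e}, windowed={wnd:.3e}")
```

Doubling the side length quadruples the number of tokens. The windowed cost then grows by exactly a factor of four, whereas the attention term of the global cost grows by a factor of sixteen.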

In summary, research on deep learning methods for satellite cloud recognition is of great significance and application value for improving the accuracy of weather forecasts, the effectiveness of climate model prediction and the understanding of global climate change. We construct a China region meteorological satellite cloud image dataset (CRMSCD) based on the L1 data product of the FY-4A satellite and the cloud classification results of the Himawari-8 satellite. CRMSCD contains nine cloud types and the clear sky (cloudless) and conforms to the World Meteorological Organization standard. In this paper, we propose a cloud recognition network model based on the U-shaped architecture, in which the transformer is introduced to build the encoder and the encoder–decoder connection, and the attention mechanism is designed to integrate the features of both the encoder and decoder. We therefore name it U-shape Attention-based Transformer Net (UATNet). UATNet has a stronger ability to extract spectral and spatial features and adapts better to the changing characteristics of clouds. In addition, we propose two models of different sizes to fit varying requirements.

To summarize, we make the following major contributions in this work:

- (1) We propose the UATNet model and introduce a transformer into meteorological satellite cloud recognition tasks, which solves the problem of the limited CNN receptive field and captures global context information effectively while maintaining computing efficiency.

- (2) We use two transformer structures in UATNet to perform attention operations along the patch axis and the channel axis, respectively, which can effectively integrate the spatial information and multi-channel information of clouds, extract more targeted cloud features, and then obtain pixel-level cloud classification results.

- (3) We construct a China region meteorological satellite cloud image dataset named CRMSCD and carry out experiments on it. Experimental results demonstrate that the proposed model achieves a significant performance improvement compared with the existing state-of-the-art methods.

- (4) We find that replacing batch normalization with switchable normalization in the convolution layers of a fully convolutional network, and using an encoder–decoder connection in the transformer model, can significantly improve cloud recognition performance.
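The difference between attention along the patch axis and attention along the channel axis, as named in contribution (2), can be sketched in a few lines. This is a deliberately simplified illustration, not the UATNet implementation: learned query, key and value projections and multiple heads are omitted, and the feature values are made up.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(matrix):
    """Swap the two axes of a 2D list."""
    return [list(col) for col in zip(*matrix)]


def self_attention(tokens):
    """Simplified self-attention: softmax(T T^T / sqrt(d)) T.

    tokens is a list of n vectors of length d; the result has the same shape.
    """
    d = len(tokens[0])
    scores = [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
              for q in tokens]
    weights = [softmax(row) for row in scores]
    return [[sum(w * tok[j] for w, tok in zip(w_row, tokens)) for j in range(d)]
            for w_row in weights]


# Hypothetical feature map: 4 patches (rows), each with 3 channels (columns).
features = [[0.1, 0.5, 0.2],
            [0.4, 0.1, 0.9],
            [0.3, 0.8, 0.6],
            [0.7, 0.2, 0.4]]

# Patch axis: each patch is a token, so the attention matrix is 4 x 4.
patch_out = self_attention(features)

# Channel axis: each channel is a token, so the attention matrix is 3 x 3.
channel_out = transpose(self_attention(transpose(features)))
```

Both outputs keep the 4 × 3 shape of the input. Only the axis along which tokens exchange information changes: spatial positions in the first case, spectral channels in the second.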

4. Discussion

4.1. Findings and Implications

Meteorological satellite cloud images reflect the characteristics and changing processes of all kinds of cloud systems in a comprehensive, timely and dynamic manner. They have also become an indispensable reference for meteorological and water conservancy departments in the decision-making process. Therefore, we construct a China region meteorological satellite cloud image dataset, CRMSCD, based on the FY-4A satellite. It contains nine cloud types and the clear sky (cloudless). CRMSCD expands the satellite cloud image datasets available for China, making it easier for researchers to exploit the wide coverage, high timeliness and high resolution of meteorological satellite cloud images in cloud recognition research.

According to the evaluation of experimental results and a visual analysis, our method has a higher recognition accuracy with smoother and clearer boundaries than existing image segmentation methods. At the same time, we introduce the transformer structure in the encoder and the encoder–decoder connection, demonstrating the excellent performance of the transformer. The transformer model shows excellent performance in semantic feature extraction, long-distance feature capture, comprehensive feature extraction and other aspects of natural language processing [58,59,60,61,62], which overturns the architecture of traditional neural network models and makes up for the shortcomings of the CNN and the recurrent neural network (RNN). Recently, the use of transformers for visual tasks has become a new research direction, which can significantly improve the scalability and training efficiency of the model.

The FY-4A meteorological satellite cloud image contains 14 channels covering visible and near-infrared, shortwave infrared, midwave infrared, water vapor and longwave infrared bands, which carry rich semantic information. We introduce a transformer into meteorological satellite cloud recognition tasks, and use its powerful global feature extraction capabilities to enhance the overall perception and macro understanding of images. The transformer can capture the key features of different channels and explore the structure, range, and boundary of different cloud types. Features such as shape, hue, shadow, and texture are distinguished to achieve more accurate and efficient cloud recognition results. Existing visual transformer models, such as SETR and Swin Transformer, require pre-training on large-scale data to obtain results comparable to or better than those of CNNs. In contrast, our models obtain excellent experimental results on CRMSCD without pre-training. Efficient and accurate cloud recognition, with its high timeliness and strong objectivity, provides a basis for weather analysis and weather forecasting. In areas lacking surface meteorological observation stations, such as oceans, deserts, and plateaus, meteorological satellite cloud recognition makes up for the lack of conventional detection data and plays an important role in improving the accuracy of weather forecasting, navigation and positioning.

4.2. Other Findings

During the experiments, we also found two techniques that can effectively improve cloud recognition in meteorological satellite cloud images. We therefore conducted two groups of comparative experiments, and the experimental results are shown in Table 11 and Table 12. For demonstration purposes, we only select a fully convolutional neural network (U-Net) and a transformer-based model (Swin-B).

We use switchable normalization to replace the batch normalization in each convolution layer of U-Net. Experimental results show that switchable normalization can significantly improve the performance of U-Net on cloud recognition tasks. The improvement also applies to other fully convolutional neural networks.
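For readers unfamiliar with switchable normalization, the sketch below shows its core idea: the mean and variance used for normalization are softmax-weighted blends of instance-norm (IN), layer-norm (LN) and batch-norm (BN) statistics, with the blending weights learned during training. This is a minimal pure-Python illustration under simplifying assumptions: the affine scale and shift are omitted, batch statistics are computed from the current batch only, and the function name, data layout and input values are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switchable_norm(x, mean_logits, var_logits, eps=1e-5):
    """Normalize x with a weighted blend of IN, LN and BN statistics.

    x is indexed as [sample][channel][flattened spatial position].
    mean_logits and var_logits each hold three control parameters in the
    order (IN, LN, BN).
    """
    n_samples, n_channels = len(x), len(x[0])
    w_mean, w_var = softmax(mean_logits), softmax(var_logits)

    # Instance-norm statistics: one mean and variance per (sample, channel).
    mu_in = [[sum(ch) / len(ch) for ch in sample] for sample in x]
    var_in = [[sum((v - mu_in[n][c]) ** 2 for v in x[n][c]) / len(x[n][c])
               for c in range(n_channels)] for n in range(n_samples)]
    # Second moments let LN and BN statistics be derived from the IN ones.
    sq_in = [[var_in[n][c] + mu_in[n][c] ** 2 for c in range(n_channels)]
             for n in range(n_samples)]

    # Layer-norm statistics: average over channels within each sample.
    mu_ln = [sum(mu_in[n]) / n_channels for n in range(n_samples)]
    var_ln = [sum(sq_in[n]) / n_channels - mu_ln[n] ** 2
              for n in range(n_samples)]

    # Batch-norm statistics: average over samples within each channel.
    mu_bn = [sum(mu_in[n][c] for n in range(n_samples)) / n_samples
             for c in range(n_channels)]
    var_bn = [sum(sq_in[n][c] for n in range(n_samples)) / n_samples
              - mu_bn[c] ** 2 for c in range(n_channels)]

    out = []
    for n in range(n_samples):
        out.append([])
        for c in range(n_channels):
            mean = (w_mean[0] * mu_in[n][c] + w_mean[1] * mu_ln[n]
                    + w_mean[2] * mu_bn[c])
            var = (w_var[0] * var_in[n][c] + w_var[1] * var_ln[n]
                   + w_var[2] * var_bn[c])
            out[n].append([(v - mean) / math.sqrt(var + eps) for v in x[n][c]])
    return out


# Two samples, two channels, four spatial positions each (made-up values).
x = [[[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 2.0, 2.0]],
     [[5.0, 5.0, 7.0, 7.0], [1.0, 3.0, 1.0, 3.0]]]
y = switchable_norm(x, mean_logits=[0.0, 0.0, 0.0], var_logits=[0.0, 0.0, 0.0])
```

With equal logits, the three normalizers contribute equally. Pushing one logit far above the others recovers plain instance, layer or batch normalization, which is what allows each layer to select the statistics that suit it.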

According to the experimental results in Table 12, the introduction of the encoder–decoder connection in Swin-B greatly improves the effect of cloud recognition.

4.3. Limitations

Although UATNet has shown superior performance in experiments, it still has two limitations.

First, we only trained and tested UATNet on CRMSCD without verifying it for other satellite cloud image datasets, which merely demonstrates the excellent cloud segmentation effect of UATNet on CRMSCD. The features captured on a single dataset may be relatively limited, and the versatility and universality of UATNet were not well verified in cloud recognition.

Second, we calculated the computational complexity of all the above models, including the total number of floating-point operations (FLOPs) and the number of model parameters. As shown in Table 13, the number of operations and parameters of UATNet is higher than that of most mainstream image segmentation methods compared in the experiment. In UATNet, both the encoder and the feature fusion between encoder and decoder are implemented with transformers, and the encoder has a large number of transformer layers, which makes the total number of parameters large. The larger computation scale and parameter count place heavy demands on computing resources and training time.
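To see why stacking many transformer layers inflates the parameter count, the sketch below counts the learnable parameters of one standard transformer block (self-attention plus a two-layer MLP with two layer normalizations) and sums them over a multi-stage encoder. The stage widths and depths are hypothetical values chosen for illustration; they are not the UATNet configuration, and window-specific terms such as position biases or patch merging layers are ignored.

```python
def transformer_block_params(dim, mlp_ratio=4):
    """Count learnable parameters in one standard transformer block."""
    # Q, K, V and output projections, each a dim x dim weight plus a bias.
    attention = 4 * (dim * dim + dim)
    # Two linear layers of the MLP, with biases.
    hidden = mlp_ratio * dim
    mlp = (dim * hidden + hidden) + (hidden * dim + dim)
    # Scale and shift vectors of the two layer normalizations.
    layer_norms = 2 * (2 * dim)
    return attention + mlp + layer_norms


# Hypothetical four-stage encoder; widths and depths are illustrative only.
stage_dims = [96, 192, 384, 768]
stage_depths = [2, 2, 6, 2]
total = sum(depth * transformer_block_params(dim)
            for dim, depth in zip(stage_dims, stage_depths))
print(f"approximate encoder block parameters: {total / 1e6:.1f} M")
```

The count is dominated by the roughly 12 × dim² weights per block, so doubling the channel width nearly quadruples the cost of every layer at that stage.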

4.4. Future Work

We analyzed the advantages and disadvantages of UATNet in detail. In this section, we discuss four possible research directions in the future.

In future experiments, we will expand the size of the dataset and train our model on other satellite cloud image datasets. This can not only help UATNet learn richer and more discriminative features, but also verify its generalization ability and general applicability in cloud recognition.

UATNet has a relatively large number of operations and parameters, resulting in a large consumption of computing resources and a long convergence time, which is not conducive to model deployment. Reducing network parameters without losing model accuracy, and thereby balancing calculation speed against accuracy, is a meaningful research direction. We will try to integrate the ideas of SqueezeNet [63], MobileNet [64] and other methods into our model for more refined model compression. Transfer learning [65,66] and knowledge distillation [67,68] can also be introduced into our cloud recognition work.

The convolution operation in a CNN is ideal for extracting local features, but it has some limitations in capturing global feature representations. The UATNet encoder uses transformer blocks, whose self-attention mechanism and MLP block can reflect complex spatial transformations and long-distance feature dependence. The transformer focuses on global features such as contour representation and shape description, while tending to neglect local features. In cloud recognition, fusing the transformer with a CNN to improve both the local sensitivity and the global awareness of the model can therefore play an important role in capturing rich and complex cloud feature information.

Finally, we will compare with physics-based algorithms and explore the robustness to noise in radiance data and to shifts in co-registration between channels.