Red Tide Detection Method for HY−1D Coastal Zone Imager Based on U−Net Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Satellite Data

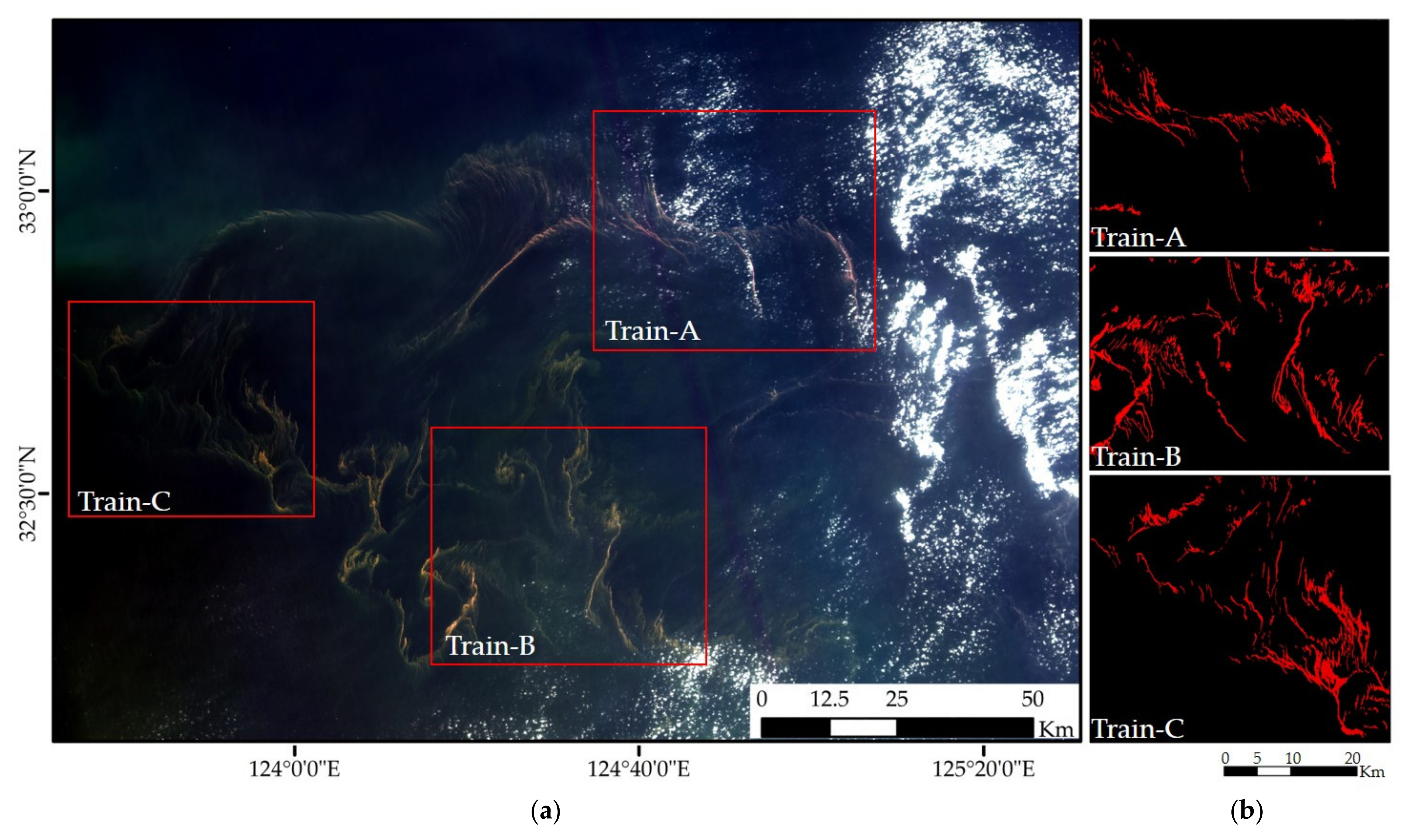

2.2. Dataset Construction

2.3. Related Methodology

2.3.1. U−Net Model

2.3.2. Comparison Methods

2.3.3. Accuracy Evaluation

3. RDU−Net Model for Red Tide Detection

3.1. RDU−Net Model Framework

3.1.1. Channel Attention Model

3.1.2. Boundary and Binary Cross Entropy Loss Function

3.2. Flowchart of Red Tide Detection Based on RDU−Net Model

3.2.1. Data Preprocessing

3.2.2. Splicing Method Based on Ignoring Boundary

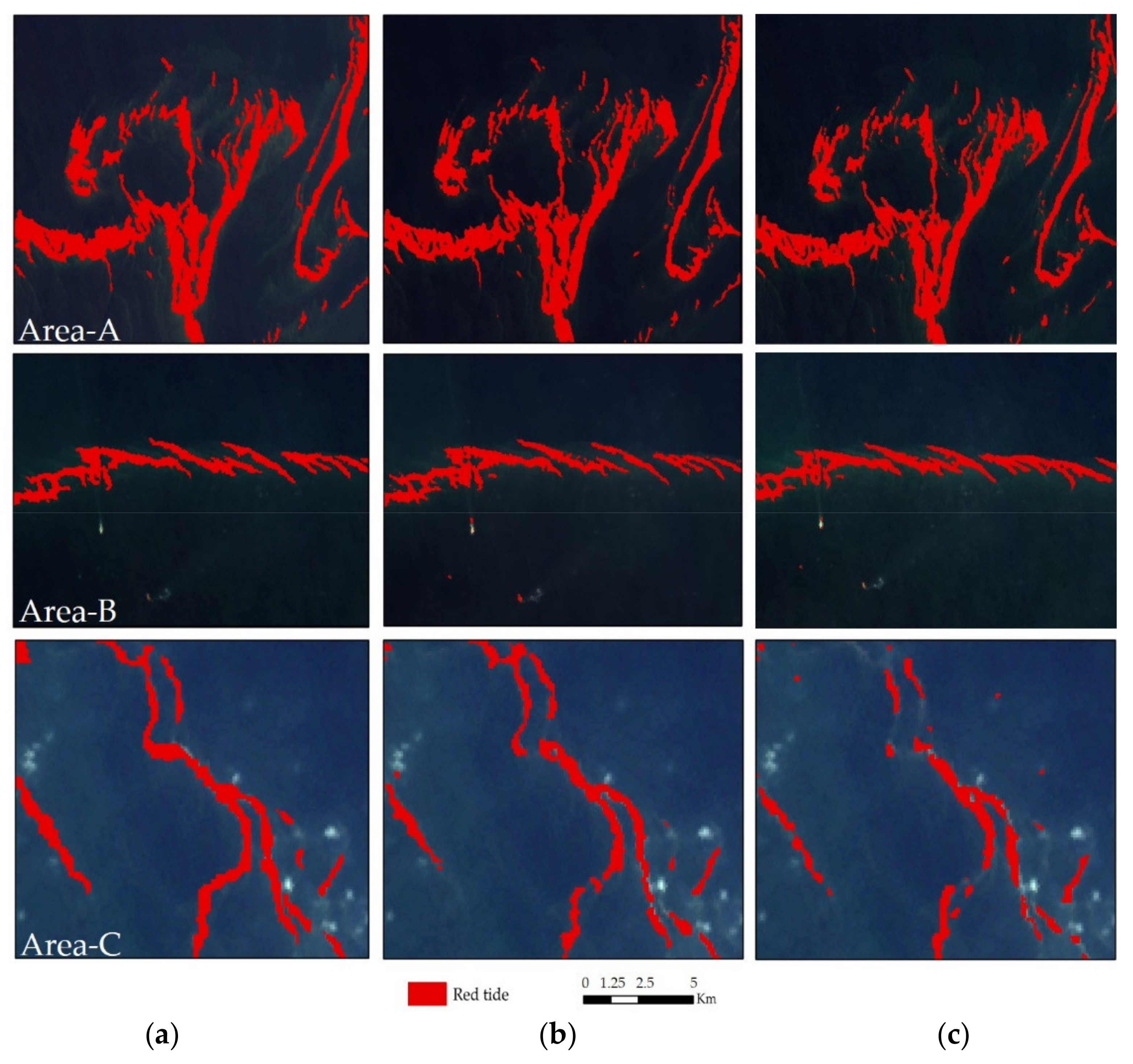

3.3. Results

4. Discussion

4.1. Sensitivity Analysis of Loss Function Parameters

4.2. Analysis of Multi−Feature Effect on Red Tide Detection

4.3. Applicability Analysis of Rayleigh Correction

4.4. Method Applicability Analysis

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Sensor | Band Number | Spectral Range (nm) | Central Wavelength (nm) | Resolution (m) | Swath (km) | Revisit Cycle (Day) |
|---|---|---|---|---|---|---|
| HY−1D CZI | 1 | 420–500 | 460 | 50 | 950 | 3 |
| HY−1D CZI | 2 | 520–600 | 560 | 50 | 950 | 3 |
| HY−1D CZI | 3 | 610–690 | 650 | 50 | 950 | 3 |
| HY−1D CZI | 4 | 760–890 | 825 | 50 | 950 | 3 |
| GF−1 WFV | 1 | 450–520 | 485 | 16 | 800 | 4 |
| GF−1 WFV | 2 | 520–600 | 560 | 16 | 800 | 4 |
| GF−1 WFV | 3 | 630–690 | 660 | 16 | 800 | 4 |
| GF−1 WFV | 4 | 760–900 | 830 | 16 | 800 | 4 |

| Sensor | Date | Longitude | Latitude | Function |
|---|---|---|---|---|
| HY−1D CZI | 17 August 2020 | 123°36′53″–125°31′17″ | 31°58′20″–33°17′11″ | Algorithm design and verification |
| GF−1 WFV | 15 August 2020 | 122°57′07″–125°46′19″ | 31°30′53″–33°44′35″ | Exploration of algorithm applicability |

| Method | Precision (%) | Recall (%) | F1−Score | Kappa |
|---|---|---|---|---|
| RDU−Net | 87.47 | 86.62 | 0.87 | 0.87 |
| U−Net | 81.33 | 79.52 | 0.80 | 0.80 |
| FCN−8s | 72.34 | 73.66 | 0.73 | 0.73 |
| SegNet | 75.39 | 63.04 | 0.69 | 0.68 |
| SVM | 74.46 | 66.60 | 0.70 | 0.70 |
| GF1_RI | 67.49 | 64.08 | 0.66 | 0.65 |
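As a quick consistency check on the method comparison above, the following minimal sketch recomputes each F1−score from the reported precision and recall, assuming the standard definition F1 = 2PR / (P + R). The function name `f1_score` and the dictionary layout are illustrative choices, not part of the original work; the numbers are copied from the table.

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall (inputs in percent, result in 0-1)."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2 * p * r / (p + r)


# (precision %, recall %, reported F1) copied from the table above.
# Keys use ASCII hyphens for convenience (an assumption of this sketch).
reported = {
    "RDU-Net": (87.47, 86.62, 0.87),
    "U-Net": (81.33, 79.52, 0.80),
    "FCN-8s": (72.34, 73.66, 0.73),
    "SegNet": (75.39, 63.04, 0.69),
    "SVM": (74.46, 66.60, 0.70),
    "GF1_RI": (67.49, 64.08, 0.66),
}

# Recompute F1 to two decimals, matching the table's precision.
recomputed = {
    name: round(f1_score(p, r), 2) for name, (p, r, _) in reported.items()
}

for name, (_, _, f1_reported) in reported.items():
    status = "ok" if abs(recomputed[name] - f1_reported) < 1e-9 else "differs"
    print(f"{name:8s} reported={f1_reported:.2f} recomputed={recomputed[name]:.2f} {status}")
```

Under this definition, all six rows reproduce the reported F1−score to two decimals.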

| α | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|
| F1−score | 0.806 | 0.810 | 0.821 | 0.826 | 0.829 | 0.830 | 0.838 | 0.868 | 0.834 | 0.822 |
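The sensitivity sweep above can be read programmatically. This short sketch only selects the α with the highest reported F1−score; the variable names are illustrative, and nothing here assumes how α enters the loss function.

```python
# alpha values and F1-scores copied from the sensitivity table above.
alphas = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
f1_scores = [0.806, 0.810, 0.821, 0.826, 0.829, 0.830, 0.838, 0.868, 0.834, 0.822]

# Pick the (alpha, F1) pair with the largest F1.
best_alpha, best_f1 = max(zip(alphas, f1_scores), key=lambda pair: pair[1])

print(f"best alpha = {best_alpha}, F1 = {best_f1}")
```

The F1−score rises steadily up to α = 0.8 and falls off for larger values.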

| Dataset | Precision (%) | Recall (%) | F1−Score | Kappa |
|---|---|---|---|---|
| Multi−feature dataset | 87.47 | 86.62 | 0.87 | 0.87 |
| Four−bands dataset | 79.54 | 84.69 | 0.82 | 0.82 |

| Data | Precision (%) | Recall (%) | F1−Score | Kappa |
|---|---|---|---|---|
| Rayleigh correction product | 85.47 | 82.23 | 0.83 | 0.82 |

| Data | Precision (%) | Recall (%) | F1−Score | Kappa |
|---|---|---|---|---|
| GF−1 WF2 image | 85.42 | 87.30 | 0.86 | 0.86 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, X.; Liu, R.; Ma, Y.; Xiao, Y.; Ding, J.; Liu, J.; Wang, Q. Red Tide Detection Method for HY−1D Coastal Zone Imager Based on U−Net Convolutional Neural Network. Remote Sens. 2022, 14, 88. https://doi.org/10.3390/rs14010088
