1. Introduction

Urbanization, along with population growth, aging and international migration, is one of the four “demographic mega-trends” identified by the United Nations. In 2018, more than 55 percent of the world's population lived in urban areas, and this proportion is projected to increase to about two thirds by 2050 [1]. Urbanization has changed the structure and environment of the urban surface, resulting in artificially elevated temperatures, especially in megacities [2,3]. The urban heat island (UHI), the phenomenon in which urban areas are warmer than their surroundings, has contributed to climate issues such as heat waves and air pollution worldwide and has attracted increasing attention [4,5]. Traditional UHI studies only analyze the difference between “rural” and “urban” areas and lack a standard urban classification framework for evaluating temperature differences within urban regions [6,7,8].

To fill this gap in urban classification, Stewart and Oke proposed the local climate zone (LCZ) scheme in 2012 [9], which divides an area of interest into 17 standard LCZ types, 10 built types and 7 land cover types, describing diverse urban landscapes at scales ranging from hundreds of meters to several kilometers. Detailed information on each LCZ type is shown in Figure 1. By considering building height, spatial distribution, and the covering material of the land surface, the scheme provides a standard evaluation framework for UHI studies. As LCZ research has become popular in related fields, the demand for high-quality LCZ maps has also grown. However, a variety of mapping quality assessments indicate that LCZ mapping still leaves considerable room for improvement: accuracy improves substantially only when all built classes are considered together or when weights defined by the morphological and climatic similarity of certain classes are applied [10].

Geographic information system (GIS)-based mapping shows high potential for producing high-quality LCZ maps [12], but large-scale GIS-based mapping is limited by data availability and operational complexity, which has made remote sensing (RS)-based mapping the mainstream. Most RS-based mapping products use pixels as mapping units, and among them the Level 0 product mapping process proposed by the WUDAPT (World Urban Database and Access Portal Tools) project is the most popular [13]. The WUDAPT method uses free Landsat imagery and SAGA GIS software to resample remote sensing images to 100 m and performs LCZ mapping with a random forest classifier. To date, LCZ maps for more than 100 cities around the world have been completed and shared. However, WUDAPT-based LCZ maps clearly lack accuracy. The overall accuracy (OA) of the 90 LCZ maps uploaded to the WUDAPT portal is 74.5%, and the average OA of the built LCZ types across these 90 maps is only 59.3%, leaving much room for improvement [14]. To obtain high-quality LCZ maps, many researchers have worked on mapping methods. Verdonck used derived spectral information to aid classification [15], while Liu and Shi proposed a new network architecture for LCZ classification [16]. However, pixel-based classification still has two problems: (1) it cannot delineate good boundaries between LCZ types; (2) most LCZ products are produced at low resolution, resulting in mixed pixels.

Object-based classification has achieved great success in high-resolution image analysis. In recent years, some scholars have begun to explore object-based LCZ mapping. Collins and Dronova generated an object-based LCZ map for the urban areas of Salt Lake City, United States of America [17], and argued that the object-based LCZ classification paradigm could better describe the boundaries of LCZ types. In addition, Ma et al. demonstrated the effectiveness of object-based LCZ mapping through a land surface temperature analysis of three cities in China [18]. Although object-based image analysis (OBIA) has gradually been explored for LCZ mapping, these studies did not demonstrate its advantages, and existing research has not reached a definite conclusion on whether object-based LCZ mapping is more advantageous. In addition, many current studies use auxiliary data to aid LCZ mapping, which can be divided into two categories: (1) derivation of related features, which uses spectral indices and statistics such as the mean, maximum, and minimum to aid classification [14]; (2) addition of datasets, which uses multiple datasets simultaneously, such as Landsat, Sentinel, Global Urban Footprint, and OpenStreetMap [19,20]. Although multi-source data were used in these studies, the mapping units were all pixels, limiting the effectiveness of the multi-source data.

Comparisons between object-based and pixel-based methods have been carried out in various fields. Shi and Liu compared the two methods for mapping quasi-circular vegetation patches (QVPs) and recommended the object-based support vector machine (SVM) approach [21]. Nachappa showed that the object-based geon approach creates meaningful regional units and performs better than the pixel-based approach in landslide susceptibility mapping [22]. Similar research has been conducted for land use/land cover (LULC) mapping and change detection, where the object-based method showed greater potential than the pixel-based method [23,24,25]. However, little research has systematically compared object-based and pixel-based methods for LCZ mapping, especially with multi-source data. Since most LCZ products are made with the pixel-based paradigm, it is important to determine the advantages and disadvantages of the object-based paradigm and whether image objects can maximize the benefits of multi-source data.

This study aims to systematically compare object-based and pixel-based LCZ mapping with multi-source data. Since few studies have been carried out on object-based LCZ mapping, we further propose an improved object-based LCZ mapping workflow. The objectives of this research are to: (1) complete the object-based LCZ mapping process with multi-source data and an object-based sampling strategy; (2) compare the LCZ maps generated by the two paradigms; (3) assess multi-source features in object-based LCZ mapping; (4) discuss future directions for improving object-based LCZ mapping.

3. Methods

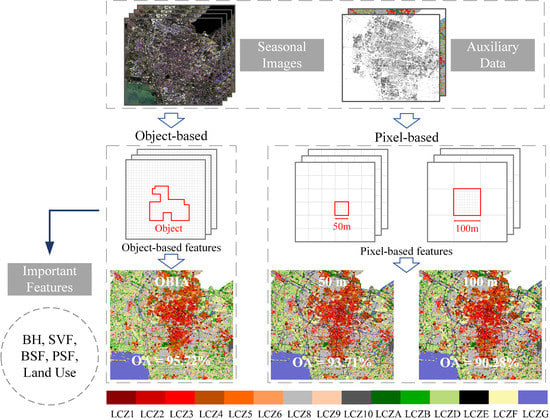

For LCZ mapping, four main steps were conducted in this study (Figure 3):

Data preprocessing. To obtain high-quality data for LCZ mapping, seasonal composite satellite images were generated as the basic mapping data, while building data and land use data were converted to raster form. All data were resampled to a 10 m ground sampling distance (GSD).

Feature derivation for LCZ mapping. To enrich spectral information and better depict urban form, diverse features were selected and derived, including spectral reflectance, spectral indices, zonal information obtained through filtering, and urban morphological parameters (UMPs) that describe urban morphology.

Feature extraction based on image objects and patches. After obtaining segmentation results by multi-resolution segmentation (MRS) and determining the resampling size, we extracted the zonal mean, standard deviation, and texture from image objects and from pixel patches, respectively, based on the 10 m data block.

LCZ classification. LCZ samples based on objects or pixels were input to a random forest classifier for training and testing. Finally, the random forest classifier predicted each object or pixel to produce the LCZ map.

3.1. Data Preprocessing

Synthetic seasonal cloud-free Sentinel-2 (S2) images, rasterized building data, and land use data were obtained to integrate RS and GIS information. For the S2 images, geometrically and atmospherically corrected data were accessed from Google Earth Engine, and a series of preprocessing operations was carried out to obtain cloud-free images. The QA60 band, a bitmask band containing cloud mask information, was used to remove cloudy pixels. Subsequently, image sets for each season were created for the study area with a date filter and were then used to generate synthetic images from the per-pixel median. Finally, synthetic multi-season S2 images including bands B2, B3, B4, B8, B11, and B12 were created at 10 m for LCZ mapping using bilinear resampling. The GIS data were converted and resampled to 10 m: the building layer was assigned values based on building height, while the land use layer was assigned category values from 0 to 7.
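The cloud masking and seasonal compositing described above can be sketched offline with NumPy. This is a minimal sketch, not the study's Google Earth Engine script: it assumes the reflectance bands and the QA60 band of all scenes in one season have already been exported as arrays, and the function name and array layout are illustrative. In the QA60 bitmask, bit 10 flags opaque clouds and bit 11 flags cirrus.

```python
import numpy as np

# QA60 bitmask: bit 10 = opaque clouds, bit 11 = cirrus.
CLOUD_BITS = (1 << 10) | (1 << 11)


def seasonal_median_composite(reflectance, qa60):
    """Cloud-masked per-pixel median composite for one season (illustrative helper).

    reflectance: (T, B, H, W) array holding T scenes with B bands each.
    qa60:        (T, H, W) integer QA60 bitmask for the same scenes.
    Pixels that are cloudy in every scene come out as NaN.
    """
    cloudy = (qa60.astype(np.int64) & CLOUD_BITS) != 0
    # Broadcast the (T, H, W) mask over the band axis and blank cloudy observations.
    masked = np.where(cloudy[:, None, :, :], np.nan, reflectance.astype(float))
    # The median over time ignores the NaN (masked) observations.
    return np.nanmedian(masked, axis=0)


# Three scenes, one band, a 1 x 2 image; the third scene is cloudy at pixel 0.
refl = np.array([[[[1.0, 10.0]]], [[[2.0, 20.0]]], [[[100.0, 30.0]]]])
qa = np.array([[[0, 0]], [[0, 0]], [[1024, 0]]])
composite = seasonal_median_composite(refl, qa)
# pixel 0 -> 1.5 (cloudy observation ignored), pixel 1 -> 20.0
```

Running the function once per season yields the four seasonal composites; the median is robust to residual haze or shadows that the bitmask misses.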

3.2. Derived Raster Features for LCZ Mapping

In this study, diverse features were extracted and stacked into a multi-channel data block with a resolution of 10 m. This data block includes spectral reflectance layers, spectral index layers, and zonal information layers for four seasons, as well as the UMPs, namely building height (BH), sky view factor (SVF), building surface fraction (BSF), and pervious surface fraction (PSF), and the land use layer. More information is shown in Table 2. The whole data block serves as the input for both the object-based and pixel-based methods, ensuring that the two are fully consistent for comparison. The input features in this data block are:

Spectral reflectance: blue (B2), green (B3), red (B4), and near-infrared (B8) bands were selected to provide spectral information. Because four seasonal images were used, 16 layers (4 bands × 4 seasons) were obtained.

Spectral index: NDVI (Normalized Difference Vegetation Index), NDBI (Normalized Difference Built-up Index), NBAI (Normalized Built-up Area Index), BRBA (Band Ratio for Built-up Area), BSI (Bare Soil Index), and MNDWI (Modified Normalized Difference Water Index) were used to enrich spectral information. These spectral indices have been shown to characterize vegetation, buildings, water, and bare soil [26,27], helping the classifier mine underlying spectral differences and connections among diverse LCZ types. Notably, because built LCZ types are harder to classify, three building-related spectral indices (NDBI, NBAI, and BRBA) were selected to improve classifier performance. Across four seasons, a total of 24 spectral index layers were obtained.
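For illustration, the six indices can be computed per season from the composite bands. The band combinations below are the commonly cited definitions of these indices mapped onto Sentinel-2 bands (B2 blue, B3 green, B4 red, B8 NIR, B11 SWIR1, B12 SWIR2); they are our assumption, and the formulas actually used in the study are those given in Table 2.

```python
import numpy as np


def _nd(a, b):
    """Normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def spectral_indices(b2, b3, b4, b8, b11, b12):
    """Six indices from one season of Sentinel-2 reflectance (assumed band mapping).

    Applying this to each of the four seasonal composites gives 6 x 4 = 24 layers.
    """
    return {
        "NDVI": _nd(b8, b4),               # vegetation
        "NDBI": _nd(b11, b8),              # built-up (SWIR1 vs NIR)
        "MNDWI": _nd(b3, b11),             # water (green vs SWIR1)
        "BSI": _nd(b11 + b4, b8 + b2),     # bare soil
        "BRBA": b4 / b11,                  # built-up band ratio (red / SWIR1)
        "NBAI": _nd(b12, b11 / b3),        # built-up area (SWIR2 vs SWIR1/green)
    }


# A single vegetated pixel: NDVI is about 0.78 and NDBI about -0.33.
veg = spectral_indices(
    b2=np.array([0.04]), b3=np.array([0.08]), b4=np.array([0.05]),
    b8=np.array([0.40]), b11=np.array([0.20]), b12=np.array([0.10]),
)
```

Three of the six (NDBI, BRBA, NBAI) rely on SWIR bands, which is why B11 and B12 are kept in the composites even though they are not used as reflectance layers.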

Zonal information: Verdonck showed that using zonal information can effectively improve LCZ mapping quality at a fine scale [15]. In this study, a mean filter was applied to the seasonal images to obtain zonal information. The filter has a size of 11 × 11 pixels (110 m × 110 m at 10 m GSD) and moves with a stride of 1. Where the filter window extends beyond the image edge, zero-padding was applied. In total, 16 layers containing zonal information were obtained.
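The filtering step can be sketched in pure NumPy with an integral image, which makes the cost independent of window size. This is an illustrative implementation of an 11 × 11, stride-1 mean filter with zero-padding, not the software the study used; the function name is hypothetical.

```python
import numpy as np


def zero_padded_mean_filter(band, size=11):
    """Mean over a size x size window centred on each pixel (stride 1).

    Cells outside the image count as zeros, and every window sum is divided by
    the full window area, so values near the edges are pulled towards zero.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    r = size // 2
    padded = np.pad(band.astype(float), r, mode="constant", constant_values=0.0)
    # Integral image with a leading row and column of zeros for easy window sums.
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = band.shape
    window_sum = (
        integral[size:size + h, size:size + w]
        - integral[:h, size:size + w]
        - integral[size:size + h, :w]
        + integral[:h, :w]
    )
    return window_sum / (size * size)


ones = np.ones((5, 5))
smoothed = zero_padded_mean_filter(ones, size=3)
# Interior pixels stay 1.0; the corner sees 4 of 9 valid cells, so it becomes 4/9.
```

Applied to the four reflectance bands of each of the four seasons, this yields the 16 zonal information layers.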

Urban morphological parameter: Stewart and Oke defined LCZs by measurable and stable physical properties, including SVF, Aspect Ratio (AR), BSF, Impervious Surface Fraction (ISF), PSF, Height of Roughness Elements, and Terrain Roughness Class [9]. In this study, BH, SVF, BSF, and PSF were calculated, and the land use layer was obtained by assigning each pixel a value according to its category; these layers were chosen because they are available from our data and are widely used for LCZ mapping [12,28,29]. SVF is defined as the visible proportion of the sky hemisphere from a ground point [30]. Figure 4 displays the visible sky (S_sky) and the invisible sky (S_obstacle), which are determined by the search radius (R) and the building distribution. In this study, the SVF value of each pixel was computed with SAGA GIS [31], using the 10 m GSD building height data as input and R set to 100 m. The relevant calculation formulas are displayed in Table 3.
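SAGA GIS computes SVF internally, and Table 3 gives the formulas used in the study. Purely as an illustration of how R and the building distribution control the result, the sketch below estimates SVF at one pixel of a building-height raster with a common horizon-angle approximation, SVF ≈ 1 − (1/N) Σ sin²γ_i, where γ_i is the highest obstacle elevation angle within R in the i-th of N azimuth directions. The direction count, nearest-cell ray marching, and function name are assumptions, and SAGA's algorithm may differ.

```python
import math

import numpy as np


def sky_view_factor(heights, row, col, gsd=10.0, radius=100.0, n_dirs=16):
    """Approximate SVF at one pixel of a height raster (metres) from horizon angles.

    Uses SVF ~= 1 - mean(sin^2(gamma)) over n_dirs azimuths, where gamma is the
    highest obstacle elevation angle within `radius`, seen from the pixel's own height.
    """
    n_rows, n_cols = heights.shape
    base = float(heights[row, col])
    n_steps = int(radius // gsd)
    total = 0.0
    for k in range(n_dirs):
        azimuth = 2.0 * math.pi * k / n_dirs  # 0 = north (up), increasing clockwise
        d_row, d_col = -math.cos(azimuth), math.sin(azimuth)
        horizon = 0.0  # obstacles below the horizontal do not block the sky
        for step in range(1, n_steps + 1):
            r = int(round(row + d_row * step))
            c = int(round(col + d_col * step))
            if not (0 <= r < n_rows and 0 <= c < n_cols):
                break  # the ray has left the raster
            dist = gsd * math.hypot(r - row, c - col)
            if dist == 0.0 or dist > radius:
                continue
            horizon = max(horizon, math.atan2(float(heights[r, c]) - base, dist))
        total += math.sin(horizon) ** 2
    return 1.0 - total / n_dirs


flat = np.zeros((21, 21))
open_sky = sky_view_factor(flat, 10, 10)  # no obstacles, so SVF is 1.0
```

A pixel in open terrain returns 1.0, and the value drops as tall buildings within R raise the horizon, which is the behavior illustrated in Figure 4.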

3.3. Classification Schemes for LCZ Mapping

3.3.1. Object-Based Classification

MRS in the Trimble eCognition software was used in this study. It adopts a bottom-up approach that gradually merges homogeneous pixels into objects, with merging controlled by parameters such as scale, color/shape, and smoothness/compactness [32,33]. In addition to traditional LULC classification tasks, MRS has been successfully adopted for mapping urban functional zones [34,35], which demonstrates its potential for classifying diverse landscapes. Thus, MRS was selected for image segmentation in this study.

Regarding parameter settings, the segmentation weights of the spectral layers (16 layers) were set to 1, while those of all other layers were set to 0. The color/shape and smoothness/compactness parameters were set to 0.9/0.1 and 0.5/0.5, respectively. A total of nine scales ranging from 30 to 150 were selected to evaluate mapping results in different scale scenarios.
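To show how these parameters interact, the merge criterion of MRS can be written as follows in its commonly cited formulation; eCognition's internal implementation may differ in detail. Two adjacent objects 1 and 2 are merged into object m only if the weighted increase in heterogeneity f stays below the square of the scale parameter s:

```latex
\begin{aligned}
f &= w_{\mathrm{color}}\,\Delta h_{\mathrm{color}}
   + (1 - w_{\mathrm{color}})\left[w_{\mathrm{cmpct}}\,\Delta h_{\mathrm{cmpct}}
   + (1 - w_{\mathrm{cmpct}})\,\Delta h_{\mathrm{smooth}}\right] < s^{2},\\
\Delta h_{\mathrm{color}} &= \sum_{c} w_{c}\left[n_{m}\sigma_{c,m} - \left(n_{1}\sigma_{c,1} + n_{2}\sigma_{c,2}\right)\right],\\
\Delta h_{\mathrm{cmpct}} &= n_{m}\frac{l_{m}}{\sqrt{n_{m}}} - \left(n_{1}\frac{l_{1}}{\sqrt{n_{1}}} + n_{2}\frac{l_{2}}{\sqrt{n_{2}}}\right),\\
\Delta h_{\mathrm{smooth}} &= n_{m}\frac{l_{m}}{b_{m}} - \left(n_{1}\frac{l_{1}}{b_{1}} + n_{2}\frac{l_{2}}{b_{2}}\right),
\end{aligned}
```

where n is the number of pixels in an object, σ_c the standard deviation of layer c within it, w_c the layer weight (1 for the 16 spectral layers here and 0 otherwise), l the object perimeter, b the perimeter of its bounding box, and s the scale parameter. With the settings above, w_color = 0.9 and w_cmpct = 0.5, so spectral homogeneity dominates the merge decision, and larger values of s permit larger objects.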

For LCZ mapping, it is well known that values extracted from an adjacent region (mean, standard deviation, maximum, minimum, etc.) help classification [20]. However, most researchers used a moving window to calculate these statistics as new feature maps. In the object-based method, we instead extracted features from image objects. Three types of features were obtained through Trimble eCognition for our classifier: mean, standard deviation, and texture, giving 87 features in total. The number of features of each type is shown in Table 4.
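eCognition computes these statistics internally. Outside eCognition, the per-object mean and standard deviation of every layer can be obtained from a segment-label raster with a few `np.bincount` calls, as in this sketch. The label-raster input, the function name, and the use of the population (rather than sample) standard deviation are our assumptions.

```python
import numpy as np


def object_mean_std(features, segments):
    """Per-object mean and population standard deviation of every feature layer.

    features: (C, H, W) float array, e.g. the 10 m multi-channel data block.
    segments: (H, W) array of non-negative integer object ids from segmentation.
    Returns two (C, n_objects) arrays; ids that own no pixels get NaN.
    """
    ids = segments.ravel().astype(np.int64)
    n_objects = int(ids.max()) + 1
    counts = np.bincount(ids, minlength=n_objects).astype(float)
    means = np.full((features.shape[0], n_objects), np.nan)
    stds = np.full_like(means, np.nan)
    present = counts > 0
    for ch, layer in enumerate(features):
        values = layer.ravel().astype(float)
        sums = np.bincount(ids, weights=values, minlength=n_objects)
        sq_sums = np.bincount(ids, weights=values * values, minlength=n_objects)
        mean = sums[present] / counts[present]
        # Var = E[x^2] - E[x]^2; clip tiny negative values caused by rounding.
        var = np.maximum(sq_sums[present] / counts[present] - mean**2, 0.0)
        means[ch, present] = mean
        stds[ch, present] = np.sqrt(var)
    return means, stds


feats = np.array([[[1.0, 3.0], [5.0, 5.0]]])  # one layer, 2 x 2 pixels
segs = np.array([[0, 0], [1, 1]])              # two objects
obj_means, obj_stds = object_mean_std(feats, segs)  # means [2, 5], stds [1, 0]
```

Each object then becomes one sample row whose columns are these statistics, which is what the random forest receives in the object-based scheme.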

There are 63 mean features. Sixty-one of them were calculated individually from the input layers (16 reflectance, 24 spectral index, 16 zonal information, and 5 UMP and land use layers), and the remaining two were MAX.DIFF and brightness. The 16 standard deviation features were calculated from the composite S2 images. The Gray Level Co-occurrence Matrix (GLCM) was used to extract texture features from the composite S2 images; it describes the distribution of co-occurring gray-level values in a given area and provides a second-order statistical description of texture. We selected eight GLCM features computed over all directions: contrast, dissimilarity, homogeneity, angular second moment, entropy, mean, standard deviation, and correlation.
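The eight texture measures can be illustrated with a small NumPy GLCM. In this sketch, "all directions" is taken to mean summing the co-occurrence counts for the 0°, 45°, 90° and 135° offsets at distance 1 and making the matrix symmetric; the input is assumed to be already quantized to integer gray levels. These choices and the function names are assumptions, and eCognition's exact quantization and normalization may differ.

```python
import numpy as np

# Offsets (d_row, d_col) for 0, 45, 90 and 135 degrees at distance 1.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm_all_directions(img, levels):
    """Symmetric, normalized GLCM summed over four directions.

    img: 2-D integer array already quantized to gray levels in [0, levels).
    """
    P = np.zeros((levels, levels), dtype=float)
    h, w = img.shape
    for dr, dc in OFFSETS:
        # Slice so that a[k] and b[k] are neighbours separated by (dr, dc).
        r0, r1 = max(0, -dr), min(h, h - dr)
        c0, c1 = max(0, -dc), min(w, w - dc)
        a = img[r0:r1, c0:c1]
        b = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
        np.add.at(P, (a.ravel(), b.ravel()), 1.0)
    P = P + P.T  # count each pair in both orders
    return P / P.sum()


def glcm_features(P):
    """The eight texture measures named in the text, from a normalized GLCM."""
    i, j = np.meshgrid(np.arange(P.shape[0]), np.arange(P.shape[1]), indexing="ij")
    mu = (i * P).sum()  # GLCM mean (rows and columns agree because P is symmetric)
    var = ((i - mu) ** 2 * P).sum()
    nz = P > 0
    return {
        "contrast": ((i - j) ** 2 * P).sum(),
        "dissimilarity": (np.abs(i - j) * P).sum(),
        "homogeneity": (P / (1.0 + (i - j) ** 2)).sum(),
        "asm": (P ** 2).sum(),
        "entropy": -(P[nz] * np.log(P[nz])).sum(),
        "mean": mu,
        "std": np.sqrt(var),
        # Correlation is undefined for a constant patch; report 1.0 by convention.
        "correlation": ((i - mu) * (j - mu) * P).sum() / var if var > 0 else 1.0,
    }


stripes = np.array([[0, 1], [0, 1]])
texture = glcm_features(glcm_all_directions(stripes, levels=2))
```

For the object-based method the GLCM would be accumulated only over pixel pairs inside each object; for the pixel-based method, over each image patch.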

3.3.2. Pixel-Based Classification

In pixel-based classification, two schemes at 50 and 100 m were designed for LCZ mapping. Although there is not yet a consensus on the most appropriate mapping scale for LCZ classification, Bechtel et al. argued that a “valid” LCZ may vary depending on the resolution, and that mapping at 100–150 m is a good compromise, while 10–30 m is too fine [13]. Furthermore, the WUDAPT project, which has strongly promoted LCZ research, produced LCZ maps at a resolution of 100 m, leading most scholars to perform LCZ classification at 100 m [10,14,16,36]. Thus, 100 m was selected as the first choice in this study. In addition, because multi-source data can provide detailed urban information that makes LCZ mapping possible at a fine scale, we selected 50 m as the second choice, which is nearly the highest resolution used in LCZ mapping research [37]. After the optimal scale was selected for the object-based method, we compared it with the pixel-based method at 50 and 100 m.

To make the pixel-based and object-based methods comparable, the zonal mean, standard deviation, and texture were extracted from image patches for LCZ classification instead of the traditional resampled values. As in the object-based method, a total of 87 features were adopted: 63 zonal mean features calculated from the data block, 16 standard deviation features, and 8 texture features calculated from the composite S2 images.
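At 10 m GSD, a 50 m pixel corresponds to a 5 × 5 patch of the data block and a 100 m pixel to a 10 × 10 patch. The patch statistics can be sketched with a single reshape, assuming the raster is cropped to a whole number of patches (how the study handled edge remainders is not stated); the function name is illustrative.

```python
import numpy as np


def patch_mean_std(layer, patch):
    """Mean and standard deviation of non-overlapping patch x patch blocks of a 2-D layer.

    Rows/columns at the bottom/right edge that do not fill a whole patch are dropped.
    """
    h, w = layer.shape
    n_r, n_c = h // patch, w // patch
    blocks = layer[: n_r * patch, : n_c * patch].reshape(n_r, patch, n_c, patch)
    return blocks.mean(axis=(1, 3)), blocks.std(axis=(1, 3))


PATCH_50M, PATCH_100M = 5, 10  # patch sizes in 10 m pixels

grid = np.arange(16, dtype=float).reshape(4, 4)
block_means, block_stds = patch_mean_std(grid, 2)  # means [[2.5, 4.5], [10.5, 12.5]]
```

Because each 100 m cell aggregates four times as many 10 m pixels as a 50 m cell, the 50 m scheme produces four times as many samples to classify.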

3.4. Random Forest Classifier

Random forest has been widely adopted in remote sensing, showing strong performance in classification and regression tasks [38,39,40]. It grows independent decision trees on random subsets of the training samples (drawn by bootstrapping) and of the input variables, and obtains the final result by majority vote over the trees. In addition, because the popular WUDAPT project performs LCZ classification with random forest, most current LCZ maps were produced with this classifier. We selected the same classifier to better isolate the difference between the object-based and pixel-based methods.

Because random forest is composed of independent decision trees, it is sensitive to the input features, which makes it convenient for analyzing feature importance in LCZ mapping. The classifier used in this study consists of 476 trees. In addition, 60% of the total sample data was selected for training the classifier, and all samples were used for testing, as we performed an area-based accuracy assessment for the object-based method.
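The 60% training draw, combined with the stratified random sampling mentioned in Section 3.5, can be sketched as follows. The function name and fixed seed are illustrative, and the sketch assumes one LCZ label per sample (object or pixel). It also returns the complementary indices for convenience.

```python
import numpy as np


def stratified_split(labels, train_fraction=0.6, seed=0):
    """Draw about `train_fraction` of the samples of every class for training.

    Returns sorted (train_idx, rest_idx) index arrays into `labels`.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, rest = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)  # positions of this class
        rng.shuffle(idx)
        n_train = int(round(train_fraction * idx.size))
        train.append(idx[:n_train])
        rest.append(idx[n_train:])
    return np.sort(np.concatenate(train)), np.sort(np.concatenate(rest))


lcz = np.array([0] * 10 + [1] * 5)
train_idx, rest_idx = stratified_split(lcz)  # 6 samples of class 0, 3 of class 1
```

Stratifying per class keeps rare LCZ types represented in the training set at the same 60% rate as common ones, which a single random draw over all samples would not guarantee.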

3.5. Sampling Strategy

Three steps were conducted for sampling in this study. First, sample polygons were digitized in Google Earth. Second, the samples were overlaid on the segmentation/resampled layer. Finally, reference objects/pixels were extracted. Considering the actual conditions of Changzhou, we adopted 15 LCZ types, including nine built types (excluding LCZ7) and six land cover types (excluding LCZC).

Figure 5 displays the difference between the object-based and pixel-based sampling workflows. Notably, when digitizing in Google Earth, the sampling criteria of Zhu et al. were followed to identify diverse LCZ types [41]. In particular, for built LCZ types, after confirming that the target area was homogeneous, large areas were identified as LCZ8 or LCZ10, while the others were distinguished by height, compactness, and material according to Figure 1.

Because the polygon samples obtained at the digitization stage cannot completely coincide with the segmented image objects, it is difficult to label objects correctly. Radoux set different overlay ratio thresholds for labeling different land cover categories, fully considering the differences among ground objects [42]. In addition, LCZ classification is increasingly regarded as a scene classification task, and many scholars have attempted to use the surrounding information of samples to improve the robustness of the classifier [14,16,35]. For a similar purpose, a different overlay ratio threshold was set for each LCZ type. Taking 0.5 as the basic threshold (a threshold that is too high is meaningless, while one that is too low may introduce wrong samples), we adjusted the overlay ratio according to the complexity of each LCZ: simple scenes require a higher threshold, while complex ones need a lower threshold.

Table 5 displays the settings used. Finally, stratified random sampling was used to select training and test samples.
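The overlay-ratio labeling can be sketched on rasters, assuming the digitized sample polygons have been rasterized onto the same 10 m grid as the segmentation. Here the overlay ratio is taken to be the share of an object's area covered by its most frequent sample class; this definition, the integer class codes (for example 1–10 for built types and 11–17 for LCZ A–G), and the threshold values are hypothetical placeholders, and the study's actual thresholds are those in Table 5.

```python
import numpy as np

# Hypothetical per-class overlay-ratio thresholds (the study's values are in Table 5).
THRESHOLDS = {2: 0.4, 8: 0.6, 11: 0.7}
DEFAULT_THRESHOLD = 0.5


def label_objects(segments, sample_raster, thresholds=THRESHOLDS, default=DEFAULT_THRESHOLD):
    """Assign an LCZ label to each image object from rasterized sample polygons.

    segments:      (H, W) non-negative int object ids.
    sample_raster: (H, W) int LCZ codes of the digitized samples, -1 where unsampled.
    An object takes its most frequent sampled class if that class covers at least the
    class-specific fraction of the object's area; otherwise it stays unlabeled (-1).
    """
    labels = {}
    for obj in np.unique(segments):
        inside = sample_raster[segments == obj]
        sampled = inside[inside >= 0]
        if sampled.size == 0:
            labels[int(obj)] = -1
            continue
        classes, counts = np.unique(sampled, return_counts=True)
        best = int(np.argmax(counts))
        cls = int(classes[best])
        ratio = counts[best] / inside.size  # overlay ratio relative to object area
        labels[int(obj)] = cls if ratio >= thresholds.get(cls, default) else -1
    return labels


segs = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])
samples = np.array([[2, 2, 8, -1], [2, -1, -1, -1]])
object_labels = label_objects(segs, samples)  # object 0 -> LCZ 2, object 1 unlabeled
```

Lowering the threshold for complex classes such as LCZ2 in this example admits objects that only partly overlap a sample, which matches the idea of keeping surrounding scene context for heterogeneous LCZs.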

3.6. Accuracy Assessment

Overall accuracy ($OA$), the kappa coefficient ($\kappa$), user's accuracy, producer's accuracy, and the F1-score ($F1$) were used for accuracy assessment. Several accuracy metrics proposed for LCZ mapping, including $OA_{BU}$, $OA_{urb}$, and $OA_{W}$, were also taken into consideration [43]. The related formulas are shown in Table 6. For the object-based method, an area-based accuracy assessment was performed that accounts for the correctly classified proportion of area in the segmented objects [44]. For the pixel-based method, all metrics were calculated by counting correctly classified pixels. Among the accuracy metrics used, user's accuracy and producer's accuracy, which reflect commission and omission errors, were computed from a normalized confusion matrix. The F1-score is the harmonic mean of user's and producer's accuracy and is used to assess per-class performance. $OA_{BU}$ is the $OA$ of the built types only, and $OA_{urb}$ is the $OA$ of the built versus natural LCZ classes only. $OA_{W}$ is a weighted metric that accounts for the similarity and dissimilarity between classes.
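As a reference for how these metrics follow from a confusion matrix, the sketch below computes them with NumPy. The area-based variant is obtained by passing per-unit areas as weights. Our reading of the LCZ-specific metrics is: $OA_{BU}$ from the sub-matrix of built classes, $OA_{urb}$ after collapsing classes into built and natural groups, and $OA_{W}$ from a user-supplied class-similarity weight matrix. The exact weights of [43] are not reproduced here, and the formulas used in the study are those in Table 6.

```python
import numpy as np


def confusion_matrix(ref, pred, n_classes, weights=None):
    """Rows = reference class, columns = predicted class.

    Pass per-unit areas as `weights` for an area-based assessment; leave None to count units.
    """
    idx = np.asarray(ref) * n_classes + np.asarray(pred)
    cm = np.bincount(idx, weights=weights, minlength=n_classes * n_classes)
    return cm.reshape(n_classes, n_classes).astype(float)


def lcz_metrics(cm, built, weight_matrix=None):
    """OA, kappa, per-class UA/PA/F1, OA_BU, OA_urb and, if weights are given, OA_W."""
    total = cm.sum()
    diag = np.diag(cm)
    oa = diag.sum() / total
    # Expected chance agreement from the row and column marginals.
    pe = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total**2
    kappa = (oa - pe) / (1.0 - pe)
    with np.errstate(divide="ignore", invalid="ignore"):
        ua = diag / cm.sum(axis=0)  # user's accuracy = 1 - commission error
        pa = diag / cm.sum(axis=1)  # producer's accuracy = 1 - omission error
        f1 = 2 * ua * pa / (ua + pa)  # harmonic mean of UA and PA
    built = np.asarray(built)
    sub = cm[np.ix_(built, built)]
    oa_bu = np.trace(sub) / sub.sum()  # OA within the built classes only
    is_built = np.zeros(cm.shape[0], dtype=bool)
    is_built[built] = True
    # Built vs natural: a unit counts as correct if reference and prediction share a group.
    oa_urb = cm[is_built[:, None] == is_built[None, :]].sum() / total
    oa_w = None if weight_matrix is None else (cm * weight_matrix).sum() / total
    return {"OA": oa, "kappa": kappa, "UA": ua, "PA": pa, "F1": f1,
            "OA_BU": oa_bu, "OA_urb": oa_urb, "OA_W": oa_w}


ref = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
metrics = lcz_metrics(confusion_matrix(ref, pred, 3), built=[0, 1])
```

With classes 0 and 1 treated as built, this toy example gives OA = 4/6, κ = 0.5, $OA_{BU}$ = 0.75 and $OA_{urb}$ = 5/6: the one built-to-built error does not count against $OA_{urb}$.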

5. Discussion

Currently, LCZ mapping is mostly divided into two streams: RS-based and GIS-based. Integrating the two is considered to have great potential, and a few studies have begun to focus on this integration [35]. The method used in this study can be regarded as an integration of the two mapping approaches, and its results further demonstrate the potential of the integrated framework.

In terms of mapping accuracy, results at the small scale/high resolution were still better than in all other cases, which is inconsistent with studies showing that mapping at a moderate scale/resolution performs better [13,16]. This is mainly because all features adopted in this study carry regional information, as they reflect the mean or standard deviation values of image objects. Verdonck reached a similar conclusion and obtained the optimal mapping result by using neighborhood information features [15]. However, an LCZ represents a region spanning at least hundreds of meters, so mapping at too small a scale causes the result to deviate from the intended application. Mapping at too small a scale is therefore not recommended. In addition, small scales bring a heavy computational load, which is more pronounced in the pixel-based method.

According to the accuracy results and statistical analysis, object-based methods show advantages and are stable across multiple scales, because the boundaries offered by the object-based method reduce confusion among LCZs. In the pixel-based method, regular grids can hardly follow LCZ boundaries, which makes classification difficult. However, object-based methods do not benefit all LCZ types. For example, segmentation makes the labeling and classification of LCZ5 more difficult. Segmentation separates the various features that make up an LCZ into different objects, which is an urgent problem to be solved when applying the object-based method to LCZ mapping. Lehner and Blaschke proposed the use of object-based methods for urban structure type mapping [47]; if their approach is applied to LCZ mapping, the potential of object-based LCZ mapping may be further explored.

In the feature analysis, maximum difference, convolution features, NDBI, BRBA, BH, BSF, PSF, and land use were frequently selected, indicating that these features are highly important in LCZ mapping. Among the remote sensing features, convolution features containing neighborhood information and the two spectral indices containing short-wave infrared information performed well. These features have also been adopted in previous studies and proven effective [20]. GIS-based mapping has long been considered a high-precision LCZ mapping method [12], and our results also demonstrate the value of GIS data.

The performance of a machine learning classifier is closely related to the number of training samples [44]. Although the number of training samples decreases as the scale parameter increases, the object-based method achieved similar mapping accuracies at multiple scales. When mapping accuracies are similar, the choice of scale should be determined by the application. Misclassification is acceptable in some cases because similar LCZ types represent similar temperature conditions. However, if the 17 LCZ types cannot meet application requirements and the mapping scale is small, adding LCZ subclasses can be considered. Kotharkar addressed confusion among LCZ types by employing 21 LCZ types [48]. Perera also pointed out that LCZ subclasses are necessary for further analysis of urban development status [49].

6. Conclusions

This study compares the object-based and pixel-based methods for LCZ mapping with multi-source data, aiming to determine the more advantageous mapping paradigm. In addition, four types of features were derived to ensure mapping quality: (1) composite seasonal satellite images; (2) diverse spectral indices; (3) zonal information; (4) urban morphological information.

Across multiple scales, the object-based method performed stably except in under-segmentation cases. When the scale was less than 90, the and of all maps exceeded 95.5% and 92%, respectively, and no significant visual difference was observed. When the scale was larger than 105, the object-based method showed an obvious performance decline, especially for built types, indicating that multi-source data cannot provide sufficient urban information when objects are under-segmented.

Compared with the pixel-based method at 50 and 100 m, the object-based method showed the best performance and a statistically significant difference from the pixel-based methods (p < 0.05). , , and of the object-based method increased by about 2%, 1%, 1% and 1%, respectively, compared with the 50 m case (the better of the two pixel-based cases), while the pixel-based method processed 10 times the amount of data. In the per-class analysis, the object-based method showed a significant advantage for the natural types and competitive performance for the built types, although some LCZ types, such as LCZ2, LCZ5, and LCZ6, performed worse than with the pixel-based method at 50 m. However, we found that the accuracy of built types can be improved with finer segmentation.

In the feature analysis, CFS was used to evaluate feature importance, with selection frequency characterizing the importance of each feature. For urban morphological information, BH, SVF, BSF, PSF, and land use were selected with extremely high frequency at all scales. Among the other features, the convolutional layers and BRBA, NDBI, NBAI, and MNDWI of certain seasons performed well, while the original image bands were hardly selected, indicating that derived spectral features better characterize differences among LCZs.

In our study, the object-based method achieved mapping results equivalent to those of the pixel-based method at a lower computational cost, making it more suitable for large-scale mapping. In the future, the object-based method will become more advantageous as the segmentation of urban regions improves.