An Improved LAI Estimation Method Incorporating with Growth Characteristics of Field-Grown Wheat

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. UAV Data Acquisition and Pre-Processing

2.3. Field Data Acquisition

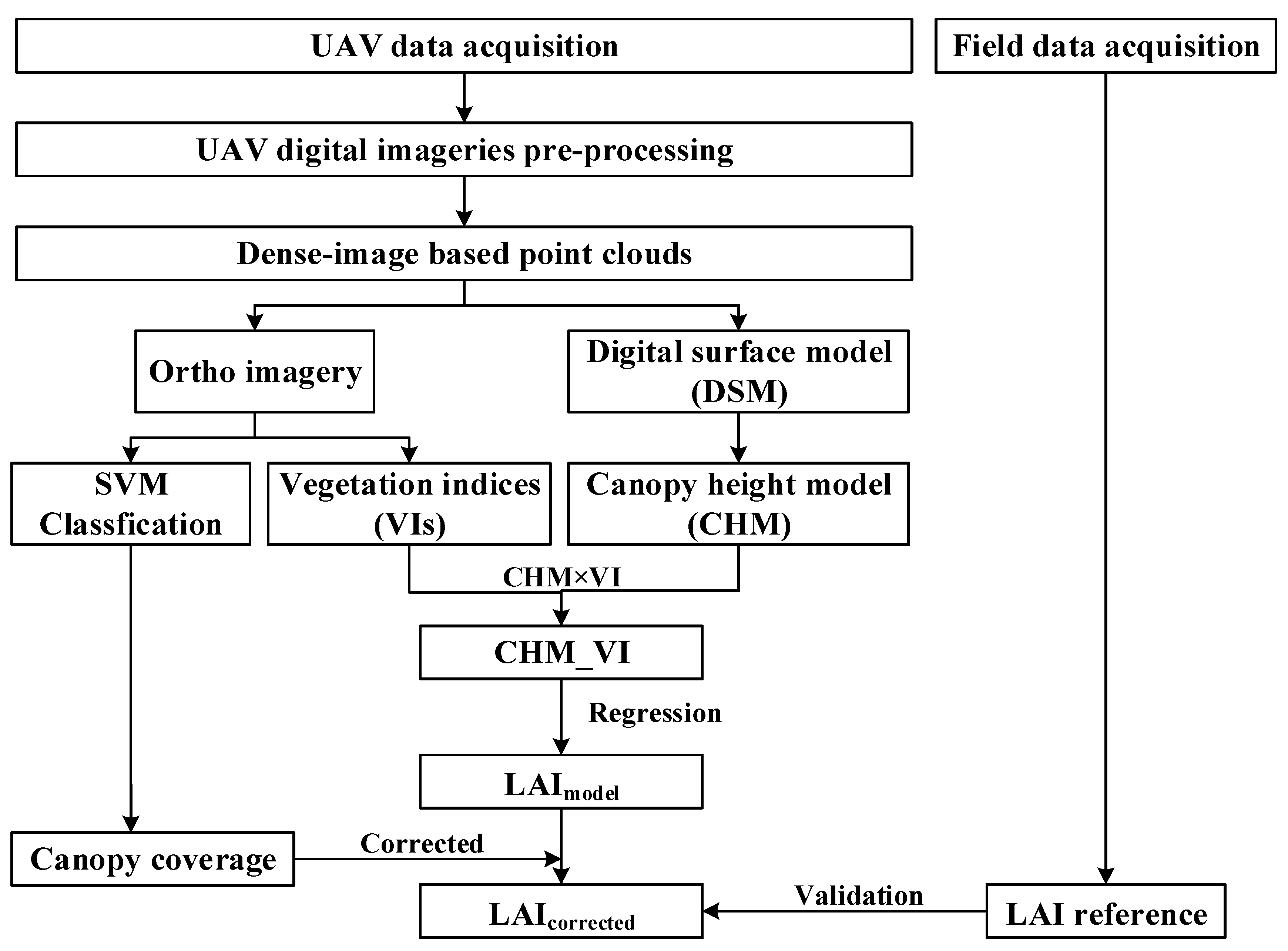

2.4. Method

2.4.1. An Improved LAI Estimation Method

2.4.2. Vegetation Index (VI) Calculation

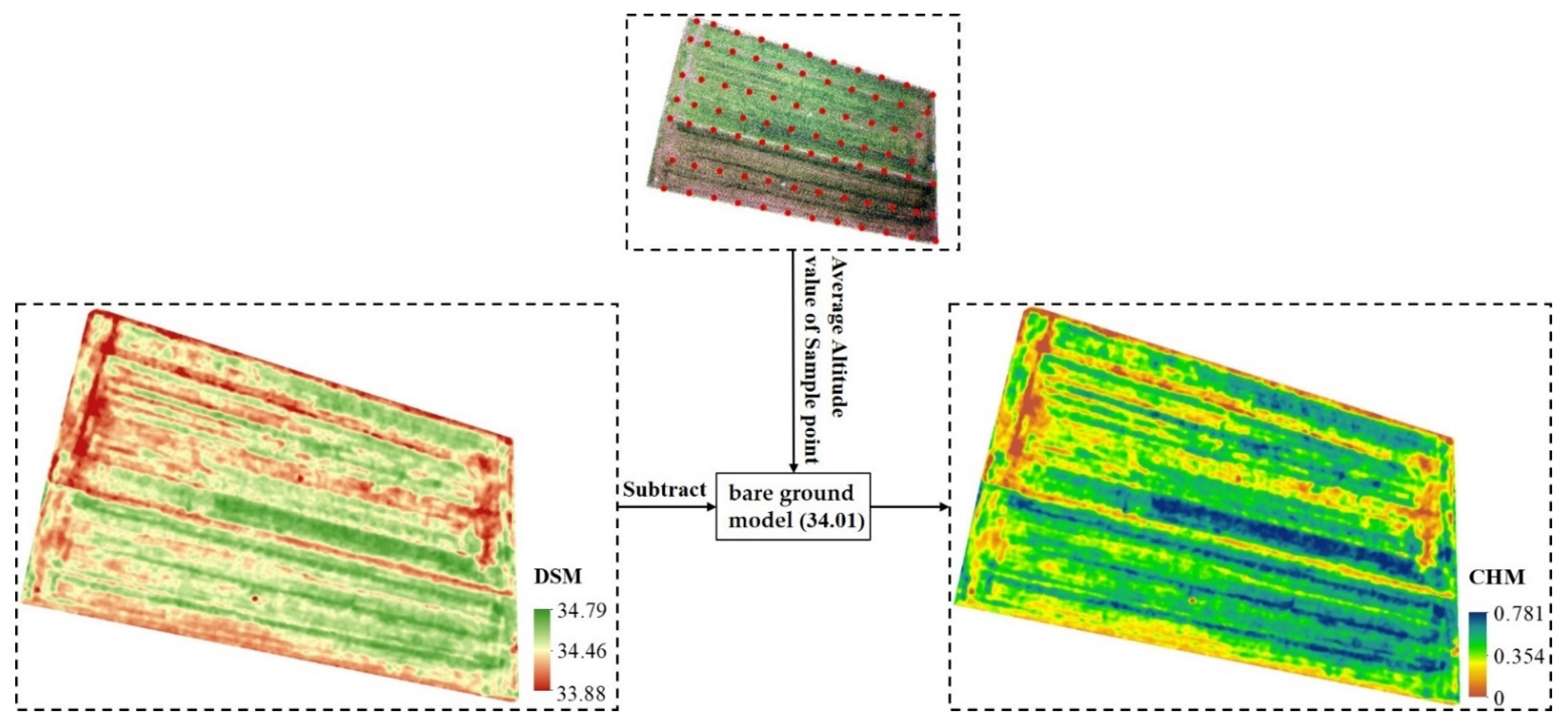

2.4.3. Canopy Height and Coverage Extraction

2.5. Evaluation Method

3. Results

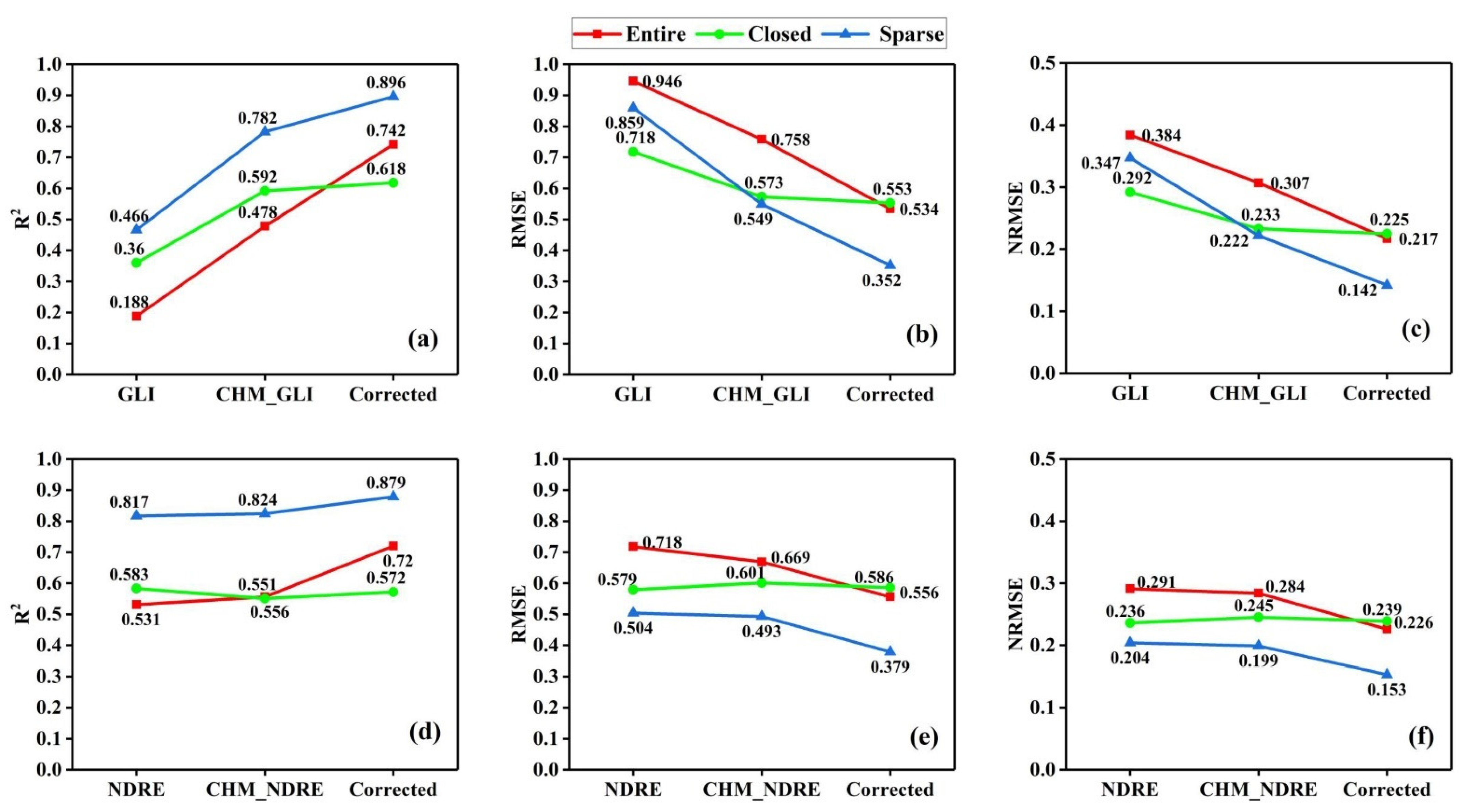

3.1. LAI Estimation Accuracy

3.2. Influence of Canopy Height

3.3. Influence of CC Correction

4. Discussion

4.1. The Role of UAV Remote Sensing

4.2. Potential of RGB Images

4.3. Estimation Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gower, S.T.; Kucharik, C.J.; Norman, J.M. Direct and Indirect Estimation of Leaf Area Index, fAPAR, and Net Primary Production of Terrestrial Ecosystems. Remote Sens. Environ. 1999, 70, 29–51.

- Brisco, B.; Brown, R.J.; Hirose, T.; McNairn, H.; Staenz, K. Precision Agriculture and the Role of Remote Sensing: A Review. Can. J. Remote Sens. 1998, 24, 315–327.

- Marshall, M.; Thenkabail, P. Developing in situ Non-Destructive Estimates of Crop Biomass to Address Issues of Scale in Remote Sensing. Remote Sens. 2015, 7, 808–835.

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. 2017, 130, 246–255.

- Thenkabail, P.S.; Gumma, M.K.; Teluguntla, P.; Mohammed, I.A. Hyperspectral remote sensing of vegetation and agricultural crops. Photogramm. Eng. Remote Sens. J. Am. Soc. Photogramm. 2014, 80, 695–723.

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

- Viña, A.; Gitelson, A.A.; Nguy-Robertson, A.L.; Peng, Y. Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sens. Environ. 2011, 115, 3468–3478.

- Sha, Z.; Wang, Y.; Bai, Y.; Zhao, Y.; Jin, H.; Na, Y.; Meng, X. Comparison of leaf area index inversion for grassland vegetation through remotely sensed spectra by unmanned aerial vehicle and field-based spectroradiometer. J. Plant Ecol. 2018, 12, 395–408.

- Feret, J.; François, C.; Asner, G.P.; Gitelson, A.A.; Martin, R.E.; Bidel, L.P.R.; Ustin, S.L.; le Maire, G.; Jacquemoud, S. PROSPECT-4 and 5: Advances in the leaf optical properties model separating photosynthetic pigments. Remote Sens. Environ. 2008, 112, 3030–3043.

- Lang, Q.; Weijie, T.; Dehua, G.; Ruomei, Z.; Lulu, A.; Minzan, L.; Hong, S.; Di, S. UAV-based chlorophyll content estimation by evaluating vegetation index responses under different crop coverages. Comput. Electron. Agric. 2022, 196, 106775.

- Amarasingam, N.; Felipe, G.; Ashan, S.A.S.; Madhushanka, K.U.W.L.; Sampageeth, W.H.A.; Rasanjana, K.B. Predicting Canopy Chlorophyll Content in Sugarcane Crops Using Machine Learning Algorithms and Spectral Vegetation Indices Derived from UAV Multispectral Imagery. Remote Sens. 2022, 14, 11140.

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral Vegetation Indices and Their Relationships with Agricultural Crop Characteristics. Remote Sens. Environ. 2000, 71, 158–182.

- Hansen, P.M.; Schjoerring, J.K. Reflectance measurement of canopy biomass and nitrogen status in wheat crops using normalized difference vegetation indices and partial least squares regression. Remote Sens. Environ. 2003, 86, 542–553.

- Dezhi, W.; Bo, W.; Jing, L.; Yanjun, S.; Qinghua, G.; Penghua, Q.; Xincai, W. Estimating aboveground biomass of the mangrove forests on northeast Hainan Island in China using an upscaling method from field plots, UAV-LiDAR data and Sentinel-2 imagery. Int. J. Appl. Earth Obs. 2020, 85, 101986.

- Zhang, J.; Qiu, X.; Wu, Y.; Zhu, Y.; Cao, Q.; Liu, X.; Cao, W. Combining texture, color, and vegetation indices from fixed-wing UAS imagery to estimate wheat growth parameters using multivariate regression methods. Comput. Electron. Agric. 2021, 185, 106138.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. 2019, 151, 27–41.

- Yan, G.; Li, L.; Coy, A.; Mu, X.; Chen, S.; Xie, D.; Zhang, W.; Shen, Q.; Zhou, H. Improving the estimation of fractional vegetation cover from UAV RGB imagery by colour unmixing. ISPRS J. Photogramm. 2019, 158, 23–34.

- Zhang, J.; Liu, X.; Liang, Y.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Using a Portable Active Sensor to Monitor Growth Parameters and Predict Grain Yield of Winter Wheat. Sensors 2019, 19, 1108.

- Hassan, M.A.; Yang, M.; Rasheed, A.; Yang, G.; Reynolds, M.; Xia, X.; Xiao, Y.; He, Z. A rapid monitoring of NDVI across the wheat growth cycle for grain yield prediction using a multi-spectral UAV platform. Plant Sci. 2019, 282, 95–103.

- Martin, K.; Insa, K.; Dieter, T.; Thomas, J. High-Resolution UAV-Based Hyperspectral Imagery for LAI and Chlorophyll Estimations from Wheat for Yield Prediction. Remote Sens. 2018, 10, 2000.

- Songyang, L.; Xingzhong, D.; Qianliang, K.; Tahir, A.S.; Tao, C.; Xiaojun, L.; Yongchao, T.; Yan, Z.; Weixing, C.; Qiang, C. Potential of UAV-Based Active Sensing for Monitoring Rice Leaf Nitrogen Status. Front. Plant Sci. 2018, 9, 1834.

- Yao, X.; Wang, N.; Liu, Y.; Cheng, T.; Tian, Y.; Chen, Q.; Zhu, Y. Estimation of Wheat LAI at Middle to High Levels Using Unmanned Aerial Vehicle Narrowband Multispectral Imagery. Remote Sens. 2017, 9, 1304.

- Zhu, W.; Sun, Z.; Huang, Y.; Lai, J.; Li, J.; Zhang, J.; Yang, B.; Li, B.; Li, S.; Zhu, K.; et al. Improving Field-Scale Wheat LAI Retrieval Based on UAV Remote-Sensing Observations and Optimized VI-LUTs. Remote Sens. 2019, 11, 2456.

- Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

- Puliti, S.; Ørka, H.; Gobakken, T.; Næsset, E. Inventory of Small Forest Areas Using an Unmanned Aerial System. Remote Sens. 2015, 7, 9632–9654.

- Zhang, J.; Wang, C.; Yang, C.; Xie, T.; Jiang, Z.; Hu, T.; Luo, Z.; Zhou, G.; Xie, J. Assessing the Effect of Real Spatial Resolution of In Situ UAV Multispectral Images on Seedling Rapeseed Growth Monitoring. Remote Sens. 2020, 12, 1207.

- Cunliffe, A.M.; Brazier, R.E.; Anderson, K. Ultra-fine grain landscape-scale quantification of dryland vegetation structure with drone-acquired structure-from-motion photogrammetry. Remote Sens. Environ. 2016, 183, 129–143.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Giannetti, F.; Chirici, G.; Gobakken, T.; Næsset, E.; Travaglini, D.; Puliti, S. A new approach with DTM-independent metrics for forest growing stock prediction using UAV photogrammetric data. Remote Sens. Environ. 2018, 213, 195–205.

- Heidarian Dehkordi, R.; Burgeon, V.; Fouche, J.; Placencia Gomez, E.; Cornelis, J.; Nguyen, F.; Denis, A.; Meersmans, J. Using UAV Collected RGB and Multispectral Images to Evaluate Winter Wheat Performance across a Site Characterized by Century-Old Biochar Patches in Belgium. Remote Sens. 2020, 12, 2504.

- Zhang, D.; Liu, J.; Ni, W.; Sun, G.; Zhang, Z.; Liu, Q.; Wang, Q. Estimation of Forest Leaf Area Index Using Height and Canopy Cover Information Extracted From Unmanned Aerial Vehicle Stereo Imagery. IEEE J. Stars 2019, 12, 471–481.

- Sun, B.; Wang, C.; Yang, C.; Xu, B.; Zhou, G.; Li, X.; Xie, J.; Xu, S.; Liu, B.; Xie, T.; et al. Retrieval of rapeseed leaf area index using the PROSAIL model with canopy coverage derived from UAV images as a correction parameter. Int. J. Appl. Earth Obs. 2021, 102, 102373.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Woebbecke, D.M.U.O.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Mounir, L.; Michael, M.B.; Douglas, E.J. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

- Anatoly, G.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. Urban Fischer 1994, 143, 286–292.

- Peñuelas, J.; Isla, R.; Filella, I.; Araus, J.L. Visible and Near-Infrared Reflectance Assessment of Salinity Effects on Barley. Crop Sci. 1997, 37, 198–202.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Ortega, G.A.; Henrique, F.M.D.S.; Goncalves, G.L.; Mantelatto, R.J.; Stephanie, C.; Martins, F.J.I.; Ricardo, M.F. Improving indirect measurements of the leaf area index using canopy height. Pesqui. Agropecu. Bras. 2020, 55.

- Jose, C.J.; Rodrigo, D.S.S.E.; Vieira, D.C.M.; Virginia, F.D.S.M.; Carlos, B.D.J.J.; de Mello Alexandre Carneiro, L. Correlations between plant height and light interception in grasses by different light meter devices. Rev. Bras. De Cienc. Agrar. 2020, 15, 1–6.

- Ma, H.; Song, J.; Wang, J.; Xiao, Z.; Fu, Z. Improvement of spatially continuous forest LAI retrieval by integration of discrete airborne LiDAR and remote sensing multi-angle optical data. Agric. For. Meteorol. 2014, 189, 60–70.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Iqbal, F.; Lucieer, A.; Barry, K.; Wells, R. Poppy Crop Height and Capsule Volume Estimation from a Single UAS Flight. Remote Sens. 2017, 9, 647.

- Watson, D.J. Comparative Physiological Studies on the Growth of Field Crops: II. The Effect of Varying Nutrient Supply on Net Assimilation Rate and Leaf Area. Ann. Bot. 1947, 11, 375–407.

- Cai, L.; Zhao, Y.; Huang, Z.; Gao, Y.; Li, H.; Zhang, M. Rapid Measurement of Potato Canopy Coverage and Leaf Area Index Inversion. Appl. Eng. Agric. 2020, 36, 557–564.

- Logsdon, S.D.; Cambardella, C.A. An Approach for Indirect Determination of Leaf Area Index. Trans. ASABE 2019, 62, 655–659.

- Linsheng, H.; Furan, S.; Wenjiang, H.; Jinling, Z.; Huichun, Y.; Xiaodong, Y.; Dong, L. New Triangle Vegetation Indices for Estimating Leaf Area Index on Maize. J. Indian Soc. Remote 2018, 46, 1907–1914.

- Zhang, J.; Yang, C.; Zhao, B.; Song, H.; Hoffmann, W.C.; Shi, Y.; Zhang, D.; Zhang, G. Crop Classification and LAI Estimation Using Original and Resolution-Reduced Images from Two Consumer-Grade Cameras. Remote Sens. 2017, 9, 1054.

- Darvishzadeh, R.; Skidmore, A.; Schlerf, M.; Atzberger, C.; Corsi, F.; Cho, M. LAI and chlorophyll estimation for a heterogeneous grassland using hyperspectral measurements. ISPRS J. Photogramm. 2008, 63, 409–426.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2003, 90, 337–352.

- Liang, L.; Di, L.; Zhang, L.; Deng, M.; Qin, Z.; Zhao, S.; Lin, H. Estimation of crop LAI using hyperspectral vegetation indices and a hybrid inversion method. Remote Sens. Environ. 2015, 165, 123–134.

- Zarate-Valdez, J.L.; Whiting, M.L.; Lampinen, B.D.; Metcalf, S.; Ustin, S.L.; Brown, P.H. Prediction of leaf area index in almonds by vegetation indexes. Comput. Electron. Agric. 2012, 85, 24–32.

| Sensor | Flight Time | Altitude (m) | Speed (m/s) | Overlap | Image GSD (cm) |
|---|---|---|---|---|---|
| CMOS camera | 12:05 a.m. | 55 | 3 | 80% (forward), 70% (side) | 1.45 |
| MicaSense Altum | 12:27 a.m. | 55 | 3 | 70% (forward), 70% (side) | 2.61 |

| Vegetation Index | Formula | Reference |
|---|---|---|
| Excess Green Index (ExG) | $2g - r - b$ | [34] |
| Excess Green minus Excess Red Index (ExGR) | $3g - 2.4r - b$ | [33] |
| Normalized Green minus Red Difference Index (NGRDI) | $(g - r)/(g + r)$ | [34] |
| Visible Atmospherically Resistant Index (VARI) | $(g - r)/(g + r - b)$ | [35] |
| Green Leaf Index (GLI) | $(2g - b - r)/(2g + b + r)$ | [36] |
| Red-edge Normalized Difference Vegetation Index (NDRE) | $(\rho_{nir} - \rho_{red\,edge})/(\rho_{nir} + \rho_{red\,edge})$ | [37] |
| Normalized Difference Vegetation Index (NDVI) | $(\rho_{nir} - \rho_{red})/(\rho_{nir} + \rho_{red})$ | [38] |
| Green Normalized Difference Vegetation Index (GNDVI) | $(\rho_{nir} - \rho_{green})/(\rho_{nir} + \rho_{green})$ | [39] |
| Difference Vegetation Index (DVI) | $\rho_{nir} - \rho_{red}$ | [40] |
| Optimized Soil Adjusted Vegetation Index (OSAVI) | $(1 + 0.16) \times (\rho_{nir} - \rho_{red})/(\rho_{nir} + \rho_{red} + 0.16)$ | [41] |
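
The formulas in the table above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' processing chain. It assumes that r, g, and b are chromatic coordinates (each visible band divided by the sum of the three bands) and that the multispectral inputs are near-infrared, red, green, and red-edge reflectances; the function names `rgb_indices` and `ms_indices` are hypothetical.

```python
import numpy as np


def rgb_indices(red_dn, green_dn, blue_dn):
    """Visible-band indices from the table.

    Assumption: r, g, b are chromatic coordinates, i.e. each band
    divided by the per-pixel sum of the three bands.
    """
    R, G, B = (np.asarray(band, dtype=float) for band in (red_dn, green_dn, blue_dn))
    total = R + G + B  # pixels summing to zero are not guarded; mask them first
    r, g, b = R / total, G / total, B / total
    return {
        "ExG": 2 * g - r - b,
        "ExGR": 3 * g - 2.4 * r - b,
        "NGRDI": (g - r) / (g + r),
        "VARI": (g - r) / (g + r - b),
        "GLI": (2 * g - b - r) / (2 * g + b + r),
    }


def ms_indices(nir, red, green, red_edge):
    """Multispectral indices from the table, computed from band reflectances."""
    nir, red, green, red_edge = (
        np.asarray(band, dtype=float) for band in (nir, red, green, red_edge)
    )
    return {
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "DVI": nir - red,
        "OSAVI": (1 + 0.16) * (nir - red) / (nir + red + 0.16),
    }
```

Both functions accept scalars or arrays of equal shape, so they can be applied to single plot averages or to whole orthomosaic bands.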

| Index Type | Spectral Index | Pearson Correlation Coefficient (R) |
|---|---|---|
| RGB-VIs | ExG | 0.433 ** |
| RGB-VIs | ExGR | 0.408 ** |
| RGB-VIs | NGRDI | 0.379 ** |
| RGB-VIs | VARI | 0.372 ** |
| RGB-VIs | GLI | 0.434 ** |
| MS-VIs | NDRE | 0.729 ** |
| MS-VIs | NDVI | 0.570 ** |
| MS-VIs | GNDVI | 0.727 ** |
| MS-VIs | DVI | 0.453 ** |
| MS-VIs | OSAVI | 0.542 ** |
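
For readers who want to reproduce this kind of comparison on their own plots, the Pearson coefficient reported in the table can be computed as sketched below. The helper name `pearson_r` and the sample arrays are hypothetical and are not data from this study; the significance test behind the `**` markers is not covered.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length 1-D samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.ndim != 1:
        raise ValueError("x and y must be 1-D arrays of equal length")
    xd = x - x.mean()
    yd = y - y.mean()
    # Covariance divided by the product of the standard deviations.
    return float((xd * yd).sum() / np.sqrt((xd ** 2).sum() * (yd ** 2).sum()))


# Illustrative values only: one vegetation-index value and one field
# measurement per plot (invented numbers, not from the paper).
vi_per_plot = [0.42, 0.55, 0.61, 0.70, 0.74]
measured_per_plot = [1.8, 2.9, 3.1, 4.4, 4.0]
r_value = pearson_r(vi_per_plot, measured_per_plot)
```

The result should agree with `np.corrcoef(vi_per_plot, measured_per_plot)[0, 1]`, which is a convenient cross-check.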

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, Z.; Deng, L.; Lu, H. An Improved LAI Estimation Method Incorporating with Growth Characteristics of Field-Grown Wheat. Remote Sens. 2022, 14, 4013. https://doi.org/10.3390/rs14164013
