Automatic Detection and Assessment of Pavement Marking Defects with Street View Imagery at the City Scale

Abstract

1. Introduction

2. Literature Review

2.1. Pavement Marking Defect Detection

2.2. Application of Street-View Imagery

2.3. Pavement-Marking Defect Assessment

2.4. Semantic Image Segmentation Based on Deep Learning

3. Materials and Methods

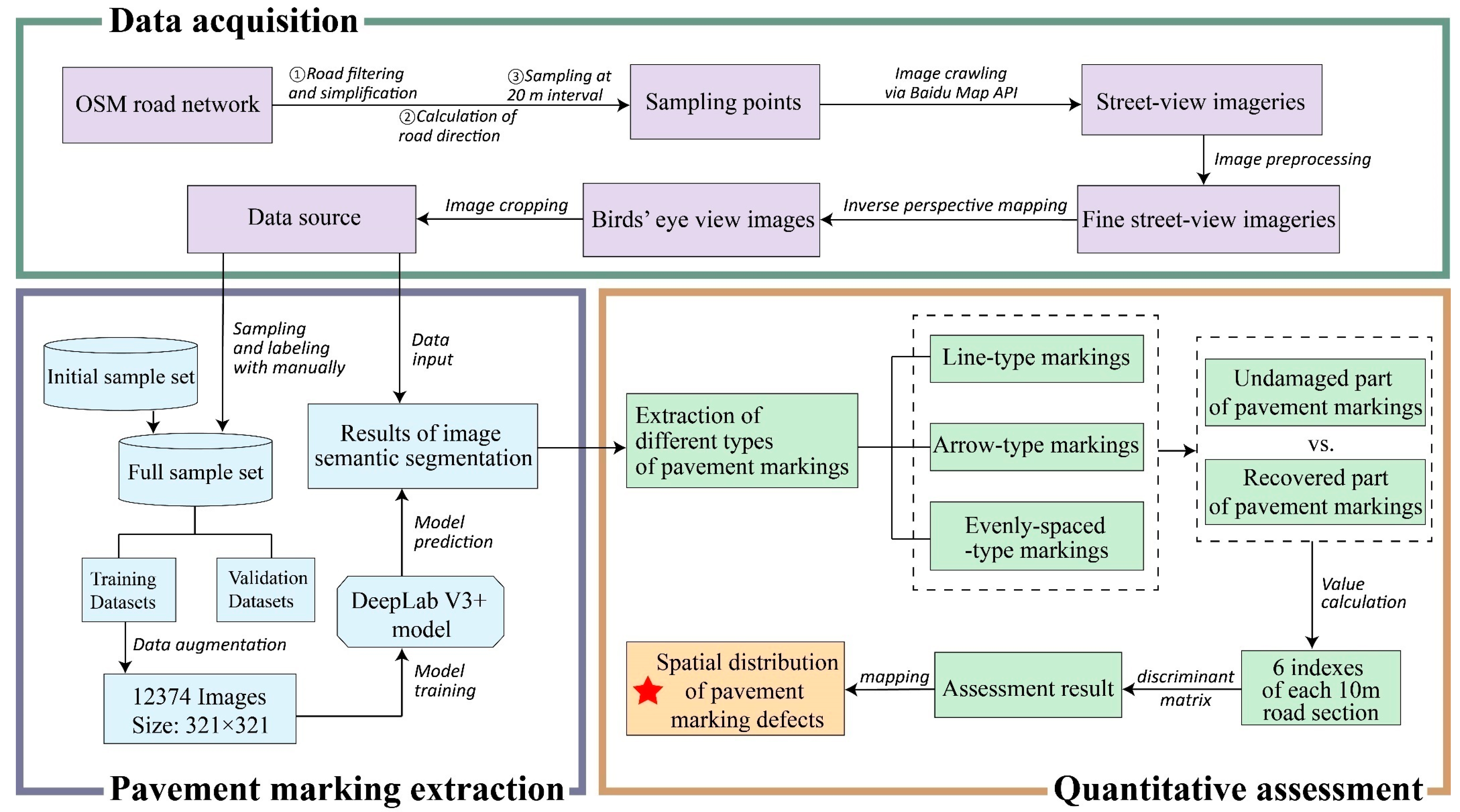

3.1. Research Framework

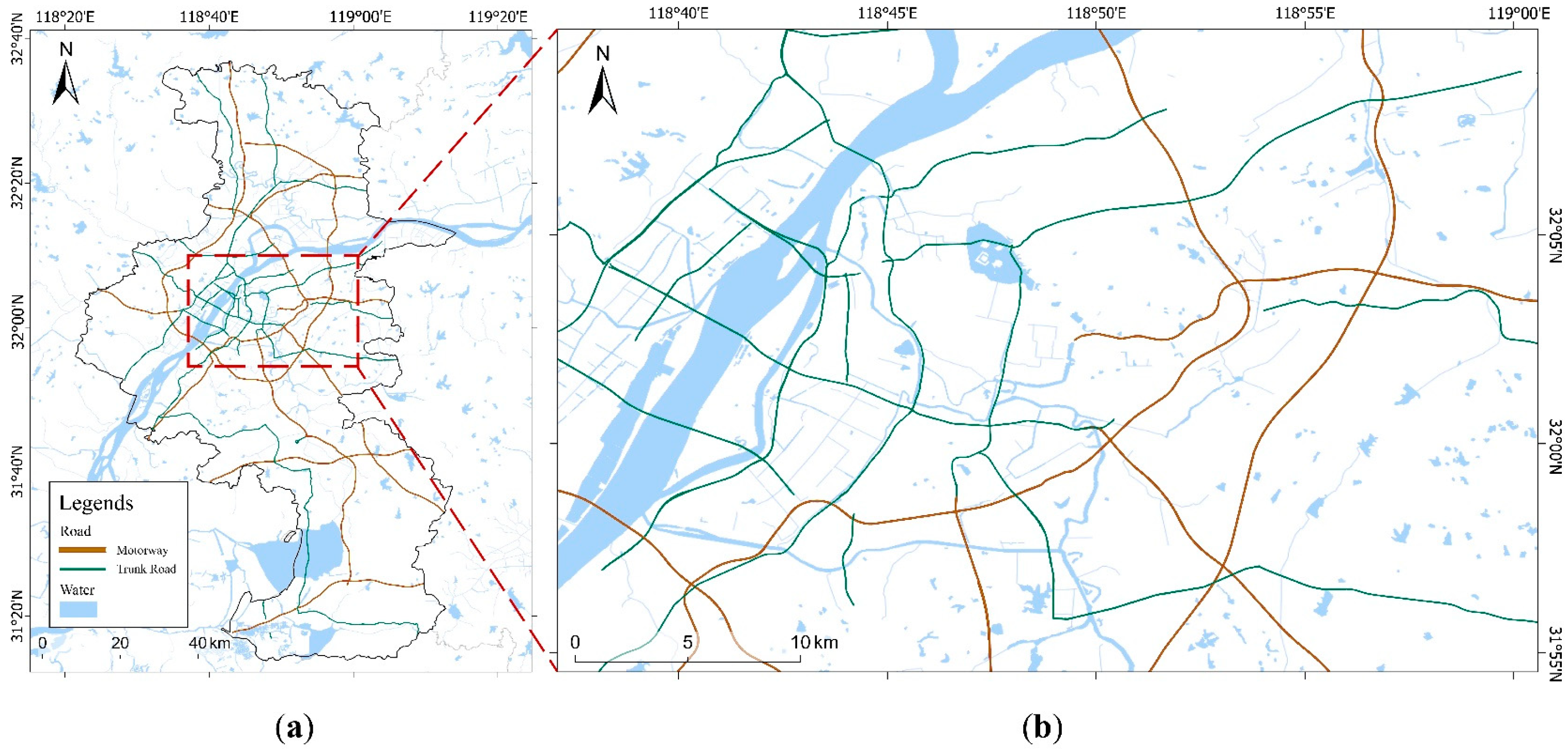

3.2. Experimental Data Acquisition

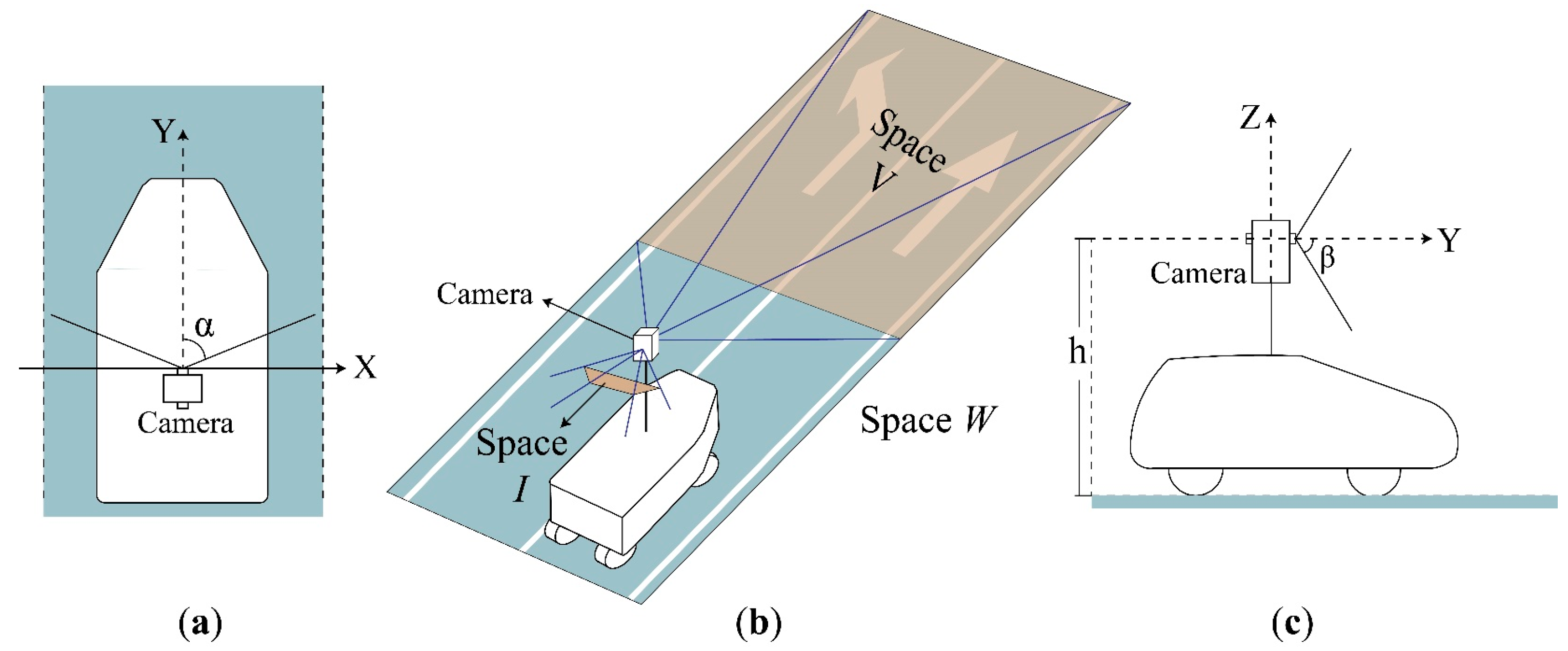

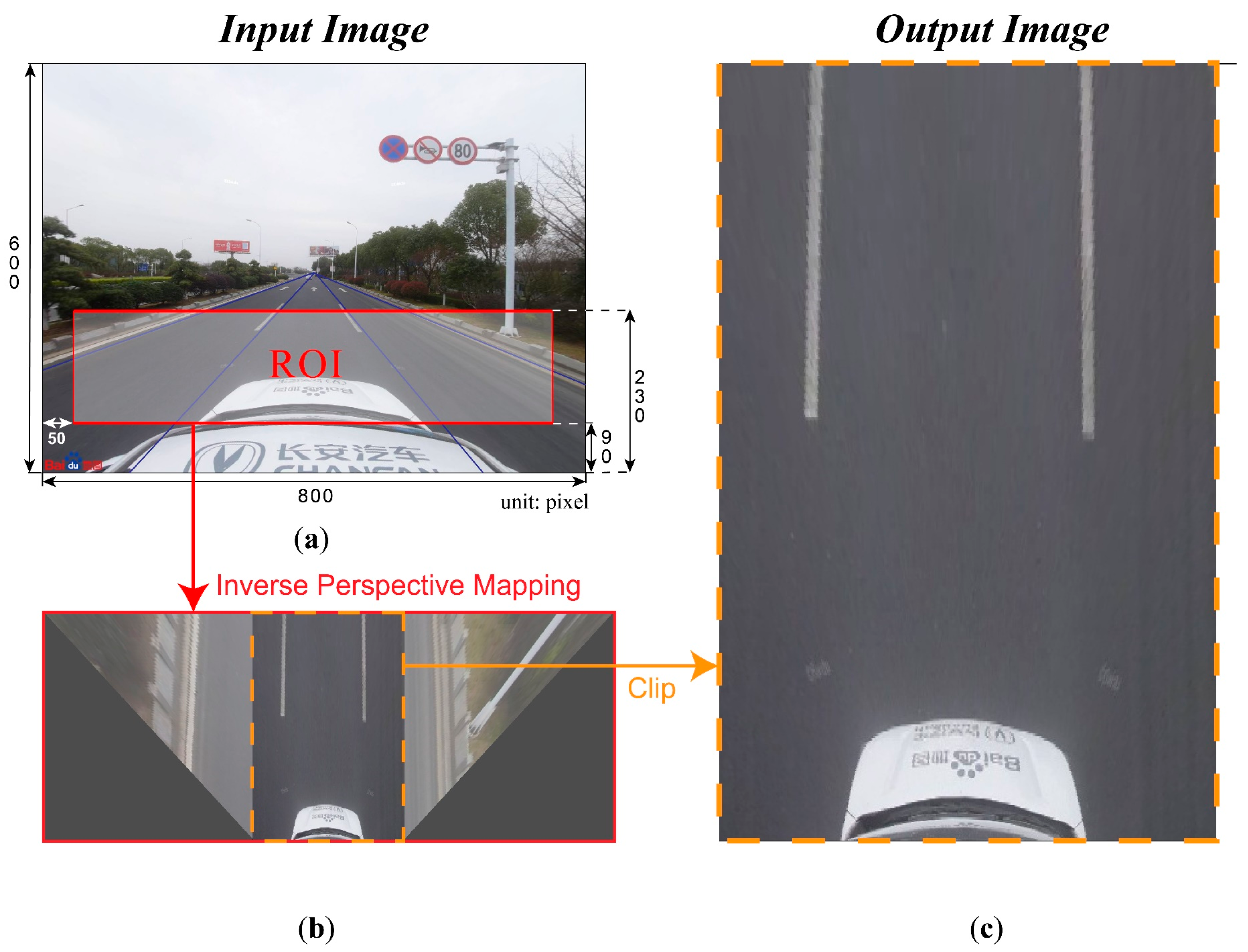

3.3. Inverse Perspective Mapping on Photographs Taken by Vehicle-Mounted Camera

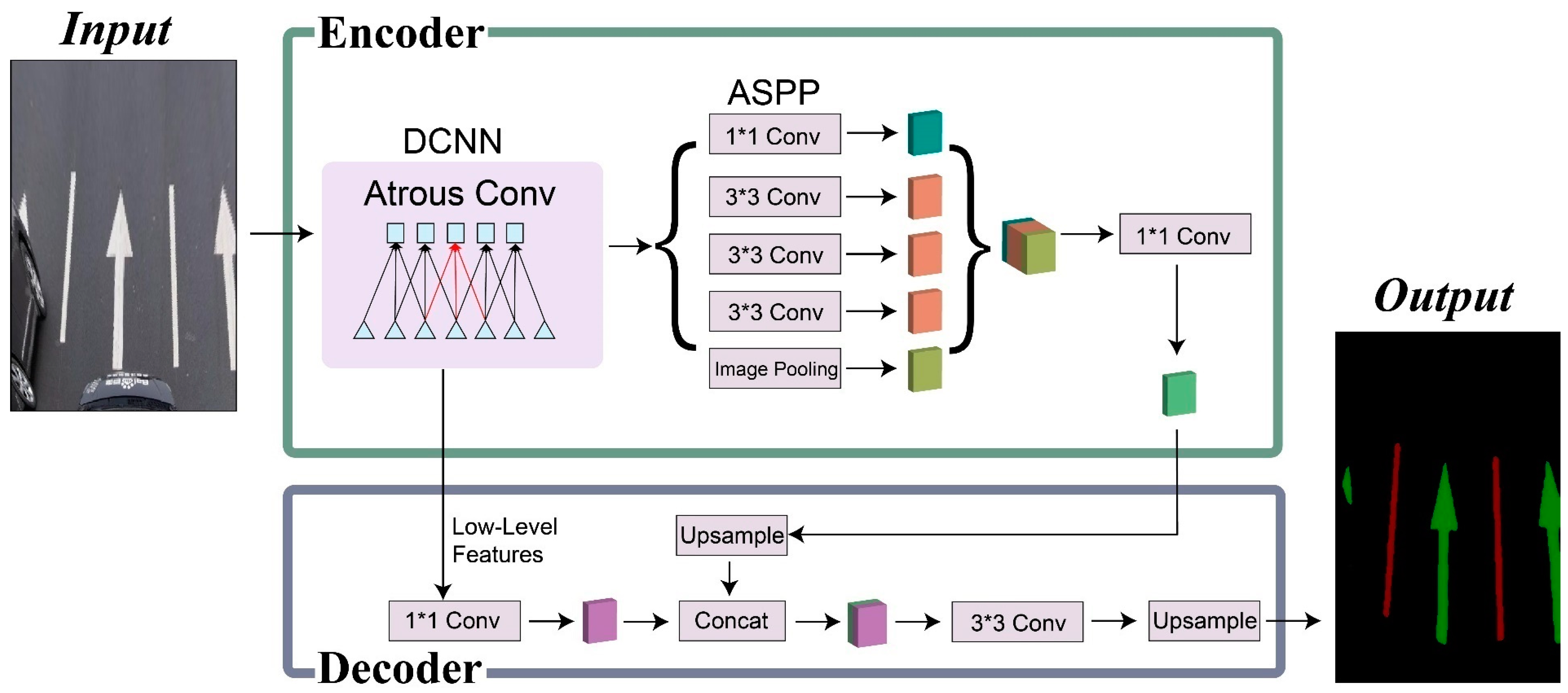

3.4. Deep-Learning-Based Extraction of Complete Pavement Markings

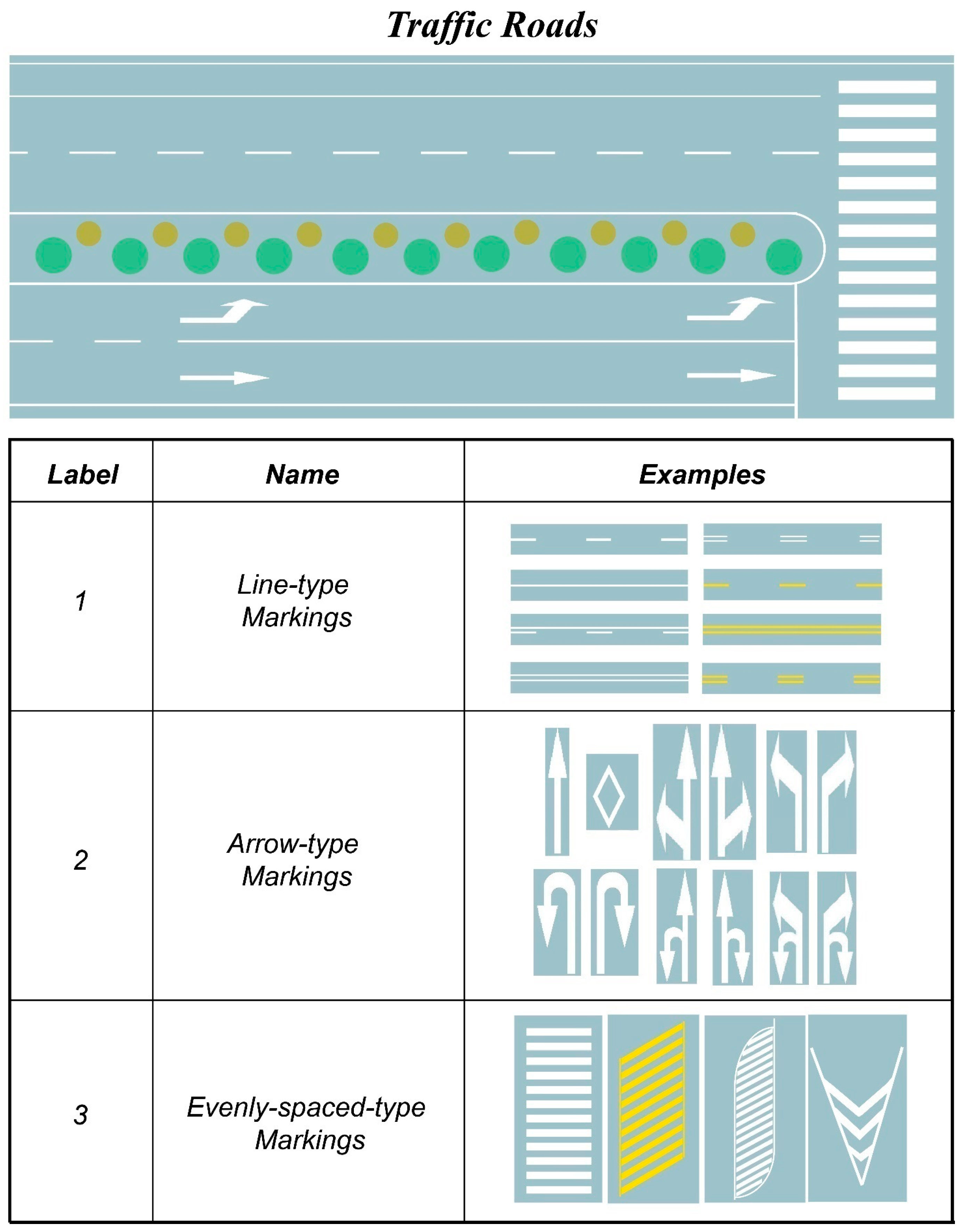

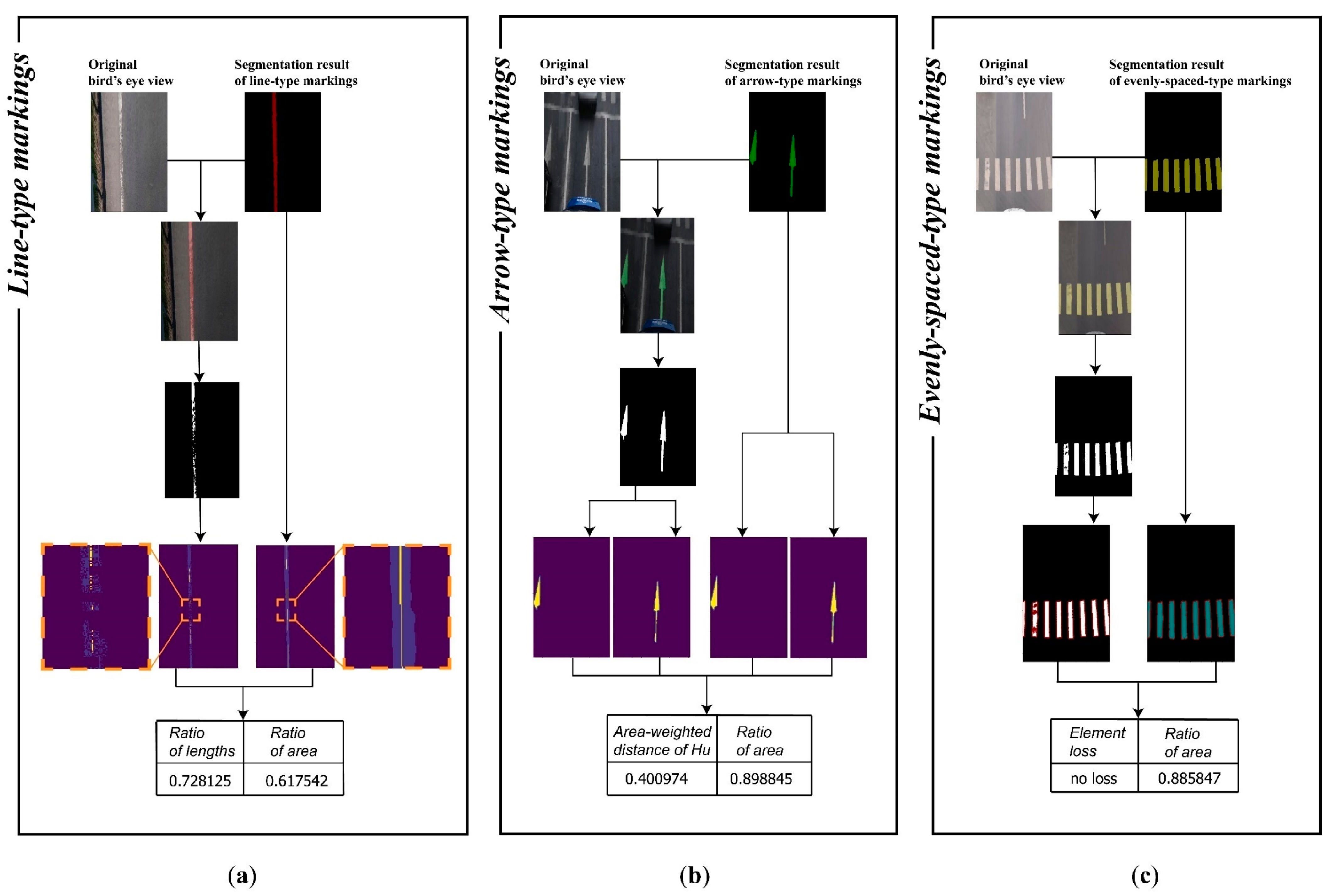

3.5. Quantitative and Qualitative Assessment of Pavement-Marking Defects

4. Results

4.1. Validation of Semantic Image Segmentation Model

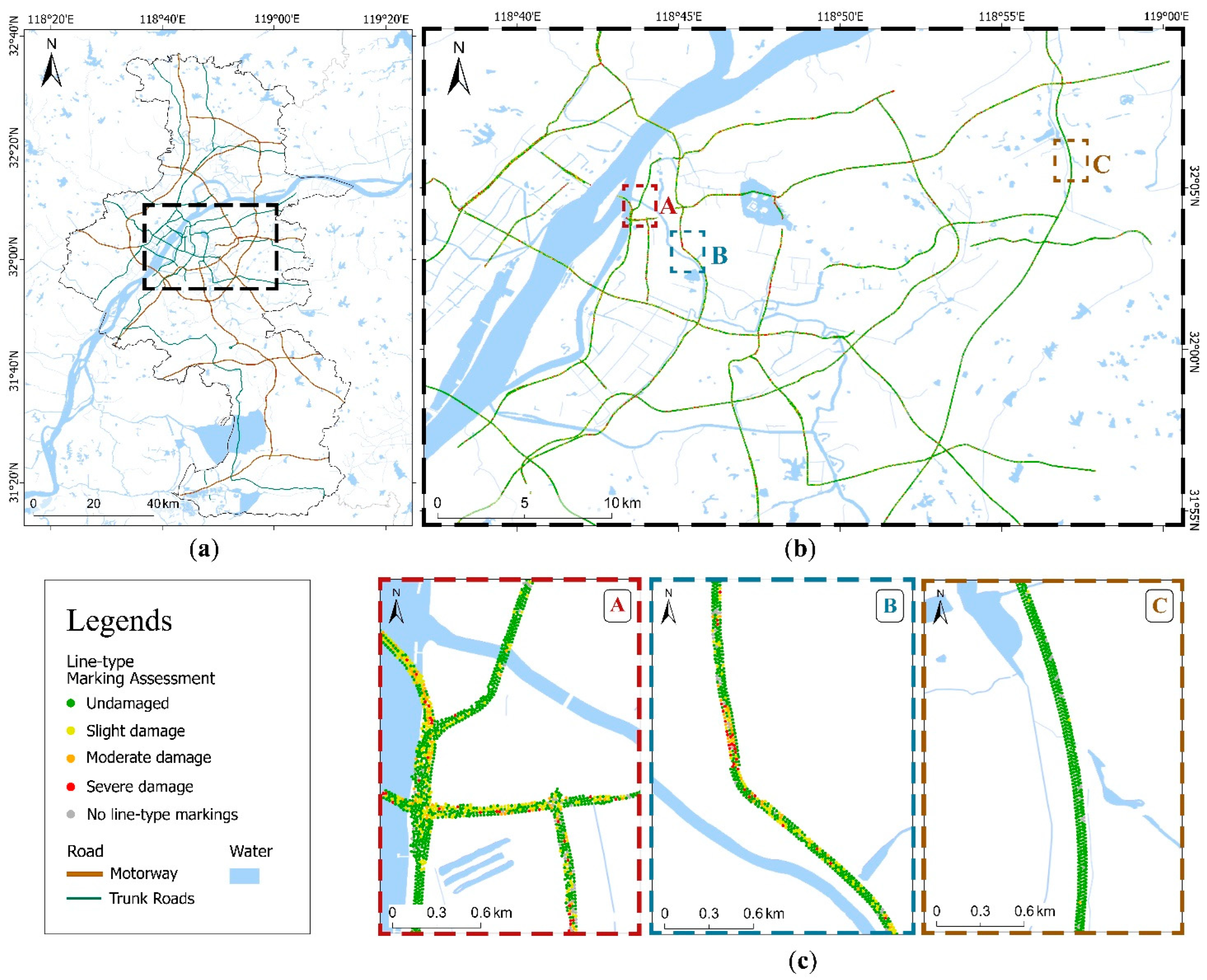

4.2. Spatial Distribution of Pavement-Marking Defects

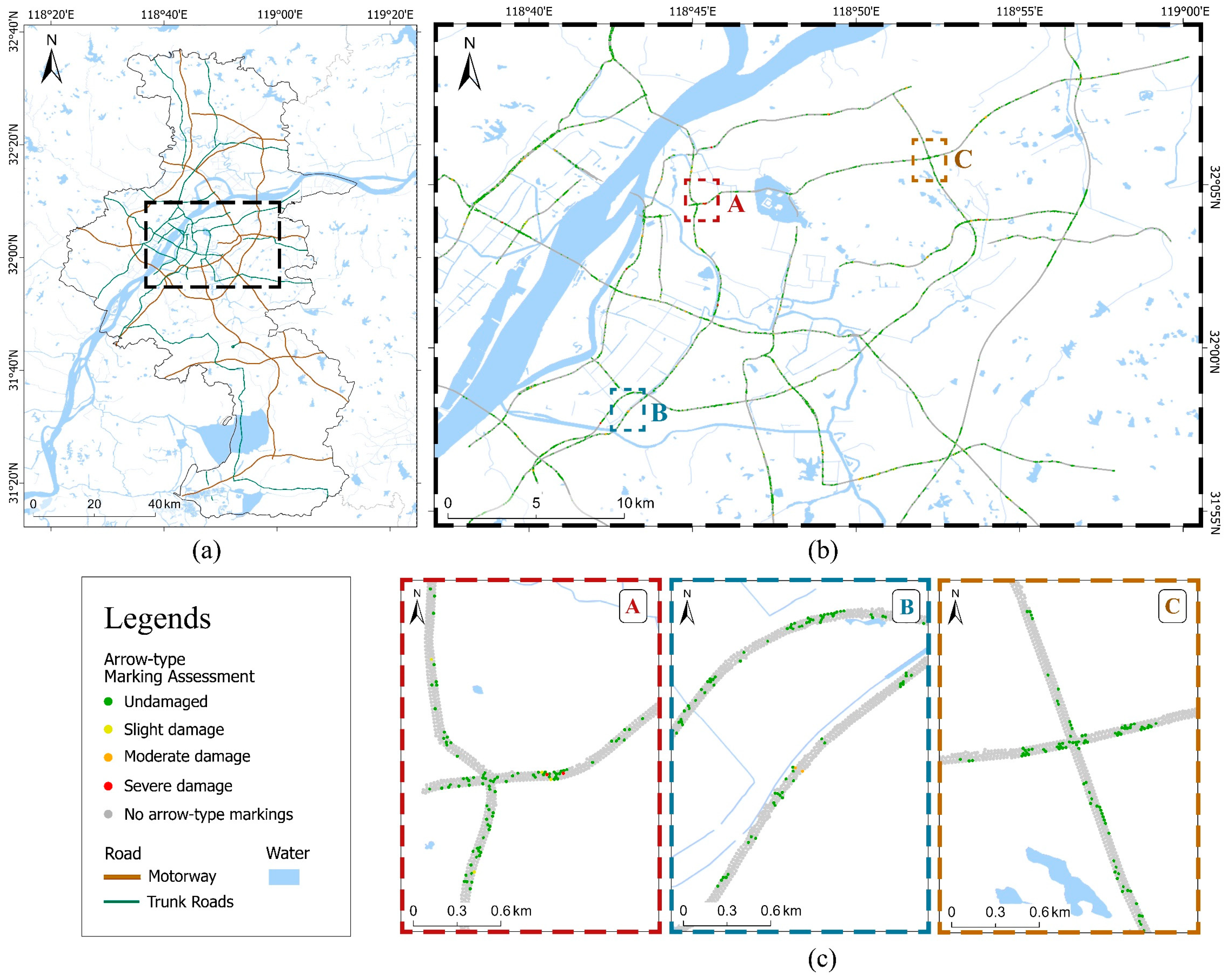

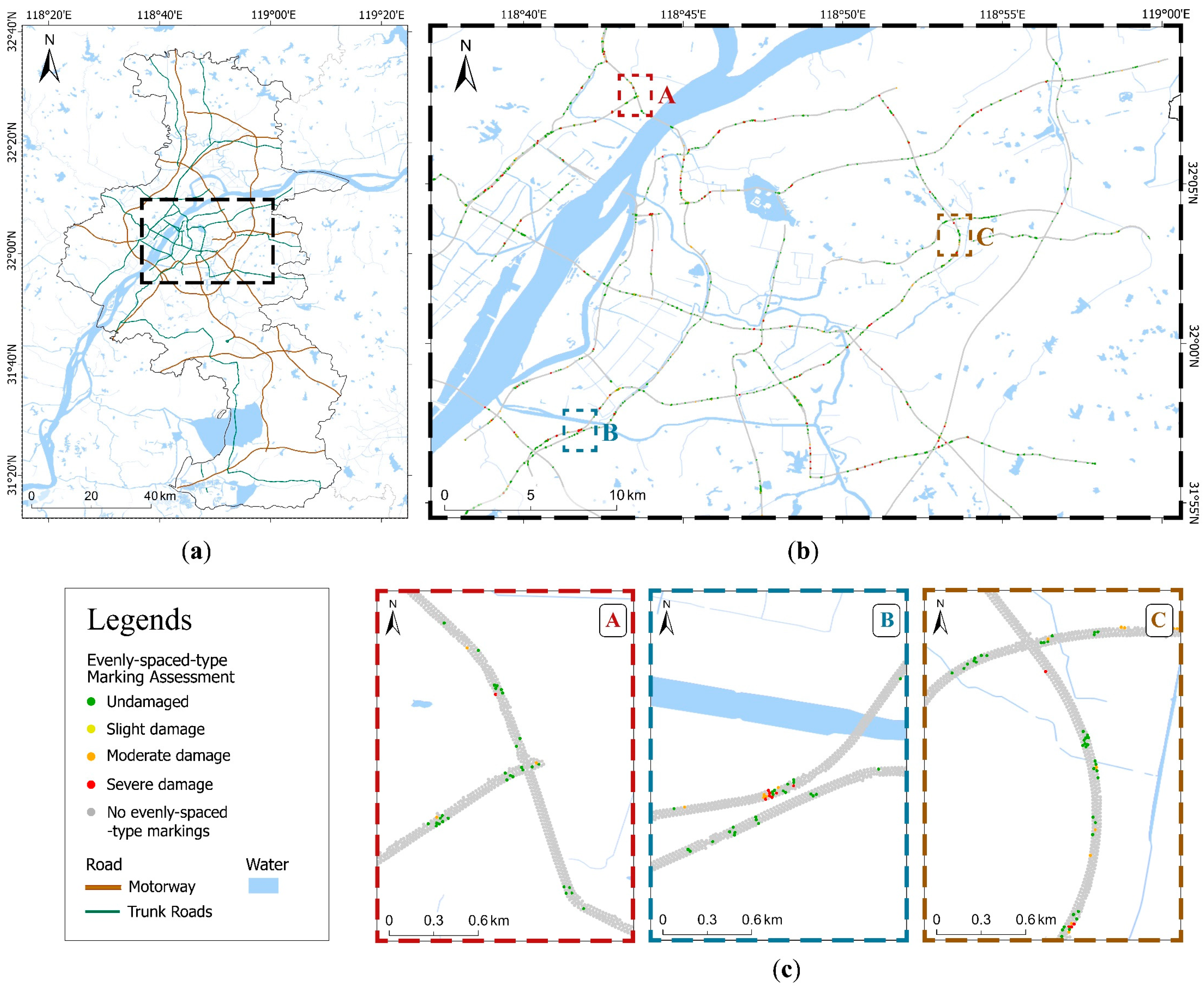

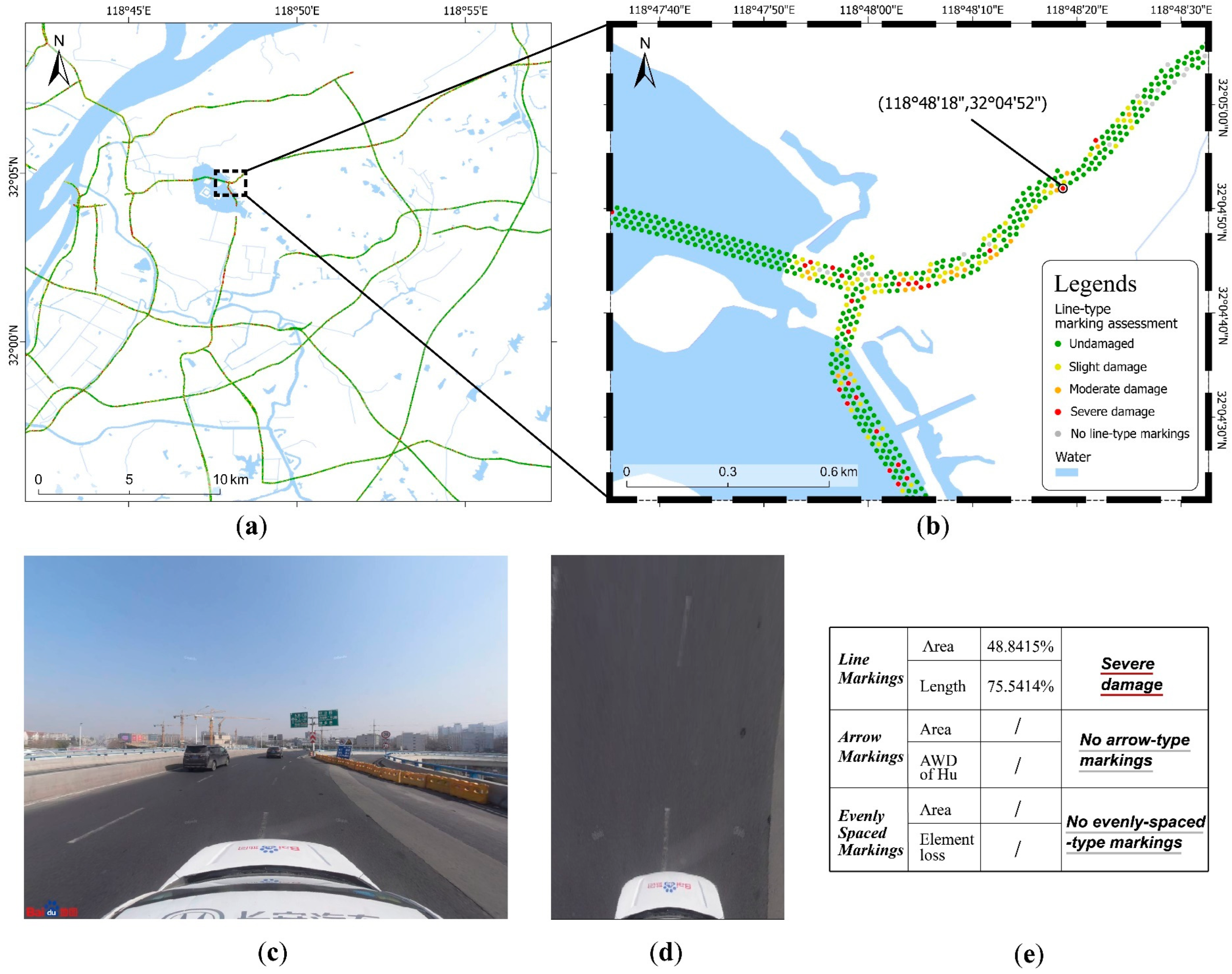

4.2.1. Mapping of Pavement-Marking Defects of Three Types of Markings

4.2.2. Clustering Characteristics of Pavement-Marking Defects

4.2.3. Conjectures of Causes of Defects Based on Spatial Analysis

5. Discussion

5.1. Evaluating the Spatial Distribution of Pavement-Marking Defects at the City Scale

5.2. Contributions for Precise Urban Road Maintenance

5.3. Limitations and Future Steps

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Length Ratio (rows) vs. Area Ratio (columns) | 0 | 90–100% | 80–90% | 60–80% | 0–60% |
|---|---|---|---|---|---|
| 0 | / ¹ | / | / | / | / |
| 90–100% | / | Un-d ² | Un-d | Slt-d | M-d |
| 80–90% | / | Un-d | Slt-d ³ | M-d | M-d |
| 70–80% | / | Slt-d | M-d | M-d ⁴ | Sv-d |
| 0–70% | / | M-d | M-d | Sv-d | Sv-d ⁵ |
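
The grading matrix above can be read as a two-key lookup: bin the area ratio, bin the length ratio, and take the cell where the two bins meet. The following is a minimal sketch of that lookup, not the authors' implementation. The function name `grade_marking`, the use of fractions in [0, 1], and the treatment of bin edges (a value exactly on a boundary falls into the higher bin) are assumptions, since the table does not state how boundaries are handled. The grade labels are kept as the abbreviations printed in the table.

```python
from bisect import bisect_right

# Lower edges of the non-zero bins, ascending (fractions of 1).
# Assumption: a value exactly on an edge belongs to the higher bin.
AREA_EDGES = [0.60, 0.80, 0.90]    # bins: 0-60%, 60-80%, 80-90%, 90-100%
LENGTH_EDGES = [0.70, 0.80, 0.90]  # bins: 0-70%, 70-80%, 80-90%, 90-100%

# GRADES[length_bin][area_bin], with bins indexed from lowest (0) to highest (3).
# Values are transcribed from the table above.
GRADES = [
    ["Sv-d", "Sv-d", "M-d", "M-d"],    # length ratio 0-70%
    ["Sv-d", "M-d", "M-d", "Slt-d"],   # length ratio 70-80%
    ["M-d", "M-d", "Slt-d", "Un-d"],   # length ratio 80-90%
    ["M-d", "Slt-d", "Un-d", "Un-d"],  # length ratio 90-100%
]


def grade_marking(area_ratio: float, length_ratio: float) -> str:
    """Return the table cell for a pair of ratios given as fractions in [0, 1]."""
    if not (0.0 <= area_ratio <= 1.0 and 0.0 <= length_ratio <= 1.0):
        raise ValueError("ratios must lie in [0, 1]")
    # The '0' row and column of the table carry no grade ('/').
    if area_ratio == 0.0 or length_ratio == 0.0:
        return "/"
    area_bin = bisect_right(AREA_EDGES, area_ratio)
    length_bin = bisect_right(LENGTH_EDGES, length_ratio)
    return GRADES[length_bin][area_bin]


if __name__ == "__main__":
    for area, length in [(0.95, 0.95), (0.85, 0.85), (0.70, 0.75), (0.50, 0.50)]:
        print(f"area={area:.2f} length={length:.2f} -> {grade_marking(area, length)}")
```

Keeping the bin edges and the grade grid as separate constants means the same function shape could serve the other grading tables by swapping in their edges and cells.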

| AWD of Hu (rows) vs. Area Ratio (columns) | 0 | 85–100% | 75–85% | 60–75% | 0–60% |
|---|---|---|---|---|---|
| No Markings | / ¹ | / | / | / | / |
| 0–1 | / | Un-d | Un-d | Slt-d | M-d |
| 1–5 | / | Un-d | Slt-d | M-d | M-d |
| >5 | / | Slt-d | M-d | M-d | Sv-d |

| Element Loss (rows) vs. Area Ratio (columns) | 0 | 85–100% | 75–85% | 50–75% | 0–50% |
|---|---|---|---|---|---|
| No Markings | / ¹ | / | / | / | / |
| No Loss | / | Un-d | Un-d | Slt-d | Sv-d |
| Loss | / | Slt-d | M-d | Sv-d | Sv-d |

| Predicted Outcome (rows) vs. Ground Truth (columns) | Background | Line | Arrow | Evenly Spaced |
|---|---|---|---|---|
| Background | 293,049,472 | 1,476,021 | 248,676 | 302,936 |
| Line | 2,238,121 | 9,454,275 | 193,597 | 86,328 |
| Arrow | 453,794 | 287,205 | 2,581,344 | 35,489 |
| Evenly spaced | 615,122 | 231,184 | 305 | 3,626,131 |
| Sum | 296,356,509 | 11,448,685 | 3,023,922 | 4,050,884 |

| Type | Precision | Recall | F1-Score |
|---|---|---|---|
| Background | 0.99 | 0.99 | 0.99 |
| Line | 0.83 | 0.79 | 0.81 |
| Arrow | 0.85 | 0.77 | 0.81 |
| Evenly spaced | 0.90 | 0.81 | 0.85 |
| Macro avg | 0.89 | 0.84 | 0.86 |
| Weighted avg | 0.98 | 0.98 | 0.98 |
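
The per-class scores above can be re-derived from the pixel counts in the confusion matrix. The sketch below is one way to do that check and is not taken from the paper; the names `MATRIX`, `LABELS`, and `per_class_scores` are illustrative. For each class it divides the diagonal count by the column sum and by the row sum. With the counts as printed, the reported precision values match the diagonal divided by the column sum and the reported recall values match the diagonal divided by the row sum. That is the usual definition only if the columns hold predictions and the rows hold ground truth, so the axis labels of the confusion matrix may be transposed in this copy, or the paper may follow a different convention; the source does not say which.

```python
LABELS = ["Background", "Line", "Arrow", "Evenly spaced"]

# Pixel counts transcribed from the confusion matrix (rows and columns as printed).
MATRIX = [
    [293_049_472, 1_476_021, 248_676, 302_936],
    [2_238_121, 9_454_275, 193_597, 86_328],
    [453_794, 287_205, 2_581_344, 35_489],
    [615_122, 231_184, 305, 3_626_131],
]


def per_class_scores(matrix):
    """Return (diag/column_sum, diag/row_sum, harmonic mean) for each class."""
    n = len(matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    scores = []
    for k in range(n):
        hit = matrix[k][k]
        by_column = hit / col_sums[k]  # matches the reported "Precision" column
        by_row = hit / row_sums[k]     # matches the reported "Recall" column
        f1 = 2 * by_column * by_row / (by_column + by_row)
        scores.append((by_column, by_row, f1))
    return scores


if __name__ == "__main__":
    scores = per_class_scores(MATRIX)
    for label, (by_column, by_row, f1) in zip(LABELS, scores):
        print(f"{label:<14} {by_column:.2f} {by_row:.2f} {f1:.2f}")
    # Unweighted mean over the four classes, comparable to the "Macro avg" row.
    macro = [sum(s[i] for s in scores) / len(scores) for i in range(3)]
    print(f"{'Macro avg':<14} {macro[0]:.2f} {macro[1]:.2f} {macro[2]:.2f}")
```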

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kong, W.; Zhong, T.; Mai, X.; Zhang, S.; Chen, M.; Lv, G. Automatic Detection and Assessment of Pavement Marking Defects with Street View Imagery at the City Scale. Remote Sens. 2022, 14, 4037. https://doi.org/10.3390/rs14164037
