Hyperspectral Panoramic Image Stitching Using Robust Matching and Adaptive Bundle Adjustment

Abstract

1. Introduction

1. First, a feature extraction method based on SuperPoint is introduced to improve both the quantity and the quality of feature points. Then, a robust and fast mismatch removal approach, linear adaptive filtering (LAF), is used to establish accurate correspondences; it handles both rigid and nonrigid image deformations at low computational cost.

2. Second, an adaptive bundle adjustment that continually reselects the reference image is designed to eliminate the accumulation of errors.

3. Lastly, a covariance-correspondence-based spectral correction algorithm is proposed to ensure the spectral consistency of the panorama.
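To make the idea of reselecting the reference image concrete, the following is a minimal sketch under stated assumptions, not the criterion used in this paper. It assumes that alignment error grows with the number of pairwise transformations chained between an image and the reference, so a reasonable reference is the image at the center of the match graph. The function names `hop_distances` and `select_reference` and the example graphs are hypothetical.

```python
from collections import deque


def hop_distances(adjacency, start):
    """Breadth-first search: number of pairwise links from start to each reachable image."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def select_reference(adjacency):
    """Pick the image whose farthest image is closest (a graph center).

    Ties are broken by the total hop count, then by the smallest image id.
    This is an illustrative heuristic, assumed for this sketch only.
    """
    best = None
    for node in sorted(adjacency):
        dist = hop_distances(adjacency, node)
        if len(dist) != len(adjacency):
            raise ValueError("match graph is not connected")
        key = (max(dist.values()), sum(dist.values()))
        if best is None or key < best[0]:
            best = (key, node)
    return best[1]


def make_strip(n):
    """Hypothetical flight strip: image i overlaps only images i-1 and i+1."""
    return {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}


# As more images are added to the strip, the selected reference moves with it.
reference_5 = select_reference(make_strip(5))
reference_7 = select_reference(make_strip(7))
```

With the reference at the center of a strip, the longest chain of transformations is roughly half as long as when the first image is used as the reference, which is the intuition behind reselecting it as the panorama grows.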

2. Related Works

3. Methodology

3.1. Feature Extraction and Matching

3.1.1. Self-Supervised Interest-Point Detection and Description

3.1.2. Robust Feature Matching via Linear Adaptive Filtering

3.2. Adaptive Bundle Adjustment

3.3. Spectral Correction and Multiband Blending

Algorithm 1: Hyperspectral panoramic image stitching using robust matching and adaptive bundle adjustment

4. Experiments and Analysis

4.1. Datasets

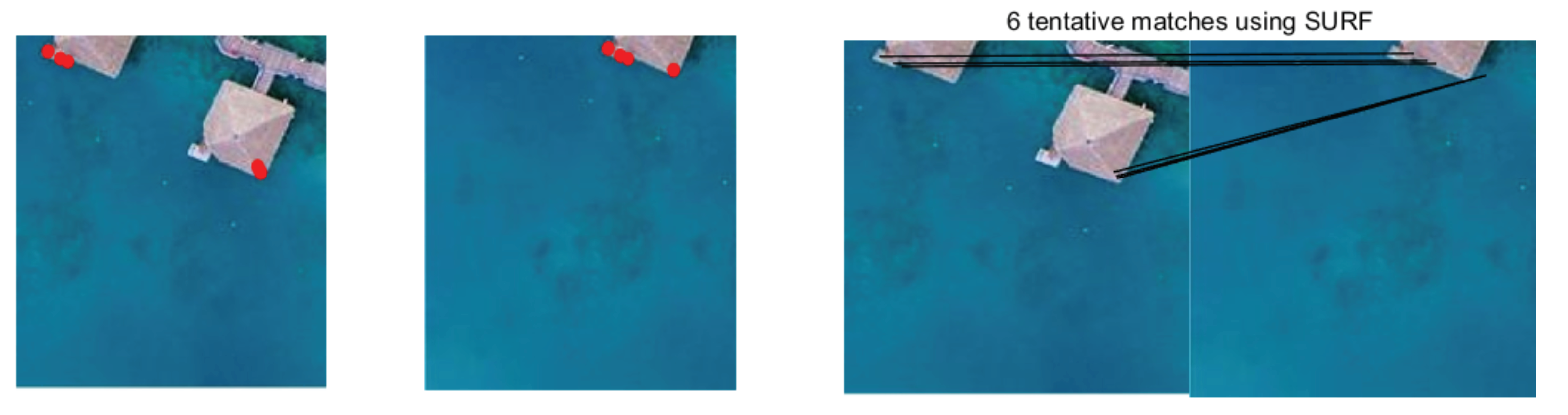

4.2. Results on Feature Matching

4.3. Results on Image Stitching

4.4. Spectral Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm | Variance | EOG | DFT |
|---|---|---|---|
| ANAP | | | |
| NISwGSP | | | |
| ELA | | | |
| Our method | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Mei, X.; Ma, Y.; Jiang, X.; Peng, Z.; Huang, J. Hyperspectral Panoramic Image Stitching Using Robust Matching and Adaptive Bundle Adjustment. Remote Sens. 2022, 14, 4038. https://doi.org/10.3390/rs14164038
