Three-Dimensional Coordinate Extraction Based on Radargrammetry for Single-Channel Curvilinear SAR System

Abstract

1. Introduction

- 3-D SAR coordinate extraction based on a 2-D synthetic aperture: a 2-D synthetic aperture in the azimuth and height directions can be formed by controlling the motion trajectory of the aircraft in space. Combined with a large-bandwidth signal, which provides resolution in range, this allows the 3-D coordinates of targets to be extracted. This phase-based approach is one of the mainstream methods for extracting the 3-D coordinates of targets. Several techniques based on it have been proposed and used in recent years, including interferometric SAR (In-SAR) [11,12,13], tomographic SAR (Tomo-SAR) [14,15,16], and linear array SAR (LA-SAR) [17,18,19], as illustrated below.


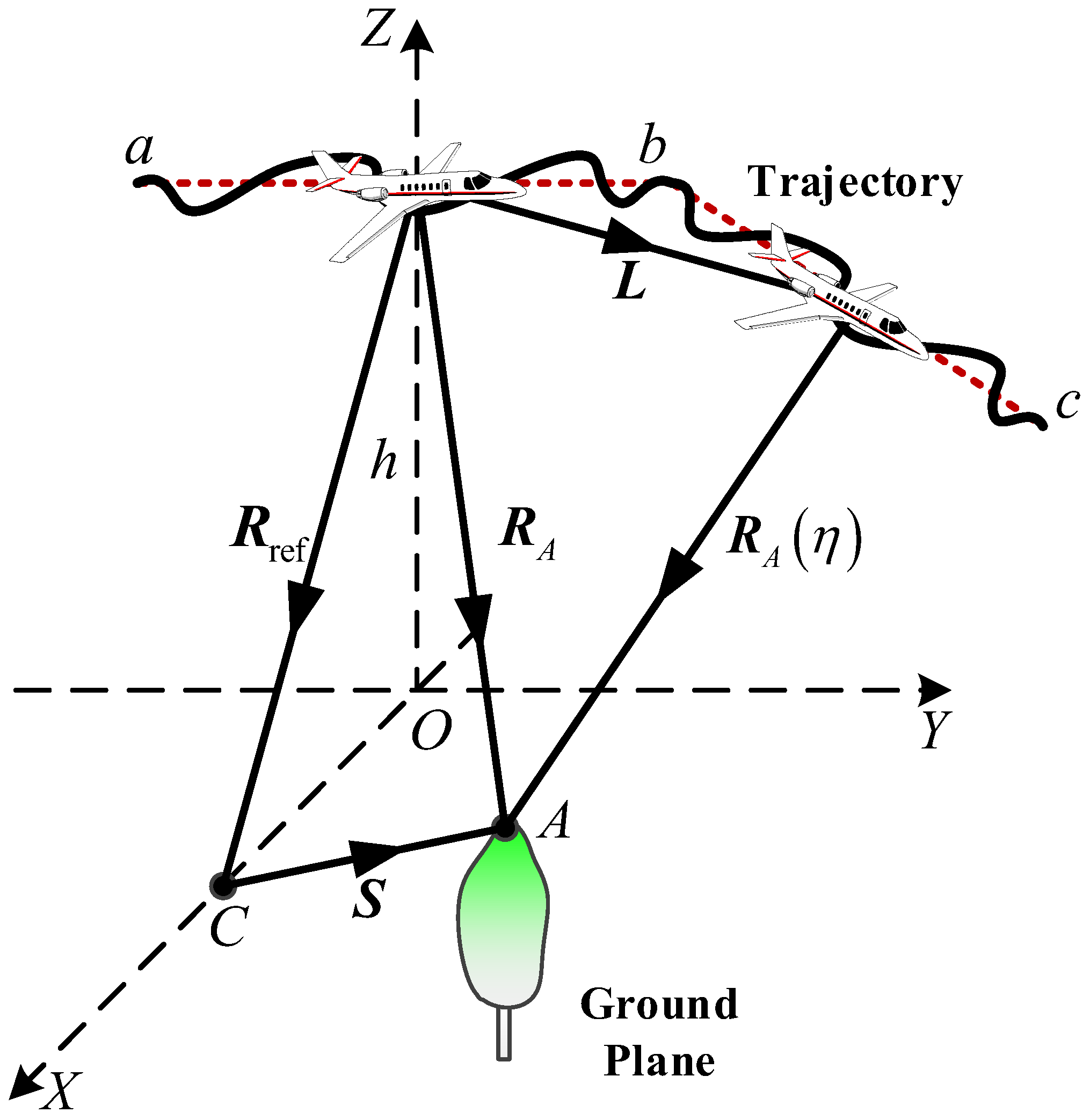

- 3-D SAR coordinate extraction based on radargrammetry: as an alternative to the phase-based techniques, a method called radargrammetry has been implemented [24]. Radargrammetry theory was first introduced in 1950, and in 1986 it was the first method used to derive Digital Surface Models (DSMs) from airborne radar data [25]. However, the accuracy achieved was on the order of 50–100 m, which is not satisfactory for practical applications, so radargrammetry has been used less than In-SAR and Tomo-SAR. In the last decade, with the emergence of more and more high-resolution SAR systems, radargrammetry has again become a hot topic. The radargrammetry technique exploits only the amplitude of SAR images acquired from the same side but at different view angles, which produces a relative shift (parallax) in the imaged positions of targets [26], as shown in Figure 2. Because it does not rely on phase, it avoids phase unwrapping errors and temporal decorrelation problems [27,28]. Moreover, compared with the techniques based on a 2-D synthetic aperture, radargrammetry imposes fewer restrictions on the radar flight path and installation space [29]. Therefore, it can be implemented with a single-channel airborne curvilinear SAR (CLSAR).
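The parallax idea can be made concrete with the classic same-side stereo relation. The sketch below is a textbook simplification, not the CS model used in this paper. It assumes a flat Earth, ground-range projected images and a far-field sensor. Under those assumptions, a point at height h is displaced toward the sensor by h·cot θ, where θ is the incidence angle measured from the vertical. The parallax between two same-side images is then p = h(cot θ1 − cot θ2), and this relation can be inverted for h. All function names and angle values are illustrative.

```python
import math

def cot(deg):
    """Cotangent of an angle given in degrees."""
    return 1.0 / math.tan(math.radians(deg))

def layover_shift(h, theta_deg):
    """Ground-range shift toward the sensor of a point at height h (m), for an
    incidence angle theta measured from the vertical (flat-Earth, far-field approximation)."""
    return h * cot(theta_deg)

def parallax(h, theta1_deg, theta2_deg):
    """Same-side stereo parallax: difference between the two layover shifts."""
    return layover_shift(h, theta1_deg) - layover_shift(h, theta2_deg)

def height_from_parallax(p, theta1_deg, theta2_deg):
    """Invert p = h * (cot(theta1) - cot(theta2)) for the target height h."""
    k = cot(theta1_deg) - cot(theta2_deg)
    if abs(k) < 1e-12:
        raise ValueError("equal view angles produce no parallax")
    return p / k

# Hypothetical example: a point 100 m high seen at 35 and 50 degrees incidence.
p = parallax(100.0, 35.0, 50.0)
print(f"parallax = {p:.1f} m")  # about 58.9 m
print(f"recovered height = {height_from_parallax(p, 35.0, 50.0):.1f} m")
```

Height sensitivity scales with 1/(cot θ1 − cot θ2). A wider angle difference therefore turns a given matching error into a smaller height error, at the cost of less similar images to match.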

2. Geometry Model

3. Extraction Approach

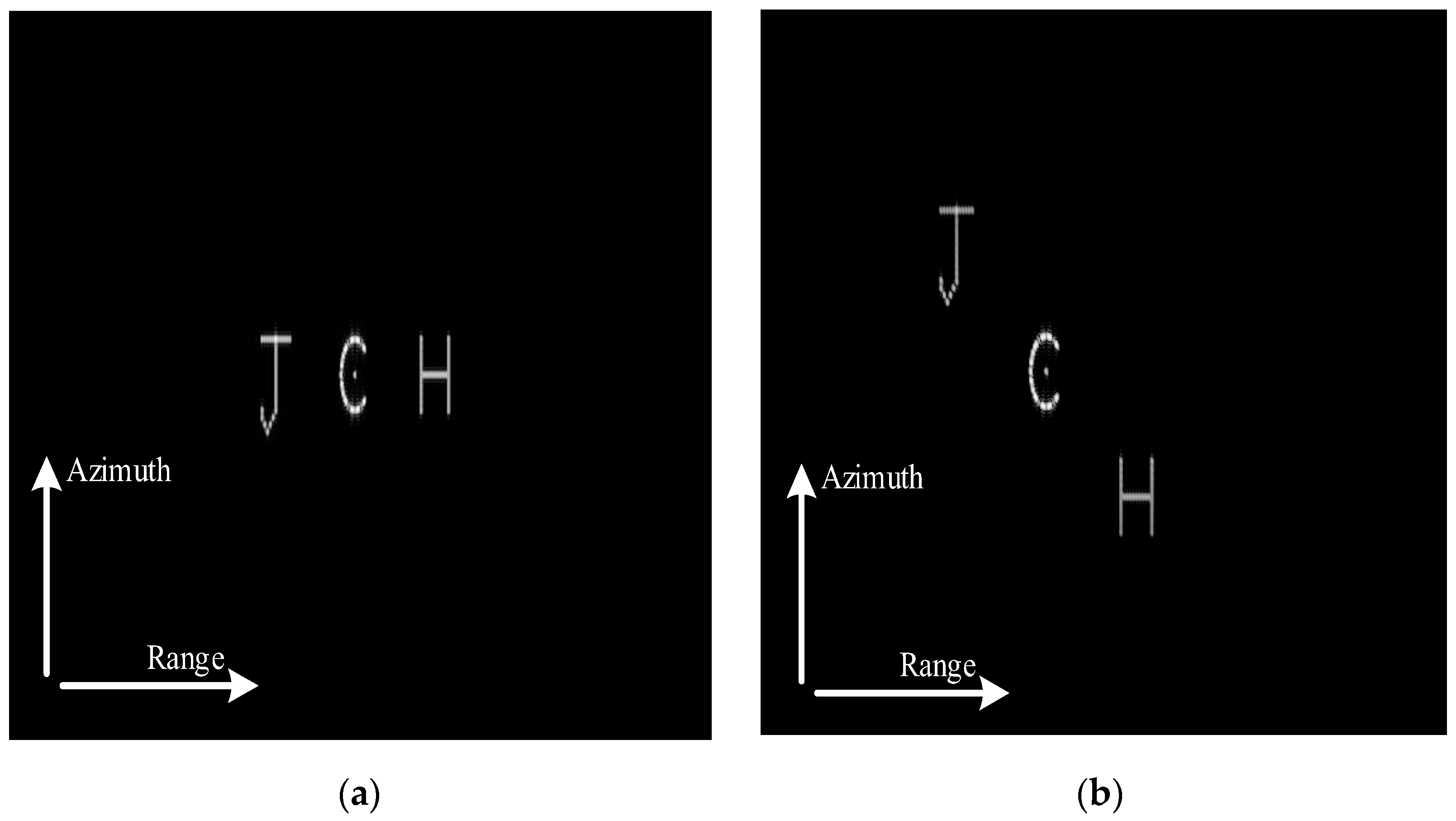

3.1. Imaging Focusing of 2-D SAR Image Pair

3.1.1. 2-D Slave Image Focusing

3.1.2. 2-D Master Image Focusing

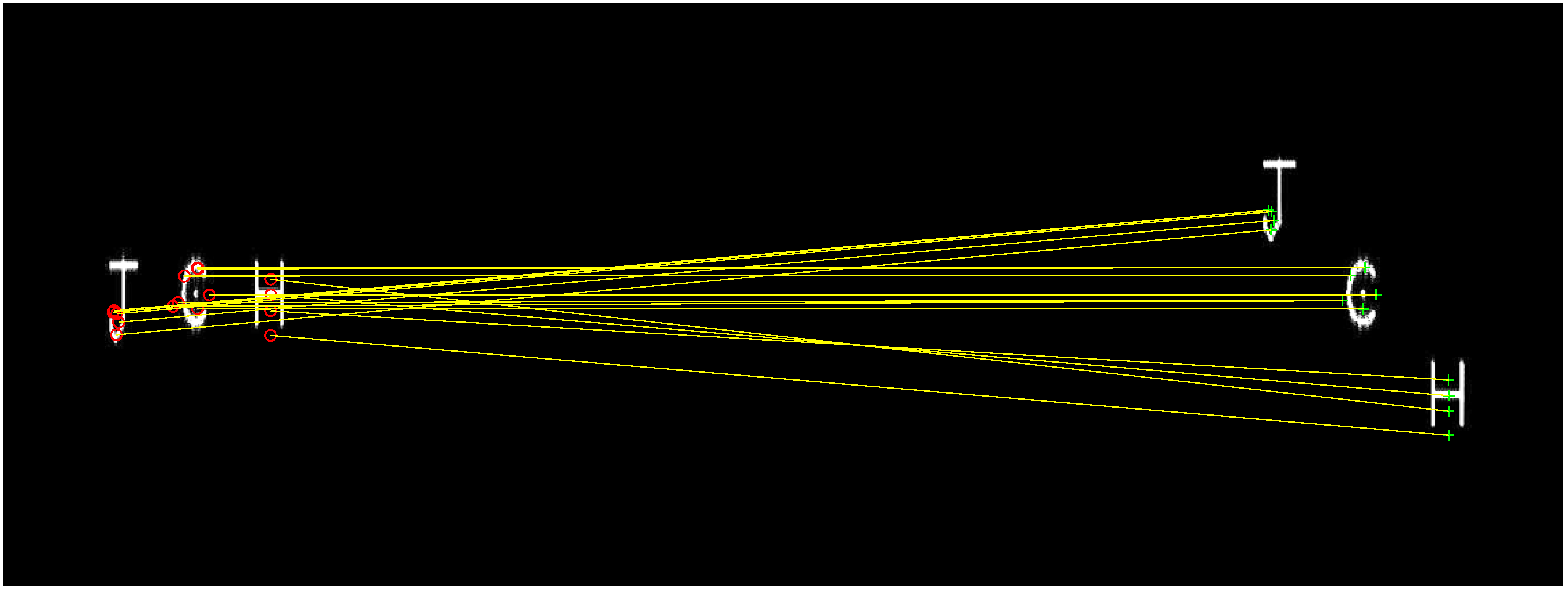

3.2. Image Registration

3.3. 3-D Coordinate Extraction Model

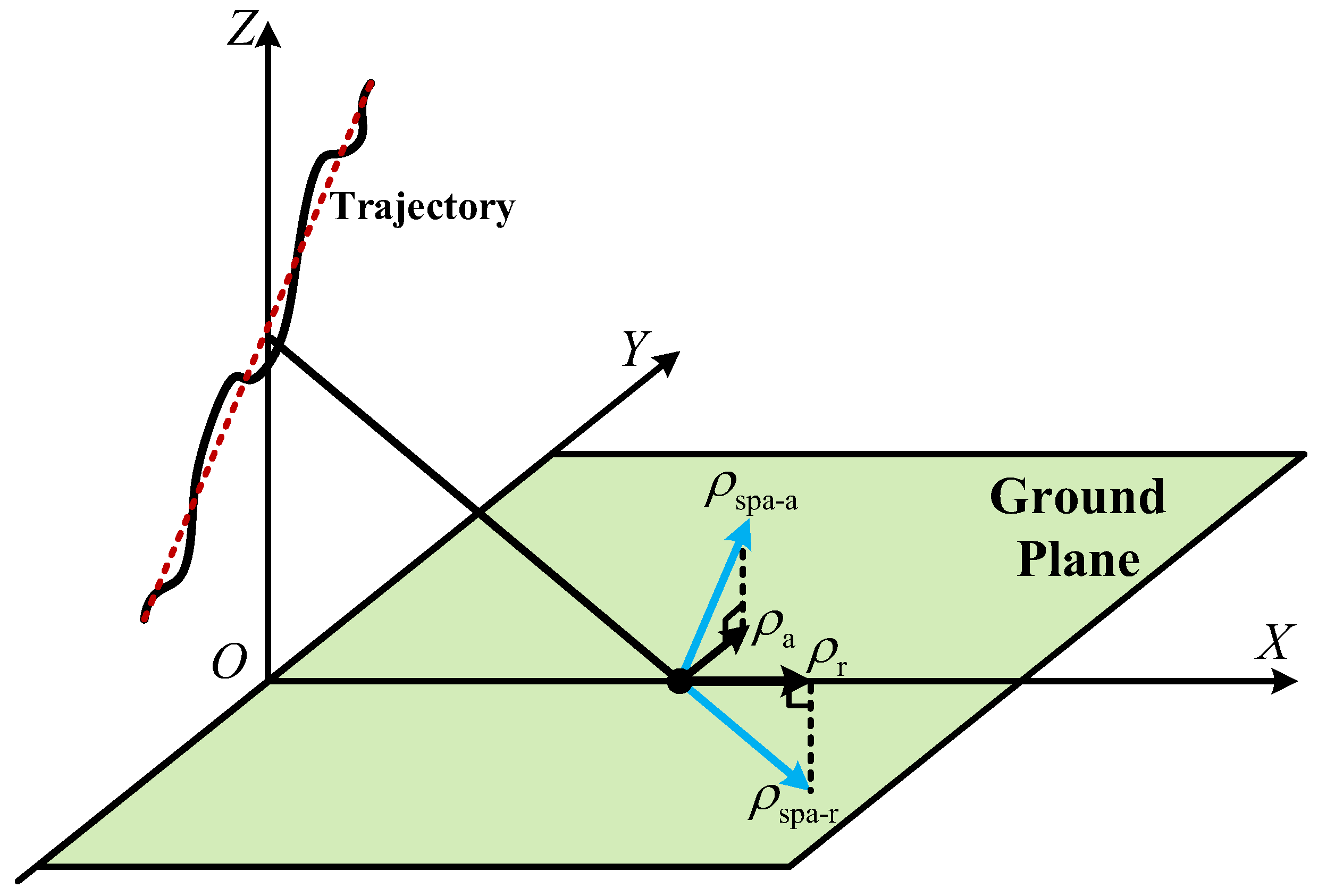

3.3.1. Geometric Relationship in the Slave Image

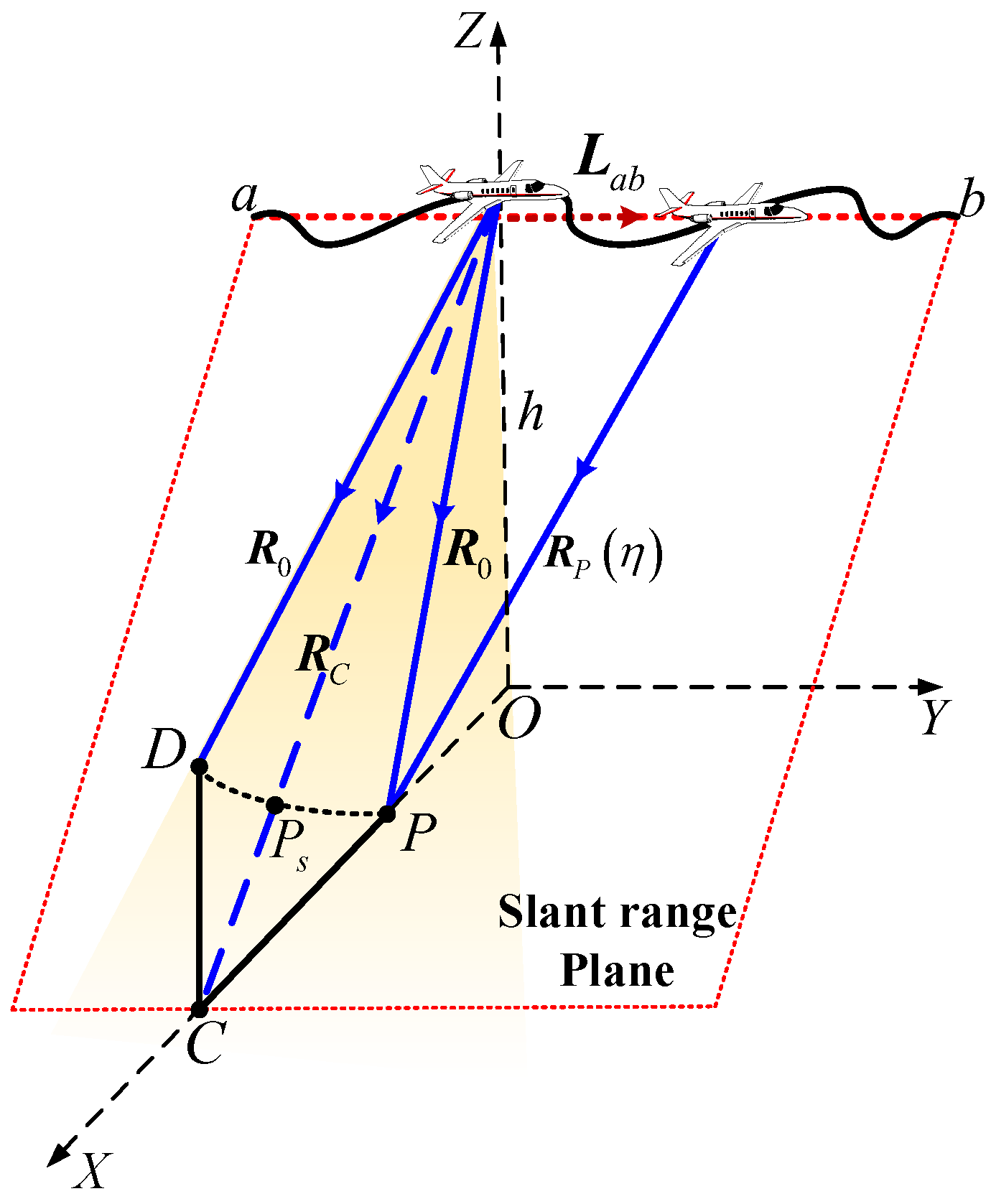

3.3.2. Geometric Relationship in the Master Image

- (a) Orientation errors of the sensor platform: these errors are mainly caused by the orientation equipment (GPS/INS) mounted on the platform. They degrade the accuracy of the baseline vector and the slant-range vector. This reduces the accuracy of the model established from the master image and ultimately affects the 3-D coordinate extraction result. Analysis shows that platform orientation errors, which are generally within 2 m, produce a 3-D coordinate extraction error of less than 5 m, which meets practical application requirements.

- (b) Phase errors of the 2-D SAR image: these errors are mainly caused by motion errors of the sensor platform, since the platform cannot maintain uniform motion. They consist of a linear phase error and a nonlinear phase error. The nonlinear phase error only defocuses the target without changing its position. The linear phase error, by contrast, shifts the target in azimuth in the 2-D SAR image pair. Therefore, a motion error compensation algorithm is needed to minimize the linear phase error and ensure the accuracy of 3-D coordinate extraction.
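The different effects of linear and nonlinear phase errors can be illustrated with a toy one-dimensional simulation. The sketch below makes several simplifying assumptions:

- azimuth compression is modelled as a DFT of a dechirped phase history, as in spotlight/polar-format processing, rather than the curvilinear geometry of this paper;
- the quadratic term stands in for the nonlinear error;
- N, the target bin and the error magnitudes are arbitrary illustrative values.

```python
import numpy as np

N = 256                          # azimuth samples across the aperture (illustrative)
n = np.arange(N)
u = (n - N / 2) / (N / 2)        # normalized aperture coordinate, symmetric about the center
TARGET_BIN = 100                 # true azimuth position of the point target (illustrative)

# Dechirped azimuth phase history of a single point target: a pure tone.
history = np.exp(2j * np.pi * TARGET_BIN * n / N)

def compress(h):
    """Azimuth compression modelled as a DFT across the aperture (simplifying assumption)."""
    return np.abs(np.fft.fft(h))

ideal = compress(history)

# Linear phase error: a ramp of 5 cycles across the aperture.
shifted = compress(history * np.exp(2j * np.pi * 5 * n / N))

# Quadratic (nonlinear) phase error: about pi/2 rad at the aperture edges.
blurred = compress(history * np.exp(1j * (np.pi / 2) * u ** 2))

print("peak bins:", np.argmax(ideal), np.argmax(shifted), np.argmax(blurred))
print("peak levels:", ideal.max(), shifted.max(), round(float(blurred.max()), 1))
```

With these values, the linear ramp moves the peak from bin 100 to bin 105 with no loss of peak level. The quadratic error leaves the peak at bin 100 but lowers and widens it.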

3.4. Flowchart of Extraction

- 2-D SAR image pair focusing. Divide the full curved aperture into two sub-apertures according to their view angles and baseline. The sub-aperture with a small height variation, called the slave sub-aperture, is used to form the slave image on the slant-range plane. The sub-aperture with a large height variation, called the master sub-aperture, is used to form the master image on the ground plane.

- Image pair registration. Match the master and slave images based on target features. After registration, the accurate 2-D coordinates of the same target in both SAR images can be extracted.

- 3-D coordinate extraction. Apply the CS model to extract the true 3-D coordinates of the targets from the SAR image pair.
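The registration step above is described only as feature-based matching. As a stand-in, the sketch below uses plain area-based zero-mean normalized cross-correlation (NCC) with an exhaustive integer-pixel search, a common baseline for image matching. It is not the matching method of this paper, and the function names and synthetic speckle image are hypothetical. The offset between a patch in the slave image and its best match in the master image is the kind of 2-D position pair that the extraction step consumes.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def match_patch(template, search):
    """Exhaustive integer-pixel search: return the (row, col) of the top-left
    corner in `search` where `template` has the highest NCC, and that score."""
    th, tw = template.shape
    sh, sw = search.shape
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            score = ncc(template, search[r:r + th, c:c + tw])
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score

# Hypothetical usage: a speckle-like amplitude image and a copy shifted by
# 3 rows and -5 columns stand in for the slave/master pair.
rng = np.random.default_rng(0)
slave = rng.rayleigh(size=(64, 64))
master = np.roll(slave, shift=(3, -5), axis=(0, 1))
template = slave[20:36, 20:36]
pos, score = match_patch(template, master)
offset = (pos[0] - 20, pos[1] - 20)
print(pos, offset, round(score, 3))
```

Real master/slave pairs differ in projection plane (ground versus slant range) and in speckle. Coarse geometric resampling and sub-pixel refinement are therefore typically needed around such a search.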

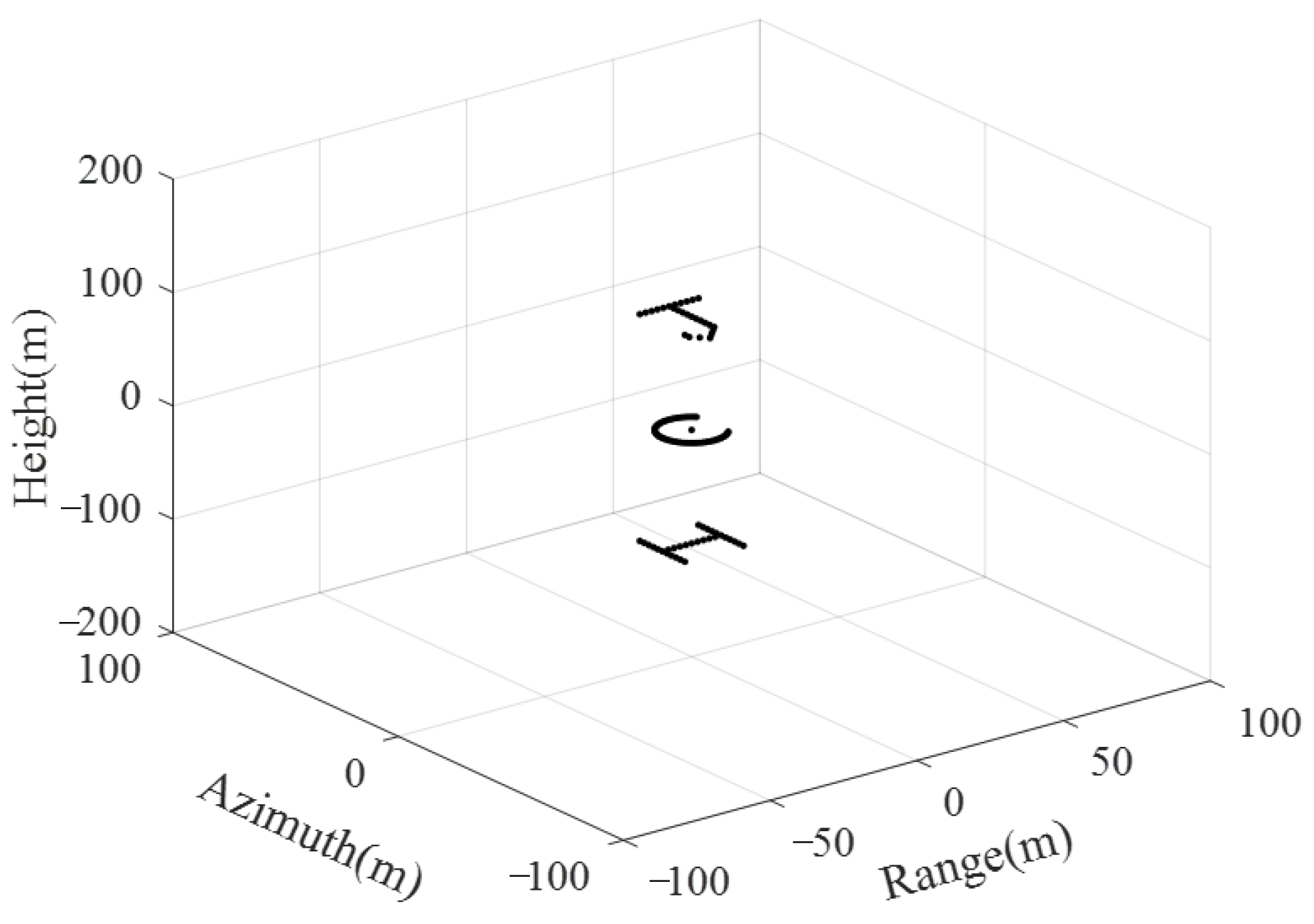

4. Simulation Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Ausherman, D.A.; Kozma, A.; Walker, J.L.; Jones, H.M.; Poggio, E.C. Developments in radar imaging. IEEE Trans. Aerosp. Electron. Syst. 1984, AES-20, 363–400.

- [2] Carrara, W.G.; Goodman, R.S.; Majewski, R.M. Spotlight Synthetic Aperture Radar: Signal Processing Algorithms; Artech House: Norwood, MA, USA, 1995.

- [3] Curlander, J.C.; McDonough, R.N. Synthetic Aperture Radar: Systems and Signal Processing; Wiley: Hoboken, NJ, USA, 1991.

- [4] Cumming, I.G.; Wong, F.H. Digital Processing of Synthetic Aperture Radar Data: Algorithms and Implementation; Artech House: Boston, MA, USA, 2005.

- [5] Chen, K.S. Principles of Synthetic Aperture Radar Imaging: A System Simulation Approach; CRC Press: Boca Raton, FL, USA, 2015.

- [6] Liu, Y.; Xing, M.; Sun, G.; Lv, X.; Bao, Z.; Hong, W.; Wu, Y. Echo model analyses and imaging algorithm for high-resolution SAR on high-speed platform. IEEE Trans. Geosci. Remote Sens. 2012, 50, 933–950.

- [7] Frey, O.; Magnard, C.; Rüegg, M.; Meier, E. Focusing of airborne synthetic aperture radar data from highly nonlinear flight tracks. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1844–1858.

- [8] Ran, W.L.; Liu, Z.; Zhang, T.; Li, T. Autofocus for correcting three dimensional trajectory deviations in synthetic aperture radar. In Proceedings of the 2016 CIE International Conference on Radar (RADAR), Guangzhou, China, 10–13 October 2016; pp. 1–4.

- [9] Bryant, M.L.; Gostin, L.L.; Soumekh, M. 3-D E-CSAR imaging of a T-72 tank and synthesis of its SAR reconstructions. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 211–227.

- [10] Austin, C.D.; Ertin, E.; Moses, R.L. Sparse signal methods for 3-D radar imaging. IEEE J. Sel. Top. Signal Process. 2011, 5, 408–423.

- [11] Bamler, R.; Hartl, P. Synthetic aperture radar interferometry—Topical review. Inverse Probl. 1998, 14, R1–R54.

- [12] Ferretti, A.; Prati, C.; Rocca, F. Permanent scatterers in SAR interferometry. IEEE Trans. Geosci. Remote Sens. 2001, 39, 8–20.

- [13] Rosen, P.A.; Hensley, S.; Joughin, I.R.; Li, F.K.; Madsen, S.N.; Rodriguez, E.; Goldstein, R.M. Synthetic aperture radar interferometry. Proc. IEEE 2000, 88, 333–382.

- [14] Reigber, A.; Moreira, A. First demonstration of airborne SAR tomography using multibaseline L-band data. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2142–2152.

- [15] Ferraiuolo, G.; Meglio, F.; Pascazio, V.; Schirinzi, G. DEM reconstruction accuracy in multi-channel SAR interferometry. IEEE Trans. Geosci. Remote Sens. 2009, 47, 191–201.

- [16] Zhu, X.; Bamler, R. Very high resolution spaceborne SAR tomography in urban environment. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4296–4308.

- [17] Donghao, Z.; Xiaoling, Z. Downward-Looking 3-D linear array SAR imaging based on Chirp Scaling algorithm. In Proceedings of the 2009 2nd Asian-Pacific Conference on Synthetic Aperture Radar, Xi’an, China, 26–30 October 2009; pp. 1043–1046.

- [18] Chen, S.; Yuan, Y.; Xu, H.; Zhang, S.; Zhao, H. An efficient and accurate three-dimensional imaging algorithm for forward-looking linear-array SAR with constant acceleration based on FrFT. Signal Process. 2021, 178, 107764.

- [19] Ren, X.; Sun, J.; Yang, R. A new three-dimensional imaging algorithm for airborne forward-looking SAR. IEEE Geosci. Remote Sens. Lett. 2010, 8, 153–157.

- [20] Budillon, A.; Evangelista, A.; Schirinzi, G. Three-dimensional SAR focusing from multipass signals using compressive sampling. IEEE Trans. Geosci. Remote Sens. 2011, 49, 488–499.

- [21] Lombardini, F.; Pardini, M.; Gini, F. Sector interpolation for 3D SAR imaging with baseline diversity data. In Proceedings of the 2007 International Waveform Diversity and Design Conference, Pisa, Italy, 4–8 June 2007; pp. 297–301.

- [22] Wei, S.; Zhang, X.; Shi, J. Linear array SAR imaging via compressed sensing. Prog. Electromagn. Res. 2011, 117, 299–319.

- [23] Zhang, S.; Kuang, G.; Zhu, Y.; Dong, G. Compressive sensing algorithm for downward-looking sparse array 3-D SAR imaging. In Proceedings of the IET International Radar Conference, Hangzhou, China, 14–16 October 2015; pp. 1–5.

- [24] Crosetto, M.; Aragues, F.P. Radargrammetry and SAR interferometry for DEM generation: Validation and data fusion. In Proceedings of the CEOS SAR Workshop, Toulouse, France, 26–29 October 1999; p. 367.

- [25] Leberl, F.; Domik, G.; Raggam, J.; Cimino, J.; Kobrick, M. Multiple Incidence Angle SIR-B Experiment Over Argentina: Stereo-Radargrammetric Analysis. IEEE Trans. Geosci. Remote Sens. 1986, GE-24, 482–491.

- [26] Goel, K.; Adam, N. Three-Dimensional Positioning of Point Scatterers Based on Radargrammetry. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2355–2363.

- [27] Yang, J.; Liao, M.; Du, D. Extraction of DEM from single SAR based on radargrammetry. In Proceedings of the 2001 International Conferences on Info-Tech and Info-Net. Proceedings (Cat. No.01EX479), Beijing, China, 29 October–1 November 2001; Volume 1, pp. 212–217.

- [28] Toutin, T.; Gray, L. State-of-the-art of elevation extraction from satellite SAR data. ISPRS J. Photogramm. Remote Sens. 2000, 55, 13–33.

- [29] Meric, S.; Fayard, F.; Pottier, É. A Multiwindow Approach for Radargrammetric Improvements. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3803–3810.

- [30] Sansosti, E.; Berardino, P.; Manunta, M.; Serafino, F.; Fornaro, G. Geometrical SAR image registration. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2861–2870.

- [31] Capaldo, P.; Crespi, M.; Fratarcangeli, F.; Nascetti, A.; Pieralice, F. High-Resolution SAR Radargrammetry: A First Application With COSMO-SkyMed SpotLight Imagery. IEEE Geosci. Remote Sens. Lett. 2011, 8, 1100–1104.

- [32] Hanley, H.B.; Fraser, C.S. Sensor orientation for high-resolution satellite imagery: Further insights into bias-compensated RPC. In Proceedings of the XX ISPRS Congress, Istanbul, Turkey, 12–23 July 2004; pp. 24–29.

- [33] Zhang, G.; Li, Z.; Pan, H.; Qiang, Q.; Zhai, L. Orientation of Spaceborne SAR Stereo Pairs Employing the RPC Adjustment Model. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2782–2792.

- [34] Zhang, L.; He, X.; Balz, T.; Wei, X.; Liao, M. Rational function modeling for spaceborne SAR datasets. ISPRS J. Photogramm. Remote Sens. 2011, 66, 133–145.

- [35] Raggam, H.; Gutjahr, K.; Perko, R.; Schardt, M. Assessment of the Stereo-Radargrammetric Mapping Potential of TerraSAR-X Multibeam Spotlight Data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 971–977.

- [36] Tang, S.; Lin, C.; Zhou, Y.; So, H.C.; Zhang, L.; Liu, Z. Processing of long integration time spaceborne SAR data with curved orbit. IEEE Trans. Geosci. Remote Sens. 2018, 56, 888–904.

- [37] Tang, S.; Guo, P.; Zhang, L.; So, H.C. Focusing hypersonic vehicle-borne SAR data using radius/angle algorithm. IEEE Trans. Geosci. Remote Sens. 2020, 58, 281–293.

- [38] Chen, J.; Sun, G.-C.; Wang, Y.; Guo, L.; Xing, M.; Gao, Y. An analytical resolution evaluation approach for bistatic GEOSAR based on local feature of ambiguity function. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2159–2169.

- [39] Chen, J.; Xing, M.; Xia, X.-G.; Zhang, J.; Liang, B.; Yang, D.-G. SVD-based ambiguity function analysis for nonlinear trajectory SAR. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3072–3087.

- [40] Guo, P.; Zhang, L.; Tang, S. Resolution calculation and analysis in high-resolution spaceborne SAR. Electron. Lett. 2015, 51, 1199–1201.

- [41] Tang, S.; Zhang, L.; Guo, P.; Liu, G.; Sun, G. Acceleration model analyses and imaging algorithm for highly squinted airborne spotlight-mode SAR with maneuvers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1120–1131.

- [42] Ding, H.; Zhang, J.; Huang, G.; Zhu, J. An Improved Multi-Image Matching Method in Stereo-Radargrammetry. IEEE Geosci. Remote Sens. Lett. 2017, 14, 806–810.

- [43] Jing, G.; Wang, H.; Xing, M.; Lin, X. A Novel Two-Step Registration Method for Multi-Aspect SAR Images. In Proceedings of the 2018 China International SAR Symposium (CISS), Shanghai, China, 10–12 October 2018; pp. 1–4.

| Parameter | Value |
|---|---|
| Carrier frequency | 10 GHz |
| Pulse bandwidth | 150 MHz |
| System PRF | 800 Hz |
| Reference slant range | 16 km |
| Initial height | 8000 m |
| Velocity vector | [50, 200, −100] m/s |
| Acceleration vector | [5, 0, −5] m/s² |
| Pitch angle | 30° |
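Two quantities follow directly from the simulation parameters above: the wavelength, c/f ≈ 3 cm, and the theoretical slant-range resolution, c/(2B) ≈ 1 m. The sketch below computes both and evaluates the curvilinear trajectory. It rests on two illustrative assumptions that the paper does not state. First, the trajectory follows a second-order (constant-acceleration) model p(t) = p₀ + v t + a t²/2, which the velocity and acceleration entries suggest. Second, the platform starts at x = y = 0.

```python
import numpy as np

C = 299_792_458.0                     # speed of light (m/s)
FC = 10e9                             # carrier frequency (Hz)
BW = 150e6                            # pulse bandwidth (Hz)
P0 = np.array([0.0, 0.0, 8000.0])     # initial position; x = y = 0 is an assumption
V0 = np.array([50.0, 200.0, -100.0])  # velocity vector (m/s)
ACC = np.array([5.0, 0.0, -5.0])      # acceleration vector (m/s^2)

wavelength = C / FC                   # about 0.03 m (X band)
range_resolution = C / (2 * BW)       # about 1 m theoretical slant-range resolution

def position(t):
    """Assumed constant-acceleration trajectory: p(t) = p0 + v0*t + 0.5*a*t^2."""
    return P0 + V0 * t + 0.5 * ACC * t ** 2

print(f"wavelength = {wavelength:.4f} m, range resolution = {range_resolution:.3f} m")
print("position at t = 2 s:", position(2.0))
```

Under these assumptions, the platform is at (110, 400, 7790) m after 2 s. The height varies along the aperture, which is what allows sub-apertures with small and large height variation to be selected.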

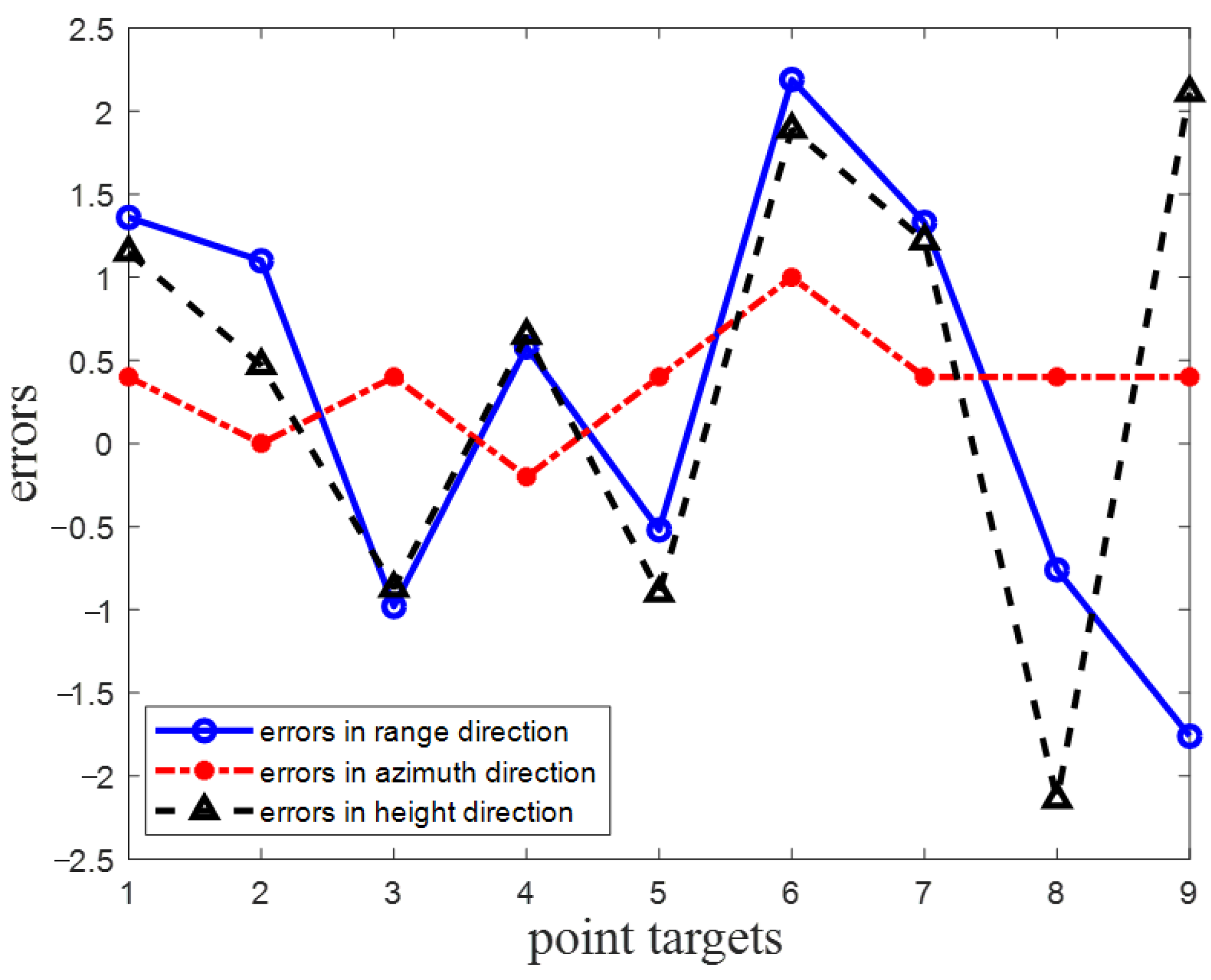

| Target | Real 3-D Coordinate | 2-D Coordinate in Slave Image | 2-D Coordinate in Master Image | Extracted 3-D Coordinate |
|---|---|---|---|---|
| PT1 | (0, −10, 100) | (−49.15, −9.6) | (−56.17, −45.2) | (1.36, −9.60, 101.15) |
| PT2 | (−10, 10, 100) | (−57.73, 10) | (−66.32, −25.6) | (−8.90, 10.00, 100.47) |
| PT3 | (−6, 16, 100) | (−54.61, 16.4) | (−62.42, −19.2) | (−6.98, 16.40, 99.13) |
| PT4 | (7, −7, 0) | (7.24, −7.2) | (7.8, −7.2) | (7.58, −7.2, 0.65) |
| PT5 | (0, 0, 0) | (0, 0.4) | (0.78, 0) | (−0.52, 0.4, −0.9) |
| PT6 | (7, 7, 0) | (7.02, 8) | (7.8, 8) | (9.19, 8.0, 1.89) |
| PT7 | (−10, −10, −100) | (42.13, −9.6) | (49.15, 15.6) | (−8.67, −9.6, −98.78) |
| PT8 | (10, 10, −100) | (59.29, 10.4) | (68.65, 45.6) | (9.24, 10.4, −102.14) |
| PT9 | (−10, 0, −100) | (39.01, 0.4) | (50.71, 35.6) | (−11.76, 0.4, −97.89) |
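As a quick check on the table, the sketch below recomputes the 3-D positioning error of each target from the listed real and extracted coordinates. It treats the values as meters, which is an assumption because the table gives no units, and it adds no new data.

```python
import numpy as np

# Real and extracted 3-D coordinates copied from the table above (PT1..PT9).
real = np.array([
    [0, -10, 100], [-10, 10, 100], [-6, 16, 100],
    [7, -7, 0], [0, 0, 0], [7, 7, 0],
    [-10, -10, -100], [10, 10, -100], [-10, 0, -100],
], dtype=float)
extracted = np.array([
    [1.36, -9.60, 101.15], [-8.90, 10.00, 100.47], [-6.98, 16.40, 99.13],
    [7.58, -7.2, 0.65], [-0.52, 0.4, -0.9], [9.19, 8.0, 1.89],
    [-8.67, -9.6, -98.78], [9.24, 10.4, -102.14], [-11.76, 0.4, -97.89],
])

diff = extracted - real
errors = np.linalg.norm(diff, axis=1)          # 3-D Euclidean error per target
rmse_xyz = np.sqrt((diff ** 2).mean(axis=0))   # per-axis RMSE over all targets

for i, e in enumerate(errors, start=1):
    print(f"PT{i}: {e:.2f} m")
print("per-axis RMSE (x, y, z):", np.round(rmse_xyz, 2))
print(f"max 3-D error: {errors.max():.2f} m at PT{errors.argmax() + 1}")
```

With the tabulated values, the largest 3-D error is about 3.06 m, at PT6.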

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, C.; Tang, S.; Ren, Y.; Li, Y.; Zhang, J.; Li, G.; Zhang, L. Three-Dimensional Coordinate Extraction Based on Radargrammetry for Single-Channel Curvilinear SAR System. Remote Sens. 2022, 14, 4091. https://doi.org/10.3390/rs14164091
