Multisensor Data Fusion by Means of Voxelization: Application to a Construction Element of Historic Heritage

Abstract

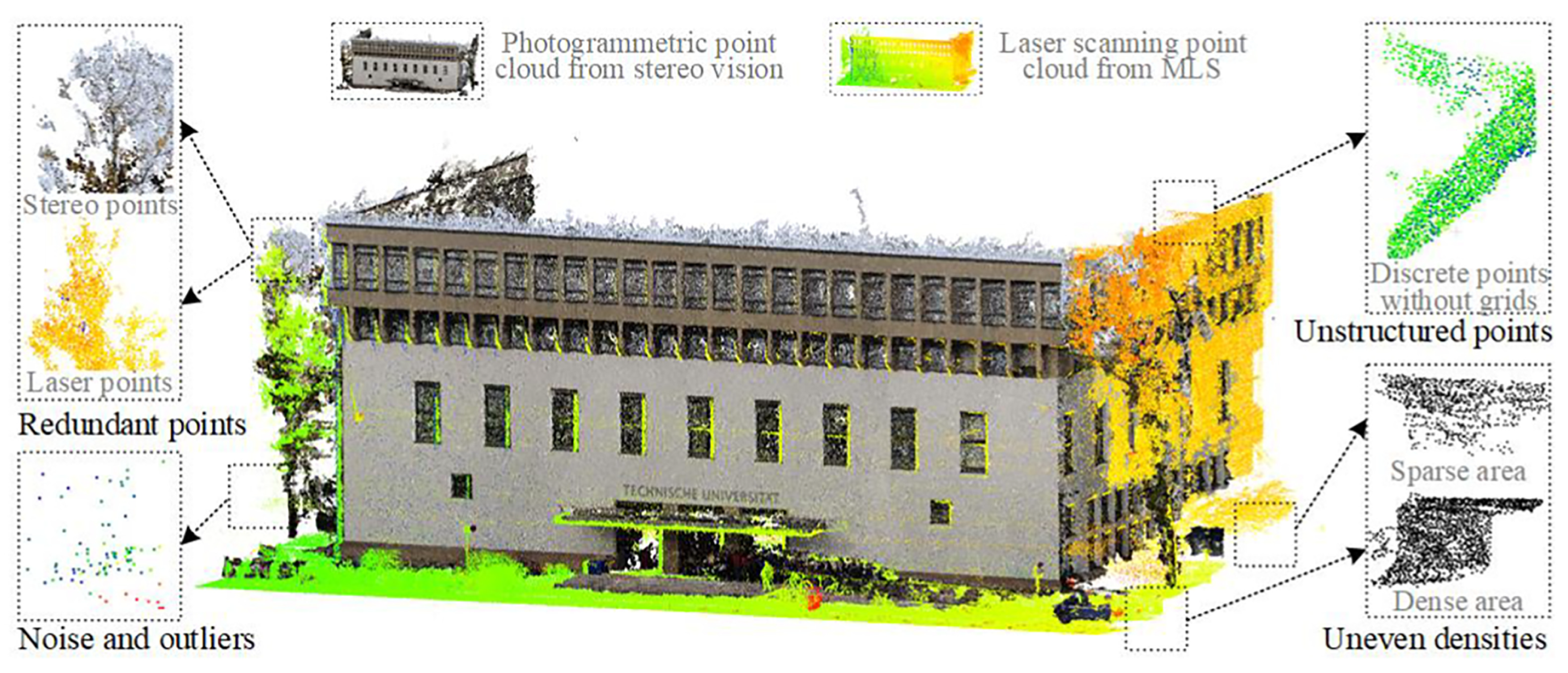

1. Introduction

2. Materials and Methods

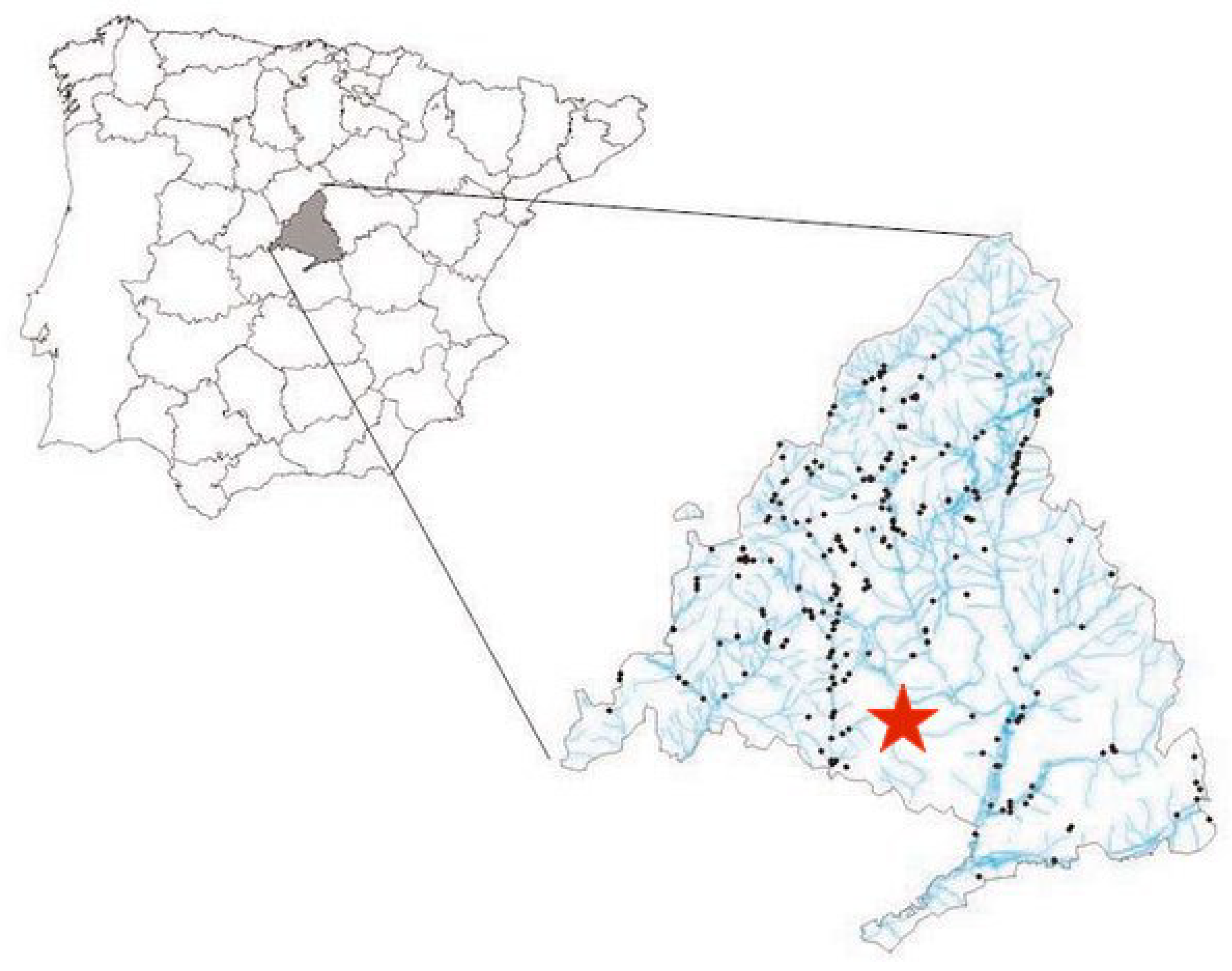

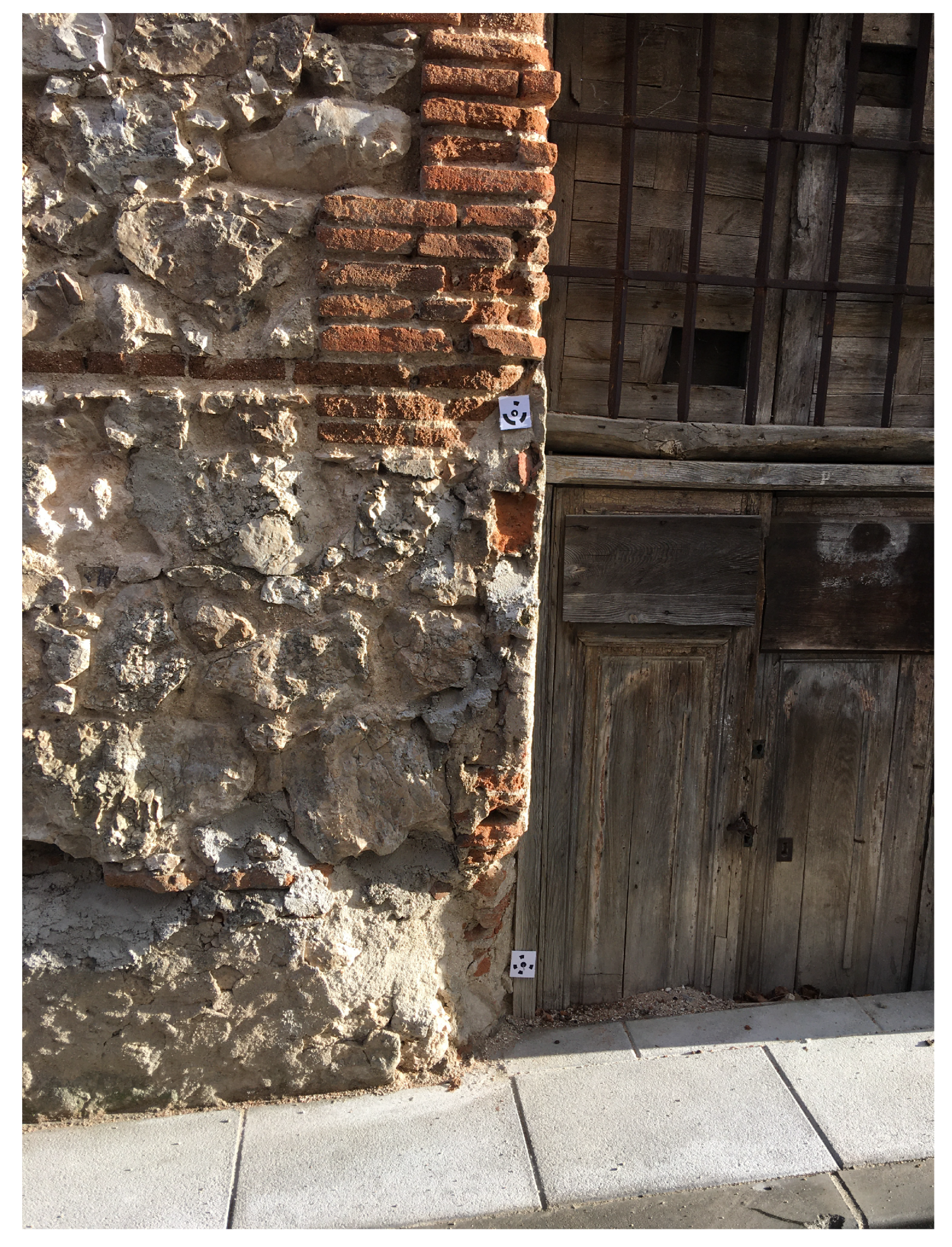

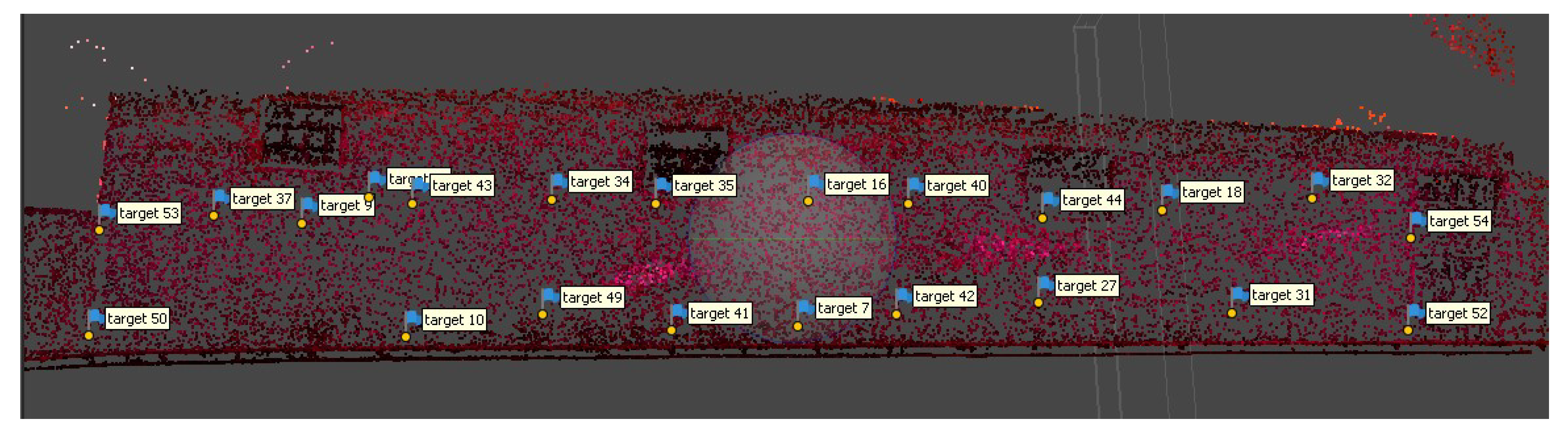

2.1. Data Collection

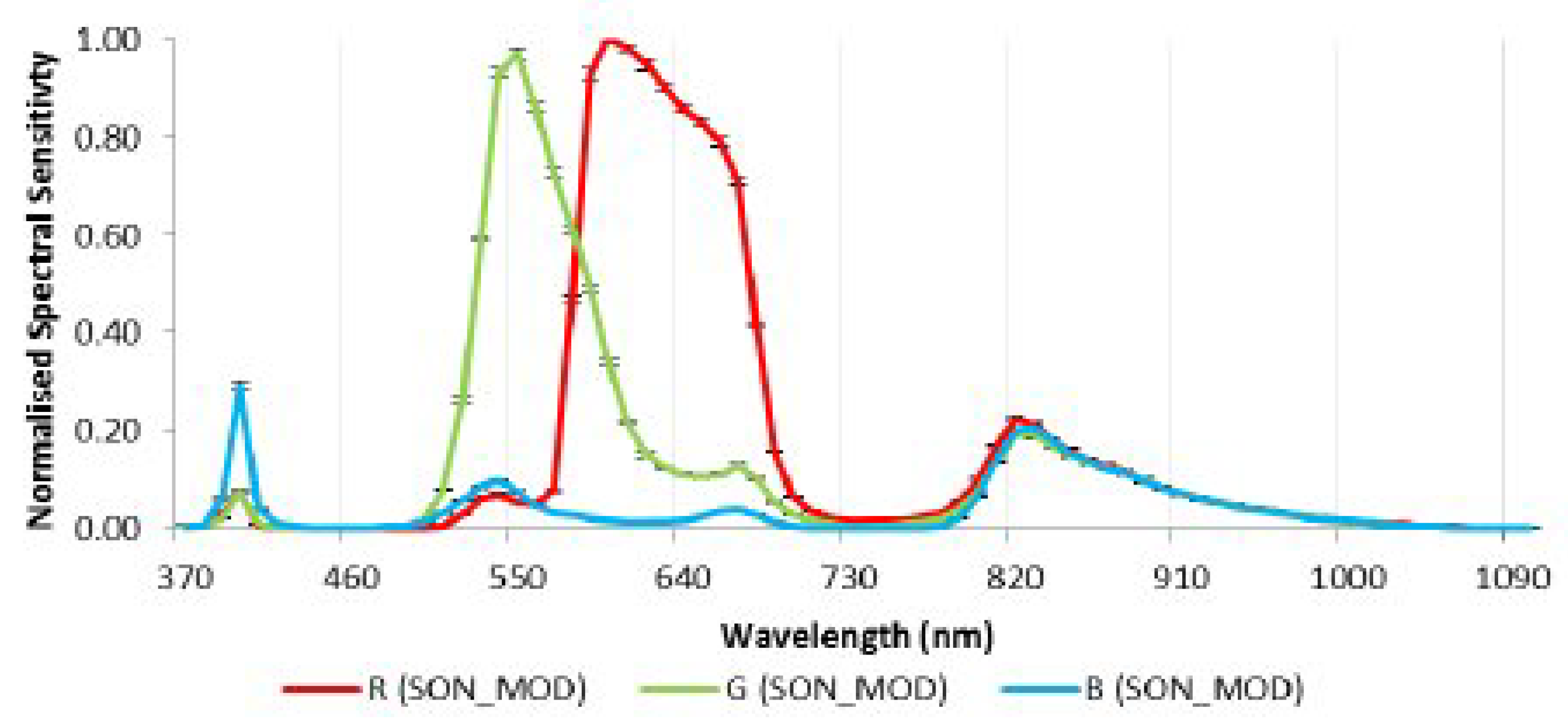

- Sony Nex-7 digital camera with a 19 mm fixed focal length lens. This sensor records the spectral components corresponding to the red (590 nm), green (520 nm) and blue (460 nm) bands. Table 2 lists the parameters of this sensor.

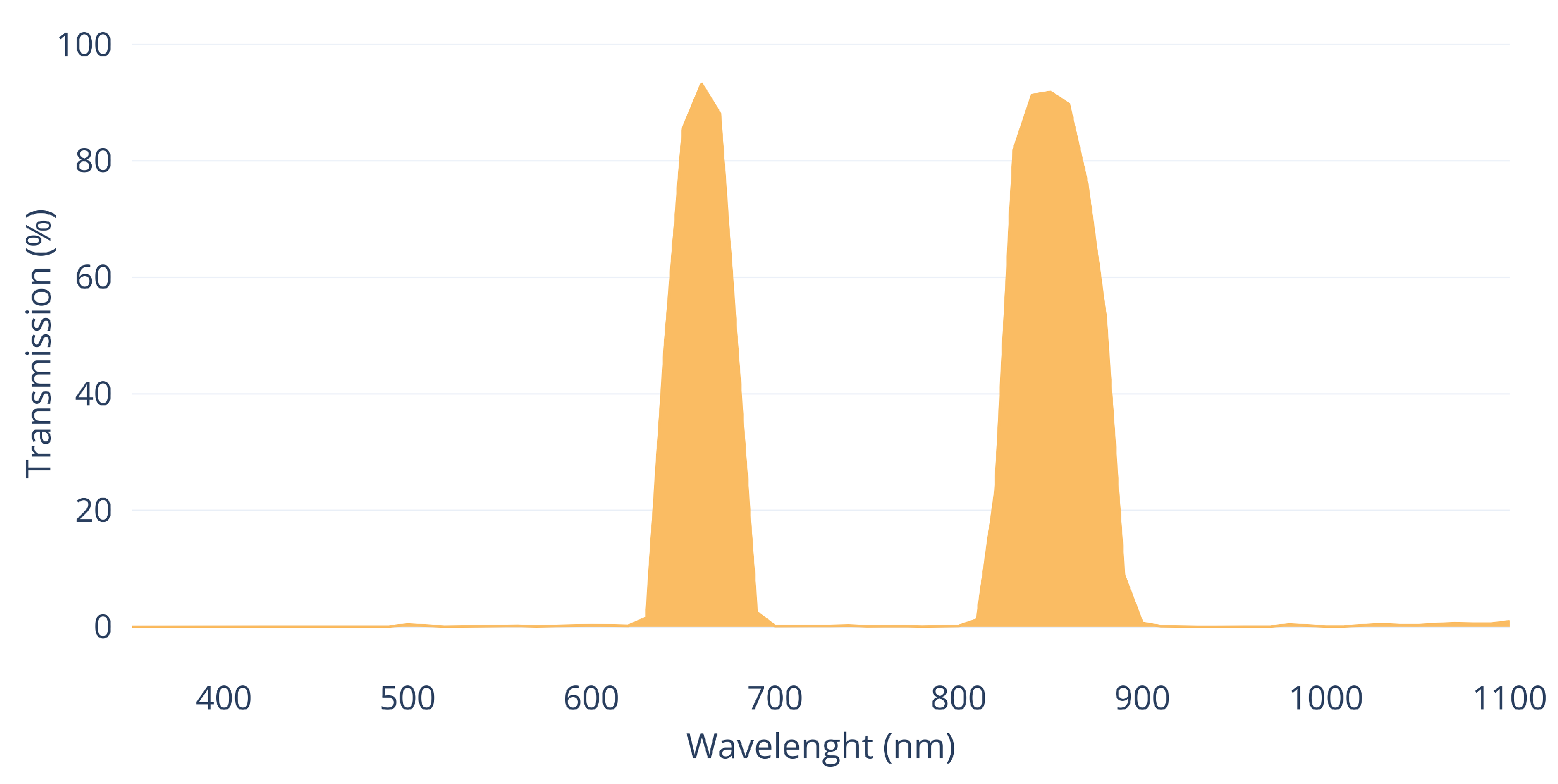

- Modified Sony Nex-5N digital camera (Table 3), equipped with a 16 mm fixed focal length lens, a near-infrared filter and an ultraviolet filter.

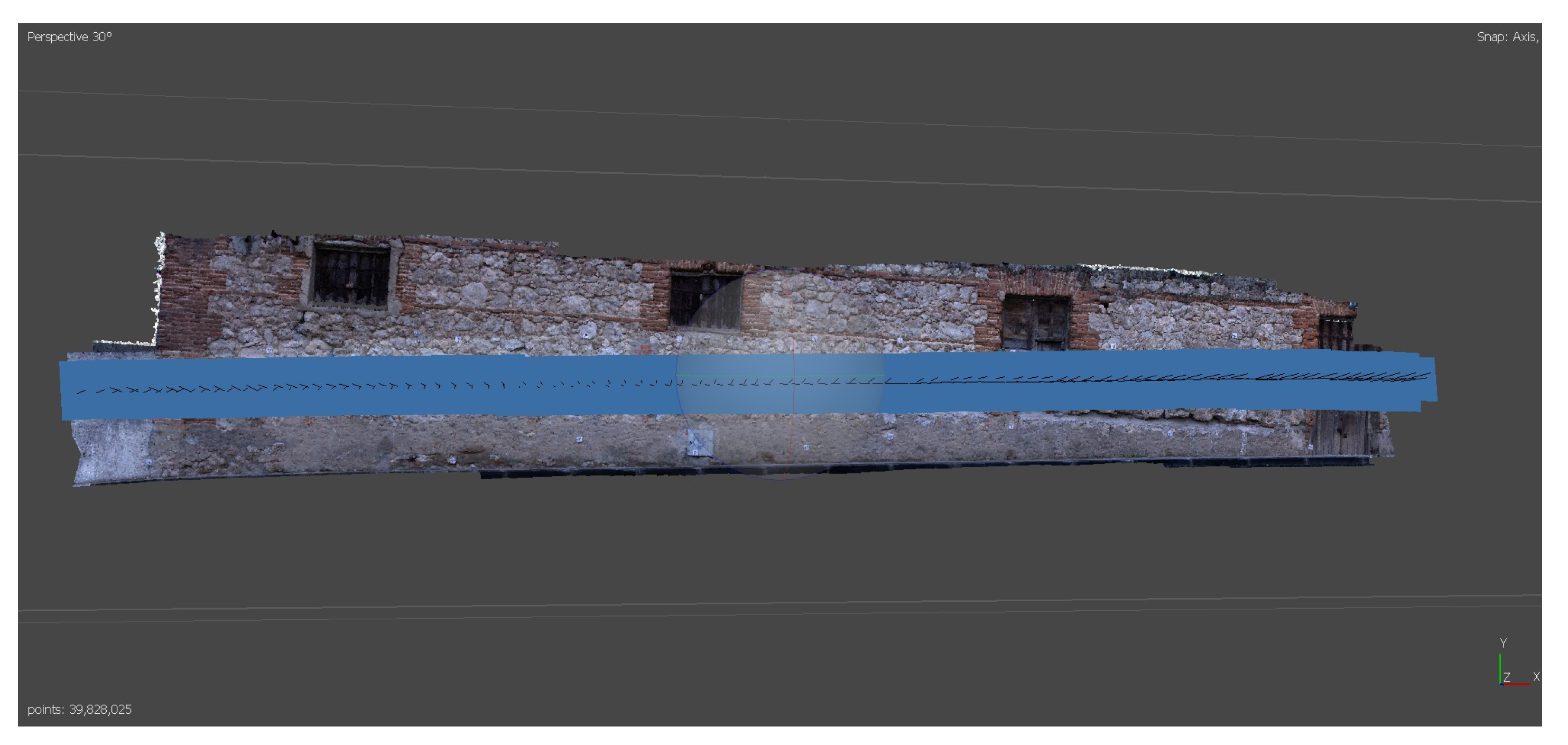

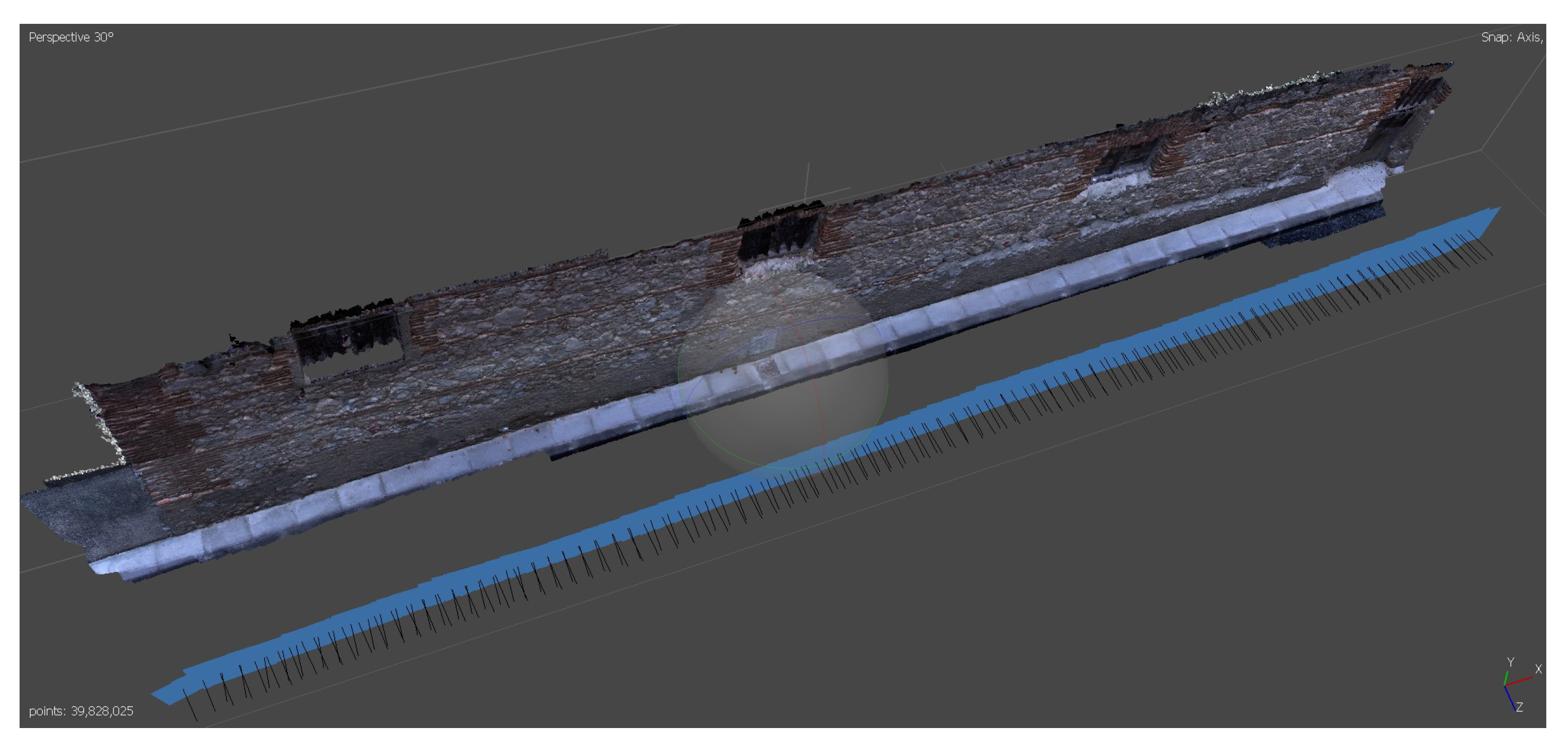

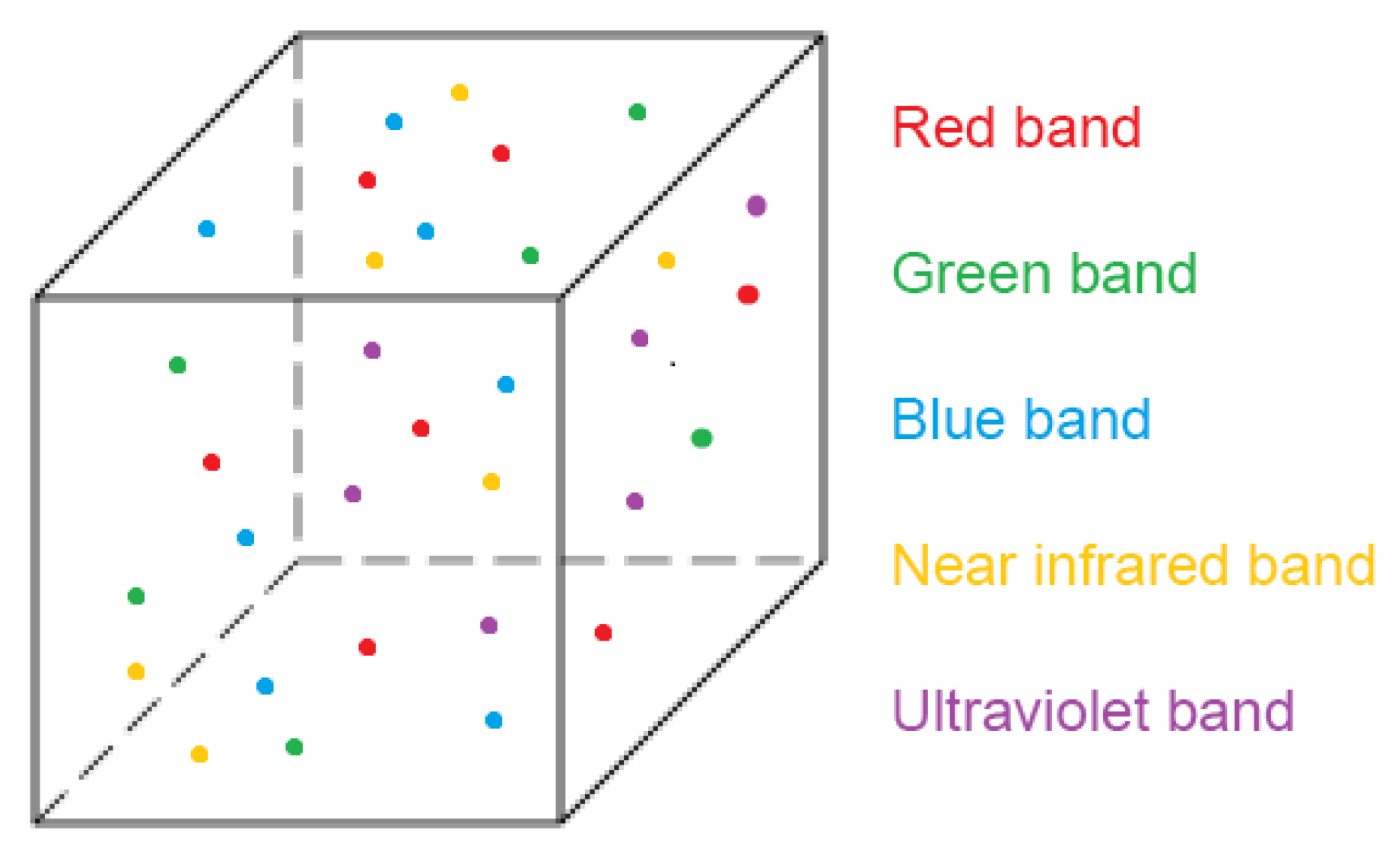

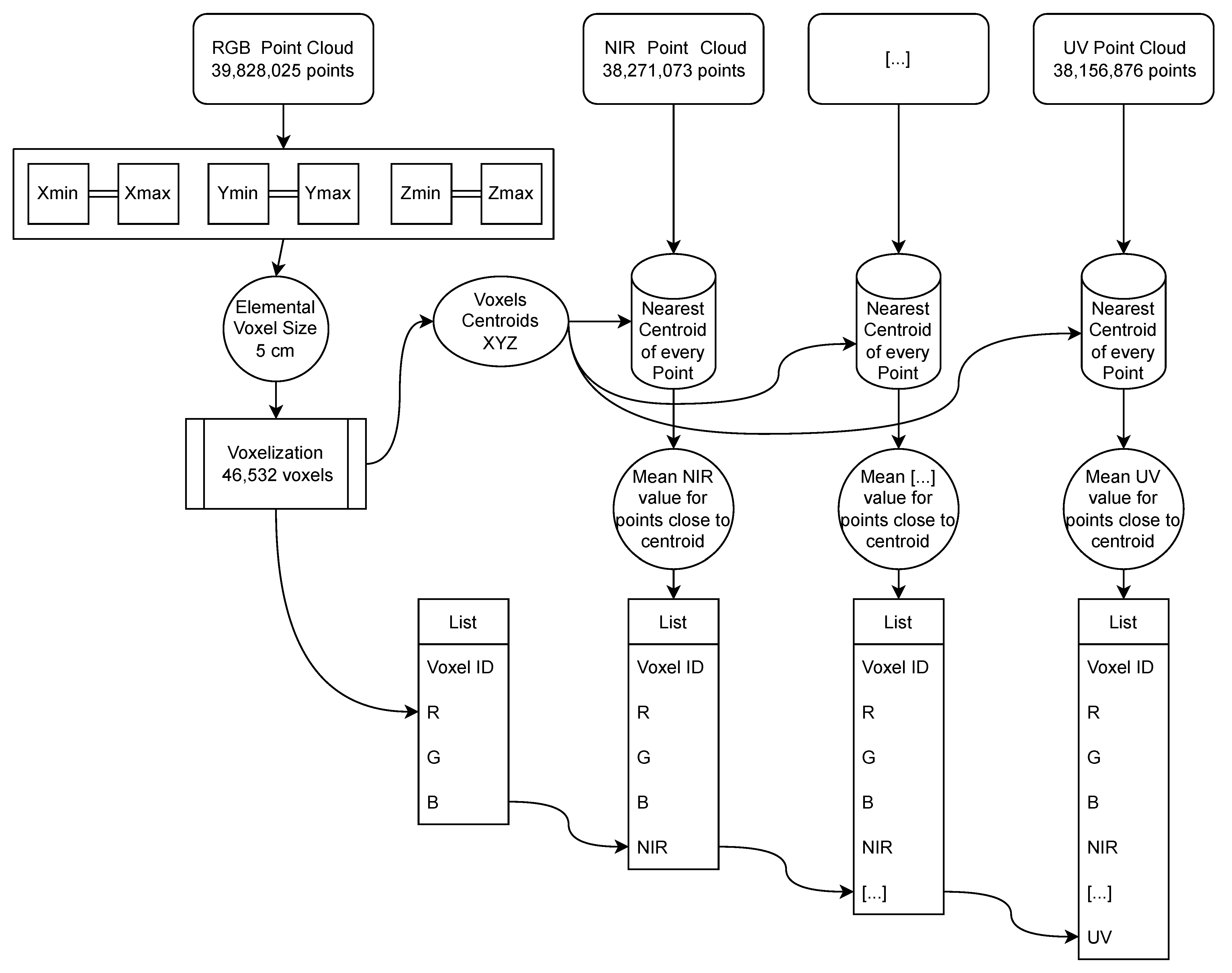

2.2. Fusion of Point Clouds by Voxelization

3. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| TLS | Terrestrial Laser Scanning |
| MLS | Mobile Laser Scanning |
| ALS | Aerial Laser Scanning |
| MRI | Magnetic Resonance Imaging |
| LIDAR | Light Detection and Ranging |
| RADAR | Radio Detection and Ranging |
| GAN | Generative Adversarial Networks |


| Coordinates | | | Colour Information | | | |
|---|---|---|---|---|---|---|
| X (m) | Y (m) | Z (m) | I | R | G | B |
| 532,238.001 | 4,274,986.273 | 5.923 | −1319 | 29 | 22 | 16 |
| 532,238.342 | 4,274,986.295 | 5.910 | −1252 | 36 | 27 | 20 |
| 532,238.348 | 4,274,986.297 | 5.912 | −1359 | 40 | 27 | 19 |
| […] | | | | | | |
| 532,238.432 | 42,749,867.987 | 5.922 | −1342 | 25 | 18 | 12 |

| Parameter | Value |
|---|---|
| Sensor | APS-C type CMOS sensor |
| Focal length (mm) | 19 |
| Sensor width (mm) | 23.5 |
| Sensor length (mm) | 15.6 |
| Effective pixels (megapixels) | 24.3 |
| Pixel size (microns) | 3.92 |
| ISO sensitivity range | 100–1600 |
| Image format | RAW (Sony ARW 2.3 format) |
| Weight (g) | 350 |

| Parameter | Value |
|---|---|
| Sensor | APS-C type CMOS sensor |
| Focal length (mm) | 16 |
| Sensor width (mm) | 23.5 |
| Sensor length (mm) | 15.6 |
| Effective pixels (megapixels) | 16.7 |
| Pixel size (microns) | 4.82 |
| ISO sensitivity range | 100–3200 |
| Image format | RAW (Sony ARW 2.2 format) |
| Weight (g) | 269 |

| Voxel Size (cm) | Number of Voxels |
|---|---|
| 0.5 | 4,621,255 |
| 1 | 1,193,994 |
| 5 | 46,532 |
| 10 | 11,292 |
| 15 | 4984 |
| 20 | 2658 |
| 30 | 1281 |
| 50 | 461 |
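The dependence of the voxel count on voxel size can be illustrated with a minimal sketch of voxel-based fusion. This is not the authors' implementation: the function names `voxel_index` and `fuse_by_voxel`, the toy coordinates, and the choice to average each sensor's values within a voxel are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def voxel_index(x, y, z, origin, size):
    """Integer (i, j, k) index of the voxel that contains the point."""
    ox, oy, oz = origin
    return (
        math.floor((x - ox) / size),
        math.floor((y - oy) / size),
        math.floor((z - oz) / size),
    )


def fuse_by_voxel(clouds, size, origin=(0.0, 0.0, 0.0)):
    """Fuse several point clouds into one sparse voxel grid.

    clouds: dict mapping a sensor name to a list of (x, y, z, value) tuples.
    Returns a dict mapping voxel index -> {sensor name: mean value}.
    Averaging per voxel is an assumption of this sketch.
    """
    # For every voxel and sensor, keep a running [sum, count] pair.
    sums = defaultdict(lambda: defaultdict(lambda: [0.0, 0]))
    for sensor, points in clouds.items():
        for x, y, z, value in points:
            acc = sums[voxel_index(x, y, z, origin, size)][sensor]
            acc[0] += value
            acc[1] += 1
    return {
        idx: {sensor: total / n for sensor, (total, n) in per_sensor.items()}
        for idx, per_sensor in sums.items()
    }


# Hypothetical toy data (not from the paper): local coordinates in metres.
clouds = {
    "RGB": [(0.012, 0.004, 0.001, 29.0), (0.013, 0.006, 0.002, 35.0)],
    "NIR": [(0.011, 0.003, 0.004, 120.0), (0.052, 0.004, 0.001, 90.0)],
    "UV": [(0.014, 0.002, 0.003, 12.0)],
}

fused_5cm = fuse_by_voxel(clouds, size=0.05)     # 5 cm voxels
fused_05cm = fuse_by_voxel(clouds, size=0.005)   # 0.5 cm voxels
print(len(fused_5cm), len(fused_05cm))
```

With these toy points, the 5 cm grid groups the five points into two occupied voxels, while the 0.5 cm grid needs three. Only occupied voxels are stored, so the grid stays sparse however large the bounding box is.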

| Point Cloud | Number of Elements | Memory Space Needed (MB) |
|---|---|---|
| RGB 1 | 39,828,025 | 1911.75 |
| NIR | 38,271,073 | 1224.67 |
| UV | 25,115,133 | 803.68 |
| Total | 103,214,231 | 3940.10 |
| Voxel (0.5 cm size) | 4,621,255 | 295.76 |
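The figures in this table can be cross-checked with a few lines of arithmetic. The sketch below copies the element counts and memory sizes from the table and assumes that 1 MB means 10^6 bytes. The variable names are illustrative, and the per-element byte sizes are inferred from the table, not stated in the source.

```python
# (number of elements, memory in MB), copied from the table above.
table = {
    "RGB": (39_828_025, 1911.75),
    "NIR": (38_271_073, 1224.67),
    "UV": (25_115_133, 803.68),
    "Voxel (0.5 cm)": (4_621_255, 295.76),
}


def bytes_per_element(elements, megabytes):
    """Average storage per element, assuming 1 MB = 10**6 bytes."""
    return megabytes * 1e6 / elements


per_element = {
    name: round(bytes_per_element(n, mb)) for name, (n, mb) in table.items()
}

# Memory of the three source point clouds versus the fused voxel structure.
total_mb = sum(mb for name, (_, mb) in table.items() if not name.startswith("Voxel"))
reduction = total_mb / table["Voxel (0.5 cm)"][1]

print(per_element)
print(f"total = {total_mb:.2f} MB, reduction factor = {reduction:.1f}")
```

Under that assumption the table is consistent with about 48 bytes per RGB point, 32 bytes per NIR or UV point, and 64 bytes per voxel. The fused 0.5 cm voxel structure then takes roughly 13 times less memory than the three point clouds together.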

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Raimundo, J.; Lopez-Cuervo Medina, S.; Aguirre de Mata, J.; Prieto, J.F. Multisensor Data Fusion by Means of Voxelization: Application to a Construction Element of Historic Heritage. Remote Sens. 2022, 14, 4172. https://doi.org/10.3390/rs14174172
