Generalized LiDAR Intensity Normalization and Its Positive Impact on Geometric and Learning-Based Lane Marking Detection

Abstract

1. Introduction

- Level 0—no intensity modification;

- Level 1—intensity correction: adjust the intensity values of a LiDAR unit using geometric scanning parameters (e.g., range and/or incidence angle);

- Level 2—intensity normalization: normalize the intensity readings of a LiDAR unit based on the assumption that intensity values across laser beams/LiDAR units/MMS should be similar for the same object;

- Level 3—intensity calibration: rectify the intensity values of a LiDAR unit based on known reflectance readings derived from a reference target.

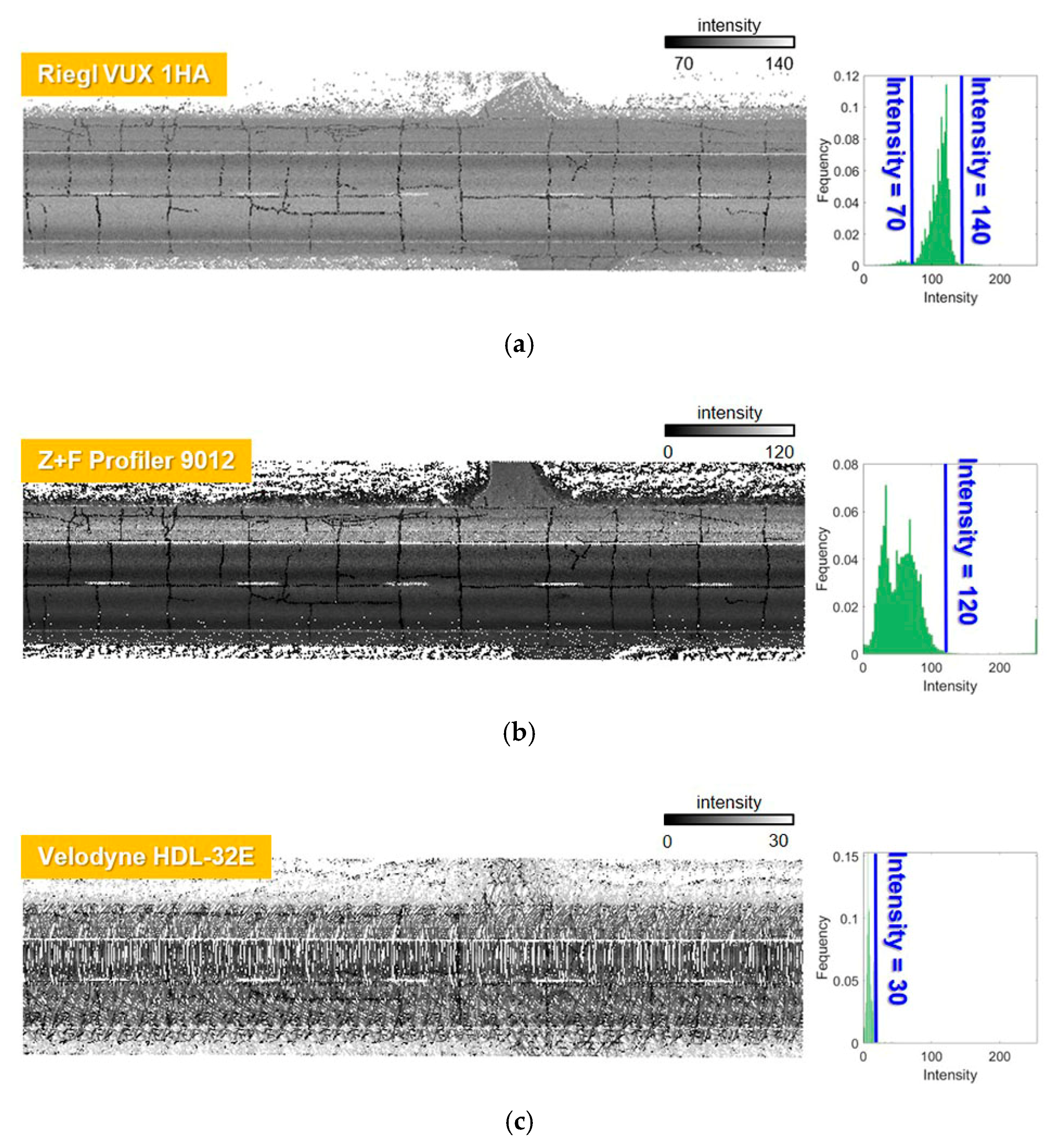

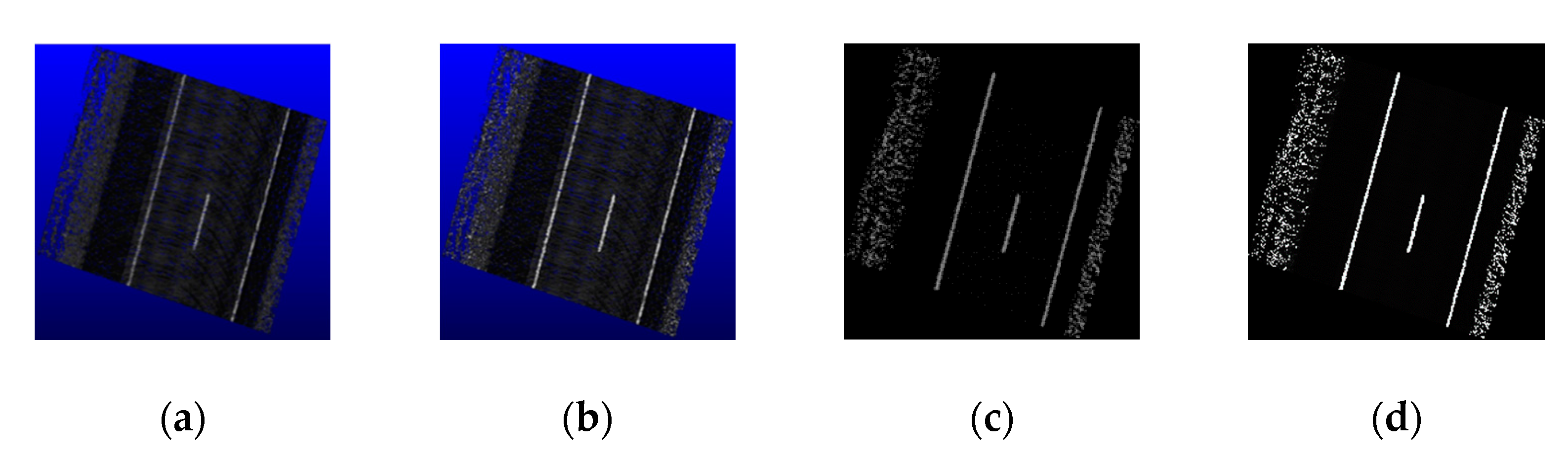

- Recently, several MMS equipped with multiple LiDAR units (e.g., single and/or multi-beam scanners from different manufacturers) have been developed [9]. Figure 1 shows sample point clouds and corresponding intensity histograms (along the same road surface) captured by Riegl VUX 1HA [10] (single-beam), Z+F Profiler 9012 [11] (single-beam), and Velodyne HDL-32E [12] (multi-beam) LiDAR units. For the same road region, the Riegl VUX 1HA intensity readings range from 70 to 140, while those from Z+F Profiler 9012 are in the 0 to 120 range. The Velodyne HDL-32E intensity values, on the other hand, are between 0 and 30. Thus, a generalized framework that can handle different LiDAR units onboard the same or different systems is required for lane marking extraction.

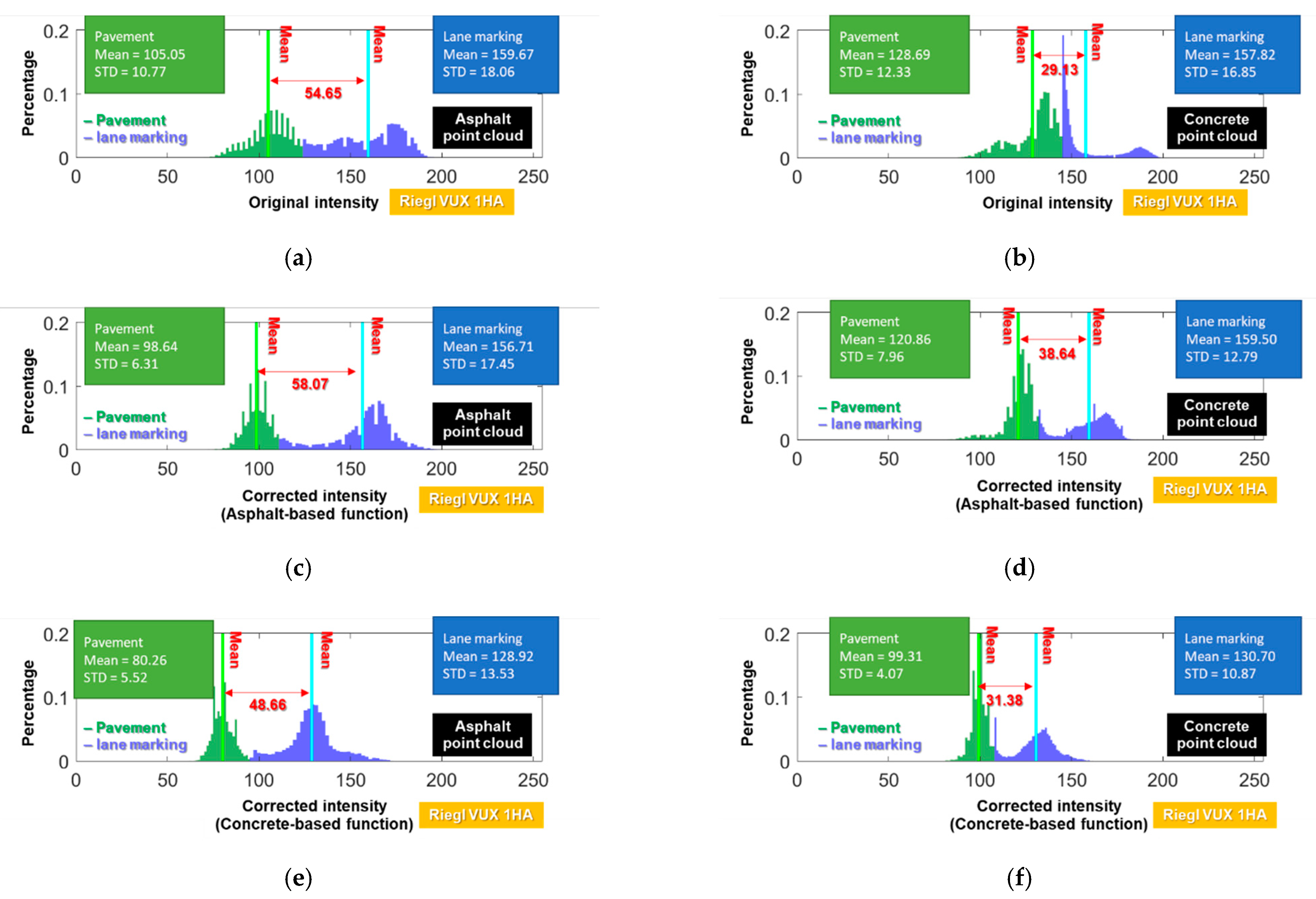

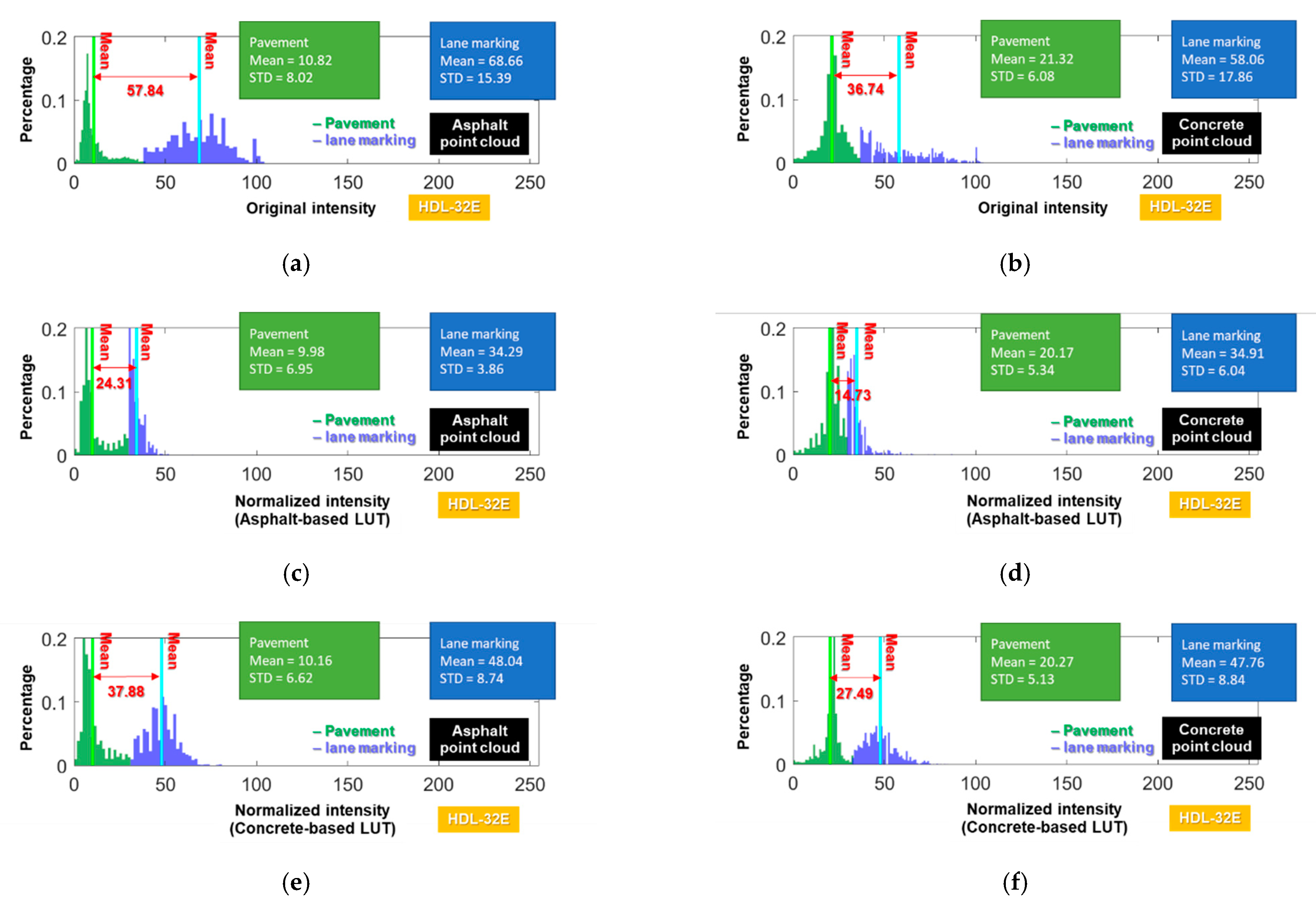

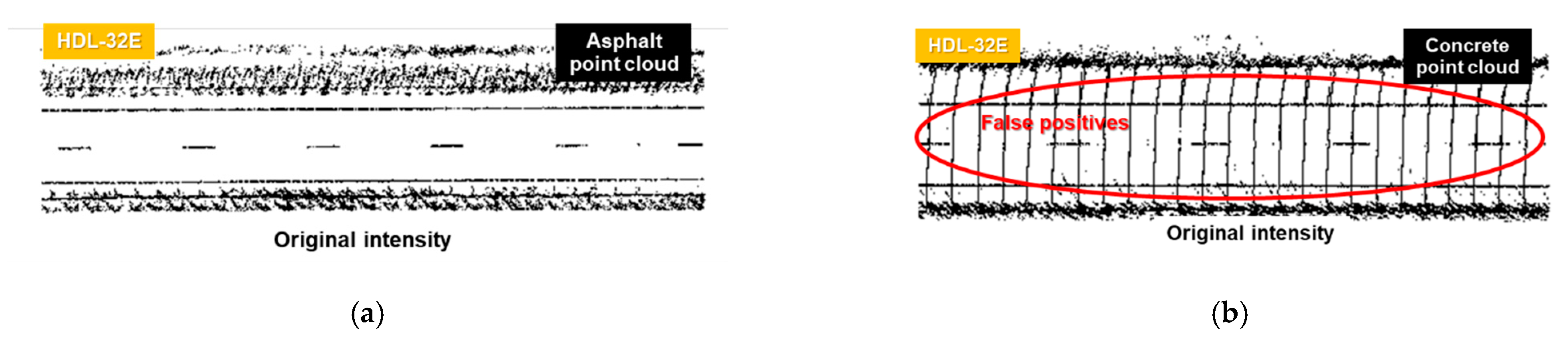

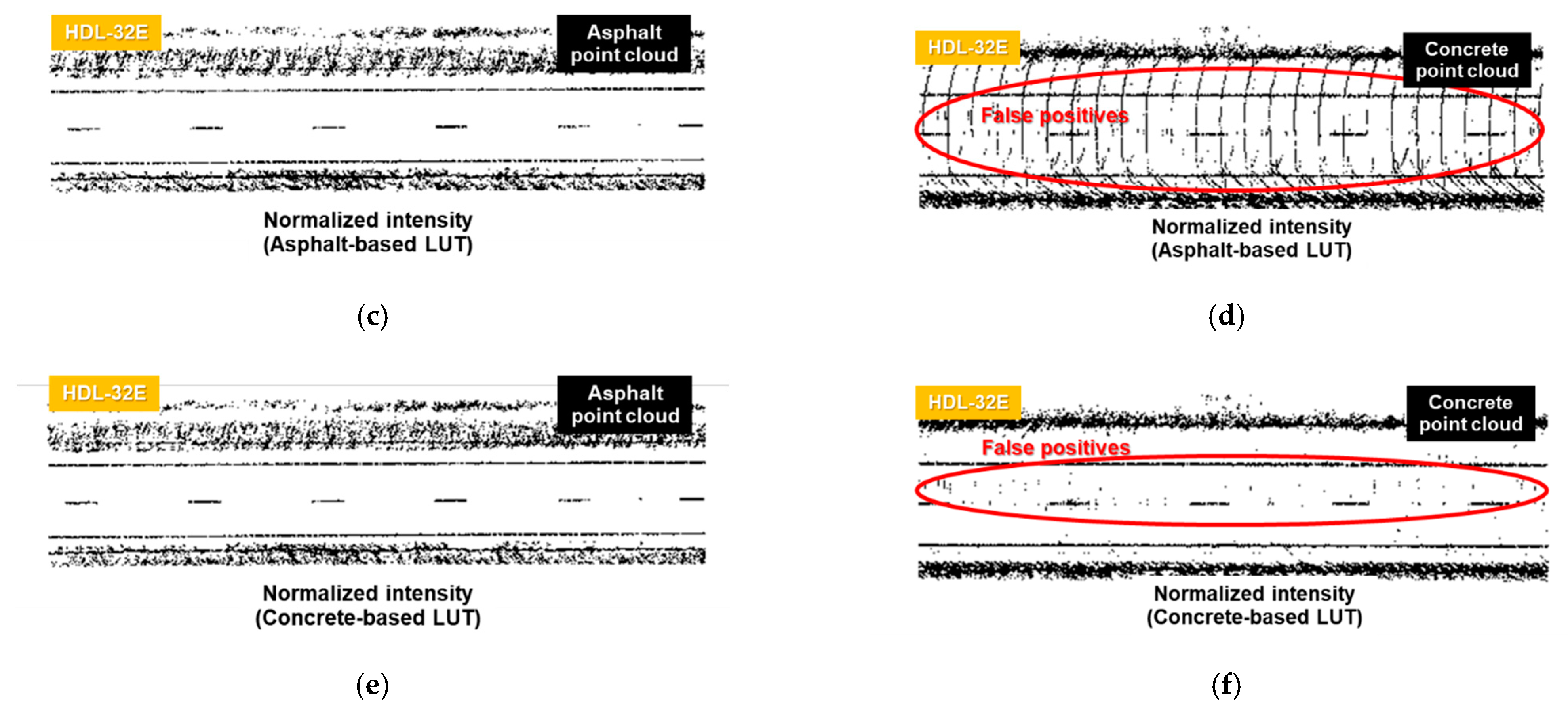

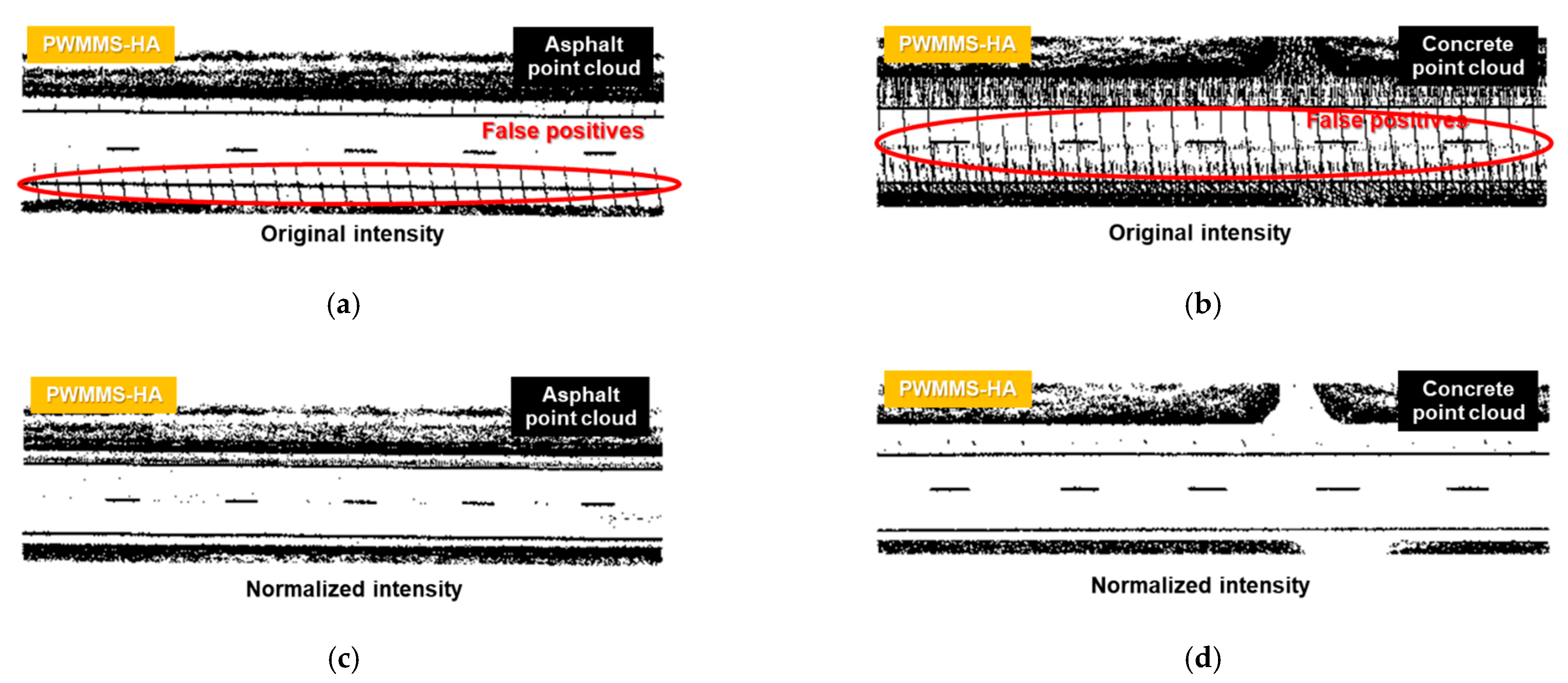

- Asphalt and concrete are the most common pavement types. According to Kashani et al. [7], asphalt and concrete pavement regions show distinctly different intensity values (i.e., most asphalt intensity readings are lower than concrete ones). Thus, intensity readings from asphalt/concrete pavement regions should be considered while developing a generalized normalization framework.

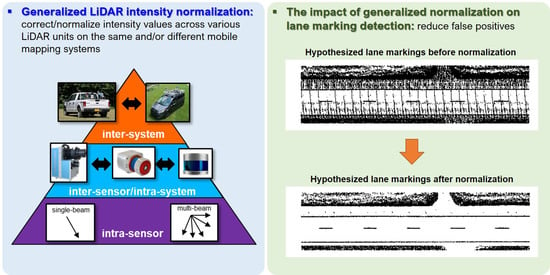

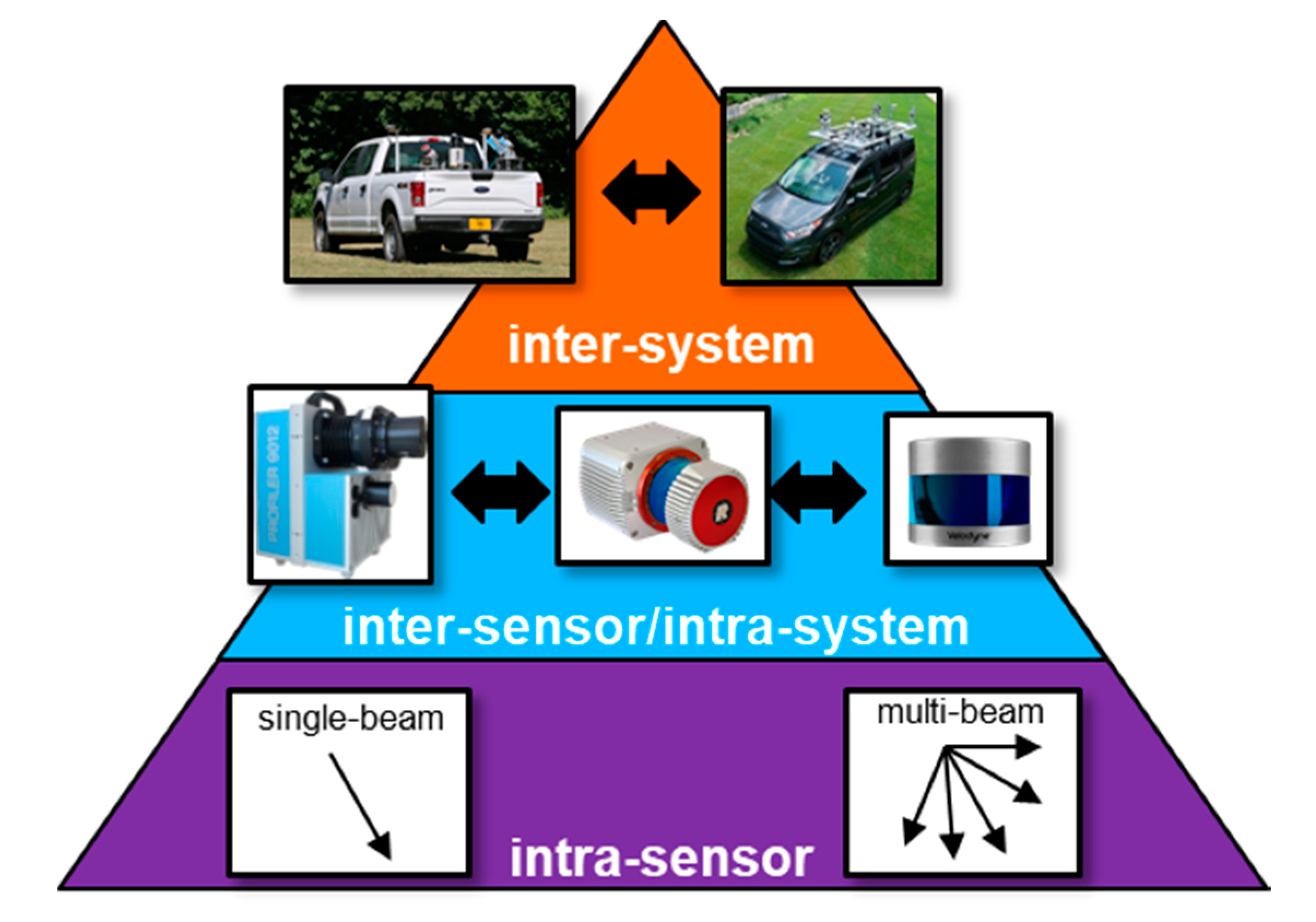

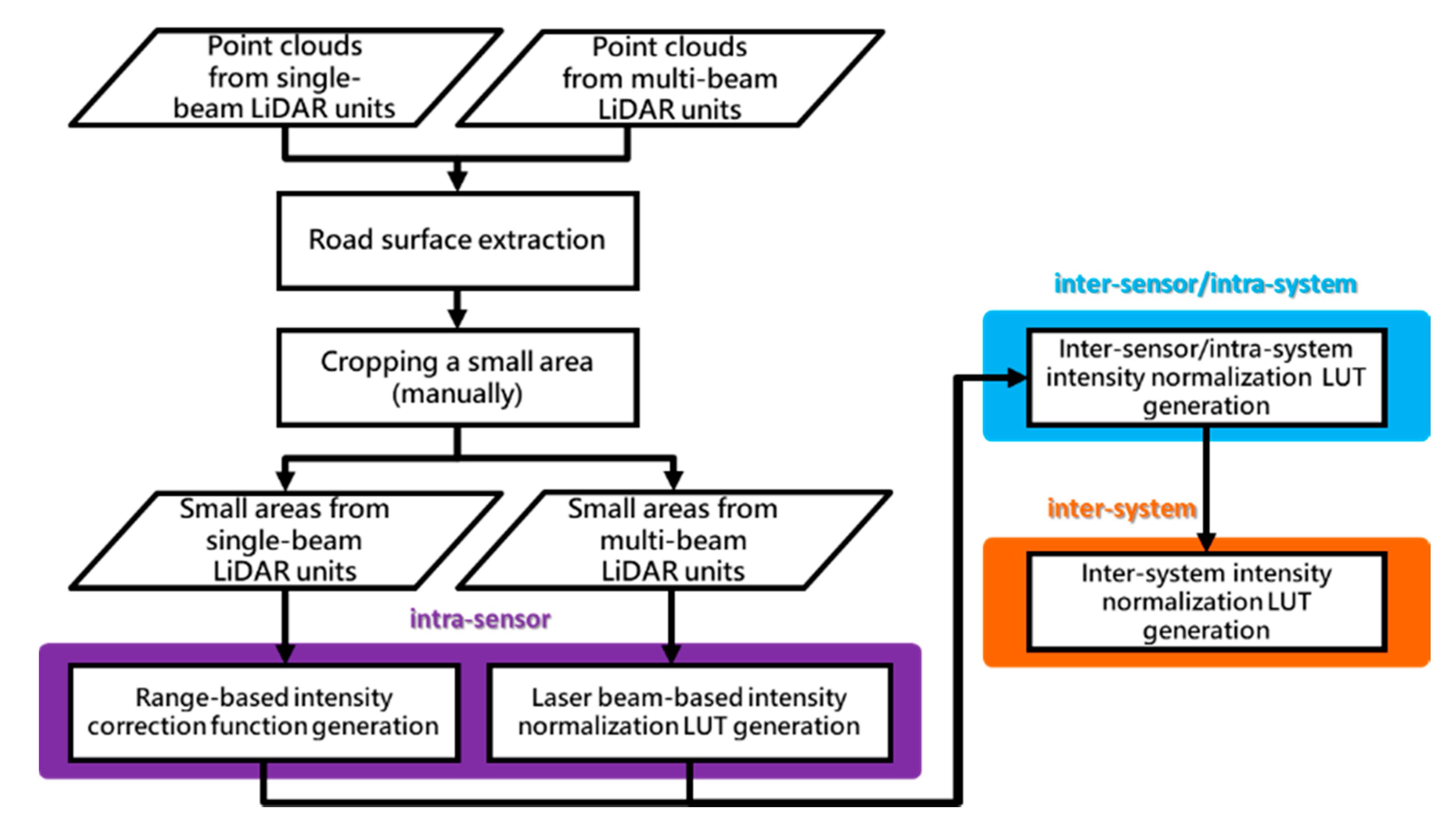

- A generalized intensity correction/normalization approach (as illustrated in Figure 2) is proposed for:

  - A single-beam or multi-beam LiDAR scanner (intra-sensor).

  - LiDAR units onboard a mobile mapping system (inter-sensor/intra-system).

  - Point clouds from several mobile mapping systems (inter-system).

- The generalized correction/normalization approach is developed while considering the impact of observed intensity values along asphalt and concrete pavement regions.

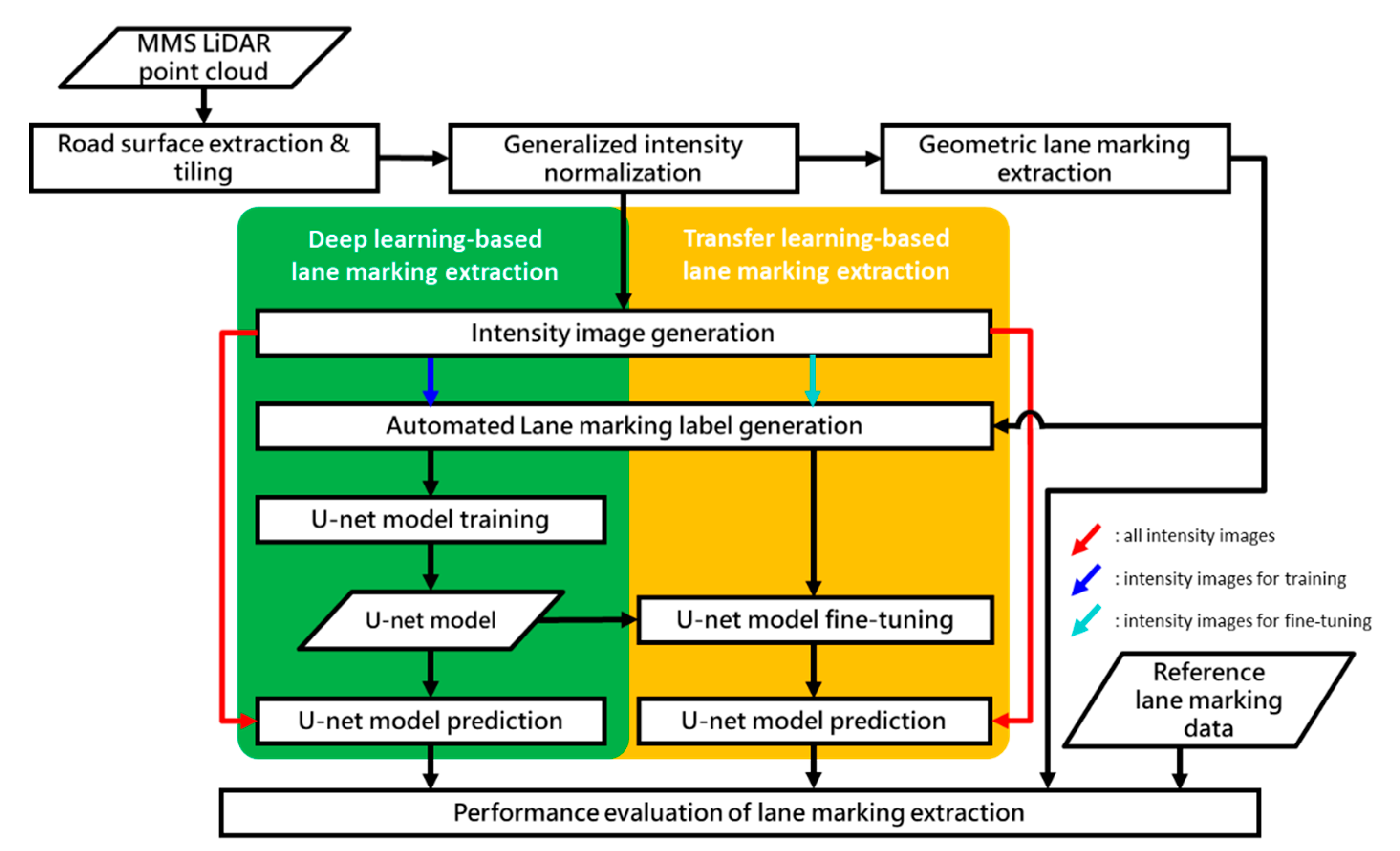

- To evaluate the performance of the proposed approach, geometric/morphological and deep-learning-based lane marking extraction with and without intensity correction/normalization are conducted.

- To further evaluate the proposed approach, transfer learning is applied to a deep learning model for handling datasets from different MMS. The performance of a fine-tuned model with and without intensity correction/normalization is also compared.

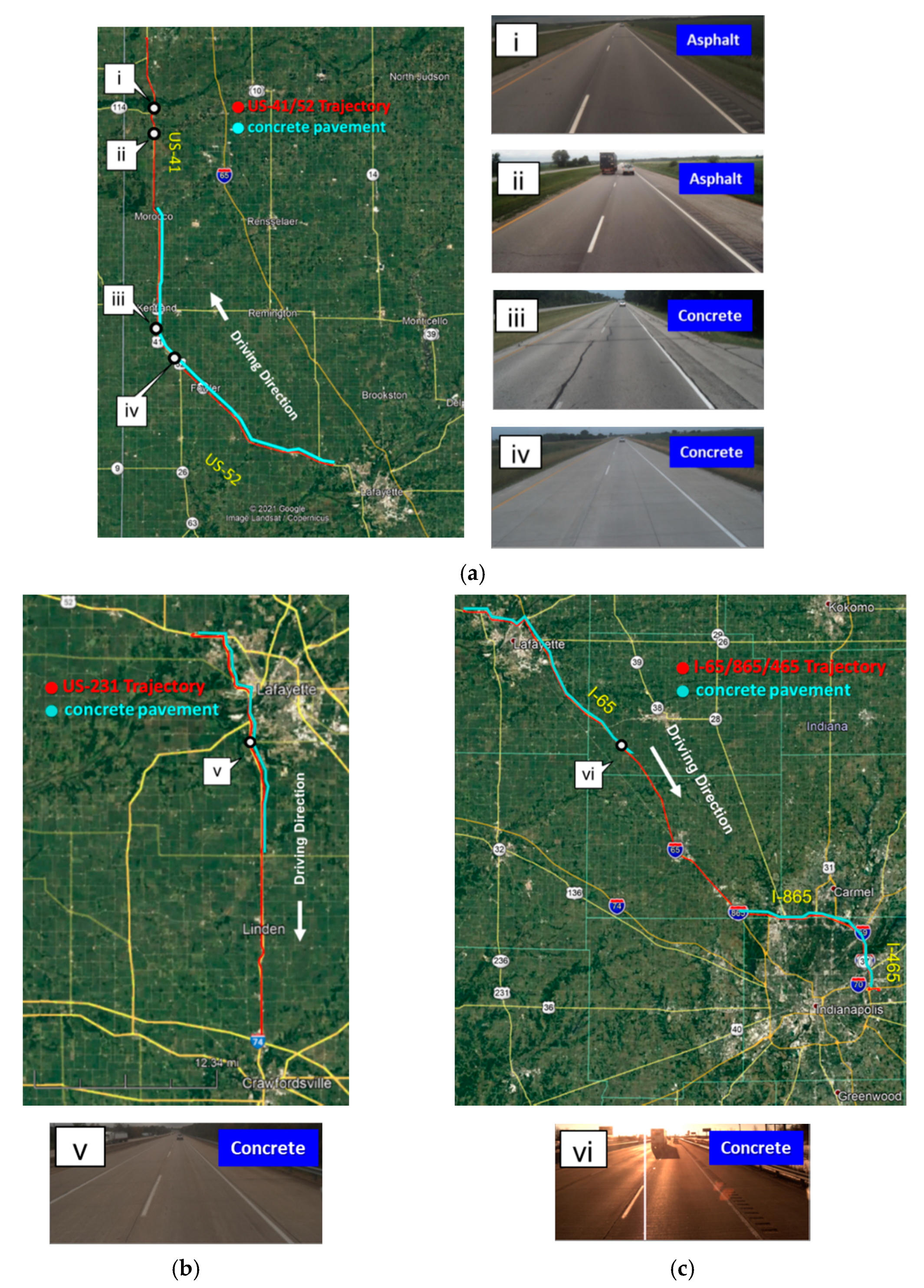

- To cover asphalt/concrete pavement and different lane marking patterns (such as dotted, dashed, or solid lines), 168 miles of LiDAR data collected by two MMS on three highways are used for comprehensive performance evaluation.

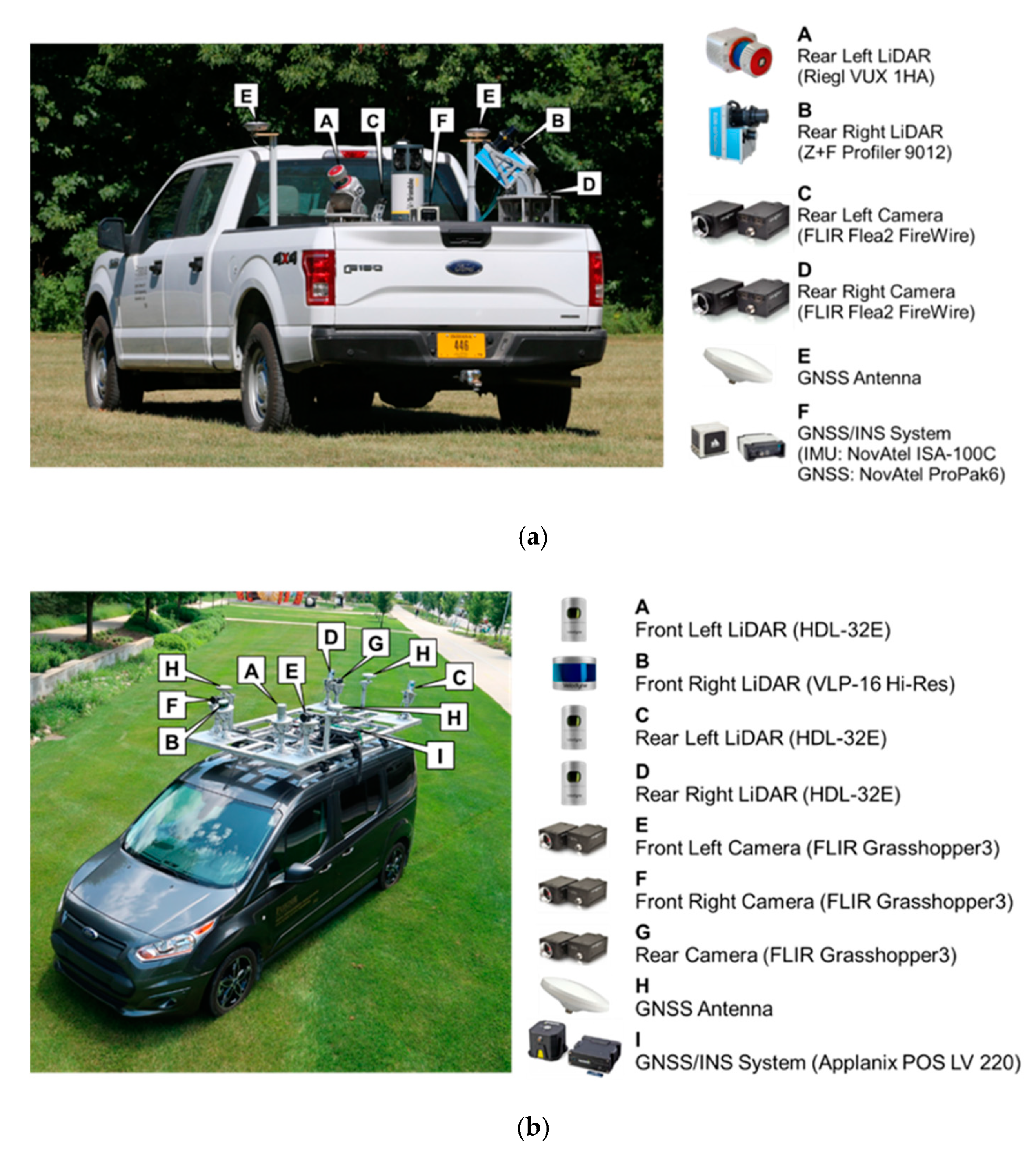

2. Data Acquisition Systems and Dataset Description

2.1. Mobile Mapping Systems

2.2. Study Site and Dataset Description

3. Methodology

3.1. Generalized Intensity Normalization

3.1.1. Intra-Sensor Intensity Correction—Single-Beam LiDAR

3.1.2. Intra-Sensor Intensity Normalization—Multi-Beam LiDAR

3.1.3. Inter-Sensor/Intra-System and Inter-System Intensity Normalization

- Intra-sensor: the range-based intensity correction is applied to each single-beam LiDAR unit, and the laser-beam-based intensity normalization is conducted for every multi-beam LiDAR sensor.

- Inter-sensor/intra-system: the laser-beam-based normalization is applied with the laser beam ID replaced by the LiDAR scanner ID. More specifically, for each intensity value captured by a given LiDAR scanner (i.e., each intensity/scanner-ID pair), all cells containing that pair are identified. The normalized intensity of the pair is the average intensity of all points from the other scanners over these cells.

- Inter-system: the laser-beam-based normalization is applied with the laser beam ID replaced by the MMS ID. More specifically, for each intensity value captured by a given MMS (i.e., each intensity/MMS-ID pair), the normalized value is the average intensity of all points from the other MMS within the cells containing that pair.
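The cell-based averaging described in the list above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `normalize_pairs`, the 2D square grid, and the tuple layout `(x, y, intensity, source_id)` are assumptions made for the example, where `source_id` stands for a laser beam ID, a LiDAR scanner ID, or an MMS ID depending on the normalization level.

```python
from collections import defaultdict
from math import floor


def normalize_pairs(points, cell_size):
    """Map each (intensity, source_id) pair to a normalized intensity.

    points: iterable of (x, y, intensity, source_id) tuples (hypothetical layout).
    cell_size: grid cell size in the same unit as x and y.
    Pairs that never share a cell with another source are left out of the result.
    """
    # Bin every point into a 2D grid cell.
    cells = defaultdict(list)
    for x, y, intensity, source in points:
        key = (floor(x / cell_size), floor(y / cell_size))
        cells[key].append((intensity, source))

    # For every cell containing a pair, accumulate the intensities of all
    # points in that cell that come from a different source.
    sums = defaultdict(float)
    counts = defaultdict(int)
    for members in cells.values():
        for pair in set(members):  # each cell contributes once per pair
            _, source = pair
            for other_intensity, other_source in members:
                if other_source != source:
                    sums[pair] += other_intensity
                    counts[pair] += 1

    # Normalized value = mean intensity of the other sources over those cells.
    return {pair: sums[pair] / counts[pair] for pair in counts}


sample = [
    (0.01, 0.01, 100, "A"), (0.02, 0.03, 40, "B"), (0.04, 0.02, 60, "B"),
    (0.51, 0.51, 100, "A"), (0.52, 0.53, 80, "B"),
    (0.90, 0.90, 10, "A"),  # no other source in this cell
]
table = normalize_pairs(sample, cell_size=0.1)
print(table[(100, "A")])
```

The result acts as a lookup table: every original reading with a given intensity and source ID is replaced by the mapped value. How pairs without overlapping observations are handled is not stated in this section, so the sketch simply omits them.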

3.2. Lane Marking Extraction

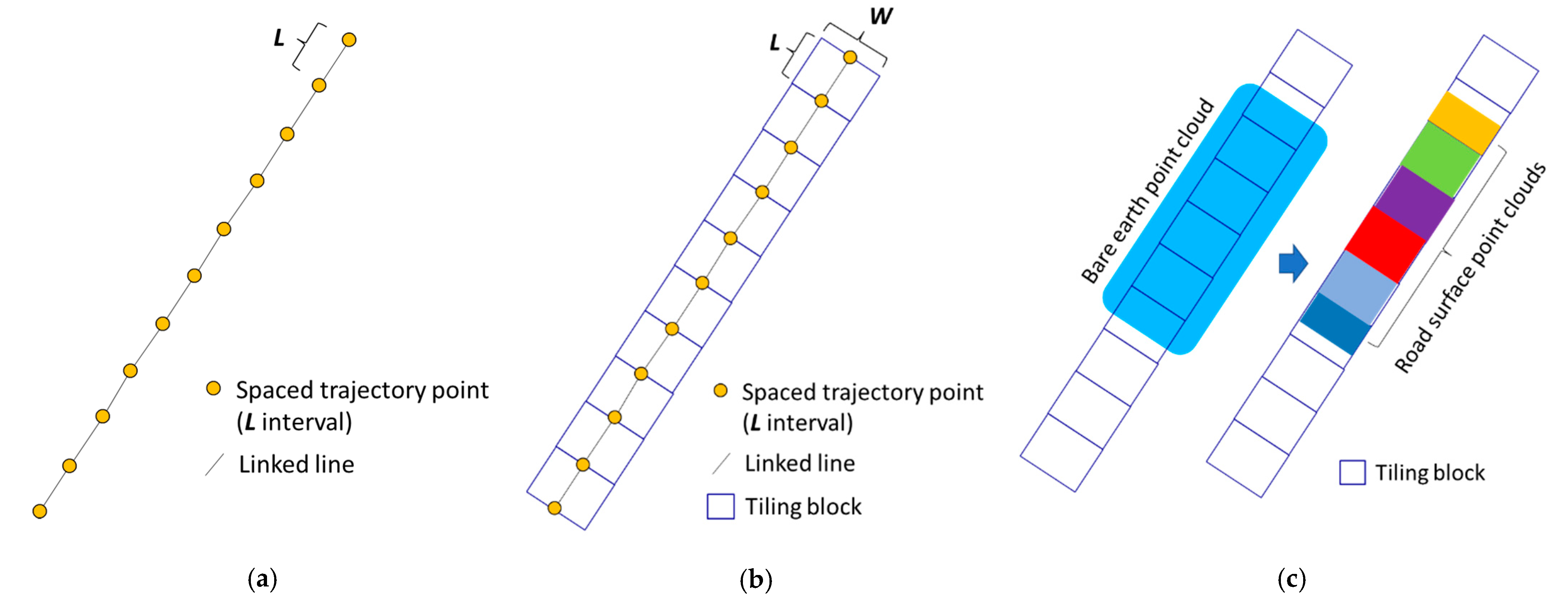

3.2.1. Road Surface Extraction and Tiling

3.2.2. Geometric/Morphological Lane Marking Extraction

3.2.3. Learning-Based Lane Marking Extraction

3.3. Performance Evaluation
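The results tables report precision, recall, and F1-score. A minimal sketch of these metrics under their standard definitions is given here; the function name and the use of raw true-positive/false-positive/false-negative counts are assumptions for illustration, and how the counts are obtained or aggregated over test images is not specified in this excerpt.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


p, r, f1 = precision_recall_f1(tp=6, fp=2, fn=4)
print(p, r, f1)
```

A low recall with a high precision, as observed for some of the models in the results, corresponds to many false negatives (missed lane markings) and few false positives.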

4. Experimental Results

4.1. Impact of Pavement Type on Intensity Correction/Normalization

4.1.1. Best Pavement Type for Intensity Correction

4.1.2. Best Pavement Type for Intensity Normalization

4.2. Performance of Generalized Intensity Normalization

4.3. Impact of Generalized Intensity Normalization on Lane Marking Extraction

4.3.1. Test on US-41/52

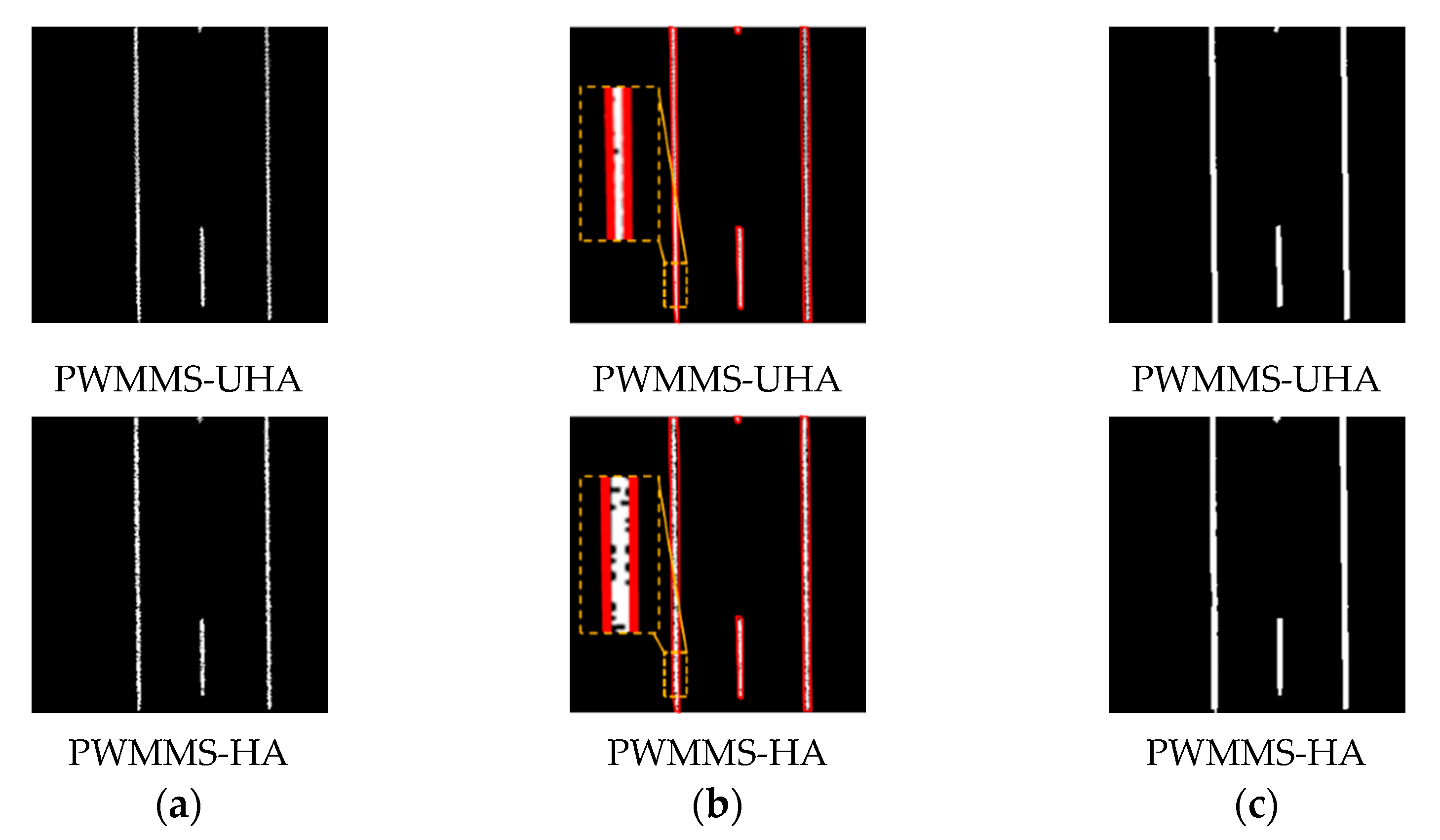

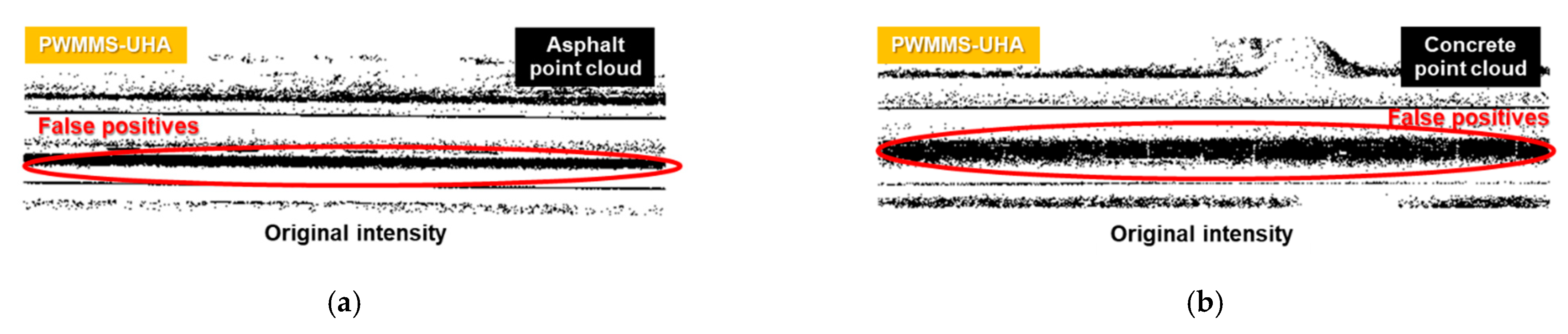

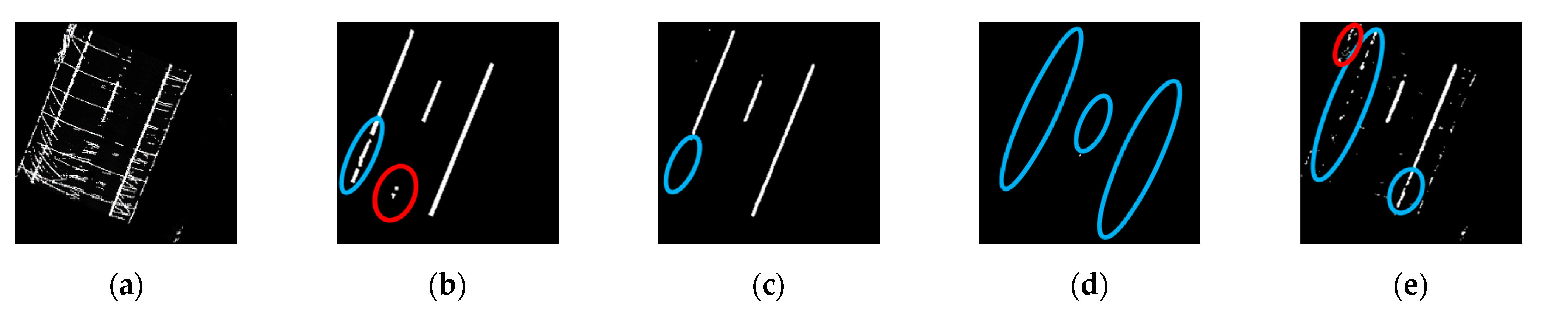

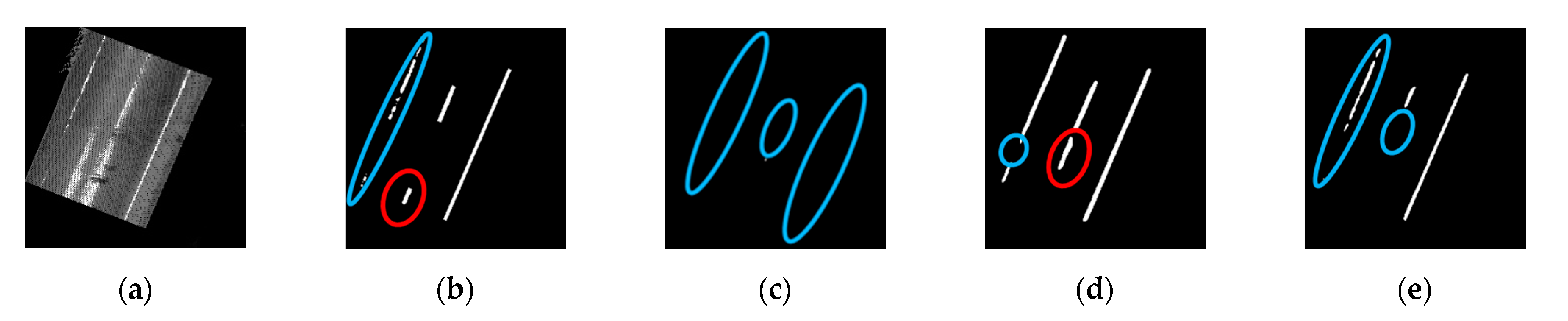

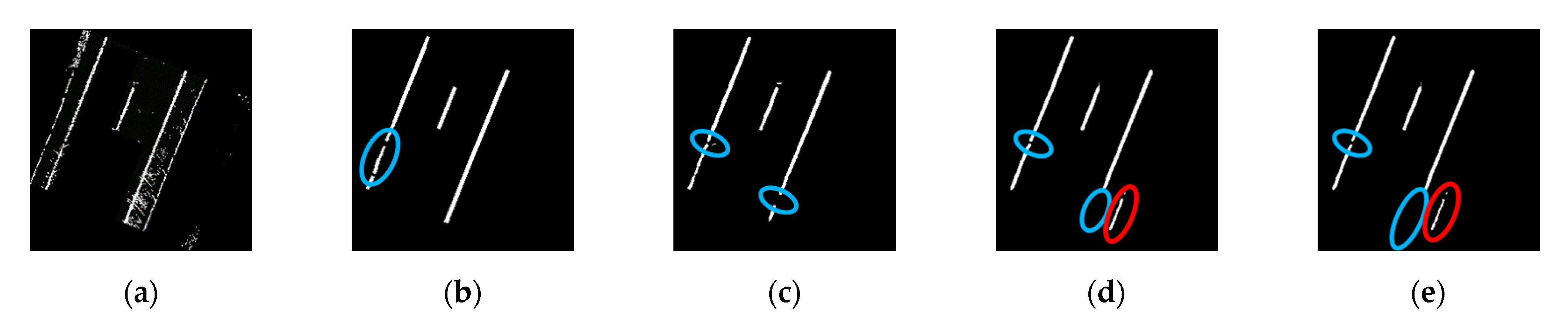

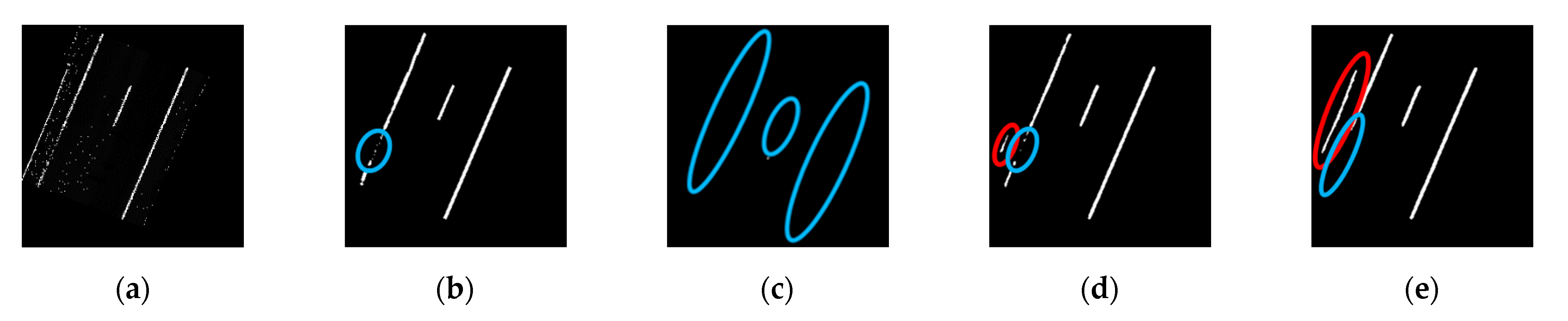

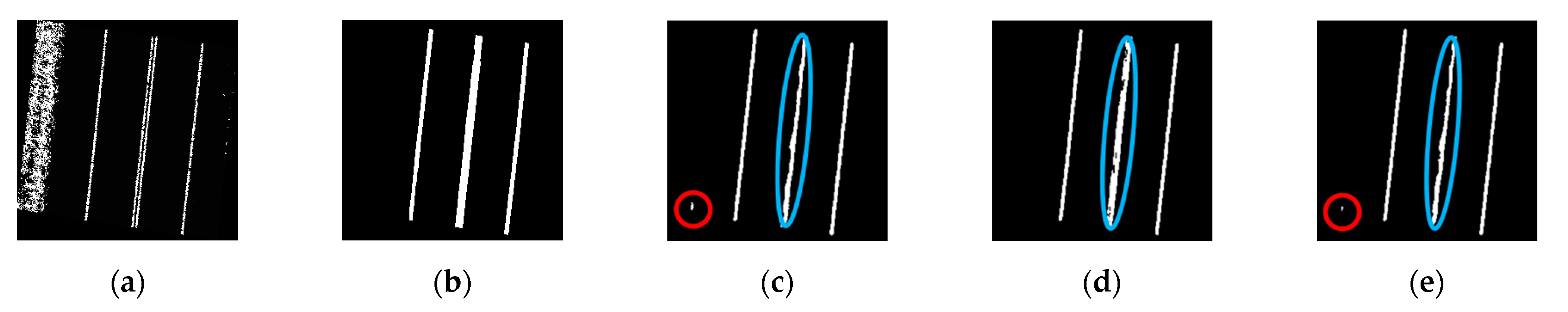

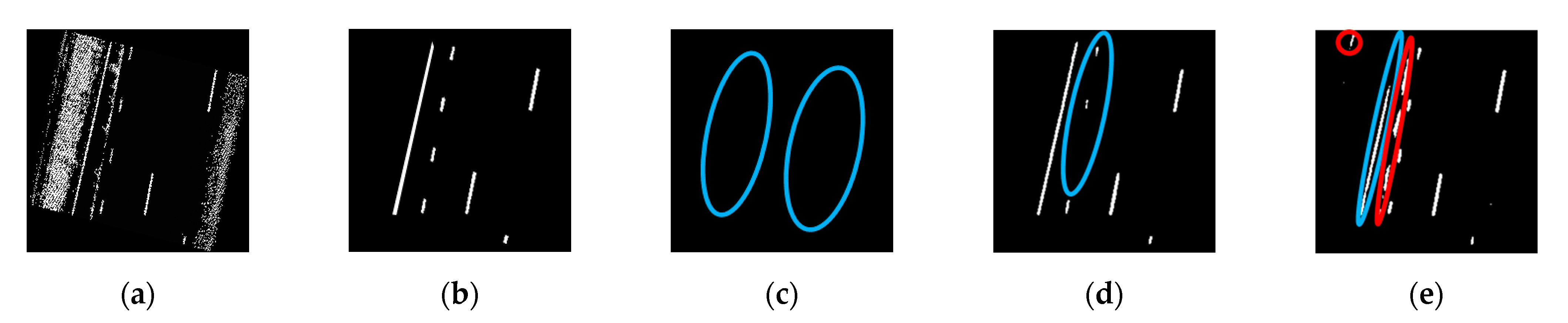

- With the original intensity, Model-G achieves F1-scores of 90.5% and 76.7% for the PWMMS-HA and PWMMS-UHA data, respectively. The lower F1-score for PWMMS-UHA is caused by a larger number of false negatives (hence, lower recall); see the example in Figure 19b.

- After intensity normalization, the F1-scores increase by 5.8 and 15.9 percentage points for the PWMMS-HA and PWMMS-UHA data, respectively. The greater improvement for the PWMMS-UHA data (Figure 21b) is not surprising, since the variability in intensity distributions among the LiDAR units onboard the PWMMS-UHA is larger than that among the PWMMS-HA units, as per the discussion related to Table 4.

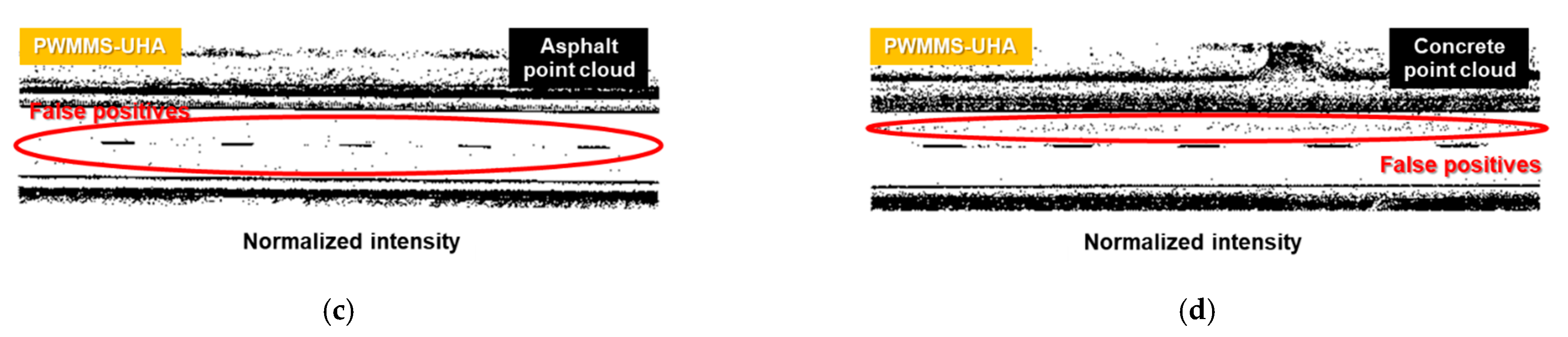

- With the original intensity, a model trained on data from one MMS does not perform well on data from another MMS, as can be observed in Figure 18d and Figure 19c. This is also reflected by the performance metrics shown in Table 8, where the F1-scores of Model-LO-HA and Model-LO-UHA are lower than 1% when tested on data from the other MMS.

- Intensity normalization significantly improves the ability of Model-LN-UHA to handle PWMMS-HA data (the F1-score increases by 86.2 percentage points); see the example in Figure 20d. On the other hand, Model-LN-HA still performs poorly (F1-score lower than 6%) when tested on PWMMS-UHA data, as evident in Figure 21c. A possible reason is that the relatively high noise level in the PWMMS-HA data degrades the quality of lane markings in the intensity images (see the example in Figure 10b).

- Overall, the results suggest that using normalized intensity, a model trained on a higher-resolution system (i.e., Model-LN-UHA) would generalize well to data from a lower-resolution system (i.e., PWMMS-HA)—not the other way around.

- With the original intensity, the fine-tuned models have some ability to detect lane markings in the target domain (Figure 18e and Figure 19e). However, they perform worse than the models trained from scratch. The F1-scores of Model-LO-UHA-HA and Model-LO-HA-UHA are 39.4 and 16.1 percentage points lower than those of Model-LO-HA and Model-LO-UHA when tested on the PWMMS-HA and PWMMS-UHA data, respectively.

- With the normalized intensity, these differences decrease to 2.9 percentage points (Model-LN-UHA-HA vs. Model-LN-HA) and 10.5 percentage points (Model-LN-HA-UHA vs. Model-LN-UHA), respectively, confirming the positive impact of intensity normalization on the fine-tuned models.

- When applied to PWMMS-HA data, the fine-tuned Model-LN-UHA-HA performs only slightly better than Model-LN-UHA (the F1-score increases by 0.9 percentage points). In contrast, when applied to PWMMS-UHA data, Model-LN-HA-UHA improves markedly over Model-LN-HA (the F1-score increases by 66.7 percentage points). This result indicates that fine-tuning is necessary for a model trained on PWMMS-HA data to handle PWMMS-UHA data, whereas Model-LN-UHA can be applied to PWMMS-HA data without fine-tuning.
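The F1-score differences quoted in the bullets above can be re-derived from the US-41/52 values reported in Table 8. The short sketch below does this arithmetic; the dictionary layout and the helper name `gap` are illustrative assumptions, and all differences are absolute differences in percentage points.

```python
# F1-scores (%) copied from Table 8, keyed by (model, test system).
F1 = {
    ("Model-G-original", "HA"): 90.5,
    ("Model-G-original", "UHA"): 76.7,
    ("Model-G-normalized", "HA"): 96.3,
    ("Model-G-normalized", "UHA"): 92.6,
    ("Model-LO-HA", "HA"): 87.7,
    ("Model-LO-UHA", "UHA"): 82.1,
    ("Model-LO-UHA-HA", "HA"): 48.3,
    ("Model-LO-HA-UHA", "UHA"): 66.0,
    ("Model-LN-HA", "HA"): 90.0,
    ("Model-LN-HA", "UHA"): 5.3,
    ("Model-LN-UHA", "UHA"): 82.5,
    ("Model-LN-UHA", "HA"): 86.2,
    ("Model-LN-UHA-HA", "HA"): 87.1,
    ("Model-LN-HA-UHA", "UHA"): 72.0,
}


def gap(a, b):
    """F1[a] - F1[b] in percentage points, rounded to one decimal."""
    return round(F1[a] - F1[b], 1)


deltas = {
    "Model-G gain on HA": gap(("Model-G-normalized", "HA"), ("Model-G-original", "HA")),
    "Model-G gain on UHA": gap(("Model-G-normalized", "UHA"), ("Model-G-original", "UHA")),
    "LO fine-tuned vs scratch (HA)": gap(("Model-LO-HA", "HA"), ("Model-LO-UHA-HA", "HA")),
    "LO fine-tuned vs scratch (UHA)": gap(("Model-LO-UHA", "UHA"), ("Model-LO-HA-UHA", "UHA")),
    "LN fine-tuned vs scratch (HA)": gap(("Model-LN-HA", "HA"), ("Model-LN-UHA-HA", "HA")),
    "LN fine-tuned vs scratch (UHA)": gap(("Model-LN-UHA", "UHA"), ("Model-LN-HA-UHA", "UHA")),
    "fine-tuning gain on HA": gap(("Model-LN-UHA-HA", "HA"), ("Model-LN-UHA", "HA")),
    "fine-tuning gain on UHA": gap(("Model-LN-HA-UHA", "UHA"), ("Model-LN-HA", "UHA")),
}

for name, value in deltas.items():
    print(f"{name}: {value}")
```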

4.3.2. Test on US-231 and I-65/865/465

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jaakkola, A.; Hyyppä, J.; Hyyppä, H.; Kukko, A. Retrieval algorithms for road surface modelling using laser-based mobile mapping. Sensors 2008, 8, 5238–5249.

- Guan, H.; Li, J.; Yu, Y.; Wang, C.; Chapman, M.; Yang, B. Using mobile laser scanning data for automated extraction of road markings. ISPRS J. Photogramm. Remote Sens. 2014, 87, 93–107.

- Teo, T.-A.; Yu, H.-L. Empirical radiometric normalization of road points from terrestrial mobile LiDAR system. Remote Sens. 2015, 7, 6336–6357.

- Cheng, M.; Zhang, H.; Wang, C.; Li, J. Extraction and classification of road markings using mobile laser scanning point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 1182–1196.

- Levinson, J.; Thrun, S. Robust vehicle localization in urban environments using probabilistic maps. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 4372–4378.

- Levinson, J.; Thrun, S. Unsupervised Calibration for Multi-Beam Lasers; Springer: New York, NY, USA, 2014; pp. 179–193.

- Kashani, A.; Olsen, M.; Parrish, C.; Wilson, N. A review of LiDAR radiometric processing: From ad hoc intensity correction to rigorous radiometric calibration. Sensors 2015, 15, 28099–28128.

- Shepard, D. A two-dimensional interpolation function for irregularly-spaced data. In Proceedings of the 1968 23rd ACM National Conference, New York, NY, USA, 27–29 August 1968; pp. 517–524.

- Leica Pegasus:Two Ultimate Mobile Sensor Platform. Available online: https://leica-geosystems.com/en-in/products/mobile-mapping-systems/capture-platforms/leica-pegasus_two-ultimate (accessed on 6 March 2022).

- RIEGL. RIEGL VUX-1 Series Info Sheet. Available online: http://www.riegl.com/uploads/tx_pxpriegldownloads/Infosheet_VUX-1series_2017-12-04.pdf (accessed on 6 March 2022).

- Zoller + Fröhlich GmbH. Z+F PROFILER 9012 Datasheet. Available online: https://www.zf-laser.com/fileadmin/editor/Datenblaetter/Z_F_PROFILER_9012_Datasheet_E_final_compr.pdf (accessed on 6 March 2022).

- Velodyne LiDAR. HDL-32E User Manual and Programming Guide. Available online: https://velodynelidar.com/lidar/products/manual/63-9113%20HDL-32E%20manual_Rev%20E_NOV2012.pdf (accessed on 6 March 2022).

- Wen, C.; Sun, X.; Li, J.; Wang, C.; Guo, Y.; Habib, A. A deep learning framework for road marking extraction, classification and completion from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2019, 147, 178–192.

- Cheng, Y.-T.; Patel, A.; Wen, C.; Bullock, D.; Habib, A. Intensity thresholding and deep learning based lane marking extraction and lane width estimation from mobile light detection and ranging (LiDAR) point clouds. Remote Sens. 2020, 12, 1379.

- Patel, A.; Cheng, Y.-T.; Ravi, R.; Lin, Y.-C.; Bullock, D.; Habib, A. Transfer learning for LiDAR-based lane marking detection and intensity profile generation. Geomatics 2021, 1, 287–309.

- Cui, Y.; Chen, R.; Chu, W.; Chen, L.; Tian, D.; Li, Y.; Cao, D. Deep learning for image and point cloud fusion in autonomous driving: A review. IEEE Trans. Intell. Transp. Syst. 2021, 23, 722–739.

- Novatel IMU-ISA-100C. Available online: https://docs.novatel.com/OEM7/Content/Technical_Specs_IMU/ISA_100C_Overview.htm (accessed on 6 March 2022).

- Velodyne LiDAR. VLP-16 User Manual and Programming Guide. Available online: https://usermanual.wiki/Pdf/VLP1620User20Manual20and20Programming20Guide2063924320Rev20A.1947942715/view (accessed on 6 March 2022).

- Applanix. POSLV Specifications. Available online: https://www.applanix.com/pdf/specs/POSLV_Specifications_dec_2015.pdf (accessed on 6 March 2022).

- Lari, Z.; Habib, A. New approaches for estimating the local point density and its impact on LiDAR data segmentation. Photogramm. Eng. Remote Sens. 2013, 79, 195–207.

- Mahlberg, J.A.; Cheng, Y.-T.; Bullock, D.M.; Habib, A. Leveraging LiDAR intensity to evaluate roadway pavement markings. Future Transp. 2021, 1, 720–736.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens. 2016, 8, 501.

- Lin, Y.-C.; Habib, A. Quality control and crop characterization framework for multi-temporal UAV LiDAR data over mechanized agricultural fields. Remote Sens. Environ. 2021, 256, 112299.

- FHWA. Manual on Uniform Traffic Control Devices 2009; U.S. Department of Transportation: Washington, DC, USA, 2009.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; pp. 226–231.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. 2017, 42, 19.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Amiri, M.; Brooks, R.; Rivaz, H. Fine tuning U-net for ultrasound image segmentation: Which layers? In Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data; Springer: New York, NY, USA, 2019; pp. 235–242.

- Ma, L.; Li, Y.; Li, J.; Yu, Y.; Junior, J.M.; Gonçalves, W.N.; Chapman, M.A. Capsule-based networks for road marking extraction and classification from mobile LiDAR point clouds. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1981–1995.

- Fuchs, D.J. The dangers of human-like bias in machine-learning algorithms. Mo. ST’s Peer Peer 2018, 2, 1.

- Howard, A.; Zhang, C.; Horvitz, E. Addressing bias in machine learning algorithms: A pilot study on emotion recognition for intelligent systems. In Proceedings of the 2017 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), Austin, TX, USA, 8–10 March 2017; pp. 1–7.

- Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Romano, J.D.; Le, T.T.; Fu, W.; Moore, J.H. Is deep learning necessary for simple classification tasks? arXiv 2020, arXiv:2006.06730.

- Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep learning for lidar point clouds in autonomous driving: A review. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3412–3432.

| LiDAR Sensors | Riegl VUX 1HA (PWMMS-UHA) | Z+F Profiler 9012 (PWMMS-UHA) | Velodyne VLP-16 Hi-Res (PWMMS-HA) | Velodyne HDL-32E (PWMMS-HA) |
|---|---|---|---|---|
| No. of laser beams | 1 | 1 | 16 | 32 |
| Pulse repetition rate (points/s) | Up to 1,000,000 | Up to 1,000,000 | ~300,000 | ~695,000 |
| Maximum range | 135 m | 119 m | 100 m | 100 m |
| Range accuracy | 5 mm | 2 mm | 3 cm | cm |

| Location and WGS 84 Coordinates | Date and Acquisition Duration | Asphalt Length (Mile) | Concrete Length (Mile) | Total Length (Mile) | Driving Speed (mph) |
|---|---|---|---|---|---|
| US-41/52; Start: 40°28′03″N, 86°59′17″W; End: 41°34′28″N, 87°28′51″W | 15 July 2021; Duration: ~1.3 h | 19 | 51 | 70 | ~55 |
| US-231; Start: 40°28′03″N, 86°59′17″W; End: 40°04′46″N, 86°54′15″W | 29 October 2021; Duration: ~0.5 h | 13 | 15 | 28 | ~54 |
| I-65/865/465; Start: 40°28′03″N, 86°59′12″W; End: 39°47′56″N, 86°02′06″W | 23 February 2021; Duration: ~1.4 h | 31 | 39 | 70 | ~50 |

| MMS | LiDAR Unit | Intra-Sensor Grid Size (m) | Inter-Sensor/Intra-System Grid Size (m) | Inter-System Grid Size (m) |
|---|---|---|---|---|
| PWMMS-UHA | left LiDAR sensor (Riegl) 1 | N/A | 0.10 | 0.05 |
| PWMMS-UHA | right LiDAR sensor (Z+F) 1 | N/A | 0.10 | 0.05 |
| PWMMS-HA | rear left LiDAR sensor (HDL-32E) 2 | 0.20 | 0.15 | 0.05 |
| PWMMS-HA | front left LiDAR sensor (HDL-32E) 2 | 0.20 | 0.15 | 0.05 |
| PWMMS-HA | rear right LiDAR sensor (HDL-32E) 2 | 0.20 | 0.15 | 0.05 |
| PWMMS-HA | front right LiDAR sensor (VLP-16 Hi-Res) 2 | 0.30 | 0.15 | 0.05 |

| Intensity | Class | Region | PWMMS-UHA: Riegl VUX-1HA (Mean ± STD) | PWMMS-UHA: Z+F Profiler 9012 (Mean ± STD) | PWMMS-HA: Velodyne HDL-32E (Mean ± STD) | PWMMS-HA: VLP-16 Hi-Res (Mean ± STD) |
|---|---|---|---|---|---|---|
| Original | Pavement | Asphalt | 105 ± 11 | 48 ± 21 | 11 ± 8 | 5 ± 4 |
| Original | Pavement | Concrete | 129 ± 12 | 54 ± 23 | 21 ± 6 | 6 ± 4 |
| Original | Lane marking | Asphalt | 160 ± 18 | 231 ± 40 | 69 ± 15 | 56 ± 21 |
| Original | Lane marking | Concrete | 158 ± 17 | 234 ± 35 | 58 ± 18 | 46 ± 20 |
| Normalized | Pavement | Asphalt | 75 ± 3 | 71 ± 3 | 74 ± 4 | 79 ± 2 |
| Normalized | Pavement | Concrete | 76 ± 4 | 71 ± 3 | 74 ± 4 | 79 ± 2 |
| Normalized | Lane marking | Asphalt | 88 ± 8 | 88 ± 2 | 85 ± 3 | 92 ± 4 |
| Normalized | Lane marking | Concrete | 87 ± 4 | 88 ± 2 | 84 ± 5 | 89 ± 4 |

| Threshold Description | Value |
|---|---|
| Distance threshold for scan-line-based outlier removal | 20 cm |
| Neighborhood distance threshold for DBSCAN | 6.5 cm |
| Minimum number of points threshold for DBSCAN | 10 points |
| Normal distance threshold for geometry-based outlier removal | 10 cm |
| Linearity ratio threshold for geometry-based outlier removal | 0.8 |
| Distance threshold for local refinement | 2.5 cm |
| Distance threshold for global refinement | 2.5 cm |
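To illustrate how the two DBSCAN thresholds listed above interact, the following is a minimal brute-force DBSCAN sketch in pure Python. It is not the authors' implementation: the function name, the O(n²) neighbor search, and the synthetic points are assumptions for the example; only the 6.5 cm neighborhood distance and the 10-point minimum are taken from the threshold table.

```python
from math import dist

EPS_M = 0.065    # neighborhood distance threshold (6.5 cm), from the table
MIN_POINTS = 10  # minimum number of points threshold, from the table


def dbscan(points, eps=EPS_M, min_points=MIN_POINTS):
    """Return one label per point: a cluster index >= 0, or -1 for noise."""
    NOISE = -1
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Brute-force range query; the point itself counts as a neighbor.
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_points:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_points:  # j is a core point: keep expanding
                queue.extend(nbrs)
    return labels


# Twenty points spaced 1 cm apart (a dense, marking-like strip) plus one stray point.
strip = [(0.01 * k, 0.0) for k in range(20)]
labels = dbscan(strip + [(1.0, 1.0)])
print(labels)
```

With these thresholds, a point needs at least ten points (itself included) within 6.5 cm to seed or extend a cluster, so sparse isolated returns are labeled as noise while dense strips are grouped together.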

| | Original Intensity | Normalized Intensity |
|---|---|---|
| Trained on PWMMS-HA | Model-LO-HA (training: 1220; validation: 150) | Model-LN-HA (training: 1220; validation: 150) |
| Trained on PWMMS-UHA | Model-LO-UHA (training: 1220; validation: 150) | Model-LN-UHA (training: 1220; validation: 150) |
| Fine-tuned on PWMMS-UHA 1 | Model-LO-HA-UHA (fine-tuning: 252; validation: 30) | Model-LN-HA-UHA (fine-tuning: 252; validation: 30) |
| Fine-tuned on PWMMS-HA 1 | Model-LO-UHA-HA (fine-tuning: 252; validation: 30) | Model-LN-UHA-HA (fine-tuning: 252; validation: 30) |

| Dataset (PWMMS-UHA and HA) | No. of Testing Images 1 in Concrete Pavement Area | No. of Testing Images 1 in Asphalt Pavement Area | Total No. of Testing Images 1 |
|---|---|---|---|
| US-41/52 | 187 | 113 | 300 |
| US-231 | 78 | 72 | 150 |
| I-65/865/465 | 167 | 133 | 300 |

| Model | Test Data: Intensity | Test Data: System | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Model-G | Original | PWMMS-HA | 90.3 | 91.0 | 90.5 |
| Model-G | Original | PWMMS-UHA | 92.7 | 66.7 | 76.7 |
| Model-G | Normalized | PWMMS-HA | 97.7 | 95.2 | 96.3 |
| Model-G | Normalized | PWMMS-UHA | 95.7 | 90.5 | 92.6 |
| Model-LO-HA | Original | PWMMS-HA | 92.9 | 83.5 | 87.7 |
| Model-LO-HA | Original | PWMMS-UHA | 5.6 | 0.5 | 0.9 |
| Model-LO-UHA | Original | PWMMS-UHA | 77.7 | 89.7 | 82.1 |
| Model-LO-UHA | Original | PWMMS-HA | 4.1 | <0.1 | <0.1 |
| Model-LN-HA | Normalized | PWMMS-HA | 92.4 | 88.1 | 90.0 |
| Model-LN-HA | Normalized | PWMMS-UHA | 18.8 | 3.5 | 5.3 |
| Model-LN-UHA | Normalized | PWMMS-UHA | 90.3 | 75.4 | 82.5 |
| Model-LN-UHA | Normalized | PWMMS-HA | 85.5 | 88.1 | 86.2 |
| Model-LO-UHA-HA | Original | PWMMS-HA | 63.6 | 41.4 | 48.3 |
| Model-LO-HA-UHA | Original | PWMMS-UHA | 83.9 | 58.9 | 66.0 |
| Model-LN-UHA-HA | Normalized | PWMMS-HA | 84.7 | 90.5 | 87.1 |
| Model-LN-HA-UHA | Normalized | PWMMS-UHA | 91.5 | 62.0 | 72.0 |

| Location | Model | Test Data (Normalized Intensity) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| US-231 | Model-G | PWMMS-HA | 93.1 | 98.8 | 96.5 |
| US-231 | Model-G | PWMMS-UHA | 91.7 | 98.3 | 95.5 |
| US-231 | Model-LN-HA | PWMMS-HA | 89.8 | 73.8 | 80.4 |
| US-231 | Model-LN-HA | PWMMS-UHA | 9.3 | 5.1 | 6.5 |
| US-231 | Model-LN-UHA | PWMMS-UHA | 87.8 | 67.5 | 75.5 |
| US-231 | Model-LN-UHA | PWMMS-HA | 84.9 | 78.6 | 81.4 |
| US-231 | Model-LN-UHA-HA | PWMMS-HA | 84.3 | 70.2 | 79.7 |
| US-231 | Model-LN-HA-UHA | PWMMS-UHA | 93.0 | 62.4 | 73.1 |
| I-65/865/465 | Model-G | PWMMS-HA | 92.3 | 98.6 | 95.3 |
| I-65/865/465 | Model-G | PWMMS-UHA | 90.0 | 98.5 | 94.1 |
| I-65/865/465 | Model-LN-HA | PWMMS-HA | 86.3 | 56.5 | 66.2 |
| I-65/865/465 | Model-LN-HA | PWMMS-UHA | 7.6 | 5.3 | 6.2 |
| I-65/865/465 | Model-LN-UHA | PWMMS-UHA | 77.1 | 66.4 | 69.1 |
| I-65/865/465 | Model-LN-UHA | PWMMS-HA | 85.2 | 73.5 | 78.3 |
| I-65/865/465 | Model-LN-UHA-HA | PWMMS-HA | 89.3 | 67.3 | 75.8 |
| I-65/865/465 | Model-LN-HA-UHA | PWMMS-UHA | 66.7 | 60.4 | 61.3 |

| Approach | Time Taken (s) for 1-Mile-Long Lane Marking Detection | Platform |
|---|---|---|
| Geometric/morphological 1 | ~450 | 32 GB RAM computer |
| Deep/transfer-learning-based 1 | ~25 | Google Colaboratory |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, Y.-T.; Lin, Y.-C.; Habib, A. Generalized LiDAR Intensity Normalization and Its Positive Impact on Geometric and Learning-Based Lane Marking Detection. Remote Sens. 2022, 14, 4393. https://doi.org/10.3390/rs14174393
