Comparison of Different Transfer Learning Methods for Classification of Mangrove Communities Using MCCUNet and UAV Multispectral Images

Abstract

1. Introduction

2. Materials and Methods

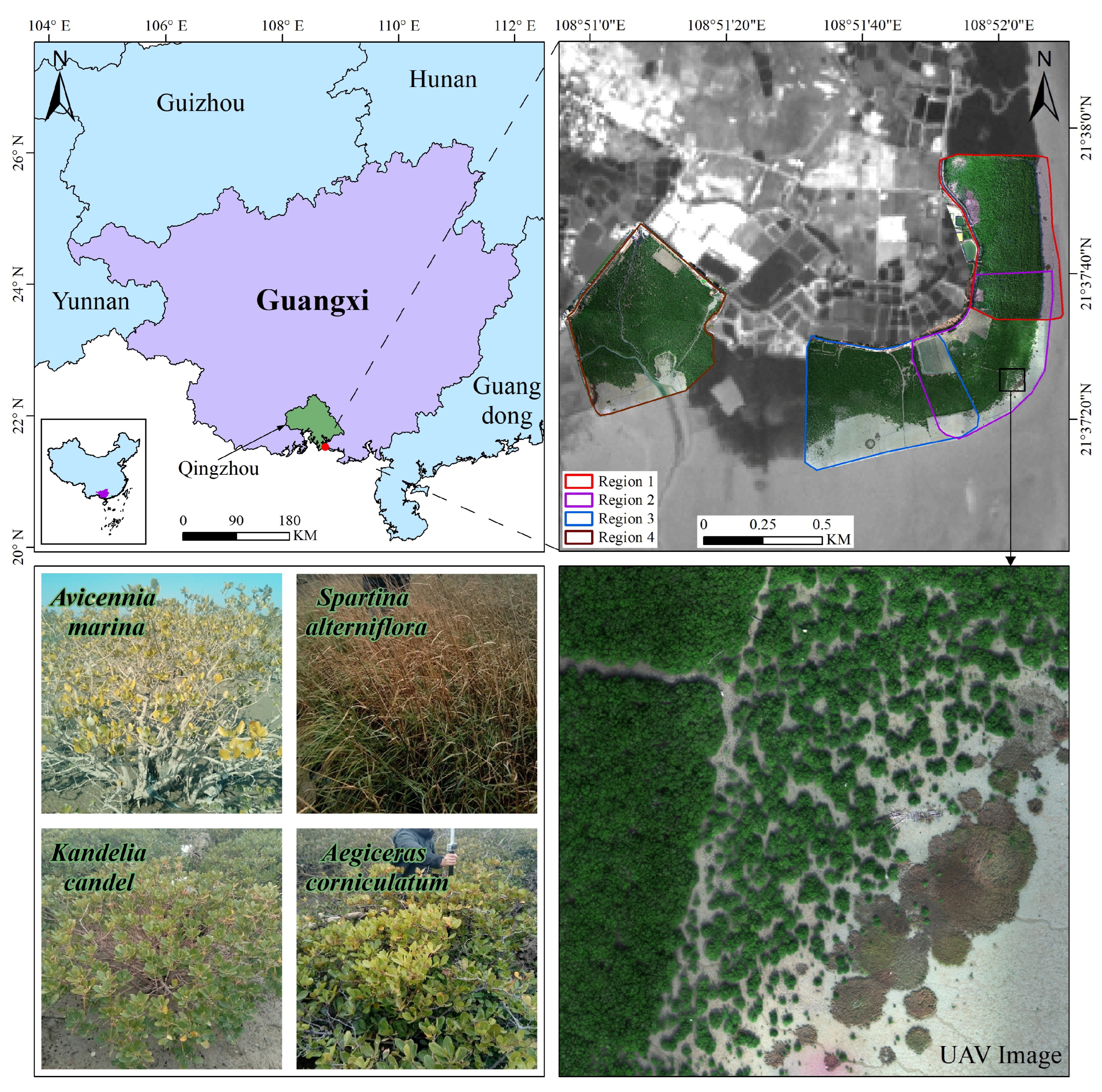

2.1. Study Area

2.2. Data Source

2.2.1. UAV Data Acquisition and Preprocessing

2.2.2. Field Investigation and Generation of a Semantic Label

2.3. Methods

2.3.1. Construction and Dimension Reduction of Multidimensional Image Datasets

2.3.2. Construction of Mangrove Community Classification Model Based on CNN Algorithms

- DeepLabV3+

- HRNet

- MCCUNet

- (1) As shown in Figure 6, we replaced the 3 × 3 depth-wise convolutions used for down-sampling in Xception, the encoder's backbone network, with mixed depth-wise convolutions (MixConv) [35]. MixConv adds larger convolution kernels, which enlarge the receptive field and thereby improve performance. To add larger kernels without greatly increasing the number of parameters, the number of kernel sizes increased layer by layer, from two in the first down-sampling layer to four in the third, and the channels were divided among the kernel sizes by exponential partition (for example, a down-sampling layer with four kernel sizes and 64 channels in total assigns (32, 16, 8, 8) channels to its kernels). In addition, the convolutions in ASPP were replaced with depth-wise separable convolutions to reduce the number of parameters while maintaining accuracy.

- (2) To capture edge details and further improve segmentation, more low-level features were fed into the decoder (Figure 5) than in the original DeepLabV3+ design: after the improvement, the decoder receives low-level features at 1/2, 1/4, and 1/8 of the input resolution. So that these features would not dominate the high-level semantic features, the channel count of each of the three low-level features was reduced to 48 before they were merged with the high-level semantic features. The merged features were then refined by two 3 × 3 depth-wise separable convolutions.
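
The channel split and the parameter savings described above can be checked with a few lines of arithmetic. The Python sketch below is illustrative rather than the MCCUNet implementation: it assumes a halving rule that reproduces the (32, 16, 8, 8) example, hypothetical kernel sizes of 3, 5, 7, and 9, a hypothetical ASPP branch with 2048 input and 256 output channels, and a 256-channel high-level feature map in the decoder; bias terms are ignored.

```python
"""Channel and parameter bookkeeping for the encoder/decoder changes.

Illustrative sketch only: kernel sizes, channel counts, and the ASPP input
width are assumptions, not values taken from the MCCUNet code.
"""


def exponential_partition(total_channels: int, num_groups: int) -> list[int]:
    """Give each kernel size half of the still-unassigned channels.

    The last group takes whatever remains, so 64 channels split into
    4 groups gives [32, 16, 8, 8], matching the example in the text.
    """
    splits = []
    remaining = total_channels
    for _ in range(num_groups - 1):
        share = remaining // 2
        splits.append(share)
        remaining -= share
    splits.append(remaining)
    return splits


def mixconv_params(channels: list[int], kernel_sizes: list[int]) -> int:
    """Weights of a mixed depth-wise conv: one k x k filter per channel."""
    return sum(c * k * k for c, k in zip(channels, kernel_sizes))


def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Depth-wise k x k conv followed by a 1 x 1 point-wise conv (no bias)."""
    return k * k * c_in + c_in * c_out


def decoder_fused_channels(high_level: int, num_low_level: int = 3,
                           reduced: int = 48) -> int:
    """Channels after concatenating high-level and reduced low-level maps."""
    return high_level + num_low_level * reduced


if __name__ == "__main__":
    split = exponential_partition(64, 4)
    # Hypothetical kernel sizes; the smallest kernel gets the most channels.
    print(split, mixconv_params(split, [3, 5, 7, 9]))  # [32, 16, 8, 8] 1728
    # Hypothetical ASPP branch: 2048 input channels -> 256 output channels.
    print(conv_params(3, 2048, 256), separable_conv_params(3, 2048, 256))  # 4718592 542720
    # Assumed 256-channel high-level map plus three 48-channel low-level maps.
    print(decoder_fused_channels(256))  # 400
```

Under these assumptions, the mixed kernel uses 1,728 weights instead of the 5,184 needed by a uniform 9 × 9 depth-wise kernel over 64 channels, and the separable ASPP branch needs about 11.5% of the weights of a standard 3 × 3 convolution.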

- Models for the classification of mangrove communities

- (1) Same algorithm, different feature datasets: taking the MCCUNet algorithm as an example, we compared Scenarios 2 and 1 in Group 3 to assess the effect of adding TFs on the classification of mangrove communities, Scenarios 3 and 1 to assess the effect of adding VIs, and Scenarios 4 and 1 to assess the effect of adding both TFs and VIs.

- (2) Same feature dataset, different algorithms: taking the OSTV feature dataset as an example, we compared Scenario 4 of Groups 1–3 to explore differences in the classification performance of DeepLabV3+, HRNet, and MCCUNet, and thereby evaluated the effectiveness of the improved MCCUNet.
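
The two comparison designs reduce to set differences between scenarios and pairings between algorithm groups. A minimal Python sketch, assuming that Groups 1 and 2 correspond to DeepLabV3+ and HRNet (only Group 3 = MCCUNet is stated above); `SCENARIOS`, `GROUPS`, and the helper functions are hypothetical names used for illustration.

```python
"""Hypothetical encoding of the comparison design in Section 2.3.2."""

# Feature groups per scenario, following the scenario table (OS = DOM + DSM).
SCENARIOS = {
    1: ("OS", frozenset({"DOM", "DSM"})),
    2: ("OST", frozenset({"DOM", "DSM", "TFs"})),
    3: ("OSV", frozenset({"DOM", "DSM", "VIs"})),
    4: ("OSTV", frozenset({"DOM", "DSM", "TFs", "VIs"})),
}

# Group 3 is MCCUNet according to the text; the order of Groups 1 and 2
# (DeepLabV3+, HRNet) is an assumption.
GROUPS = {1: "DeepLabV3+", 2: "HRNet", 3: "MCCUNet"}


def added_features(scenario: int, baseline: int = 1) -> set[str]:
    """Feature groups present in `scenario` but absent from `baseline`."""
    return set(SCENARIOS[scenario][1] - SCENARIOS[baseline][1])


def feature_comparisons(group: int = 3) -> list[str]:
    """Comparison (1): same algorithm, Scenarios 2-4 each against Scenario 1."""
    algorithm = GROUPS[group]
    return [
        f"{algorithm}: {SCENARIOS[s][0]} vs OS isolates "
        + " + ".join(sorted(added_features(s)))
        for s in (2, 3, 4)
    ]


def algorithm_comparisons(scenario: int = 4) -> list[tuple[str, str, str]]:
    """Comparison (2): same feature dataset, every pair of Groups 1-3."""
    dataset = SCENARIOS[scenario][0]
    names = [GROUPS[g] for g in sorted(GROUPS)]
    return [(dataset, a, b) for i, a in enumerate(names) for b in names[i + 1:]]


if __name__ == "__main__":
    for line in feature_comparisons():
        print(line)
    print(algorithm_comparisons())
```

Encoding scenarios as sets makes explicit that each comparison against Scenario 1 isolates exactly the feature groups that were added.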

2.3.3. Transfer Learning Strategies for Mangrove Communities

- Frozen Transfer Learning (F-TL)

- Fine-Tuned Transfer Learning (Ft-TL)

- Sensor-and-Phase Transfer Learning (SaP-TL)

2.3.4. Accuracy Assessment

3. Results

3.1. Results Analysis of Mangrove-Community Classification with Different Feature Datasets

3.2. Evaluation of Classification Performance Using the MCCUNet Algorithm

- (1) In Region 1, all three algorithms achieved satisfactory OA with all four feature datasets, with every OA value above 75%. The OA of MCCUNet was more stable across the four feature datasets than that of DeepLabV3+ and HRNet, and MCCUNet achieved the highest OA (96.7%), which was 3.5% and 4.08% higher than those of DeepLabV3+ and HRNet, respectively. The largest OA difference between MCCUNet and DeepLabV3+ (13.59%) occurred with the OSV feature dataset, and the classification results of the two algorithms differed significantly at the 95% confidence level (Table 7). The largest OA difference between MCCUNet and HRNet (8.93%) occurred with the OSTV feature dataset, and their classification results also differed significantly at the 95% confidence level (Table 7).

- (2) In Region 4, the lowest OA among the three algorithms was 90%, and the OA of DeepLabV3+ and HRNet varied less across feature datasets than in Region 1. All three algorithms achieved their highest OA with the OSTV feature dataset; the highest OA of MCCUNet (97.24%) was 1.03% and 2.07% higher than those of DeepLabV3+ and HRNet, respectively. Furthermore, McNemar's chi-square test (Table 7) showed that, at the 95% confidence level, the classification results of MCCUNet and HRNet differed significantly with the OSTV feature dataset, whereas those of MCCUNet and DeepLabV3+ did not.
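
For reference, McNemar's test compares two classifiers on the same validation samples using only the discordant counts: b, the samples that one model classifies correctly and the other does not, and c, the reverse. A minimal Python sketch, assuming the continuity-corrected statistic χ² = (|b - c| - 1)² / (b + c) with one degree of freedom, so that a difference is significant at the 95% confidence level when χ² > 3.841; whether the study applied the continuity correction is not stated here, and the counts in the example are hypothetical.

```python
import math


def mcnemar(b: int, c: int, continuity: bool = True) -> tuple[float, float]:
    """McNemar's chi-square test (1 degree of freedom).

    b: samples classified correctly by model A but not by model B.
    c: samples classified correctly by model B but not by model A.
    Returns the chi-square statistic and its p-value.
    """
    if b + c == 0:
        raise ValueError("no discordant samples; the test is undefined")
    diff = abs(b - c)
    if continuity:  # Edwards' continuity correction
        diff = max(diff - 1, 0)
    chi2 = diff * diff / (b + c)
    # A chi-square variable with 1 df is Z**2, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))


def discordant_counts(reference, pred_a, pred_b) -> tuple[int, int]:
    """Count b and c from per-sample reference labels and two predictions."""
    b = c = 0
    for ref, ya, yb in zip(reference, pred_a, pred_b):
        if ya == ref and yb != ref:
            b += 1
        elif ya != ref and yb == ref:
            c += 1
    return b, c


if __name__ == "__main__":
    # Hypothetical discordant counts, not values from Table 7.
    chi2, p = mcnemar(30, 12)
    print(f"chi2 = {chi2:.3f}, p = {p:.4f}, significant: {chi2 > 3.841}")
```

Because only b and c enter the statistic, two algorithms with similar OA can still differ significantly if they err on different samples.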

- (1) In Region 1 (Figure 13a,b), with the OST feature dataset (Figure 13a), the three algorithms differed considerably in their ability to identify the DM. MCCUNet identified the DM best: the overall distribution and boundary contour of the DM in its results were relatively complete, whereas DeepLabV3+ misclassified most of the DM as MF, as the confusion matrix also shows (Figure 14a). Compared with the MCCUNet results, the edges and interior of the DM in the HRNet results were confused with AF and TV. With the OSTV dataset (Figure 13b), the three algorithms depicted KC somewhat differently. MCCUNet outperformed the others in identifying KC, whereas DeepLabV3+ and HRNet confused AM and KC, consistent with KC being misclassified as AM in the confusion matrix (Figure 14b).

- (2) In Region 4 (Figure 13c,d), with the OST feature dataset (Figure 13c), the three algorithms again differed considerably in identifying the DM. The distribution of the DM was most complete in the MCCUNet result, whereas both DeepLabV3+ and HRNet misclassified the DM as TV and MF. In addition, DeepLabV3+ and HRNet misclassified AC as KC to different degrees, whereas this confusion was greatly reduced in the MCCUNet result, consistent with the corresponding confusion matrix (Figure 14c). With the OSTV feature dataset (Figure 13d), the three algorithms depicted the distribution of AC differently. MCCUNet identified AC best, confusing only a few instances of AC and KC, whereas the confusion between AC and KC was more severe for DeepLabV3+ and HRNet, as also reflected in the corresponding confusion matrix (Figure 14d).
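
The OA values and per-class F1–scores discussed in these results are both read off a confusion matrix. A minimal Python sketch, assuming rows hold reference classes and columns hold predicted classes (the per-class F1–score is unaffected if the orientation is swapped, since FP and FN simply trade places); the matrix values are hypothetical.

```python
def overall_accuracy(cm: list[list[int]]) -> float:
    """OA: correctly classified samples (the diagonal) over all samples."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def f1_scores(cm: list[list[int]], labels: list[str]) -> dict[str, float]:
    """Per-class F1 = 2TP / (2TP + FP + FN).

    Assumes rows are reference classes and columns are predicted classes.
    """
    scores = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                 # reference class i predicted as another class
        fp = sum(row[i] for row in cm) - tp  # other classes predicted as class i
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


if __name__ == "__main__":
    labels = ["AM", "KC", "AC"]
    # Hypothetical counts: 10 KC samples predicted as AM, 5 AM samples as KC.
    cm = [[50, 5, 0],
          [10, 40, 0],
          [0, 0, 45]]
    print(overall_accuracy(cm))  # 0.9
    print({k: round(v, 4) for k, v in f1_scores(cm, labels).items()})
    # {'AM': 0.8696, 'KC': 0.8421, 'AC': 1.0}
```

In this toy matrix, the AM/KC confusion lowers the F1–scores of both classes while OA still reaches 90%, which is why class-level F1–scores are reported alongside OA.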

3.3. Evaluation of the Effect of Different Transfer-Learning Strategies on Mapping Mangrove Communities

3.3.1. Classification Results of Mangrove Communities Based on the Frozen-Transfer-Learning Strategy

- (1) In Region 2 (Figure 15), AM and KC were classified accurately: both had F1–scores above 80%, and both achieved their highest F1–scores with the OS dataset (92.73% and 94.16%, respectively). Among the non-mangrove classes, the F1–scores of the MF and SA fluctuated widely, with differences of 26.55% and 44.53%, respectively, between their highest and lowest values.

- (2) Compared with Region 2, the classification accuracy of both AM and KC improved in Region 3 (Figure 15), with F1–scores above 90%: AM achieved its highest F1–score (93.8%) with the OSV feature dataset, and KC achieved its highest (99.5%) with the OST feature dataset. Among the non-mangrove classes, the F1–score of the MF was more stable in Region 3, with a difference of 4.65% between its highest and lowest values, whereas the F1–score of SA varied greatly, from a low of 15.09% to a high of 59.38%.

- (1) In Region 2 (Figure 16a,b), the classification results of the four feature datasets depicted AM and KC quite differently (Figure 16a). The OST feature dataset performed poorly, misclassifying most KC as AM; this improved with the other three feature datasets. However, compared with the OSV feature dataset, the OS and OSTV feature datasets misclassified some AM as KC. The four feature datasets also differed in how they depicted SA (Figure 16b). With the OSTV feature dataset, most of the SA was misclassified as MF; this improved to some extent with the OSV feature dataset, although the overall outline of the SA remained incomplete. With the OS dataset, the SA edge was relatively complete, although a small part of its interior was still misclassified as MF, and with the OST dataset, the missing interior and edges of the SA were almost entirely resolved.

- (2) In Region 3 (Figure 16c,d), the classification results of the four feature datasets differed in how they depicted the WB and SA. As shown in Figure 16c, the result from the OST feature dataset was poor: most of the WB was misclassified as MF, although the isolated WB in the lower-left corner was relatively complete. With the OS feature dataset, the tributary could not be identified, whereas with the OSV and OSTV feature datasets the WB was well identified, although the OSTV feature dataset appeared to overestimate the WB. Figure 16d shows that most of the SA was misclassified as MF in all classification results. With every feature dataset except OSV, some SA was also misclassified as AM, and with every feature dataset except OST, some SA was misclassified as KC.

3.3.2. Classification Results of Mangrove Communities Based on the Fine-Tuned Transfer Learning Strategy

- (1) In Region 2 (Figure 18), both mangrove communities (AM and KC) achieved good F1–scores, above 84%. AM achieved its highest F1–score (93.04%) with the OSV feature dataset, while KC achieved its highest (94.69%) with the OS feature dataset. Among the non-mangrove classes, the F1–scores of the MF and SA fluctuated considerably: the MF achieved its highest and lowest F1–scores with the OS and OSTV feature datasets, respectively, a difference of 22.25%, while SA achieved its highest and lowest with the OST and OSTV feature datasets, respectively, a difference of 29.61%.

- (2) In Region 3 (Figure 18), the F1–scores of the mangrove communities (AM and KC) were above 90%, higher than in Region 2; KC and AM achieved their highest F1–scores, 98.02% and 92.87%, with the OS and OST feature datasets, respectively. Among the non-mangrove classes, the F1–score of the MF was more stable in Region 3 than in Region 2, with a difference of 6.55% between its highest and lowest values. The F1–score of SA varied more, from a high of 68.42% (OS feature dataset) to a low of 40% (OSTV feature dataset).

- (1) In Region 2 (Figure 19a,b), after transfer learning, the classification results of the four feature datasets differed considerably in the distribution ranges of AM and KC (Figure 19a). With the OST feature dataset, the distributions of AM and KC deviated considerably, with some KC misclassified as AM; this improved to varying degrees with the remaining feature datasets. However, the OS and OSTV feature datasets overestimated KC, whereas the OSV feature dataset depicted the distribution of AM and KC more accurately. The four feature datasets also differed markedly in the distribution range of SA (Figure 19b): all four misclassified SA as MF, and their accuracy in depicting SA ranked, from high to low, OST > OS > OSV > OSTV. In the OST result, a small part of SA was also misclassified as AM.

- (2) In Region 3 (Figure 19c,d), the classification results of the four feature datasets differed considerably in depicting the WB and SA. As shown in Figure 19c, part of the WB was misclassified as MF with the OST feature dataset. This improved with the remaining feature datasets, although the OSTV feature dataset overestimated the WB. The OS and OSV feature datasets depicted the WB similarly, but with the OS feature dataset there was a hole in the middle of the WB (a small part of the WB was misclassified as MF), which was improved with the OSV feature dataset. As shown in Figure 19d, most of the SA was misclassified as MF with all four feature datasets; the OS feature dataset classified SA best, followed by the OSV feature dataset, while the OSTV feature dataset was unsatisfactory.

3.3.3. Classification Results of Mangrove Communities Based on the Sensor-and-Phase Transfer-Learning Strategy

- (1) In Region 1 (Figure 21), the F1–scores of the three mangrove communities (AM, KC, and AC) were above 85%, and all three achieved their highest F1–scores with the OS feature dataset. The F1–score of AC varied least (a difference of 2.44% between its highest and lowest values), and that of KC varied most (10.07%). Among the non-mangrove classes, the F1–scores of the MF and the WB followed similar trends: both were lowest with the OST feature dataset and highest with the OSTV feature dataset, with differences of 14.51% and 30.88%, respectively, between their highest and lowest values.

- (2) In Region 4 (Figure 21), the F1–scores of AM and AC were above 70%, whereas the F1–score of KC fell as low as 16.67% with the OST feature dataset. Compared with Region 1, the F1–scores of AM and AC fluctuated more, with differences of 23.03% and 15.42%, respectively, between their highest and lowest values. Among the non-mangrove classes, the F1–scores of the MF and the WB followed the same trend across the four feature datasets: both were lowest with the OST feature dataset and highest with the OSV feature dataset, with differences of 5.69% and 6.17%, respectively.

- (1) In Region 1 (Figure 22a,b), the classification results of the four feature datasets differed markedly in the distribution range of KC (Figure 22a). KC was misclassified as AM in all classification results, and the feature datasets ranked by KC classification accuracy, from high to low, as OS > OSV > OSTV > OST. All feature datasets except OSV also misclassified KC as AC. The four feature datasets also differed greatly in their ability to recognize the WB (Figure 22b). The OSTV feature dataset classified the WB best, with a relatively clear edge contour and almost no missing edges, whereas with the remaining feature datasets most of the WB was misclassified as MF and much of the WB contour was missing.

- (2) In Region 4 (Figure 22c,d), the classification results of the four feature datasets differed considerably in the distribution ranges of KC and AM (Figure 22c). The OST feature dataset misclassified most of the KC as AM; this was greatly improved with the remaining feature datasets, although the OS and OSTV feature datasets confused some KC and AC. The OSV feature dataset depicted KC most accurately. The four feature datasets also depicted the distribution range of AC differently (Figure 22d). With the OS and OST feature datasets, some AC was misclassified as AM; this improved to some extent with the other two feature datasets, although with the OSTV feature dataset some AM was misclassified as KC. In contrast, the OSV feature dataset depicted the distribution range of AC more accurately.

3.3.4. Statistical Analysis of the Classification Accuracy of Mangrove Communities for Three Transfer-Learning Strategies

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Vegetation Indices and Calculation Formula

| Indices | Abbreviations | Formulas |
|---|---|---|
| Anthocyanin Reflectance Index 1 | ARI1 | |
| Anthocyanin Reflectance Index 2 | ARI2 | |
| Atmospherically Resistant Vegetation Index | ARVI | |
| Blue–Green Ratio Index | BGRI | |
| Color Index of Vegetation | CIVE | |
| Difference Vegetation Index | DVI | |
| Enhanced Vegetation Index | EVI | |
| Excess Green Index | ExG | |
| Excess Green minus Excess Red | ExGR | |
| Green Atmospherically Resistant Index | GARI | |
| Green Difference Vegetation Index | GDVI | |
| Global Environment Monitoring Index | GEMI | |
| Green Normalized Difference Vegetation Index | GNDVI | |
| Red–Green–Blue Vegetation Index | RGBVI | |
| Green Ratio Vegetation Index | GRVI | |
| Infrared Percentage Vegetation Index | IPVI | |
| Leaf Area Index | LAI | |
| Modified Chlorophyll Absorption Ratio Index | MCARI | |
| Modified Chlorophyll Absorption Ratio Index (Improved) | MCARI2 | |
| Modified Non-Linear Index | MNLI | |
| Modified Red Edge Normalized Difference Vegetation Index | MRENDVI | |
| Modified Red Edge Simple Ratio | MRESR | |
| Modified Simple Ratio | MSR | |
| Modified Triangular Vegetation Index | MTVI | |
| Modified Triangular Vegetation Index (Improved) | MTVI2 | |
| Normalized Difference Vegetation Index | NDVI | |
| Normalized Green–Blue Difference Index | NGBDI | |
| Normalized Green–Red Difference Index | NGRDI | |
| Nonlinear Vegetation Index | NLI | |
| Optimized Soil Adjusted Vegetation Index | OSAVI | |
| Plant Senescence Reflectance Index | PSRI | |
| Renormalized Difference Vegetation Index | RDVI | |
| Red Edge Normalized Difference Vegetation Index | RENDVI | |
| Red–Green Ratio Index | RGRI | |
| Soil Adjusted Vegetation Index | SAVI | |
| Structure Insensitive Pigment Index | SIPI | |
| Simple Ratio | SR | |
| Simple Ratio Red | SRRed | |
| Transformed Chlorophyll Absorption Reflectance Index | TCARI | |
| Transformed Difference Vegetation Index | TDVI | |
| Triangular Vegetation Index | TVI | |
| Visible Atmospherically Resistant Index | VARI | |
| Visible Light Difference Vegetation Index | VDVI | |
| Vegetative Index | VEG | |
| Vogelmann Red Edge Index 1 | VREI1 | |
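
The formula column of the table above did not survive extraction. For orientation, the sketch below implements the textbook definitions of six of the listed indices in Python. These are standard forms; the exact variants and constants used in the study (for example, the soil factor L in SAVI) are assumptions here, and the band reflectances in the example are hypothetical.

```python
"""Standard definitions of a few indices listed in Appendix A (illustrative).

Inputs are surface reflectances (0-1) for the named bands; the exact
variants used in the study are not confirmed by this section.
"""


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def gndvi(nir: float, green: float) -> float:
    """Green NDVI: NDVI with the green band in place of red."""
    return (nir - green) / (nir + green)


def ngrdi(green: float, red: float) -> float:
    """Normalized Green-Red Difference Index (visible bands only)."""
    return (green - red) / (green + red)


def savi(nir: float, red: float, soil_factor: float = 0.5) -> float:
    """Soil Adjusted Vegetation Index; L = 0.5 is the common default."""
    return (1 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def dvi(nir: float, red: float) -> float:
    """Difference Vegetation Index."""
    return nir - red


def simple_ratio(nir: float, red: float) -> float:
    """Simple Ratio (SR)."""
    return nir / red


if __name__ == "__main__":
    # Hypothetical reflectances for one vegetated pixel.
    nir, red, green = 0.5, 0.1, 0.2
    for name, value in [("NDVI", ndvi(nir, red)), ("GNDVI", gndvi(nir, green)),
                        ("NGRDI", ngrdi(green, red)), ("SAVI", savi(nir, red)),
                        ("DVI", dvi(nir, red)), ("SR", simple_ratio(nir, red))]:
        print(f"{name}: {value:.3f}")
```

Applied per pixel to the five-band DOM, each index yields one additional feature layer; 45 such layers plus the 6 DOM and DSM layers give the 51 original OSV features.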

References

- Maurya, K.; Mahajan, S.; Chaube, N. Remote sensing techniques: Mapping and monitoring of mangrove ecosystem—A review. Complex Intell. Syst. 2021, 7, 2797–2818.

- Donato, D.C.; Kauffman, J.B.; Murdiyarso, D.; Kurnianto, S.; Stidham, M.; Kanninen, M. Mangroves among the most carbon-rich forests in the tropics. Nat. Geosci. 2011, 4, 293–297.

- Fu, B.; He, X.; Yao, H.; Liang, Y.; Deng, T.; He, H.; Fan, D.; Lan, G.; He, W. Comparison of RFE-DL and stacking ensemble learning algorithms for classifying mangrove species on UAV multispectral images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102890.

- Wang, L.; Jia, M.; Yin, D.; Tian, J. A review of remote sensing for mangrove forests: 1956–2018. Remote Sens. Environ. 2019, 231, 111223.

- Li, Q.; Wong, F.K.K.; Fung, T. Classification of Mangrove Species Using Combined WordView-3 and LiDAR Data in Mai Po Nature Reserve, Hong Kong. Remote Sens. 2019, 11, 2114.

- Yan, Y.; Deng, L.; Liu, X.; Zhu, L. Application of UAV-Based Multi-angle Hyperspectral Remote Sensing in Fine Vegetation Classification. Remote Sens. 2019, 11, 2753.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

- Ahmed, O.S.; Shemrock, A.; Chabot, D.; Dillon, C.; Williams, G.; Wasson, R.; Franklin, S.E. Hierarchical land cover and vegetation classification using multispectral data acquired from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2037–2052.

- Villoslada, M.; Bergamo, T.; Ward, R.; Burnside, N.; Joyce, C.; Bunce, R.; Sepp, K. Fine scale plant community assessment in coastal meadows using UAV based multispectral data. Ecol. Indic. 2020, 111, 105979.

- Manna, S.; Raychaudhuri, B. Mapping distribution of Sundarban mangroves using Sentinel-2 data and new spectral metric for detecting their health condition. Geocarto Int. 2018, 35, 434–452.

- Cao, J.; Leng, W.; Liu, K.; Liu, L.; He, Z.; Zhu, Y. Object-Based Mangrove Species Classification Using Unmanned Aerial Vehicle Hyperspectral Images and Digital Surface Models. Remote Sens. 2018, 10, 89.

- Jiang, Y.; Zhang, L.; Yan, M.; Qi, J.; Fu, T.; Fan, S.; Chen, B. High-Resolution Mangrove Forests Classification with Machine Learning Using Worldview and UAV Hyperspectral Data. Remote Sens. 2021, 13, 1529.

- Mahdianpari, M.; Salehi, B.; Rezaee, M.; Mohammadimanesh, F.; Zhang, Y. Very Deep Convolutional Neural Networks for Complex Land Cover Mapping Using Multispectral Remote Sensing Imagery. Remote Sens. 2018, 10, 1119.

- Hasan, M.; Ullah, S.; Khan, M.J.; Khurshid, K. Comparative analysis of svm, ann and cnn for classifying vegetation species using hyperspectral thermal infrared data. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 1861–1868.

- Wei, W.; Gu, H.; Deng, W.; Xiao, Z.; Ren, X. ABL-TC: A lightweight design for network traffic classification empowered by deep learning. Neurocomputing 2022, 489, 333–344.

- Lou, P.; Fu, B.; He, H.; Li, Y.; Tang, T.; Lin, X.; Fan, D.; Gao, E. An Optimized Object-Based Random Forest Algorithm for Marsh Vegetation Mapping Using High-Spatial-Resolution GF-1 and ZY-3 Data. Remote Sens. 2020, 12, 1270.

- Zhou, R.; Yang, C.; Li, E.; Cai, X.; Yang, J.; Xia, Y. Object-Based Wetland Vegetation Classification Using Multi-Feature Selection of Unoccupied Aerial Vehicle RGB Imagery. Remote Sens. 2021, 13, 4910.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Computer Vision—ECCV 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 833–851.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Xu, Z.; Zhou, Y.; Wang, S.; Wang, L.; Li, F.; Wang, S.; Wang, Z. A Novel Intelligent Classification Method for Urban Green Space Based on High-Resolution Remote Sensing Images. Remote Sens. 2020, 12, 3845.

- Ayhan, B.; Kwan, C. Tree, Shrub, and Grass Classification Using Only RGB Images. Remote Sens. 2020, 12, 1333.

- Liu, G.; Chai, Z. Image semantic segmentation based on improved DeepLabv3+ network and superpixel edge optimization. J. Electron. Imaging 2022, 31, 013011.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Adhitya, Y.; Prakosa, S.W.; Köppen, M.; Leu, J.-S. Convolutional Neural Network Application in Smart Farming. In Communications in Computer and Information Science; Springer: Singapore, 2019; pp. 287–297.

- Adhiwibawa, M.A.S.; Ariyanto, M.R.; Struck, A.; Prilianti, K.R.; Brotosudarmo, T.H.P. Convolutional neural network in image analysis for determination of mangrove species. In Proceedings of the Third International Seminar on Photonics, Optics, and Its Applications (ISPhOA 2018), Surabaya, Indonesia, 1–2 August 2018.

- Ahlswede, S.; Asam, S.; Röder, A. Hedgerow object detection in very high-resolution satellite images using convolutional neural networks. J. Appl. Remote Sens. 2021, 15.

- Memon, N.; Parikh, H.; Patel, S.B.; Patel, D.; Patel, V.D. Automatic land cover classification of multi-resolution dualpol data using convolutional neural network (CNN). Remote Sens. Appl. Soc. Environ. 2021, 22, 100491.

- Li, X.; Zhang, L.; Du, B.; Zhang, L.; Shi, Q. Iterative Reweighting Heterogeneous Transfer Learning Framework for Supervised Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2022–2035.

- Hussain, M.; Bird, J.J.; Faria, D.R. A Study on CNN Transfer Learning for Image Classification. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; pp. 191–202.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D.D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Bhuiyan, A.E.; Witharana, C.; Liljedahl, A. Use of Very High Spatial Resolution Commercial Satellite Imagery and Deep Learning to Automatically Map Ice-Wedge Polygons across Tundra Vegetation Types. J. Imaging 2020, 6, 137.

- Zhang, D.; Ding, Y.; Chen, P.; Zhang, X.; Pan, Z.; Liang, D. Automatic extraction of wheat lodging area based on transfer learning method and deeplabv3+ network. Comput. Electron. Agric. 2020, 179, 105845.

- Liu, M.; Fu, B.; Fan, D.; Zuo, P.; Xie, S.; He, H.; Liu, L.; Huang, L.; Gao, E.; Zhao, M. Study on transfer learning ability for classifying marsh vegetation with multi-sensor images using DeepLabV3+ and HRNet deep learning algorithms. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102531.

- Wang, H.; Yao, Y.; Dai, X.; Chen, Z.; Wu, J.; Qiu, G.; Feng, T. How do ecological protection policies affect the restriction of coastal development rights? Analysis of choice preference based on choice experiment. Mar. Policy 2022, 136, 104905.

- Tan, M.; Le, Q.V. MixConv: Mixed Depthwise Convolutional Kernels. arXiv 2019, arXiv:1907.09595.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Shao, G.; Tang, L.; Liao, J. Overselling overall map accuracy misinforms about research reliability. Landsc. Ecol. 2019, 34, 2487–2492.

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- Zhang, Q.; Yang, Z.; Zhao, W.; Yu, X.; Yin, Z. Polarimetric SAR Landcover Classification Based on CNN with Dimension Reduction of Feature. In Proceedings of the 2021 IEEE 6th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 22–24 October 2021.

- Liu, X.; Sun, Q.; Liu, B.; Huang, B.; Fu, M. Hyperspectral image classification based on convolutional neural network and dimension reduction. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017.

- Li, Q.; Wong, F.K.K.; Fung, T. Mapping multi-layered mangroves from multispectral, hyperspectral, and LiDAR data. Remote Sens. Environ. 2021, 258, 112403.

- da Costa, L.B.; de Carvalho, O.L.F.; de Albuquerque, A.O.; Gomes, R.A.T.; Guimarães, R.F.; Júnior, O.A.D.C. Deep semantic segmentation for detecting eucalyptus planted forests in the Brazilian territory using sentinel-2 imagery. Geocarto Int. 2021, 37, 6538–6550.

- Garg, R.; Kumar, A.; Bansal, N.; Prateek, M.; Kumar, S. Semantic segmentation of PolSAR image data using advanced deep learning model. Sci. Rep. 2021, 11, 1–18.

- Zhang, X.; Bian, H.; Cai, Y.; Zhang, K.; Li, H. An improved tongue image segmentation algorithm based on Deeplabv3+ framework. IET Image Process. 2022, 16, 1473–1485.

- Zeng, H.; Peng, S.; Li, D. Deeplabv3+ semantic segmentation model based on feature cross attention mechanism. J. Phys. Conf. Ser. 2020, 1678, 012106.

- Liu, R.; He, D. Semantic Segmentation Based on Deeplabv3+ and Attention Mechanism. In Proceedings of the 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 18–20 June 2021.

- Wang, Y.; Wang, C.; Wu, H.; Chen, P. An improved Deeplabv3+ semantic segmentation algorithm with multiple loss constraints. PLoS ONE 2022, 17, e0261582.

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306.

- Maung, W.; Sasaki, J. Assessing the Natural Recovery of Mangroves after Human Disturbance Using Neural Network Classification and Sentinel-2 Imagery in Wunbaik Mangrove Forest, Myanmar. Remote Sens. 2020, 13, 52.

- Nowakowski, A.; Mrziglod, J.; Spiller, D.; Bonifacio, R.; Ferrari, I.; Mathieu, P.P.; Garcia-Herranz, M.; Kim, D.-H. Crop type mapping by using transfer learning. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102313.

- Asgarian, A.; Sobhani, P.; Zhang, J.C.; Mihailescu, M.; Sibilia, A.; Ashraf, A.B.; Babak, T. A hybrid instance-based transfer learning method. arXiv 2018, arXiv:1812.01063.

- Mo, Y.; Zhang, Z.; Wang, Y. Cross-view object classification in traffic scene surveillance based on transductive transfer learning. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012.

- Qin, X.; Yang, J.; Zhao, L.; Li, P.; Sun, K. A Novel Deep Forest-Based Active Transfer Learning Method for PolSAR Images. Remote Sens. 2020, 12, 2755. [Google Scholar] [CrossRef]

| Sensors | Number of Bands | Spectral Bands | Sensor Resolution (pixels) | Spatial Resolution (cm) | Number of Images |
|---|---|---|---|---|---|
| FC6360 | 5 | Blue (434–466 nm), Green (544–576 nm), Red (634–666 nm), Red edge (714–746 nm), NIR (814–866 nm) | 1600 × 1300 | 5.84 | 59,658 |
| RedEdge-MX | 5 | Blue (455–495 nm), Green (540–580 nm), Red (658–678 nm), Red edge (707–727 nm), NIR (800–880 nm) | 1280 × 960 | 6.67 | 28,370 |
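
Both sensors record the same five nominal bands, but the band limits in the table differ. The short Python sketch below is an illustrative comparison, not part of the original analysis: it computes each band's center shift and range overlap from the limits listed above. The dictionary layout and function names are hypothetical.

```python
# Band limits in nm, transcribed from the sensor table above.
BANDS = {
    "FC6360": {
        "Blue": (434, 466), "Green": (544, 576), "Red": (634, 666),
        "Red edge": (714, 746), "NIR": (814, 866),
    },
    "RedEdge-MX": {
        "Blue": (455, 495), "Green": (540, 580), "Red": (658, 678),
        "Red edge": (707, 727), "NIR": (800, 880),
    },
}


def center(lo, hi):
    """Center wavelength of a band given its lower and upper limits (nm)."""
    return (lo + hi) / 2


def compare_bands(a="FC6360", b="RedEdge-MX"):
    """Per band: (center of b minus center of a, overlap of both ranges), in nm."""
    result = {}
    for band, (lo_a, hi_a) in BANDS[a].items():
        lo_b, hi_b = BANDS[b][band]
        shift = center(lo_b, hi_b) - center(lo_a, hi_a)
        overlap = max(0, min(hi_a, hi_b) - max(lo_a, lo_b))
        result[band] = (shift, overlap)
    return result


for band, (shift, overlap) in compare_bands().items():
    print(f"{band:8s} center shift {shift:+5.1f} nm, overlap {overlap} nm")
```

On these limits the green and NIR centers coincide, while the red and red-edge ranges overlap by only 8 nm and 13 nm, so per-band values from the two cameras may not be directly interchangeable.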

| Land-Cover Types | Abbreviations | Samples | Interpretation Features |
|---|---|---|---|
| Avicennia marina | AM | 850 | Dark green, irregular shape, close to the sea |
| Dead mangrove | DM | 235 | Dark purple and yellowish brown, irregular shape |
| Kandelia candel | KC | 525 | Bottle green, circular or elliptical, close to the shore |
| Terrestrial vegetation | TV | 230 | Green, no uniform shape, scattered along the shore |
| Aegiceras corniculatum | AC | 205 | Light green, relatively fine texture, closest to the shore |
| Artifact | AF | 470 | White and bright, square, scattered among the mangroves |
| Mudflat | MF | 730 | Grayish white and flesh-colored, mostly on the periphery of vegetation |
| Spartina alterniflora | SA | 400 | Taupe and black, mostly circular, closest to the sea |
| Water body | WB | 415 | Light blue, mostly banded |
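
The sample counts in the table are uneven across classes. A minimal sketch, with hypothetical names and counts copied from the table, that computes each class's share of the total:

```python
# Sample counts per land-cover class, transcribed from the table above.
SAMPLES = {
    "AM": 850, "DM": 235, "KC": 525, "TV": 230, "AC": 205,
    "AF": 470, "MF": 730, "SA": 400, "WB": 415,
}


def class_shares(counts):
    """Return each class's share of the total sample count, in percent."""
    total = sum(counts.values())
    return {name: 100 * n / total for name, n in counts.items()}


shares = class_shares(SAMPLES)
largest = max(SAMPLES, key=SAMPLES.get)
smallest = min(SAMPLES, key=SAMPLES.get)
print(sum(SAMPLES.values()))                          # 4060
print(largest, f"{shares[largest]:.1f}%")             # AM 20.9%
print(smallest, f"{shares[smallest]:.1f}%")           # AC 5.0%
print(f"{SAMPLES[largest] / SAMPLES[smallest]:.2f}")  # 4.15
```

Avicennia marina (AM) accounts for about 20.9% of the 4060 samples and Aegiceras corniculatum (AC) for about 5.0%, roughly a 4:1 ratio between the largest and smallest classes.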

| Scenarios | Feature Datasets | Descriptions | Number of Original Features | After High-Correlation Elimination | After RFE → PCA | Number of Final Features |
|---|---|---|---|---|---|---|
| 1 | OS | DOM and DSM | 6 | - | - | 6 |
| 2 | OST | DOM, DSM, and TFs | 46 | 24 | 14 → 9 | 9 |
| 3 | OSV | DOM, DSM, and VIs | 51 | 27 | 16 → 9 | 9 |
| 4 | OSTV | DOM, DSM, TFs, and VIs | 91 | 45 | 24 → 12 | 12 |
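
The first reduction step in the table removes highly correlated features before RFE and PCA. This section does not give the correlation threshold or the order in which features are examined, so the sketch below assumes a greedy pass with a hypothetical cutoff of |r| > 0.9 and uses toy data. It covers only the correlation step; RFE and PCA are commonly run with scikit-learn's `RFE` and `PCA` classes, but which tools the authors used is not stated here.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    denom = math.sqrt(sxx * syy)
    return sxy / denom if denom > 0 else 0.0  # constant feature: treat as uncorrelated


def drop_correlated(features, threshold=0.9):
    """Greedy high-correlation elimination (the threshold is an assumed value).

    `features` maps a feature name to its values over the training samples.
    Features are visited in insertion order; one is kept only if its absolute
    correlation with every already-kept feature is at most `threshold`.
    """
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical toy features: "red_x2" is twice "red", so it is redundant.
features = {
    "red":    [0.10, 0.12, 0.15, 0.20, 0.22],
    "red_x2": [0.20, 0.24, 0.30, 0.40, 0.44],
    "nir":    [0.50, 0.40, 0.45, 0.30, 0.60],
}
print(drop_correlated(features))  # ['red', 'nir']
```

The feature counts in the table are consistent with 40 texture features and 45 vegetation indices added to the 6 DOM and DSM features (46 - 6 = 40, 51 - 6 = 45, 6 + 40 + 45 = 91).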

| Regions | Algorithms | Groups | Feature Datasets | Scenarios | Number of Images per Scenario |
|---|---|---|---|---|---|
| Region 1 + Region 4 | DeepLabV3+ | Group 1 | OS | 1 | 1 × 105 |
| | | | OST | 2 | 1 × 105 |
| | | | OSV | 3 | 1 × 105 |
| | | | OSTV | 4 | 1 × 105 |
| | HRNet | Group 2 | OS | 1 | 1 × 105 |
| | | | OST | 2 | 1 × 105 |
| | | | OSV | 3 | 1 × 105 |
| | | | OSTV | 4 | 1 × 105 |
| | MCCUNet | Group 3 | OS | 1 | 1 × 105 |
| | | | OST | 2 | 1 × 105 |
| | | | OSV | 3 | 1 × 105 |
| | | | OSTV | 4 | 1 × 105 |

| Algorithms | Strategies | Groups | Source Domain | Target Domain | Feature Datasets | Scenarios | Number of Images per Scenario |
|---|---|---|---|---|---|---|---|
| MCCUNet | “F-TL” | Group I | Region 1 + Region 4 | Region 2 + Region 3 | OS | 1 | 1 × 105 |
| | | | | | OST | 2 | 1 × 105 |
| | | | | | OSV | 3 | 1 × 105 |
| | | | | | OSTV | 4 | 1 × 105 |
| | “Ft-TL” | Group II | Region 1 + Region 4 | Region 2 + Region 3 | OS | 1 | 1 × 105 |
| | | | | | OST | 2 | 1 × 105 |
| | | | | | OSV | 3 | 1 × 105 |
| | | | | | OSTV | 4 | 1 × 105 |
| | “SaP-TL” | Group III | Region 1 + Region 4 (2021.01) | Region 1 (2020.11) | OS | 1 | 1 × 105 |
| | | | | | OST | 2 | 1 × 105 |
| | | | | | OSV | 3 | 1 × 105 |
| | | | | | OSTV | 4 | 1 × 105 |
| | | | | Region 4 (2020.11) | OS | 1 | 1 × 105 |
| | | | | | OST | 2 | 1 × 105 |
| | | | | | OSV | 3 | 1 × 105 |
| | | | | | OSTV | 4 | 1 × 105 |
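
All transfer-learning experiments in the table use MCCUNet, and Group III differs from the other groups in having two target domains. The sketch below expands the table into one record per run so that the total is explicit; the data layout is a hypothetical illustration, and the strategy abbreviations are kept as plain labels without interpreting them.

```python
from itertools import product

DATASETS = ["OS", "OST", "OSV", "OSTV"]  # feature datasets for scenarios 1-4

# (strategy, group, source domain, target domains), transcribed from the table above.
STRATEGIES = [
    ("F-TL", "Group I", "Region 1 + Region 4", ["Region 2 + Region 3"]),
    ("Ft-TL", "Group II", "Region 1 + Region 4", ["Region 2 + Region 3"]),
    ("SaP-TL", "Group III", "Region 1 + Region 4 (2021.01)",
     ["Region 1 (2020.11)", "Region 4 (2020.11)"]),
]


def transfer_runs():
    """Expand the design into one tuple per (strategy, target domain, scenario)."""
    runs = []
    for strategy, group, source, targets in STRATEGIES:
        for target, (scenario, dataset) in product(targets, enumerate(DATASETS, start=1)):
            runs.append((strategy, group, source, target, dataset, scenario))
    return runs


runs = transfer_runs()
print(len(runs))  # 16: 4 (F-TL) + 4 (Ft-TL) + 2 targets x 4 (SaP-TL)
```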

| Regions | Algorithms | OS vs. OST | OS vs. OSV | OS vs. OSTV | OST vs. OSTV | OSV vs. OSTV |
|---|---|---|---|---|---|---|
| Region 1 | DeepLabV3+ | 17.29 * | 14.52 * | 34.13 * | 65.69 * | 65.64 * |
| | HRNet | 5.49 * | 2.89 | 21.60 * | 6.21 * | 9.39 * |
| | MCCUNet | 2.56 | 7.26 * | 20.10 * | 10.32 * | 7.04 * |
| Region 4 | DeepLabV3+ | 1.39 | 0.00 | 0.06 | 0.45 | 0.00 |
| | HRNet | 8.31 * | 2.37 | 4.67 * | 0.84 | 0.14 |
| | MCCUNet | 6.72 * | 7.26 * | 8.65 * | 0.50 | 0.00 |
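
This section does not name the test behind the pairwise comparisons. McNemar's test is a common choice for comparing two classifications of the same samples and yields non-negative chi-square values of this kind, so the sketch below assumes it; whether a continuity correction was applied is also unknown, hence the `correction` flag. The function name and the toy labels are hypothetical.

```python
def mcnemar_chi2(truth, pred_a, pred_b, correction=True):
    """McNemar chi-square statistic for two classifiers on the same samples.

    Only discordant samples count: those that exactly one classifier labels
    correctly. With `correction=True`, Edwards' continuity correction is used:
    (|n_a - n_b| - 1)^2 / (n_a + n_b); otherwise (n_a - n_b)^2 / (n_a + n_b).
    """
    n_a = n_b = 0  # n_a: only A correct; n_b: only B correct
    for t, a, b in zip(truth, pred_a, pred_b):
        if a == t and b != t:
            n_a += 1
        elif b == t and a != t:
            n_b += 1
    if n_a + n_b == 0:
        return 0.0  # the two classifiers never disagree in correctness
    diff = abs(n_a - n_b) - (1 if correction else 0)
    return diff ** 2 / (n_a + n_b)


# Hypothetical labels: A alone is right on 10 samples, B alone on 2, both on 8.
truth = ["AM"] * 20
pred_a = ["AM"] * 10 + ["KC"] * 2 + ["AM"] * 8
pred_b = ["KC"] * 10 + ["AM"] * 2 + ["AM"] * 8
print(round(mcnemar_chi2(truth, pred_a, pred_b), 2))         # 4.08
print(round(mcnemar_chi2(truth, pred_a, pred_b, False), 2))  # 5.33
```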

| Regions | Feature Datasets | MCCUNet vs. DeepLabV3+ | MCCUNet vs. HRNet | DeepLabV3+ vs. HRNet |
|---|---|---|---|---|
| Region 1 | OS | 7.14 * | 16.10 * | 30.23 * |
| | OST | 38.64 * | 0.03 | 42.67 * |
| | OSV | 43.56 * | 0.02 | 45.38 * |
| | OSTV | 0.19 | 26.68 * | 21.60 * |
| Region 4 | OS | 2.21 | 2.57 | 9.09 * |
| | OST | 5.94 * | 2.53 | 0.64 |
| | OSV | 0.70 | 3.45 | 9.38 * |
| | OSTV | 1.79 | 6.86 * | 2.12 |
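
This section does not state what the asterisks denote. If the values are chi-square statistics with one degree of freedom (as McNemar's test would produce, which is an assumption here), the asterisks line up exactly with p < 0.05, that is, with a critical value of about 3.84: every starred value in this table and in the preceding feature-dataset table exceeds it, and every unstarred value falls below it. A stdlib sketch that checks this for the table above:

```python
import math

# Values transcribed from the table above ("*" marks a starred entry).
# Columns: MCCUNet vs. DeepLabV3+, MCCUNet vs. HRNet, DeepLabV3+ vs. HRNet.
TABLE = {
    ("Region 1", "OS"): ["7.14*", "16.10*", "30.23*"],
    ("Region 1", "OST"): ["38.64*", "0.03", "42.67*"],
    ("Region 1", "OSV"): ["43.56*", "0.02", "45.38*"],
    ("Region 1", "OSTV"): ["0.19", "26.68*", "21.60*"],
    ("Region 4", "OS"): ["2.21", "2.57", "9.09*"],
    ("Region 4", "OST"): ["5.94*", "2.53", "0.64"],
    ("Region 4", "OSV"): ["0.70", "3.45", "9.38*"],
    ("Region 4", "OSTV"): ["1.79", "6.86*", "2.12"],
}


def chi2_df1_pvalue(stat):
    """Upper-tail p-value of a chi-square statistic with one degree of freedom.

    If X = Z**2 with Z standard normal, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2))


def mismatches(table, alpha=0.05):
    """Return entries whose asterisk disagrees with p < alpha."""
    bad = []
    for key, cells in table.items():
        for cell in cells:
            starred = cell.endswith("*")
            p = chi2_df1_pvalue(float(cell.rstrip("*")))
            if (p < alpha) != starred:
                bad.append((key, cell, p))
    return bad


print(round(chi2_df1_pvalue(3.841), 3))  # 0.05
print(mismatches(TABLE))                 # []
```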

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Fu, B.; Sun, X.; Fan, D.; Wang, Y.; He, H.; Gao, E.; He, W.; Yao, Y. Comparison of Different Transfer Learning Methods for Classification of Mangrove Communities Using MCCUNet and UAV Multispectral Images. Remote Sens. 2022, 14, 5533. https://doi.org/10.3390/rs14215533

Li Y, Fu B, Sun X, Fan D, Wang Y, He H, Gao E, He W, Yao Y. Comparison of Different Transfer Learning Methods for Classification of Mangrove Communities Using MCCUNet and UAV Multispectral Images. Remote Sensing. 2022; 14(21):5533. https://doi.org/10.3390/rs14215533

Chicago/Turabian Style: Li, Yuyang, Bolin Fu, Xidong Sun, Donglin Fan, Yeqiao Wang, Hongchang He, Ertao Gao, Wen He, and Yuefeng Yao. 2022. "Comparison of Different Transfer Learning Methods for Classification of Mangrove Communities Using MCCUNet and UAV Multispectral Images" Remote Sensing 14, no. 21: 5533. https://doi.org/10.3390/rs14215533