Classification of Ground-Based Cloud Images by Contrastive Self-Supervised Learning

Abstract

1. Introduction

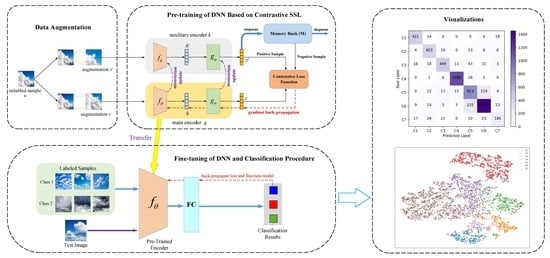

- Contrastive self-supervised learning (CSSL) is adopted to learn discriminative features of ground-based cloud images. To the best of our knowledge, this is the first work to apply a self-supervised learning framework to ground-based remote sensing cloud classification, offering a new perspective on making better use of cloud measuring instruments.

- The deep model learned by CSSL is transferred to provide the initial parameters for supervised fine-tuning. The overall approach combines the advantages of unsupervised and supervised learning to improve classification performance.

- Experiments on a real dataset of ground-based cloud images show that the proposed method outperforms several state-of-the-art deep learning-based methods, indicating that CSSL is an effective strategy for exploiting the information in unlabeled cloud images.

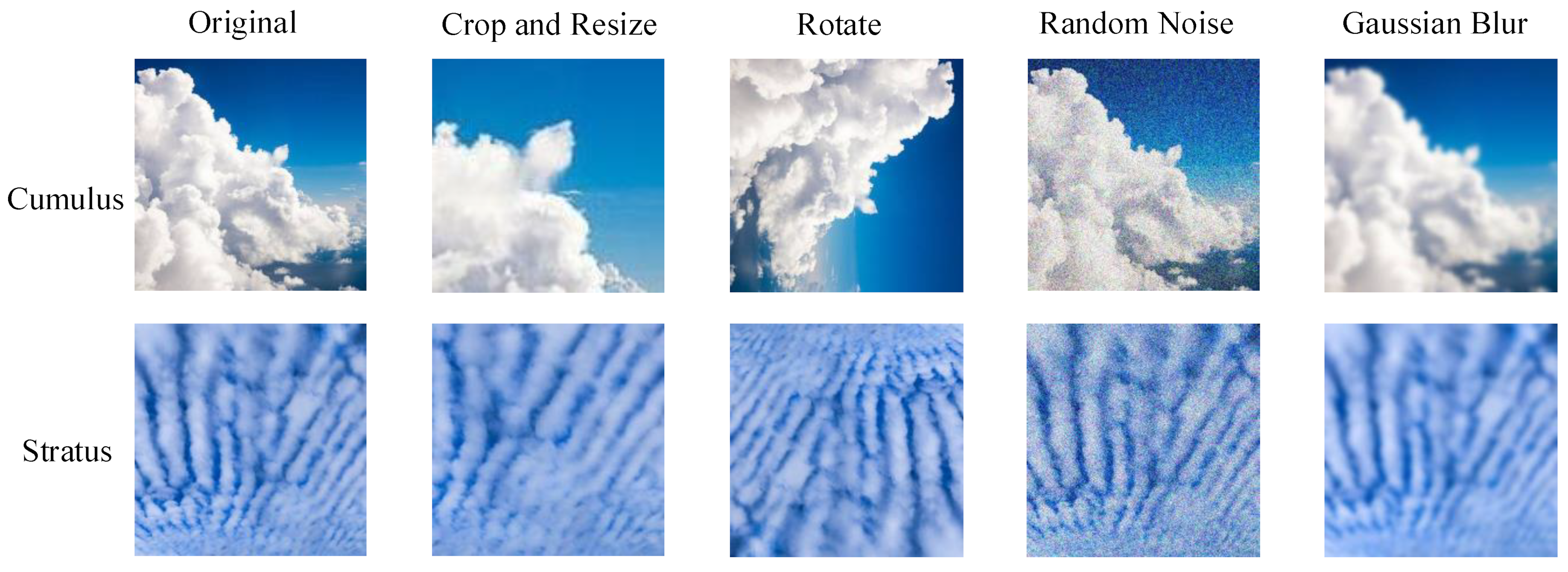

2. Method

3. Experiments and Results

3.1. Dataset Description

3.2. Evaluation Metrics

3.3. Experimental Settings

3.4. Experimental Results

3.4.1. Classification Performance

3.4.2. Visualization of Features

3.4.3. Comparison with Other Methods

4. Analysis and Discussion

4.1. Effect of the Temperature Parameter

4.2. Effect of the Momentum Coefficient

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SSL | Self-supervised learning |
| CSSL | Contrastive self-supervised learning |
| TSI | Total Sky Imager |
| WSI | Whole Sky Imager |
| ASI | All Sky Imager |
| KNN | K-nearest neighbors |
| SVM | Support vector machine |
| ELM | Extreme learning machine |
| LDA | Linear discriminant analysis |
| MLP | Multilayer perceptron |
| LBP | Local binary pattern |
| LEP | Local edge pattern |
| DL | Deep learning |
| CNN | Convolutional neural networks |
| GNN | Graph neural networks |
| GCN | Graph convolutional networks |
| GAT | Graph attention network |
| DNN | Deep neural networks |
| FV | Fisher vector |
| SGD | Stochastic gradient descent |
| OA | Overall accuracy |
| AA | Average accuracy |

References

1. Gorodetskaya, I.V.; Kneifel, S.; Maahn, M.; Van Tricht, K.; Thiery, W.; Schween, J.; Mangold, A.; Crewell, S.; Van Lipzig, N. Cloud and precipitation properties from ground-based remote-sensing instruments in East Antarctica. Cryosphere 2015, 9, 285–304.

2. Goren, T.; Rosenfeld, D.; Sourdeval, O.; Quaas, J. Satellite observations of precipitating marine stratocumulus show greater cloud fraction for decoupled clouds in comparison to coupled clouds. Geophys. Res. Lett. 2018, 45, 5126–5134.

3. Zheng, Y.; Rosenfeld, D.; Zhu, Y.; Li, Z. Satellite-based estimation of cloud top radiative cooling rate for marine stratocumulus. Geophys. Res. Lett. 2019, 46, 4485–4494.

4. Liu, S.; Duan, L.; Zhang, Z.; Cao, X.; Durrani, T.S. Ground-Based Remote Sensing Cloud Classification via Context Graph Attention Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5602711.

5. Norris, J.R.; Allen, R.J.; Evan, A.T.; Zelinka, M.D.; O’Dell, C.W.; Klein, S.A. Evidence for climate change in the satellite cloud record. Nature 2016, 536, 72–75.

6. Zhong, B.; Chen, W.; Wu, S.; Hu, L.; Luo, X.; Liu, Q. A cloud detection method based on relationship between objects of cloud and cloud-shadow for Chinese moderate to high resolution satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4898–4908.

7. Young, A.H.; Knapp, K.R.; Inamdar, A.; Hankins, W.; Rossow, W.B. The international satellite cloud climatology project H-Series climate data record product. Earth Syst. Sci. Data 2018, 10, 583–593.

8. Calbó, J.; Sabburg, J. Feature extraction from whole-sky ground-based images for cloud-type recognition. J. Atmos. Ocean. Technol. 2008, 25, 3–14.

9. Nouri, B.; Wilbert, S.; Segura, L.; Kuhn, P.; Hanrieder, N.; Kazantzidis, A.; Schmidt, T.; Zarzalejo, L.; Blanc, P.; Pitz-Paal, R. Determination of cloud transmittance for all sky imager based solar nowcasting. Sol. Energy 2019, 181, 251–263.

10. Liu, S.; Li, M.; Zhang, Z.; Cao, X.; Durrani, T.S. Ground-Based Cloud Classification Using Task-Based Graph Convolutional Network. Geophys. Res. Lett. 2020, 47.

11. Dev, S.; Wen, B.; Lee, Y.H.; Winkler, S. Ground-based image analysis: A tutorial on machine-learning techniques and applications. IEEE Geosci. Remote Sens. Mag. 2016, 4, 79–93.

12. Chow, C.W.; Urquhart, B.; Lave, M.; Dominguez, A.; Kleissl, J.; Shields, J.; Washom, B. Intra-hour forecasting with a total sky imager at the UC San Diego solar energy testbed. Sol. Energy 2011, 85, 2881–2893.

13. Feister, U.; Shields, J. Cloud and radiance measurements with the VIS/NIR daylight whole sky imager at Lindenberg (Germany). Meteorol. Z. 2005, 14, 627–639.

14. Urquhart, B.; Kurtz, B.; Dahlin, E.; Ghonima, M.; Shields, J.; Kleissl, J. Development of a sky imaging system for short-term solar power forecasting. Atmos. Meas. Tech. 2015, 8, 875–890.

15. Cazorla, A.; Olmo, F.; Alados-Arboledas, L. Development of a sky imager for cloud cover assessment. J. Opt. Soc. Amer. A, Opt. Image Sci. 2008, 25, 29–39.

16. Nouri, B.; Kuhn, P.; Wilbert, S.; Hanrieder, N.; Prahl, C.; Zarzalejo, L.; Kazantzidis, A.; Blanc, P.; Pitz-Paal, R. Cloud height and tracking accuracy of three all sky imager systems for individual clouds. Sol. Energy 2019, 177, 213–228.

17. Ye, L.; Cao, Z.; Xiao, Y. DeepCloud: Ground-based cloud image categorization using deep convolutional features. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5729–5740.

18. Huertas-Tato, J.; Rodríguez-Benítez, F.; Arbizu-Barrena, C.; Aler-Mur, R.; Galvan-Leon, I.; Pozo-Vázquez, D. Automatic cloud-type classification based on the combined use of a sky camera and a ceilometer. J. Geophys. Res. Atmos. 2017, 122, 11045–11061.

19. Li, X.; Qiu, B.; Cao, G.; Wu, C.; Zhang, L. A Novel Method for Ground-Based Cloud Image Classification Using Transformer. Remote Sens. 2022, 14, 3978.

20. Buch Jr, K.A.; Sun, C.H. Cloud classification using whole-sky imager data. In Proceedings of the 9th Symposium on Meteorological Observations and Instrumentation, Charlotte, NC, USA, 27–31 May 1995; pp. 35–39.

21. Singh, M.; Glennen, M. Automated ground-based cloud recognition. Pattern Anal. Appl. 2005, 8, 258–271.

22. Heinle, A.; Macke, A.; Srivastav, A. Automatic cloud classification of whole sky images. Atmos. Meas. Tech. 2010, 3, 557–567.

23. Liu, L.; Sun, X.; Chen, F.; Zhao, S.; Gao, T. Cloud classification based on structure features of infrared images. J. Atmos. Ocean. Technol. 2011, 28, 410–417.

24. Isosalo, A.; Turtinen, M.; Pietikäinen, M. Cloud characterization using local texture information. In Proceedings of the 2007 Finnish Signal Processing Symposium (FINSIG 2007), Oulu, Finland, 30 August 2007.

25. Liu, S.; Wang, C.; Xiao, B.; Zhang, Z.; Shao, Y. Salient local binary pattern for ground-based cloud classification. Acta Meteorol. Sin. 2013, 27, 211–220.

26. Wang, Y.; Shi, C.; Wang, C.; Xiao, B. Ground-based cloud classification by learning stable local binary patterns. Atmos. Res. 2018, 207, 74–89.

27. Oikonomou, S.; Kazantzidis, A.; Economou, G.; Fotopoulos, S. A local binary pattern classification approach for cloud types derived from all-sky imagers. Int. J. Remote Sens. 2019, 40, 2667–2682.

28. Zhuo, W.; Cao, Z.; Xiao, Y. Cloud classification of ground-based images using texture–structure features. J. Atmos. Ocean. Technol. 2014, 31, 79–92.

29. Li, Q.; Zhang, Z.; Lu, W.; Yang, J.; Ma, Y.; Yao, W. From pixels to patches: A cloud classification method based on a bag of micro-structures. Atmos. Meas. Tech. 2016, 9, 753–764.

30. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

31. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

32. Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

33. Khanafer, M.; Shirmohammadi, S. Applied AI in instrumentation and measurement: The deep learning revolution. IEEE Instrum. Meas. Mag. 2020, 23, 10–17.

34. Wen, G.; Gao, Z.; Cai, Q.; Wang, Y.; Mei, S. A novel method based on deep convolutional neural networks for wafer semiconductor surface defect inspection. IEEE Trans. Instrum. Meas. 2020, 69, 9668–9680.

35. Lin, Y.H.; Chang, L. An Unsupervised Noisy Sample Detection Method for Deep Learning-Based Health Status Prediction. IEEE Trans. Instrum. Meas. 2022, 71, 2502211.

36. Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

37. Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N. Deep learning and process understanding for data-driven Earth system science. Nature 2019, 566, 195–204.

38. Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

39. Ye, L.; Cao, Z.; Xiao, Y.; Li, W. Ground-based cloud image categorization using deep convolutional visual features. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4808–4812.

40. Shi, C.; Wang, C.; Wang, Y.; Xiao, B. Deep convolutional activations-based features for ground-based cloud classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 816–820.

41. Zhang, J.; Liu, P.; Zhang, F.; Song, Q. CloudNet: Ground-based cloud classification with deep convolutional neural network. Geophys. Res. Lett. 2018, 45, 8665–8672.

42. Wang, M.; Zhou, S.; Yang, Z.; Liu, Z. CloudA: A Ground-Based Cloud Classification Method with a Convolutional Neural Network. J. Atmos. Ocean. Technol. 2020, 37, 1661–1668.

43. Liu, S.; Duan, L.; Zhang, Z.; Cao, X.; Durrani, T.S. Multimodal ground-based remote sensing cloud classification via learning heterogeneous deep features. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7790–7800.

44. Liu, S.; Li, M.; Zhang, Z.; Xiao, B.; Cao, X. Multimodal ground-based cloud classification using joint fusion convolutional neural network. Remote Sens. 2018, 10, 822.

45. Liu, S.; Li, M.; Zhang, Z.; Xiao, B.; Durrani, T.S. Multi-evidence and multi-modal fusion network for ground-based cloud recognition. Remote Sens. 2020, 12, 464.

46. Jing, L.; Tian, Y. Self-supervised visual feature learning with deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4037–4058.

47. Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. IEEE Trans. Knowl. Data Eng. 2021, in press.

48. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the 37th International Conference on Machine Learning (ICML 2020), Virtual, 13–18 July 2020; pp. 1597–1607.

49. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

50. Caron, M.; Misra, I.; Mairal, J.; Goyal, P.; Bojanowski, P.; Joulin, A. Unsupervised learning of visual features by contrasting cluster assignments. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2020), Virtual, 6–12 December 2020.

51. Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Pires, B.A.; Guo, Z.D.; Azar, M.G.; et al. Bootstrap your own latent: A new approach to self-supervised learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2020), Virtual, 6–12 December 2020.

52. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

53. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

54. Zhang, L.; Zhang, S.; Zou, B.; Dong, H. Unsupervised deep representation learning and few-shot classification of PolSAR images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5100316.

55. Lv, Q.; Dou, Y.; Xu, J.; Niu, X.; Xia, F. Hyperspectral image classification via local receptive fields based random weights networks. In Proceedings of the 2015 International Conference on Natural Computation (ICNC), Zhangjiajie, China, 15–17 August 2015; pp. 971–976.

56. Thompson, W.D.; Walter, S.D. A reappraisal of the kappa coefficient. J. Clin. Epidemiol. 1988, 41, 949–958.

57. Powers, D.M.W. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

58. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

59. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

60. Hou, S.; Shi, H.; Cao, X.; Zhang, X.; Jiao, L. Hyperspectral Imagery Classification Based on Contrastive Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5521213.

| ID | Cloud Type | Total Samples | Training Samples | Test Samples | Description |
|---|---|---|---|---|---|
| C1 | Cumulus | 1525 | 1068 | 457 | Puffy clouds with sharp outlines, a flat base and a raised top; white or light gray |
| C2 | Altocumulus and Cirrocumulus | 1475 | 1033 | 442 | Patches, sheets or layers of cloud with a mosaic-like appearance; white or gray |
| C3 | Cirrus and Cirrostratus | 1906 | 1335 | 571 | Thin clouds with a fibrous (hair-like) appearance; whitish |
| C4 | Clear Sky | 3739 | 2618 | 1121 | Very few or no clouds; blue |
| C5 | Stratocumulus, Stratus and Altostratus | 3636 | 2546 | 1090 | Cloud sheet or layer with a striated or uniform appearance that may cause fog or fine precipitation; gray or whitish |
| C6 | Cumulonimbus and Nimbostratus | 5764 | 4035 | 1729 | Dark, thick clouds, mostly overcast, producing rain or snow; gray |
| C7 | Mixed Clouds | 955 | 669 | 286 | Two or more types of clouds |
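The sample counts above can be checked with a few lines of arithmetic. In every class the training and test counts add up to the total, and the training share is about 70%. The sketch below assumes a hypothetical per-class rule, training size = ⌈0.7·N⌉, which happens to reproduce every training count in the table. This rule is inferred from the numbers and is not a procedure stated by the authors.

```python
# (total, training, test) image counts per class, copied from the table above
counts = {
    "C1": (1525, 1068, 457),
    "C2": (1475, 1033, 442),
    "C3": (1906, 1335, 571),
    "C4": (3739, 2618, 1121),
    "C5": (3636, 2546, 1090),
    "C6": (5764, 4035, 1729),
    "C7": (955, 669, 286),
}

# Assumed 70% training fraction (inferred from the table, not stated in the text)
TRAIN_NUM, TRAIN_DEN = 7, 10


def train_size(total: int) -> int:
    """Hypothetical rule: ceil(0.7 * total), computed with integers to avoid float error."""
    return -(-total * TRAIN_NUM // TRAIN_DEN)


for cls, (total, train, test) in counts.items():
    assert train + test == total, cls       # every image is either training or test
    assert train == train_size(total), cls  # matches the assumed ceil(0.7 * N) rule
    print(f"{cls}: training fraction = {train / total:.4f}")

grand_total = sum(t for t, _, _ in counts.values())
train_total = sum(tr for _, tr, _ in counts.values())
test_total = sum(te for _, _, te in counts.values())
print(grand_total, train_total, test_total)
```

Because the split is applied per class, the class imbalance of the full dataset (C6 is about six times larger than C7) carries over unchanged into both the training and the test set.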

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| C1 | 0.9015 | 0.9212 | 0.9113 |
| C2 | 0.8060 | 0.9118 | 0.8556 |
| C3 | 0.8891 | 0.7863 | 0.8346 |
| C4 | 0.9742 | 0.9777 | 0.9760 |
| C5 | 0.7246 | 0.7459 | 0.7351 |
| C6 | 0.8359 | 0.8398 | 0.8379 |
| C7 | 0.7750 | 0.6503 | 0.7072 |
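The F1-score in the per-class table is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The short check below recomputes it from the reported precision and recall. Since those inputs are already rounded to four decimals, it compares the results with a tolerance of 1e-4 rather than testing for exact equality.

```python
# Per-class (precision, recall, reported F1), copied from the table above
reported = {
    "C1": (0.9015, 0.9212, 0.9113),
    "C2": (0.8060, 0.9118, 0.8556),
    "C3": (0.8891, 0.7863, 0.8346),
    "C4": (0.9742, 0.9777, 0.9760),
    "C5": (0.7246, 0.7459, 0.7351),
    "C6": (0.8359, 0.8398, 0.8379),
    "C7": (0.7750, 0.6503, 0.7072),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for cls, (p, r, f1) in reported.items():
    recomputed = f1_score(p, r)
    # Inputs are rounded to 4 decimals, so allow a small tolerance
    assert abs(recomputed - f1) < 1e-4, cls
    print(f"{cls}: reported F1 = {f1:.4f}, recomputed = {recomputed:.4f}")
```

The harmonic mean is pulled toward the smaller of its two inputs. This is why C7 has the lowest F1 even though its precision (0.7750) is higher than that of C5: its recall (0.6503) is the lowest of all classes.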

| Method | OA (%) | AA (%) | Kappa |
|---|---|---|---|
| BOMS [29] | 61.76 | 52.91 | 0.5092 |
| CloudNet [41] | 75.58 | 69.33 | 0.6930 |
| VGG-19 [58] | 75.32 | 69.44 | 0.6921 |
| ResNet-50 [52] | 81.00 | 76.59 | 0.7637 |
| CSSL | 84.62 | 83.33 | 0.8093 |
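The CSSL row can be cross-checked against the per-class table. The sketch assumes the usual remote sensing definitions: OA is the fraction of correctly classified test images, AA is the mean per-class recall, and Kappa is Cohen's kappa, κ = (p_o − p_e)/(1 − p_e). None of these needs the full confusion matrix, only the diagonal and the row and column totals. The code reconstructs those integer counts from the reported recall (with the test sizes from the dataset table) and the reported precision. The counts are derived by rounding and are not published numbers.

```python
# Test-set size per class (dataset table) and reported precision/recall (per-class table)
test_counts = {"C1": 457, "C2": 442, "C3": 571, "C4": 1121, "C5": 1090, "C6": 1729, "C7": 286}
precision = {"C1": 0.9015, "C2": 0.8060, "C3": 0.8891, "C4": 0.9742,
             "C5": 0.7246, "C6": 0.8359, "C7": 0.7750}
recall = {"C1": 0.9212, "C2": 0.9118, "C3": 0.7863, "C4": 0.9777,
          "C5": 0.7459, "C6": 0.8398, "C7": 0.6503}

# Implied counts: true positives from recall, predicted-class totals from precision
tp = {c: round(recall[c] * test_counts[c]) for c in test_counts}
predicted = {c: round(tp[c] / precision[c]) for c in test_counts}
n = sum(test_counts.values())
assert sum(predicted.values()) == n  # column totals must also sum to the test-set size

# Overall accuracy: observed agreement p_o
oa = sum(tp.values()) / n
# Average accuracy: mean of per-class recall
aa = sum(tp[c] / test_counts[c] for c in test_counts) / len(test_counts)
# Chance agreement p_e from the row (true) and column (predicted) totals
p_e = sum(test_counts[c] * predicted[c] for c in test_counts) / n**2
kappa = (oa - p_e) / (1 - p_e)

print(f"OA = {100 * oa:.2f}%, AA = {100 * aa:.2f}%, kappa = {kappa:.4f}")
# prints: OA = 84.62%, AA = 83.33%, kappa = 0.8093
```

The recomputed values agree with the CSSL row, so the two tables are consistent. AA weights every class equally. It therefore exposes weak minority classes such as C7 more than OA does, which is dominated by the large C4, C5 and C6 classes.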

| Temperature | 0.01 | 0.05 | 0.1 | 0.5 | 0.8 | 1.0 |
|---|---|---|---|---|---|---|
| OA (%) | 82.02 | 82.53 | 83.27 | 84.62 | 83.58 | 82.25 |
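The Method section is not included in this excerpt. A temperature parameter together with a momentum coefficient (Section 4.2) and the citation of momentum contrast [49] suggests a MoCo-style framework trained with the InfoNCE loss [53]. The following is a minimal sketch under that assumption, for a single query, one positive key and a few negative keys. The function names and the toy 2-D embeddings are hypothetical. The similarities are divided by the temperature τ before the softmax, so a smaller τ sharpens the distribution over keys.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, i.e. the dot product of the L2-normalized vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce_loss(query: Sequence[float], positive_key: Sequence[float],
                  negative_keys: List[Sequence[float]], temperature: float) -> float:
    """-log softmax probability of the positive key, with logits = similarity / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Index 0 holds the positive pair; the remaining logits are negatives
    logits = [cosine_similarity(query, positive_key) / temperature]
    logits += [cosine_similarity(query, k) / temperature for k in negative_keys]
    # Numerically stable log-sum-exp over all logits
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum_exp - logits[0]


# Hypothetical toy embeddings: the positive key is the one closest to the query
query = [1.0, 0.0]
positive = [0.9, 0.1]
negatives = [[0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]

for tau in (0.01, 0.1, 0.5, 1.0):
    loss = info_nce_loss(query, positive, negatives, tau)
    print(f"tau={tau}: loss={loss:.4f}, p(positive)={math.exp(-loss):.4f}")
```

In this toy case the positive is already the most similar key, so the loss falls steadily as τ shrinks. In real training, a very small τ concentrates the gradient on the hardest negatives and a large τ treats all negatives almost uniformly. The sketch shows only how τ rescales the similarities. It does not reproduce the accuracy peak at τ = 0.5 reported in the table.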

| Momentum coefficient m | 0.8 | 0.9 | 0.99 | 0.999 | 0.9999 |
|---|---|---|---|---|---|
| OA (%) | 80.78 | 81.34 | 83.16 | 84.62 | 83.97 |
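The excerpt does not give the update rule behind m. Assuming the standard momentum contrast formulation [49], the key encoder is an exponential moving average of the query encoder, θ_k ← m·θ_k + (1 − m)·θ_q, and only θ_q receives gradients. The sketch below applies this rule to plain Python lists standing in for network parameters. The function names and the toy setting are hypothetical.

```python
from typing import List


def momentum_update(key_params: List[float], query_params: List[float], m: float) -> List[float]:
    """Element-wise exponential moving average: m * key + (1 - m) * query."""
    if not 0.0 <= m <= 1.0:
        raise ValueError("m must lie in [0, 1]")
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


def simulate(m: float, steps: int) -> float:
    """Toy case: the query parameter is held at 1.0 and the key parameter starts at 0.0."""
    key, query = [0.0], [1.0]
    for _ in range(steps):
        key = momentum_update(key, query, m)
    # Closed form for this toy case: 1 - m ** steps
    return key[0]


for m in (0.9, 0.99, 0.999):
    print(f"m={m}: key parameter after 100 steps = {simulate(m, 100):.4f}")
```

With m = 0.9 the key parameter almost reaches the query value within 100 steps, while with m = 0.999 it has covered less than a tenth of the distance. A larger m therefore makes the key encoder evolve more slowly, which MoCo argues keeps the encoded keys consistent over time. In the table, OA is highest at m = 0.999 and drops slightly at 0.9999.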

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lv, Q.; Li, Q.; Chen, K.; Lu, Y.; Wang, L. Classification of Ground-Based Cloud Images by Contrastive Self-Supervised Learning. Remote Sens. 2022, 14, 5821. https://doi.org/10.3390/rs14225821
